UNIT 2

TEXT AND AUDIO

A text is a piece of writing that you read or create.

The type and characteristics of a text are important for any work of summarizing it.

The types of texts depend on their purpose, structure and language features.

There are three main categories:

1. Expository texts

- They identify and characterize experiences, facts, situations and actions, whether abstract or real.

- They are meant to explain, inform or describe, and they are the most frequently used writing structures.

- They are classified into five categories:

  - description
  - procedure or sequence
  - comparison
  - cause-effect explanation
  - problem-solution presentation

2. Narrative texts

- It entertains, instructs or informs readers by telling a story.

- It deals with an imaginary or real world and can be fictional or non-fictional.

3. Argumentative texts

- They aim to change the readers' beliefs.

- They may present the negative qualities or characteristics of something or someone, or try to persuade readers that an object, product or idea is in some way better than others.

- The Text and Images template delivers long-form text, with images ready to associate with it.

- The Image Gallery template delivers paragraphs of related text without having to use images to break up the content.

- The Text Sequence template is good for emphasizing one paragraph of important text to learners.

- The Expandable List template delivers a lot of text on one topic, divided into subcategories that can each be expanded.

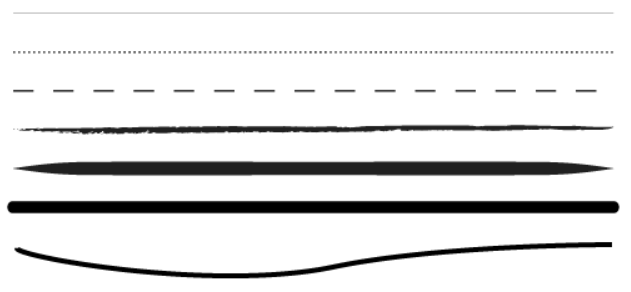

1. Line

- A line is the stroke of a pen or pencil; in graphic design, it is anything that connects two points.

- They are used for dividing space and drawing the eye to a specific location.

2. Color

- It is an important element of design for both the user and the designer.

- It can be applied to other elements, like lines, shapes, textures or typography.

- Color creates a mood within the piece and tells a story about the brand.

3. Shape

- Shapes, whether geometric or organic, add interest.

- They are defined by boundaries, such as lines or color, and are often used to emphasize a portion of the page.

4. Space

- Space can be positive or negative.

- Negative space is one of the most underutilized and misunderstood aspects of designing for the page.

- The parts of the page or site that are left blank, whether white or some other color, help to create the overall image.

5. Texture

- Texture can create a more three-dimensional appearance on a two-dimensional surface.

- It also helps build an immersive world.

- Websites and screen-based graphic design cannot offer physical texture, so they rely on the look and impression of texture on the screen.

6. Typography

- Typography is the single most important part of graphic and web design.

- It includes fonts as well as color, texture and shapes.

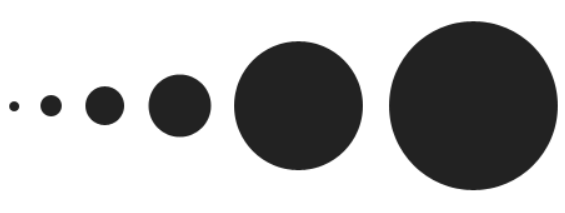

7. Scale (Size)

- The scale and size of your objects, shapes, type and other elements add interest and emphasis.

- The amount of variation will depend heavily on the content within.

8. Dominance and Emphasis

- The element of emphasis has more to do with an object, color or style dominating another for a heightened sense of contrast.

- Contrast is intriguing, and it creates a focal point.

9. Balance

- Balance can be achieved through symmetry or asymmetry.

- Symmetry has its place, especially because of its eye-catching nature.

10. Harmony

- Harmony is the main goal of graphic design and of all the elements of graphic design.

- Harmony is what you get when all the pieces work together.

- Nothing is superfluous.

- Great design is just enough and never too much.

A character is any letter, number, space, punctuation mark, or symbol that can be typed on a computer.

A character set is defined as the set of valid characters that can be used in source programs or interpreted when a program is running.

The source character set is the set of characters available for writing the source text.

The execution character set is the set of characters available when executing a program.

Most ANSI-compatible C compilers accept the following ASCII characters for both the source and execution character sets. Each ASCII character corresponds to a numeric value.

- The 26 lowercase Roman characters:

- a b c d e f g h i j k l m n o p q r s t u v w x y z

- The 26 uppercase Roman characters:

- A B C D E F G H I J K L M N O P Q R S T U V W X Y Z

- The 10 decimal digits:

- 0 1 2 3 4 5 6 7 8 9

- The 30 graphic characters:

- ! # % ^ & * ( ) - _ = + ~ ' " : ; ? / | \ { } [ ] , . < > $

- Five white-space characters:

| Character | Representation |
| --- | --- |
| Space | ' ' |
| Horizontal tab | \t |
| Form feed | \f |
| Vertical tab | \v |
| New-line character | \n |

The ASCII execution character set also includes the following control characters:

- New-line character (represented by \n in the source file)

- Alert (bell) tone (\a)

- Backspace (\b)

- Carriage return (\r)

- Null character (\0)

- A character code describes a specific encoding for characters, as defined in a code page.

- Each character code defines how the bits in a stream of text are mapped to the characters they represent.

- ASCII is the basis of most code pages; for example, the character "C" has the value 67 in ASCII.

- Unicode is a computing industry standard for the consistent encoding, representation and handling of text.

- It was developed in conjunction with the Universal Character Set standard (ISO/IEC 10646) and is published in book form as The Unicode Standard.

- As of version 6.0 (2010), it consisted of a repertoire of more than 109,000 characters covering 93 scripts (later versions add many more), together with a set of code charts for visual reference, an encoding methodology and a set of standard character encodings, an enumeration of character properties such as upper and lower case, a set of reference data computer files, and related items such as rules for normalization, decomposition, collation, rendering and bidirectional display order.

- It can be implemented by different character encodings.

- The most commonly used encodings are UTF-8, UTF-16 and the now-obsolete UCS-2.

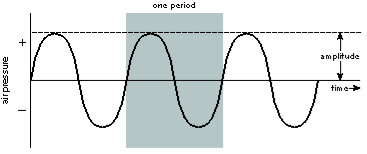

Sound is a physical phenomenon produced by the vibration of matter. The matter can be almost anything: a violin string or a block of wood, for example. As the matter vibrates, pressure variations are created in the air surrounding it. This alternation of high and low pressure is propagated through the air in a wave-like motion. When the wave reaches our ears, we hear a sound.

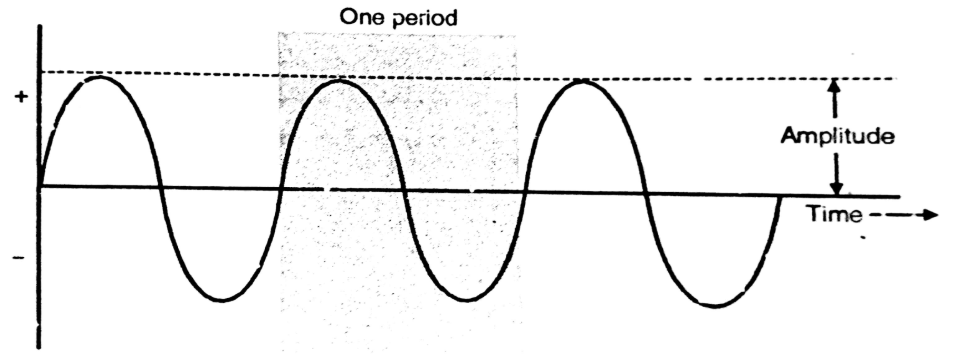

The pattern of the pressure oscillation is called a waveform. The portion of the waveform that repeats is called a period. A waveform with a clearly defined period occurring at regular intervals is called a periodic waveform.

Frequency

The frequency of a sound―the number of times the pressure rises and falls, or oscillates, in a second―is measured in hertz (Hz).

The frequency range of normal human hearing extends from around 20 Hz up to about 20 kHz.

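Because pitch is perceived logarithmically, the frequency axis used for audio is logarithmic, not linear.

To traverse the audio range from low to high by equal-sounding steps, each successive frequency increment must be greater than the last; for example, each octave step doubles the frequency.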

Amplitude

A sound also has an amplitude, a property subjectively heard as loudness.

The amplitude of a sound is the measure of the displacement of air pressure from its mean, or quiescent state.

The greater the amplitude, the louder the sound.

1. Soft

2. Loud

3. Pleasant

4. Unpleasant

5. Musical

6. Audible

7. Inaudible etc.

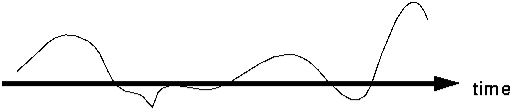

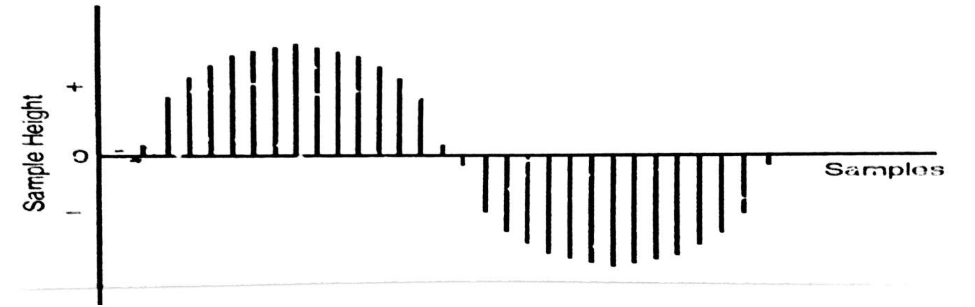

For sound to be input into a computer, it needs to be sampled, or digitized.

Microphones and video cameras produce analog signals.

To get audio or video into a computer, we have to digitize it, that is, convert it into a stream of numbers.

Digitizing makes the signal discrete in both time and voltage, in two steps:

Sampling - divide the horizontal axis (the time dimension) into discrete pieces. Uniform sampling is ubiquitous.

Quantization - divide the vertical axis (signal strength) into pieces. Sometimes, a non-linear function is applied. 8-bit quantization divides the vertical axis into 256 levels; 16-bit quantization gives 65,536 levels.

A computer measures the amplitude of the waveform at regular time intervals to produce a series of numbers.

• Each of these measurements is called a sample.

• The figure below illustrates one period of a digitally sampled waveform.

• Each vertical bar represents a single sample, and the height of the bar indicates the value of that sample.

1. PCM

PCM stands for Pulse-Code Modulation.

It is a digital representation of raw analog audio signals.

This digital audio format has a “sampling rate” and a “bit depth”.

There is no compression involved.

The digital recording is a close-to-exact representation of analog sound.

It is used in CDs and DVDs.

2. WAV

WAV stands for Waveform Audio File Format.

It’s a standard that was developed by Microsoft and IBM back in 1991.

It is a Windows container for different audio formats.

It could potentially contain compressed audio, but it’s rarely used for that.

Most WAV files contain uncompressed audio in PCM format.

3. AIFF

AIFF stands for Audio Interchange File Format.

It can contain several kinds of audio formats. For example, there is a compressed version called AIFF-C and another version called Apple Loops, which is used by GarageBand and Logic Audio.

They both use the same AIFF extension.

Most AIFF files contain uncompressed audio in PCM format.

It is just a wrapper for the PCM encoding, and Windows systems can usually open AIFF files without any issues.

4. MP3

MP3 stands for MPEG-1 Audio Layer 3.

It was released back in 1993 and exploded in popularity, eventually becoming the most popular audio format in the world for music files.

The main goal of MP3 is three-fold: 1) to drop all the sound data that lies beyond the hearing range of normal people, 2) to reduce the quality of sounds that are not easy to hear, and 3) to compress all other audio data as efficiently as possible.

Nearly every digital device in the world with audio playback can read and play MP3 files.

5. AAC

AAC stands for Advanced Audio Coding.

It was developed in 1997 as the successor to MP3.

The compression algorithm used by AAC is more advanced than that of MP3, so when the same recording is encoded in MP3 and AAC at the same bit rate, the AAC version will generally have better sound quality.

Even though MP3 is more of a household format, AAC is still widely used today. In fact, it’s the standard audio compression method used by YouTube, Android, iOS, iTunes, later Nintendo portables, and later PlayStations.

6. OGG (Vorbis)

OGG doesn’t stand for anything. Actually, it’s not even a compression format.

OGG is a multimedia container that can hold all kinds of compression formats, but it is most commonly used to hold Vorbis-encoded audio.

Vorbis was first released in 2000 and grew in popularity for two reasons: 1) it adheres to the principles of open-source software, and 2) it performs significantly better than most other lossy compression formats.

7. WMA (Lossy)

WMA stands for Windows Media Audio.

It was first released in 1999 and has gone through several evolutions since then, all while keeping the same WMA name and extension.

It’s a proprietary format created by Microsoft.

In terms of objective compression quality, WMA is actually better than MP3.

8. FLAC

FLAC stands for Free Lossless Audio Codec.

The name is a bit on the nose, but FLAC has quickly become one of the most popular lossless formats since its introduction in 2001.

It can compress an original source file by up to 60 percent without losing a single bit of data.

It is an open-source and royalty-free audio file format, so it doesn’t impose any intellectual property constraints.

FLAC is supported by most major programs and devices and is the main alternative to MP3 for music.

9. ALAC

ALAC stands for Apple Lossless Audio Codec.

It was developed and launched in 2004 as a proprietary format but eventually became open-source and royalty-free in 2011.

ALAC is sometimes referred to as Apple Lossless.

While ALAC is good, it is slightly less efficient than FLAC when it comes to compression.

10. WMA (Lossless)

WMA stands for Windows Media Audio.

Of the lossless formats covered here, it is the worst in terms of compression efficiency.

It’s a proprietary format so it’s no good for fans of open-source software, but it’s supported natively on both Windows and Mac systems.

The biggest issue with WMA Lossless is the limited hardware support.

Audio Tools, described by its developer as the #1 audio and acoustic test and measurement platform, is used by audio professionals around the world. Its Tools and Utilities pages list the helpers, add-ons and built-in features available on the platform.

MIDI (Musical Instrument Digital Interface) is a protocol developed in the 1980s which allows electronic instruments and other digital musical tools to communicate with each other.

MIDI itself does not make sound; it is just a series of messages such as "note on," "note off," "note/pitch," "pitchbend," and many more.

These messages are interpreted by a MIDI instrument to produce sound. A MIDI instrument can be a piece of hardware (electronic keyboard, synthesizer) or part of a software environment (Ableton, GarageBand, Digital Performer, Logic, etc.).

Advantages:

It is compact.

It is easy to modify and manipulate notes.

It can change instruments.
