Unit - 4

Pipelining and Parallel Processors

The term Pipelining refers to a technique of decomposing a sequential process into sub-operations, with each sub-operation being executed in a dedicated segment that operates concurrently with all other segments.

The most important characteristic of a pipeline technique is that several computations can be in progress in distinct segments at the same time. The overlapping of computation is made possible by associating a register with each segment in the pipeline. The registers provide isolation between each segment so that each can operate on distinct data simultaneously.

The structure of a pipeline organization can be represented simply by including an input register for each segment followed by a combinational circuit.

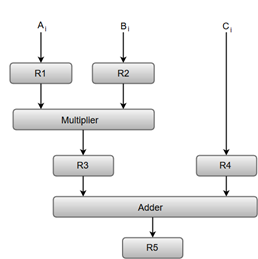

Let us consider an example of combined multiplication and addition operation to get a better understanding of the pipeline organization.

The combined multiplication and addition operation is done with a stream of numbers such as:

Ai* Bi + Ci for i = 1, 2, 3, ......., 7

The operation to be performed on the numbers is decomposed into sub-operations with each sub-operation to be implemented in a segment within a pipeline.

The sub-operations performed in each segment of the pipeline are defined as:

R1 ← Ai, R2 ← Bi Input Ai, and Bi

R3 ← R1 * R2, R4 ← Ci Multiply, and input Ci

R5 ← R3 + R4 Add Ci to product

The following block diagram represents the combined as well as the sub-operations performed in each segment of the pipeline.

Fig. 1 – Pipeline process

Registers R1, R2, R3, and R4 hold the data and the combinational circuits operate in a particular segment.

The output generated by the combinational circuit in a given segment is applied as an input register of the next segment. For instance, from the block diagram, we can see that the register R3 is used as one of the input registers for the combinational adder circuit.

In general, the pipeline organization is applicable for two areas of computer design which includes:

Instruction Pipeline

Pipeline processing can occur not only in the data stream but in the instruction stream as well.

Most digital computers with complex instructions require an instruction pipeline to carry out operations like fetch, decode and execute instructions.

In general, the computer needs to process each instruction with the following sequence of steps.

Each step is executed in a particular segment, and there are times when different segments may take different times to operate on the incoming information. Moreover, there are times when two or more segments may require memory access at the same time, causing one segment to wait until another is finished with the memory.

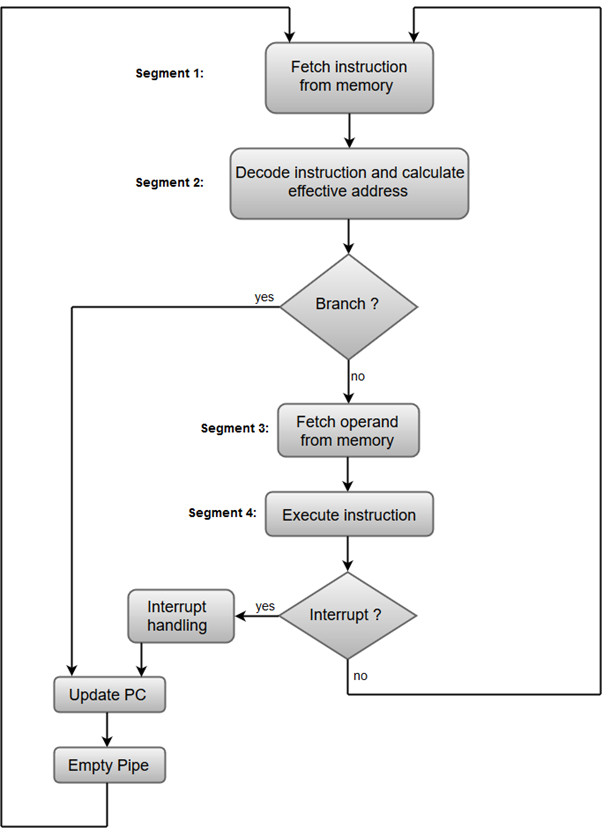

The organization of an instruction pipeline will be more efficient if the instruction cycle is divided into segments of equal duration. One of the most common examples of this type of organization is a Four-segment instruction pipeline.

A four-segment instruction pipeline combines two or more different segments and makes it a single one. For instance, the decoding of the instruction can be combined with the calculation of the effective address into one segment.

The following block diagram shows a typical example of a four-segment instruction pipeline. The instruction cycle is completed in four segments.

Fig. 2 – Instruction Cycle

Segment 1:

The instruction fetch segment can be implemented using a first-in, first-out (FIFO) buffer.

Segment 2:

The instruction fetched from memory is decoded in the second segment, and eventually, the effective address is calculated in a separate arithmetic circuit.

Segment 3:

An operand from memory is fetched in the third segment.

Segment 4:

The instructions are finally executed in the last segment of the pipeline organization.

Arithmetic Pipeline

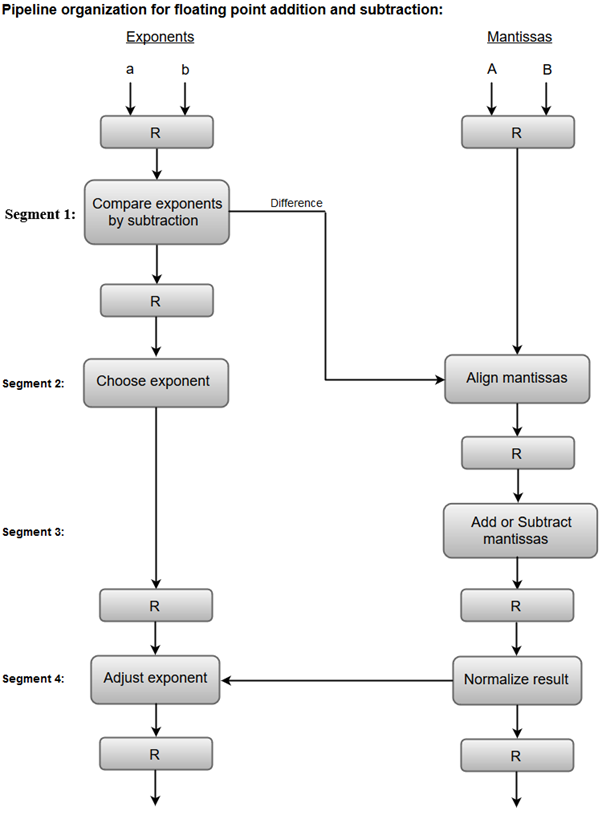

Arithmetic Pipelines are mostly used in high-speed computers. They are used to implement floating-point operations, multiplication of fixed-point numbers, and similar computations encountered in scientific problems.

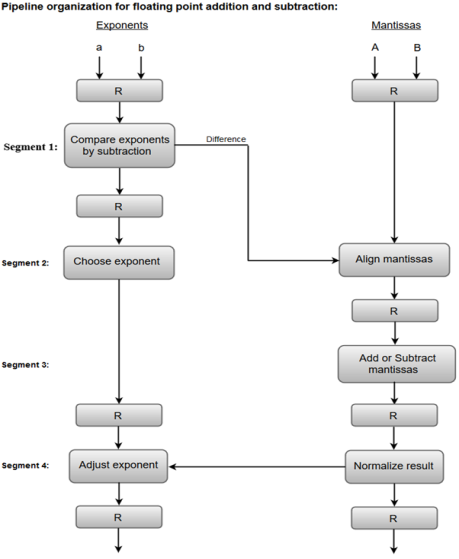

To understand the concepts of arithmetic pipeline in a more convenient way, let us consider an example of a pipeline unit for floating-point addition and subtraction.

The inputs to the floating-point adder pipeline are two normalized floating-point binary numbers defined as:

X = A * 2a = 0.9504 * 103

Y = B * 2b = 0.8200 * 102

Where A and B are two fractions that represent the mantissa and a and b are the exponents.

The combined operation of floating-point addition and subtraction is divided into four segments. Each segment contains the corresponding suboperation to be performed in the given pipeline. The suboperations that are shown in the four segments are:

We will discuss each suboperation in a more detailed manner later in this section.

The following block diagram represents the sub-operations performed in each segment of the pipeline.

Fig. 3 – Sub Operations

Note: Registers are placed after each suboperation to store the intermediate results.

1. Compare exponents by subtraction:

The exponents are compared by subtracting them to determine their difference. The larger exponent is chosen as the exponent of the result.

The difference of the exponents, i.e., 3 - 2 = 1 determines how many times the mantissa associated with the smaller exponent must be shifted to the right.

2. Align the mantissa:

The mantissa associated with the smaller exponent is shifted according to the difference of exponents determined in segment one.

X = 0.9504 * 103

Y = 0.08200 * 103

3. Add mantissas:

The two mantissas are added in segment three.

Z = X + Y = 1.0324 * 103

4. Normalize the result:

After normalization, the result is written as:

Z = 0.1324 * 104

Key takeaway

The term Pipelining refers to a technique of decomposing a sequential process into sub-operations, with each sub-operation being executed in a dedicated segment that operates concurrently with all other segments.

The most important characteristic of a pipeline technique is that several computations can be in progress in distinct segments at the same time. The overlapping of computation is made possible by associating a register with each segment in the pipeline. The registers provide isolation between each segment so that each can operate on distinct data simultaneously.

To improve the performance of a CPU we have two options:

1) Improve the hardware by introducing faster circuits.

2) Arrange the hardware such that more than one operation can be performed at the same time.

Since there is a limit on the speed of hardware and the cost of faster circuits is quite high, we have to adopt the 2nd option.

Pipelining : Pipelining is a process of arrangement of hardware elements of the CPU such that its overall performance is increased. Simultaneous execution of more than one instruction takes place in a pipelined processor.

Let us see a real-life example that works on the concept of pipelined operation. Consider a water bottle packaging plant. Let there be 3 stages that a bottle should pass through, Inserting the bottle(I), Filling water in the bottle(F), and sealing the bottle(S). Let us consider these stages as stage 1, stage 2, and stage 3 respectively. Let each stage take 1 minute to complete its operation.

Now, in a non-pipelined operation, a bottle is first inserted in the plant, after 1 minute it is moved to stage 2 where water is filled. Now, in stage 1 nothing is happening. Similarly, when the bottle moves to stage 3, both stage 1 and stage 2 are idle. But in pipelined operation, when the bottle is in stage 2, another bottle can be loaded at stage 1. Similarly, when the bottle is in stage 3, there can be one bottle each in stage 1 and stage 2. So, after each minute, we get a new bottle at the end of stage 3. Hence, the average time taken to manufacture 1 bottle is:

Without pipelining = 9/3 minutes = 3m

I F S | | | | | |

| | | I F S | | |

| | | | | | I F S (9 minutes)

With pipelining = 5/3 minutes = 1.67m

I F S | |

| I F S |

| | I F S (5 minutes)

Thus, pipelined operation increases the efficiency of a system.

Design of a basic pipeline

In a pipelined processor, a pipeline has two ends, the input end, and the output end. Between these ends, there are multiple stages/segments such that the output of one stage is connected to the input of the next stage and each stage performs a specific operation.

Interface registers are used to hold the intermediate output between two stages. These interface registers are also called latch or buffer.

All the stages in the pipeline along with the interface registers are controlled by a common clock.

Execution in a pipelined processor

Execution sequence of instructions in a pipelined processor can be visualized using a space-time diagram. For example, consider a processor having 4 stages and let there be 2 instructions to be executed. We can visualize the execution sequence through the following space-time diagrams:

Non overlapped execution:

Stage / Cycle | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |

S1 | I1 |

|

|

| I2 |

|

|

|

S2 |

| I1 |

|

|

| I2 |

|

|

S3 |

|

| I1 |

|

|

| I2 |

|

S4 |

|

|

| I1 |

|

|

| I2 |

Fig 4 - Non overlapped execution

Total time = 8 Cycle

Overlapped execution:

Stage / Cycle | 1 | 2 | 3 | 4 | 5 |

S1 | I1 | I2 |

|

|

|

S2 |

| I1 | I2 |

|

|

S3 |

|

| I1 | I2 |

|

S4 |

|

|

| I1 | I2 |

Fig 5 - Overlapped execution

Total time = 5 Cycle

Pipeline Stages

RISC processor has 5 stage instruction pipelines to execute all the instructions in the RISC instruction set. Following are the 5 stages of the RISC pipeline with their respective operations:

Stage 1 (Instruction Fetch)

In this stage, the CPU reads instructions from the address in the memory whose value is present in the program counter.

Stage 2 (Instruction Decode)

In this stage, instruction is decoded and the register file is accessed to get the values from the registers used in the instruction.

Stage 3 (Instruction Execute)

In this stage, ALU operations are performed.

Stage 4 (Memory Access)

In this stage, memory operands are read and written from/to the memory that is present in the instruction.

Stage 5 (Write Back)

In this stage, the computed/fetched value is written back to the register present in the instructions.

Performance of a pipelined processor

Consider a ‘k’ segment pipeline with clock cycle time as ‘Tp’. Let there be ‘n’ tasks to be completed in the pipelined processor. Now, the first instruction is going to take ‘k’ cycles to come out of the pipeline but the other ‘n – 1’ instructions will take only ‘1’ cycle each, i.e., a total of ‘n – 1’ cycles. So, time is taken to execute ‘n’ instructions in a pipelined processor:

ETpipeline = k + n – 1 cycles

= (k + n – 1) Tp

In the same case, for a non-pipelined processor, execution time of ‘n’ instructions will be:

ETnon-pipeline = n * k * Tp

So, speedup (S) of the pipelined processor over non-pipelined processor, when ‘n’ tasks are executed on the same processor is:

S = Performance of pipelined processor /

Performance of Non-pipelined processor

As the performance of a processor is inversely proportional to the execution time, we have,

S = ETnon-pipeline / ETpipeline

=> S = [n * k * Tp] / [(k + n – 1) * Tp]

S = [n * k] / [k + n – 1]

When the number of tasks ‘n’ are significantly larger than k, that is, n >> k

S = n * k / n

S = k

where ‘k’ is the number of stages in the pipeline.

Also, Efficiency = Given speed up / Max speed up = S / Smax. We know that, Smax = k

So, Efficiency = S / k

Throughput = Number of instructions / Total time to complete the instructions

So, Throughput = n / (k + n – 1) * Tp

Note: The cycles per instruction (CPI) value of an ideal pipelined processor is 1

Key takeaway

To improve the performance of a CPU we have two options:

Improve the hardware by introducing faster circuits.

Arrange the hardware such that more than one operation can be performed at the same time.

Since there is a limit on the speed of hardware and the cost of faster circuits is quite high, we have to adopt the 2nd option.

The objectives of this module are to discuss the various hazards associated with pipelining.

We discussed the basics of pipelining and the MIPS pipeline implementation in the previous module. We made the following observations about pipelining:

Pipelining doesn’t help latency of single task, it helps throughput of the entire workload

Pipeline rate limited by slowest pipeline stage of Multiple tasks operating simultaneously

Potential speedup = Number of pipe stages

Unbalanced lengths of pipe stages reduce speedup

Time to “fill” pipeline and time to “drain” reduces speedup o Unbalanced lengths of pipe stages reduces speedup

Execute billions of instructions, so throughput is what matters of Data path design – Ideally, we expect a CPI value of 1

What is desirable in instruction sets for pipelining?

Variable-length instructions vs. all instruction’s same length?

Memory operands part of any operation vs. memory operands only in loads or stores?

Register operand many places in instruction format vs. registers located in the same place?

Ideally, we expect a CPI value of 1 and a speedup equal to the number of stages in the pipeline. But some factors limit this. The problems that occur in the pipeline are called hazards. Hazards that arise in the pipeline prevent the next instruction from executing during its designated clock cycle. There are three types of hazards:

Structural hazards: Hardware cannot support certain combinations of instructions (two instructions in the pipeline require the same resource).

Data hazards: Instruction depends on the result of prior instruction still in the pipeline

Control hazards: Caused by the delay between the fetching of instructions and decisions about changes in control flow (branches and jumps).

Structural hazards arise because there is not enough duplication of resources.

Resolving structural hazards:

Solution 1: Wait

Must detect the hazard

Must have a mechanism to stall

Low cost and simple

Increases CPI

Used for rare cases

Solution 2: Throw more hardware at the problem

Pipeline hardware resource

Replicate resource

Figure shows one possibility of a structural hazard in the MIPS pipeline. Instruction 3 is accessing memory for an instruction fetch and instruction 1 is accessing memory for the data access (load/store). These two are conflicting requirements and gives rise to a hazard. We should either stall one of the operations as shown in Figure 11.2 or have two separate memories for code and data. Structural hazards will have to be handled at the design time itself.

Next, we shall discuss data dependences and the associated hazards. There are two types of data dependence – true data dependencies and name dependences.

An instruction j is data-dependent on instruction i if either of the following holds:

A name dependence occurs when two instructions use the same register or memory location, called a name, but there is no flow of data between the instructions associated with that name

Last, of all, we discuss control dependences. Control dependencies determine the ordering of an instruction with respect to a branch instruction so that an instruction i is executed in the correct program order. There are two general constraints imposed by control dependences:

Having introduced the various types of data dependencies and control dependence, let us discuss how these dependencies cause problems in the pipeline. Dependences are properties of programs and whether the dependences turn out to be hazards and cause stalls in the pipeline are properties of the pipeline organization.

Data hazards may be classified as one of three types, depending on the order of reading and write accesses in the instructions:

RAW (Read After Write)

Corresponds to a true data dependence

Program order must be preserved

This hazard results from an actual need for communication

Considering two instructions i and j, instruction j reads the data before i writes it

i: ADD R1, R2, R3

j : SUB R4, R1, R3

Add modifies R1 and then Sub should read it. If this order is changed, there is a RAW hazard

WAW (Write After Write)

Corresponds to an output dependence

Occurs when there are multiple writes or a short integer pipeline and a longer floating-point pipeline or when an instruction proceeds when a previous instruction is stalled WAW (write after write)

This is caused by a name dependence. There is no actual data transfer. It is the same name that causes the problem

Considering two instructions i and j, instruction j should write after instruction i has written the data

i: SUB R1, R4, R3

j: ADD R1, R2, R3

Instruction i have to modify register r1 first, and then j has to modify it. Otherwise, there is a WAW hazard. There is a problem because of R1. If some other register had been used, there will not be a problem

The solution is register renaming, that is, use some other register. The hardware can do the renaming or the compiler can do the renaming

WAR (Write After Read)

Arises from an anti-dependence

Cannot occur in most static issue pipelines

Occurs either when there are early writes and late reads, or when instructions are re-ordered

There is no actual data transfer. It is the same name that causes the problem

Considering two instructions i and j, instruction j should write after instruction i has read the data.

i: SUB R4, R1, R3

j: ADD R1, R2, R3

Instruction i has to read register r1 first, and then j has to modify it. Otherwise, there is a WAR hazard. There is a problem because of R1. If some other register had been used, there will not be a problem

• Solution is register renaming, that is, use some other register. The hardware can do the renaming or the compiler can do the renaming

Figure 11.3 gives a situation of having true data dependencies. The use of the result of the ADD instruction in the next three instructions causes a hazard since the register is not written until after those instructions read it. The write-back for the ADD instruction happens only in the fifth clock cycle, whereas the next three instructions read the register values before that, and hence will read the wrong data. This gives rise to RAW hazards.

A control hazard is when we need to find the destination of a branch, and can’t fetch any new instructions until we know that destination. Figure 11.4 illustrates a control hazard. The first instruction is a branch and it gets resolved only in the fourth clock cycle. So, the next three instructions fetched may be correct, or wrong, depending on the outcome of the branch. This is an example of a control hazard.

Now, having discussed the various dependencies and the hazards that they might lead to, we shall see what are the hazards that can happen in our simple MIPS pipeline.

Structural hazard

Conflict for use of a resource

• In MIPS pipeline with a single memory

– Load/store requires data access

– Instruction fetch would have to stall for that cycle

• Would cause a pipeline “bubble”

• Hence, pipelined data paths require separate instruction/data memories or separate instruction/data caches

RAW hazards – can happen in any architecture

WAR hazards – Can’t happen in MIPS 5 stage pipeline because all instructions take 5 stages, and reads are always in stage 2, and writes are always in stage 5

WAW hazards – Can’t happen in MIPS 5 stage pipeline because all instructions take 5 stages, and writes are always in stage 5

Control hazards

Can happen

The penalty depends on when the branch is resolved – in the second clock cycle or the third clock cycle

More aggressive implementations resolve the branch in the second clock cycle itself, leading to one clock cycle penalty

Let us look at the speedup equation with stalls and look at an example problem.

CPIpipelined = Ideal CPI + Average Stall cycles per Inst

Let us assume we want to compare the performance of two machines. Which machine is faster?

Machine A: Dual ported memory – so there are no memory stalls

Machine B: Single ported memory, but its pipelined implementation has a 1.05 times faster clock rate

Assume:

Ideal CPI = 1 for both

Loads are 40% of instructions executed

SpeedupA = Pipeline Depth/ (1+0) x(clockunpipe/clockpipe)

= Pipeline Depth

SpeedupB = Pipeline Depth/ (1 + 0.4 x 1) x (clockunpipe/ (clockunpipe / 1.05)

= (Pipeline Depth/1.4) x 1.05

= 75 x Pipeline Depth

SpeedupA / SpeedupB = Pipeline Depth / (0.75 x Pipeline Depth) = 1.33 Machine A is 1.33 times faster.

To summarize, we have discussed the various hazards that might occur in a pipeline. Structural hazards happen because there is not enough duplication of resources and they have to be handled at design time itself. Data hazards happen because of true data dependencies and name dependences. Control hazards are caused by branches. The solutions for all these hazards will be discussed in the subsequent modules.

Key takeaway

Pipelining doesn’t help latency of a single task, it helps throughput of the entire workload

Pipeline rate limited by slowest pipeline stage of Multiple tasks operating simultaneously

Potential speedup = Number of pipe stages

Unbalanced lengths of pipe stages reduce speedup

Time to “fill” pipeline and time to “drain” reduces speedup o Unbalanced lengths of pipe stages reduces speedup

Execute billions of instructions, so throughput is what matters of Data path design – Ideally, we expect a CPI value of 1

Parallel processing can be described as a class of techniques that enables the system to achieve simultaneous data-processing tasks to increase the computational speed of a computer system.

A parallel processing system can carry out simultaneous data-processing to achieve faster execution time. For instance, while an instruction is being processed in the ALU component of the CPU, the next instruction can be read from memory.

The primary purpose of parallel processing is to enhance the computer processing capability and increase its throughput, i.e., the amount of processing that can be accomplished during a given interval of time.

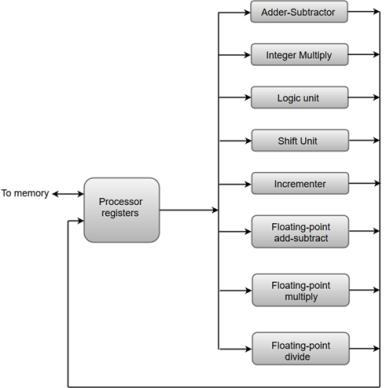

A parallel processing system can be achieved by having a multiplicity of functional units that perform identical or different operations simultaneously. The data can be distributed among various multiple functional units.

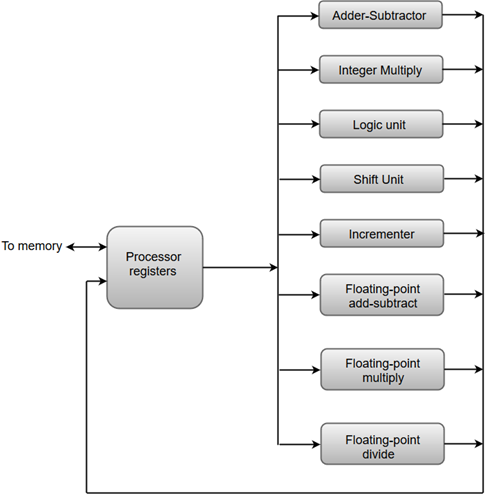

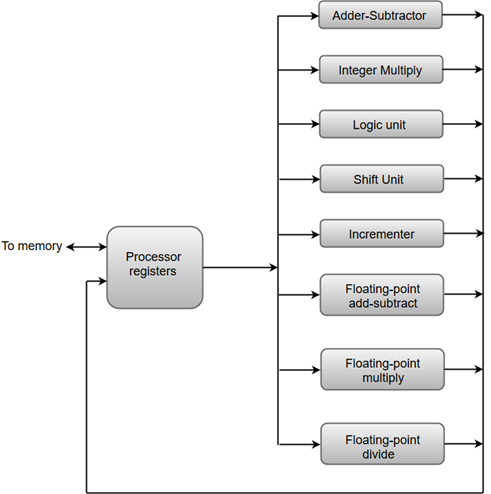

The following diagram shows one possible way of separating the execution unit into eight functional units operating in parallel.

The operation performed in each functional unit is indicated in each block if the diagram:

Fig 6 - an operation performed in each functional unit

The adder and integer multiplier performs the arithmetic operation with integer numbers.

The floating-point operations are separated into three circuits operating in parallel.

The logic, shift, and increment operations can be performed concurrently on different data. All units are independent of each other, so one number can be shifted while another number is being incremented.

Key takeaway

Parallel processing can be described as a class of techniques that enables the system to achieve simultaneous data-processing tasks to increase the computational speed of a computer system.

A parallel processing system can carry out simultaneous data-processing to achieve faster execution time. For instance, while an instruction is being processed in the ALU component of the CPU, the next instruction can be read from memory.

The primary purpose of parallel processing is to enhance the computer processing capability and increase its throughput, i.e., the amount of processing that can be accomplished during a given interval of time.

Parallel processing has been developed as an effective technology in modern computers to meet the demand for higher performance, lower cost, and accurate results in real-life applications. Concurrent events are common in today’s computers due to the practice of multiprogramming, multiprocessing, or multi computing.

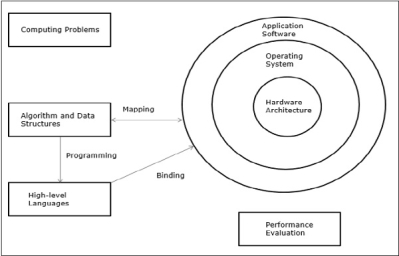

Modern computers have powerful and extensive software packages. To analyze the development of the performance of computers, first, we have to understand the basic development of hardware and software.

Computer Development Milestones − There are two major stages of the development of computers - mechanical or electromechanical parts. Modern computers evolved after the introduction of electronic components. High mobility electrons in electronic computers replaced the operational parts in mechanical computers. For information transmission, an electric signal which travels almost at the speed of light replaced mechanical gears or levers.

Elements of Modern computers − A modern computer system consists of computer hardware, instruction sets, application programs, system software, and user interface.

Fig. 7 – Elements of modern computers

The computing problems are categorized as numerical computing, logical reasoning, and transaction processing. Some complex problems may need the combination of all three processing modes.

Evolution of Computer Architecture − In the last four decades, computer architecture has gone through revolutionary changes. We started with Von Neumann architecture and now we have multicomputer and multiprocessors.

Performance of a computer system − The performance of a computer system depends both on machine capability and program behavior. Machine capability can be improved with better hardware technology, advanced architectural features, and efficient resource management. Program behavior is unpredictable as it is dependent on the application and run-time conditions

Multiprocessors and Multicomputers

In this section, we will discuss two types of parallel computers −

Multiprocessors

Multicomputers

Shared-Memory Multicomputers

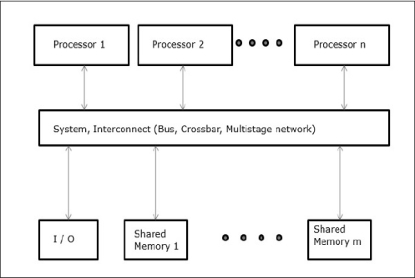

The three most common shared memory multiprocessors models are −

Uniform Memory Access (UMA)

In this model, all the processors share the physical memory uniformly. All the processors have equal access time to all the memory words. Each processor may have a private cache memory. Same rule is followed for peripheral devices.

When all the processors have equal access to all the peripheral devices, the system is called a symmetric multiprocessor. When only one or a few processors can access the peripheral devices, the system is called an asymmetric multiprocessor.

Fig 8 - Uniform Memory Access

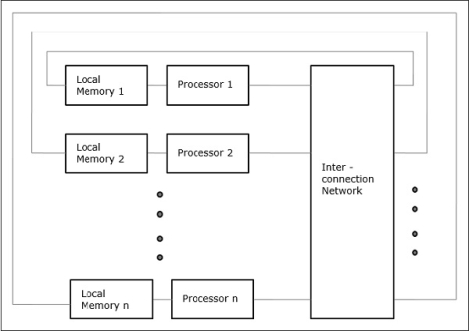

Non-uniform Memory Access (NUMA)

In the NUMA multiprocessor model, the access time varies with the location of the memory word. Here, the shared memory is physically distributed among all the processors, called local memories. The collection of all local memories forms a global address space that can be accessed by all the processors.

Fig 9 - Non-uniform Memory Access

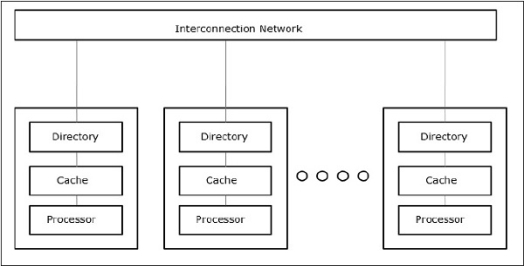

Cache Only Memory Architecture (COMA)

The COMA model is a special case of the NUMA model. Here, all the distributed main memories are converted to cache memories.

Fig 10 - Cache Only Memory Architecture

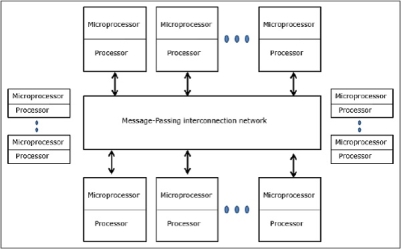

Distributed - Memory Multicomputers − A distributed memory multicomputer system consists of multiple computers, known as nodes, interconnected by a message-passing network. Each node acts as an autonomous computer having a processor, local memory, and sometimes I/O devices. In this case, all local memories are private and are accessible only to the local processors. This is why the traditional machines are called no-remote-memory-access (NORMA) machines.

Fig 11 - Distributed - Memory Multi computers

Multivector and SIMD Computers

In this section, we will discuss supercomputers and parallel processors for vector processing and data parallelism.

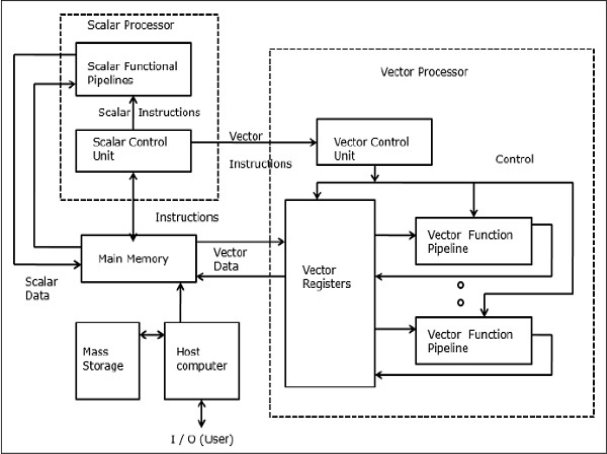

Vector Supercomputers

In a vector computer, a vector processor is attached to the scalar processor as an optional feature. The host computer first loads program and data to the main memory. Then the scalar control unit decodes all the instructions. If the decoded instructions are scalar operations or program operations, the scalar processor executes those operations using scalar functional pipelines.

On the other hand, if the decoded instructions are vector operations, then the instructions will be sent to the vector control unit.

Fig 12 - Vector Supercomputers

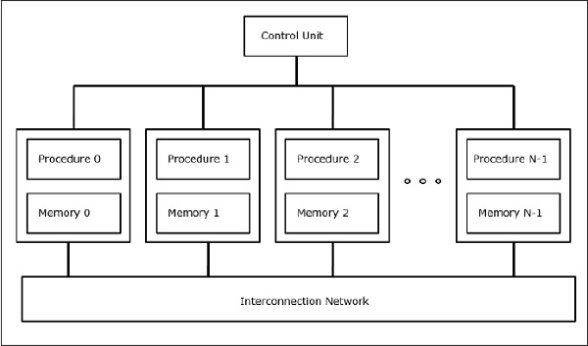

SIMD Supercomputers

In SIMD computers, the ‘N’ number of processors are connected to a control unit and all the processors have their memory units. All the processors are connected by an interconnection network.

Fig 13 - SIMD Supercomputers

PRAM and VLSI Models

The ideal model gives a suitable framework for developing parallel algorithms without considering the physical constraints or implementation details.

The models can be enforced to obtain theoretical performance bounds on parallel computers or to evaluate VLSI complexity on-chip area and operational time before the chip is fabricated.

Parallel Random-Access Machines

Sheperdson and Sturgis (1963) modeled the conventional Uniprocessor computers as random-access-machines (RAM). Fortune and Wyllie (1978) developed a parallel random-access-machine (PRAM) model for modeling an idealized parallel computer with zero memory access overhead and synchronization.

Fig 14 - Parallel Random-Access Machines

An N-processor PRAM has a shared memory unit. This shared memory can be centralized or distributed among the processors. These processors operate on a synchronized read-memory, write-memory, and compute cycle. So, these models specify how concurrent read and write operations are handled.

Following are the possible memory update operations −

Exclusive read (ER) − In this method, in each cycle, only one processor is allowed to read from any memory location.

Exclusive write (EW) − In this method, at least one processor is allowed to write into a memory location at a time.

Concurrent read (CR) − It allows multiple processors to read the same information from the same memory location in the same cycle.

Concurrent write (CW) − It allows simultaneous write operations to the same memory location. To avoid write conflict some policies are set up.

VLSI Complexity Model

Parallel computers use VLSI chips to fabricate processor arrays, memory arrays, and large-scale switching networks.

Nowadays, VLSI technologies are 2-dimensional. The size of a VLSI chip is proportional to the amount of storage (memory) space available in that chip.

We can calculate the space complexity of an algorithm by the chip area (A) of the VLSI chip implementation of that algorithm. If T is the time (latency) needed to execute the algorithm, then A.T gives an upper bound on the total number of bits processed through the chip (or I/O). For certain computing, there exists a lower bound, f(s), such that

A.T2 >= O (f(s))

Where A=chip area and T=time

Architectural Development Tracks

The evolution of parallel computers I spread along the following tracks −

Multiple Processor Tracks

Multiple data tracks

Multiple threads track

In a multiple-processor track, it is assumed that different threads execute concurrently on different processors and communicate through shared memory (multiprocessor track) or message passing (multicomputer track) system.

In multiple data tracks, it is assumed that the same code is executed on the massive amount of data. It is done by executing the same instructions on a sequence of data elements (vector track) or through the execution of the same sequence of instructions on a similar set of data (SIMD track).

In multiple threads track, it is assumed that the interleaved execution of various threads on the same processor to hide synchronization delays among threads executing on different processors. Thread interleaving can be coarse (multithreaded track) or fine (dataflow track).

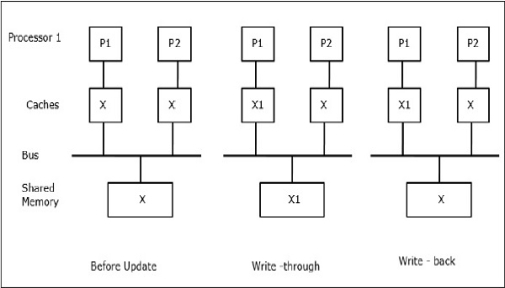

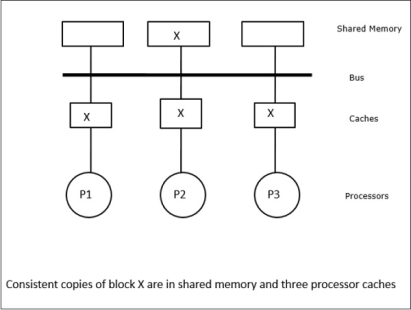

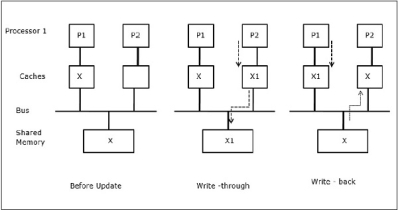

The Cache Coherence Problem

In a multiprocessor system, data inconsistency may occur among adjacent levels or within the same level of the memory hierarchy. For example, the cache and the main memory may have inconsistent copies of the same object.

As multiple processors operating in parallel, and independently multiple caches may possess different copies of the same memory block, this creates a cache coherence problem. Cache coherence schemes help to avoid this problem by maintaining a uniform state for each cached block of data.

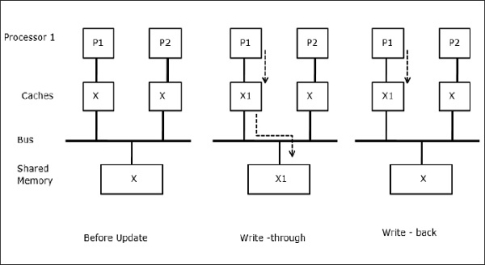

Fig 15 - Cache coherence schemes

Let X be an element of shared data that has been referenced by two processors, P1 and P2. In the beginning, three copies of X are consistent. If the processor P1 writes a new data X1 into the cache, by using a write-through policy, the same copy will be written immediately into the shared memory. In this case, inconsistency occurs between cache memory and the main memory. When a write-back policy is used, the main memory will be updated when the modified data in the cache is replaced or invalidated.

In general, there are three sources of inconsistency problem −

Sharing of writable data

Process migration

I/O activity

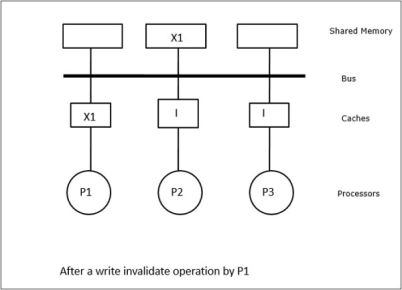

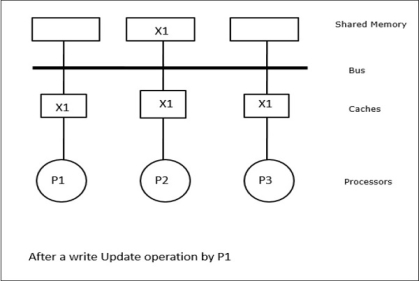

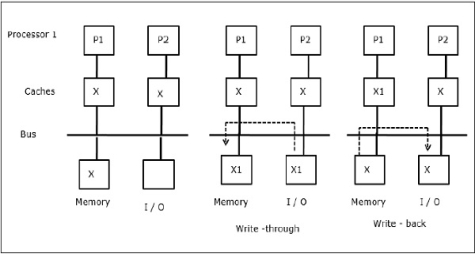

Snoopy Bus Protocols

Snoopy protocols achieve data consistency between the cache memory and the shared memory through a bus-based memory system. Write-invalidate and write-update policies are used for maintaining cache consistency.

Fig 16 - Snoopy Bus Protocols

Fig 17 - Example

In this case, we have three processors P1, P2, and P3 having a consistent copy of data element ‘X’ in their local cache memory and the shared memory (Figure-a). Processor P1 writes X1 in its cache memory using the write-invalidate protocol. So, all other copies are invalidated via the bus. It is denoted by ‘I’ (Figure-b). Invalidated blocks are also known as dirty, i.e., they should not be used. The write-update protocol updates all the cache copies via the bus. By using the write-back cache, the memory copy is also updated (Figure-c).

Fig 18 - Example

Cache Events and Actions

Following events and actions occur on the execution of memory-access and invalidation commands −

Read-miss − When a processor wants to read a block and it is not in the cache, a read-miss occurs. This initiates a bus-read operation. If no dirty copy exists, then the main memory that has a consistent copy supplies a copy to the requesting cache memory. If a dirty copy exists in remote cache memory, that cache will restrain the main memory and send a copy to the requesting cache memory. In both cases, the cached copy will enter the valid state after a read miss.

Write-hit − If the copy is in a dirty or reserved state, write is done locally and the new state is dirty. If the new state is valid, the write-invalidate command is broadcasted to all the caches, invalidating their copies. When the shared memory is written through, the resulting state is reserved after this first write.

Write-miss − If a processor fails to write in the local cache memory, the copy must come either from the main memory or from a remote cache memory with a dirty block. This is done by sending a read-invalidate command, which will invalidate all cached copies. Then the local copy is updated with a dirty state.

Read-hit − Read-hit is always performed in local cache memory without causing a transition of state or using the snoopy bus for invalidation.

Block replacement − When a copy is dirty, it is to be written back to the main memory by the block replacement method. However, when the copy is either in a valid or reserved, or invalid state, no replacement will take place.

Directory-Based Protocols

By using a multistage network for building a large multiprocessor with hundreds of processors, the snoopy cache protocols need to be modified to suit the network capabilities. Broadcasting being very expensive to perform in a multistage network, the consistency commands are sent only to those caches that keep a copy of the block. This is the reason for the development of directory-based protocols for network-connected multiprocessors.

In a directory-based protocols system, data to be shared are placed in a common directory that maintains the coherence among the caches. Here, the directory acts as a filter where the processors ask permission to load an entry from the primary memory to its cache memory. If an entry is changed the directory either update it or invalidates the other caches with that entry.

Hardware Synchronization Mechanisms

A synchronization is a special form of communication where instead of data control, information is exchanged between communicating processes residing in the same or different processors.

Multiprocessor systems use hardware mechanisms to implement low-level synchronization operations. Most multiprocessors have hardware mechanisms to impose atomic operations such as memory read, write, or read-modify-write operations to implement some synchronization primitives. Other than atomic memory operations, some inter-processor interrupts are also used for synchronization purposes.

Cache Coherency in Shared Memory Machines

Maintaining cache coherency is a problem in the multiprocessor system when the processors contain local cache memory. Data inconsistency between different caches easily occurs in this system.

The major concern areas are −

Sharing of writable data

Process migration

I/O activity

Sharing of writable data

When two processors (P1 and P2) have the same data element (X) in their local caches and one process (P1) writes to the data element (X), as the caches are a write-through local cache of P1, the main memory is also updated. Now when P2 tries to read data element (X), it does not find X because the data element in the cache of P2 has become outdated.

Fig 19 - Sharing of writable data

Process migration

In the first stage, a cache of P1 has data element X, whereas P2 does not have anything. A process on P2 first writes on X and then migrates to P1. Now, the process starts reading data element X, but as the processor P1 has outdated data the process cannot read it. So, a process on P1 writes to the data element X and then migrates to P2. After migration, a process on P2 starts reading the data element X but it finds an outdated version of X in the main memory.

Fig 20 - Process migration

I/O activity

As illustrated in the figure, an I/O device is added to the bus in a two-processor multiprocessor architecture. In the beginning, both the caches contain the data element X. When the I/O device receives a new element X, it stores the new element directly in the main memory. Now, when either P1 or P2 (assume P1) tries to read element X it gets an outdated copy. So, P1 writes to element X. Now, if the I/O device tries to transmit X it gets an outdated copy.

Fig 21 - I/O activity

Uniform Memory Access (UMA)

Uniform Memory Access (UMA) architecture means the shared memory is the same for all processors in the system. Popular classes of UMA machines, which are commonly used for (file-) servers, are the so-called Symmetric Multiprocessors (SMPs). In an SMP, all system resources like memory, disks, other I/O devices, etc. are accessible by the processors in a uniform manner.

Non-Uniform Memory Access (NUMA)

In NUMA architecture, multiple SMP clusters are having an internal indirect/shared network, which is connected in a scalable message-passing network. So, NUMA architecture is logically shared physically distributed memory architecture.

In a NUMA machine, the cache-controller of a processor determines whether a memory reference is local to the SMP’s memory or it is remote. To reduce the number of remote memory accesses, NUMA architectures usually apply caching processors that can cache the remote data. But when caches are involved, cache coherency needs to be maintained. So, these systems are also known as CC-NUMA (Cache Coherent NUMA).

Cache Only Memory Architecture (COMA)

COMA machines are similar to NUMA machines, with the only difference that the main memories of COMA machines act as direct-mapped or set-associative caches. The data blocks are hashed to a location in the DRAM cache according to their addresses. Data that is fetched remotely is stored in the local main memory. Moreover, data blocks do not have a fixed home location, they can freely move throughout the system.

COMA architectures mostly have a hierarchical message-passing network. A switch in such a tree contains a directory with data elements as its sub-tree. Since data has no home location, it must be explicitly searched for. This means that remote access requires a traversal along the switches in the tree to search their directories for the required data. So, if a switch in the network receives multiple requests from its subtree for the same data, it combines them into a single request which is sent to the parent of the switch. When the requested data returns, the switch sends multiple copies of it down its subtree.

COMA versus CC-NUMA

Following are the differences between COMA and CC-NUMA.

COMA tends to be more flexible than CC-NUMA because COMA transparently supports the migration and replication of data without the need for the OS.

COMA machines are expensive and complex to build because they need non-standard memory management hardware and the coherency protocol is harder to implement.

Remote accesses in COMA are often slower than those in CC-NUMA since the tree network needs to be traversed to find the data.

Key takeaway

Parallel processing has been developed as an effective technology in modern computers to meet the demand for higher performance, lower cost, and accurate results in real-life applications. Concurrent events are common in today’s computers due to the practice of multiprogramming, multiprocessing, or multi computing.

Modern computers have powerful and extensive software packages. To analyze the development of the performance of computers, first, we have to understand the basic development of hardware and software.

Your computer's central processing unit (CPU) handles computation—basically, running programs. Modern CPUs, on the other hand, have features including multiple cores and hyper-threading. Some computers also have several processors. We're here to assist you in making sense of it all.

When comparing results, a CPU's clock speed used to be sufficient. Things aren't as straightforward as they once were. A CPU with multiple cores or hyper-threading can outperform a single-core CPU with the same clock speed that does not have hyper-threading. PCs with multiple CPUs may also have a significant benefit. Both of these features are designed to make it easier for PCs to run several processes at once, boosting efficiency when multitasking or when dealing with demanding apps like video encoders and modern games. So, let's take a look at each of these characteristics and what they could mean to you.

Multiple Cores

CPUs used to have only one heart. This meant that the actual CPU only had one central processing unit. Manufacturers add more "cores," or central processing units, to boost performance. Since a dual-core CPU has two central processing units, the operating system sees it as two CPUs. For example, a CPU with two cores might run two separate processes at the same time. Since your machine can do many tasks at once, this speeds up your system.

There are no tricks here, unlike hyper-threading: a dual - core CPU has two central processing units on the same chip. A quad - core CPU has four central processing units, while an octa- core CPU has eight.

This improves performance significantly while keeping the physical CPU unit small enough to fit in a single socket. There should only be one CPU socket with one CPU unit inserted into it, rather than four CPU sockets with four different CPUs, each requiring its own power, cooling, and other hardware. Since the cores are all on the same chip, they can interact more easily, resulting in less latency.

The Task Manager in Windows is a good example of this. You will see that this machine has one real CPU (socket) and four cores, for example. Because of hyper-threading, each core appears to the operating system as two CPUs, resulting in eight logical processors.

Multiple CPUs

A single CPU is used in the majority of computers. And if the single CPU has multiple cores or uses hyper-threading technology, it's still only one physical CPU unit plugged into a single CPU socket on the motherboard.

Prior to the introduction of hyper-threading and multi-core CPUs, people attempted to increase the computing power of computers by introducing more CPUs. A motherboard with multiple CPU sockets is needed for this. Additional hardware is needed on the motherboard to link the CPU sockets to the RAM and other resources. This type of setup has a lot of overhead.

Multiple CPU systems aren't very popular among today's home PCs. Even a powerful gaming desktop with several graphics’ cards would usually have just one CPU. Multiple CPU systems can be used in supercomputers, servers, and other high-end systems that need as much number-crunching capacity as possible.

Hyper - Threading

Intel's first effort to introduce parallel computing to user PCs was called hyper-threading. It first appeared on desktop computers with the Pentium 4 HT in 2002. The Pentium 4 of the time had only one CPU core, so it could only do one thing at a time, even though it could move between tasks fast enough to appear to be multitasking. Hyper-threading was created to compensate for this.

To an operating system, a single physical CPU core with hyper-threading appears as two logical CPUs. It's a bit of a scam since the CPU is still a single CPU. While the operating system perceives each core as having two CPUs, the real CPU hardware only has one set of execution resources for each core. The CPU acts as though it has more cores than it really does, and it speeds up program execution by using its own logic. To put it another way, the operating system is fooled into thinking there are two CPUs for each real CPU core.

Hyper-threading allows the physical execution resources of the two logical CPU cores to be shared. If one virtual CPU is stuck and waiting, the other virtual CPU will borrow its execution resources to speed things up. Hyper-threading will make the machine run faster, but it's no substitute for having additional cores.

Key takeaway

• Manufacturers add more "cores," or central processing units, to boost performance.

• A single CPU is used in the majority of computers.

• Multiple CPU systems can be used in supercomputers, servers, and other high-end systems that need as much number-crunching capacity as possible.

• Hyper-threading allows the physical execution resources of the two logical CPU cores to be shared

A multiprocessor device is made up of several processors sharing memory. A multiprocessor is a device that has more than one processor. We use multiprocessors because the load on the processor can be very high at times, but input output from other functions is not necessary. This type of operating system is more dependable because even if one processor fails, the others will keep working.

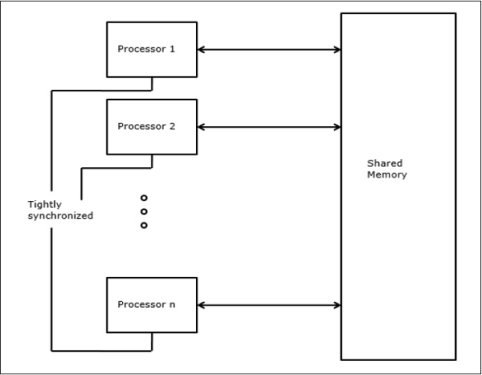

Since we only have two versions of the processor, other equipment such as input-output and memory are shared, this system is relatively inexpensive. All of the processors in a multiprocessor system are regulated by a single operating system. The processor's multiplicity and how the processors interact are also clear to each other.

In this case, the user has no idea which processor their process is running on. A process is divided into several smaller processes, each of which runs on its own processor. Multiprogramming refers to having several programs running at the same time, while multiprocessing refers to having more than one physical and processor.

There are several CPUs in this diagram, and they share a similar memory.

Fig 22 - multiprocessor operating system

Multiprocessing Scheduling

Multiple CPUs share the load in multiprocessor scheduling, allowing multiple processes to run at the same time. When opposed to single processor scheduling, multiprocessor scheduling is more complicated. There are several processors in the multiprocessor scheduling, all of which are identical, allowing us to run any process at any time.

The system's multiple CPUs are in constant contact, sharing a shared bus, memory, and other peripheral devices. As a result, we can classify the system as tightly coupled. When we need to process a large amount of data, we use these systems. These systems are mostly used in satellites, weather forecasting, and other similar applications.

The symmetric multiprocessing model is used in multiprocessing systems. Each processor in this system works on an identical copy of the operating system, which communicates with one another. We will save money with the aid of this machine because of other devices such as peripherals. Other machines, such as power supplies, are exchanged.

The most significant aspect is that we can complete more work in less time. If one of the systems in a multiprocessor system fails, the entire system will not come to a halt; only the processor's speed will be slowed. The operating system is in control of the multiprocessing system's overall performance.

Different tasks are assigned to different processors in the system by the operating system. The mechanism is broken down into threads in a multiprocessing system, and each thread will operate independently. Threads can run on multiple processors at the same time in this form of system. Since these systems run several processes in parallel, they are referred to as parallel processors. Parallel processing refers to the CPU's ability to run several processes at the same time. The resources of the different processors are dynamically shared in a multiprocessing system.

A multiprocessor operating system is a type of standard OS that can handle multiple system calls at once, manage memory, and manage files as well as input-output devices.

A multiprocessor can perform the following additional functions:

• Process synchronization

• Resource management

• Scheduling

There are some multiprocessor operating system organizations:

1. Each CPU has its own OS

There are several Central Processing Units in this form of organization, and each CPU has its own private operating system. Memory is shared by all processors, and the input-output system is also shared. A single bus connects the whole system.

Fig 23 - Single bus with own OS

2. Master slave multiprocessor

A single data structure keeps track of the ready processes in this form of multiprocessor model. In this model, one central processing unit serves as the master, while the other serves as the slave. All of the processors are controlled by a single processor, referred to as the master server. The operating system is run by the master server, while the user processes are run by the slave server. The memory and input-output devices are shared by all processors, and they are all connected to a single bus. This method is called Asymmetric multiprocessing because it is easy and eliminates data sharing.

Fig 24 - Master slave multiprocessor

3. Symmetric multiprocessor

The third model is Symmetric Multiprocessors (SMP). Although there is only one copy of the operating system in memory in this model, it can be run on any central processing unit. When a system call is made now, the central processing unit that made the system call traps to the kernel and processes the system call. This model dynamically balances processes and memory. This method employs symmetric multiprocessing, in which each processor schedules itself. The scheduling continues with each processor's scheduler examining the ready queue and selecting a process to run.

Fig 25 - symmetric multiprocessor

It's likely that all of the processes in this system are in a single ready queue, or that each processor has its own private ready queue.

In a multiprocessor operating system, there are primarily three points of contention.

• Locking System

Since the resources in a multiprocessor system are shared, they must be protected in order for multiple processors to have secure access to them. The primary goal of a locking scheme is to serialize resource access through multiple processors.

• Shared Data

When multiple processors access the same data at the same time, there is a risk of data inconsistency, so we need to use certain protocols or locking schemes to secure ourselves.

• Cache Coherence

The data from shared resources is stored in various local caches. If two clients each have a cached copy of memory, and one of them changes the memory block, the other client can be left with an invalid cache without being notified of the change. This type of dispute can be avoided by keeping a coherence view of the data.

Key takeaway

• A multiprocessor device is made up of several processors sharing memory.

• A multiprocessor is a device that has more than one processor.

• Multiple CPUs share the load in multiprocessor scheduling, allowing multiple processes to run at the same time.

• There are several processors in the multiprocessor scheduling, all of which are identical, allowing us to run any process at any time.

References:

1. “Computer Organization and Design: The Hardware/Software Interface”, 5th

Edition by David A. Patterson and John L. Hennessy, Elsevier.

2. “Computer Organization and Embedded Systems”, 6th Edition by CarlHamacher, McGraw Hill Higher Education.

3. “Computer Architecture and Organization”, 3rd Edition by John P. Hayes, WCB/McGraw-Hill

4. “Computer Organization and Architecture: Designing for Performance”, 10th Edition by William Stallings, Pearson Education.

5. “Computer System Design and Architecture”, 2nd Edition by Vincent P. Heuring

and Harry F. Jordan, Pearson Education.