Unit – 3

Uncertainty

We must be able to appraise the possibility of various outcomes in order to act rationally in the face of uncertainty. In FOL, a fact F is only useful if it can be determined if it is true or false. We must, however, be able to determine the likelihood that F is right. By evaluating the probabilities of events, we can develop ways for responding rationally under uncertainty (probabilities).

We had previously acquired knowledge representation using first-order logic and propositional logic with certainty, implying that we knew the predicates. With this knowledge representation, we could write AB, which implies that if A is true, then B is true; however, if we aren't sure if A is true or not, we won't be able to make this assertion; this is known as uncertainty.

Using tools and techniques from probability, a field dedicated to dealing with uncertainty, it is possible to manage the uncertainty that is inherent in machine learning for predictive modeling.

So, we require uncertain reasoning or probabilistic reasoning to describe uncertain information, when we aren't confident about the predicates.

Uncertainty is the most difficult aspect of machine learning for newcomers, especially developers.

The three basic sources of uncertainty in machine learning are noise in the data, poor domain coverage, and imperfect models.

In applied machine learning, probability provides the framework and methods for quantifying, addressing, and harnessing uncertainty

Key takeaway

● Sample spaces S with events Ai, probabilities P(Ai); union A ∪ B and intersection AB, complement Ac.

● Axioms: P(A) ≤ 1; P(S) = 1; for exclusive Ai, P(∪iAi) = Σi P(Ai).

● Conditional probability: P(A|B) = P(AB)/P(B); P(A) = P(A|B) P(B) + P (A|Bc) P (Bc)

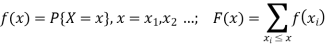

● Random variables (RVs) X; the cumulative distribution function (cdf) F(x) = P {X ≤ x};

for a discrete RV, probability mass function (pmf)

for a continuous RV, probability density function (pdf)

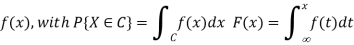

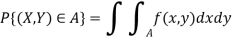

● Generalizations for more than one variable, e.g.

two RVs X and Y: joint cdf F (x, y) = P {X ≤ x, Y ≤ y};

pmf f (x, y) = P {X = x, Y = y}; or

pdf f (x, y), with

independent X and Y iff f (x, y) = fX(x) fY (y)

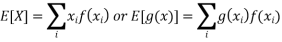

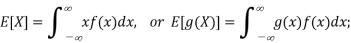

● Expected value or mean: for RV X, µ = E[X]; discrete RVs

continuous RVs

Probability Theory provides the formal techniques and principles for manipulating probabilistically represented propositions. The following are the three assumptions of probability theory:

● 0 <= P(A=a) <= 1 for all a in sample space of A

● P(True)=1, P(False)=0

● P (A v B) = P(A) + P(B) - P (A ^ B)

The following qualities can be deduced from these axioms:

● P(~A) = 1 - P(A)

● P(A) = P (A ^ B) + P (A ^ ~B)

● Sum{P(A=a)} = 1, where the sum is over all possible values a in the sample space of A

The condition probability distribution P (Xi |Parent (Xi)) determines the parent's effect on each node in the Bayesian network.

The Bayesian network is built on the foundations of the Joint Probability Distribution and Conditional Probability. Let's start with the joint probability distribution:

If we have variables x1, x2, x3,....., xn, then the probabilities of a different combination of x1, x2, x3.. xn, are known as Joint probability distribution.

P [x1, x2, x3,....., xn], it can be written as the following way in terms of the joint probability distribution.

= P [x1| x2, x3,....., xn] P [x2, x3,....., xn]

= P[x1| x2, x3,....., xn]P[x2|x3,....., xn]....P[xn-1|xn]P[xn].

In general, we can express the equation as follows for each variable Xi:

P(Xi|Xi-1,........., X1) = P(Xi |Parents(Xi ))

Given an application domain in which we have determined a sufficient set of random variables to encode all of the relevant information about that domain, we can completely specify all of the possible probabilistic information by constructing the full joint probability distribution, P (V1=v1, V2=v2, Vn=vn), which assigns probabilities to all possible combinations of values to all random variables.

Consider the three Boolean random variables that describe the domains Bird, Flier, and Young. Then we can build a table with all of the possible interpretations and their chances of being correct:

Bird Flier Young Probability

T T T 0.0

T T F 0.2

T F T 0.04

T F F 0.01

F T T 0.01

F T F 0.01

F F T 0.23

F F F 0.5

The fact that the above table contains 8 rows indicates the fact that the three Boolean variables can be assigned values in 23 distinct ways. If there are n Boolean variables, the table will be 2n rows long. The table will be size kn if each of the n variables has k potential values.

The total of the probability in the right column must also equal 1 because we know the set of all potential values for each variable. This means that in order to completely fill in the table, 2n -1 values must be calculated for n Boolean random variables.

If all of the probabilities for a whole joint probability distribution table are known, we can construct any probabilistic assertion about the domain. We can compute using the table above, for example.

● P(Bird=T) = P(B) = 0.0 + 0.2 + 0.04 + 0.01 = 0.25

● P (Bird=T, Flier=F) = P (B, ~F) = P (B, ~F, Y) + F (B, ~F, ~Y) = 0.04 + 0.01 = 0.05

Key takeaway

Bayes' theorem is a mathematical formula for calculating the probability of an event based on ambiguous information. It is also known as Bayes' rule, Bayes' law, or Bayesian reasoning.

In probability theory, it relates the conditional and marginal probabilities of two random events.

The inspiration for Bayes' theorem came from British mathematician Thomas Bayes. Bayesian inference is a technique for applying Bayes' theorem, which is at the heart of Bayesian statistics.

It's a method for calculating the value of P(B|A) using P(A|B) knowledge.

Bayes' theorem can update the probability forecast of an occurrence based on new information from the real world.

Example: If cancer is linked to one's age, we can apply Bayes' theorem to more precisely forecast cancer risk based on age.

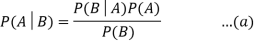

Bayes' theorem can be derived using the product rule and conditional probability of event A with known event B:

As a result of the product rule, we can write:

P (A ⋀ B) = P(A|B) P(B) or

In the same way, the likelihood of event B if event A is known is:

P (A ⋀ B) = P(B|A) P(A)

When we combine the right-hand sides of both equations, we get:

The above equation is known as Bayes' rule or Bayes' theorem (a). This equation is the starting point for most current AI systems for probabilistic inference.

It displays the simple relationship between joint and conditional probabilities. Here,

P(A|B) is the posterior, which we must compute, and it stands for Probability of hypothesis A when evidence B is present.

The likelihood is defined as P(B|A), in which the probability of evidence is computed after assuming that the hypothesis is valid.

Prior probability, or the likelihood of a hypothesis before taking into account the evidence, is denoted by P(A).

Marginal probability, or the likelihood of a single piece of evidence, is denoted by P(B).

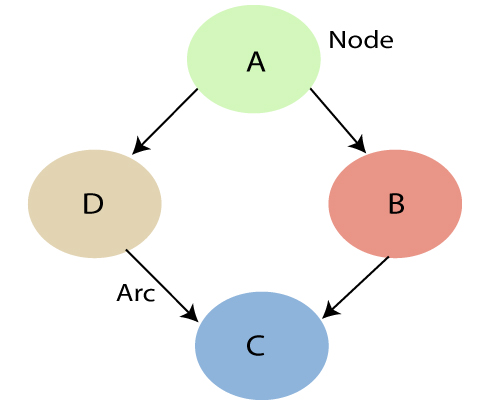

In general, we can write P (B) = P(A)*P(B|Ai) in the equation (a), therefore the Bayes' rule can be expressed as:

Where A1, A2, A3,..... is a set of mutually exclusive and exhaustive events, and An is a set of mutually exclusive and exhaustive events.

Use of Bayes’ Theorem

The purpose of doing an expert task, such as medical diagnosis, is to identify identifications (diseases) based on observations (symptoms). A relationship like this can be found in Bayes' Theorem.

P (A | B) = P (B | A) * P(A) / P(B)

Suppose: A=Patient has measles, B =has a rash

Then: P(measles/rash) =P(rash/measles) * P(measles) / P(rash)

Based on the known statistical values on the right, the desired diagnostic relationship on the left can be determined.

Key takeaway

Causes of uncertainty:

In the real world, the following are some of the most common sources of uncertainty.

● Information occurred from unreliable sources.

● Experimental Errors

● Equipment fault

● Temperature variation

● Climate change.

Probabilistic reasoning is a knowledge representation method that uses the concept of probability to indicate uncertainty in knowledge. We employ probabilistic reasoning, which integrates probability theory and logic, to deal with uncertainty.

Probability is employed in probabilistic reasoning because it helps us to deal with uncertainty caused by someone's indifference or ignorance.

There are many situations in the real world when nothing is certain, such as "it will rain today," "behavior of someone in specific surroundings," and "a competition between two teams or two players." These are probable sentences for which we can presume that something will happen but are unsure, thus we utilize probabilistic reasoning in this case.

Probability: Probability is a measure of the likelihood of an uncertain event occurring. It's a numerical representation of the likelihood of something happening. The probability value is always between 0 and 1, indicating complete unpredictability.

0 ≤ P(A) ≤ 1, where P(A) is the probability of an event A.

P(A) = 0, indicates total uncertainty in an event A.

P(A) =1, indicates total certainty in an event A.

We can compute the probability of an uncertain event using the formula below.

P(¬A) = probability of a not happening event.

P(¬A) + P(A) = 1.

Event: An event is the name given to each conceivable consequence of a variable.

Sample space: Sample space is a collection of all potential events.

Random variables: Random variables are used to represent real-world events and objects.

Prior probability: The prior probability of an occurrence is the probability calculated before fresh information is observed.

Posterior Probability: After all evidence or information has been considered, the likelihood is computed. It's a mix of known probabilities and new information.

Conditional probability: The likelihood of an event occurring when another event has already occurred is known as conditional probability.

Key takeaway

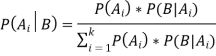

● Bayesian Networks, also known as Bayes Nets, Belief Nets, Causal Nets, and Probability Nets, are a data structure for capturing all of the information in a domain's entire joint probability distribution. The Bayesian Net can compute every value in the entire joint probability distribution of a collection of random variables.

● This variable represents all direct causal relationships between variables.

● To construct a Bayesian net for a given set of variables, draw arcs from cause variables to immediate effects.

● It saves space by taking advantage of the fact that variable dependencies are generally local in many real-world problem domains, resulting in a high number of conditionally independent variables.

● In both qualitative and quantitative terms, the link between variables is captured.

● It's possible to use it to construct a case.

● Predictive reasoning (sometimes referred to as causal reasoning) is a sort of reasoning that proceeds from the top down (from causes to effects).

● From effects to causes, diagnostic thinking goes backwards (bottom-up).

● When A has a direct causal influence on B, a Bayesian Net is a directed acyclic graph (DAG) in which each random variable has its own node and a directed arc from A to B. As a result, the nodes represent states of affairs, whereas the arcs represent causal relationships. When A is present, B is more likely to occur, and vice versa. The backward influence is referred to as "diagnostic" or "evidential" support for A due to the presence of B.

● Each node A in a network is conditionally independent of any subset of nodes that are not children of A, given its parents.

A Bayesian network that depicts and solves decision issues under uncertain knowledge is known as an influence diagram.

Fig 1: Bayesian network graph

● Each node represents a random variable that can be continuous or discontinuous in nature.

● Arcs or directed arrows represent the causal relationship or conditional probabilities between random variables. The graph's two nodes are connected by these directed links, also known as arrows.

These ties imply that one node has a direct influence on another, and nodes are independent of one another if there are no directed relationships.

Key takeaway

● Because they define the process of amassing evidence and revising probabilities based on new evidence, conditional probabilities are critical for reasoning.

● For example, if we know there is a 4% chance of a person having a cavity, we can represent this as the prior (aka unconditional) probability P(Cavity)=0.04.

● Let's say the person now has a toothache symptom. Given this additional data, what is the posterior probability of a Cavity. That is, compute P (Cavity | Toothache).

● If P(A|B) = 1, this is equivalent to the sentence in Propositional Logic B => A. Similarly, if P(A|B) =0.9, then this is like saying B => A with 90% certainty.

● To put it another way, we've made inference a little hazy because nothing is guaranteed.

● Given several measurements and other "evidence", E1, ..., Ek, we will formulate queries as P (Q | E1, E2, ..., Ek) meaning "what is the degree of belief that Q is true given that we know E1, ..., Ek and nothing else."

Conditional probability is defined as: P(A|B) = P (A ^ B)/P(B) = P (A, B)/P(B)

One way of looking at this definition is as a normalized (using P(B)) joint probability (P (A, B)).

● Example Computing Conditional Probability from the Joint Probability Distribution Say we want to compute P (~Bird | Flier) and we know the full joint probability distribution function given above.

● We can do this as follows:

● P(~B|F) = P (~B, F) / P(F)

= (P (~B, F, Y) + P (~B, F, ~Y)) / P(F)

= (.01 + .01)/P(F)

Next, we can compute the marginal probability P(F) from the whole joint probability distribution, or we can use a technique called normalization, which requires obtaining the full joint probability distribution first.

P(B|F) = P (B, F) / P(F)

= (P (B, F, Y) + P (B, F, ~Y)) / P(F)

= (0.0 + 0.2)/P(F)

Now we also know that P(~B|F) + P(B|F) = 1, so substituting from above and solving for P(F) we get P(F) = 0.22. Hence, P(~B|F) = 0.02/0.22 = 0.091.

While this is a good method for estimating conditional probabilities, it is inefficient in general since it requires computing and storing the entire joint probability distribution table, which is exponentially large.

Some important rules related to conditional probability are:

● Rewriting the definition of conditional probability, we get the Product Rule: P (A, B) = P(A|B) P(B)

● Chain Rule: P (A, B, C, D) = P (A|B, C, D) P (B|C, D) P(C|D) P(D), which generalizes the product rule for a joint probability of an arbitrary number of variables. Note that ordering the variables results in a different expression, but all have the same resulting value.

● Conditionalized version of the Chain Rule: P (A, B|C) = P (A|B, C) P(B|C)

● Bayes's Rule: P(A|B) = (P(A)P(B|A))/P(B), which can be written as follows to more clearly emphasize the "updating" aspect of the rule: P(A|B) = P(A) * [P(B|A)/P(B)] Note: The terms P(A) and P(B) are called the prior (or marginal) probabilities. The term P(A|B) is called the posterior probability because it is derived from or depends on the value of B.

● Conditionalized version of Bayes's Rule: P (A|B, C) = P (B|A, C) P(A|C)/P(B|C)

● Conditioning (aka Addition) Rule: P(A) = Sum{P(A|B=b) P(B=b)} where the sum is over all possible values b in the sample space of B.

● P(~B|A) = 1 - P(B|A)

● Assuming conditional independence of B and C given A, we can simplify Bayes's Rule for two pieces of evidence B and C:

P (A | B, C) = (P(A)P (B, C | A))/P (B, C)

= (P(A)P(B|A) P(C|A))/(P(B)P(C|B))

= P(A) * [P(B|A)/P(B)] * [P(C|A)/P(C|B)]

= (P(A) * P(B|A) * P(C|A))/P (B, C)

Key takeaway

● In general, Bayes Net inference is an NP-hard problem (exponential in the size of the graph).

● There are linear time techniques based on belief propagation for singly-connected networks or polytrees with no undirected loops.

● Local evidence messages are sent by each node to their children and parents.

● Based on incoming messages from its neighbors, each node updates its belief in each of its possible values and propagates evidence to its neighbors.

● Inference for generic networks can be approximated using loopy belief propagation, which iteratively refines probabilities until they reach an exact limit.

Temporal model

Direct sampling method

The most basic sort of random sampling process for Bayesian networks generates events from a network with no evidence associated with them. The idea is that each variable is sampled in topological order. The value is drawn from a probability distribution based on the values that have previously been assigned to the variable's parents.

function PRIOR-SAMPLE(bn) returns an event sampled from the prior specified by bn

inputs: bn, a Bayesian network specifying joint distribution

event with n elements

event with n elements

foreach variable  in

in

a random sample from P(

a random sample from P(

Likelihood weighting

Likelihood weighting eliminates the inefficiencies of rejection sampling by creating only events that are consistent with the evidence. It's a tailored version of the general statistical approach of importance sampling for Bayesian network inference.

Function LIKELIHOOD-WEIGHTING (X, e, bn, N) returns an estimate of

Inputs: X, the query variable

e, observed values for variables E

bn, a Bayesian network specifying joint distribution

N, the total number of samples to be generated

Local variables: W, a vector of weighted counts for each value of X, initially zero

For j=1 to N do

WEIGHTED-SAMPLE(bn,e)

WEIGHTED-SAMPLE(bn,e)

x is the value of X in x

x is the value of X in x

NORMALIZE (W)

NORMALIZE (W)

Inference by Markov chain simulation

Rejection sampling and likelihood weighting are not the same as Markov chain (MCMC) approaches. Rather of starting from scratch, MCMC approaches create each sample by making a random change to the preceding sample. It's easier to think of an MCMC algorithm as being in a current state where each variable has a value and then randomly modifying that state to get a new state.

Key takeaway

References: