UNIT 2

Concurrent Processes

- A process is a program in execution. The execution of a process must go in sequentially.

- The process isn't as same as program code yet much more than it.

- A process is an 'active' element instead of a program which is viewed as a 'passive' entity.

- Properties held by process incorporate hardware state, memory, CPU, and so forth.

- Based on the operating system (OS), a process might be comprised of numerous threads of execution that execute instructions simultaneously.

- While a computer program is an inactive collection of instructions, a process is the real execution of those instructions. A few processes might be related to a similar program; for instance, opening up a few occurrences of a similar program regularly brings about more than one process being executed.

- Process memory is isolated into four segments for effective working :

- The Text section is comprised of the compiled program code, read in from non-volatile storage when the program is launched.

- The Data section is made up of global and static variables, allotted and introduced before executing the primary.

- The Heap is utilized for the dynamic memory distribution, and is managed through calls to new, delete, malloc, free, and so on.

- The Stack is utilized for local variables. Space on the stack is saved for local variables when they are declared.

Max

Stack |

|

Heap |

Data |

Text |

0

A process can be comprehensively ordered into the following two types depending on its execution:

- I/O-Bound Process - An I/O-bound process is a process whose execution time is resolved principally by the measure of time it spends finishing I/O operations.

- CPU-Bound Process - A CPU-bound process is a process whose execution time is dictated by the speed of the CPU it keeps running on. A CPU-bound process can finish its execution quicker in the event that it is running on a quicker processor.

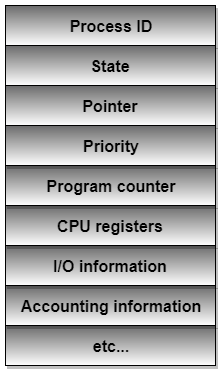

Process Control Back (PCB)

- A Process Control Block is a data structure kept up by the Operating System for each process.

- The PCB is distinguished by a number process ID (PID).

- The job of the PCBs is main in process management: they are accessed and/or altered by most OS utilities, incorporating those associated with scheduling, memory, and I/O resource access and performance monitoring.

- It very well may be said that the arrangement of the PCBs characterizes the present condition of the operating system.

- A PCB keeps all the data expected to monitor a process as mentioned below −

- Process State- The present condition of the process i.e., regardless of whether it is ready, running, waiting, or whatever.

- Process privileges- This is required to permit/deny access to system resources.

- Process ID- It kept the unique identification number for every process in the t system.

- Pointer- It keeps the pointer to the parent process.

- Program Counter- It is a pointer to the location of the next instruction that is to be executed by the process.

- CPU registers- Different CPU registers are used where the process should be kept for execution in a running state.

- CPU Scheduling Information- This keeps process priority and other scheduling information that is needed to schedule a process.

- Memory management information- This incorporates the data of page table, memory limits, Segment table based upon memory utilized by the operating system.

- Accounting information- This incorporates the amount of CPU utilized for process execution, time limits, execution ID, and so forth.

- IO status information- This keeps a record of all the I/O devices assigned to the process.

- The engineering of a PCB is subject to the Operating System and may contain distinctive data in various operating systems. Here is an improved graph of a PCB −

Fig 1 - PCB

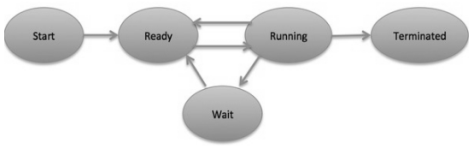

Process State

- At some point when a process executes, it goes through various states.

- These stages may contrast in various operating systems, and the names of these states are likewise not fixed.

- All in all, a process can have one of the accompanying five states one after another.

- Start- This is the underlying state when a process is first started/created.

- Ready- The process is waiting to be assigned out to a processor. Ready processes are waiting to have the processor dispensed to them by the operating system with the goal that they can run. The process may come into this state after Start state or while running it by however interrupted by the scheduler to appoint CPU to some different process.

- Running- When the process has been allotted to a processor by the OS scheduler, the process state is set to running and the processor executes its instructions.

- Waiting- Process moves into the waiting upstate on the off chance that it needs to wait tight for a resource, for example, sitting waiting for user input, or waiting that a file will end up accessible.

- Terminated or Exit- When the process completes its execution, or it is ended by the operating system, it is moved to the terminate state where it waits back to be expelled from main memory.

Fig 2 – process state

KEY TAKEAWAY

- A process is a program in execution. The execution of a process must go in sequentially.

- The process isn't as same as program code yet much more than it.

- A process is an 'active' element instead of a program which is viewed as a 'passive' entity.

- Properties held by process incorporate hardware state, memory, CPU, and so forth.

- Based on the operating system (OS), a process might be comprised of numerous threads of execution that execute instructions simultaneously.

Concurrency is the execution of multiple instruction sequences at a similar time. It happens within the software once there area unit many method threads running in parallel. The running method threads perpetually communicate with one another through shared memory or message passing. Concurrency leads to the sharing of resources result in issues like deadlocks and resource starvation.

It helps in techniques like coordinating the execution of processes, memory allocation, and execution planning for increasing output.

Principles of Concurrency :

Both interleaved and overlapped processes may be viewed as samples of synchronal processes, they each gift similar issues.

The relative speed of execution can't be expected. It depends on the following:

• The activities of different processes

• The means software handles interrupts

• The planning policies of the software

Problems in Concurrency :

• Sharing world resources –

Sharing of worldwide resources safely is troublesome. If 2 processes each building use of a worldwide variable and each performs browse and write that variable, then the order in that numerous browse and write area unit dead is essential.

• Optimal allocation of resources –

It is troublesome for the software to manage the allocation of resources optimally.

• Locating programming errors –

It is troublesome to find a software error as a result of reports area unit sometimes not consistent.

• Locking the channel –

It may be inefficient for the software to easily lock the channel and prevents its use by different processes.

Advantages of Concurrency

• Running of multiple applications –

It modifies to run multiple applications at a similar time.

• Better resource utilization –

It allows that the resources that area unit unused by one application may be used for different applications.

• Better average reaction time –

Without concurrency, every application must be run to completion before succeeding one may be run.

• Better performance –

It allows the higher performance by the software. Once one application uses solely the processor and another application uses solely the disc drive then the time to run each application at the same time to completion is shorter than the time to run every application consecutively.

Drawbacks of Concurrency :

• It is needed to safeguard multiple applications from each other.

• It is needed to coordinate multiple applications through extra mechanisms.

• Additional performance overheads and complexities in operational systems area unit needed for switch among applications.

• Sometimes running too several applications at the same time results in severely degraded performance.

Issues of Concurrency :

• Non-atomic –

Operations that area unit non-atomic however interruptible by multiple processes will cause issues.

• Race conditions –

A race condition happens if the result depends on that of many processes gets to some extent initial.

• Blocking –

Processes will block watching for resources. A method can be blocked for a long amount of your time watching for input from a terminal. If the method is needed to periodically update some knowledge, this can be undesirable.

• Starvation –

It happens once a method doesn't get service to progress.

• Deadlock –

It happens once 2 processes area units are blocked and thus neither will proceed to execute.

KEY TAKEAWAY

Concurrency is the execution of multiple instruction sequences at a similar time. It happens within the software once there area unit many method threads running in parallel. The running method threads perpetually communicate with one another through shared memory or message passing. Concurrency leads to the sharing of resources result in issues like deadlocks and resource starvation.

The producer shopper downside could be a synchronization downside. There's a hard and fast size buffer and therefore the producer produces things and enters them into the buffer. The patron removes the things from the buffer and consumes them.

A turn out shouldn't produce things into the buffer once the patron is overwhelming AN item from the buffer and the other way around. That the buffer ought to solely be accessed by the producer or shopper at a time.

The producer shopper downside will be resolved victimization semaphores. The codes for the producer and shopper method area unit given as follows −

Producer method

The code that defines the producer method is given below −

Do {

.

. Turn out ITEM

.

Wait(empty);

Wait(mutex);

.

. Place ITEM IN BUFFER

.

Signal(mutex);

Signal(full);

} while(1);

In the on top of code, mutex, empty, and full area unit semaphores. Here mutex is initialized to one, empty is initialized to n (maximum size of the buffer) and full is initialized to zero.

The mutex semaphore ensures mutual exclusion. The empty and full semaphores count the quantity of empty and full areas within the buffer.

After the item is made, the wait operation is applied on empty. This means that the empty house within the buffer has been bated by one. Then wait for operation is applied on mutex so that the shopper method cannot interfere.

After the item is placed within the buffer, the signal operation is applied on mutex and full. The previous indicates that the shopper method will currently act and therefore the latter shows that the buffer is full by one.

Consumer method

The code that defines the patron method is given below:

Do take away ITEM FROM BUFFER

.

Signal(mutex);

Signal(empty);

.

. CONSUME ITEM

.

} while(1);

The wait operation is applied in full. This means that things within the buffer have been bated by one. Then wait for operation is applied on mutex so that the producer method cannot interfere.

Then the item is off from the buffer. After that, the signal operation is applied to mutex and empty. The previous indicates that the shopper method will currently act and therefore the latter shows that the empty house within the buffer has exaggerated by one.

KEY TAKEAWAY

The producer shopper downside could be a synchronization downside. There's a hard and fast size buffer and therefore the producer produces things and enters them into the buffer. The patron removes the things from the buffer and consumes them.

A turn out shouldn't produce things into the buffer once the patron is overwhelming AN item from the buffer and the other way around. That the buffer ought to solely be accessed by the producer or shopper at a time.

During the execution of processes, processes got to enter the important section (or the section of the program shared across processes) now and then for execution. It'd therefore happen that thanks to the execution of multiple processes promptly, the values keep within the important section become inconsistent. In different words, the values rely upon the sequence of execution of directions – additionally called a race condition. The first task of method synchronization is to urge obviate race conditions whereas corporal punishment the important section.

This is primarily achieved through mutual exclusion.

Mutual exclusion may be a property of method synchronization that states that “no 2 processes will exist within the important section at any given purpose of time”. The term was 1st coined by Djikstra. Any method synchronization technique being employed should satisfy the property of mutual exclusion, while not that it'd not be attainable to urge obviate a race condition.

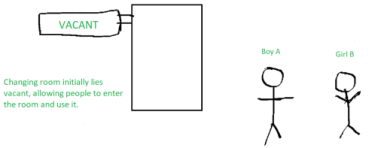

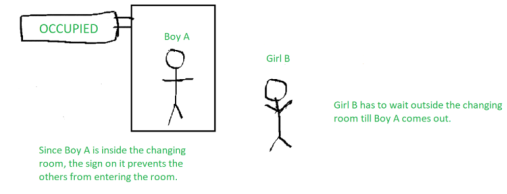

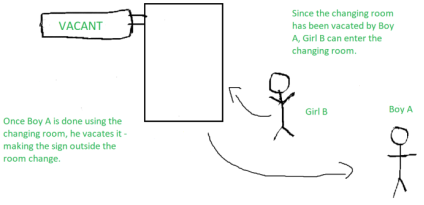

To understand mutual exclusion, let’s take AN example.

Example:

In the garments section of a market, 2 folks square measure buying garments.

Boy A decides upon some garments to shop for and heads to the dynamic area to do them out. Now, whereas boy A is within the dynamic area, there's AN ‘occupied’ register it – indicating that nobody else will are available. Lady B should use the dynamic area too, therefore she should wait until boy A is completed victimization the dynamic area.

Once boy A comes out of the dynamic area, the register changes from ‘occupied’ to ‘vacant’ – indicating that another person will use it. Hence, lady B payoff to using the dynamic area, whereas the sign displays ‘occupied’ once more.

The dynamic area is nothing however the important section, boy A and lady B square measure 2 different processes, whereas the sign outside the dynamic area indicates the method synchronization mechanism being employed.

KEY TAKEAWAY

During the execution of processes, processes got to enter the important section (or the section of the program shared across processes) now and then for execution. It'd therefore happen that thanks to the execution of multiple processes promptly, the values keep within the important section become inconsistent. In different words, the values rely upon the sequence of execution of directions – additionally called a race condition. The first task of method synchronization is to urge obviate race conditions whereas corporal punishment the important section.

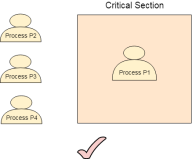

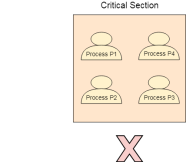

The critical Section is the part of a program that tries to access shared resources. That resource is also any resource in a very pc sort of a memory location, organization, computer hardware, or any IO device.

The vital section can not be dead by quite one method at an identical time; software faces difficulties in permitting and disallowing the processes from coming into the vital section.

The vital section downside is employed to style a group of protocols which might make sure that the Race condition among the processes can ne'er arise.

To synchronize the cooperative processes, our main task is to resolve the vital section downside. We want to supply an answer in such a way that the subsequent conditions are glad.

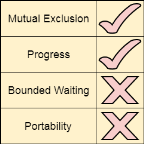

Requirements of Synchronization mechanisms

Fig 3 - Synchronization mechanisms

- Progress

Progress means that if one process doesn't need to execute into a critical section then it should not stop other processes to get into the critical section.

Secondary

- Bounded Waiting

We should be able to predict the waiting time for every process to get into the critical section. The process must not be endlessly waiting for getting into the critical section.

2. Architectural Neutrality

Our mechanism must be architectural natural. It means that if our solution is working fine on one architecture then it should also run on the other ones as well.

KEY TAKEAWAY

The critical Section is the part of a program that tries to access shared resources. That resource is also any resource in a very pc sort of a memory location, organization, computer hardware, or any IO device.

The vital section can not be dead by quite one method at an identical time; software faces difficulties in permitting and disallowing the processes from coming into the vital section.

Dekker’s algorithm

Dekker’s algorithm is the first solution to the critical section problem. There are many versions of these algorithms, the 5th or final version satisfies all the conditions below and is the most efficient among all of them.

The solution to the critical section problem must ensure the following three conditions:

- Mutual Exclusion

- Progress

- Bounded Waiting

First version

- Dekker’s algorithm succeeds to achieve mutual exclusion.

- It uses variables to control thread execution.

- It constantly checks whether a critical section is available.

Example

Main(){

Int thread_no = 1;

StartThreads();

}

Thread1(){

Do {

// entry section

// wait until threadno is 1

While (threado == 2)

;

// critical section

// exit section

// give access to the other thread

Threadno = 2;

// remainder section

} while (completed == false)

}

Thread2(){

Do {

// entry section

// wait until threadno is 2

While (threadno == 1)

;

// critical section

// exit section

// give access to the other thread

Threadno = 1;

// remainder section

} while (completed == false)

}

Problem

The problem of this first version of Dekker’s algorithm is the implementation of lockstep synchronization. It means each thread depends on the other to complete its execution. If one of the two processes completes its execution, then the second process runs. Then it gives access to the completed one and waits for its run. But the completed one would never run and so it would never return access to the second process. Thus the second process waits for an infinite time.

Second Version

In the Second version of Dekker’s algorithm, lockstep synchronization is removed. It is done by using two flags to indicate its current status and updates them accordingly at the entry and exit section.

Example

Main(){

// flags to indicate whether each thread is in

// its critial section or not.

Boolean th1 = false;

Boolean th2 = false;

StartThreads();

}

Thread1(){

Do {

// entry section

// wait until th2 is in its critical section

While (th2 == true);

// indicate thread1 entering its critical section

Th1 = true;

// critical section

// exit section

// indicate th1 exiting its critical section

Th1 = false;

// remainder section

} while (completed == false)

}

Thread2(){

Do {

// entry section

// wait until th1 is in its critical section

While (th1 == true);

// indicate th2 entering its critical section

Th2 = true;

// critical section

// exit section

// indicate th2 exiting its critical section

Th2 = false;

// remainder section

} while (completed == false)

}

Problem

Mutual exclusion is violated in this version. During flag update, if threads are preempted then both the threads enter into the critical section. Once the preempted thread is restarted, also the same can be observed at the start itself, when both the flags are false.

Third Version

In this version, critical section flag is set before entering critical section test to ensure mutual exclusion.

Main(){

// flags to indicate whether each thread is in

// queue to enter its critical section

Boolean th1wantstoenter = false;

Boolean th2wantstoenter = false;

StartThreads();

}

Thread1(){

Do {

Th1wantstoenter = true;

// entry section

// wait until th2 wants to enter

// its critical section

While (th2wantstoenter == true)

;

// critical section

// exit section

// indicate th1 has completed

// its critical section

Th1wantstoenter = false;

// remainder section

} while (completed == false)

}

Thread2(){

Do {

Th2wantstoenter = true;

// entry section

// wait until th1 wants to enter

// its critical section

While (th1wantstoenter == true)

;

// critical section

// exit section

// indicate th2 has completed

// its critical section

Th2wantstoenter = false;

// remainder section

} while (completed == false)

}

Problem

This version failed to solve the problem of mutual exclusion. It also introduces the deadlock possibility, both threads could get flag simultaneously and they will wait for an infinite time.

Fourth Version

In this version of Dekker’s algorithm, it sets the flag to false for a small period to provide control and solves the problem of mutual exclusion and deadlock.

Example

Main(){

// flags to indicate whether each thread is in

// queue to enter its critical section

Boolean th1wantstoenter = false;

Boolean th2wantstoenter = false;

StartThreads();

}

Thread1(){

Do {

Th1wantstoenter = true;

While (th2wantstoenter == true) {

// gives access to other thread

// wait for random amount of time

Th1wantstoenter = false;

Th1wantstoenter = true;

}

// entry section

// wait until th2 wants to enter

// its critical section

// critical section

// exit section

// indicate th1 has completed

// its critical section

Th1wantstoenter = false;

// remainder section

} while (completed == false)

}

Thread2(){

Do {

Th2wantstoenter = true;

While (th1wantstoenter == true) {

// gives access to other thread

// wait for random amount of time

Th2wantstoenter = false;

Th2wantstoenter = true;

}

// entry section

// wait until th1 wants to enter

// its critical section

// critical section

// exit section

// indicate th2 has completed

// its critical section

Th2wantstoenter = false;

// remainder section

} while (completed == false)

}

Problem

Indefinite postponement is the problem of this version. A random amount of time is unpredictable depending upon the situation in which the algorithm is being implemented, hence it is not acceptable in the case of business-critical systems.

Fifth Version (Final Solution)

In this version, flavored thread motion is used to determine entry to the critical section. It provides mutual exclusion and avoiding deadlock, indefinite postponement, or lockstep synchronization by resolving the conflict that which thread should execute first. This version of Dekker’s algorithm provides a complete solution to critical section problems.

Example

Main(){

// to denote which thread will enter next

Int favouredthread = 1;

// flags to indicate whether each thread is in

// queue to enter its critical section

Boolean th1wantstoenter = false;

Boolean th2wantstoenter = false;

StartThreads();

}

Thread1(){

Do {

Thread1wantstoenter = true;

// entry section

// wait until th2 wants to enter

// its critical section

While (th2wantstoenter == true) {

// if 2nd thread is more favored

If (favaouredthread == 2) {

// gives access to other thread

Th1wantstoenter = false;

// wait until this thread is favored

While (favouredthread == 2);

Th1wantstoenter = true;

}

}

// critical section

// favor the 2nd thread

Favouredthread = 2;

// exit section

// indicate th1 has completed

// its critical section

Th1wantstoenter = false;

// remainder section

} while (completed == false)

}

Thread2(){

Do {

Th2wantstoenter = true;

// entry section

// wait until th1 wants to enter

// its critical section

While (th1wantstoenter == true) {

// if 1st thread is more favored

If (favaouredthread == 1) {

// gives access to other thread

Th2wantstoenter = false;

// wait until this thread is favored

While (favouredthread == 1);

Th2wantstoenter = true;

}

}

// critical section

// favour the 1st thread

Favouredthread = 1;

// exit section

// indicate th2 has completed

// its critical section

Th2wantstoenter = false;

// remainder section

} while (completed == false)

}

KEY TAKEAWAY

Dekker’s algorithm

Dekker’s algorithm is the first solution to the critical section problem. There are many versions of this algorithm, the 5th or final version satisfies all the conditions below and is the most efficient among all of them.

The solution to the critical section problem must ensure the following three conditions:

- Mutual Exclusion

- Progress

- Bounded Waiting

This is a software mechanism implemented in user mode. It is a busy waiting solution that can be implemented for only two processes. It uses two variables that are turn variable and interesting variable.

The Code of the solution is given below

- # define N 2

- # define TRUE 1

- # define FALSE 0

- Int interested[N] = FALSE;

- Int turn;

- VoidEntry_Section (int process)

- {

- Int other;

- Other = 1-process;

- Interested[process] = TRUE;

- Turn = process;

- While (interested [other] =True && TURN=process);

- }

- VoidExit_Section (int process)

- {

- Interested [process] = FALSE;

- }

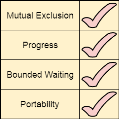

Till now, each of our solutions is affected by one or the other problem. However, the Peterson solution provides you all the requirements such as Mutual Exclusion, Progress, Bounded Waiting, and Portability.

Analysis of Peterson Solution

- VoidEntry_Section (int process)

- {

- 1. int other;

- 2. other = 1-process;

- 3. interested[process] = TRUE;

- 4. turn = process;

- 5. while (interested [other] =True && TURN=process);

- }

- Critical Section

- VoidExit_Section (int process)

- {

- 6. interested [process] = FALSE;

- }

This is a two-process solution. Let us consider two cooperative processes P1 and P2. The entry section and exit section are shown below. Initially, the value of interesting variables and turn variable is 0.

Initially, process P1 arrives and wants to enter into the critical section. It sets its interesting variable to True (instruction line 3) and also sets turn to 1 (line number 4). Since the condition given in line number 5 is completely satisfied by P1 therefore it will enter the critical section.

- P1 → 1 2 3 4 5 CS

Meanwhile, Process P1 got preempted and process P2 got scheduled. P2 also wants to enter the critical section and executes instructions 1, 2, 3, and 4 of the entry section. On instruction 5, it got stuck since it doesn't satisfy the condition (the value of the other interesting variable is still true). Therefore it gets into the busy waiting.

- P2 → 1 2 3 4 5

P1 again got scheduled and finish the critical section by executing instruction no. 6 (setting an interesting variable to false). Now if P2 checks then it is going to satisfy the condition since the other process's interested variable becomes false. P2 will also get to enter the critical section.

- P1 → 6

- P2 → 5 CS

Any of the processes may enter the critical section multiple numbers of times. Hence the procedure occurs in the cyclic order.

Mutual Exclusion

The method provides a mutual exclusion for sure. In the entry section, the while condition involves the criteria for two variables, therefore, a process cannot enter the critical section until the other process is interesting and the process is the last one to update the turn variable.

Progress

An uninterested process will never stop the other interesting process from entering the critical section. If the other process is also interested then the process will wait.

Bounded waiting

The interesting variable mechanism failed because it was not providing bounded waiting. However, in Peterson's solution, A deadlock can never happen because the process which first sets the turn variable will enter in the critical section for sure. Therefore, if a process is preempted after executing line number 4 of the entry section then it will get into the critical section in its next chance.

Portability

This is the complete software solution and therefore it is portable on every hardware.

KEY TAKEAWAY

This is a software mechanism implemented in user mode. It is a busy waiting solution that can be implemented for only two processes. It uses two variables that are turn variable and interesting variable.

Semaphores square measure number variables that square measure accustomed to solve the important section downside by exploitation 2 atomic operations, wait and signal that square measure used for method synchronization.

The definitions of wait and signal square measure as follows −

• Wait

The wait operation decrements the worth of its argument S if it's positive. If S is negative or zero, then no operation is performed.

Wait(S)

Whereas (S<=0);

S--;

}

• Signal

The signal operation increments the worth of its argument S.

Signal(S)

Types of Semaphores

There square measure 2 main kinds of semaphores i.e. reckoning semaphores and binary semaphores. Details concerning these square measure given as follows –

• Counting Semaphores

These square measure number worth semaphores Associate in Nursingd has an unrestricted worth domain. These semaphores square measure accustomed coordinate the resource access, wherever the semaphore count is that the range of accessible resources. If the resources square measure extra, the semaphore count is mechanically incremented and if the resources square measure is removed, the count is decremented.

• Binary Semaphores

The binary semaphores square measure like reckoning semaphores however their worth is restrict-ed to zero and one. The wait operation solely works once the semaphore is one and therefore the signal operation succeeds once the semaphore is zero. It's typically easier to implement binary semaphores than reckoning semaphores.

Advantages of Semaphores

Some of the benefits of semaphores square measure as follows −

• Semaphores permit only 1 method into the important section. They follow the mutual Pauli exclusion principle strictly and square measure far more economical than another way of synchronization.

• There isn't any resource wastage as a result of busy waiting in semaphores as processor time isn't wasted unnecessarily to ascertain if a condition is consummated to permit a method to access the important section.

• Semaphores square measure enforced within the machine freelance code of the microkernel. So that they square measure machine freelance.

Disadvantages of Semaphores

Some of the disadvantages of semaphores square measure as follows −

• Semaphores square measure difficult therefore the wait and signal operations should be implemented within the correct order to stop deadlocks.

• Semaphores square measure impractical for last scale use as their use ends up in the loss of modularity. This happens as a result of the wait and signal operations stop the creation of a structured layout for the system.

• Semaphores might cause a priority inversion wherever low priority processes might access the important section 1st and high priority processes later.

KEY TAKEAWAY

Semaphores square measure number variables that square measure accustomed to solve the important section downside by exploitation 2 atomic operations, wait and signal that square measure used for method synchronization.

Modification within the assembly code

In the lock variable mechanism, the general method reads the recent worth of the lock variable and enters the essential section. Thanks to this reason, quite one method may get into the essential section. However, the code shown within half one among the subsequent section will be replaced with the code shown within half 2. This does not affect the rule however, by doing this, we can manage to produce the mutual exclusion to some extent however not fully.

In the updated version of code, {the worth|the worth} of Lock is loaded into the native register R0 so the value of lock is about to one.

However, in step 3, the previous worth of lock (that is currently kept into R0) is compared with zero. If this can be zero then the method can merely enter into the essential section otherwise can wait by capital punishment unceasingly within the loop.

The advantage of setting the lock now to one by the method itself is that currently, the method that enters into the essential section carries the updated worth of lock variable that's one.

In this case, once it gets preempted and regular once more then conjointly it'll not enter the essential section no matter this worth of the lock variable because it already is aware of what the updated worth of the lock variable is.

Section 1 Section a pair of

1. Load Lock, R0

2. CMP R0, #0

3. JNZ step1

4. Store #1, Lock 1. Load Lock, R0

2. Store #1, Lock

3. CMP R0, #0

4. JNZ steps 1

TSL Instruction

However, the answer provided within the on top of phase provides mutual exclusion to some extent however it does not make certain that the mutual exclusion can forever be there. There's a break of getting quite one method within the essential section.

What if the method gets preempted simply when capital punishment the primary instruction of the assembly code written in section 2? therein case, it'll carry the recent worth of lock variable with it and it'll enter into the essential section no matter knowing this worth of lock variable. This might create the 2 processes gift within the essential section at constant time.

To get eliminate this drawback, we've to form positive that the preemption should not ensue simply when loading the previous worth of lock variable and before setting it to one. The matter will be solved if we will} be able to merge the primary 2 directions.

To deal with the matter, the software provides a special instruction referred to as take a look at Set Lock (TSL) instruction that merely masses the worth of lock variable into the native register R0 and sets it to one at the same time

The method that executes the TSL initial can enter into the essential section and no alternative method subsequently will enter till the primary process comes out. No method will execute the essential section even within the case of preemption of the primary method.

The assembly code of the answer can appear as if following.

1. TSL Lock, R0

2. CMP R0, #0

3. JNZ steps 1

Let's examine TSL on the premise of the four conditions.

• Mutual Exclusion

Mutual Exclusion is secured in the TSL mechanism since a method will ne'er be preempted simply before setting the lock variable. Just one method will see the lock variable as zero at a specific time and that is why the mutual exclusion is secured.

• Progress

According to the definition of progress, a method that does not wish to enter within the essential section shouldn't stop alternative processes to induce into it. In the TSL mechanism, a method can execute the TSL instruction only if it needs to induce into the essential section. The worth of the lock can forever be zero if no method does not wish to enter into the essential section thence the progress is often guar-anteed in TSL.

• Bounded Waiting

Bounded Waiting isn't secured in TSL. Some methods won't get an opportunity for this long. We tend to cannot predict for a method that it'll positively get an opportunity to enter in the essential section when an exact time.

• Architectural Neutrality

TSL does not give discipline Neutrality. It depends on the hardware platform. TSL instruction is provided by the software. Some platforms won't give that. Thence it's not disciplined natural.

KEY TAKEAWAY

Modification within the assembly code

In the lock variable mechanism, the general method reads the recent worth of the lock variable and enters the essential section. Thanks to this reason, quite one method may get into the essential section. However, the code shown within half one among the subsequent section will be replaced with the code shown within half 2. This does not affect the rule however, by doing this, we can manage to produce the mutual exclusion to some extent however not fully.

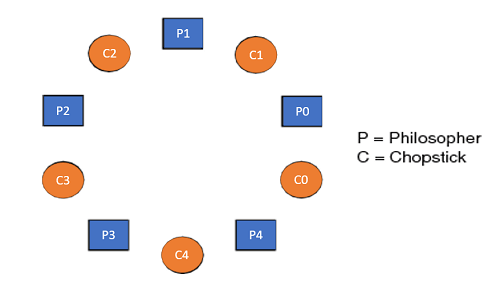

The dining philosopher's problem is the classical problem of synchronization which says that Five philosophers are sitting around a circular table and their job is to think and eat alternatively. A bowl of noodles is placed at the center of the table along with five chopsticks for each of the philosophers. To eat a philosopher needs both their right and a left chopstick. A philosopher can only eat if both immediate left and right chopsticks of the philosopher is available. In case if both immediate left and right chopsticks of the philosopher are not available then the philosopher puts down their (either left or right) chopstick and starts thinking again.

The dining philosopher demonstrates a large class of concurrency control problems hence it's a classic synchronization problem.

Fig 5 - Five Philosophers sitting around the table

Dining Philosophers Problem- Let's understand the Dining Philosophers Problem with the below code, we have used fig 1 as a reference to make you understand the problem exactly. The five Philosophers are represented as P0, P1, P2, P3, and P4 and five chopsticks by C0, C1, C2, C3, and C4.

- Void Philosopher

- {

- While(1)

- {

- Take_chopstick[i];

- Take_chopstick[ (i+1) % 5] ;

- . .

- . EATING THE NOODLE

- .

- Put_chopstick[i] );

- Put_chopstick[ (i+1) % 5] ;

- .

- . THINKING

- }

- }

Let's discuss the above code:

Suppose Philosopher P0 wants to eat, it will enter in Philosopher() function, and execute take_chopstick[i]; by doing this it holds C0 chopstick after that it execute take_chopstick[ (i+1) % 5]; by doing this it holds C1 chopstick( since i =0, therefore (0 + 1) % 5 = 1)

Similarly suppose now Philosopher P1 wants to eat, it will enter in Philosopher() function, and execute take_chopstick[i]; by doing this it holds C1 chopstick after that it execute take_chopstick[ (i+1) % 5]; by doing this it holds C2 chopstick( since i =1, therefore (1 + 1) % 5 = 2)

But Practically Chopstick C1 is not available as it has already been taken by philosopher P0, hence the above code generates problems and produces race conditions.

The solution to the Dining Philosophers Problem

We use a semaphore to represent a chopstick and this truly acts as a solution to the Dining Philosophers Problem. Wait and Signal operations will be used for the solution of the Dining Philosophers Problem, for picking a chopstick wait operation can be executed while for releasing a chopstick signal semaphore can be executed.

Semaphore: A semaphore is an integer variable in S, that apart from initialization is accessed by only two standard atomic operations - wait and signal, whose definitions are as follows:

- 1. wait( S )

- {

- While( S <= 0) ;

- S--;

- }

- 2. signal( S )

- {

- S++;

- }

From the above definitions of wait, it is clear that if the value of S <= 0 then it will enter into an infinite loop(because of the semicolon; after while loop). Whereas the job of the signal is to increment the value of S.

The structure of the chopstick is an array of a semaphore which is represented as shown below -

- Semaphore C[5];

Initially, each element of the semaphore C0, C1, C2, C3, and C4 are initialized to 1 as the chopsticks are on the table and not picked up by any of the philosophers.

Let's modify the above code of the Dining Philosopher Problem by using semaphore operations wait and signal, the desired code looks like

- Void Philosopher

- {

- While(1)

- {

- Wait( take_chopstickC[i] );

- Wait( take_chopstickC[(i+1) % 5] ) ;

- . .

- . EATING THE NOODLE

- .

- Signal( put_chopstickC[i] );

- Signal( put_chopstickC[ (i+1) % 5] ) ;

- .

- . THINKING

- }

- }

In the above code, first wait operation is performed on take_chopstickC[i] and take_chopstickC [ (i+1) % 5]. This shows philosopher i have picked up the chopsticks from its left and right. The eating function is performed after that.

On completion of eating by philosopher i the, signal operation is performed on take_chopstickC[i] and take_chopstickC [ (i+1) % 5]. This shows that the philosopher i have eaten and put down both the left and right chopsticks. Finally, the philosopher starts thinking again.

Let's understand how the above code is giving a solution to the dining philosopher problem?

Let value of i = 0( initial value ), Suppose Philosopher P0 wants to eat, it will enter in Philosopher() function, and execute Wait( take_chopstickC[i] ); by doing this it holds C0 chopstick and reduces semaphore C0 to 0, after that it execute Wait( take_chopstickC[(i+1) % 5] ); by doing this it holds C1 chopstick( since i =0, therefore (0 + 1) % 5 = 1) and reduces semaphore C1 to 0

Similarly, suppose now Philosopher P1 wants to eat, it will enter in Philosopher() function, and execute Wait( take_chopstickC[i] ); by doing this it will try to hold C1 chopstick but will not be able to do that, since the value of semaphore C1 has already been set to 0 by philosopher P0, therefore it will enter into an infinite loop because of which philosopher P1 will not be able to pick chopstick C1 whereas if Philosopher P2 wants to eat, it will enter in Philosopher() function, and execute Wait( take_chopstickC[i] ); by doing this it holds C2 chopstick and reduces semaphore C2 to 0, after that, it executes Wait( take_chopstickC[(i+1) % 5] ); by doing this it holds C3 chopstick( since i =2, therefore (2 + 1) % 5 = 3) and reduces semaphore C3 to 0.

Hence the above code is providing a solution to the dining philosopher problem, A philosopher can only eat if both immediate left and right chopsticks of the philosopher are available else the philosopher needs to wait. Also at one go, two independent philosophers can eat simultaneously (i.e., philosopher P0 and P2, P1 and P3 & P2 and P4 can eat simultaneously as all are the independent processes and they are following the above constraint of dining philosopher problem)

The drawback of the above solution of the dining philosopher problem

From the above solution of the dining philosopher problem, we have proved that no two neighboring philosophers can eat at the same point in time. The drawback of the above solution is that this solution can lead to a deadlock condition. This situation happens if all the philosophers pick their left chopstick at the same time, which leads to the condition of deadlock and none of the philosophers can eat.

To avoid deadlock, some of the solutions are as follows -

- The maximum number of philosophers on the table should not be more than four, in this case, chopstick C4 will be available for philosopher P3, so P3 will start eating and after the finish of his eating procedure, he will put down both the chopstick C3 and C4, i.e. semaphore C3 and C4 will now be incremented to 1. Now philosopher P2 which was holding chopstick C2 will also have chopstick C3 available, hence similarly, he will put down his chopstick after eating and enable other philosophers to eat.

- A philosopher at an even position should pick the right chopstick and then the left chopstick while a philosopher at an odd position should pick the left chopstick and then the right chopstick.

- Only in case if both the chopsticks ( left and right ) are available at the same time, only then a philosopher should be allowed to pick their chopsticks

- All the four starting philosophers ( P0, P1, P2, and P3) should pick the left chopstick and then the right chopstick, whereas the last philosopher P4 should pick the right chopstick and then the left chopstick. This will force P4 to hold his right chopstick first since the right chopstick of P4 is C0, which is already held by philosopher P0 and its value is set to 0, i.e C0 is already 0, because of which P4 will get trapped into an infinite loop and chopstick C4 remains vacant. Hence philosopher P3 has both left C3 and right C4 chopstick available, therefore it will start eating and will put down both chopsticks once finishes and let others eat which removes the problem of deadlock.

The design of the problem was to illustrate the challenges of avoiding deadlock, a deadlock state of a system is a state in which no progress of the system is possible. Consider a proposal where each philosopher is instructed to behave as follows:

- The philosopher is instructed to think till the left fork is available, when it is available, hold it.

- The philosopher is instructed to think till the right fork is available, when it is available, hold it.

- The philosopher is instructed to eat when both forks are available.

- Then, put the right fork down first

- Then, put the left fork down next

- Repeat from the beginning.

KEY TAKEAWAY

The dining philosopher's problem is the classical problem of synchronization which says that Five philosophers are sitting around a circular table and their job is to think and eat alternatively. A bowl of noodles is placed at the center of the table along with five chopsticks for each of the philosophers. To eat a philosopher needs both their right and a left chopstick. A philosopher can only eat if both immediate left and right chopsticks of the philosopher is available. In case if both immediate left and right chopsticks of the philosopher are not available then the philosopher puts down their (either left or right) chopstick and starts thinking again.

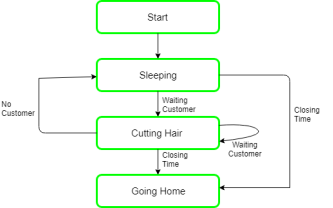

Problem: The analogy is predicated upon a theoretical barber look with one barber. There's a barber look that has one barber, one chair, and n chairs for waiting for patrons if there are any to sit down on the chair.

• If there's no client, then the barber sleeps in his chair.

• When a client arrives, he has got to awaken the barber.

• If there are many purchasers and therefore the barber is cutting a customer’s hair, then the remaining customers either wait if their ar empty chairs within the {waiting room|lounge|waiting ara|room} or they leave if no chairs are empty.

Fig 6 - example

Solution: the answer to the current downside includes 3 semaphores. First is for the customer that counts the quantity of consumers' gift within the room (customer within the chair isn't enclosed as a result of he's not waiting). Second, the barber zero or one is employed to inform whether or not the barber is idle or is functioning, and therefore the third mutex is employed to supply the mutual exclusion that is needed for the method to execute. Within the solution, the client has the record of the quantity {of clients|of consumers|of shoppers} waiting within the room if the quantity of consumers is adequate to the number of chairs within the room then the approaching customer leaves the store.

When the barber shows up within the morning, he executes the procedure barber, inflicting him to the dam on the semaphore customers as a result of it's at the start zero. Then the barber goes to sleep till the primary client comes up.

When a client arrives, he executes the client procedure the client acquires the mutex for coming into the crucial region, if another client enters thenceforth, the other won't be able to something till the primary one has free the mutex. The client then checks the chairs within the {waiting room|lounge|waiting ara|room} if waiting customers are less then the number of chairs then he sits otherwise he leaves and releases the mutex.

If the chair is accessible then the client sits within the room and increments the variable waiting price and additionally will increase the customer’s semaphore this wakes up the barber if he's sleeping.

At now, the client and barber are each awake, and therefore the barber is prepared to relinquish that person a haircut. Once the haircut is over, the client exits the procedure, and if there aren't any customers in the room barber sleeps.

Fig 7 - example

Algorithm for Sleeping Barber problem:

Semaphore Customers = 0; Semaphore Barber = 0; Mutex Seats = 1; Int FreeSeats = N;

Barber { While(true) { /* waits for a customer (sleeps). */ Down(Customers);

/* mutex to protect the number of available seats.*/ Down(Seats);

/* a chair gets free.*/ FreeSeats++;

/* bring customer for haircut.*/ Up(Barber);

/* release the mutex on the chair.*/ Up(Seats); /* barber is cutting hair.*/ } }

Customer { While(true) { /* protects seats so only 1 customer tries to sit In a chair if that's the case.*/ Down(Seats); //This line should not be here. If(FreeSeats > 0) {

/* sitting down.*/ FreeSeats--;

/* notify the barber. */ Up(Customers);

/* release the lock */ Up(Seats);

/* wait in the waiting room if barber is busy. */ Down(Barber); // customer is having hair cut } else { /* release the lock */ Up(Seats); // customer leaves } } } |

KEY TAKEAWAY

Problem: The analogy is predicated upon a theoretical barber look with one barber. There's a barber look that has one barber, one chair, and n chairs for waiting for patrons if there are any to sit down on the chair.

• If there's no client, then the barber sleeps in his chair.

• When a client arrives, he has got to awaken the barber.

• If there are many purchasers and therefore the barber is cutting a customer’s hair, then the remaining customers either wait if there are empty chairs within the {waiting room|lounge|waiting ara|room} or they leave if no chairs are empty.

Inter-process communication (IPC) is a system that permits the trading of data between processes.

By providing a user with a lot of programming interfaces, IPC enables a software engineer to compose the activities among various processes.

IPC enables one application to control another application, by empowering data sharing without interference.

IPC empowers data communication by enabling processes to utilize segments, semaphores, and different strategies to share memory and data.

IPC encourages effective message move between processes.

The base of IPC depends on Task Control Architecture (TCA).

It is an adaptable method that can send and get variable length clusters, data structures, and lists.

There are various explanations behind giving a domain or circumstance which permits process co-operation:

Data sharing: Since certain users might be interested in a similar piece of data (for instance, a shared file), you should give a circumstance to enabling simultaneous access to that data.

Computation speedup: If you need specific work to run quickly, you should break it into sub-tasks where every one of them will get executed in parallel with other tasks. Note that such an accelerate can be achieved just when the computer has compound or different processing components like CPUs or I/O channels.

Modularity: You might need to build the system in a modular manner by partitioning the system functions into split processes or threads.

Convenience: Even a single user will be able to work on many tasks simultaneously. For instance, a user might be editing, formatting, printing, and compiling in parallel.

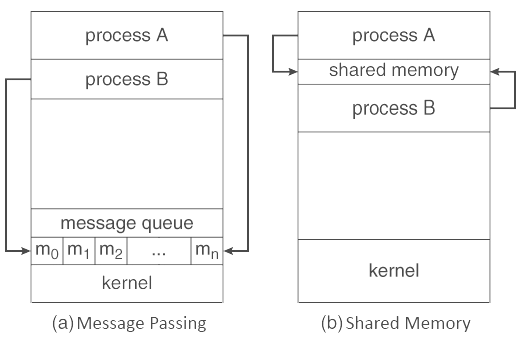

Cooperating with different processes requires an interprocess communication (IPC) strategy which will enable them to exchange data with different data. There are two essential models of interprocess communication:

Shared memory and

Message passing.

The figure below exhibits the shared memory model and the message passing model is shown below:

Fig 8 - Models of Interprocess Communication

Shared Memory Model

In the shared-memory model, an area of memory that is shared by cooperating processes gets set up.

Processes can be then ready to share data by reading and writing every data to the shared region.

Shared memory is the memory that can be at the same time access by different processes.

This is done as such that the processes can speak with one another.

All POSIX systems, just as Windows operating systems utilize shared memory.

Advantages of Shared Memory Model

Memory communication is quicker on the shared memory model when contrasted with the message passing model on a similar machine.

Disadvantages of Shared Memory Model

Every one of the processes that utilize the shared memory model needs to ensure that they are not writing to the same memory area.

A shared memory model may make issues, for example, synchronization and memory protection that should be tended to.

Message Passing Model

In the message-passing structure, communication happens by the method of messages exchange among the cooperating processes.

Various processes can read and write data to the message queue without being associated with one another.

Messages are stored on the queue until their respective recipient recovers them.

Message queues are very helpful for interprocess communication and are utilized by most operating systems.

Advantages of Messaging Passing Model

The message-passing model is a lot simpler to design than the shared memory model.

The disadvantage of Messaging Passing Model

The message-passing model has more slow communication than the shared memory model because the connection implementation requires more time.

KEY TAKEAWAY

Inter-process communication (IPC) is a system that permits the trading of data between processes.

By providing a user with a lot of programming interfaces, IPC enables a software engineer to compose the activities among various processes.

IPC enables one application to control another application, by empowering data sharing without interference.

Definition

The process scheduling is the activity of the process manager that handles the removal of the running process from the CPU and the selection of another process based on a particular strategy.

Process scheduling is an essential part of Multiprogramming operating systems. Such operating systems allow more than one process to be loaded into the executable memory at a time and the loaded process shares the CPU using time multiplexing.

Process Scheduling Queues

The OS maintains all PCBs in Process Scheduling Queues. The OS maintains a separate queue for each of the process states and PCBs of all processes in the same execution state are placed in the same queue. When the state of a process is changed, its PCB is unlinked from its current queue and moved to its new state queue.

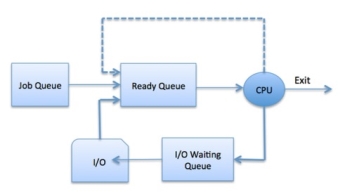

The Operating System maintains the following important process scheduling queues −

- Job queue − This queue keeps all the processes in the system.

- Ready queue − This queue keeps a set of all processes residing in main memory, ready and waiting to execute. A new process is always put in this queue.

- Device queues − The processes which are blocked due to the unavailability of an I/O device constitute this queue.

Fig 9 – Process state

The OS can use different policies to manage each queue (FIFO, Round Robin, Priority, etc.). The OS scheduler determines how to move processes between the ready and run queues which can only have one entry per processor core on the system; in the above diagram, it has been merged with the CPU.

Two-State Process Model

The two-state process model refers to running and non-running states which are described below −

S.N. | State & Description |

1 | Running When a new process is created, it enters into the system as in the running state. |

2 | Not Running Processes that are not running are kept in the queue, waiting for their turn to execute. Each entry in the queue is a pointer to a particular process. The queue is implemented by using a linked list. The use of dispatcher is as follows. When a process is interrupted, that process is transferred to the waiting queue. If the process has been completed or aborted, the process is discarded. In either case, the dispatcher then selects a process from the queue to execute. |

Schedulers

Schedulers are special system software that handles process scheduling in various ways. Their main task is to select the jobs to be submitted into the system and to decide which process to run. Schedulers are of three types −

- Long-Term Scheduler

- Short-Term Scheduler

- Medium-Term Scheduler

Long Term Scheduler

It is also called a job scheduler. A long-term scheduler determines which programs are admitted to the system for processing. It selects processes from the queue and loads them into memory for execution. Process loads into the memory for CPU scheduling.

The primary objective of the job scheduler is to provide a balanced mix of jobs, such as I/O bound and processor bound. It also controls the degree of multiprogramming. If the degree of multiprogramming is stable, then the average rate of process creation must be equal to the average departure rate of processes leaving the system.

On some systems, the long-term scheduler may not be available or minimal. Time-sharing operating systems have no long term scheduler. When a process changes the state from new to ready, then there is the use of a long-term scheduler.

Short Term Scheduler

It is also called a CPU scheduler. Its main objective is to increase system performance in accordance with the chosen set of criteria. It is the change of ready state to the running state of the process. CPU scheduler selects a process among the processes that are ready to execute and allocates CPU to one of them.

Short-term schedulers, also known as dispatchers, decide which process to execute next. Short-term schedulers are faster than long-term schedulers.

Medium Term Scheduler

Medium-term scheduling is a part of swapping. It removes the processes from the memory. It reduces the degree of multiprogramming. The medium-term scheduler is in charge of handling the swapped out-processes.

A running process may become suspended if it makes an I/O request. A suspended process cannot make any progress towards completion. In this condition, to remove the process from memory and make space for other processes, the suspended process is moved to secondary storage. This process is called swapping, and the process is said to be swapped out or rolled out. Swapping may be necessary to improve the process mix.

Comparison among Scheduler

S.N. | Long-Term Scheduler | Short-Term Scheduler | Medium-Term Scheduler |

1 | It is a job scheduler | It is a CPU scheduler | It is a process swapping scheduler. |

2 | Speed is lesser than short term scheduler | Speed is fastest among the other two | Speed is in between both short and long term scheduler. |

3 | It controls the degree of multiprogramming | It provides lesser control over the degree of multiprogramming | It reduces the degree of multiprogramming. |

4 | It is almost absent or minimal in a time-sharing system | It is also minimal in a time-sharing system | It is a part of Time-sharing systems. |

5 | It selects processes from the pool and loads them into memory for execution | It selects those processes which are ready to execute | It can re-introduce the process into memory and execution can be continued. |

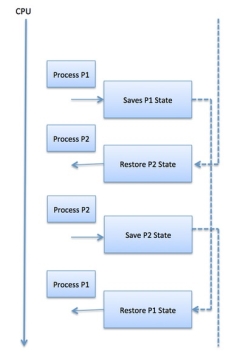

Context Switch

A context switch is a mechanism to store and restore the state or context of a CPU in a Process Control block so that a process execution can be resumed from the same point at a later time. Using this technique, a context switcher enables multiple processes to share a single CPU. Context switching is an essential part of a multitasking operating system's features.

When the scheduler switches the CPU from executing one process to execute another, the state from the current running process is stored in the process control block. After this, the state for the process to run next is loaded from its PCB and used to set the PC, registers, etc. At that point, the second process can start executing.

Fig 10 - Example

Context switches are computationally intensive since register and memory state must be saved and restored. To avoid the amount of context switching time, some hardware systems employ two or more sets of processor registers. When the process is switched, the following information is stored for later use.

- Program Counter

- Scheduling information

- Base and limit register value

- Currently used register

- Changed State

- I/O State information

- Accounting information

KEY TAKEAWAY

Definition

The process scheduling is the activity of the process manager that handles the removal of the running process from the CPU and the selection of another process based on a particular strategy.

Process scheduling is an essential part of Multiprogramming operating systems. Such operating systems allow more than one process to be loaded into the executable memory at a time and the loaded process shares the CPU using time multiplexing.

References:

1. Silberschatz, Galvin and Gagne, “Operating Systems Concepts”, Wiley

2. Sibsankar Halder and Alex A Aravind, “Operating Systems”, Pearson Education

3. Harvey M Dietel, “ An Introduction to Operating System”, Pearson Education

4. D M Dhamdhere, “Operating Systems: A Concept-based Approach”, 2nd Edition,

5. TMH 5. William Stallings, “Operating Systems: Internals and Design Principles ”, 6th Edition, Pearson Education