Unit - 1

An Overview of AI

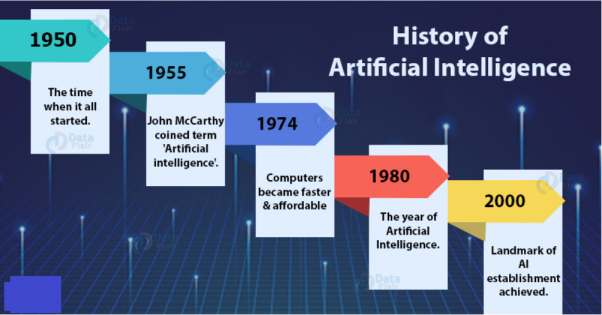

Figure 1. Evolution of AI

John McCarthy, widely regarded as the founder of Artificial Intelligence, introduced the term ‘Artificial Intelligence’ in 1955.

McCarthy, along with Alan Turing, Allen Newell, Herbert A. Simon, and Marvin Minsky, is counted among the founders of AI. Turing was the first to suggest that if humans use available information and reasoning to solve problems and make decisions, machines could do the same.

1974 – Computers flourished!

Over time, computers came into wider use. They became faster and more affordable, and could store more information. Researchers set ambitious goals for them: abstract thinking, self-recognition, and natural language processing.

1980 – The year of AI

From 1980 onward, AI research was reignited by an expansion of funding and of the algorithmic toolkit. Techniques now described as deep learning allowed computers to learn from experience.

2000s – Reaching the Landmark

After many failed attempts, the technology had been successfully established, but it was in the 2000s that the landmark goals were achieved. During that period, AI thrived despite a lack of government funding and public attention.

At present, AI collects and organizes large amounts of information to produce insights and predictions that are beyond what humans can achieve by manual processing. This increases organizational efficiency, reduces the likelihood of mistakes, and helps detect irregular patterns. It can also provide real-time business solutions, for example in security.

There are four types of Artificial Intelligence: Reactive Machines, Limited Memory, Theory of Mind, and Self-Awareness.

Reactive Machines

Reactive machines are the most basic form of AI application. A well-known example is Deep Blue, IBM’s chess-playing supercomputer, which beat the then world chess champion Garry Kasparov in 1997. Such a machine decides its next move based on the current position and the move made by the opponent, without drawing on memories of past games.
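The idea of choosing a move purely from the current state can be illustrated with a much smaller game than chess. The following is a minimal sketch, assuming a tic-tac-toe board stored as a list of nine cells; the names `reactive_move` and `completing_cell` are made up for this illustration and have nothing to do with how Deep Blue was actually built.

```python
from typing import List, Optional

# All eight winning lines on a 3x3 board, cells indexed 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def completing_cell(board: List[str], player: str) -> Optional[int]:
    """Return a free cell that would complete a line for `player`, if any."""
    for line in LINES:
        marks = [board[i] for i in line]
        if marks.count(player) == 2 and marks.count(" ") == 1:
            return line[marks.index(" ")]
    return None


def reactive_move(board: List[str], me: str = "X", opponent: str = "O") -> int:
    """Choose a move from the current board only; nothing is remembered.

    Assumes the board has at least one free cell (marked with a space).
    """
    win = completing_cell(board, me)
    if win is not None:
        return win                      # take a winning move if one exists
    block = completing_cell(board, opponent)
    if block is not None:
        return block                    # otherwise block the opponent
    return board.index(" ")             # otherwise take the first free cell


# The opponent threatens the top row, so the agent reacts by blocking cell 2.
board = ["O", "O", " ",
         " ", "X", " ",
         " ", " ", " "]
move = reactive_move(board)
```

The function keeps no state between calls: given the same board it always returns the same move, which is the defining property of a reactive machine.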

Limited Memory

These machines form the second class (Class II) of AI applications. Self-driving cars are a typical example. Over time, they are fed data about other cars’ speed and direction, lane markings, traffic lights, road curves, and other important factors, and are trained on it.

Theory of Mind

Theory of mind is the concept that machines will be able to understand human emotions and thoughts, and how people act on them. If AI-powered machines are ever to mingle and move around with us, understanding human behaviour is imperative, and so is reacting to that behaviour appropriately.

Self-Awareness

These machines are an extension of the Class III type of AI, one step beyond understanding human emotions. In this phase, AI teams would build machines with self-awareness programmed into them. Building self-aware machines seems far-fetched from where we stand today. For example, when someone honks from behind, such a machine should be able to sense the emotion involved, and so understand how it feels to others when it honks at someone itself.

Engineers who work with AI need to know three basic programming styles: function-based programming, object-oriented programming, and rule-based programming. They should also be familiar with Lisp and Prolog.
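Of the three styles, rule-based programming is probably the least familiar. The following is a minimal sketch of the idea, written in Python rather than Prolog for consistency with the other examples in these notes. The `forward_chain` function and the example facts and rules are hypothetical and serve only as illustration.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is a pair: (set of conditions that must all hold, conclusion).
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply the rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire the rule if all its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


rules = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "can_fly"}), "can_migrate"),
]
derived = forward_chain({"has_feathers", "can_fly"}, rules)
# derived now also contains "is_bird" and "can_migrate"
```

The program states what follows from what, and a generic engine decides the order in which rules fire. This separation of knowledge from control is the essence of the rule-based style.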

AI is a programming style that has much in common with engineering practice:

Generative AI

Recent advances in AI have allowed many companies to develop algorithms and tools to generate artificial 3D and 2D images automatically. These algorithms essentially form Generative AI, which enables machines to use things like text, audio files, and

Generative AI also builds on neural networks, in particular generative adversarial networks (GANs). GANs are used for many of the same applications as generative AI in general, but they are also notorious for being misused to create deepfakes for cybercrime. In research, GANs are used for astronomical simulations, interpreting large data sets, and much more.

Federated Learning

Federated learning is a learning technique that allows users to collectively reap the benefits of shared models trained on the rich data held on their devices, without the need to store that data centrally.

Data is essential to training machine learning models. The conventional process involves setting up servers where models are trained on data, typically via a cloud computing platform. Federated learning instead brings the machine learning model to the data source (the edge nodes) rather than bringing the data to the model. It links multiple computational devices into a decentralized system in which the individual devices that collect data help train the model. This enables the devices to collaboratively learn a shared prediction model while all the training data stays on the individual devices.
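One common way to realise this is weighted averaging of locally trained parameters, often called federated averaging. The text above does not name a specific algorithm, so treat the following as a minimal sketch under that assumption. The model is a single weight `w` fitted to `y ≈ w * x`, and the client data sets, learning rate, and function names are invented for illustration.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave the client


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps for y ≈ w * x on one client's data."""
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, clients: List[Dataset]) -> float:
    """One round: each client trains locally, the server averages the weights."""
    total = sum(len(d) for d in clients)
    updates = [(local_update(w_global, d), len(d)) for d in clients]
    # Clients with more samples contribute proportionally more to the average.
    return sum(w * n for w, n in updates) / total


# Three hypothetical devices, each holding private samples of y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(1.5, 3.0), (2.5, 5.0), (0.5, 1.0)],
]
w = 0.0
for _ in range(50):
    w = federated_round(w, clients)
# w ends up close to 2.0 although no raw data was ever pooled.
```

Only the model weight travels between the devices and the server; the `(x, y)` samples are read solely inside `local_update`, which stands in for code running on the device itself.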

Neural Network Compression

A key disadvantage of neural networks is that they are computationally and memory intensive, which makes them difficult to deploy on embedded systems with limited hardware resources. Further, as deep neural networks (DNNs) grow to carry out more complex computation, their storage needs also rise. To address these issues, researchers have come up with a family of techniques called neural network compression.

Generally, a neural network contains far more weights, represented at higher precision, than the specific task it is trained for requires. To bring real-time intelligence to edge applications, neural network models must be smaller. To compress models, researchers rely on the following methods: parameter pruning and sharing, quantization, low-rank factorization, transferred or compact convolutional filters, and knowledge distillation.
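Two of the methods listed above, pruning and quantization, are simple enough to sketch on a plain list of weights. The following is an illustrative sketch only. It assumes magnitude-based pruning and linear 8-bit quantization, which are common variants but not the only ones, and the function names are hypothetical.

```python
from typing import List, Tuple


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Zero out roughly the `sparsity` fraction of weights with smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Magnitude of the k-th smallest weight; ties at the threshold are pruned too.
    threshold = sorted(abs(w) for w in weights)[k - 1]
    return [0.0 if abs(w) <= threshold else w for w in weights]


def quantize_uint8(weights: List[float]) -> Tuple[List[int], float, float]:
    """Map floats to integer codes in 0..255 using a linear scheme."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if all weights are equal
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantize(codes: List[int], scale: float, lo: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [c * scale + lo for c in codes]


weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02]
pruned = prune_by_magnitude(weights, sparsity=0.5)
codes, scale, lo = quantize_uint8(weights)
restored = dequantize(codes, scale, lo)
```

Pruning removes weights that contribute little, so the result can be stored sparsely. Quantization stores each remaining weight in one byte instead of four or eight, at the cost of a rounding error of at most about half of `scale` per weight.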

Will AI replace human workers?

The most immediate concern for many is that AI-enabled systems will replace workers across a wide range of industries. As has happened with every wave of technology, from the automatic weaving looms of the early industrial revolution to the computers of today, jobs are not simply destroyed; rather, employment shifts from one place to another and entirely new categories of employment are created. We can and should expect the same in the AI-enabled economy. Research and experience show that AI will inevitably replace entire categories of work, especially in transportation, retail, government, professional services, and customer service. On the other hand, companies will be free to put their human resources to much better, higher-value tasks than taking orders, fielding simple customer service requests or complaints, or doing data entry.

Will intelligent machines have rights?

As machines become more intelligent and we ask more and more of our machines, how should they be treated and viewed in society? Once machines can simulate emotion and act like human beings, how should they be governed? Should we consider machines as humans, animals, or inanimate objects?

Even where evidence suggests that machine-driven vehicles have far lower fatality rates overall than human-driven vehicles, the issue of liability and control is primarily one of ethics. We therefore need to ask these questions, work out what we can accept and what is ethical, and put laws and regulations in place now to safeguard against future tragedies.

Creating transparency in AI decision-making

Many approaches are used in machine learning, but no algorithm has reinvigorated the AI market quite like deep learning. Deep learning, however, is a “black box”: we cannot easily explain how a trained model arrives at its outputs, and this can be a big problem when we rely on the technology for critical decisions such as loan approvals, parole, and hiring. AI systems that are unexplainable should not be acceptable, especially in high-risk situations.
