Unit - 1

Linear Algebra- Matrices

- Definition:

An arrangement of mn numbers in m rows and n columns is called a matrix of order m × n.

A matrix is generally denoted by a capital letter, such as A, B, C, etc.

2. Types of matrices:- (Review)

- Row matrix

- Column matrix

- Square matrix

- Diagonal matrix

- Trace of a matrix

- Determinant of a square matrix

- Singular matrix

- Non – singular matrix

- Zero/ null matrix

- Unit/ Identity matrix

- Scalar matrix

- Transpose of a matrix

- Triangular matrices

Upper triangular and lower triangular matrices,

14. Conjugate of a matrix

15. Symmetric matrix

16. Skew – symmetric matrix

3. Operations on matrices:

- Equality of two matrices

- Multiplication of A by a scalar k.

- Addition and subtraction of two matrices

- Product of two matrices

- Inverse of a matrix

4. Elementary transformations

a) Elementary row transformation

There are three elementary row transformations:

- Interchanging the ith and jth rows, denoted by Rij.

- Multiplying all elements of the ith row by a non-zero constant k, denoted by kRi.

- Adding k times the jth row to the ith row, denoted by Ri + kRj.

b) Elementary column transformations:

There are three elementary column transformations:

- Interchanging the ith and jth columns, denoted by Cij.

- Multiplying the ith column by a non-zero constant k, denoted by kCi.

- Adding k times the jth column to the ith column, denoted by Ci + kCj.
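The three elementary transformations can be sketched in Python as follows. This is only an illustration under stated assumptions: the function names `swap_rows`, `scale_row` and `add_multiple` are hypothetical, rows are indexed from 0 rather than 1, and exact fractions are used to avoid rounding. The sketch is written for rows; the column transformations act in the same way on the columns.

```python
from fractions import Fraction


def swap_rows(A, i, j):
    """Rij: interchange rows i and j (0-based); returns a new matrix."""
    B = [row[:] for row in A]
    B[i], B[j] = B[j], B[i]
    return B


def scale_row(A, i, k):
    """kRi: multiply every element of row i by a non-zero constant k."""
    if k == 0:
        raise ValueError("k must be non-zero")
    B = [row[:] for row in A]
    B[i] = [Fraction(k) * x for x in B[i]]
    return B


def add_multiple(A, i, j, k):
    """Ri + kRj: add k times row j to row i."""
    B = [row[:] for row in A]
    B[i] = [x + Fraction(k) * y for x, y in zip(B[i], B[j])]
    return B


# Hypothetical 2 x 2 matrix, chosen only for illustration.
A = [[1, 2], [3, 4]]
print(swap_rows(A, 0, 1))         # R12
print(scale_row(A, 0, 2))         # 2R1
print(add_multiple(A, 1, 0, -3))  # R2 - 3R1
```

Each function returns a new matrix, so the original matrix A is left unchanged between steps.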

Rank of a matrix:

Let A be a given rectangular or square matrix. From this matrix select any r rows, and from these r rows select any r columns, thus obtaining a square matrix of order r × r. The determinant of this matrix of order r × r is called a minor of order r.

e.g.

If

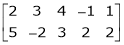

For example, select the 2nd and 3rd rows, and then select any two columns, say the 1st and 2nd. The determinant of the resulting 2 × 2 matrix is a minor of order 2.

The rank of a matrix is the largest order of a non-zero minor of the matrix (to find the rank, we look for at least one non-zero minor of the largest possible order).

A matrix A is said to have rank r if and only if there exists at least one non-zero minor of order r and every minor of order greater than r is zero.

This is denoted by $\rho(A) = r$.
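The definition of rank in terms of minors can be sketched directly in Python. This is a brute-force illustration, practical only for small matrices; the names `det` and `rank_by_minors` and the sample matrix are assumptions made for the example.

```python
from itertools import combinations


def det(M):
    """Determinant of a square matrix by cofactor expansion along row 1."""
    if len(M) == 1:
        return M[0][0]
    total = 0
    for j, a in enumerate(M[0]):
        # Delete the first row and column j to get the smaller matrix.
        sub = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * a * det(sub)
    return total


def rank_by_minors(A):
    """Largest r for which some minor of order r is non-zero."""
    m, n = len(A), len(A[0])
    for r in range(min(m, n), 0, -1):
        for rows in combinations(range(m), r):
            for cols in combinations(range(n), r):
                sub = [[A[i][j] for j in cols] for i in rows]
                if det(sub) != 0:
                    return r
    return 0  # only the zero matrix has rank 0


# Hypothetical matrix: the second row is twice the first.
A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]
print(rank_by_minors(A))
```

Here the only minor of order 3 is zero, while the minor formed from rows 1 and 3 and columns 1 and 2 is non-zero, so the rank is 2.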

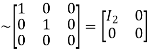

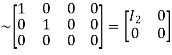

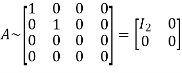

Normal form of a matrix:

Every non-zero matrix A of rank r can be reduced, by a sequence of elementary transformations, to the form

$\begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}$

where $I_r$ is the identity matrix of order r. This form is called the normal form of the matrix A.

The rank of a matrix can therefore be found by reducing it to normal form: the order of the identity matrix obtained is the rank of the given matrix.
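The reduction idea can be sketched in Python as below. This sketch uses row transformations only and counts the pivots; column transformations would then clear the remaining entries without changing that count, so the number of pivots equals the order of the identity block in the normal form. The function name `rank` and the sample matrix are assumptions for illustration.

```python
from fractions import Fraction


def rank(A):
    """Rank of A, found by row reduction with exact fractions."""
    M = [[Fraction(x) for x in row] for row in A]
    rows, cols = len(M), len(M[0])
    r = 0  # number of pivots found so far
    for c in range(cols):
        # Find a row at or below position r with a non-zero entry in column c.
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]          # Rij
        p = M[r][c]
        M[r] = [x / p for x in M[r]]             # (1/p) Ri
        for i in range(rows):
            if i != r and M[i][c] != 0:
                f = M[i][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]  # Ri - f Rr
        r += 1
        if r == rows:
            break
    return r


# Hypothetical matrix: the second row is twice the first.
print(rank([[1, 2, 3], [2, 4, 6], [1, 0, 1]]))
```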

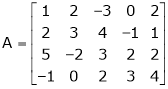

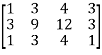

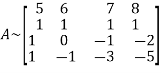

Example1: Reduce the following matrix into normal form and find its rank,

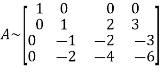

Let A =

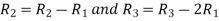

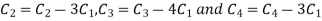

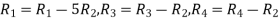

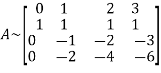

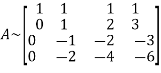

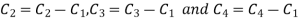

Apply  we get


A

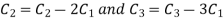

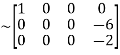

Apply  we get


A

Apply

A

Apply

A

Apply

A

Hence the rank of matrix A is 2, i.e. $\rho(A) = 2$.

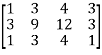

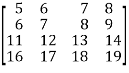

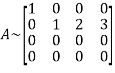

Example2: Reduce the following matrix into normal form and find its rank,

Let A =

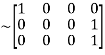

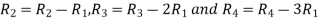

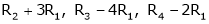

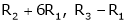

Apply  and


A

Apply

A

Apply

A

Apply

A

Apply

A

Hence the rank of the matrix A is 2, i.e. $\rho(A) = 2$.

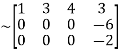

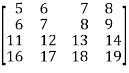

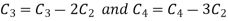

Example3: Reduce the following matrix into normal form and find its rank,

Let A =

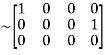

Apply

Apply

Apply

Apply

Apply  and


Apply

Hence the rank of matrix A is 2, i.e. $\rho(A) = 2$.

Introduction:

In this chapter we study a very important theorem, the Cayley-Hamilton theorem. First, however, we have to study eigen values and eigen vectors.

- Vector

An ordered n-tuple of numbers is called an n-vector. Thus the n numbers x1, x2, …, xn taken in order denote the vector x, i.e. x = (x1, x2, …, xn),

where the numbers x1, x2, …, xn are called the components or co-ordinates of the vector x. A vector may be written as a row vector or a column vector.

If A is an m × n matrix, then each row is an n-vector and each column is an m-vector.

2. Linear Dependence

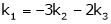

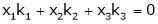

A set of r n-vectors x1, x2, …, xr is said to be linearly dependent if there exist scalars k1, k2, …, kr, not all zero, such that

x1 k1 + x2 k2 + … + xr kr = 0 … (1)

3. Linear Independence

A set of r vectors x1, x2, …, xr is said to be linearly independent if the following relation holds only when the scalars k1, k2, …, kr are all zero:

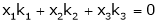

x1 k1 + x2 k2 + …….. + xr kr = 0

Note:-

- Equation (1) is known as a vector equation.

- If the vector equation has a non-zero solution, i.e. k1, k2, …, kr not all zero, then the vectors x1, x2, …, xr are said to be linearly dependent.

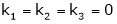

- If the vector equation has only the trivial solution, i.e.

k1 = k2 = … = kr = 0, then the vectors x1, x2, …, xr are said to be linearly independent.

4. Linear combination

If a vector x can be written in the form

x = x1 k1 + x2 k2 + … + xr kr

where k1, k2, …, kr are scalars, then x is called a linear combination of x1, x2, …, xr.

Results:

- A set of two or more vectors is linearly dependent if and only if at least one vector can be written as a linear combination of the other vectors.

- If a set of two or more vectors is linearly independent, then no vector of the set can be expressed as a linear combination of the other vectors.
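A small Python sketch may help connect linear combination and linear dependence. The helper name `linear_combination` and the three vectors are hypothetical, chosen so that x3 = x1 + x2.

```python
def linear_combination(vectors, scalars):
    """Return x1 k1 + x2 k2 + ... + xr kr, computed component by component."""
    n = len(vectors[0])
    return [sum(k * v[i] for k, v in zip(scalars, vectors)) for i in range(n)]


# Hypothetical vectors with x3 = x1 + x2.
x1 = [1, 0, 2]
x2 = [0, 1, 1]
x3 = [1, 1, 3]

# x3 is a linear combination of x1 and x2 with k1 = k2 = 1.
print(linear_combination([x1, x2], [1, 1]))

# Equivalently, x1 + x2 - x3 = 0 with scalars (1, 1, -1) not all zero,
# so the set {x1, x2, x3} is linearly dependent.
print(linear_combination([x1, x2, x3], [1, 1, -1]))
```

The second call returns the zero vector for scalars that are not all zero, which is exactly the condition for linear dependence in equation (1).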

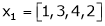

Example 1

Are the vectors x1, x2, x3 linearly dependent? If so, express x1 as a linear combination of the others.

Solution:

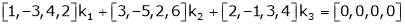

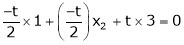

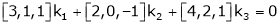

Consider a vector equation,

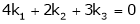

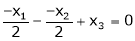

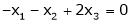

i.e.

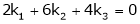

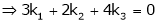

Which can be written in matrix form as,

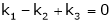

Here the rank of the coefficient matrix is less than the number of unknowns, which is 3. Hence the system has infinitely many solutions. Now rewrite the equations as,

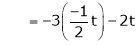

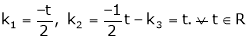

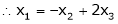

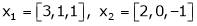

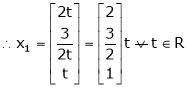

Put

and

and

Thus

i.e.

i.e.

Since k1, k2, k3 are not all zero, the vectors x1, x2, x3 are linearly dependent.

Example 2

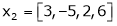

Examine whether the following vectors are linearly independent or not.

and

and  .

.

Solution:

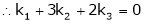

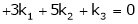

Consider the vector equation,

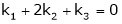

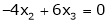

i.e.  … (1)


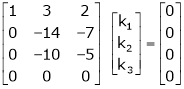

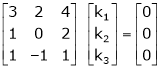

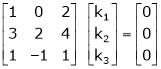

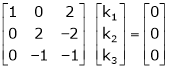

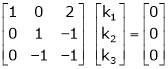

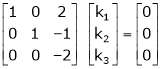

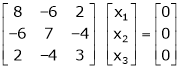

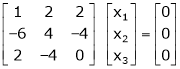

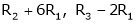

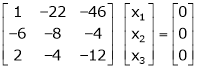

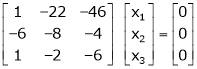

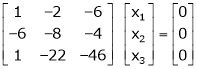

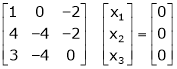

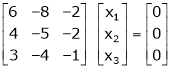

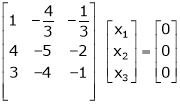

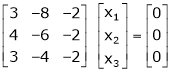

Which can be written in matrix form as,

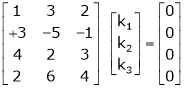

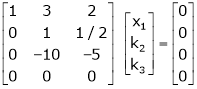

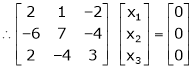

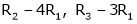

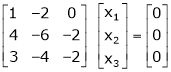

R12

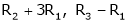

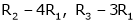

R2 – 3R1, R3 – R1

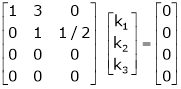

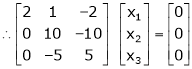

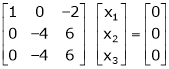

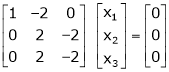

R3 + R2

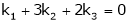

Here the rank of the coefficient matrix is equal to the number of unknowns, i.e. r = n = 3.

Hence the system has only the trivial solution, i.e. k1 = k2 = k3 = 0.

Thus vector equation (1) has only the trivial solution, and hence the given vectors x1, x2, x3 are linearly independent.

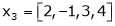

Example 3

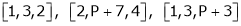

For what values of P are the following vectors linearly independent?

Solution:

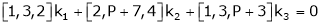

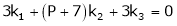

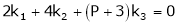

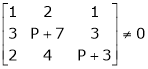

Consider the vector equation.

i.e.

This is a homogeneous system of three equations in 3 unknowns. It has only the trivial solution if and only if the determinant of the coefficient matrix is non-zero.

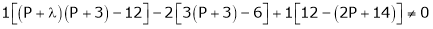

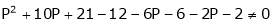

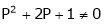

consider

consider  .

.

.

.

i.e.

Thus, for such values of P, the system has only the trivial solution, and hence the vectors are linearly independent.
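The determinant test used in Example 3 can be sketched in Python for three vectors with three components each. The names `det3` and `independent` and the sample vectors are assumptions for illustration; they are not the vectors of the example.

```python
def det3(M):
    """Determinant of a 3 x 3 matrix by expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def independent(x1, x2, x3):
    """True when x1 k1 + x2 k2 + x3 k3 = 0 has only the trivial solution."""
    # The vectors form the columns of the coefficient matrix.
    M = [[x1[r], x2[r], x3[r]] for r in range(3)]
    return det3(M) != 0


# Hypothetical vectors.
print(independent((1, 0, 0), (0, 1, 0), (0, 0, 1)))  # determinant is 1
print(independent((1, 2, 3), (2, 4, 6), (1, 0, 1)))  # second vector is twice the first
```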

Note:-

If the rank of the coefficient matrix is r, then the set contains r linearly independent vectors, and the remaining vectors (if any) can be expressed as linear combinations of these vectors.

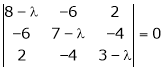

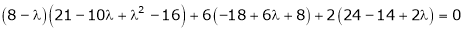

Characteristic equation:-

Let A be a square matrix and λ be any scalar. Then $|A - \lambda I| = 0$ is called the characteristic equation of the matrix A.

Note:

Let A be a square matrix and λ be any scalar. Then,

1) $A - \lambda I$ is called the characteristic matrix.

2) $|A - \lambda I|$ is called the characteristic polynomial.

The roots of the characteristic equation are known as the characteristic roots, latent roots, eigen values or proper values of the matrix A.

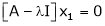

Eigen vector:-

Suppose $\lambda_1$ is an eigen value of a matrix A. Then there exists a non-zero vector x1 such that

$[A - \lambda_1 I]\,x_1 = 0$ … (1)

Such a vector x1 is called an eigen vector corresponding to the eigen value $\lambda_1$.

Properties of Eigen values:-

- The sum of the eigen values of a matrix A is equal to the sum of the diagonal elements (the trace) of A.

- The product of all eigen values of a matrix A is equal to the value of the determinant of A.

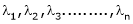

- If

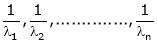

are n eigen values of square matrix A then

are n eigen values of square matrix A then  are m eigen values of a matrix A-1.

are m eigen values of a matrix A-1. - The eigen values of a symmetric matrix are all real.

- If all eigen values are non – zen then A-1 exist and conversely.

- The eigen values of A and A’ are same.

Properties of eigen vector:-

- Eigen vectors corresponding to distinct eigen values are linearly independent.

- If two or more eigen values are identical, then the corresponding eigen vectors may or may not be linearly independent.

- The eigen vectors corresponding to distinct eigen values of a real symmetric matrix are orthogonal.
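Several of these properties can be checked numerically on a small case. The sketch below handles only a 2 × 2 matrix with real eigen values, using the characteristic equation λ² - (trace)λ + (determinant) = 0. The names `eigen_2x2` and `eigen_vector` and the symmetric matrix A are assumptions for illustration.

```python
import math


def eigen_2x2(A):
    """Eigen values of a 2 x 2 matrix from its characteristic equation."""
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("complex eigen values are not handled in this sketch")
    root = math.sqrt(disc)
    return (trace + root) / 2, (trace - root) / 2


def eigen_vector(A, lam):
    """A non-zero solution of [A - lam I] x = 0, assuming the entry b != 0."""
    (a, b), (c, d) = A
    return (b, lam - a)


# Hypothetical real symmetric matrix.
A = [[2, 1], [1, 2]]
l1, l2 = eigen_2x2(A)
v1, v2 = eigen_vector(A, l1), eigen_vector(A, l2)

print(l1 + l2, A[0][0] + A[1][1])                      # sum of eigen values vs trace
print(l1 * l2, A[0][0] * A[1][1] - A[0][1] * A[1][0])  # product vs determinant
print(v1[0] * v2[0] + v1[1] * v2[1])                   # dot product of the eigen vectors
```

For this matrix the eigen values are 3 and 1, their sum equals the trace 4, their product equals the determinant 3, and the two eigen vectors have dot product zero, as expected for a real symmetric matrix with distinct eigen values.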

Example 1

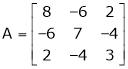

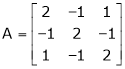

Determine the eigen values and eigen vectors of the matrix.

Solution:

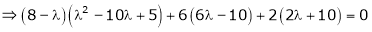

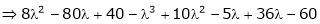

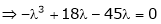

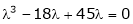

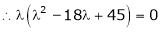

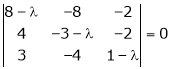

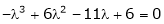

Consider the characteristic equation as,

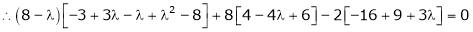

i.e.

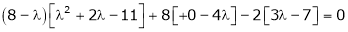

i.e.

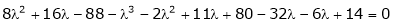

i.e.

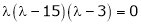

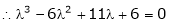

Which is the required characteristic equation.

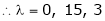

are the required eigen values.


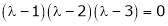

Now consider the equation

$[A - \lambda I]\,x = 0$ … (1)

Case I:

If  Equation (1) becomes


R1 + R2

Thus

independent variable.


Now rewrite equation as,

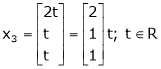

Put x3 = t

&

&

Thus  .

.

Is the eigen vector corresponding to  .

.

Case II:

If  equation (1) becomes,


Here

independent variables


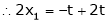

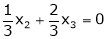

Now rewrite the equations as,

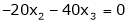

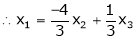

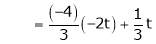

Put

&

&

.

.

Is the eigen vector corresponding to  .

.

Case III:

If  equation (1) becomes,


Here rank of

independent variable.


Now rewrite the equations as,

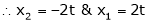

Put

Thus  .

.

Is the eigen vector for  .

.

Example 2

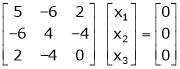

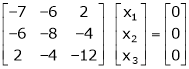

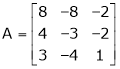

Find the eigen values and eigen vectors of the matrix.

Solution:

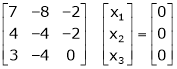

Consider the characteristic equation as

i.e.

i.e.

are the required eigen values.


Now consider the equation

$[A - \lambda I]\,x = 0$ … (1)

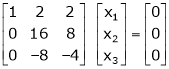

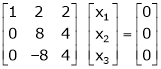

Case I:

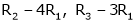

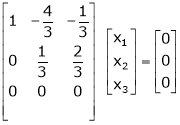

Equation (1) becomes,


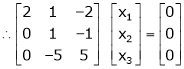

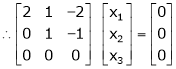

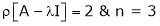

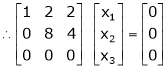

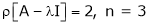

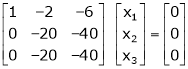

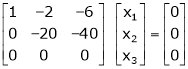

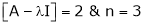

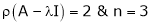

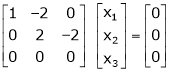

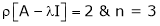

Thus the rank is 2 and n = 3, so there is 3 - 2 = 1 independent variable.

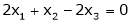

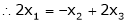

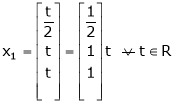

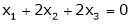

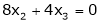

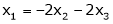

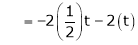

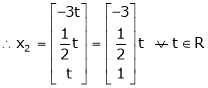

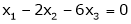

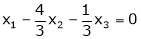

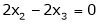

Now rewrite the equations as,

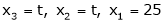

Put

,

,

i.e. the eigen vector for

Case II:

If  equation (1) becomes,


Thus

Independent variables.

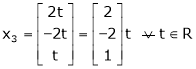

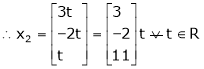

Now rewrite the equations as,

Put

Is the eigen vector for

Now

Case III:

If  equation (1) gives,

R1 – R2

Thus

independent variables


Now

Put

Thus

Is the eigen vector for  .

.

Cayley-Hamilton theorem:

Every square matrix satisfies its own characteristic equation.
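The statement can be checked on a small case. For a 2 × 2 matrix the characteristic equation is λ² - (trace)λ + (determinant) = 0, so the theorem gives A² - (trace)A + (determinant)I = 0, and hence A⁻¹ = ((trace)I - A) / determinant when the determinant is non-zero. The Python sketch below assumes integer entries; the function names and the matrix A are hypothetical.

```python
from fractions import Fraction

I2 = [[1, 0], [0, 1]]


def matmul(A, B):
    """Product of two 2 x 2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def cayley_hamilton_residual(A):
    """A^2 - (trace) A + (det) I, which the theorem says is the zero matrix."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    A2 = matmul(A, A)
    return [[A2[i][j] - trace * A[i][j] + det * I2[i][j] for j in range(2)]
            for i in range(2)]


def inverse_via_cayley_hamilton(A):
    """A^-1 = ((trace) I - A) / det, obtained by rearranging the theorem."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    if det == 0:
        raise ValueError("A is singular, so the inverse does not exist")
    return [[Fraction(trace * I2[i][j] - A[i][j], det) for j in range(2)]
            for i in range(2)]


# Hypothetical matrix with integer entries.
A = [[1, 2], [3, 4]]
print(cayley_hamilton_residual(A))
print(inverse_via_cayley_hamilton(A))
```

Multiplying A by the computed inverse returns the identity matrix, which confirms the rearrangement.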

Ex. Verify the Cayley-Hamilton theorem and use it to find $A^4$ and $A^{-1}$.

Ex. Verify the Cayley-Hamilton theorem and hence find $A^{-1}$, $A^{-2}$, $A^{-3}$.

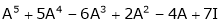

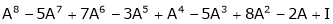

Ex. For  find the value of  , using the Cayley-Hamilton theorem.

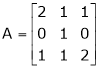

Ex. Find the characteristic equation of the matrix

And hence find the matrix represented by

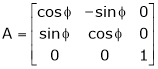

Ex. Verify whether the following matrix is orthogonal or not; if so, find $A^{-1}$.
