Unit-2

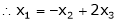

Eigen values and Eigen vectors

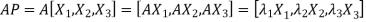

Linear transformation-

Suppose A is an m × n matrix. Then the function f(x) = Ax is called the linear transformation associated with the matrix A, where f: $\mathbb{R}^n \to \mathbb{R}^m$.

For example,

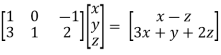

Suppose we have a 2×3 matrix as below,

A =  , and multiply this matrix by the vector X = (x, y, z).

Then,

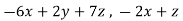

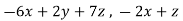

AX =  = (x – z ,  )

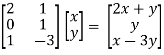

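Example: suppose you have the matrix

$$A = \begin{pmatrix} 2 & 1 \\ 0 & 1 \\ 1 & -3 \end{pmatrix}$$

Find the linear transformation of A.

Sol. Multiply the matrix A by the vector X = (x, y):

$$AX = \begin{pmatrix} 2 & 1 \\ 0 & 1 \\ 1 & -3 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 2x + y \\ y \\ x - 3y \end{pmatrix}$$

We get f(x, y) = (2x + y, y, x – 3y),

which is the linear transformation of the matrix A.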
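The result f(x, y) = (2x + y, y, x – 3y) can be checked numerically. The following is a minimal sketch in plain Python; the helper name `apply_matrix` is an illustrative choice, and the matrix rows are read off from the coefficients of f(x, y).

```python
def apply_matrix(A, x):
    """Return the matrix-vector product A x as a tuple."""
    return tuple(sum(a * xi for a, xi in zip(row, x)) for row in A)

# Rows read off from f(x, y) = (2x + y, y, x - 3y)
A = [[2, 1],
     [0, 1],
     [1, -3]]

def f(x, y):
    return apply_matrix(A, (x, y))

print(f(1, 2))  # (4, 2, -5)

# Linearity check on sample inputs: f(u + v) equals f(u) + f(v)
u, v = (1, 2), (3, -1)
lhs = f(u[0] + v[0], u[1] + v[1])
rhs = tuple(p + q for p, q in zip(f(*u), f(*v)))
print(lhs == rhs)  # True
```

A 3 × 2 matrix maps 2-vectors to 3-vectors, which is why the output has three components.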

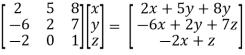

Example: find the linear transformation of the matrix A.

A =

Sol. We have,

A =

Multiply the matrix by vector x = (x , y , z) , we get

Ax =

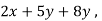

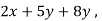

= (  )

f(x , y , z) = (  )

Which is the linear transformation of A.

Orthogonal transformation-

Let $\mathbb{R}^2$ be the vector space of size-2 column vectors. This vector space has an inner product defined by $\langle v, w \rangle = v^T w$. A linear transformation $T: \mathbb{R}^2 \to \mathbb{R}^2$ is called an orthogonal transformation if, for all v, w belonging to $\mathbb{R}^2$,

$$\langle T(v), T(w) \rangle = \langle v, w \rangle$$
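As an illustration, a rotation of the plane is a standard example of an orthogonal transformation. The sketch below checks the defining property on sample vectors. It assumes the usual dot product as the inner product; the angle and the vectors are arbitrary choices made for this example.

```python
import math

def dot(v, w):
    """Standard inner product of two vectors."""
    return sum(a * b for a, b in zip(v, w))

def rotate(v, theta):
    """Rotate a 2-vector counter-clockwise by the angle theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

v, w = (1.0, 2.0), (3.0, -1.0)
theta = 0.7  # arbitrary angle

lhs = dot(rotate(v, theta), rotate(w, theta))  # <T(v), T(w)>
rhs = dot(v, w)                                # <v, w>
print(math.isclose(lhs, rhs))  # True, up to floating-point rounding
```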

First we will go through some important definitions before studying Eigen values and Eigen vectors.

1. Vector-

An ordered n-tuple of numbers is called an n-vector. Thus the n numbers x1, x2, …, xn taken in order denote the vector x, i.e. x = (x1, x2, …, xn),

where the numbers x1, x2, …, xn are called the components or coordinates of the vector x. A vector may be written as a row vector or a column vector.

If A is an m × n matrix, then each row is an n-vector and each column is an m-vector.

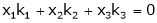

2. Linear dependence-

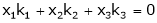

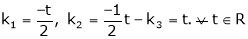

A set of n-vectors x1, x2, …, xr is said to be linearly dependent if there exist scalars k1, k2, …, kr, not all zero, such that

x1 k1 + x2 k2 + … + xr kr = 0 … (1)

3. Linear independence-

A set of r vectors x1, x2, …, xr is said to be linearly independent if the equation

x1 k1 + x2 k2 + … + xr kr = 0

holds only when the scalars k1, k2, …, kr are all zero.

Important notes-

- Equation (1) is known as a vector equation.

- If the vector equation has a non-zero solution, i.e. k1, k2, …, kr are not all zero, then the vectors x1, x2, …, xr are said to be linearly dependent.

- If the vector equation has only the trivial solution, i.e. k1 = k2 = … = kr = 0, then the vectors x1, x2, …, xr are said to be linearly independent.

4. Linear combination-

If a vector x can be written in the form

x = x1 k1 + x2 k2 + … + xr kr,

where k1, k2, …, kr are scalars, then x is called a linear combination of x1, x2, …, xr.

Results:

- A set of two or more vectors is linearly dependent if at least one vector can be written as a linear combination of the other vectors.

- If a set of two or more vectors is linearly independent, then no vector can be expressed as a linear combination of the other vectors.
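One way to test a set of vectors for linear independence is to compare the rank of the matrix whose rows are the vectors with the number of vectors. The sketch below does this with exact rational arithmetic. The function names and the two sample sets are assumptions made for illustration; they are not the vectors of the worked examples.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix (given as a list of rows) by Gaussian elimination."""
    m = [[Fraction(v) for v in row] for row in rows]
    r = 0  # number of pivot rows found so far
    ncols = len(m[0]) if m else 0
    for col in range(ncols):
        # Look for a non-zero entry in this column at or below row r
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r

def linearly_independent(vectors):
    """Vectors are independent exactly when the rank equals their count."""
    return rank(vectors) == len(vectors)

dependent = [(1, 2, 3), (2, 4, 6), (0, 1, 1)]    # second = 2 * first
independent = [(1, 0, 0), (1, 1, 0), (1, 1, 1)]

print(linearly_independent(dependent))    # False
print(linearly_independent(independent))  # True
```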

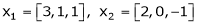

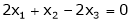

Example 1

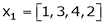

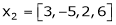

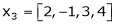

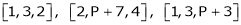

Are the vectors  ,  ,  linearly dependent? If so, express x1 as a linear combination of the others.

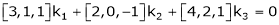

Solution:

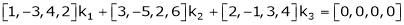

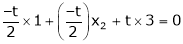

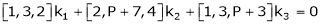

Consider a vector equation,

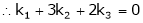

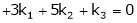

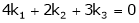

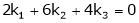

i.e.

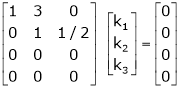

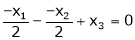

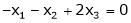

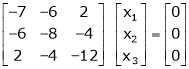

Which can be written in matrix form as,

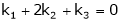

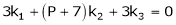

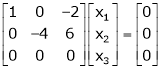

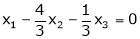

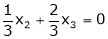

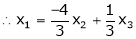

Here  & the no. of unknowns is 3. Hence the system has infinitely many solutions. Now rewrite the equations as,

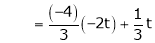

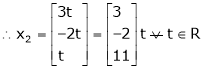

Put  

and  

Thus  

i.e.  

Since k1, k2, k3 are not all zero, the given vectors are linearly dependent.

Example 2

Examine whether the following vectors are linearly independent or not.

and  .

Solution:

Consider the vector equation,

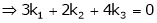

i.e.  … (1)

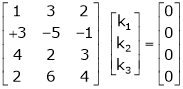

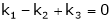

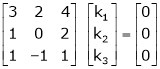

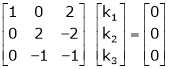

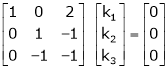

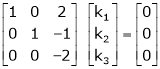

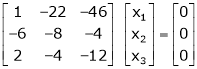

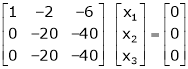

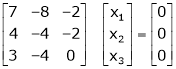

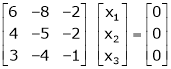

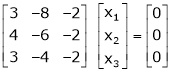

Which can be written in matrix form as,

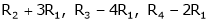

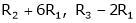

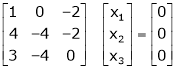

R12

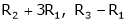

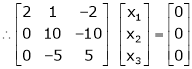

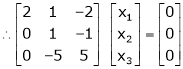

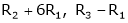

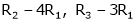

R2 – 3R1, R3 – R1

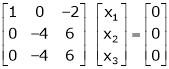

R3 + R2

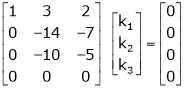

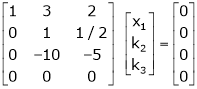

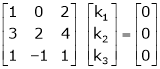

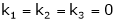

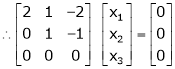

Here the rank of the coefficient matrix is equal to the number of unknowns, i.e. r = n = 3.

Hence the system has the unique trivial solution,

i.e. k1 = k2 = k3 = 0,

i.e. vector equation (1) has only the trivial solution. Hence the given vectors x1, x2, x3 are linearly independent.

Example 3

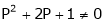

For what value of P are the following vectors linearly independent?

Solution:

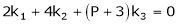

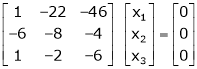

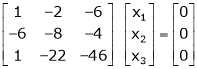

Consider the vector equation.

i.e.

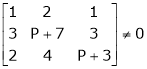

This is a homogeneous system of three equations in 3 unknowns, and it has a unique trivial solution if and only if the determinant of the coefficient matrix is non-zero.

Consider  .

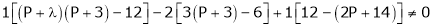

.

.

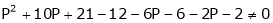

.

i.e.

Thus for  the system has only the trivial solution, and hence the vectors are linearly independent.

Note:-

If the rank of the coefficient matrix is r, it contains r linearly independent vectors, and the remaining vectors (if any) can be expressed as linear combinations of these vectors.

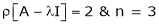

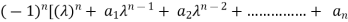

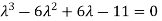

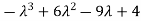

Characteristic equation:-

Let A be a square matrix and $\lambda$ be any scalar. Then $|A - \lambda I| = 0$ is called the characteristic equation of the matrix A.

Note:

Let A be a square matrix and $\lambda$ be any scalar. Then,

1) $A - \lambda I$ is called the characteristic matrix.

2) $|A - \lambda I|$ is called the characteristic polynomial.

The roots of the characteristic equation are known as the characteristic roots, latent roots, eigen values or proper values of the matrix A.

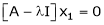

Eigen vector:-

Suppose $\lambda_1$ is an eigen value of a matrix A. Then there exists a non-zero vector x1 such that

$$(A - \lambda_1 I)\, x_1 = 0 \quad \ldots (1)$$

Such a vector x1 is called an eigen vector corresponding to the eigen value $\lambda_1$.

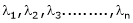

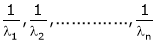

Properties of Eigen values:-

- The sum of the eigen values of a matrix A is equal to the sum of the diagonal elements of A.

- The product of all eigen values of a matrix A is equal to the value of its determinant.

- If $\lambda_1, \lambda_2, \ldots, \lambda_n$ are the n eigen values of a non-singular square matrix A, then $1/\lambda_1, 1/\lambda_2, \ldots, 1/\lambda_n$ are the n eigen values of the matrix $A^{-1}$.

- The eigen values of a real symmetric matrix are all real.

- If all eigen values are non-zero then $A^{-1}$ exists, and conversely.

- The eigen values of A and A’ are the same.
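The first two properties can be checked on a small case. For a 2 × 2 matrix the characteristic equation is $\lambda^2 - (\text{trace})\lambda + \det = 0$, so the eigen values follow from the quadratic formula. The sketch below assumes real eigen values and uses an illustrative matrix that does not come from the worked examples.

```python
import math

def eigenvalues_2x2(A):
    """Real eigen values of a 2x2 matrix from its characteristic equation."""
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det  # discriminant of l^2 - trace*l + det = 0
    if disc < 0:
        raise ValueError("complex eigen values are not handled in this sketch")
    root = math.sqrt(disc)
    return ((trace + root) / 2, (trace - root) / 2)

A = [[4, 1],
     [2, 3]]  # illustrative matrix
l1, l2 = eigenvalues_2x2(A)

print(l1, l2)                    # 5.0 2.0
print(l1 + l2 == 4 + 3)          # sum equals the trace: True
print(l1 * l2 == 4 * 3 - 1 * 2)  # product equals the determinant: True
```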

Properties of eigen vector:-

- Eigen vectors corresponding to distinct eigen values are linearly independent.

- If two or more eigen values are identical, then the corresponding eigen vectors may or may not be linearly independent.

- The eigen vectors corresponding to distinct eigen values of a real symmetric matrix are orthogonal.

Example-1:

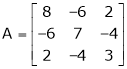

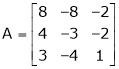

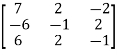

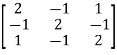

Determine the eigen values and eigen vectors of the matrix.

Solution:

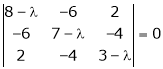

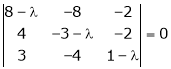

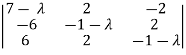

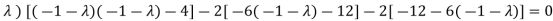

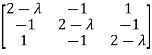

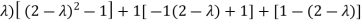

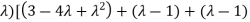

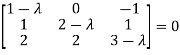

Consider the characteristic equation as,

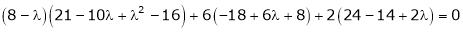

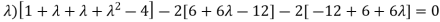

i.e.

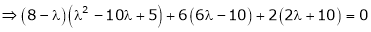

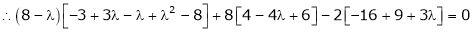

i.e.

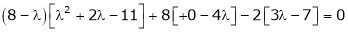

i.e.

Which is the required characteristic equation.

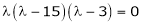

are the required eigen values.

are the required eigen values.

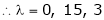

Now consider the equation

… (1)

… (1)

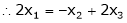

Case I:

If  , equation (1) becomes

R1 + R2

Thus  independent variable.

Now rewrite the equations as,

Put x3 = t

&  

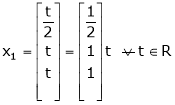

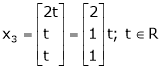

Thus  .

.

Is the eigen vector corresponding to  .

.

Case II:

If  equation (1) becomes,

equation (1) becomes,

Here

independent variables

independent variables

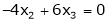

Now rewrite the equations as,

Put

&

&

.

.

Is the eigen vector corresponding to  .

.

Case III:

If  equation (1) becomes,

equation (1) becomes,

Here rank of

independent variable.

independent variable.

Now rewrite the equations as,

Put

Thus  .

.

Is the eigen vector for  .

.

Example 2

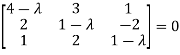

Find the eigen values and eigen vectors of the matrix.

Solution:

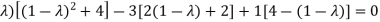

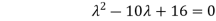

Consider the characteristic equation as

i.e.  

are the required eigen values.

Now consider the equation

… (1)

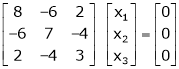

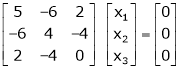

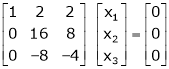

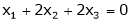

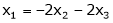

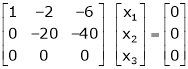

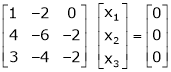

Case I:

Equation (1) becomes,

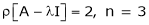

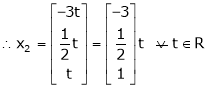

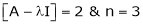

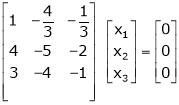

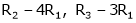

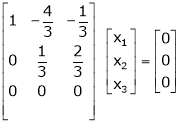

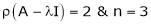

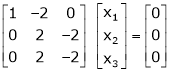

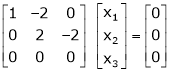

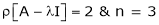

Thus  and n = 3

3 – 2 = 1 independent variable.

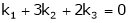

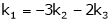

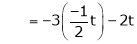

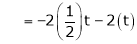

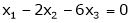

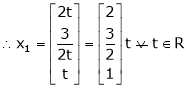

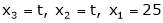

Now rewrite the equations as,

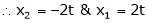

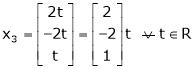

Put  ,

i.e. the eigen vector for  

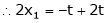

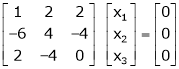

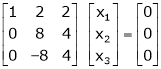

Case II:

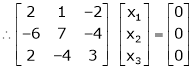

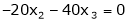

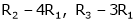

If  equation (1) becomes,

equation (1) becomes,

Thus

Independent variables.

Now rewrite the equations as,

Put

Is the eigen vector for

Now

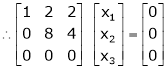

Case II:-

If  equation (1) gives,

equation (1) gives,

R1 – R2

Thus

independent variables

independent variables

Now

Put

Thus

Is the eigen vector for

Two square matrices $\hat{A}$ and A of the same order n are said to be similar if and only if

$$\hat{A} = P^{-1} A P$$

for some non-singular matrix P.

Such a transformation of the matrix A into $\hat{A}$ with the help of a non-singular matrix P is known as a similarity transformation.

Similar matrices have the same eigen values.

If X is an eigen vector of the matrix A, then $Y = P^{-1} X$ is an eigen vector of the matrix $\hat{A}$.

Reduction to Diagonal Form:

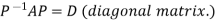

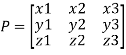

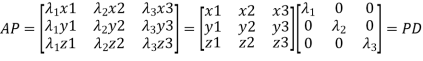

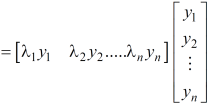

Let A be a square matrix of order n that has n linearly independent eigen vectors, which form the columns of a matrix P such that

$$P^{-1} A P = D$$

where P is called the modal matrix and D is known as the spectral matrix.

Procedure: let A be a square matrix of order 3.

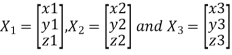

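Let the three eigen vectors of A be $X_1, X_2, X_3$, corresponding to the eigen values $\lambda_1, \lambda_2, \lambda_3$.

Let $P = [X_1 \; X_2 \; X_3]$. Then

$$AP = [AX_1 \; AX_2 \; AX_3] = [\lambda_1 X_1 \; \lambda_2 X_2 \; \lambda_3 X_3] \quad \{\text{by the characteristic equation of } A\}$$

$$= PD, \quad \text{where } D = \operatorname{diag}(\lambda_1, \lambda_2, \lambda_3)$$

Or

$$P^{-1} A P = D$$

Note: The method of diagonalization is helpful in calculating powers of a matrix. Since $D = P^{-1} A P$, we have $A = P D P^{-1}$. Then for a positive integer n we have

$$A^n = P D^n P^{-1}$$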
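The power formula $A^n = P D^n P^{-1}$ can be tried on a small case. The sketch below uses an illustrative 2 × 2 matrix, not one from the worked examples, with eigen values 5 and 2 and eigen vectors (1, 1) and (1, -2). Exact fractions are used so that the comparison with direct multiplication is exact.

```python
from fractions import Fraction

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inverse_2x2(M):
    """Inverse of a non-singular 2x2 matrix."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

A = [[4, 1],
     [2, 3]]            # illustrative matrix
P = [[1, 1],
     [1, -2]]           # modal matrix: columns are the eigen vectors
eigenvalues = [5, 2]    # diagonal of the spectral matrix D

def power_by_diagonalization(P, eigenvalues, n):
    """Compute A^n as P D^n P^-1 for a 2x2 diagonalizable matrix."""
    Dn = [[eigenvalues[0] ** n, 0],
          [0, eigenvalues[1] ** n]]
    return matmul(matmul(P, Dn), inverse_2x2(P))

A3 = power_by_diagonalization(P, eigenvalues, 3)
print(A3 == matmul(matmul(A, A), A))  # True
```

Only the diagonal entries are raised to the power, which is what makes this cheaper than repeated multiplication for large n.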

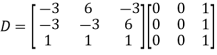

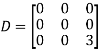

We are using the example of 1.6*

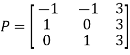

Example1: Diagonalize the matrix

Let A=

The three eigen vectors obtained are (-1,1,0), (-1,0,1) and (3,3,3), corresponding to the eigen values  .

Then  and  

Also we know that

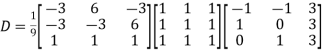

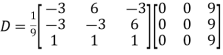

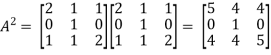

Example2: Diagonalize the matrix

Let A =

The eigen vectors are (4,1), (1,-1), corresponding to the eigen values  .

Then  and also  

Also we know that

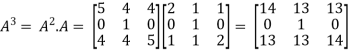

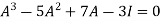

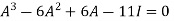

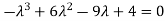

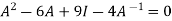

Statement-

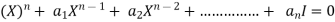

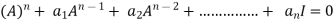

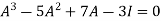

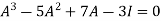

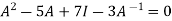

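Every square matrix satisfies its characteristic equation. That means, for every square matrix A of order n, if

$$|A - \lambda I| = (-1)^n \left[ \lambda^n + a_1 \lambda^{n-1} + a_2 \lambda^{n-2} + \cdots + a_n \right]$$

then the matrix equation

$$X^n + a_1 X^{n-1} + a_2 X^{n-2} + \cdots + a_n I = 0$$

is satisfied by X = A. That means

$$A^n + a_1 A^{n-1} + a_2 A^{n-2} + \cdots + a_n I = 0$$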
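For a 2 × 2 matrix the characteristic equation is $\lambda^2 - (\text{trace})\lambda + \det = 0$, so the theorem says that $A^2 - (\text{trace})A + (\det) I$ is the zero matrix. The sketch below checks this for an illustrative matrix that is not taken from the examples; the function names are assumptions.

```python
def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def cayley_hamilton_residual_2x2(A):
    """Return A^2 - trace(A) A + det(A) I, expected to be the zero matrix."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    A2 = matmul(A, A)
    return [[A2[i][j] - trace * A[i][j] + (det if i == j else 0)
             for j in range(2)] for i in range(2)]

A = [[4, 1],
     [2, 3]]  # illustrative matrix
print(cayley_hamilton_residual_2x2(A))  # [[0, 0], [0, 0]]
```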

Example-1: Find the characteristic equation of the matrix A =  and verify the Cayley-Hamilton theorem.

Sol. The characteristic equation of the matrix can be found as follows-

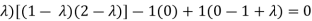

Which is,

( 2 -  , which gives

According to the Cayley-Hamilton theorem,

…………(1)

Now we will verify equation (1),

Put the required values in equation (1), we get

Hence the Cayley-Hamilton theorem is verified.

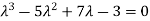

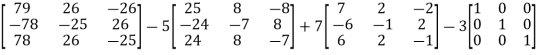

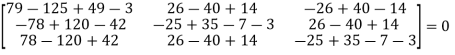

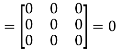

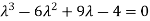

Example-2: Find the characteristic equation of the matrix A and verify the Cayley-Hamilton theorem as well.

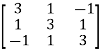

A =

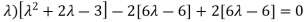

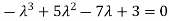

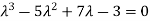

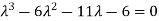

Sol. Characteristic equation will be-

= 0

( 7 -  

(7-  

Which gives,

Or

According to the Cayley-Hamilton theorem,

…………………….(1)

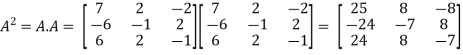

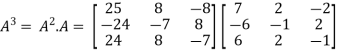

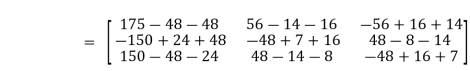

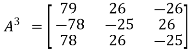

In order to verify the Cayley-Hamilton theorem, we will find the values of  

So that,

Now

Put these values in equation (1), we get

= 0

Hence the Cayley-Hamilton theorem is verified.

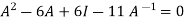

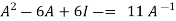

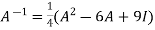

Inverse of a matrix by Cayley-Hamilton theorem-

We can find the inverse of any non-singular matrix A by multiplying the equation given by the Cayley-Hamilton theorem by $A^{-1}$.

For example, suppose we have a characteristic equation  ; then multiply this by $A^{-1}$, and it becomes  

Then we can find $A^{-1}$ by solving the above equation.
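In the 2 × 2 case the idea can be written out completely. The Cayley-Hamilton theorem gives $A^2 - (\text{trace})A + (\det) I = 0$; multiplying by $A^{-1}$ gives $A - (\text{trace}) I + (\det) A^{-1} = 0$, so $A^{-1} = \frac{1}{\det}\left[(\text{trace}) I - A\right]$. The sketch below applies this to an illustrative integer matrix, which is an assumption and not the matrix of the examples.

```python
from fractions import Fraction

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inverse_by_cayley_hamilton_2x2(A):
    """Inverse of a 2x2 integer matrix from A^-1 = (trace*I - A) / det."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular; no inverse exists")
    return [[Fraction((trace if i == j else 0) - A[i][j], det)
             for j in range(2)] for i in range(2)]

A = [[4, 1],
     [2, 3]]  # illustrative matrix
A_inv = inverse_by_cayley_hamilton_2x2(A)

print(matmul(A, A_inv) == [[1, 0], [0, 1]])  # True
```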

Example-1: Find the inverse of matrix A by using Cayley-Hamilton theorem.

A =

Sol. The characteristic equation will be,

$|A - \lambda I| = 0$

Which gives,

(4-

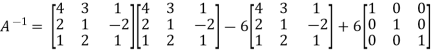

According to Cayley-Hamilton theorem,

Multiplying by

That means

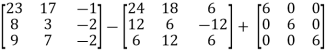

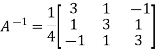

On solving ,

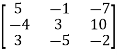

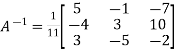

11

=

=

So that,

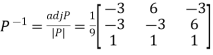

Example-2: Find the inverse of matrix A by using Cayley-Hamilton theorem.

A =

Sol. The characteristic equation will be,

$|A - \lambda I| = 0$

=

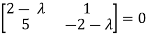

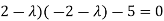

= (2-

= (2 -

=

That is,

Or

We know that by Cayley-Hamilton theorem,

…………………….(1)t,

…………………….(1)t,

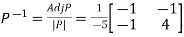

Multiply equation(1) by  , we get

, we get

Or

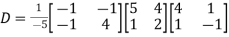

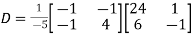

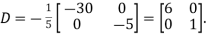

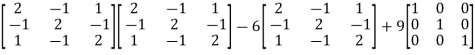

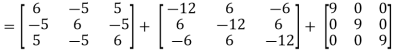

Now we will find

=

=

Hence the inverse of matrix A is,

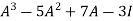

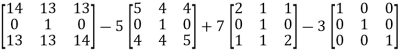

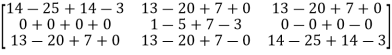

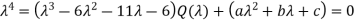

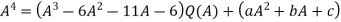

Power of a matrix by Cayley-Hamilton theorem-

Any positive integral power $A^m$ of a matrix A is linearly expressible in terms of powers of lower degree, where m is a positive integer and n is the degree of the characteristic equation, such that m > n.

Example-1: Find  of matrix A by using the Cayley-Hamilton theorem.

Sol. First we will find out the characteristic equation of matrix A,

$|A - \lambda I| = 0$

We get,

Which gives,

(

We get,

Or  I ……………………..(1)

In order to find  we take the cube of eq. (1).

We get,

  = 729I

We know that the value of I =  , so

  = 729  

Example-2: Find $A^4$ of matrix A by using the Cayley-Hamilton theorem.

A =

Sol. Here we have ,

A =

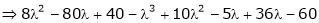

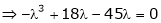

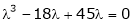

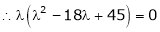

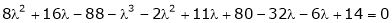

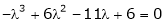

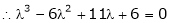

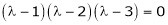

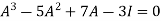

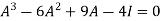

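The characteristic equation will be,

$|A - \lambda I| = 0$

On factorization, we get

$$(\lambda - 1)(\lambda - 2)(\lambda - 3) = 0$$

Hence the eigen values are 1, 2, 3.

Suppose,

$$\lambda^4 = (\lambda - 1)(\lambda - 2)(\lambda - 3)\, Q(\lambda) + (a\lambda^2 + b\lambda + c) \quad \ldots (1)$$

where $Q(\lambda)$ is the quotient obtained on dividing $\lambda^4$ by the characteristic polynomial.

Now put the values $\lambda = 1, 2, 3$ in equation (1); we get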

a+b+c = 1……………..(2)

4a+2b+c = 16……………(3)

9a+3b+c = 81…………….(4)

On solving these three eq. , we get

a = 25 , b = -60 , c = 36
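The values of a, b and c can be checked by solving equations (2) to (4) mechanically. The sketch below uses Cramer's rule with exact fractions; the helper names `det3` and `solve3` are illustrative choices.

```python
from fractions import Fraction

def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def solve3(M, rhs):
    """Solve the 3x3 system M x = rhs by Cramer's rule."""
    D = Fraction(det3(M))
    if D == 0:
        raise ValueError("coefficient matrix is singular")
    solution = []
    for col in range(3):
        Mc = [list(row) for row in M]
        for r in range(3):
            Mc[r][col] = rhs[r]  # replace one column by the right-hand side
        solution.append(det3(Mc) / D)
    return solution

eigenvalues = [1, 2, 3]
# Each eigen value l gives one equation: a*l^2 + b*l + c = l^4
M = [[l ** 2, l, 1] for l in eigenvalues]
rhs = [l ** 4 for l in eigenvalues]

a, b, c = solve3(M, rhs)
print(a, b, c)  # 25 -60 36
```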

Replace $\lambda$ by A in eq. (1). Since the characteristic polynomial vanishes at A by the Cayley-Hamilton theorem, we get

$$A^4 = O + aA^2 + bA + cI$$

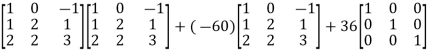

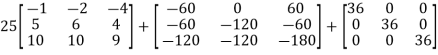

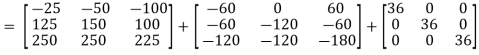

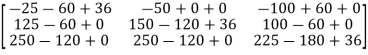

Put the corresponding values,

$A^4 = 25A^2 - 60A + 36I$

=  

Which is the required answer.

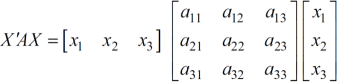

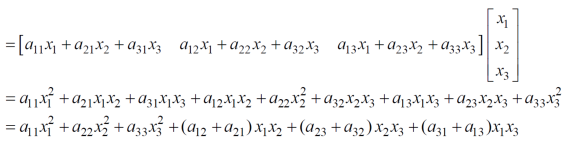

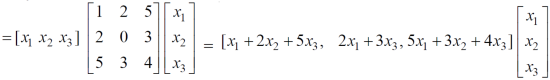

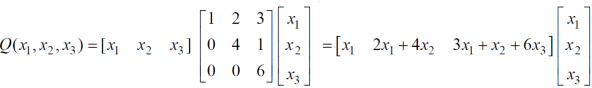

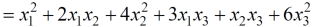

A quadratic form can be expressed as a product of matrices.

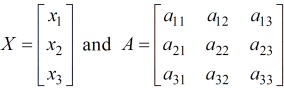

Q(x) = X’ AX,

Where,

X’ is the transpose of X,

Which is the quadratic form.
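To make the product X’AX concrete, the sketch below evaluates it as a double sum over the entries of A. The symmetric matrix is an illustrative assumption; it corresponds to the quadratic form $x^2 + 4xy + 3y^2$.

```python
def quadratic_form(A, x):
    """Evaluate Q(x) = x' A x as the double sum of x_i * a_ij * x_j."""
    n = len(x)
    return sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))

# Illustrative symmetric matrix for Q(x, y) = x^2 + 4xy + 3y^2
A = [[1, 2],
     [2, 3]]

print(quadratic_form(A, (1, 1)))   # 8
print(quadratic_form(A, (2, -1)))  # -1
```

The coefficient 4 of the cross term xy is split equally between the two off-diagonal entries, which keeps the matrix symmetric.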

Example: find out the quadratic form of following matrix.

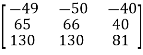

A =

Solution: Quadratic form is,

X’ AX

Which is the quadratic form of a matrix.

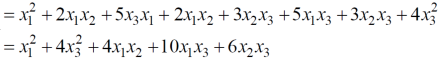

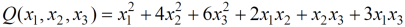

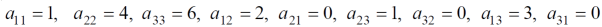

Example: find the real matrix of the following quadratic form:

Sol. Here we will compare the coefficients with the standard quadratic equation,

We get,

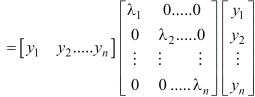

A real symmetric matrix A can be reduced to a diagonal form M’AM = D ………(1)

where M is the normalized orthogonal modal matrix of A and D is its spectral matrix.

Suppose the orthogonal transformation is

X = MY

Q = X’AX = (MY)’ A (MY) = (Y’M’) A (MY) = Y’(M’AM) Y

= Y’DY

= Y’ diag($\lambda_1, \lambda_2, \ldots, \lambda_n$) Y

= $\lambda_1 y_1^2 + \lambda_2 y_2^2 + \cdots + \lambda_n y_n^2$

which is called the canonical form.

Step by step method for Reduction of quadratic form to canonical form by orthogonal transformation –

1. Construct the symmetric matrix A associated with the given quadratic form  .

2. Now form the characteristic equation-

$|A - \lambda I| = 0$

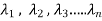

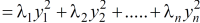

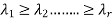

Then find the eigen values of A. Let $\lambda_1, \lambda_2, \ldots, \lambda_n$ be the eigen values arranged in decreasing order, that means $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_n$.

3. An orthogonal canonical reduction of the given quadratic form is

$$\lambda_1 y_1^2 + \lambda_2 y_2^2 + \cdots + \lambda_n y_n^2$$

4. Obtain an ordered system of n orthonormal vectors $v_1, v_2, \ldots, v_n$ consisting of eigen vectors corresponding to the eigen values $\lambda_1, \lambda_2, \ldots, \lambda_n$.

5. Construct the orthogonal matrix P whose columns are the eigen vectors $v_1, v_2, \ldots, v_n$.

6. The required change of basis is given by X = PY.

7. The new basis {$v_1, v_2, \ldots, v_n$} is called the canonical basis and its elements are the principal axes of the given quadratic form.
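The steps above can be traced on a small case. The sketch below uses the illustrative quadratic form $5x^2 + 4xy + 2y^2$, which is an assumption and not the form of the example that follows. Its matrix has eigen values 6 and 1 with orthonormal eigen vectors $(2, 1)/\sqrt{5}$ and $(1, -2)/\sqrt{5}$. The code checks that the substitution X = PY turns the form into $6y_1^2 + y_2^2$.

```python
import math

def quadratic_form(A, x):
    """Evaluate Q(x) = x' A x."""
    n = len(x)
    return sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))

def change_of_basis(P, y):
    """Return X = P Y."""
    return [sum(P[i][j] * y[j] for j in range(len(y))) for i in range(len(P))]

# Step 1: symmetric matrix of 5x^2 + 4xy + 2y^2 (illustrative form)
A = [[5, 2],
     [2, 2]]

# Step 2: eigen values in decreasing order
eigenvalues = (6, 1)

# Steps 4 and 5: orthonormal eigen vectors as the columns of P
s = math.sqrt(5)
P = [[2 / s, 1 / s],
     [1 / s, -2 / s]]

# Steps 3 and 6: compare Q(PY) with the canonical form on a sample Y
y = (0.5, -1.5)
x = change_of_basis(P, y)
original = quadratic_form(A, x)
canonical = eigenvalues[0] * y[0] ** 2 + eigenvalues[1] * y[1] ** 2

print(math.isclose(original, canonical))  # True, up to rounding
```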

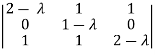

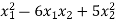

Example: Find the orthogonal canonical form of the quadratic form.

5

Sol. The matrix form of this quadratic equation can be written as,

A =

We can find the eigen values of A as –

$|A - \lambda I| = 0$

  = 0

Which gives,

The required orthogonal canonical reduction will be,

8  .