Unit - 4

Probability and Statistics

A random variable is a real-valued function whose domain is the set of possible outcomes of a random experiment and whose range is a subset of the real numbers. It has the following properties:

i) Each particular value of the random variable can be assigned some probability.

ii) Summing the probabilities associated with all the different values of the random variable gives 1.

A random variable is denoted by X, Y, Z, etc.

For example, if a random experiment E consists of tossing a pair of dice, the sum X of the two numbers that turn up takes one of the values 2, 3, 4, …, 12 depending on chance. Then X is a random variable.

Discrete random variable-

A random variable is said to be discrete if it takes either a finite or a countably infinite number of values. Countable means that the values can be arranged in a sequence.

Note- If a random variable takes a finite or countable set of values it is called discrete, and if it takes an uncountably infinite number of values (for example, every value in an interval) it is called a continuous variable.

Discrete probability distributions-

Let X be a discrete variate which is the outcome of some experiment. If the probability that X takes the value xi is pi, then-

P(X = xi) = pi for i = 1, 2, . . .

Where-

- p(xi) ≥ 0 for all values of i

- Σ p(xi) = 1

The set of values xi with their probabilities pi makes a discrete probability distribution of the discrete random variable X.

Probability distribution of a random variable X can be exhibited as follows-

X | x1 | x2 | x3 | … |

P(x) | p(x1) | p(x2) | p(x3) | … |

Example: Find the probability distribution of the number of heads when three coins are tossed simultaneously.

Sol.

Let X be the number of heads in the toss of three coins.

The sample space will be-

{HHH, HHT, HTH, THH, HTT, THT, TTH, TTT}

Here variable X can take the values 0, 1, 2, 3 with the following probabilities-

P[X= 0] = P[TTT] = 1/8

P[X = 1] = P[HTT, THT, TTH] = 3/8

P[X = 2] = P[HHT, HTH, THH] = 3/8

P[X = 3] = P[HHH] = 1/8

Hence the probability distribution of X will be-

X | 0 | 1 | 2 | 3 |

P(x) | 1/8 | 3/8 | 3/8 | 1/8 |
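The table above can be checked by brute-force enumeration. The following is a minimal sketch, assuming fair coins so that all eight outcomes are equally likely; the variable names are illustrative only.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Enumerate all 2^3 equally likely outcomes of three fair coin tosses.
outcomes = list(product("HT", repeat=3))

# Count how many outcomes give each number of heads.
head_counts = Counter(outcome.count("H") for outcome in outcomes)

# Convert the counts to exact probabilities.
distribution = {k: Fraction(n, len(outcomes)) for k, n in sorted(head_counts.items())}

for k, p in distribution.items():
    print(f"P[X = {k}] = {p}")
```

Using `Fraction` keeps the probabilities exact, so they can be compared directly with the entries 1/8, 3/8, 3/8, 1/8.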

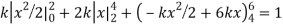

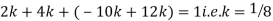

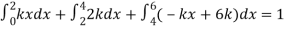

Example: For the following probability distribution of a discrete random variable X,

X | 0 | 1 | 2 | 3 | 4 | 5 |

p(x) | 0 | c | c | 2c | 3c | c |

Find-

1. The value of c.

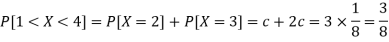

2. P[1<x<4]

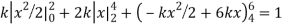

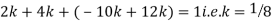

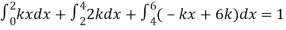

Sol,

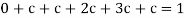

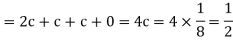

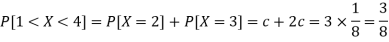

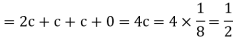

1. We know that the probabilities must sum to 1, i.e. Σ p(x) = 1.

So that-

0 + c + c + 2c + 3c + c = 1

8c = 1

Then c = 1/8

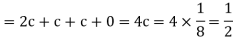

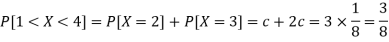

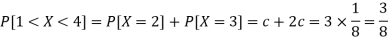

Now, 2. P[1<x<4] = P[X = 2] + P[X = 3] = c + 2c = 3c = 3× 1/8 = 3/8
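The same two steps (normalise, then add the relevant probabilities) can be sketched in code. This is an illustrative check under the assumption that the table entries are the multiples of c shown in the example; the dictionary name `coefficients` is hypothetical.

```python
from fractions import Fraction

# Multiples of c in p(x) for x = 0..5, taken from the table in the example.
coefficients = {0: 0, 1: 1, 2: 1, 3: 2, 4: 3, 5: 1}

# The probabilities must sum to 1, so c = 1 / (sum of the multiples).
c = Fraction(1, sum(coefficients.values()))
pmf = {x: k * c for x, k in coefficients.items()}

# P[1 < X < 4] adds the probabilities of the values strictly between 1 and 4.
p_between = sum(p for x, p in pmf.items() if 1 < x < 4)

print("c =", c)
print("P[1 < X < 4] =", p_between)
```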

Continuous probability distribution-

When a random variable X takes every value in an interval, it gives rise to a continuous distribution of X.

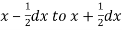

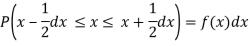

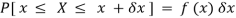

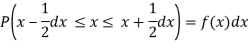

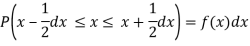

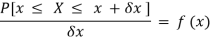

The probability distribution of a continuous variate x is defined by a function f(x) such that the probability of the variate x falling in the interval x − ½ dx to x + ½ dx is f(x) dx.

We express it as-

P(x − ½ dx ≤ x ≤ x + ½ dx) = f(x) dx

Here f(x) is called the probability density function.

Distribution function-

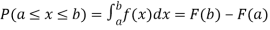

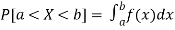

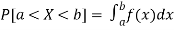

If $F(x) = P(X \le x) = \int_{-\infty}^{x} f(t)\,dt$, then F(x) is called the distribution function of X.

It has the following properties-

1. F(-∞) = 0

2. F(∞) = 1

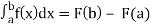

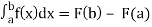

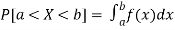

3. $P(a \le x \le b) = \int_a^b f(x)\,dx = F(b) - F(a)$

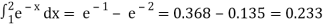

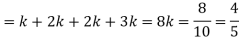

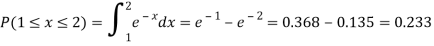

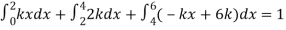

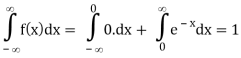

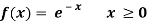

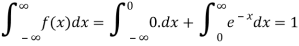

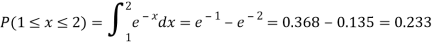

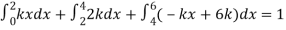

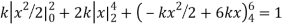

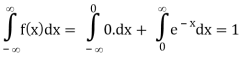

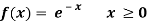

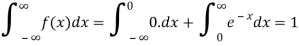

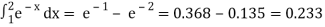

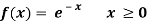

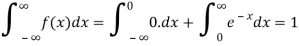

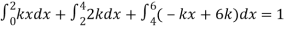

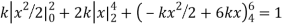

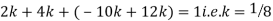

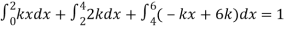

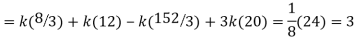

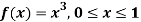

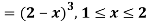

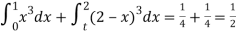

Example: Show that the following function can be defined as a density function and then find P(1 ≤ x ≤ 2).

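$f(x) = e^{-x}$ for x ≥ 0

$f(x) = 0$ for x < 0

Sol.

Here f(x) ≥ 0 for all x, and

$\int_{-\infty}^{\infty} f(x)\,dx = \int_0^{\infty} e^{-x}\,dx = \left[-e^{-x}\right]_0^{\infty} = 1$

So that the function can be defined as a density function.

Now,

$P(1 \le x \le 2) = \int_1^2 e^{-x}\,dx = e^{-1} - e^{-2} \approx 0.2325$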
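Both claims in this example (total area 1, and the probability over [1, 2]) can be checked numerically. The sketch below uses a simple midpoint rule written for illustration; the helper `integrate` and the cut-off 50 standing in for infinity are assumptions of this sketch, not part of the example.

```python
import math

def f(x):
    """Density from the example: e^(-x) for x >= 0, and 0 otherwise."""
    return math.exp(-x) if x >= 0 else 0.0

def integrate(func, a, b, steps=100_000):
    """Midpoint-rule approximation of the integral of func over [a, b]."""
    h = (b - a) / steps
    return h * sum(func(a + (i + 0.5) * h) for i in range(steps))

# The upper limit 50 stands in for infinity; the tail beyond it is negligible.
total_area = integrate(f, 0.0, 50.0)
p_1_to_2 = integrate(f, 1.0, 2.0)
exact = math.exp(-1) - math.exp(-2)  # closed form e^-1 - e^-2

print(total_area, p_1_to_2, exact)
```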

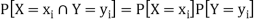

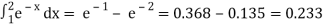

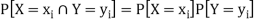

Independent random variables-

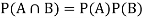

Two discrete random variables X and Y are said to be independent only if $P(X = x_i, Y = y_j) = P(X = x_i)\,P(Y = y_j)$ for all i and j.

Note- Two events A and B are independent only if $P(A \cap B) = P(A)\,P(B)$.

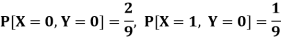

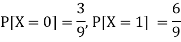

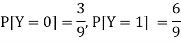

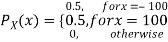

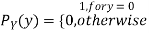

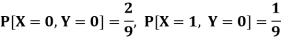

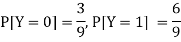

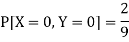

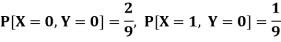

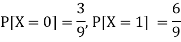

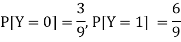

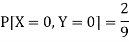

Example: Two discrete random variables X and Y have-

P[X = 0, Y = 0] = 2/9, P[X = 0, Y = 1] = 1/9, P[X = 1, Y = 0] = 1/9

And

P[X = 1, Y = 1] = 5/9.

Check whether X and Y are independent or not.

Sol.

First, we write the given distribution in tabular form-

X/Y | 0 | 1 | P(x) |

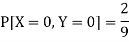

0 | 2/9 | 1/9 | 3/9 |

1 | 1/9 | 5/9 | 6/9 |

P(y) | 3/9 | 6/9 | 1 |

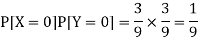

Now-

P[X = 0] · P[Y = 0] = 3/9 × 3/9 = 1/9

But

P[X = 0, Y = 0] = 2/9

So that-

P[X = 0, Y = 0] ≠ P[X = 0] · P[Y = 0]

Hence X and Y are not independent.
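The independence check can be automated by comparing every cell of the joint table with the product of its marginals. This is a minimal sketch using the values in the table of this example; the dictionary layout is an illustrative choice.

```python
from fractions import Fraction

# Joint probabilities P[X = x, Y = y] from the table in the example.
joint = {
    (0, 0): Fraction(2, 9), (0, 1): Fraction(1, 9),
    (1, 0): Fraction(1, 9), (1, 1): Fraction(5, 9),
}

# Marginals are the row sums and column sums of the joint table.
p_x = {x: sum(p for (a, _), p in joint.items() if a == x) for x in (0, 1)}
p_y = {y: sum(p for (_, b), p in joint.items() if b == y) for y in (0, 1)}

# Independence requires the product rule to hold in every cell.
independent = all(
    joint[(x, y)] == p_x[x] * p_y[y] for x in (0, 1) for y in (0, 1)
)

print("P(x):", p_x)
print("P(y):", p_y)
print("independent:", independent)
```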

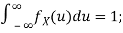

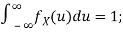

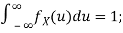

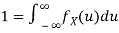

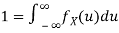

Continuous Random Variables:

A continuous random variable is a random variable where the data can take infinitely many values. For example, a random variable measuring the time taken for something to be done is continuous since there is an infinite number of possible times that can be taken.

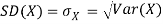

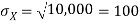

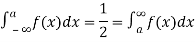

- A continuous random variable is described by a probability density function p(x) with these properties: p(x) ≥ 0, and the area between the x-axis and the curve is 1:

$\int_{-\infty}^{\infty} p(x)\,dx = 1$

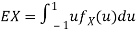

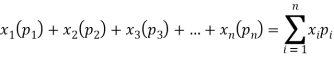

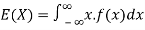

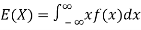

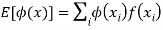

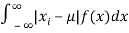

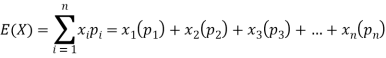

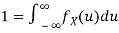

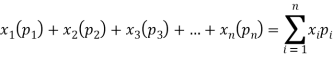

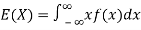

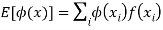

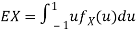

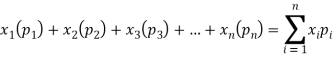

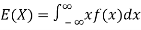

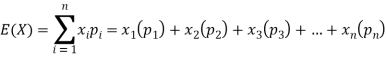

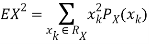

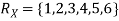

2. The expected value E(x) of a discrete variable is defined as:

$E(x) = \sum_{i=1}^{n} x_i p_i$

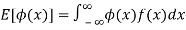

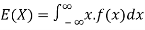

3. The expected value E(x) of a continuous variable is defined as:

$E(x) = \int_{-\infty}^{\infty} x\,p(x)\,dx$

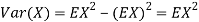

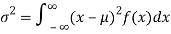

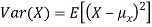

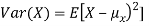

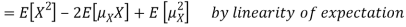

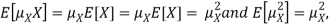

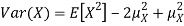

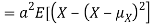

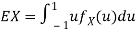

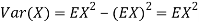

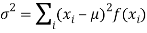

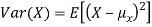

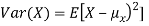

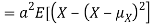

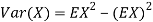

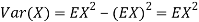

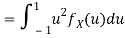

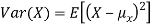

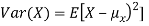

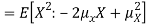

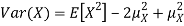

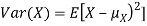

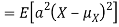

4. The variance of a random variable is defined as Variance(x) = E[(x − E(x))²].

5. If two random variables x and y are independent, then E[xy] = E(x)E(y). The converse does not hold in general.

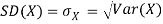

6. The standard deviation of a random variable is defined as σx = √Variance(x).

7. The standard error is used in place of the standard deviation when describing the sample mean:

σmean = σx / √n

8. If x is a normal random variable with parameters μ and σ² (standard deviation σ), we write in symbols: x ~ N(μ, σ²).

9. The sample variance of x1, x2, ..., xn is given by-

$s_x^2 = \dfrac{(x_1 - \bar{x})^2 + \dots + (x_n - \bar{x})^2}{n - 1}$

10. If x1, x2, ..., xn are observations from a random sample, the sample standard deviation is the square root of the sample variance:

$s_x = \sqrt{s_x^2}$

11. The sample covariance of paired observations (x1, y1), ..., (xn, yn) is given by-

$s_{xy} = \dfrac{(x_1 - \bar{x})(y_1 - \bar{y}) + \dots + (x_n - \bar{x})(y_n - \bar{y})}{n - 1}$

12. A random vector is a column vector of random variables.

v = (x1 ... xn)^T

13. The expected value E(v) of a random vector is the vector of the expected values of its components.

If v = (x1 ... xn)^T

E(v) = [E(x1) ... E(xn)]^T

14. The covariance matrix Co-variance(v) of a random vector is the matrix of variances and covariances of its components.

If v = (x1 ... xn)^T, the ijth component of Co-variance(v) is sij, the covariance of xi and xj.
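The sample variance, sample standard deviation and sample covariance from items 9 to 11 can be sketched as follows, using the usual n − 1 divisor. The data values are hypothetical and chosen only so the arithmetic is easy to follow by hand.

```python
import math

def sample_mean(values):
    """Arithmetic mean of the observations."""
    return sum(values) / len(values)

def sample_covariance(xs, ys):
    """Sum of (x_i - x_bar)(y_i - y_bar), divided by n - 1."""
    x_bar, y_bar = sample_mean(xs), sample_mean(ys)
    return sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / (len(xs) - 1)

def sample_variance(xs):
    """The sample variance is the sample covariance of x with itself."""
    return sample_covariance(xs, xs)

# Hypothetical paired observations, for illustration only.
xs = [2.0, 4.0, 4.0, 6.0]
ys = [1.0, 3.0, 2.0, 6.0]

s2_x = sample_variance(xs)          # 8/3
s_x = math.sqrt(s2_x)               # square root of the sample variance
s_xy = sample_covariance(xs, ys)    # 10/3

print(s2_x, s_x, s_xy)
```

Defining the variance through the covariance function mirrors item 14, where the diagonal entries of the covariance matrix are the variances.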

Properties

In properties 1 to 7, c is a constant; x and y are random variables.

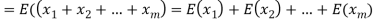

- E(x + y) = E(x) + E(y).

- E(cx) = c E(x).

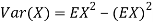

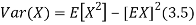

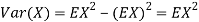

- Variance(x) = E(x²) − [E(x)]²

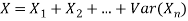

- If x and y are independent, then Variance(x + y) = Variance(x) + Variance(y).

- Variance(x + c) = Variance(x)

- Variance(cx) = c² Variance(x)

- Co-variance(x + c, y) = Co-variance(x, y)

- Co-variance(cx, y) = c Co-variance(x, y)

- Co-variance(x, y + c) = Co-variance(x, y)

- Co-variance(x, cy) = c Co-variance(x, y)

- If x1, x2, ..., xn are independent and N(μ, σ²), then E(x̄) = μ, where x̄ is the sample mean. We say that x̄ is unbiased for μ.

- If x1, x2, ..., xn are independent and N(μ, σ²), then E(s²) = σ², where s² is the sample variance. We say that s² is unbiased for σ².

In properties 8 to 12, v and w are random vectors; b is a constant vector; A is a constant matrix.

8. E(v + w) = E(v) + E(w)

9. E(b) = b

10. E(Av) = A E(v)

11. Co-variance(v + b) = Co-variance(v)

12. Co-variance(Av) = A Co-variance(v) A^T
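Two of the scalar properties listed above, Variance(x + c) = Variance(x) and Variance(cx) = c² Variance(x), can be verified exactly on a small distribution. The sketch below assumes a fair die as the test distribution and c = 3; both choices are arbitrary.

```python
from fractions import Fraction

def variance(pmf):
    """Variance of a discrete distribution given as {value: probability}."""
    mean = sum(x * p for x, p in pmf.items())
    return sum((x - mean) ** 2 * p for x, p in pmf.items())

# Hypothetical test distribution: a fair six-sided die.
pmf_x = {k: Fraction(1, 6) for k in range(1, 7)}
c = 3  # arbitrary non-zero constant

# Shifting or scaling the values leaves the probabilities unchanged.
pmf_shifted = {x + c: p for x, p in pmf_x.items()}
pmf_scaled = {c * x: p for x, p in pmf_x.items()}

print(variance(pmf_x), variance(pmf_shifted), variance(pmf_scaled))
```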

The probability distribution of a discrete random variable is a list of probabilities associated with each of its possible values. It is also sometimes called the probability function or the probability mass function.

Suppose a random variable X may take k different values, with the probability that X = xi defined to be P(X = xi) = pi. The probabilities pi must satisfy the following:

1: 0 ≤ pi ≤ 1 for each i

2: p1 + p2 + ... + pk = 1.

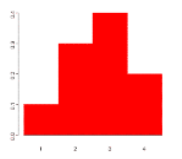

Example

Suppose a variable X can take values 1,2,3, or 4. The probabilities associated with each outcome are described by the following table:

Outcome 1 2 3 4

Probability 0.1 0.3 0.4 0.2

The probability that X is equal to 2 or 3 is the sum of the two probabilities:

P(X = 2 or X = 3) = P(X = 2) + P(X = 3) = 0.3 + 0.4 = 0.7.

Similarly, the probability that X is greater than 1 is equal to 1 - P(X = 1) = 1 - 0.1 = 0.9.
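The two conditions on the probabilities and the calculations in this example can be written as a short check. The function name `is_valid_pmf` and the tolerance are illustrative assumptions; floating-point sums are compared with a small tolerance rather than exactly.

```python
def is_valid_pmf(pmf, tol=1e-9):
    """Check that every probability lies in [0, 1] and that they sum to 1."""
    in_range = all(0 <= p <= 1 for p in pmf.values())
    sums_to_one = abs(sum(pmf.values()) - 1) < tol
    return in_range and sums_to_one

# Probability table from the example.
pmf = {1: 0.1, 2: 0.3, 3: 0.4, 4: 0.2}

p_2_or_3 = pmf[2] + pmf[3]        # mutually exclusive outcomes, so add
p_greater_than_1 = 1 - pmf[1]     # complement of X = 1

print(is_valid_pmf(pmf), p_2_or_3, p_greater_than_1)
```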

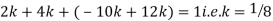

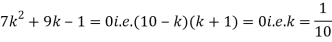

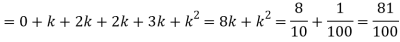

Example: A random variable x has the following probability distribution-

X | 0 | 1 | 2 | 3 | 4 | 5 |

p(x) | 0 | c | c | 2c | 3c | c |

Then find-

1. Value of c.

2. P[X≤3]

3. P[1 < X <4]

Sol.

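We know that for the given probability distribution Σ p(x) = 1.

So that-

1. 0 + c + c + 2c + 3c + c = 1, i.e. 8c = 1, so c = 1/8.

2. P[X ≤ 3] = P[X = 0] + P[X = 1] + P[X = 2] + P[X = 3] = 0 + c + c + 2c = 4c = 1/2.

3. P[1 < X < 4] = P[X = 2] + P[X = 3] = c + 2c = 3c = 3/8.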

Probability Density:

A probability density function (PDF) is a mathematical expression that defines the probability distribution of a continuous random variable, as opposed to a discrete one. The difference is that a discrete random variable takes exact, separated values. For example, a stock price is quoted only to two decimal places (e.g. 32.22), while a continuous variable can take infinitely many values (e.g. 32.22564879…).

When the PDF is plotted, the area under the curve over an interval gives the probability that the variable falls in that interval, and the total area under the curve equals 1. More precisely, since the probability that a continuous random variable takes any one exact value is zero, owing to the infinite set of possible values, the PDF is used to determine the probability that the random variable falls within a given range of values.

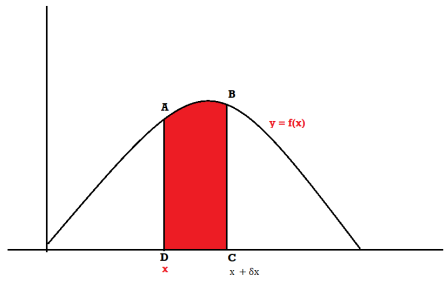

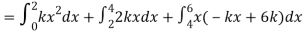

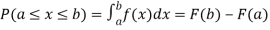

Probability density function-

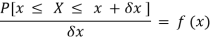

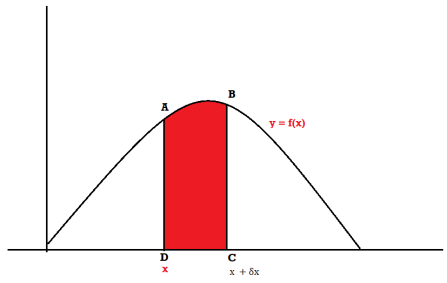

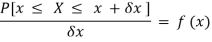

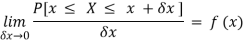

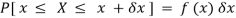

Suppose f(x) is a continuous function of x. Suppose the shaded region ABCD in the figure represents the area bounded by y = f(x), the x-axis and the ordinates at the points x and x + δx, where δx is the length of the interval (x, x + δx). If δx is very small, the curve AB acts as a straight line and hence the shaded region is approximately a rectangle whose area is AD × DC = f(x) δx. This area is the probability that X lies in the interval (x, x + δx)

= P[x ≤ X ≤ x + δx]

Hence,

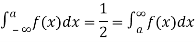

Properties of Probability density function-

1. $f(x) \ge 0$ for all x

2. $\int_{-\infty}^{\infty} f(x)\,dx = 1$

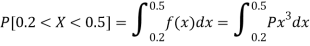

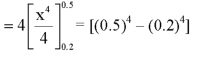

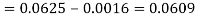

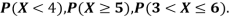

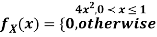

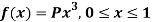

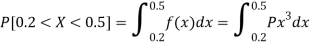

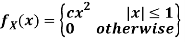

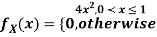

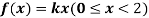

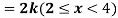

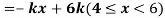

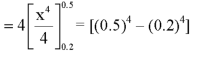

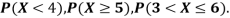

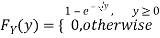

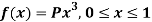

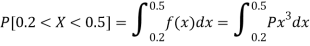

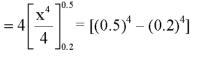

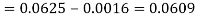

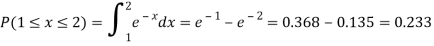

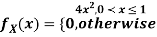

Example: If a continuous random variable X has the following probability density function:

Then find-

1. P[0.2 < X < 0.5]

Sol.

Here f(x) is the probability density function, then-

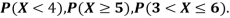

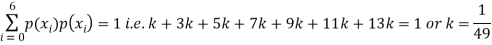

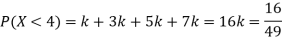

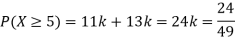

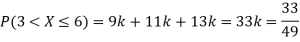

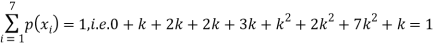

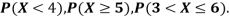

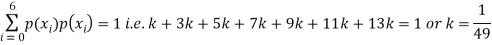

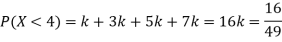

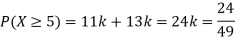

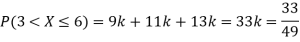

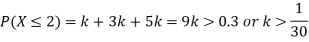

Example. The probability density function of a variable X is

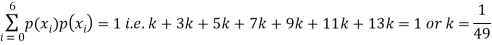

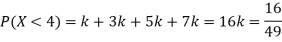

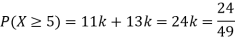

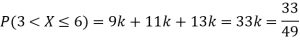

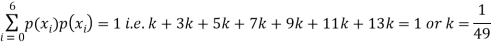

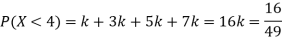

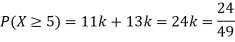

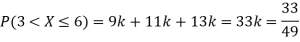

X | 0 | 1 | 2 | 3 | 4 | 5 | 6 |

P(X) | k | 3k | 5k | 7k | 9k | 11k | 13k |

(i) Find

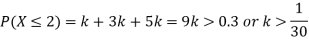

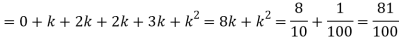

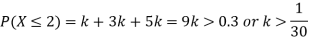

(ii) What will be the minimum value of k so that

Solution. (i) If X is a random variable then

(ii)Thus minimum value of k=1/30.

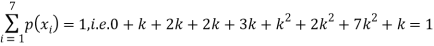

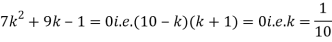

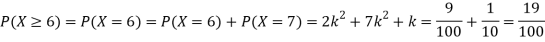

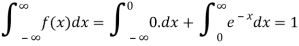

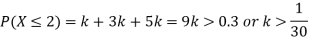

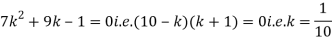

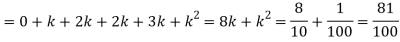

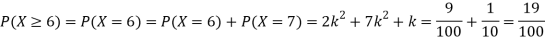

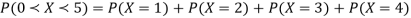

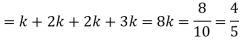

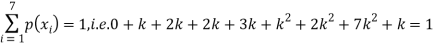

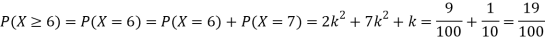

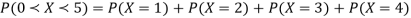

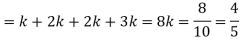

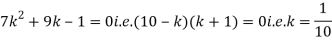

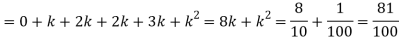

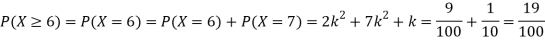

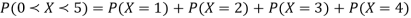

Example. A random variate X has the following probability function

x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

P (x) | 0 | k | 2k | 2k | 3k |  |  |  |

- Find the value of the k.

Solution. (i) If X is a random variable then

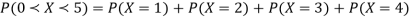

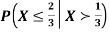

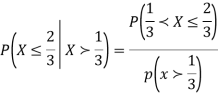

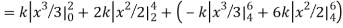

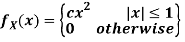

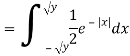

Example:

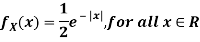

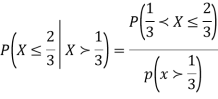

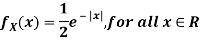

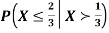

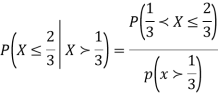

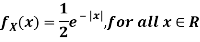

Let X be a random variable with PDF given by

a. Find the constant c.

b. Find EX and Var (X).

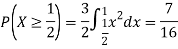

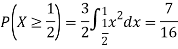

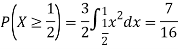

c. Find P(X  ).

Solution.

a. To find c, we can use

Thus we must have  .

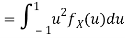

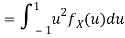

b. To find EX we can write

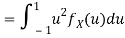

In fact, we could have guessed EX = 0 because the PDF is symmetric around x = 0. To find Var (X) we have

c. To find  we can write

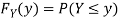

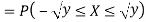

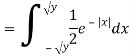

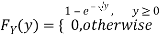

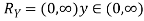

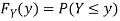

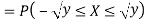

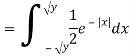

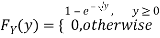

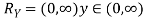

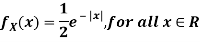

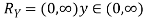

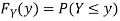

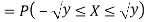

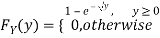

Example:

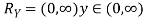

Let X be a continuous random variable with PDF given by

If  , find the CDF of Y.

Solution. First we note that  , we have

Thus,

Example:

Let X be a continuous random variable with PDF

Find  .

Solution. We have

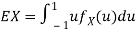

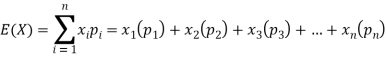

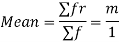

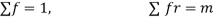

Let a random variable X have a probability distribution which assumes the values $x_1, x_2, \dots, x_n$ with their associated probabilities $p_1, p_2, \dots, p_n$; then the mathematical expectation can be defined as-

$E(X) = \sum_{i=1}^{n} x_i p_i$

The expected value of a random variable X is written as E(X).

The expected value for a continuous random variable is

$E(X) = \int_{-\infty}^{\infty} x f(x)\,dx$

OR

The mean value (μ) of the probability distribution of a variate X is commonly known as its expectation and is denoted by E(X). If f(x) is the probability density function of the variate X, then

$E(X) = \sum_i x_i f(x_i)$ (discrete distribution)

$E(X) = \int_{-\infty}^{\infty} x f(x)\,dx$ (continuous distribution)

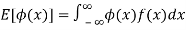

In general, the expectation of any function $\varphi(x)$ is given by

$E[\varphi(x)] = \sum_i \varphi(x_i) f(x_i)$ (discrete distribution)

$E[\varphi(x)] = \int_{-\infty}^{\infty} \varphi(x) f(x)\,dx$ (continuous distribution)

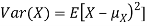

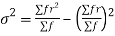

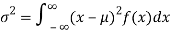

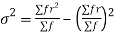

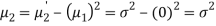

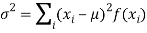

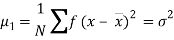

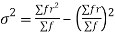

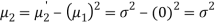

(2) The variance of a distribution is given by

$\sigma^2 = \sum_i (x_i - \mu)^2 f(x_i)$ (discrete distribution)

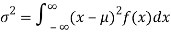

$\sigma^2 = \int_{-\infty}^{\infty} (x - \mu)^2 f(x)\,dx$ (continuous distribution)

Where $\sigma$ is the standard deviation of the distribution.

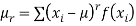

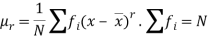

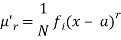

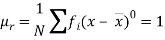

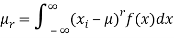

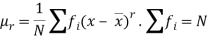

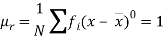

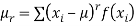

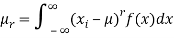

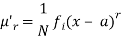

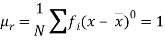

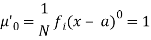

(3) The rth moment about the mean (denoted by $\mu_r$) is defined by

$\mu_r = \sum_i (x_i - \mu)^r f(x_i)$ (discrete function)

$\mu_r = \int_{-\infty}^{\infty} (x - \mu)^r f(x)\,dx$ (continuous function)

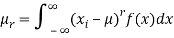

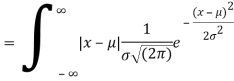

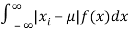

(4) Mean deviation from the mean is given by

$\sum_i |x_i - \mu|\, f(x_i)$ (discrete distribution)

$\int_{-\infty}^{\infty} |x - \mu|\, f(x)\,dx$ (continuous distribution)

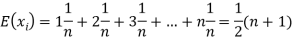

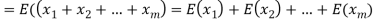

Example. In a lottery, m tickets are drawn at a time out of tickets numbered from 1 to n. Find the expected value of the sum of the numbers on the tickets drawn.

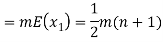

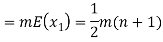

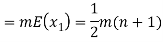

Solution. Let $x_1, x_2, \dots, x_m$ be the variables representing the numbers on the first, second, …, mth ticket. The probability of drawing any particular ticket out of n tickets being in each case 1/n, we have

$E(x_i) = 1 \cdot \frac{1}{n} + 2 \cdot \frac{1}{n} + \dots + n \cdot \frac{1}{n} = \frac{n + 1}{2}$

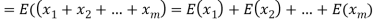

Therefore the expected value of the sum of the numbers on the tickets drawn is $E(x_1 + x_2 + \dots + x_m) = E(x_1) + E(x_2) + \dots + E(x_m) = \frac{m(n + 1)}{2}$.
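For small n and m the lottery result can be confirmed by listing every possible draw. The sketch below assumes the m tickets are drawn without replacement and that every set of m tickets is equally likely; it compares the exact average with the closed form m(n + 1)/2. The sizes n = 10, m = 3 are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def expected_sum(n, m):
    """Exact expected sum when m of the tickets 1..n are drawn at random."""
    draws = list(combinations(range(1, n + 1), m))
    return Fraction(sum(sum(draw) for draw in draws), len(draws))

n, m = 10, 3  # hypothetical sizes, small enough to enumerate
result = expected_sum(n, m)
formula = Fraction(m * (n + 1), 2)

print(result, formula)
```

The agreement reflects linearity of expectation: each drawn ticket has mean (n + 1)/2 even though the draws are not independent.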

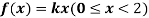

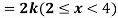

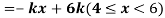

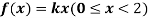

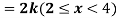

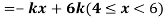

Example. X is a continuous random variable with probability density function given by

Find k and mean value of X.

Solution. Since the total probability is unity.

Mean of X =

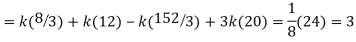

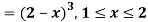

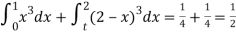

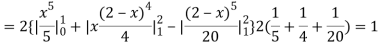

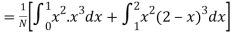

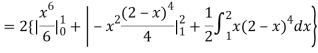

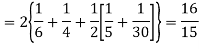

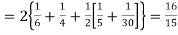

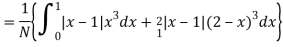

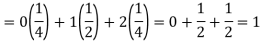

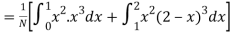

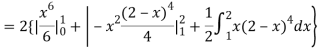

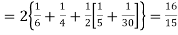

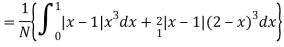

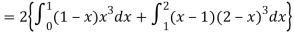

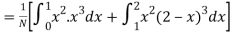

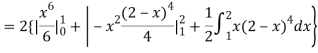

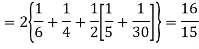

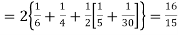

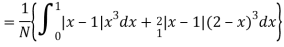

Example. The frequency distribution of a measurable characteristic varying between 0 and 2 is as under

Calculate the standard deviation and also the mean deviation about the mean.

Solution. Total frequency N =

(about the origin)=

(about the origin)=

(about the origin)=

(about the origin)=

Hence,

i.e., standard deviation

Mean deviation about the mean

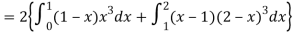

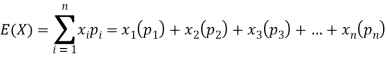

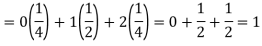

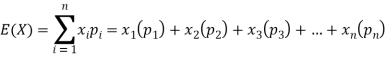

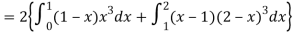

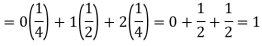

Example: If a random variable X has the following probability distribution in tabular form, what is the expected value of X?

X | 0 | 1 | 2 |

P(x) | 1/4 | 1/2 | 1/4 |

Sol.

We know that-

$E(X) = \sum_i x_i p_i$

So that-

E(X) = 0 × 1/4 + 1 × 1/2 + 2 × 1/4 = 1

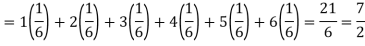

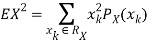

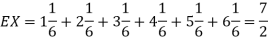

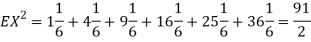

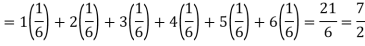

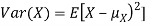

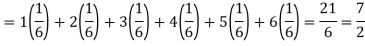

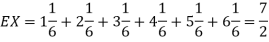

Example: Find the expectation of the number shown when an unbiased die is thrown.

Sol. Let X be a random variable that represents the number on a die when thrown.

X can take the values-

1, 2, 3, 4, 5, 6

With

P[X = 1] = P[X = 2] = P[X = 3] = P[X = 4] = P[X = 5] = P[X = 6] = 1/6

The distribution table will be-

X | 1 | 2 | 3 | 4 | 5 | 6 |

p(x) | 1/6 | 1/6 | 1/6 | 1/6 | 1/6 | 1/6 |

Hence the expectation of the number on the die thrown is-

$E(X) = \sum_i x_i p_i$

So that-

E(X) = (1 + 2 + 3 + 4 + 5 + 6) × 1/6 = 21/6 = 7/2
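The expectation of a discrete distribution is a weighted sum, which takes one line of code. This sketch applies an illustrative helper `expectation` to the die from this example and to the earlier table with values 0, 1, 2 and probabilities 1/4, 1/2, 1/4.

```python
from fractions import Fraction

def expectation(pmf):
    """E(X) = sum of x * p(x) over a dict mapping value -> probability."""
    return sum(x * p for x, p in pmf.items())

# Fair die: each face 1..6 has probability 1/6.
die = {face: Fraction(1, 6) for face in range(1, 7)}

# Distribution from the earlier tabular example.
table = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

e_die = expectation(die)
e_table = expectation(table)

print("E(die) =", e_die)
print("E(table) =", e_table)
```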

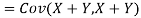

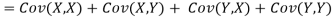

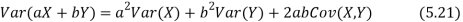

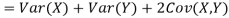

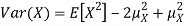

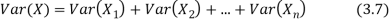

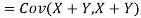

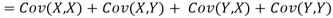

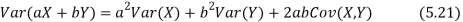

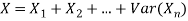

The variance of a sum

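One of the applications of covariance is finding the variance of a sum of several random variables. In particular, if Z = X + Y, then

$\mathrm{Var}(Z) = \mathrm{Cov}(Z, Z) = \mathrm{Cov}(X + Y, X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y) + 2\,\mathrm{Cov}(X, Y)$

More generally, for $a, b \in \mathbb{R}$ we conclude

$\mathrm{Var}(aX + bY) = a^2\,\mathrm{Var}(X) + b^2\,\mathrm{Var}(Y) + 2ab\,\mathrm{Cov}(X, Y)$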
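The standard identity Var(aX + bY) = a² Var(X) + b² Var(Y) + 2ab Cov(X, Y) can be checked exactly on a small joint distribution. The sketch below reuses the 2 × 2 joint table from the independence example; the constants a = 2, b = −3 and the helper `expect` are illustrative assumptions.

```python
from fractions import Fraction

# Joint probabilities P[X = x, Y = y] (the dependent pair from the earlier example).
joint = {
    (0, 0): Fraction(2, 9), (0, 1): Fraction(1, 9),
    (1, 0): Fraction(1, 9), (1, 1): Fraction(5, 9),
}

def expect(g):
    """Expected value of g(X, Y) under the joint distribution."""
    return sum(g(x, y) * p for (x, y), p in joint.items())

a, b = 2, -3  # arbitrary real constants

e_x = expect(lambda x, y: x)
e_y = expect(lambda x, y: y)
var_x = expect(lambda x, y: (x - e_x) ** 2)
var_y = expect(lambda x, y: (y - e_y) ** 2)
cov_xy = expect(lambda x, y: (x - e_x) * (y - e_y))

# Variance of Z = aX + bY computed directly from its definition.
e_z = a * e_x + b * e_y
var_z_direct = expect(lambda x, y: (a * x + b * y - e_z) ** 2)

# The same variance from the covariance formula.
var_z_formula = a ** 2 * var_x + b ** 2 * var_y + 2 * a * b * cov_xy

print(var_z_direct, var_z_formula, cov_xy)
```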

Variance

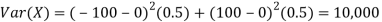

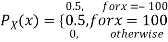

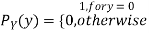

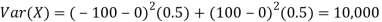

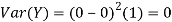

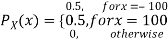

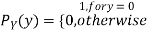

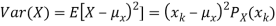

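Consider two random variables X and Y with the following PMFs

$P_X(x) = 0.5$ for $x = -100$, $0.5$ for $x = 100$, and $0$ otherwise (3.3)

$P_Y(y) = 1$ for $y = 0$, and $0$ otherwise (3.4)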

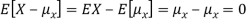

Note that EX = EY = 0. Although both random variables have the same mean value, their distributions are completely different. Y is always equal to its mean of 0, while X is either 100 or -100, quite far from its mean value. The variance is a measure of how spread out the distribution of a random variable is. Here the variance of Y is quite small since its distribution is concentrated at a single value, while the variance of X will be larger since its distribution is more spread out.

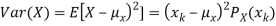

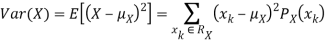

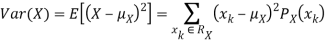

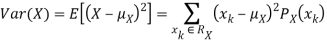

The variance of a random variable X with mean $\mu_X = EX$ is defined as

$\mathrm{Var}(X) = E[(X - \mu_X)^2]$

By definition, the variance of X is the average value of $(X - \mu_X)^2$. Since $(X - \mu_X)^2 \ge 0$, the variance is always larger than or equal to zero. A large value of the variance means that $(X - \mu_X)^2$ is often large, so X often takes values far from its mean. This means that the distribution is very spread out. On the other hand, low variance means that the distribution is concentrated around its average.

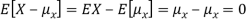

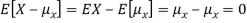

Note that if we did not square the difference between X and its mean, the result would be zero. That is

$E[X - \mu_X] = EX - \mu_X = 0$

X is sometimes below its average and sometimes above its average. Thus $X - \mu_X$ is sometimes negative and sometimes positive, but on average it is zero.

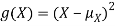

To compute $\mathrm{Var}(X) = E[(X - \mu_X)^2]$, note that we need to find the expected value of $g(X) = (X - \mu_X)^2$, so we can use LOTUS. In particular, we can write

$\mathrm{Var}(X) = \sum_{x_k \in R_X} (x_k - \mu_X)^2 P_X(x_k)$

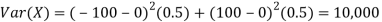

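For example, for X and Y defined in equations 3.3 and 3.4 we have

$\mathrm{Var}(X) = (-100 - 0)^2 (0.5) + (100 - 0)^2 (0.5) = 10{,}000$

$\mathrm{Var}(Y) = (0 - 0)^2 (1) = 0$

As we expect, X has a very large variance while Var(Y) = 0.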

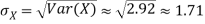

Note that Var(X) has a different unit than X. For example, if X is measured in metres then Var(X) is in metres². To solve this issue we define another measure called the standard deviation, usually shown as $\sigma_X$, which is simply the square root of the variance.

The standard deviation of a random variable X is defined as $SD(X) = \sigma_X = \sqrt{\mathrm{Var}(X)}$.

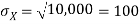

The standard deviation of X has the same unit as X. For X and Y defined in equations 3.3 and 3.4 we have $\sigma_X = \sqrt{10{,}000} = 100$ and $\sigma_Y = \sqrt{0} = 0$.
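The contrast between the two variables can be reproduced numerically. The sketch assumes, as the text describes, that X takes the values −100 and 100 with probability 0.5 each and that Y is always 0; the helper names are illustrative.

```python
import math

def mean(pmf):
    """E(X) for a discrete distribution given as {value: probability}."""
    return sum(x * p for x, p in pmf.items())

def variance(pmf):
    """Var(X) = E[(X - mu)^2], computed term by term (LOTUS)."""
    mu = mean(pmf)
    return sum((x - mu) ** 2 * p for x, p in pmf.items())

# Assumed PMFs: X is +/-100 with equal probability, Y is always 0.
pmf_x = {-100: 0.5, 100: 0.5}
pmf_y = {0: 1.0}

var_x, var_y = variance(pmf_x), variance(pmf_y)
sd_x, sd_y = math.sqrt(var_x), math.sqrt(var_y)

print(mean(pmf_x), mean(pmf_y))   # both means are 0
print(var_x, var_y, sd_x, sd_y)
```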

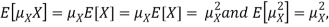

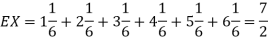

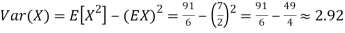

Here is a useful formula for computing the variance.

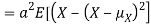

The computational formula for the variance:

$\mathrm{Var}(X) = E[X^2] - [EX]^2$ (3.5)

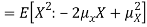

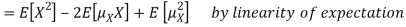

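To prove it note that

$\mathrm{Var}(X) = E[(X - \mu_X)^2] = E[X^2 - 2\mu_X X + \mu_X^2] = E[X^2] - 2E[\mu_X X] + E[\mu_X^2]$ (by linearity of expectation)

Note that for a given random variable X, $\mu_X$ is just a constant real number. Thus $E[\mu_X X] = \mu_X E[X] = \mu_X^2$ and $E[\mu_X^2] = \mu_X^2$, so we have

$\mathrm{Var}(X) = E[X^2] - 2\mu_X^2 + \mu_X^2 = E[X^2] - \mu_X^2$

Equation 3.5 is usually easier to work with compared to $\mathrm{Var}(X) = E[(X - \mu_X)^2]$. To use this equation we can find $E[X^2]$ using LOTUS:

$E[X^2] = \sum_{x_k \in R_X} x_k^2 P_X(x_k)$

And then subtract $\mu_X^2$ to obtain the variance.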

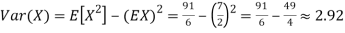

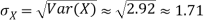

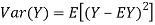

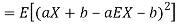

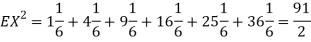

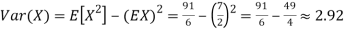

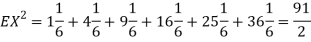

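Example. I roll a fair die and let X be the resulting number. Find E(X), Var(X), and $\sigma_X$.

Solution. We have $R_X = \{1, 2, 3, 4, 5, 6\}$ and $P_X(k) = \frac{1}{6}$ for k = 1, 2, …, 6. Thus we have

$EX = \frac{1 + 2 + 3 + 4 + 5 + 6}{6} = \frac{7}{2}$

$E[X^2] = \frac{1 + 4 + 9 + 16 + 25 + 36}{6} = \frac{91}{6}$

Thus,

$\mathrm{Var}(X) = \frac{91}{6} - \left(\frac{7}{2}\right)^2 = \frac{35}{12} \approx 2.92, \quad \sigma_X = \sqrt{\frac{35}{12}} \approx 1.71$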
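The die calculation is a direct use of the computational formula Var(X) = E[X²] − (EX)². The following sketch carries it out with exact fractions; only the final square root is a floating-point value.

```python
import math
from fractions import Fraction

# Fair die: P_X(k) = 1/6 for k = 1..6.
pmf = {k: Fraction(1, 6) for k in range(1, 7)}

e_x = sum(k * p for k, p in pmf.items())        # E[X]
e_x2 = sum(k ** 2 * p for k, p in pmf.items())  # E[X^2] via LOTUS

var_x = e_x2 - e_x ** 2     # computational formula for the variance
sd_x = math.sqrt(var_x)     # standard deviation as a float

print(e_x, e_x2, var_x, round(sd_x, 4))
```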

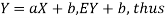

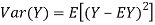

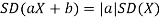

Theorem

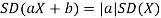

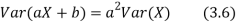

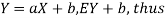

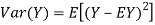

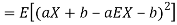

For a random variable X and real numbers a and b,

$\mathrm{Var}(aX + b) = a^2\,\mathrm{Var}(X)$ (3.6)

Proof. If $Y = aX + b$, then $EY = aEX + b$. Thus

$\mathrm{Var}(Y) = E[(Y - EY)^2] = E[(aX + b - aEX - b)^2] = E[a^2 (X - \mu_X)^2] = a^2\,\mathrm{Var}(X)$

From equation 3.6, we conclude that, for standard deviation, $SD(aX + b) = |a|\,SD(X)$. We mentioned that variance is NOT a linear operation. But there is a very important case in which variance behaves like a linear operation, and that is when we look at the sum of independent random variables.

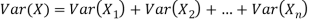

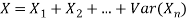

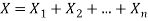

Theorem

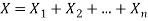

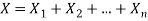

If $X_1, X_2, \dots, X_n$ are independent random variables and $X = X_1 + X_2 + \dots + X_n$, then

$\mathrm{Var}(X) = \mathrm{Var}(X_1) + \mathrm{Var}(X_2) + \dots + \mathrm{Var}(X_n)$

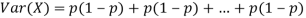

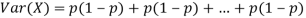

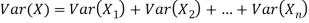

Example. If $X \sim$ Binomial(n, p), find Var(X).

Solution. We know that we can write a Binomial(n, p) random variable as the sum of n independent Bernoulli(p) random variables, i.e.

$X = X_1 + X_2 + \dots + X_n$

If $X_i \sim$ Bernoulli(p) then its variance is

$\mathrm{Var}(X_i) = E[X_i^2] - (EX_i)^2 = p - p^2 = p(1 - p)$

so that $\mathrm{Var}(X) = n\,p(1 - p)$.
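The result Var(X) = np(1 − p) for a Binomial(n, p) variable can be cross-checked against the binomial PMF itself. The sketch below computes the variance directly from the PMF with exact fractions; n = 10 and p = 3/10 are hypothetical values.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(n, p):
    """PMF of Binomial(n, p) as a dict {k: P(X = k)}."""
    return {k: comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(n + 1)}

n, p = 10, Fraction(3, 10)  # hypothetical parameters
pmf = binomial_pmf(n, p)

mean = sum(k * q for k, q in pmf.items())
var = sum(k ** 2 * q for k, q in pmf.items()) - mean ** 2

# Sum of n independent Bernoulli(p) variances, each equal to p(1 - p).
var_from_sum = n * p * (1 - p)

print(mean, var, var_from_sum)
```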

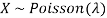

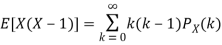

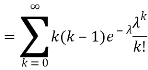

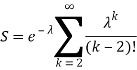

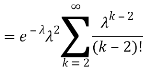

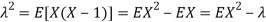

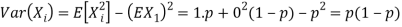

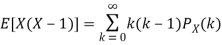

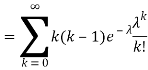

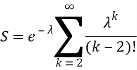

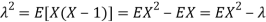

Problem. If $X \sim$ Poisson(λ), find Var(X).

Solution. We already know $EX = \lambda$, thus $\mathrm{Var}(X) = E[X^2] - \lambda^2$. You can find $E[X^2]$ directly using LOTUS; however, it is a little easier to find E[X(X − 1)] first. In particular, using LOTUS, we have

$E[X(X - 1)] = \sum_{k=0}^{\infty} k(k - 1)\, e^{-\lambda} \frac{\lambda^k}{k!} = \lambda^2 e^{-\lambda} \sum_{k=2}^{\infty} \frac{\lambda^{k-2}}{(k - 2)!} = \lambda^2$

So we have $E[X^2] - EX = \lambda^2$. Thus, $E[X^2] = \lambda^2 + \lambda$ and we conclude

$\mathrm{Var}(X) = \lambda^2 + \lambda - \lambda^2 = \lambda$

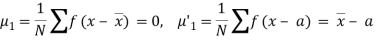

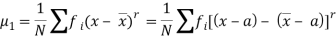

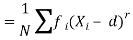

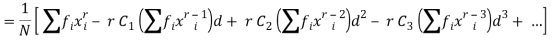

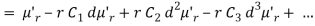

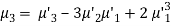

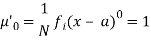

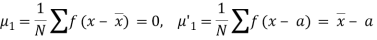

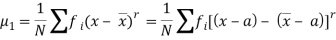

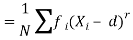

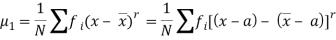

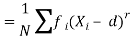

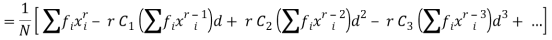

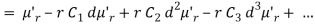

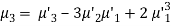

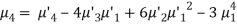

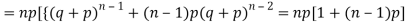

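The rth moment of a variable x about the mean $\bar{x}$, usually denoted by $\mu_r$, is given by

$\mu_r = \frac{1}{N} \sum_i f_i (x_i - \bar{x})^r$, where $N = \sum_i f_i$

The rth moment of a variable x about any point a is defined by

$\mu'_r = \frac{1}{N} \sum_i f_i (x_i - a)^r$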

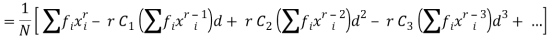

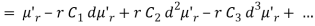

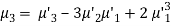

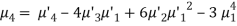

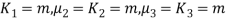

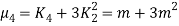

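The relation between moments about the mean and moments about any point:

$\mu_r = \frac{1}{N}\sum f_i (x_i - \bar{x})^r = \frac{1}{N}\sum f_i (X_i - d)^r$

where $X_i = x_i - a$ and $d = \bar{x} - a = \mu'_1$. Expanding by the binomial theorem,

$\mu_r = \mu'_r - {}^{r}C_{1}\,\mu'_{r-1}\,\mu'_1 + {}^{r}C_{2}\,\mu'_{r-2}\,(\mu'_1)^2 - \cdots + (-1)^r (\mu'_1)^r$

In particular

$\mu_2 = \mu'_2 - (\mu'_1)^2$

$\mu_3 = \mu'_3 - 3\mu'_2\mu'_1 + 2(\mu'_1)^3$

$\mu_4 = \mu'_4 - 4\mu'_3\mu'_1 + 6\mu'_2(\mu'_1)^2 - 3(\mu'_1)^4$

Note. 1. The sum of the coefficients of the various terms on the right-hand side is zero.

2. The dimension of each term on the right-hand side is the same as that of the term on the left.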
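The conversion from moments about an arbitrary point a to moments about the mean can be sketched in code. This is an illustrative sketch only: the sample `data`, the reference point `a`, and the helper name `central_from_raw` are assumptions made for the example.

```python
from math import comb

def central_from_raw(raw):
    """Convert moments about a point a (raw[r] = mu'_r, raw[0] = 1)
    into moments about the mean using the binomial expansion."""
    d = raw[1]  # d = mean - a = mu'_1
    central = []
    for r in range(len(raw)):
        mu_r = sum((-1) ** k * comb(r, k) * raw[r - k] * d ** k for k in range(r + 1))
        central.append(mu_r)
    return central

data = [2, 4, 4, 5, 7, 8]  # hypothetical sample, unit frequencies
a = 4                      # arbitrary reference point
n = len(data)

# Moments about a for r = 0..4
raw = [sum((x - a) ** r for x in data) / n for r in range(5)]
via_relation = central_from_raw(raw)

# Moments about the mean computed directly, for comparison
mean = sum(data) / n
direct = [sum((x - mean) ** r for x in data) / n for r in range(5)]

print(via_relation)
print(direct)
```

The two printed lists should agree to rounding error, which is a quick way to confirm the formulas for $\mu_2$, $\mu_3$ and $\mu_4$.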

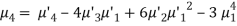

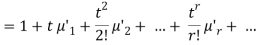

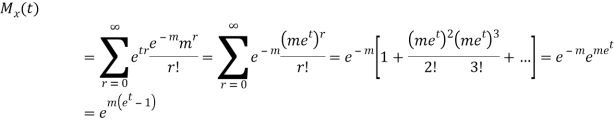

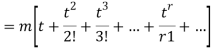

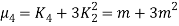

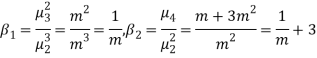

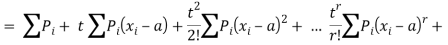

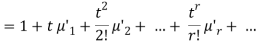

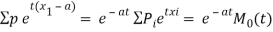

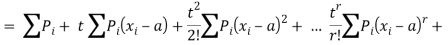

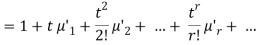

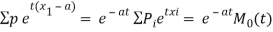

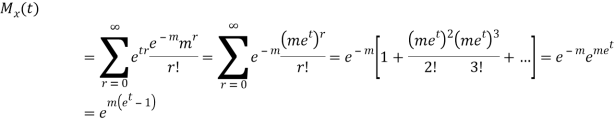

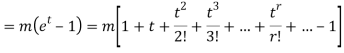

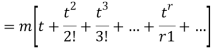

MOMENT GENERATING FUNCTION

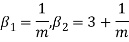

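The moment generating function of the variate x about x = a is defined as the expected value of $e^{t(x-a)}$ and is denoted by $M_a(t)$.

$M_a(t) = \sum p_i\, e^{t(x_i - a)} = 1 + t\mu'_1 + \frac{t^2}{2!}\mu'_2 + \cdots + \frac{t^r}{r!}\mu'_r + \cdots$

where $\mu'_r$ is the moment of order r about a.

Hence $\mu'_r$ = coefficient of $\frac{t^r}{r!}$, or $\mu'_r = \left[\frac{d^r}{dt^r} M_a(t)\right]_{t=0}$

Again $M_a(t) = \sum p_i\, e^{t(x_i - a)} = e^{-at}\sum p_i\, e^{t x_i} = e^{-at} M_0(t)$

Thus the moment generating function about the point a = $e^{-at}$ × moment generating function about the origin.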

A probability distribution is a mathematical function that describes the possible values of a random variable and the probabilities with which they occur. The values lie between the minimum and maximum possible values, and how the probability is spread over this range depends on several factors, including the distribution's mean, standard deviation, skewness, and kurtosis.

The binomial distribution was discovered by the Swiss mathematician Jacob (James) Bernoulli in the year 1700.

This distribution is concerned with trials of a repetitive nature in which only the occurrence or non-occurrence, success or failure, acceptance or rejection, yes or no of a particular event is of interest.

For convenience, we shall call the occurrence of the event ‘a success’ and its non-occurrence ‘a failure’.

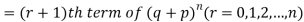

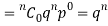

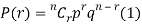

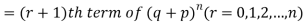

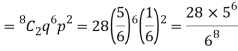

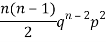

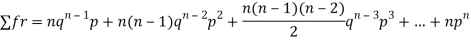

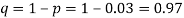

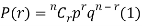

Let there be n independent trials in an experiment, and let the random variable X denote the number of successes in these n trials. Let p be the probability of a success and q that of a failure in a single trial, so that p + q = 1, with p constant for every trial.

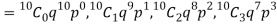

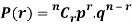

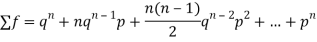

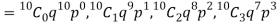

BINOMIAL DISTRIBUTION

To find the probability of the happening of an event once, twice, thrice, …, r times exactly in n trials.

Let the probability of the happening of an event A in one trial be p and its probability of not happening be 1 – p = q.

We assume that there are n trials and that the event A happens r times and does not happen n – r times.

This may be shown as follows

$\underbrace{A\,A \ldots A}_{r \text{ times}}\ \underbrace{\bar{A}\,\bar{A} \ldots \bar{A}}_{n-r \text{ times}}$ (1)

A indicates its happening, $\bar{A}$ its failure, and P(A) = p and P($\bar{A}$) = q.

We see that (1) has the probability

$\underbrace{p\,p \ldots p}_{r \text{ times}}\ \underbrace{q\,q \ldots q}_{n-r \text{ times}} = p^r q^{n-r}$ (2)

Clearly (1) is merely one order of arranging r A's and (n – r) $\bar{A}$'s.

The probability of the event happening r times = (number of different arrangements of r A's and (n – r) $\bar{A}$'s) × $p^r q^{n-r}$

The number of different arrangements of r A's and (n – r) $\bar{A}$'s = ${}^{n}C_{r}$

Probability of the happening of the event r times = ${}^{n}C_{r}\, p^r q^{n-r}$

If r = 0, probability of the event happening 0 times = ${}^{n}C_{0}\, p^0 q^{n} = q^n$

If r = 1, probability of the event happening 1 time = ${}^{n}C_{1}\, p\, q^{n-1}$

If r = 2, probability of the event happening 2 times = ${}^{n}C_{2}\, p^2 q^{n-2}$

If r = 3, probability of the event happening 3 times = ${}^{n}C_{3}\, p^3 q^{n-3}$, and so on.

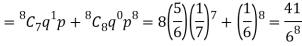

These terms are the successive terms in the expansion of $(q + p)^n$.

Hence it is called Binomial Distribution.

Note - n and p occurring in the binomial distribution are called the parameters of the distribution.

Note - In a binomial distribution:

(i) n, the number of trials is finite.

(ii) each trial has only two possible outcomes usually called success and failure.

(iii) all the trials are independent.

(iv) p (and hence q) is constant for all the trials.
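The formula $P(r) = {}^{n}C_{r}\, p^r q^{n-r}$ can be evaluated directly. Below is a minimal sketch using Python's standard library; the function name `binomial_pmf` and the values `n = 5`, `p = 0.3` are illustrative assumptions.

```python
from math import comb

def binomial_pmf(r, n, p):
    """Probability of exactly r successes in n independent trials,
    each with success probability p (q = 1 - p)."""
    q = 1 - p
    return comb(n, r) * p ** r * q ** (n - r)

n, p = 5, 0.3  # hypothetical parameters
terms = [binomial_pmf(r, n, p) for r in range(n + 1)]
total = sum(terms)  # the terms of (q + p)^n must add up to 1

for r, value in enumerate(terms):
    print(r, round(value, 5))
print("sum =", total)
```

Because the terms are the successive terms of $(q + p)^n$ and q + p = 1, their sum is 1 for any valid n and p.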

Applications of binomial distribution:

1. In problems concerning the number of defectives in a sample from a production line.

2. In estimation of the reliability of systems.

3. In counting the number of rounds fired from a gun that hit a target.

4. In radar detection.

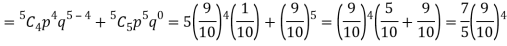

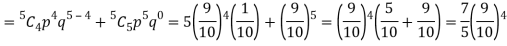

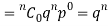

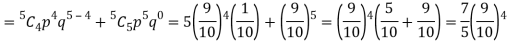

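Example. If on average one ship in every ten is wrecked, find the probability that out of 5 ships expected to arrive, at least 4 will arrive safely.

Solution. Out of 10 ships, one ship is wrecked.

I.e. nine ships out of 10 are safe, so P(safety) = p = $\frac{9}{10}$ and q = $\frac{1}{10}$.

P(at least 4 ships out of 5 are safe) = P(4 or 5) = P(4) + P(5)

$= {}^{5}C_{4}\left(\frac{9}{10}\right)^{4}\left(\frac{1}{10}\right) + {}^{5}C_{5}\left(\frac{9}{10}\right)^{5} = 0.32805 + 0.59049 = 0.91854$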

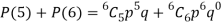

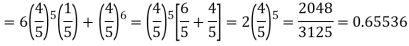

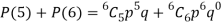

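Example. The overall percentage of failures in a certain examination is 20. If 6 candidates appear in the examination, what is the probability that at least five pass the examination?

Solution. Probability of failure = q = 20% = $\frac{1}{5}$

Probability of passing = p = 1 – q = $\frac{4}{5}$

Probability of at least 5 passes = P(5 or 6) = P(5) + P(6)

$= {}^{6}C_{5}\left(\frac{4}{5}\right)^{5}\left(\frac{1}{5}\right) + {}^{6}C_{6}\left(\frac{4}{5}\right)^{6} = 0.393216 + 0.262144 = 0.65536$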

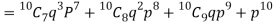

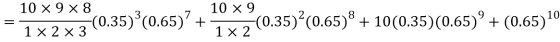

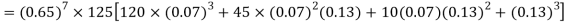

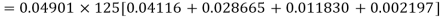

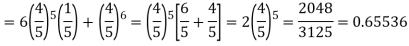

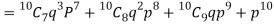

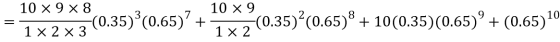

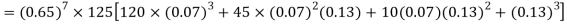

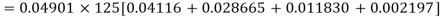

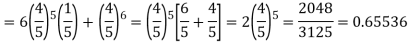

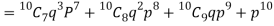

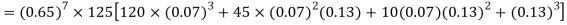

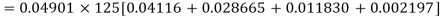

Example. The probability that a man aged 60 will live to be 70 is 0.65. What is the probability that out of 10 men, now 60, at least seven will live to be 70?

Solution. The probability that a man aged 60 will live to be 70 = p = 0.65, so q = 1 – p = 0.35

Number of men = n = 10

Probability that at least 7 men will live to 70 = P(7 or 8 or 9 or 10)

= P(7) + P(8) + P(9) + P(10)

$= {}^{10}C_{7}(0.65)^{7}(0.35)^{3} + {}^{10}C_{8}(0.65)^{8}(0.35)^{2} + {}^{10}C_{9}(0.65)^{9}(0.35) + (0.65)^{10} \approx 0.5138$

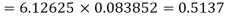

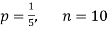

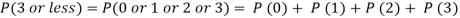

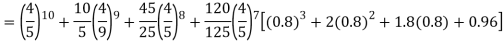

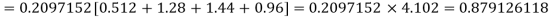

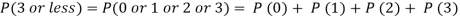

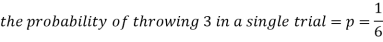

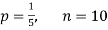

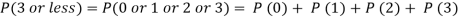

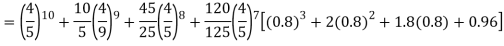

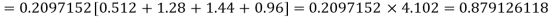

Example. Assuming that 20% of the population of a city are literate, so that the chance of an individual being literate is $\frac{1}{5}$, and assuming that a hundred investigators each take 10 individuals to see whether they are literate, how many investigators would you expect to report that 3 or fewer were literate?

Solution. Here p = $\frac{1}{5}$, q = $\frac{4}{5}$, n = 10.

$P(r \le 3) = \sum_{r=0}^{3} {}^{10}C_{r}\left(\frac{1}{5}\right)^{r}\left(\frac{4}{5}\right)^{10-r} = 0.879126118$

Required number of investigators = 0.879126118 × 100 = 87.9126118

= 88 approximately
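The four worked examples above (ships, examination, men aged 60, investigators) all sum binomial terms over a range of r. A minimal sketch that reproduces those sums is given below; the helper names `prob_at_least` and `prob_at_most` are assumptions introduced for this illustration.

```python
from math import comb

def binomial_pmf(r, n, p):
    """P(exactly r successes in n trials with success probability p)."""
    return comb(n, r) * p ** r * (1 - p) ** (n - r)

def prob_at_least(k, n, p):
    """P(k or more successes)."""
    return sum(binomial_pmf(r, n, p) for r in range(k, n + 1))

def prob_at_most(k, n, p):
    """P(k or fewer successes)."""
    return sum(binomial_pmf(r, n, p) for r in range(0, k + 1))

ships = prob_at_least(4, 5, 0.9)        # at least 4 of 5 ships arrive safely
passes = prob_at_least(5, 6, 0.8)       # at least 5 of 6 candidates pass
survivors = prob_at_least(7, 10, 0.65)  # at least 7 of 10 men live to 70
literate = prob_at_most(3, 10, 0.2)     # 3 or fewer of 10 individuals literate
investigators = 100 * literate          # expected number out of 100 investigators

print(ships, passes, survivors, literate, investigators)
```

The printed values should match the hand computations: about 0.91854, 0.65536, 0.5138, 0.8791 and 88 investigators after rounding.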

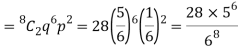

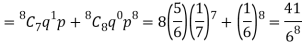

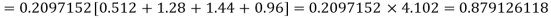

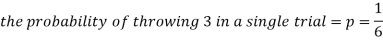

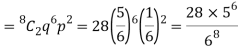

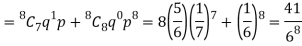

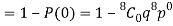

Example: A die is thrown 8 times then find the probability that 3 will show-

1. Exactly 2 times

2. At least 7 times

3. At least once

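Sol.

Here n = 8, and the probability of getting 3 in a single throw is p = $\frac{1}{6}$, so q = $\frac{5}{6}$.

As we know that-

$P(r) = {}^{n}C_{r}\, p^r q^{n-r}$

Then-

1. Probability of getting 3 exactly 2 times will be-

$P(2) = {}^{8}C_{2}\left(\frac{1}{6}\right)^{2}\left(\frac{5}{6}\right)^{6} = \frac{28 \times 15625}{6^8} \approx 0.2605$

2. Probability of getting 3 at least 7 times (7 or 8 times) will be-

$P(7) + P(8) = {}^{8}C_{7}\left(\frac{1}{6}\right)^{7}\left(\frac{5}{6}\right) + \left(\frac{1}{6}\right)^{8} = \frac{41}{6^8} \approx 0.0000244$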

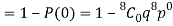

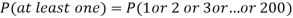

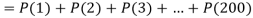

3. Probability of getting 3 at least once (1 or 2 or 3 or 4 or 5 or 6 or 7 or 8 times)-

= P(1) + P(2) + P(3) + P(4) + P(5) + P(6) + P(7) + P(8)

$= 1 - P(0) = 1 - \left(\frac{5}{6}\right)^{8} \approx 0.7674$
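The three parts of the die example can be reproduced with exact rational arithmetic. This is a small sketch using Python's `fractions` module; the variable names are illustrative.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(r, n, p):
    """Exact binomial probability when p is a Fraction."""
    return comb(n, r) * p ** r * (1 - p) ** (n - r)

n = 8
p = Fraction(1, 6)  # probability that a single throw shows 3

exactly_two = binomial_pmf(2, n, p)
at_least_seven = binomial_pmf(7, n, p) + binomial_pmf(8, n, p)
at_least_once = 1 - binomial_pmf(0, n, p)  # complement of "never"

print(exactly_two, float(exactly_two))
print(at_least_seven, float(at_least_seven))
print(at_least_once, float(at_least_once))
```

Using the complement 1 – P(0) for part 3 avoids adding eight separate terms.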

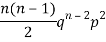

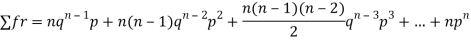

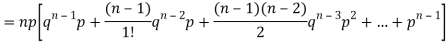

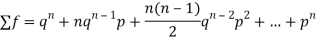

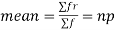

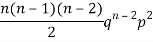

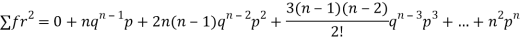

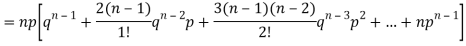

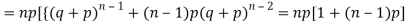

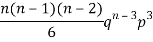

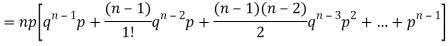

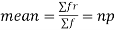

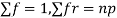

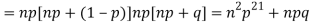

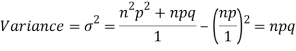

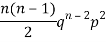

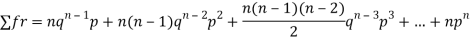

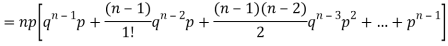

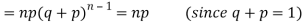

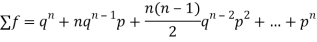

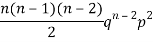

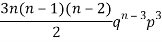

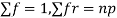

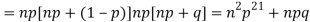

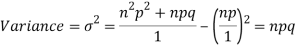

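Mean of the binomial distribution

Successes r | Frequency f | rf |

0 | $q^n$ | 0 |

1 | $n q^{n-1} p$ | $n q^{n-1} p$ |

2 | $\frac{n(n-1)}{2!} q^{n-2} p^2$ | $n(n-1)\, q^{n-2} p^2$ |

3 | $\frac{n(n-1)(n-2)}{3!} q^{n-3} p^3$ | $\frac{n(n-1)(n-2)}{2!} q^{n-3} p^3$ |

….. | …… | …. |

n | $p^n$ | $n p^n$ |

Since $\sum f = (q + p)^n = 1$,

$\text{Mean} = \frac{\sum fr}{\sum f} = np\left[q^{n-1} + (n-1)q^{n-2}p + \cdots + p^{n-1}\right] = np\,(q + p)^{n-1} = np$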

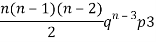

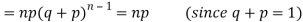

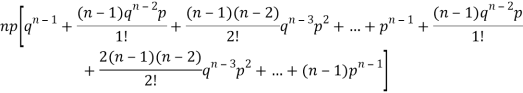

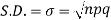

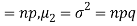

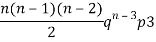

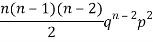

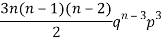

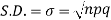

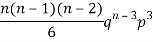

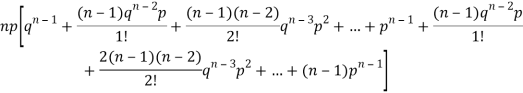

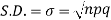

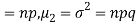

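STANDARD DEVIATION OF BINOMIAL DISTRIBUTION

Successes r | Frequency f | $r^2 f$ |

0 | $q^n$ | 0 |

1 | $n q^{n-1} p$ | $n q^{n-1} p$ |

2 | $\frac{n(n-1)}{2!} q^{n-2} p^2$ | $2n(n-1)\, q^{n-2} p^2$ |

3 | $\frac{n(n-1)(n-2)}{3!} q^{n-3} p^3$ | $\frac{3n(n-1)(n-2)}{2} q^{n-3} p^3$ |

….. | …… | …. |

n | $p^n$ | $n^2 p^n$ |

We know that $\sigma^2 = \frac{\sum f r^2}{\sum f} - \left(\frac{\sum f r}{\sum f}\right)^2$ (1)

r is the deviation of items (successes) from 0.

Here $\sum f = 1$, $\sum f r = np$ and $\sum f r^2 = np\left[1 + (n-1)p\right] = np + n(n-1)p^2$.

Putting these values in (1) we have

$\sigma^2 = np + n(n-1)p^2 - n^2p^2 = np(1 - p) = npq$, so $\sigma = \sqrt{npq}$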

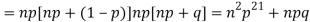

Hence for the binomial distribution, Mean = $np$ and standard deviation $\sigma = \sqrt{npq}$.

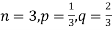

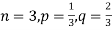

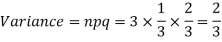

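Example. A die is tossed thrice. Success is getting 1 or 6 on a toss. Find the mean and variance of the number of successes.

Solution. Here n = 3, p = $\frac{2}{6} = \frac{1}{3}$ and q = $\frac{2}{3}$.

Mean = np = $3 \times \frac{1}{3} = 1$

Variance = npq = $3 \times \frac{1}{3} \times \frac{2}{3} = \frac{2}{3}$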

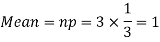

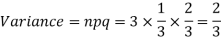

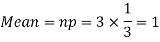

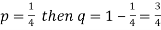

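Example: Find the mean and variance of a binomial distribution with p = 1/4 and n = 10.

Sol.

Here p = $\frac{1}{4}$, q = 1 – p = $\frac{3}{4}$ and n = 10.

Mean = np = $10 \times \frac{1}{4} = 2.5$

Variance = npq = $10 \times \frac{1}{4} \times \frac{3}{4} = 1.875$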
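The results Mean = np and Variance = npq can be confirmed by summing over the distribution directly, in the same way as the frequency tables above. The sketch below does this for the example with n = 10 and p = 1/4; the variable names are illustrative.

```python
from math import comb

n, p = 10, 0.25
q = 1 - p

# Frequencies f(r) = nCr p^r q^(n-r), r = 0..n
f = [comb(n, r) * p ** r * q ** (n - r) for r in range(n + 1)]

sum_f = sum(f)                                   # should be 1
sum_fr = sum(r * fr for r, fr in enumerate(f))   # sum of r * f
sum_fr2 = sum(r * r * fr for r, fr in enumerate(f))  # sum of r^2 * f

mean = sum_fr / sum_f
variance = sum_fr2 / sum_f - mean ** 2

print(mean, n * p)
print(variance, n * p * q)
```

Each printed pair should agree: 2.5 for the mean and 1.875 for the variance.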

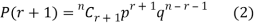

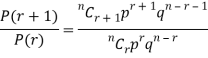

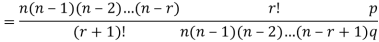

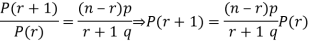

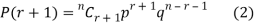

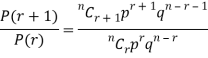

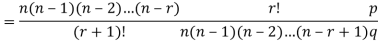

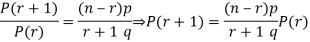

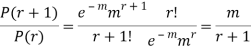

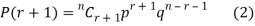

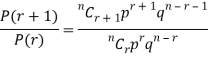

RECURRENCE RELATION FOR THE BINOMIAL DISTRIBUTION

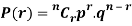

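By Binomial Distribution

$P(r) = {}^{n}C_{r}\, p^r q^{n-r}$ (1)

$P(r+1) = {}^{n}C_{r+1}\, p^{r+1} q^{n-r-1}$ (2)

On dividing (2) by (1), we get

$\frac{P(r+1)}{P(r)} = \frac{n-r}{r+1}\cdot\frac{p}{q}$, i.e. $P(r+1) = \frac{n-r}{r+1}\cdot\frac{p}{q}\cdot P(r)$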
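The recurrence relation gives a convenient way to build the whole distribution from P(0) = q^n without computing any binomial coefficients. A minimal sketch, assuming 0 < p < 1 so that q is non-zero; the function name `binomial_probs` is an assumption for this illustration.

```python
def binomial_probs(n, p):
    """Return [P(0), P(1), ..., P(n)] using
    P(r+1) = (n - r) / (r + 1) * (p / q) * P(r), starting from P(0) = q^n."""
    q = 1 - p
    probs = [q ** n]
    for r in range(n):
        probs.append(probs[-1] * (n - r) / (r + 1) * p / q)
    return probs

probs = binomial_probs(6, 0.8)  # the examination example: n = 6, p = 0.8
at_least_five = probs[5] + probs[6]

print(probs)
print(at_least_five)
```

For n = 6 and p = 0.8 the last two terms add up to about 0.65536, the same value obtained from the direct formula.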

Key takeaways:

- Binomial distribution: $P(r) = {}^{n}C_{r}\, p^r q^{n-r}$

- n and p occurring in the binomial distribution are called the parameters of the distribution.

- Mean = $np$

- S.D. = $\sigma = \sqrt{npq}$

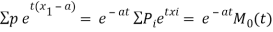

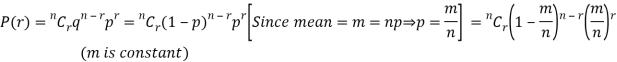

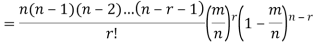

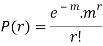

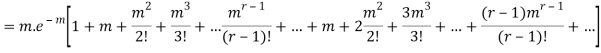

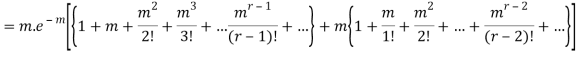

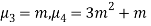

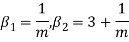

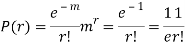

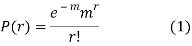

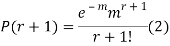

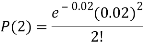

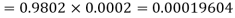

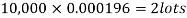

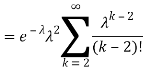

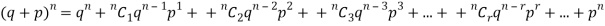

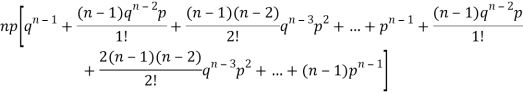

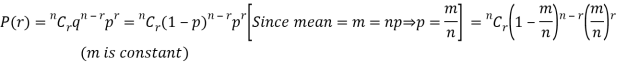

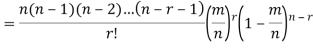

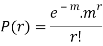

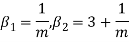

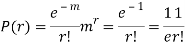

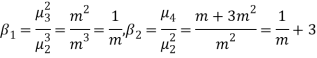

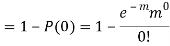

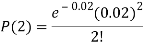

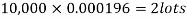

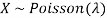

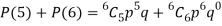

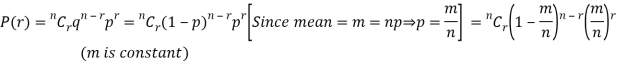

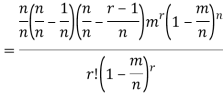

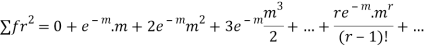

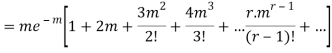

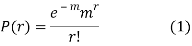

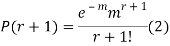

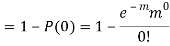

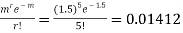

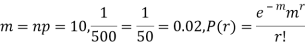

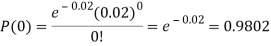

Poisson distribution is a particular limiting form of the Binomial distribution when p (or q) is very small and n is large enough.

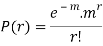

The Poisson distribution is

$P(r) = \frac{e^{-m} m^{r}}{r!}$, r = 0, 1, 2, …

where m is the mean of the distribution.

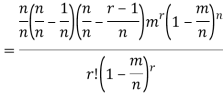

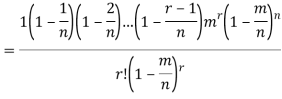

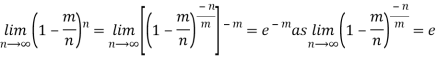

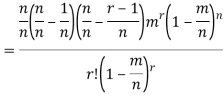

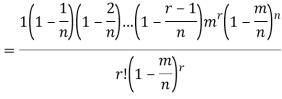

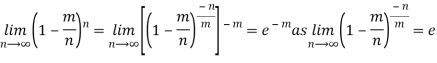

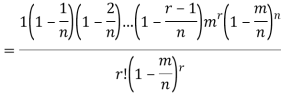

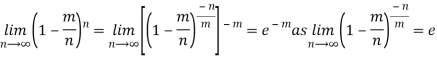

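Proof. In the binomial distribution, with mean m = np so that $p = \frac{m}{n}$ and $q = 1 - \frac{m}{n}$,

$P(r) = {}^{n}C_{r}\, p^r q^{n-r} = \frac{n(n-1)\cdots(n-r+1)}{r!}\left(\frac{m}{n}\right)^{r}\left(1 - \frac{m}{n}\right)^{n-r}$

$= \frac{m^r}{r!}\left(1 - \frac{1}{n}\right)\left(1 - \frac{2}{n}\right)\cdots\left(1 - \frac{r-1}{n}\right)\left(1 - \frac{m}{n}\right)^{n}\left(1 - \frac{m}{n}\right)^{-r}$

Taking limits when n tends to infinity (with m fixed), each bracket of the form $1 - \frac{k}{n}$ tends to 1 and $\left(1 - \frac{m}{n}\right)^{n}$ tends to $e^{-m}$, so

$P(r) = \frac{e^{-m} m^{r}}{r!}$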
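The limiting argument can be illustrated numerically by holding m = np fixed and letting n grow. The sketch below uses the hypothetical values m = 2 and r = 3; these are chosen only for illustration.

```python
from math import comb, exp, factorial

def binomial_pmf(r, n, p):
    return comb(n, r) * p ** r * (1 - p) ** (n - r)

def poisson_pmf(r, m):
    return exp(-m) * m ** r / factorial(r)

m, r = 2.0, 3  # hypothetical mean and number of successes
target = poisson_pmf(r, m)

# Binomial probability with p = m / n for increasing n
errors = []
for n in (10, 100, 1000, 10000):
    approx = binomial_pmf(r, n, m / n)
    errors.append(abs(approx - target))
    print(n, approx, target)
```

The gap between the binomial and Poisson values shrinks as n increases, which is what the proof predicts.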

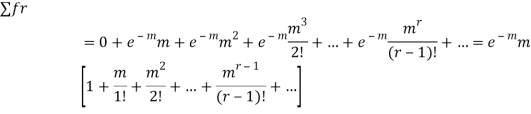

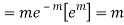

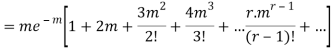

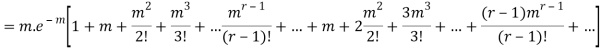

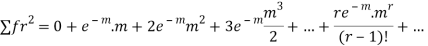

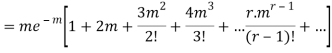

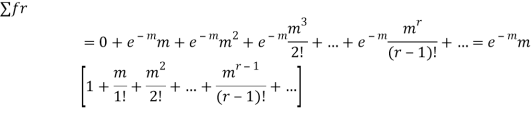

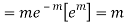

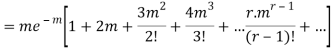

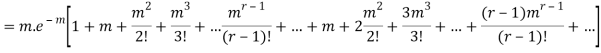

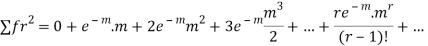

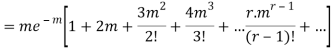

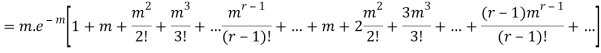

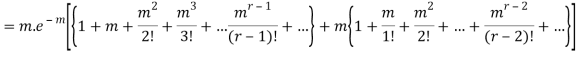

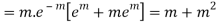

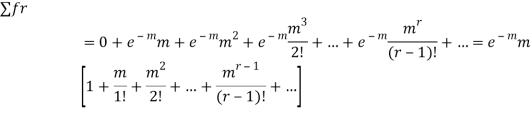

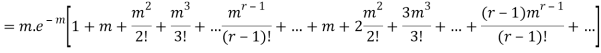

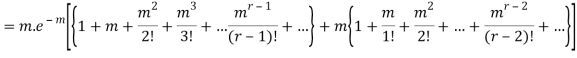

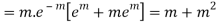

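MEAN OF POISSON DISTRIBUTION

Successes r | Frequency f | f.r |

0 | $e^{-m}$ | 0 |

1 | $m e^{-m}$ | $m e^{-m}$ |

2 | $\frac{m^2 e^{-m}}{2!}$ | $m^2 e^{-m}$ |

3 | $\frac{m^3 e^{-m}}{3!}$ | $\frac{m^3 e^{-m}}{2!}$ |

… | … | … |

r | $\frac{m^r e^{-m}}{r!}$ | $\frac{m^r e^{-m}}{(r-1)!}$ |

… | … | … |

$\text{Mean} = \frac{\sum fr}{\sum f} = m e^{-m}\left[1 + m + \frac{m^2}{2!} + \cdots\right] = m e^{-m} e^{m} = m$, since $\sum f = 1$.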

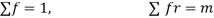

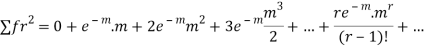

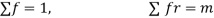

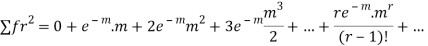

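STANDARD DEVIATION OF POISSON DISTRIBUTION

Successes r | Frequency f | Product rf | Product $r^2 f$ |

0 | $e^{-m}$ | 0 | 0 |

1 | $m e^{-m}$ | $m e^{-m}$ | $m e^{-m}$ |

2 | $\frac{m^2 e^{-m}}{2!}$ | $m^2 e^{-m}$ | $2 m^2 e^{-m}$ |

3 | $\frac{m^3 e^{-m}}{3!}$ | $\frac{m^3 e^{-m}}{2!}$ | $\frac{3 m^3 e^{-m}}{2!}$ |

……. | …….. | …….. | …….. |

r | $\frac{m^r e^{-m}}{r!}$ | $\frac{m^r e^{-m}}{(r-1)!}$ | $\frac{r\, m^r e^{-m}}{(r-1)!}$ |

…….. | ……. | …….. | ……. |

$\sum f r^2 = m e^{-m}\left[1 + 2m + \frac{3m^2}{2!} + \cdots\right] = m e^{-m}\left(e^{m} + m e^{m}\right) = m + m^2$

$\sigma^2 = \frac{\sum f r^2}{\sum f} - \left(\frac{\sum f r}{\sum f}\right)^2 = m + m^2 - m^2 = m$, so $\sigma = \sqrt{m}$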

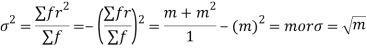

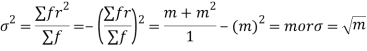

Hence the mean and the variance of a Poisson distribution are each equal to m. Similarly, we can obtain,

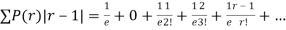

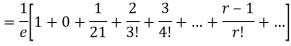

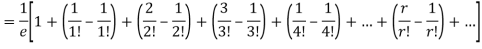

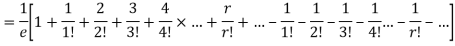

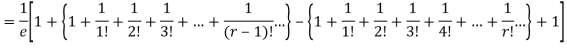

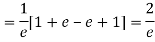

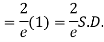

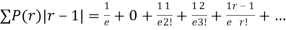

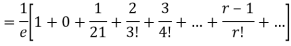

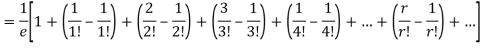

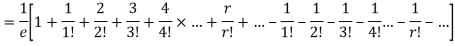

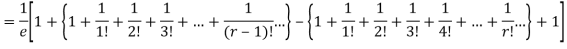

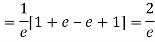

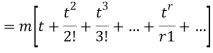

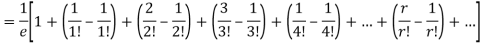

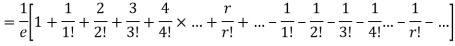

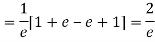

MEAN DEVIATION

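Show that in a Poisson distribution with unit mean, the mean deviation about the mean is 2/e times the standard deviation.

Solution. $P(r) = \frac{e^{-m} m^r}{r!}$. But mean = 1, i.e. m = 1, and S.D. = $\sqrt{m}$ = 1.

Hence $P(r) = \frac{e^{-1}}{r!} = \frac{1}{e\, r!}$

r | P (r) | |r-1| | P(r)|r-1| |

0 | $\frac{1}{e}$ | 1 | $\frac{1}{e}$ |

1 | $\frac{1}{e}$ | 0 | 0 |

2 | $\frac{1}{2!\,e}$ | 1 | $\frac{1}{2!\,e}$ |

3 | $\frac{1}{3!\,e}$ | 2 | $\frac{2}{3!\,e}$ |

4 | $\frac{1}{4!\,e}$ | 3 | $\frac{3}{4!\,e}$ |

….. | ….. | ….. | ….. |

r | $\frac{1}{r!\,e}$ | r-1 | $\frac{r-1}{r!\,e}$ |

Mean Deviation = $\sum P(r)\,|r-1| = \frac{1}{e}\left[1 + \frac{1}{2!} + \frac{2}{3!} + \frac{3}{4!} + \cdots\right]$

$= \frac{1}{e}\left[1 + \sum_{r=2}^{\infty}\left(\frac{1}{(r-1)!} - \frac{1}{r!}\right)\right] = \frac{1}{e}\,[1 + 1] = \frac{2}{e} = \frac{2}{e} \times \text{S.D.}$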
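The value 2/e can be verified by summing the series numerically. This is a short sketch that truncates the infinite sum at 60 terms, which is an assumption that is more than sufficient here because the terms decay factorially.

```python
from math import exp

m = 1.0            # unit mean
p_r = exp(-m)      # P(0) = e^{-1}
mean_deviation = 0.0

for r in range(60):
    mean_deviation += abs(r - m) * p_r
    p_r = p_r * m / (r + 1)  # Poisson recurrence: P(r+1) = m / (r+1) * P(r)

standard_deviation = m ** 0.5
print(mean_deviation, 2 / exp(1) * standard_deviation)
```

Both printed numbers should be approximately 0.7358.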

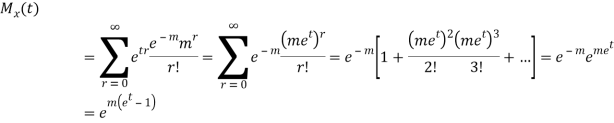

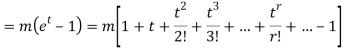

MOMENT GENERATING FUNCTION OF POISSON DISTRIBUTION

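Solution.

Let $M_X(t)$ be the moment generating function, then

$M_X(t) = E\left[e^{tX}\right] = \sum_{r=0}^{\infty} e^{tr}\,\frac{e^{-m} m^r}{r!} = e^{-m}\sum_{r=0}^{\infty}\frac{(m e^{t})^{r}}{r!} = e^{-m} e^{m e^{t}} = e^{m(e^{t} - 1)}$

CUMULANTS

The cumulant generating function $K_X(t)$ is given by

$K_X(t) = \log M_X(t) = m\left(e^{t} - 1\right) = m\left[t + \frac{t^2}{2!} + \frac{t^3}{3!} + \cdots + \frac{t^r}{r!} + \cdots\right]$

Now $\kappa_r$ = rth cumulant = coefficient of $\frac{t^r}{r!}$ in K(t) = m

i.e. $\kappa_r = m$, where r = 1, 2, 3, …

Mean = $\kappa_1 = m$ and variance = $\kappa_2 = m$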

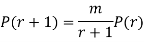

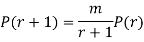

RECURRENCE FORMULA FOR POISSON DISTRIBUTION

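Solution. By Poisson distribution

$P(r) = \frac{e^{-m} m^{r}}{r!}$ (1)

$P(r+1) = \frac{e^{-m} m^{r+1}}{(r+1)!}$ (2)

On dividing (2) by (1) we get

$\frac{P(r+1)}{P(r)} = \frac{m}{r+1}$, i.e. $P(r+1) = \frac{m}{r+1}\,P(r)$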

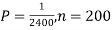

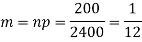

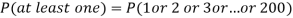

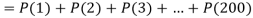

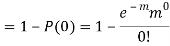

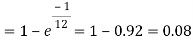

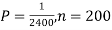

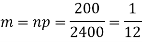

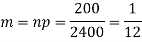

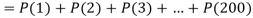

Example. Assume that the probability of an individual coal miner being killed in a mine accident during a year is p. Use an appropriate statistical distribution to calculate the probability that in a mine employing 200 miners, there will be at least one fatal accident in a year.

Solution. Since p is small and n = 200 is large, the Poisson distribution with mean m = np = 200p applies.

P(at least one fatal accident) = 1 – P(0) = $1 - e^{-m} = 1 - e^{-200p}$

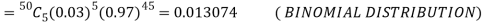

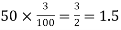

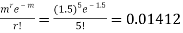

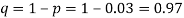

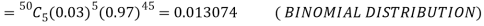

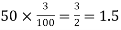

Example. Suppose 3% of bolts made by a machine are defective, the defects occurring at random during production. If bolts are packaged 50 per box, find

(a) the exact probability and

(b) the Poisson approximation to it, that a given box will contain 5 defectives.

Solution. Here p = 0.03, q = 0.97 and n = 50.

(a) The exact (binomial) probability of 5 defective bolts in a lot of 50 is

$P(5) = {}^{50}C_{5}\,(0.03)^{5}(0.97)^{45} \approx 0.0131$

(b) To get the Poisson approximation, m = np = $50 \times 0.03 = 1.5$

Required Poisson approximation = $\frac{e^{-1.5}(1.5)^{5}}{5!} \approx 0.0141$
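The exact binomial value and its Poisson approximation for the bolts example can be compared directly. A minimal sketch using only the standard library:

```python
from math import comb, exp, factorial

n, p, r = 50, 0.03, 5  # 50 bolts per box, 3% defective, 5 defectives

exact = comb(n, r) * p ** r * (1 - p) ** (n - r)  # binomial probability
m = n * p                                         # Poisson mean, 1.5
approx = exp(-m) * m ** r / factorial(r)          # Poisson probability

print(round(exact, 4), round(approx, 4))
```

The two values differ in the third decimal place, which is typical for a Poisson approximation when n is only moderately large.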

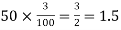

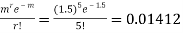

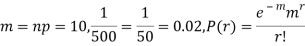

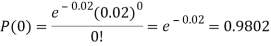

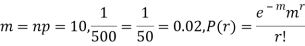

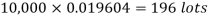

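Example. In a certain factory producing cycle tyres, there is a small chance of 1 in 500 tyres being defective. The tyres are supplied in lots of 10. Using the Poisson distribution, calculate the approximate number of lots containing no defective, one defective, and two defective tyres, respectively, in a consignment of 10,000 lots.

Solution. Here p = $\frac{1}{500}$, n = 10, so m = np = $\frac{10}{500} = 0.02$ and $e^{-0.02} \approx 0.9802$.

S.No. | Probability of defective | Number of lots containing defective |

1. | $P(0) = e^{-0.02} = 0.9802$ | 10000 × 0.9802 = 9802 |

2. | $P(1) = 0.02\, e^{-0.02} = 0.019604$ | 10000 × 0.019604 ≈ 196 |

3. | $P(2) = \frac{(0.02)^2}{2!} e^{-0.02} = 0.00019604$ | 10000 × 0.00019604 ≈ 2 |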
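The table for the tyre example can be generated programmatically. The sketch below assumes the rounding convention of the table above (nearest whole lot).

```python
from math import exp, factorial

p = 1 / 500      # chance that a tyre is defective
lot_size = 10
lots = 10_000

m = lot_size * p  # Poisson mean per lot = 0.02

def poisson_pmf(r, m):
    return exp(-m) * m ** r / factorial(r)

expected_lots = [round(lots * poisson_pmf(r, m)) for r in range(3)]

for r, count in enumerate(expected_lots):
    print(f"{r} defective: about {count} lots")
```

This prints approximately 9802, 196 and 2 lots for zero, one and two defectives.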

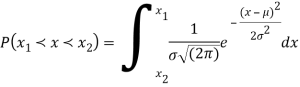

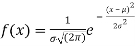

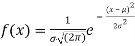

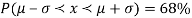

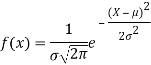

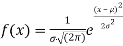

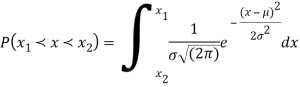

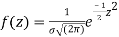

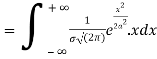

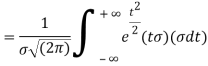

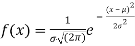

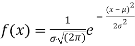

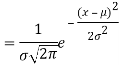

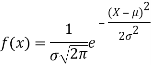

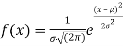

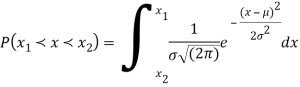

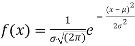

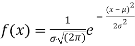

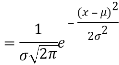

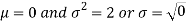

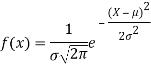

The normal distribution is a continuous distribution. It is derived as the limiting form of the Binomial distribution for large values of n when p and q are not very small.

The normal distribution is given by the equation

$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$ (1)

where $\mu$ = mean, $\sigma$ = standard deviation, $\pi$ = 3.14159…, e = 2.71828…

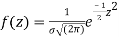

On substituting $z = \frac{x - \mu}{\sigma}$ in (1) we get $f(z) = \frac{1}{\sqrt{2\pi}}\, e^{-\frac{z^2}{2}}$ (2)

Here mean = 0, standard deviation = 1

(2) is known as the standard form of normal distribution.

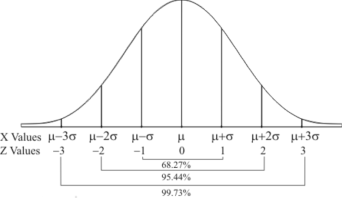

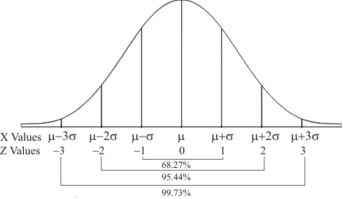

Graph of a normal probability function-

The curve looks like a bell-shaped curve. The top of the bell is exactly above the mean.

If the value of standard deviation is large then the curve tends to flatten out and for small standard deviation, it has a sharp peak.

This is one of the most important probability distributions in statistical analysis.

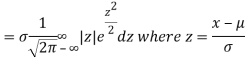

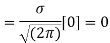

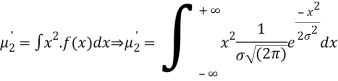

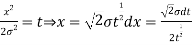

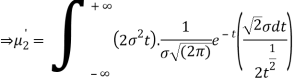

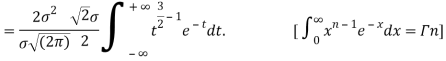

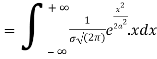

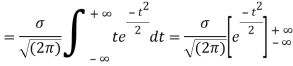

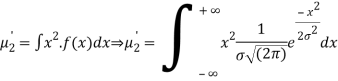

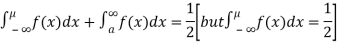

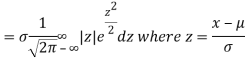

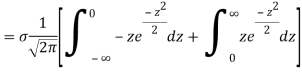

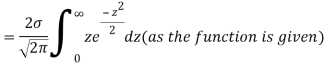

MEAN FOR NORMAL DISTRIBUTION

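Mean $= \int_{-\infty}^{\infty} x f(x)\,dx = \frac{1}{\sigma\sqrt{2\pi}}\int_{-\infty}^{\infty} x\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}\,dx$ [Putting $\frac{x-\mu}{\sigma} = z$, so that $x = \mu + \sigma z$ and $dx = \sigma\,dz$]

$= \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{\infty} (\mu + \sigma z)\, e^{-\frac{z^2}{2}}\,dz = \mu \cdot 1 + \sigma \cdot 0 = \mu$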

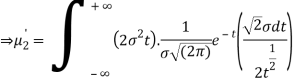

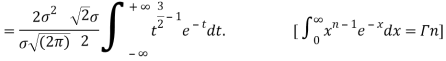

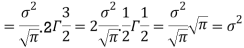

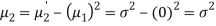

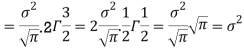

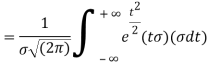

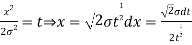

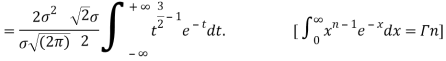

STANDARD DEVIATION FOR NORMAL DISTRIBUTION

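The variance is $\int_{-\infty}^{\infty} (x-\mu)^2 f(x)\,dx$. Put $\frac{x-\mu}{\sigma} = z$, so that $dx = \sigma\,dz$; the integral becomes $\frac{\sigma^2}{\sqrt{2\pi}}\int_{-\infty}^{\infty} z^2 e^{-\frac{z^2}{2}}\,dz = \sigma^2$, and hence the standard deviation is $\sigma$.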

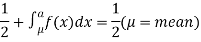

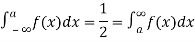

MEDIAN OF THE NORMAL DISTRIBUTION

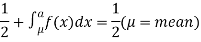

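If a is the median then it divides the total area into two equal halves so that

$\int_{-\infty}^{a} f(x)\,dx = \frac{1}{2} = \int_{a}^{\infty} f(x)\,dx$

where $f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$

Suppose a > mean $\mu$, then

$\int_{-\infty}^{\mu} f(x)\,dx + \int_{\mu}^{a} f(x)\,dx = \frac{1}{2}$

But by symmetry $\int_{-\infty}^{\mu} f(x)\,dx = \frac{1}{2}$. Thus, $\int_{\mu}^{a} f(x)\,dx = 0$, which gives a = $\mu$.

Similarly, when a < mean, we have a = $\mu$.

Thus, median = mean = $\mu$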

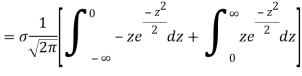

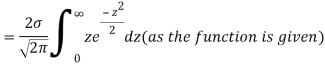

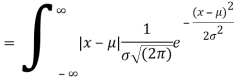

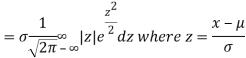

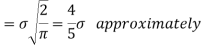

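MEAN DEVIATION ABOUT THE MEAN

Mean deviation $= \int_{-\infty}^{\infty} |x - \mu|\, f(x)\,dx = \sigma\sqrt{\frac{2}{\pi}} \approx 0.7979\,\sigma \approx \frac{4}{5}\sigma$

MODE OF THE NORMAL DISTRIBUTION

We know that the mode is the value of the variate x for which f(x) is maximum. Thus by differential calculus f(x) is maximum if $f'(x) = 0$ and $f''(x) < 0$,

where $f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$

Thus, the mode is $\mu$ and the modal ordinate = $\frac{1}{\sigma\sqrt{2\pi}}$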

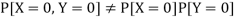

NORMAL CURVE

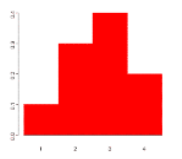

Let us show the binomial distribution graphically. The probabilities of the possible numbers of heads are shown in the given figure.

If the variates (head here) are treated as if they were continuous, the required probability curve will be normal as shown in the above figure by dotted lines.

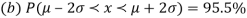

Properties of the normal curve

- The curve is symmetrical about the y-axis. The mean, median and mode coincide at the origin.

- The curve can be drawn if the mean (origin of x) and standard deviation are given. The value of $y_0$ can be calculated from the fact that the area under the curve must be equal to the total number of observations.

- y decreases rapidly as x increases numerically. The curve extends to infinity on either side of the origin.

- (a) $P(\mu - \sigma < x < \mu + \sigma) \approx 68.27\%$ (b) $P(\mu - 2\sigma < x < \mu + 2\sigma) \approx 95.45\%$ (c) $P(\mu - 3\sigma < x < \mu + 3\sigma) \approx 99.73\%$

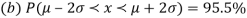

AREA UNDER THE NORMAL CURVE

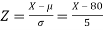

By taking $z = \frac{x - \mu}{\sigma}$ the standard normal curve is formed.

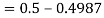

The total area under this curve is 1. The area under the curve is divided into two equal parts by z = 0. The area to the left of z = 0 and the area to the right of z = 0 are each 0.5. The area between the ordinate z = 0 and any other ordinate can be read from the table of areas under the normal curve.

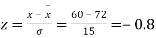

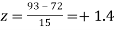

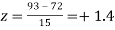

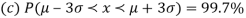

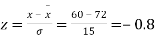

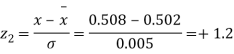

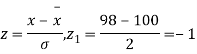

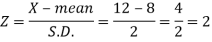

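Example. On a final examination in mathematics, the mean was 72 and the standard deviation was 15. Determine the standard scores of students receiving the grades

(a) 60

(b) 93

(c) 72

Solution. (a) $z = \frac{x - \mu}{\sigma} = \frac{60 - 72}{15} = -0.8$

(b) $z = \frac{93 - 72}{15} = 1.4$

(c) $z = \frac{72 - 72}{15} = 0$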

Example. Find the area under the normal curve in each of the cases

(a) Between z = 0 and z = 1.2

(b) Between z = -0.68 and z = 0

(c) Between z = -0.46 and z = 2.21

(d) Between z = 0.81 and z = 1.94

(e) To the left of z = -0.6

(f) To the right of z = -1.28

Solution. (a) Area between z = 0 and z = 1.2 = 0.3849

(b) Area between z = 0 and z = -0.68 = 0.2518

(c) Required area = (Area between z = 0 and z = 2.21) + (Area between z = 0 and z = -0.46)

= (Area between z = 0 and z = 2.21) + (Area between z = 0 and z = 0.46)

= 0.4865 + 0.1772 = 0.6637

(d) Required area = (Area between z = 0 and z = 1.94) - (Area between z = 0 and z = 0.81)

= 0.4738 - 0.2910 = 0.1828

(e) Required area = 0.5 - (Area between z = 0 and z = 0.6)

= 0.5 - 0.2257 = 0.2743

(f) Required area = (Area between z = 0 and z = -1.28) + 0.5

= 0.3997 + 0.5

= 0.8997
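The table look-ups above can be cross-checked with the standard normal distribution function, which can be written in terms of the error function. The helper names `phi` and `area_between` are assumptions for this sketch; small differences in the fourth decimal place against printed tables are expected.

```python
from math import erf, sqrt

def phi(z):
    """Standard normal cumulative distribution function P(Z <= z)."""
    return 0.5 * (1 + erf(z / sqrt(2)))

def area_between(z1, z2):
    """Area under the standard normal curve between z1 and z2."""
    return phi(z2) - phi(z1)

areas = {
    "a": area_between(0, 1.2),
    "b": area_between(-0.68, 0),
    "c": area_between(-0.46, 2.21),
    "d": area_between(0.81, 1.94),
    "e": phi(-0.6),        # to the left of z = -0.6
    "f": 1 - phi(-1.28),   # to the right of z = -1.28
}

for case, value in areas.items():
    print(case, round(value, 4))
```

The printed areas should agree with the tabulated answers to within rounding of the table entries.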

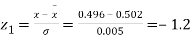

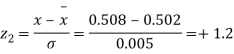

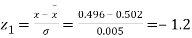

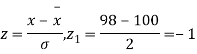

Example. The mean inside diameter of a sample of 200 washers produced by a machine is 0.502 cm and the standard deviation is 0.005 cm. The purpose for which these washers are intended allows a maximum tolerance in the diameter of 0.496 to 0.508 cm; otherwise, the washers are considered defective. Determine the percentage of defective washers produced by the machine, assuming the diameters are normally distributed.

Solution. $z_1 = \frac{0.496 - 0.502}{0.005} = -1.2$ and $z_2 = \frac{0.508 - 0.502}{0.005} = +1.2$

Area for non-defective washers = Area between z = -1.2 and z = +1.2

= 2 × (Area between z = 0 and z = 1.2)

= 2 × 0.3849 = 0.7698 = 76.98%

Percentage of defective washers = 100 - 76.98 = 23.02%

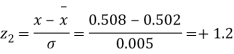

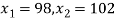

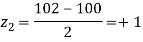

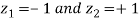

Example. A manufacturer knows from experience that the resistance of the resistors he produces is normally distributed with mean $\mu = 100$ ohms and standard deviation $\sigma = 2$ ohms. What percentage of resistors will have resistance between 98 ohms and 102 ohms?

Solution. $\mu = 100$ ohms, $\sigma = 2$ ohms

$z_1 = \frac{98 - 100}{2} = -1$, $z_2 = \frac{102 - 100}{2} = +1$

Area between z = -1 and z = +1

= (Area between z = -1 and z = 0) + (Area between z = 0 and z = +1)

= 2 × (Area between z = 0 and z = +1) = 2 × 0.3413 = 0.6826

Percentage of resistors having resistance between 98 ohms and 102 ohms = 68.26%

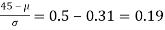

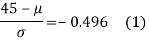

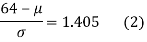

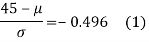

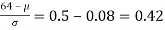

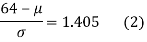

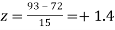

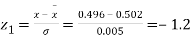

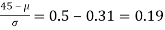

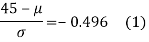

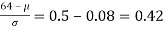

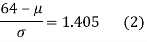

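Example. In a normal distribution, 31% of the items are under 45 and 8% are over 64. Find the mean and standard deviation of the distribution.

Solution. Let $\mu$ be the mean and $\sigma$ the S.D.

If x = 45, $z_1 = \frac{45 - \mu}{\sigma}$

If x = 64, $z_2 = \frac{64 - \mu}{\sigma}$

The area between 0 and $z_1$ = 0.5 - 0.31 = 0.19

[From the table, for the area 0.19, z = 0.496; since 45 lies below the mean, $z_1 = -0.496$]

$\frac{45 - \mu}{\sigma} = -0.496$ (1)

Area between z = 0 and z = $z_2$ = 0.5 - 0.08 = 0.42

(From the table, for area 0.42, z = 1.405)

$\frac{64 - \mu}{\sigma} = 1.405$ (2)

Solving (1) and (2) we get $\sigma \approx 10$ and $\mu \approx 50$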
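The two table look-ups and the pair of simultaneous equations can be reproduced with the inverse of the standard normal distribution function. The following sketch uses `statistics.NormalDist` from the Python standard library (available from Python 3.8); the variable names are illustrative.

```python
from statistics import NormalDist

standard = NormalDist()  # mean 0, standard deviation 1

z1 = standard.inv_cdf(0.31)      # 31% of items lie below x = 45
z2 = standard.inv_cdf(1 - 0.08)  # 8% of items lie above x = 64

# (45 - mu) / sigma = z1 and (64 - mu) / sigma = z2
sigma = (64 - 45) / (z2 - z1)
mu = 45 - z1 * sigma

print(round(z1, 3), round(z2, 3))
print(round(mu, 2), round(sigma, 2))
```

The computed z values are close to -0.496 and 1.405, and the solution is close to a mean of 50 and a standard deviation of 10.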

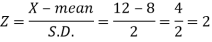

Example: The life of electric bulbs is normally distributed with a mean of 8 months and a standard deviation of 2 months.

If 5000 electric bulbs are issued, how many bulbs should be expected to need replacement by the end of 12 months?

[Given that P(z ≥ 2) = 0.0228]

Sol.

Here mean (μ) = 8 and standard deviation (σ) = 2

Number of bulbs = 5000

Life considered (X) = 12 months

We know that-

$z = \frac{X - \mu}{\sigma} = \frac{12 - 8}{2} = 2$

Area (z ≥ 2) = 0.0228

Number of electric bulbs whose life is more than 12 months (X > 12)

= 5000 × 0.0228 = 114

Therefore the number of bulbs expected to need replacement by the end of 12 months = 5000 – 114 = 4886 electric bulbs.

Example:

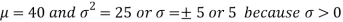

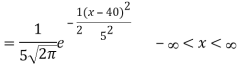

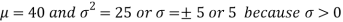

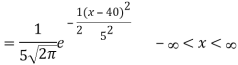

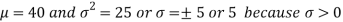

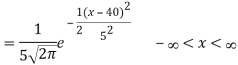

1. If X follows the given normal distribution, then find the probability density function of X.

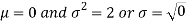

2. If X follows the given normal distribution, then find the probability density function of X.

Sol.

1. We are given X

Here

We know that-

Then the p.d.f. will be-

2. We are given X

Here

We know that-

Then the p.d.f. will be-

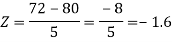

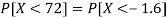

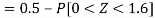

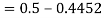

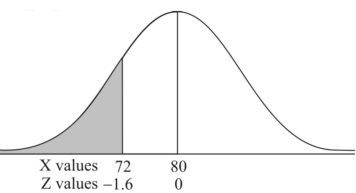

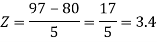

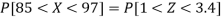

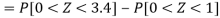

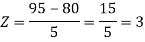

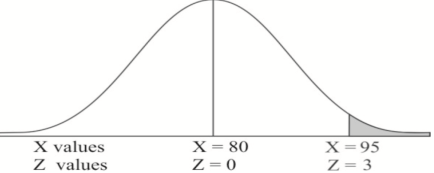

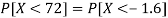

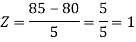

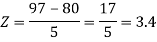

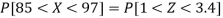

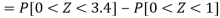

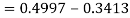

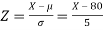

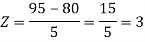

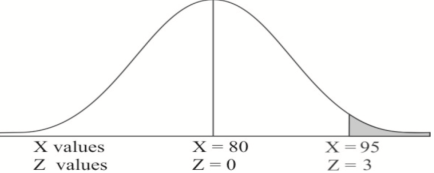

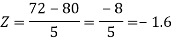

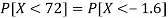

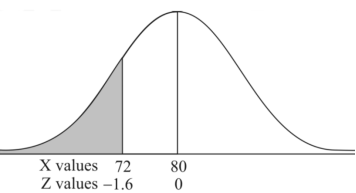

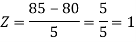

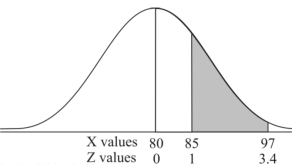

Example: If a random variable X is normally distributed with mean 80 and standard deviation 5, then find-

1. P[X > 95]

2. P[X < 72]

3. P [85 < X <97]

[Note- use the table- area under the normal curve]

Sol.

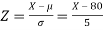

The standard normal variate is –

$Z = \frac{X - \mu}{\sigma} = \frac{X - 80}{5}$

Now-

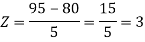

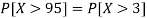

1. X = 95,

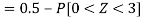

So that-

2. X = 72,

So that-

3. X = 85,

X = 97,

So that-

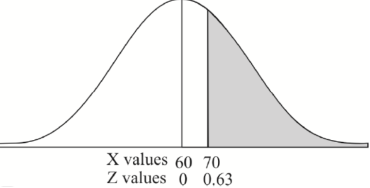

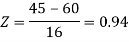

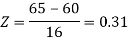

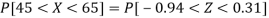

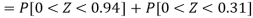

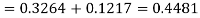

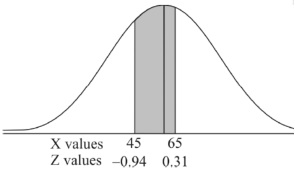

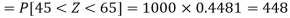

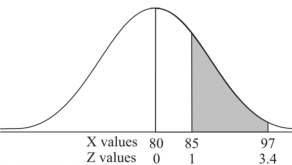

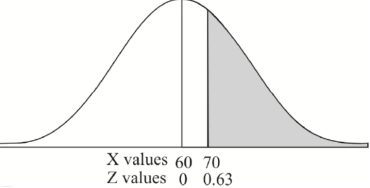

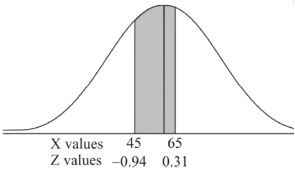

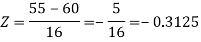

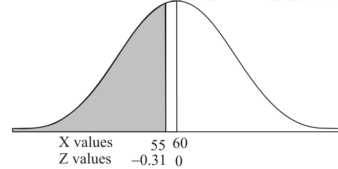

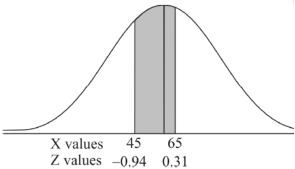

Example: In a company, the mean weight of 1000 employees is 60kg and the standard deviation is 16kg.

Find the number of employees having their weights-

1. Less than 55kg.

2. More than 70kg.

3. Between 45kg and 65kg.

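Sol. Let X be a normal variate denoting the weight of an employee.

Here mean = 60 kg and S.D. = 16 kg

$X \sim N(60, 16^2)$

Then we know that-

$Z = \frac{X - \mu}{\sigma}$

We get from the data,

$Z = \frac{X - 60}{16}$

Now-

1. For X = 55, $Z = \frac{55 - 60}{16} = -0.3125 \approx -0.31$

So that-

P [X < 55] = P [Z < -0.31] = P [Z > 0.31]

= 0.5 – P [0 < Z < 0.31]

= 0.5 – 0.1217 = 0.3783

Hence the number of employees weighing less than 55 kg = 1000 × 0.3783 ≈ 378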

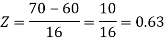

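2. For X = 70, $Z = \frac{70 - 60}{16} = 0.625 \approx 0.63$

So that-

P [X > 70] = P [Z > 0.63]

= 0.5 – P [0 < Z < 0.63] = 0.5 – 0.2357 = 0.2643

Hence the number of employees weighing more than 70 kg = 1000 × 0.2643 ≈ 264

3. For X = 45, $Z = \frac{45 - 60}{16} = -0.9375 \approx -0.94$

For X = 65, $Z = \frac{65 - 60}{16} = 0.3125 \approx 0.31$

P [45 < X < 65] = P [-0.94 < Z < 0.31] = P [0 < Z < 0.94] + P [0 < Z < 0.31] = 0.3264 + 0.1217 = 0.4481

Hence the number of employees having weights between 45kg and 65kg = 1000 × 0.4481 ≈ 448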
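The three head-counts can be recomputed without rounding z to two decimals. This sketch uses `statistics.NormalDist` (Python 3.8 or later); because the hand solution rounds z before using the table, the results below differ from it by one or two employees.

```python
from statistics import NormalDist

weights = NormalDist(mu=60, sigma=16)  # weight in kg
employees = 1000

below_55 = employees * weights.cdf(55)
above_70 = employees * (1 - weights.cdf(70))
between_45_and_65 = employees * (weights.cdf(65) - weights.cdf(45))

print(round(below_55), round(above_70), round(between_45_and_65))
```

The unrounded computation gives roughly 377, 266 and 448 employees, compared with 378, 264 and 448 from the table-based solution.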

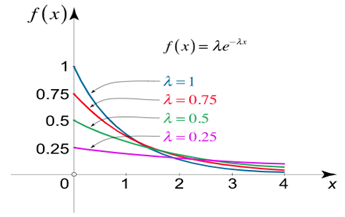

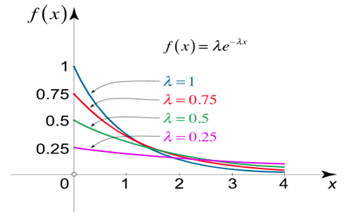

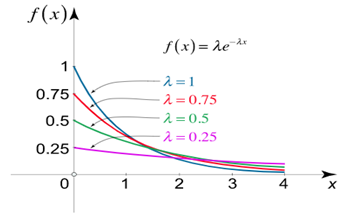

The exponential distribution is a continuous distribution that is commonly used to model the waiting time until some specific event happens. For example, the amount of time until a storm or other hazardous weather event occurs can be modelled by an exponential distribution.

The probability density function (PDF) of the one-parameter exponential distribution is defined as:

$f(x) = \lambda e^{-\lambda x}$, x ≥ 0

where the rate λ signifies the average number of events per unit time.

The mean value is $\mu = \frac{1}{\lambda}$. The median of the exponential distribution is $m = \frac{\ln 2}{\lambda}$, and the variance is $\frac{1}{\lambda^2}$.

Note-

If $\lambda < 1$, then mean < Variance

If $\lambda = 1$, then mean = Variance

If $\lambda > 1$, then mean > Variance

The memoryless property of the exponential distribution

It can be stated as below

If X has an exponential distribution, then for every constant $a \ge 0$, one has $P[X \le x + a \mid X \ge a] = P[X \le x]$ for all $x \ge 0$, i.e. the conditional probability of waiting up to the time 'x + a', given that the wait exceeds 'a', is the same as the probability of waiting up to the time 'x'.
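The memoryless property and the summary values of the exponential distribution can be checked numerically. The sketch below assumes hypothetical values `lam = 0.5`, `a = 2.0` and `x = 3.0`, chosen only for illustration.

```python
from math import exp, log

def exponential_cdf(x, lam):
    """P(X <= x) for an exponential distribution with rate lam."""
    return 1 - exp(-lam * x) if x >= 0 else 0.0

lam, a, x = 0.5, 2.0, 3.0  # hypothetical rate and times

# P[X <= x + a | X >= a] = P[a <= X <= x + a] / P[X >= a]
conditional = (exponential_cdf(x + a, lam) - exponential_cdf(a, lam)) / (1 - exponential_cdf(a, lam))
unconditional = exponential_cdf(x, lam)

mean = 1 / lam
median = log(2) / lam
variance = 1 / lam ** 2

print(conditional, unconditional)
print(mean, median, variance)
```

The conditional and unconditional probabilities coincide, and with λ = 0.5 (less than 1) the mean, 2, is smaller than the variance, 4, as stated in the note above.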

References:

1. Erwin Kreyszig, Advanced Engineering Mathematics, 9th Edition, John Wiley & Sons, 2006.

2. N.P. Bali and Manish Goyal, A Textbook of Engineering Mathematics, Laxmi Publications.

3. P. G. Hoel, S. C. Port, and C. J. Stone, Introduction to Probability Theory, Universal Book Stall.

4. S. Ross, A First Course in Probability, 6th Ed., Pearson Education India, 2002.

Unit - 4

Probability and Statistics

A random variable is a real-valued function whose domain is a set of possible outcomes of a random experiment and range is a sub-set of the set of real numbers and has the following properties:

i) Each particular value of the random variable can be assigned some probability

Ii) Uniting all the probabilities associated with all the different values of the random variable gives the value 1.

A random variable is denoted by X, Y, Z etc.

For example if a random experiment E consists of tossing a pair of dice, the sum X of the two numbers which turn up have the value 2,3,4,…12 depending on chance. Then X is a random variable

Discrete random variable-

A random variable is said to be discrete if it has either a finite or a countable number of values. Countable number of values means the values which can be arranged in a sequence.

Note- if a random variable takes a finite set of values it is called discrete and if if a random variable takes an infinite number of uncountable values it is called continuous variable.

Discrete probability distributions-

Let X be a discrete variate which is the outcome of some experiments. If the probability that X takes the values of x is Pi , then-

P(X = xi) = pi for I = 1, 2, . . .

Where-

- p(xi) ≥ 0 for all values of i

The set of values xi with their probabilities pi makes a discrete probability distribution of the discrete random variable X.

Probability distribution of a random variable X can be exhibited as follows-

X | x1 | x2 | x3 And so on |

P(x) | p(x1) | p(x2) | p(x3) |

Example: Find the probability distribution of the number of heads when three coins are tossed simultaneously.

Sol.

Let be the number of heads in the toss of three coins

The sample space will be-

{HHH, HHT, HTH, THH, HTT, THT, TTH, TTT}

Here variable X can take the values 0, 1, 2, 3 with the following probabilities-

P[X= 0] = P[TTT] = 1/8

P[X = 1] = P [HTT, THH, TTH] = 3/8

P[X = 2] = P[HHT, HTH, THH] = 3/8

P[X = 3] = P[HHH] = 1/8

Hence the probability distribution of X will be-

X | 0 | 1 | 2 | 3 |

P(x) | 1/8 | 3/8 | 3/8 | 1/8 |

Example: For the following probability distribution of a discrete random variable X,

X | 0 | 1 | 2 | 3 | 4 | 5 |

p(x) | 0 | c | c | 2c | 3c | c |

Find-

1. The value of c.

2. P[1<x<4]

Sol,

- We know that-

So that-

0 + c + c + 2c + 3c + c = 1

8c = 1

Then c = 1/8

Now, 2. P[1<x<4] = P[X = 2] + P[X = 3] = c + 2c = 3c = 3× 1/8 = 3/8

Continuous probability distribution-

When a random variable X takes every value in an interval, it gives rise to a continuous distribution of X.

The probability distribution of a continuous variate x is defined by a function f(x) such that the probability of the variate falling in the small interval from x − ½ dx to x + ½ dx is f(x) dx.

We express it as-

P(x − ½ dx ≤ X ≤ x + ½ dx) = f(x) dx

Here f(x) is called the probability density function.

Distribution function-

If $F(x) = P(X \le x) = \int_{-\infty}^{x} f(t)\,dt$, then F(x) is called the distribution function of X.

It has the following properties-

1. F(−∞) = 0

2. F(∞) = 1

3. $P(a \le X \le b) = \int_a^b f(x)\,dx = F(b) - F(a)$

Example: Show that the following function can be defined as a density function and then find P(1 ≤ x ≤ 2).

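$f(x) = e^{-x}$ for x ≥ 0

$f(x) = 0$ for x < 0

Sol.

Here f(x) ≥ 0 for all x, and

$\int_{-\infty}^{\infty} f(x)\,dx = \int_0^{\infty} e^{-x}\,dx = \left[-e^{-x}\right]_0^{\infty} = 1$

So that, the function can be defined as a density function.

Now,

$P(1 \le X \le 2) = \int_1^2 e^{-x}\,dx = e^{-1} - e^{-2} \approx 0.2325$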
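The two conditions for a density function, and the requested probability, can also be checked numerically. The sketch below uses a simple midpoint rule; the helper `integrate` and the choice of 50 as a stand-in for the infinite upper limit are assumptions made for illustration.

```python
import math


def f(x):
    """Density from the example: e^(-x) for x >= 0, and 0 otherwise."""
    return math.exp(-x) if x >= 0 else 0.0


def integrate(func, a, b, steps=100_000):
    """Midpoint-rule approximation of the integral of func over [a, b]."""
    h = (b - a) / steps
    return h * sum(func(a + (i + 0.5) * h) for i in range(steps))


# The tail beyond x = 50 is negligible, so [0, 50] stands in for [0, infinity).
total = integrate(f, 0.0, 50.0)
p_1_2 = integrate(f, 1.0, 2.0)
exact = math.exp(-1) - math.exp(-2)
print(round(total, 6), round(p_1_2, 6), round(exact, 6))
```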

Independent random variables-

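Two discrete random variables X and Y are said to be independent if and only if, for all values x and y-

$P(X = x, Y = y) = P(X = x)\,P(Y = y)$

Note- Two events A and B are independent if and only if $P(A \cap B) = P(A)\,P(B)$.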

Example: Two discrete random variables X and Y have-

P[X = 0, Y = 0] = 2/9, P[X = 0, Y = 1] = 1/9, P[X = 1, Y = 0] = 1/9

And

P[X = 1, Y = 1] = 5/9.

Check whether X and Y are independent or not.

Sol.

First, we write the given distribution In tabular form-

X/Y | 0 | 1 | P(x) |

0 | 2/9 | 1/9 | 3/9 |

1 | 1/9 | 5/9 | 6/9 |

P(y) | 3/9 | 6/9 | 1 |

Now-

P[X = 0] · P[Y = 0] = 3/9 × 3/9 = 1/9

But

P[X = 0, Y = 0] = 2/9

So that-

P[X = 0, Y = 0] ≠ P[X = 0] · P[Y = 0]

Hence X and Y are not independent.
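The tabular check above generalises to any finite joint distribution: compute both marginals and compare every cell with the product of its marginals. A minimal sketch follows, with the joint table from this example entered by hand; the function names are illustrative.

```python
from fractions import Fraction

# Joint probabilities P[X = x, Y = y] taken from the table in the example.
joint = {
    (0, 0): Fraction(2, 9), (0, 1): Fraction(1, 9),
    (1, 0): Fraction(1, 9), (1, 1): Fraction(5, 9),
}


def marginals(joint_pmf):
    """Return the marginal distributions of X and Y as two dictionaries."""
    px, py = {}, {}
    for (x, y), p in joint_pmf.items():
        px[x] = px.get(x, 0) + p
        py[y] = py.get(y, 0) + p
    return px, py


def is_independent(joint_pmf):
    """True only if every cell equals the product of its two marginals."""
    px, py = marginals(joint_pmf)
    return all(joint_pmf.get((x, y), 0) == px[x] * py[y] for x in px for y in py)


print(is_independent(joint))
```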

Continuous Random Variables:

A continuous random variable is a random variable that can take uncountably many values, typically every value in an interval. For example, a random variable measuring the time taken for something to be done is continuous, since there is an infinite number of possible times that can be taken.

1. A continuous random variable is described by a probability density function p(x) with these properties: p(x) ≥ 0, and the area between the x-axis and the curve is 1:

$\int_{-\infty}^{\infty} p(x)\,dx = 1$

2. The expected value E(x) of a discrete variable is defined as:

$E(x) = \sum_{i=1}^{n} x_i p_i$

3. The expected value E(x) of a continuous variable is defined as:

$E(x) = \int_{-\infty}^{\infty} x\,p(x)\,dx$

4. The variance of a random variable is defined as $\mathrm{Variance}(x) = E[(x - E(x))^2]$.

5. If two random variables x and y are independent, then E[xy] = E(x)E(y). The converse does not hold in general: E[xy] = E(x)E(y) only says that x and y are uncorrelated.

6. The standard deviation of a random variable is defined as $\sigma_x = \sqrt{\mathrm{Variance}(x)}$.

7. The standard error is used in place of the standard deviation when describing the spread of the sample mean of n independent observations:

$\sigma_{mean} = \sigma_x / \sqrt{n}$

8. If x is a normal random variable with parameters μ and σ² (spread = σ), we write in symbols: x ~ N(μ, σ²).

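9. The sample variance of x1, x2, ..., xn, with sample mean x̄, is given by-

$s_x^2 = \dfrac{(x_1 - \bar{x})^2 + \dots + (x_n - \bar{x})^2}{n - 1}$

10. If x1, x2, ..., xn are observations from a random sample, the sample standard deviation s is the square root of the sample variance:

$s_x = \sqrt{s_x^2}$

11. The sample covariance of the paired observations (x1, y1), ..., (xn, yn) is-

$s_{xy} = \dfrac{(x_1 - \bar{x})(y_1 - \bar{y}) + \dots + (x_n - \bar{x})(y_n - \bar{y})}{n - 1}$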

12. A random vector is a column vector of random variables.

v = (x1 ... xn)T

13. The expected value E(v) of a random vector is the vector of the expected values of its components.

If v = (x1 ... xn)T

E(v) = [E(x1) ... E(xn)]T

14. The covariance matrix Co-variance(v) of a random vector is the matrix of the variances and covariances of its components.

If v = (x1 ... xn)T, the ij-th component of Co-variance(v) is the covariance of xi and xj, written sij.

Properties

In the following properties, c is a constant; x and y are random variables.

- E(x + y) = E(x) + E(y).

- E(cx) = c E(x).

- Variance(x) = E(x²) − [E(x)]²

- If x and y are independent, then Variance(x + y) = Variance(x) + Variance(y).

- Variance(x + c) = Variance(x)

- Variance(cx) = c² Variance(x)

- Co-variance(x + c, y) = Co-variance(x, y)

- Co-variance(cx, y) = c Co-variance(x, y)

- Co-variance(x, y + c) = Co-variance(x, y)

- Co-variance(x, cy) = c Co-variance(x, y)

- If x1, x2, ..., xn are independent and N(μ, σ²), then E(x̄) = μ. We say that the sample mean x̄ is unbiased for μ.

- If x1, x2, ..., xn are independent and N(μ, σ²), then E(s²) = σ². We say that the sample variance s² is unbiased for σ².

In the following properties, w and v are random vectors; b is a constant vector; A is a constant matrix.

- E(v + w) = E(v) + E(w)

- E(b) = b

- E(Av) = A E(v)

- Co-variance(v + b) = Co-variance(v)

- Co-variance(Av) = A Co-variance(v) Aᵀ

The probability distribution of a discrete random variable is a list of probabilities associated with each of its possible values. It is also sometimes called the probability function or the probability mass function.

Suppose a random variable X may take k different values, with the probability that X = xi defined to be P(X = xi) = pi. The probabilities pi must satisfy the following:

1: 0 ≤ pi ≤ 1 for each i

2: p1 + p2 + ... + pk = 1.

Example

Suppose a variable X can take values 1,2,3, or 4. The probabilities associated with each outcome are described by the following table:

Outcome | 1 | 2 | 3 | 4 |

Probability | 0.1 | 0.3 | 0.4 | 0.2 |

The probability that X is equal to 2 or 3 is the sum of the two probabilities:

P(X = 2 or X = 3) = P(X = 2) + P(X = 3) = 0.3 + 0.4 = 0.7.

Similarly, the probability that X is greater than 1 is P(X > 1) = 1 − P(X = 1) = 1 − 0.1 = 0.9.

Example: A random variable x has the following probability distribution-

X | 0 | 1 | 2 | 3 | 4 | 5 |

p(x) | 0 | c | c | 2c | 3c | c |

Then find-

1. Value of c.

2. P[X≤3]

3. P[1 < X <4]

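Sol.

1. We know that for the given probability distribution-

Σ p(x) = 1

So that-

0 + c + c + 2c + 3c + c = 1, i.e. 8c = 1 and c = 1/8

2. P[X ≤ 3] = P[X = 0] + P[X = 1] + P[X = 2] + P[X = 3] = 0 + c + c + 2c = 4c = 1/2

3. P[1 < X < 4] = P[X = 2] + P[X = 3] = c + 2c = 3c = 3/8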


Probability Density:

A probability density function (PDF) is a mathematical expression that describes the probability distribution of a continuous random variable, as opposed to a discrete random variable. The difference is that a discrete random variable takes exact, separated values. For instance, a stock price is quoted to only two decimal places (for example 32.22), while a continuous variable can take any of an infinite number of values (for example 32.22564879…).

When the PDF is drawn as a graph, the area under the curve over an interval gives the probability that the variable falls in that interval, and the total area under the curve equals 1. More precisely, since the probability of a continuous random variable taking on any one exact value is zero, owing to the infinite set of possible values, the PDF is used to determine the probability of the random variable falling within a specific range of values.

Probability density function-

Suppose f(x) is a continuous function of x. Suppose the shaded region ABCD in the figure represents the area bounded by y = f(x), the x-axis and the ordinates at the points x and x + δx, where δx is the length of the interval (x, x + δx). If δx is very small, the curve AB is approximately a straight line, so the shaded region is approximately a rectangle of area AD × DC = f(x) δx. This area is the probability that X lies in the interval (x, x + δx)

= P[x ≤ X ≤ x + δx]

Hence,

$P[x \le X \le x + \delta x] = f(x)\,\delta x$

Properties of Probability density function-

1. $f(x) \ge 0$ for all x

2. $\int_{-\infty}^{\infty} f(x)\,dx = 1$

Example: If a continuous random variable X has the following probability density function:

Then find-

1. P[0.2 < X < 0.5]

Sol.

Here f(x) is the probability density function, then-

Example. The probability function of a variable X is

X | 0 | 1 | 2 | 3 | 4 | 5 | 6 |

P(X) | k | 3k | 5k | 7k | 9k | 11k | 13k |

(i) Find

(ii) What will be the minimum value of k so that

Solution. (i) If X is a random variable then

(ii)Thus minimum value of k=1/30.

Example. A random variate X has the following probability function

x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |

P (x) | 0 | k | 2k | 2k | 3k |  |  |  |

- Find the value of the k.

Solution. (i) If X is a random variable then

Example: Show that the following function can be defined as a density function and then find  .

.

Sol.

Here

So that, the function can be defined as a density function.

Now.

Example:

Let X be a random variable with PDF given by

a, Find the constant c.

b. Find EX and Var (X).

c. Find P(X  ).

).

Solution.

- To find c, we can use

Thus we must have  .

.

b. To find EX we can write

In fact, we could have guessed EX = 0 because the PDF is symmetric around x = 0. To find Var (X) we have

c. To find  we can write

we can write

Example:

Let X be a continuous random variable with PDF given by

If  , find the CDF of Y.

, find the CDF of Y.

Solution. First we note that  , we have

, we have

Thus,

Example:

Let X be a continuous random variable with PDF

Find  .

.

Solution. We have

Let a random variable X have a probability distribution which assumes the values $x_1, x_2, \dots, x_n$ with their associated probabilities $p_1, p_2, \dots, p_n$; then the mathematical expectation can be defined as-

$E(X) = \sum_{i=1}^{n} p_i x_i$

The expected value of a random variable X is written as E(X).

The expected value for a continuous random variable is

$E(X) = \int_{-\infty}^{\infty} x f(x)\,dx$

OR

(1) The mean value (μ) of the probability distribution of a variate X is commonly known as its expectation and is denoted by E(X). If f(x) is the probability density function of the variate X, then

$E(X) = \sum_i x_i f(x_i)$ (discrete distribution)

$E(X) = \int_{-\infty}^{\infty} x f(x)\,dx$ (continuous distribution)

In general, the expectation of any function $\varphi(x)$ is given by

$E[\varphi(x)] = \sum_i \varphi(x_i) f(x_i)$ (discrete distribution)

$E[\varphi(x)] = \int_{-\infty}^{\infty} \varphi(x) f(x)\,dx$ (continuous distribution)

(2) The variance of a distribution is given by

$\sigma^2 = \sum_i (x_i - \mu)^2 f(x_i)$ (discrete distribution)

$\sigma^2 = \int_{-\infty}^{\infty} (x - \mu)^2 f(x)\,dx$ (continuous distribution)

Where σ is the standard deviation of the distribution.

(3) The rth moment about the mean (denoted by $\mu_r$) is defined by

$\mu_r = \sum_i (x_i - \mu)^r f(x_i)$ (discrete function)

$\mu_r = \int_{-\infty}^{\infty} (x - \mu)^r f(x)\,dx$ (continuous function)

(4) Mean deviation from the mean is given by

$\sum_i |x_i - \mu|\, f(x_i)$ (discrete distribution)

$\int_{-\infty}^{\infty} |x - \mu|\, f(x)\,dx$ (continuous distribution)

Example. In a lottery, m tickets are drawn at a time out of tickets numbered from 1 to n. Find the expected value of the sum of the numbers on the tickets drawn.

Solution. Let $x_1, x_2, \dots, x_m$ be the variables representing the numbers on the first, second, …, mth ticket drawn. The probability of drawing any particular one of the n tickets being 1/n in each case, we have

$E(x_i) = \dfrac{1}{n}(1 + 2 + \dots + n) = \dfrac{n + 1}{2}$

Therefore the expected value of the sum of the numbers on the tickets drawn

$= E(x_1) + E(x_2) + \dots + E(x_m) = \dfrac{m(n + 1)}{2}$
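For small n, the closed form m(n + 1)/2 for the expected sum can be confirmed by brute force, averaging the ticket sum over every equally likely draw of m tickets. The sketch below assumes tickets are drawn without replacement; the function name `expected_ticket_sum` is made up for this illustration.

```python
from fractions import Fraction
from itertools import combinations


def expected_ticket_sum(n, m):
    """Exact expected sum when m tickets are drawn from tickets 1..n."""
    draws = list(combinations(range(1, n + 1), m))
    # Every draw is equally likely, so the expectation is a plain average.
    return Fraction(sum(sum(draw) for draw in draws), len(draws))


n, m = 10, 3
print(expected_ticket_sum(n, m), Fraction(m * (n + 1), 2))
```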

Example. X is a continuous random variable with probability density function given by

Find k and mean value of X.

Solution. Since the total probability is unity.

Mean of X =

Example. The frequency distribution of a measurable characteristic varying between 0 and 2 is as under

Calculate the standard deviation and also the mean deviation about the mean.

Solution. Total frequency N =

(about the origin)=

(about the origin)=

(about the origin)=

(about the origin)=

Hence,

i.e., standard deviation

Mean deviation about the mean

Example: If a random variable X has the following probability distribution in the tabular form then what will be the expected value of X.

X | 0 | 1 | 2 |

P(x) | 1/4 | 1/2 | 1/4 |

Sol.

We know that-

$E(X) = \sum_i x_i\,p(x_i)$

So that-

E(X) = 0 × 1/4 + 1 × 1/2 + 2 × 1/4 = 1

Example: Find the expectation of the number shown when an unbiased die is thrown.

Sol. Let X be a random variable that represents the number on a die when thrown.

X can take the values-

1, 2, 3, 4, 5, 6

With

P[X = 1] = P[X = 2] = P[X = 3] = P[X = 4] = P[X = 5] = P[X = 6] = 1/6

The distribution table will be-

X | 1 | 2 | 3 | 4 | 5 | 6 |

p(x) | 1/6 | 1/6 | 1/6 | 1/6 | 1/6 | 1/6 |

Hence the expectation of the number on the die thrown is-

$E(X) = \sum_i x_i\,p(x_i)$

So that-

E(X) = (1 + 2 + 3 + 4 + 5 + 6) × 1/6 = 21/6 = 7/2

The variance of a sum

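One of the applications of covariance is finding the variance of a sum of several random variables. In particular, if Z = X + Y, then

$\mathrm{Var}(Z) = \mathrm{Cov}(Z, Z) = \mathrm{Cov}(X + Y, X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y) + 2\,\mathrm{Cov}(X, Y)$

More generally, for $a, b \in \mathbb{R}$ we conclude

$\mathrm{Var}(aX + bY) = a^2\,\mathrm{Var}(X) + b^2\,\mathrm{Var}(Y) + 2ab\,\mathrm{Cov}(X, Y)$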

Variance

Consider two random variables X and Y with the following PMFs

$P_X(x) = 0.5$ for x = −100, $0.5$ for x = 100, and 0 otherwise (3.3)

$P_Y(y) = 1$ for y = 0, and 0 otherwise (3.4)

Note that EX = EY = 0. Although both random variables have the same mean value, their distributions are completely different. Y is always equal to its mean of 0, while X is either 100 or −100, quite far from its mean value. The variance is a measure of how spread out the distribution of a random variable is. Here the variance of Y is quite small since its distribution is concentrated at a single value, while the variance of X will be larger since its distribution is more spread out.

The variance of a random variable X with mean $EX = \mu_X$ is defined as

$\mathrm{Var}(X) = E[(X - \mu_X)^2]$

By definition, the variance of X is the average value of $(X - \mu_X)^2$. Since $(X - \mu_X)^2 \ge 0$, the variance is always larger than or equal to zero. A large value of the variance means that $(X - \mu_X)^2$ is often large, so X often takes values far from its mean. This means that the distribution is very spread out. On the other hand, a low variance means that the distribution is concentrated around its average.

Note that if we did not square the difference between X and its mean, the result would be zero. That is

$E[X - \mu_X] = EX - \mu_X = 0$

X is sometimes below its average and sometimes above its average. Thus $X - \mu_X$ is sometimes negative and sometimes positive, but on average it is zero.

To compute $\mathrm{Var}(X) = E[(X - \mu_X)^2]$, note that we need to find the expected value of $g(X) = (X - \mu_X)^2$, so we can use LOTUS (the law of the unconscious statistician). In particular, we can write

$\mathrm{Var}(X) = \sum_{x_k} (x_k - \mu_X)^2 P_X(x_k)$

For example, for X and Y defined in equations 3.3 and 3.4 we have

$\mathrm{Var}(X) = (-100 - 0)^2 (0.5) + (100 - 0)^2 (0.5) = 10{,}000$

$\mathrm{Var}(Y) = (0 - 0)^2 (1) = 0$

As we expect, X has a very large variance while Var(Y) = 0.

Note that Var(X) has a different unit than X. For example, if X is measured in metres then Var(X) is in metres². To solve this issue we define another measure, called the standard deviation and usually shown as $\sigma_X$, which is simply the square root of the variance.

The standard deviation of a random variable X is defined as

$SD(X) = \sigma_X = \sqrt{\mathrm{Var}(X)}$

The standard deviation of X has the same unit as X. For X and Y defined in equations 3.3 and 3.4 we have

$\sigma_X = \sqrt{10{,}000} = 100$, $\sigma_Y = \sqrt{0} = 0$

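Here is a useful formula for computing the variance.

The computational formula for the variance

$\mathrm{Var}(X) = E[X^2] - (EX)^2$ (3.5)

To prove it note that

$\mathrm{Var}(X) = E[(X - \mu_X)^2] = E[X^2 - 2\mu_X X + \mu_X^2] = E[X^2] - 2E[\mu_X X] + E[\mu_X^2]$

Note that for a given random variable X, $\mu_X$ is just a constant real number. Thus $E[\mu_X X] = \mu_X E[X] = \mu_X^2$ and $E[\mu_X^2] = \mu_X^2$, so we have

$\mathrm{Var}(X) = E[X^2] - 2\mu_X^2 + \mu_X^2 = E[X^2] - \mu_X^2$

Equation 3.5 is usually easier to work with compared to $\mathrm{Var}(X) = E[(X - \mu_X)^2]$. To use this equation we can find $E[X^2] = \sum_{x_k} x_k^2 P_X(x_k)$ using LOTUS, and then subtract $\mu_X^2$ to obtain the variance.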

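Example. I roll a fair die and let X be the resulting number. Find E(X), Var(X), and $\sigma_X$.

Solution. We have $R_X = \{1, 2, 3, 4, 5, 6\}$ (the range of X) and $P_X(k) = \frac{1}{6}$ for k = 1, 2, …, 6. Thus we have

$EX = \dfrac{1 + 2 + 3 + 4 + 5 + 6}{6} = \dfrac{7}{2}$

$E[X^2] = \dfrac{1 + 4 + 9 + 16 + 25 + 36}{6} = \dfrac{91}{6}$

Thus,

$\mathrm{Var}(X) = E[X^2] - (EX)^2 = \dfrac{91}{6} - \dfrac{49}{4} = \dfrac{35}{12} \approx 2.92$, $\sigma_X = \sqrt{35/12} \approx 1.71$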
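The computational formula Var(X) = E[X²] − (EX)² translates directly into code for any finite PMF. The following sketch applies it to the fair die of this example; the helper name `mean_and_variance` is an assumption made for illustration.

```python
import math
from fractions import Fraction


def mean_and_variance(pmf):
    """Return (E[X], Var(X)) for a finite PMF given as {value: probability}."""
    mean = sum(x * p for x, p in pmf.items())
    second_moment = sum(x * x * p for x, p in pmf.items())  # E[X^2] via LOTUS
    return mean, second_moment - mean ** 2


die = {k: Fraction(1, 6) for k in range(1, 7)}
mean, var = mean_and_variance(die)
sd = math.sqrt(var)
print(mean, var, round(sd, 4))
```

The same function can be reused for the distributions in equations 3.3 and 3.4 by passing their PMFs as dictionaries.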

Theorem

For a random variable X and real numbers a and b

$\mathrm{Var}(aX + b) = a^2\,\mathrm{Var}(X)$ (3.6)

Proof. If Y = aX + b, then EY = aEX + b, so

$\mathrm{Var}(Y) = E[(Y - EY)^2] = E[(aX + b - aEX - b)^2] = E[a^2 (X - \mu_X)^2] = a^2\,\mathrm{Var}(X)$

From equation 3.6, we conclude that, for standard deviation, $SD(aX + b) = |a|\,SD(X)$. We mentioned that variance is NOT a linear operation. But there is a very important case in which variance behaves like a linear operation, and that is when we look at the sum of independent random variables.

Theorem

If $X_1, X_2, \dots, X_n$ are independent random variables and $X = X_1 + X_2 + \dots + X_n$, then

$\mathrm{Var}(X) = \mathrm{Var}(X_1) + \mathrm{Var}(X_2) + \dots + \mathrm{Var}(X_n)$

Example. If $X \sim$ Binomial(n, p), find Var(X).

Solution. We know that we can write a Binomial(n, p) random variable as the sum of n independent Bernoulli(p) random variables, i.e.

$X = X_1 + X_2 + \dots + X_n$

If $X_i \sim$ Bernoulli(p) then its variance is

$\mathrm{Var}(X_i) = E[X_i^2] - (EX_i)^2 = 1^2 \cdot p + 0^2 \cdot (1 - p) - p^2 = p(1 - p)$

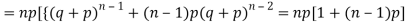

Problem. If $X \sim$ Poisson(λ), find Var(X).

Solution. We already know $EX = \lambda$, thus $\mathrm{Var}(X) = E[X^2] - \lambda^2$. You can find $E[X^2]$ directly using LOTUS; however, it is a little easier to find E[X(X − 1)] first. In particular, using LOTUS, we have

$E[X(X-1)] = \sum_{k=0}^{\infty} k(k-1)\, e^{-\lambda} \dfrac{\lambda^k}{k!} = \lambda^2 e^{-\lambda} \sum_{k=2}^{\infty} \dfrac{\lambda^{k-2}}{(k-2)!} = \lambda^2$

So we have $E[X^2] - EX = \lambda^2$. Thus, $E[X^2] = \lambda^2 + \lambda$ and we conclude

$\mathrm{Var}(X) = E[X^2] - (EX)^2 = \lambda^2 + \lambda - \lambda^2 = \lambda$

The rth moment of a variable x about the mean x is usually denoted by is given by

The rth moment of a variable x about any point a is defined by

The relation between moments about mean and moment about any point:

Where Xi =x - a and d =

In particular

Note. 1. The sum of the coefficients of the various terms on the right-hand side is zero.

2. The dimension of each term on the right‐hand side is the same as that of terms on the left.

MOMENT GENERATING FUNCTION

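The moment generating function of the variate x about x = a is defined as the expected value of $e^{t(x - a)}$ and is denoted by $M_a(t)$.

$M_a(t) = \sum p_i e^{t(x_i - a)} = \sum p_i \left[1 + t(x_i - a) + \dfrac{t^2}{2!}(x_i - a)^2 + \dots \right] = 1 + t\mu'_1 + \dfrac{t^2}{2!}\mu'_2 + \dots + \dfrac{t^r}{r!}\mu'_r + \dots$

Where $\mu'_r$ is the moment of order r about a.

Hence $\mu'_r$ = coefficient of $t^r/r!$, or $\mu'_r = \left[\dfrac{d^r}{dt^r} M_a(t)\right]_{t=0}$

Again $M_a(t) = \sum p_i e^{t(x_i - a)} = e^{-at} \sum p_i e^{t x_i} = e^{-at} M_0(t)$

Thus the moment generating function about the point a = $e^{-at}$ × moment generating function about the origin.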

A probability distribution is a mathematical function that describes all the possible values a random variable can take within a given range, together with their probabilities. This range is bounded by the minimum and maximum possible values, but where a possible value is likely to fall on the probability distribution depends on a number of factors. These factors include the distribution's mean, standard deviation, skewness, and kurtosis.

The binomial distribution was discovered by the Swiss mathematician James (Jacob) Bernoulli in the year 1700.

This distribution is concerned with trials of a repetitive nature in which only the occurrence or non-occurrence, success or failure, acceptance or rejection, yes or no of a particular event is of interest.

For convenience, we shall call the occurrence of the event ‘a success’ and its non-occurrence ‘a failure’.

Let there be n independent trials in an experiment. Let a random variable X denote the number of successes in these n trials. Let p be the probability of a success and q that of a failure in a single trial so that p + q = 1. Let the trials be independent and p be constant for every trial.

BINOMIAL DISTRIBUTION

To find the probability of the happening of an event once, twice, thrice, …, exactly r times in n trials.

Let the probability of the happening of an event A in one trial be p and its probability of not happening be 1 − p = q.

We assume that there are n trials and that the event A happens r times and does not happen n − r times.

This may be shown as follows

$\underbrace{A\,A \dots A}_{r \text{ times}}\ \underbrace{\bar{A}\,\bar{A} \dots \bar{A}}_{n - r \text{ times}}$ (1)

A indicates its happening, $\bar{A}$ its failure, and P(A) = p and P($\bar{A}$) = q.

We see that (1) has the probability

$\underbrace{p\,p \dots p}_{r \text{ times}}\ \underbrace{q\,q \dots q}_{n - r \text{ times}} = p^r q^{n-r}$ (2)

Clearly (1) is merely one order of arranging r A's and (n − r) $\bar{A}$'s.

The probability of the event happening r times = (number of different arrangements of r A's and (n − r) $\bar{A}$'s) × (probability of (1)).

The number of different arrangements of r A's and (n − r) $\bar{A}$'s is ${}^nC_r$.

Probability of the happening of the event r times: $P(r) = {}^nC_r\, p^r q^{n-r}$

If r = 0, probability of happening of the event 0 times $= {}^nC_0\, q^n = q^n$

If r = 1, probability of happening of the event 1 time $= {}^nC_1\, p\, q^{n-1}$

If r = 2, probability of happening of the event 2 times $= {}^nC_2\, p^2 q^{n-2}$

If r = 3, probability of happening of the event 3 times $= {}^nC_3\, p^3 q^{n-3}$, and so on.

These terms are the successive terms in the expansion of $(q + p)^n$.

Hence it is called Binomial Distribution.

Note - n and p occurring in the binomial distribution are called the parameters of the distribution.

Note - In a binomial distribution:

(i) n, the number of trials is finite.

(ii) each trial has only two possible outcomes usually called success and failure.

(iii) all the trials are independent.

(iv) p (and hence q) is constant for all the trials.
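The binomial formula P(r) = nCr p^r q^(n − r) is short enough to code directly. The sketch below is illustrative only: the function names are made up here, and the values n = 5, p = 0.9 are arbitrary sample parameters. It also checks two facts stated in these notes, that the terms sum to (q + p)^n = 1 and that the mean is np.

```python
from math import comb


def binomial_pmf(r, n, p):
    """P(r) = nCr * p^r * q^(n - r), with q = 1 - p."""
    q = 1 - p
    return comb(n, r) * p**r * q**(n - r)


def binomial_tail(r_min, n, p):
    """Probability of at least r_min successes in n independent trials."""
    return sum(binomial_pmf(r, n, p) for r in range(r_min, n + 1))


n, p = 5, 0.9
probabilities = [binomial_pmf(r, n, p) for r in range(n + 1)]
total = sum(probabilities)                              # should be 1
mean = sum(r * pr for r, pr in enumerate(probabilities))  # should be n * p
print(round(total, 10), round(mean, 10), round(binomial_tail(4, n, p), 5))
```

`binomial_tail` covers the "at least r times" questions that recur in the examples that follow.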

Applications of binomial distribution:

1. In problems concerning the number of defectives in a sample from a production line.

2. In estimation of reliability of systems.

3. Number of rounds fired from a gun hitting a target.

4. In Radar detection

Example. On average, one ship in every ten is wrecked. Find the probability that out of 5 ships expected to arrive, at least 4 will arrive safely.

Solution. Out of 10 ships, one ship is wrecked.

I.e. nine ships out of 10 ships are safe, P(safety) = p = 9/10, so q = 1/10 and n = 5.

P(at least 4 ships out of 5 are safe) = P(4 or 5) = P(4) + P(5)

$= {}^5C_4 (0.9)^4 (0.1) + {}^5C_5 (0.9)^5 = 0.32805 + 0.59049 = 0.91854$

Example. The overall percentage of failures in a certain examination is 20. If 6 candidates appear in the examination what is the probability that at least five pass the examination?

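Solution. Probability of failure = 20% = 1/5, so q = 1/5

Probability of passing, p = 1 − 1/5 = 4/5, and n = 6

Probability of at least 5 passes = P(5 or 6) = P(5) + P(6)

$= {}^6C_5 (0.8)^5 (0.2) + {}^6C_6 (0.8)^6 = 0.393216 + 0.262144 = 0.65536$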

Example. The probability that a man aged 60 will live to be 70 is 0.65. What is the probability that out of 10 men, now 60, at least seven will live to be 70?

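Solution. The probability that a man aged 60 will live to be 70 = p = 0.65, so q = 1 − p = 0.35

Number of men = n = 10

Probability that at least 7 men will live to 70 = P(7 or 8 or 9 or 10)

= P(7) + P(8) + P(9) + P(10)

$= {}^{10}C_7 (0.65)^7 (0.35)^3 + {}^{10}C_8 (0.65)^8 (0.35)^2 + {}^{10}C_9 (0.65)^9 (0.35) + (0.65)^{10} \approx 0.5138$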

Example. Assuming that 20% of the population of a city are literate, so that the chance of an individual being literate is 1/5, and assuming that a hundred investigators each take 10 individuals to see whether they are literate, how many investigators would you expect to report that 3 or fewer were literate?

Solution. Here p = 1/5, q = 4/5 and n = 10.

$P(r \le 3) = P(0) + P(1) + P(2) + P(3) = \left(\tfrac{4}{5}\right)^{10} + 10\left(\tfrac{1}{5}\right)\left(\tfrac{4}{5}\right)^{9} + 45\left(\tfrac{1}{5}\right)^{2}\left(\tfrac{4}{5}\right)^{8} + 120\left(\tfrac{1}{5}\right)^{3}\left(\tfrac{4}{5}\right)^{7} = 0.879126118$

Required number of investigators = 0.879126118 × 100 = 87.9126118

= 88 approximately
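The figure 0.879126118 is a cumulative binomial probability, and the expected count is that probability scaled by the number of investigators. A minimal sketch, with `binomial_cdf` as a hypothetical helper name:

```python
from math import comb


def binomial_cdf(k, n, p):
    """P(at most k successes) in n independent trials with success chance p."""
    return sum(comb(n, r) * p**r * (1 - p)**(n - r) for r in range(k + 1))


# 10 individuals per investigator, each literate with probability 0.2.
p_at_most_3 = binomial_cdf(3, 10, 0.2)
expected_investigators = 100 * p_at_most_3
print(round(p_at_most_3, 9), round(expected_investigators))
```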

Example: A die is thrown 8 times then find the probability that 3 will show-

1. Exactly 2 times

2. At least 7 times

3. At least once

Sol.

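As we know that-

$P(r) = {}^nC_r\, p^r q^{n-r}$

Then, with n = 8, p = 1/6 and q = 5/6-

1. Probability of getting 3 exactly 2 times will be-

$P(2) = {}^8C_2 \left(\tfrac{1}{6}\right)^2 \left(\tfrac{5}{6}\right)^6 = \dfrac{28 \times 15625}{1679616} \approx 0.2605$

2. Probability of getting 3 at least 7 times (that is, 7 or 8 times) will be-

$P(7) + P(8) = {}^8C_7 \left(\tfrac{1}{6}\right)^7 \left(\tfrac{5}{6}\right) + \left(\tfrac{1}{6}\right)^8 = \dfrac{41}{1679616} \approx 0.0000244$

3. Probability of getting 3 at least once (1 or 2 or 3 or 4 or 5 or 6 or 7 or 8 times)-

= P(1) + P(2) + P(3) + P(4) + P(5) + P(6) + P(7) + P(8)

$= 1 - P(0) = 1 - \left(\tfrac{5}{6}\right)^8 \approx 0.7674$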

Mean of the binomial distribution

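Successes r | Frequency f | rf |

0 | $q^n$ | 0 |

1 | $n q^{n-1} p$ | $n q^{n-1} p$ |

2 | $\frac{n(n-1)}{2} q^{n-2} p^2$ | $n(n-1) q^{n-2} p^2$ |

3 | $\frac{n(n-1)(n-2)}{6} q^{n-3} p^3$ | $\frac{n(n-1)(n-2)}{2} q^{n-3} p^3$ |

….. | …… | …. |

n | $p^n$ | $n p^n$ |

$\sum fr = np\left[q^{n-1} + (n-1) q^{n-2} p + \frac{(n-1)(n-2)}{2} q^{n-3} p^2 + \dots + p^{n-1}\right] = np (q + p)^{n-1} = np$

Since $\sum f = (q + p)^n = 1$, Mean $= \dfrac{\sum fr}{\sum f} = np$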

STANDARD DEVIATION OF BINOMIAL DISTRIBUTION

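Successes r | Frequency f | $r^2 f$ |

0 | $q^n$ | 0 |

1 | $n q^{n-1} p$ | $n q^{n-1} p$ |

2 | $\frac{n(n-1)}{2} q^{n-2} p^2$ | $2n(n-1) q^{n-2} p^2$ |

3 | $\frac{n(n-1)(n-2)}{6} q^{n-3} p^3$ | $\frac{3n(n-1)(n-2)}{2} q^{n-3} p^3$ |

….. | …… | …. |

n | $p^n$ | $n^2 p^n$ |

We know that $\sigma^2 = \dfrac{\sum r^2 f}{\sum f} - \left(\dfrac{\sum r f}{\sum f}\right)^2$ (1)

r is the deviation of items (successes) from 0.

Here $\sum f = 1$, $\sum rf = np$ and $\sum r^2 f = np\left[(q + p)^{n-1} + (n-1) p (q + p)^{n-2}\right] = np\left[1 + (n-1)p\right]$

Putting these values in (1) we have

$\sigma^2 = np\left[1 + (n-1)p\right] - n^2 p^2 = np - np^2 = np(1 - p) = npq$

Hence for the binomial distribution, Mean $= np$, variance $\sigma^2 = npq$ and standard deviation $\sigma = \sqrt{npq}$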

Example. A die is tossed thrice. Success is getting 1 or 6 on a TOSS. Find the mean and variance of the number of successes.

Solution.

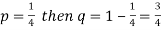

Example: Find the mean and variance of a binomial distribution with p = 1/4 and n = 10.

Sol.

Here p = 1/4, q = 1 − p = 3/4 and n = 10.

Mean = np = 10 × 1/4 = 2.5

Variance = npq = 10 × 1/4 × 3/4 = 1.875

RECURRENCE RELATION FOR THE BINOMIAL DISTRIBUTION

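By Binomial Distribution

$P(r) = {}^nC_r\, p^r q^{n-r}$ (1)

$P(r+1) = {}^nC_{r+1}\, p^{r+1} q^{n-r-1}$ (2)

On dividing (2) by (1), we get

$\dfrac{P(r+1)}{P(r)} = \dfrac{n-r}{r+1} \cdot \dfrac{p}{q}$, i.e. $P(r+1) = \dfrac{n-r}{r+1} \cdot \dfrac{p}{q}\, P(r)$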
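The recurrence relation P(r + 1) = ((n − r)/(r + 1)) (p/q) P(r) lets all the binomial probabilities be generated from P(0) = q^n without computing any factorials. The sketch below assumes 0 < p < 1 (so that q is non-zero) and compares the result with the direct formula; the function name is illustrative.

```python
from math import comb


def binomial_pmf_by_recurrence(n, p):
    """Return [P(0), ..., P(n)] built from P(0) = q^n and the recurrence."""
    q = 1 - p
    probs = [q ** n]
    for r in range(n):
        # P(r + 1) = (n - r) / (r + 1) * (p / q) * P(r)
        probs.append(probs[-1] * (n - r) / (r + 1) * p / q)
    return probs


n, p = 10, 0.25
recursive = binomial_pmf_by_recurrence(n, p)
direct = [comb(n, r) * p**r * (1 - p)**(n - r) for r in range(n + 1)]
print(max(abs(a - b) for a, b in zip(recursive, direct)))
```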

Key takeaways:

- Binomial distribution: $P(r) = {}^nC_r\, p^r q^{n-r}$

- n and p occurring in the binomial distribution are called the parameters of the distribution.

- Mean = np

- S.D. = σ = √(npq)

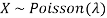

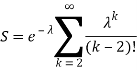

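Poisson distribution is a particular limiting form of the Binomial distribution when p (or q) is very small and n is large enough, such that np = m remains finite.

Poisson distribution is

$P(r) = \dfrac{e^{-m} m^r}{r!}$

Where m is the mean of the distribution.

Proof. In Binomial Distribution

$P(r) = {}^nC_r\, p^r q^{n-r} = \dfrac{n(n-1)\dots(n-r+1)}{r!}\left(\dfrac{m}{n}\right)^r\left(1 - \dfrac{m}{n}\right)^{n-r}$, since np = m

$= \dfrac{m^r}{r!}\left(1 - \dfrac{1}{n}\right)\left(1 - \dfrac{2}{n}\right)\dots\left(1 - \dfrac{r-1}{n}\right)\left(1 - \dfrac{m}{n}\right)^{n}\left(1 - \dfrac{m}{n}\right)^{-r}$

Taking limits when n tends to infinity

$P(r) = \dfrac{m^r}{r!}\, e^{-m}$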

MEAN OF POISSON DISTRIBUTION

Success r | Frequency f | f.r |

0 |  | 0 |

1 |  |  |

2 |  |  |

3 |  |  |

… | … | … |

r |  |  |

… | … | … |

STANDARD DEVIATION OF POISSON DISTRIBUTION

Successive r | Frequency f | Product rf | Product  |

0 |  | 0 | 0 |

1 |  |  |  |

2 |  |  |  |

3 |  |  |  |

……. | …….. | …….. | …….. |

r |  |  |  |

…….. | ……. | …….. | ……. |

Hence mean and variance of a Poisson distribution is equal to m. Similarly, we can obtain,

MEAN DEVIATION

Show that in a Poisson distribution with unit mean, and the mean deviation about the mean is 2/e times the standard deviation.

Solution.  But mean = 1 i.e. m =1 and S.D. =

But mean = 1 i.e. m =1 and S.D. =

r | P (r) | |r-1| | P(r)|r-1| |

0 |  | 1 |  |

1 |  | 0 | 0 |

2 |  | 1 |  |

3 |  | 2 |  |

4 |  | 3 |  |

….. | ….. | ….. | ….. |

r |  | r-1 |  |

Mean Deviation =

MOMENT GENERATING FUNCTION OF POISSON DISTRIBUTION

Solution.

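Let $M_x(t)$ be the moment generating function then

$M_x(t) = \sum_{r=0}^{\infty} e^{tr}\,\dfrac{e^{-m} m^r}{r!} = e^{-m} \sum_{r=0}^{\infty} \dfrac{(m e^t)^r}{r!} = e^{-m} e^{m e^t} = e^{m(e^t - 1)}$

CUMULANTS

The cumulant generating function K(t) is given by

$K(t) = \log_e M_x(t) = m(e^t - 1) = m\left(t + \dfrac{t^2}{2!} + \dfrac{t^3}{3!} + \dots\right)$

Now the rth cumulant $\kappa_r$ = coefficient of $\dfrac{t^r}{r!}$ in K(t) = m

i.e. $\kappa_r = m$, where r = 1, 2, 3, …

Mean = $\kappa_1 = m$ and variance = $\kappa_2 = m$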

RECURRENCE FORMULA FOR POISSON DISTRIBUTION

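SOLUTION. By Poisson distribution

$P(r) = \dfrac{e^{-m} m^r}{r!}$ (1)

$P(r+1) = \dfrac{e^{-m} m^{r+1}}{(r+1)!}$ (2)

On dividing (2) by (1) we get

$\dfrac{P(r+1)}{P(r)} = \dfrac{m}{r+1}$, i.e. $P(r+1) = \dfrac{m}{r+1}\, P(r)$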

Example. Assume that the probability of an individual coal miner being killed in a mine accident during a year is  . Use appropriate statistical distribution to calculate the probability that in a mine employing 200 miners, there will be at least one fatal accident in a year.


Solution.

Example. Suppose 3% of bolts made by a machine are defective, the defects occurring at random during production. If bolts are packaged 50 per box, find

(a) Exact probability and

(b) Poisson approximation to it, that a given box will contain 5 defectives.

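Solution. Here p = 3/100 = 0.03, q = 0.97 and n = 50.

(a) Hence the probability of 5 defective bolts in a lot of 50

$= {}^{50}C_5 (0.03)^5 (0.97)^{45} \approx 0.013074$

(b) To get the Poisson approximation, m = np = 50 × 0.03 = 1.5

Required Poisson approximation $= \dfrac{e^{-1.5} (1.5)^5}{5!} \approx 0.01412$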
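The exact binomial value and its Poisson approximation for this example (n = 50, p = 0.03, r = 5) can be compared side by side. This is a small sketch; the function names are assumptions introduced for illustration.

```python
from math import comb, exp, factorial


def binomial_pmf(r, n, p):
    """Exact probability of r successes in n trials."""
    return comb(n, r) * p**r * (1 - p)**(n - r)


def poisson_pmf(r, m):
    """Poisson probability e^(-m) * m^r / r! with mean m."""
    return exp(-m) * m**r / factorial(r)


n, p, r = 50, 0.03, 5
exact = binomial_pmf(r, n, p)
approx = poisson_pmf(r, n * p)  # m = np = 1.5
print(round(exact, 6), round(approx, 6))
```

The two values differ by roughly 0.001 here, which gives a feel for how close the approximation is when n is moderately large and p is small.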

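Example. In a certain factory producing cycle tyres, there is a small chance of 1 in 500 tyres being defective. The tyres are supplied in lots of 10. Using Poisson distribution, calculate the approximate number of lots containing no defective, one defective, and two defective tyres, respectively, in a consignment of 10,000 lots.

Solution. Here p = 1/500 and n = 10, so m = np = 10/500 = 0.02 and $e^{-0.02} \approx 0.9802$.

S.No. | Probability of defective | Number of lots containing defective |

1. | $P(0) = e^{-0.02} = 0.9802$ | 10,000 × 0.9802 = 9802 lots |

2. | $P(1) = 0.02\, e^{-0.02} = 0.019604$ | 10,000 × 0.019604 ≈ 196 lots |

3. | $P(2) = \dfrac{(0.02)^2}{2!}\, e^{-0.02} = 0.000196$ | 10,000 × 0.000196 ≈ 2 lots |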
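The expected number of lots in each category is the Poisson probability multiplied by the consignment size. A minimal sketch for this example, with m = np = 0.02; the variable names are illustrative.

```python
from math import exp, factorial


def poisson_pmf(r, m):
    """Poisson probability e^(-m) * m^r / r! with mean m."""
    return exp(-m) * m**r / factorial(r)


lots = 10_000
m = 10 * (1 / 500)  # tyres per lot times the chance of a defective tyre

# Expected number of lots with 0, 1 and 2 defective tyres, rounded to whole lots.
expected_lots = {r: round(lots * poisson_pmf(r, m)) for r in range(3)}
print(expected_lots)
```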

The normal distribution is a continuous distribution. It is derived as the limiting form of the Binomial distribution for large values of n when p and q are not very small.

The normal distribution is given by the equation

$f(x) = \dfrac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}$ (1)

Where μ = mean, σ = standard deviation, π = 3.14159…, e = 2.71828…

On substituting $z = \dfrac{x - \mu}{\sigma}$ in (1) we get $f(z) = \dfrac{1}{\sqrt{2\pi}}\, e^{-\frac{z^2}{2}}$ (2)

Here mean = 0, standard deviation = 1

(2) is known as the standard form of normal distribution.

Graph of a normal probability function-

The curve looks like a bell-shaped curve. The top of the bell is exactly above the mean.

If the value of standard deviation is large then the curve tends to flatten out and for small standard deviation, it has a sharp peak.

This is one of the most important probability distributions in statistical analysis.

MEAN FOR NORMAL DISTRIBUTION

Mean  [Putting

[Putting

STANDARD DEVIATION FOR NORMAL DISTRIBUTION

Put,

MEDIAN OF THE NORMAL DISTRIBUTION

If a is the median then it divides the total area into two equal halves so that

Where,

Suppose  mean,

mean,  then

then

Thus,

Similarly, when  mean, we have a =

mean, we have a =

Thus, median = mean = μ

MEAN DEVIATION ABOUT THE MEAN

Mean deviation

MODE OF THE NORMAL DISTRIBUTION

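We know that the mode is the value of the variate x for which f(x) is maximum. Thus by differential calculus f(x) is maximum if $f'(x) = 0$ and $f''(x) < 0$

Where,

$f(x) = \dfrac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}$

Thus, the mode is x = μ and the modal ordinate $= \dfrac{1}{\sigma\sqrt{2\pi}}$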

NORMAL CURVE

Let us show binomial distribution graphically. The probabilities of heads in 1 toss are

. It is shown in the given figure.

. It is shown in the given figure.

If the variates (head here) are treated as if they were continuous, the required probability curve will be normal as shown in the above figure by dotted lines.

Properties of the normal curve

- The curve is symmetrical about the y- axis. The mean, median and mode coincide at the origin.

- The curve is drawn, if the mean (origin of x) and standard deviation are given. The value of

can be calculated from the fact that the area of the curve must be equal to the total number of observations.

can be calculated from the fact that the area of the curve must be equal to the total number of observations. - y decreases rapidly as x increases numerically. The curve extends to infinity on either side of the origin.

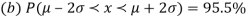

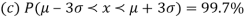

- (a)

AREA UNDER THE NORMAL CURVE

By taking $z = \dfrac{x - \mu}{\sigma}$ the standard normal curve is formed.

The total area under this curve is 1. The area under the curve is divided into two equal parts by z = 0. The left-hand side area and right-hand side area to z = 0 are 0.5. The area between the ordinate z = 0.
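Areas under the standard normal curve are usually read from a table, but they can also be computed from the error function, since the cumulative area to the left of z is Φ(z) = ½[1 + erf(z/√2)]. The sketch below uses only `math.erf` from the Python standard library; the function names are illustrative.

```python
import math


def standard_normal_cdf(z):
    """Area under the standard normal curve to the left of z."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def area_between(z1, z2):
    """Area under the standard normal curve between z1 and z2."""
    low, high = sorted((z1, z2))
    return standard_normal_cdf(high) - standard_normal_cdf(low)


print(round(area_between(0, 1.2), 4))
print(round(standard_normal_cdf(0), 4))
```

Values computed this way may differ from printed tables in the fourth decimal place because tables are rounded.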

Example. On a final examination in mathematics, the mean was 72, and the standard deviation was 15. Determine the standard scores of students receiving grades.

(a) 60

(b) 93

(c) 72

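Solution. The standard score is $z = \dfrac{x - \mu}{\sigma}$ with μ = 72 and σ = 15.

(a) z = (60 − 72)/15 = −0.8

(b) z = (93 − 72)/15 = 1.4

(c) z = (72 − 72)/15 = 0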
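The standard score is a one-line computation, z = (x − mean)/standard deviation. A small sketch for the three grades in this example; the function name `standard_score` is made up for illustration.

```python
def standard_score(x, mean, sd):
    """Number of standard deviations by which x lies above the mean."""
    return (x - mean) / sd


mean, sd = 72, 15
scores = {grade: standard_score(grade, mean, sd) for grade in (60, 93, 72)}
for grade, z in scores.items():
    print(grade, round(z, 2))
```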

Example. Find the area under the normal curve in each of the cases

(a) Between z = 0 and z = 1.2

(b) Between z = −0.68 and z = 0

(c) Between z = −0.46 and z = 2.21

(d) Between z = 0.81 and z = 1.94

(e) To the left of z = -0.6

(f) Right of z = -1.28

Solution. (a) Area between z = 0 and z = 1.2 = 0.3849

(b) Area between z = 0 and z = −0.68 = 0.2518

(c) Required area = (Area between z = 0 and z = 2.21) + (Area between z = 0 and z = −0.46)

= (Area between z = 0 and z = 2.21) + (Area between z = 0 and z = 0.46)

= 0.4865 + 0.1772 = 0.6637

(d) Required area = (Area between z = 0 and z = 1.94) − (Area between z = 0 and z = 0.81)

= 0.4738 − 0.2910 = 0.1828

(e) Required area = 0.5-(Area between z = 0 and z = 0.6)