Unit-2

Software Requirement Specifications (SRS)

Elicitation

Elicitation covers the different methods used to learn about the project domain and its requirements. Sources of domain information include customers, company manuals, existing applications of the same kind, related specifications, and other project stakeholders.

Requirements are elicited using interviews, brainstorming, role analysis, the Delphi technique, prototyping, and other methods. Elicitation does not produce systematic models of the requirements that have been understood. Instead, it broadens the analyst's knowledge of the domain and thus provides input to the next stage.

Analysis

Analysis of requirements is an important and necessary task after elicitation. We evaluate, refine, and scrutinize the gathered requirements to make them clear and unambiguous. This activity checks all the requirements and may include a graphical view of the entire system. Once the analysis is complete, the project should be substantially easier to understand.

Dialogue with the customer may also be used here to clear up points of misunderstanding and to establish which requirements are more important than others.

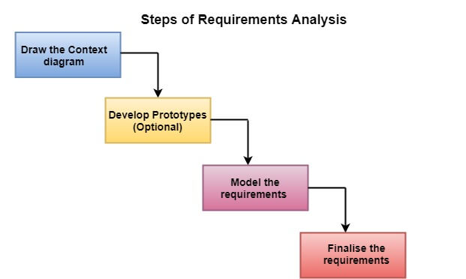

The different steps of requirements analysis are shown in Fig 1:

Fig 1: steps of requirements analysis

1. Draw the context diagram:

The context diagram is a basic model that defines the boundaries and interfaces of the proposed system with the external world. It identifies the entities outside the proposed system that communicate with it.

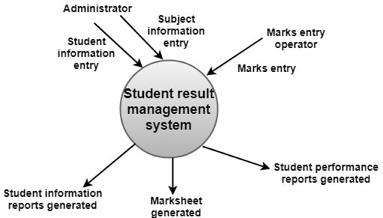

The context diagram of the student outcome management system is shown in Fig 2.

Fig 2: context diagram for student management

2. Development of a Prototype (optional):

Constructing a prototype, something that looks like, and ideally works like, part of the system the customer says they want, is one successful way to find out what the customer needs.

We use the customer's feedback to modify the prototype until the customer is satisfied. The prototype therefore allows the customer to visualize the proposed system and improves the understanding of requirements.

A prototype may help both parties to make a final decision if developers and users are not sure about some of the elements.

The prototype can be built quickly and at relatively low cost. As a result, it will have shortcomings and is not suitable for use in the final system. This task is optional.

3. Model the requirements:

Typically, this step consists of building graphical representations of the functions, data entities, external entities, and the relationships between them. The graphical view helps reveal incorrect, contradictory, incomplete, and superfluous requirements. Data flow diagrams, entity-relationship diagrams, data dictionaries, and state-transition diagrams are examples of such models.
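To make the idea of finding contradictory and incomplete requirements concrete, here is a minimal Python sketch that stores a state-transition model as (state, event, next state) rows and reports (a) state/event pairs with more than one next state and (b) pairs the requirements never mention. The library-book states and events are hypothetical, chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical state-transition model for a library book.
STATES = {"available", "issued", "reserved"}
EVENTS = {"issue", "return", "reserve"}
TRANSITIONS = [
    ("available", "issue", "issued"),
    ("available", "reserve", "reserved"),
    ("issued", "return", "available"),
    ("issued", "return", "reserved"),   # conflicts with the row above
    ("reserved", "issue", "issued"),
]

def contradictions(transitions):
    """(state, event) pairs that lead to more than one next state."""
    targets = defaultdict(set)
    for state, event, nxt in transitions:
        targets[(state, event)].add(nxt)
    return sorted(key for key, nexts in targets.items() if len(nexts) > 1)

def unspecified(states, events, transitions):
    """(state, event) pairs that the requirements say nothing about."""
    defined = {(s, e) for s, e, _ in transitions}
    return sorted((s, e) for s in states for e in events if (s, e) not in defined)

print(contradictions(TRANSITIONS))
print(unspecified(STATES, EVENTS, TRANSITIONS))
```

Each reported pair is a question to take back to the customer rather than a confirmed error: some events may legitimately be ignored in some states.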

4. Finalise the requirements:

After modelling the requirements, we have a clearer understanding of the system's behaviour. Inconsistencies and ambiguities have been identified and corrected, and the flow of information among the different modules has been analysed. Elicitation and analysis have provided greater insight into the system. The analysed requirements are now finalised, and the next step is to document them in a specified format.

Review and Management of User Needs

Requirements review is a process in which people from the client and contractor organizations work together to look for omissions. Different participants review different sections of the requirements document. The following tasks are carried out during the review of user needs:

● Plan a review

● Review meeting

● Follow-up actions

● Checking for redundancy

● Completeness

● Consistency

Management review:

Managing user needs is not an easy task; it depends on a variety of organizational issues. It can be approached in the following manner:

● Gathering information about the organization's past success, future plans, and objectives.

● Understand the existing problem: there must be a good understanding of the currently identified problems and of management's objectives.

● Know the user profile: knowing who will operate the system is critical. Most organizational charts do not provide details about the users, and each user group has a somewhat different profile in terms of technical capability and exposure.

Key takeaway:

- Elicitation does not result in systematic models of the understood specifications. Instead, it broadens the analyst's domain awareness and thus aids in providing feedback to the next level.

- Analysis of requirements is an important and necessary task after elicitation.

- Managing user needs is not an easy task; it is dependent on a variety of organizational issues.

- Review is a method in which people from the client and contractor organizations collaborate to search for omissions.

Feasibility Study

When a customer approaches a company to have an application built, they usually have a rough idea of what functions the software should perform and what functionality they expect from it.

The analysts use this information to conduct a thorough investigation into whether the desired system and its features are feasible to build.

The feasibility study is geared toward the organization's objectives. It examines whether the software product can realistically be built, considering implementation, the project's contribution to the organization, cost constraints, and alignment with the organization's values and objectives. It also examines technical aspects of the project and product, such as usability, maintainability, efficiency, and integration capability.

This process should result in a feasibility analysis report that includes sufficient comments and suggestions for management on whether or not the project should be pursued.

There are several types of feasibility, depending on the aspects they cover. Here are a few examples:

● Technical Feasibility

● Operational Feasibility

● Economic Feasibility

Key takeaway:

- This feasibility report is geared toward the organization's objectives.

- This process should result in a feasibility analysis report that includes sufficient comments and suggestions for management on whether or not the project should be pursued.

Information Modeling

Software engineers and website designers use an information model to create an efficient platform that is simple to use and navigate. If the engineer or designer fails to build an information model, or builds a poor one, users are likely to find that the website or software lacks intuitive functionality and has messy navigation, which frustrates them.

Most of these models are hierarchical, with the dominant domain at the top and the deeper domains at the bottom. To make software or a website functional, engineers must consider what the user wants from it. Software engineers and website designers can build a program or website from the ground up, with no plan or model.

This strategy, however, is more likely to result in mistakes, both during development and in the use of the final product. If no information model is used before developing a product, the website or software will be difficult to use: navigating between pages will be hard, users will be confused when trying to locate information, and resources or data will be poorly organized. For these reasons, it is recommended that an information model be created before construction.

The information model is based on a hierarchical schema, and its complexity is determined by the product and the number of features added by the programmer. The key domain is at the top of the model, and the other features and parts are mapped from it. Because different factors are involved in each, information models for websites and for programs are somewhat different.

Different pages and topics are mapped using a website information model. The home page will be at the top of the model, with other pages appearing at lower levels.
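The hierarchy described above can be sketched as a simple tree. The Python example below is a minimal illustration assuming a hypothetical college website; the `Page` class and the `depth` and `path_to` helpers are invented for this sketch. It computes how many levels deep the model goes and the navigation path from the home page to a given page.

```python
from dataclasses import dataclass, field

@dataclass
class Page:
    title: str
    children: list = field(default_factory=list)

    def add(self, *pages):
        """Attach child pages one level below this page; returns self for chaining."""
        self.children.extend(pages)
        return self

def depth(page):
    """Number of levels from this page down to its deepest descendant."""
    return 1 + max((depth(child) for child in page.children), default=0)

def path_to(page, title, trail=()):
    """Navigation path (list of titles) from the top page to `title`, or None."""
    trail = trail + (page.title,)
    if page.title == title:
        return list(trail)
    for child in page.children:
        found = path_to(child, title, trail)
        if found:
            return found
    return None

# Hypothetical website: Home at the top, other pages at lower levels.
home = Page("Home").add(
    Page("Admissions").add(Page("Fees"), Page("Apply Online")),
    Page("Departments").add(Page("Computer Science").add(Page("Faculty"))),
    Page("Contact"),
)

print(depth(home))               # 4
print(path_to(home, "Faculty"))  # ['Home', 'Departments', 'Computer Science', 'Faculty']
```

A deep tree means long navigation paths, so a designer might use `depth` as a rough signal that the hierarchy needs flattening.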

Key takeaway:

- The information model is based on a hierarchy schema, and the model's complexity is determined by the product and the number of features added by the programmer.

- Different pages and topics are mapped using a website information model.

Data Flow Diagrams

● Data flow diagrams (DFDs) are directed graphs in which the nodes specify processing activities and the arcs (lines with arrowheads) specify the data items transmitted between the processing nodes.

● Like flowcharts, data flow diagrams can be used at any desired level of abstraction.

● Unlike flowcharts, data flow diagrams do not indicate decision logic or conditions under which various processing nodes in the diagram might be activated.

● They may represent data flow:

● Between individual statements or blocks of statements in a routine,

● Between sequential routines,

● Between concurrent processes,

● Between geographically remote processing units.

● DFDs are developed in levels. The two basic levels are as follows:

● A Level 0 DFD, also known as the Fundamental System Model or Context Model, represents the entire system as a single bubble, with incoming arrows as the input data and outgoing arrows as the output data.

● A Level 1 DFD might contain 5 or 6 bubbles with interconnecting arrows. Each process shown here is a more detailed view of the function represented in the Level 0 DFD.

● Rectangular boxes are used to represent the external entities outside the system that act as sources of input or destinations of output.

● Circles are used to represent any kind of transformation process inside the system.

● Arrow headed lines are used to represent the data object and its direction of flow.

● The following are some guidelines for developing a DFD:

● Level 0 DFD should depict the entire software system as a single bubble.

● Primary input and output should be carefully noted.

● For the next level of DFD, the candidate processes, data objects, and data stores should be identified distinctly.

● All the arrows and bubbles should be labeled with meaningful names.

● Information flow continuity should be maintained at all the levels.

● One bubble should be refined at a time.

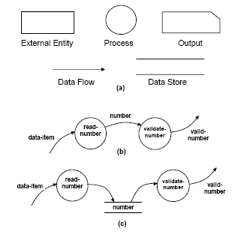

● The following primitive symbols are used for constructing DFDs:

● External Entity Symbol: A rectangle is used to show external entities. The external entities are those who communicate with the system by inputting or receiving data from the system. It can be human, hardware, another application software, etc.

● Process Symbol: A circle is used to represent a process or a function.

● Data Flow Symbol: An arrow or a directed arc is used to show the data flow. The data flow can be between two processes or between an external entity and a process.

● Data Store Symbol: Two parallel lines are used to represent data store. A data store symbol can represent a data structure or a physical file on disk. The connection between each data store and a process is by the use of data flow symbols.

● Output Symbol: The output symbol is used to represent a hard copy whenever one is produced.

Fig: Symbols used to design the DFDs
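The symbol rules and the information flow continuity guideline can be checked mechanically. The following Python sketch is a simplified model, not a standard tool: it assumes a DFD is just a set of named nodes (entity, process, or store) plus labelled flows, flags any flow that does not touch a process, and treats a refinement as balanced when it exchanges the same data with external entities as its parent. The result-processing system and all node names are hypothetical.

```python
from dataclasses import dataclass, field

# Node kinds mirror the DFD symbols: rectangle, circle, parallel lines.
ENTITY, PROCESS, STORE = "entity", "process", "store"

@dataclass
class DFD:
    kinds: dict = field(default_factory=dict)  # node name -> kind
    flows: list = field(default_factory=list)  # (source, target, data label)

    def add(self, kind, *names):
        for name in names:
            self.kinds[name] = kind

    def flow(self, src, dst, label):
        self.flows.append((src, dst, label))

    def invalid_flows(self):
        """Flows with no process at either end, e.g. entity -> store."""
        return [f for f in self.flows
                if PROCESS not in (self.kinds[f[0]], self.kinds[f[1]])]

    def external_io(self):
        """Data exchanged with external entities, as (inputs, outputs)."""
        inputs = {lbl for src, _, lbl in self.flows if self.kinds[src] == ENTITY}
        outputs = {lbl for _, dst, lbl in self.flows if self.kinds[dst] == ENTITY}
        return inputs, outputs

def balanced(parent, child):
    """Information flow continuity: a refinement keeps the same external I/O."""
    return parent.external_io() == child.external_io()

# Level 0: the whole system as a single bubble.
level0 = DFD()
level0.add(ENTITY, "Teacher", "Student")
level0.add(PROCESS, "Result System")
level0.flow("Teacher", "Result System", "marks")
level0.flow("Result System", "Student", "report card")

# Level 1: the same bubble refined into two processes and a data store.
level1 = DFD()
level1.add(ENTITY, "Teacher", "Student")
level1.add(PROCESS, "Validate Marks", "Compute Grades")
level1.add(STORE, "Results")
level1.flow("Teacher", "Validate Marks", "marks")
level1.flow("Validate Marks", "Compute Grades", "valid marks")
level1.flow("Compute Grades", "Results", "grades")
level1.flow("Compute Grades", "Student", "report card")

print(balanced(level0, level1))  # True
print(level1.invalid_flows())    # []
```

The balancing check deliberately ignores flows to data stores, since a Level 1 diagram may expose stores that were hidden inside the single Level 0 bubble.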

Key takeaway:

- It illustrates how data is transformed when moving from input to output and portrays the functions that transform data flow.

- It provides additional information for the study of the information domain and acts as a foundation for function modeling.

- Like flowcharts, data flow diagrams can be used at any desired level of abstraction.

Entity-Relationship Diagrams

An Entity-Relationship (ER) diagram depicts the relationships between data objects and is used in the data modeling process. The attributes of each object in the ER diagram can be defined using a data object description. It serves as the foundation for data design activities.

The entity-relationship data model is based on a perception of the real world as a collection of basic objects, called entities, and the relationships among them. An entity is an object or item in the real world that can be distinguished from other objects. Each entity has a set of characteristics, called attributes. A relationship is an association between two or more entities.

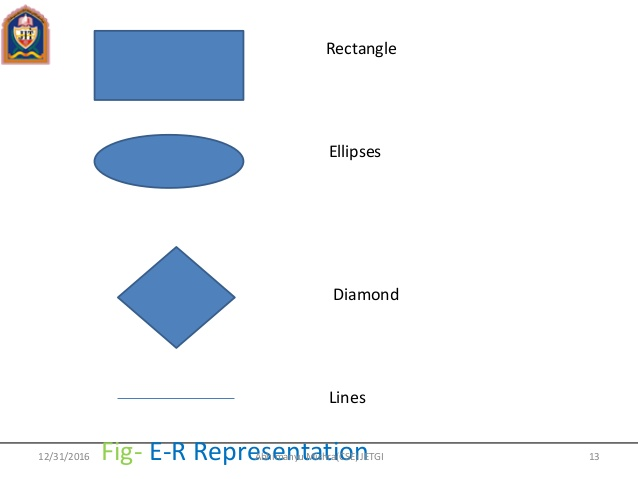

The major components of an ER diagram are as follows:

● Rectangle - which represents entity sets.

● Ellipse - which represents attributes.

● Diamond - which represents relationship sets.

● Lines - which link attributes to entity sets and to relationship sets.

Fig: ER representation
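A common next step is to map an ER diagram onto relational tables: each entity set becomes a table, its attributes become columns, and a many-to-many relationship set becomes a table of its own. The sketch below uses Python's built-in `sqlite3` module and assumes a hypothetical diagram with entity sets Student and Course linked by an Enrolls relationship that has a grade attribute; the table names, rows, and the `courses_of` helper are illustrative only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE student (              -- entity set (rectangle)
        student_id INTEGER PRIMARY KEY, -- key attribute (ellipse)
        name       TEXT NOT NULL
    );
    CREATE TABLE course (               -- entity set (rectangle)
        course_id INTEGER PRIMARY KEY,
        title     TEXT NOT NULL
    );
    CREATE TABLE enrolls (              -- relationship set (diamond)
        student_id INTEGER REFERENCES student(student_id),
        course_id  INTEGER REFERENCES course(course_id),
        grade      TEXT,                -- attribute of the relationship
        PRIMARY KEY (student_id, course_id)
    );
""")
conn.executemany("INSERT INTO student VALUES (?, ?)", [(1, "Asha"), (2, "Ravi")])
conn.executemany("INSERT INTO course VALUES (?, ?)",
                 [(10, "Software Engineering"), (20, "DBMS")])
conn.executemany("INSERT INTO enrolls VALUES (?, ?, ?)",
                 [(1, 10, "A"), (1, 20, "B"), (2, 10, "A")])

def courses_of(student_name):
    """Follow the Enrolls relationship from a student to course titles."""
    rows = conn.execute("""
        SELECT c.title
        FROM student s
        JOIN enrolls e ON e.student_id = s.student_id
        JOIN course  c ON c.course_id  = e.course_id
        WHERE s.name = ?
        ORDER BY c.title
    """, (student_name,)).fetchall()
    return [title for (title,) in rows]

print(courses_of("Asha"))  # ['DBMS', 'Software Engineering']
```

Keeping the relationship in its own table is what lets one student enroll in many courses and one course hold many students.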

Benefits of the E-R model:

● It is simple to understand and requires little training, so the database designer can use the model to communicate the design to the end user.

● It shows the relationships between entities clearly.

● It is possible to find a relation from one node to all other nodes in the ER model.

Drawbacks of the E-R model:

● Limited representation of constraints.

● Limited representation of relationships.

● No representation of data manipulation, and some information content is lost.

Key takeaway:

- It depicts the relationships between data objects and is used in the data modeling process.

- Data object description can be used to define the attributes of each object in the Entity-Relationship (ER) diagram.

- A relationship is an association between two or more entities.

Decision Tables

A decision table is a compact, visual representation of the actions to take in response to particular combinations of conditions. The knowledge in a decision table can also be expressed as a decision tree or as if-then-else and switch-case statements in a programming language.

A decision table, also known as a cause-effect table, is a good way to deal with different combinations of inputs and their corresponding outputs. The name comes from a related logical diagramming technique called cause-effect graphing, which is primarily used to generate the decision table.

Decision Table: Combinations

| Conditions | Step 1 | Step 2 | Step 3 | Step 4 |
|---|---|---|---|---|
| Condition 1 | Y | Y | N | N |
| Condition 2 | Y | N | Y | N |
| Condition 3 | Y | N | N | Y |
| Condition 4 | N | Y | Y | N |

Importance of decision tables:

● In terms of test design, decision tables are extremely useful.

● It helps testers determine the effects of combinations of inputs and other software states on software that must correctly apply business rules.

● It provides a systematic way to specify requirements when dealing with complex business rules.

● It is also used to model complex logic.

● A decision table is a useful tool in both testing and requirements management.

Advantages

● Using this technique, any complex business flow can be easily translated into test scenarios and test cases.

● It provides comprehensive coverage of test cases, reducing the amount of time spent rewriting test scenarios and test cases.

● These tables ensure that we take into account all possible combinations of condition values. This is referred to as the property of completeness.

● This approach is easy to understand, and anyone can use it to build test scenarios and test cases.

Key takeaway:

- A decision table is a quick visual representation of certain actions to take in response to certain conditions.

- A decision table, also known as a cause-effect table, is a good way to deal with different combinations of inputs and outputs.

SRS Document

A software requirements specification (SRS) is a document that describes what the software is supposed to do and how it is expected to perform. It also describes the functionality the product needs in order to fulfil the needs of all stakeholders (business, users).

A standard SRS includes:

● A purpose - explains why the document is important and what it is intended to achieve.

● An overall description - summarizes the product; it is essentially a rundown or overall analysis of the product.

● Scope of this document - outlines the overall work and primary goal of the document and the value the product can bring to the customer. It also summarizes the development cost and the time required.

The best SRS documents also describe how the software interacts with the hardware it is embedded in or with other software it is linked to.

Why we use an SRS document:

For your entire project, a software specification is the foundation. It sets the structure that will be followed by any team involved in development.

It provides multiple teams (development, quality assurance, operations, and maintenance) with essential information, which keeps everyone on the same page.

Using an SRS helps ensure that the requirements are fulfilled. It can also help you make decisions about your product's lifecycle, such as when to retire a feature.

Writing an SRS can also reduce time and costs for overall production. The use of an SRS especially benefits embedded development teams.

Key takeaway:

- SRS is a document that explains what the software is supposed to do and how it is expected to work.

- For your entire project, a software specification is the foundation.

- The use of the SRS helps ensure fulfilment of specifications.

IEEE Standards for SRS

Software engineering standards and related documents have been established by the IEEE, ISO, and other standards organizations. A software engineering organization may adopt standards voluntarily, or the customer or other stakeholders may require it to do so. The role of software quality assurance (SQA) is to ensure that the established standards are followed and that all work products comply with them.

Creating an SRS is a joint effort that begins when the software project is conceived and ends when the SRS text is finalized. Several organizations have proposed a basic structure and components for an SRS; the IEEE has laid out a basic framework for SRS documents.

An example of a simple SRS outline is as follows:

Introduction -

● Purpose

● Document conventions

● Intended audience

● References

● Additional information

Overall description -

● Product perspective

● Product functions

● User environment

● Design and implementation constraints

● Assumptions and dependencies

External interface requirement -

● User interface

● Hardware interface

● Software interface

● Communication protocols and interfaces

System features -

● Description and priority

● Action/result

● Functional requirements

Key takeaway:

- Software engineering standards and related documents have been established by the IEEE, ISO, and other standards organizations.

- The IEEE has laid out a basic framework for SRS documents.

- Creating an SRS is a joint effort that begins when the software project is conceived and ends when the SRS text is finalized.

Verification and Validation

Software testing is one element of a broader topic known as verification and validation (V&V). Verification is the set of tasks that ensures that the software correctly implements a specific function. Validation is the set of tasks that ensures that the software that has been built is traceable to the customer's requirements.

Here's how Boehm describes it:

Verification: “Are we building the product right?”

Validation: “Are we building the right product?”

Many software quality assurance practices are included in the concept of V&V.

Verification and validation encompass a broad range of SQA activities, including technical reviews, quality and configuration audits, performance monitoring, simulation, feasibility studies, documentation reviews, database reviews, algorithm analysis, development testing, usability testing, qualification testing, acceptance testing, and installation testing. Although testing plays an important role in V&V, many other activities are also necessary.

Testing is the last line of defense for assessing quality and, more practically, for uncovering errors. However, testing should not be viewed as a safety net. As the saying goes, “You can't test in quality. If it's not there before you begin testing, it won't be there when you're finished testing.”

Quality is built into software throughout the software engineering process. Proper application of methods and tools, effective technical reviews, and solid management and measurement all lead to quality that is then confirmed during testing.

“The fundamental motivation of program testing is to affirm software quality with methods that can be applied to both large-scale and small-scale systems,” Miller says, relating software testing to quality assurance.
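Validation relies on being able to trace the software back to the customer's requirements. One simple way to support this, sketched below in Python, is a traceability check that lists requirements no test covers and tests that point at requirements missing from the SRS. It assumes requirements and test cases carry identifiers such as `REQ-1` and `TC-01`; these identifiers and their contents are hypothetical.

```python
# Hypothetical requirement IDs (as they might appear in an SRS)
# and the test cases that claim to exercise them.
REQUIREMENTS = {
    "REQ-1": "Student can log in",
    "REQ-2": "Teacher can enter marks",
    "REQ-3": "System generates report card",
}
TESTS = {
    "TC-01": ["REQ-1"],
    "TC-02": ["REQ-2"],
    "TC-03": ["REQ-9"],  # points at a requirement that is not in the SRS
}

def untested(requirements, tests):
    """Requirements that no test case traces back to."""
    covered = {req for reqs in tests.values() for req in reqs}
    return sorted(set(requirements) - covered)

def orphan_tests(requirements, tests):
    """Test cases that reference requirements missing from the SRS."""
    return sorted(tc for tc, reqs in tests.items()
                  if any(req not in requirements for req in reqs))

print(untested(REQUIREMENTS, TESTS))      # ['REQ-3']
print(orphan_tests(REQUIREMENTS, TESTS))  # ['TC-03']
```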

Key takeaway:

- Software testing is a subset of a larger subject known as verification and validation.

- Many software quality assurance practices are included in the concept of V&V.

- Verification is a collection of tasks that ensures that software performs a particular function correctly.

- Validation is a collection of tasks that ensures that the software that has been developed can be traced back to the customer's specifications.

SQA Plans

The Software Quality Assurance Plan provides a road map for implementing software quality assurance. Developed by the SQA group (or by the software team if no SQA group exists), the plan serves as a template for the SQA activities carried out for each software project.

The IEEE [IEE93] has released a standard for SQA plans. The standard suggests a framework that includes:

(1) the purpose and scope of the plan,

(2) a description of all software engineering work products (e.g., models, documents, source code) that fall within SQA's purview,

(3) all applicable standards and practices applied during the software process,

(4) SQA actions and tasks (including reviews and audits) and their placement throughout the software process,

(5) the tools and methods that support SQA actions and tasks,

(6) software configuration management procedures,

(7) methods for assembling, safeguarding, and maintaining all SQA-related records, and

(8) organizational roles and responsibilities relative to product quality.

Key takeaway:

- The Software Quality Assurance Plan lays out a strategy for implementing software quality assurance.

- The IEEE has released a standard for SQA plans.

Software Quality Frameworks

The Software Quality Framework is a model for software quality that connects and integrates various perspectives on the subject. It combines the customer's perspective on software quality with the developer's perspective, and it treats software as a commodity. The software product view enumerates the features of a product that affect its ability to meet specified and implied requirements.

The framework explains the various definitions related to quality in a consistent manner, using a qualitative scale that can be easily understood and interpreted. In it, the user's experience is the most influential consideration for developers, and the framework links the developer and the user to arrive at a shared understanding of quality.

Developer view: Validation and verification are two separate approaches that are used together to ensure that a software product meets the specifications and serves its intended function.

Validation ensures that the product design meets its intended use, while verification ensures that the product has been built correctly and is free of errors. Developers are primarily concerned with the design and engineering processes involved in building applications. Quality can be assessed by the degree of conformance to predetermined requirements and standards, and deviations from these standards can result in poor quality and reliability.

Many factors affect the developer's perception of software quality. This model focuses on three main points.

The code: It is judged on its accuracy and dependability.

The data: It is measured by the integrity of the application.

Maintainability: It has a variety of measures, the simplest of which is the mean time to change (MTTC).
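Mean time to change is the average elapsed time per change request. The Python sketch below assumes a hypothetical list of hours recorded for completed changes; what counts as the start and end of a change is an assumption each team must define.

```python
from statistics import mean

# Hypothetical hours per completed change request, covering analysis of the
# request, design, implementation, testing, and distribution of the change.
change_hours = [6.0, 10.5, 3.5, 8.0]

def mean_time_to_change(hours):
    """MTTC: total time spent on changes divided by the number of changes."""
    if not hours:
        raise ValueError("need at least one completed change")
    return mean(hours)

print(mean_time_to_change(change_hours))  # 7.0
```

A falling MTTC over successive releases suggests the software is becoming easier to maintain.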

User view: When customers buy software, they expect it to be of high quality. When end users create their own applications, the quality of the result varies. Programming that produces a program solely for personal rather than public use is known as end-user programming. The key difference is that such software is not designed to be used by a large number of users with different needs.

Product view: The product view defines quality in terms of the product's intrinsic characteristics. Product quality is described as the collection of characteristics and features of a product that contribute to its ability to meet specified requirements. The value-based view of product quality considers quality to be determined by the price a customer is willing to pay for the product.

According to consumers, a high-quality product is one that meets their requirements while also satisfying their desires and preferences. End-user satisfaction reflects how easily users can learn, use, and upgrade the product, and whether they rate it favorably when asked to do so.

Key takeaway:

- The Software Quality Framework is a model for software quality that connects and integrates various perspectives on the subject.

- This system combines the customer's perspective on software quality with the developer's perspective, and it treats software as a commodity.

- The software product view enumerates the features of a product that affect its ability to meet specified and implied requirements.

ISO 9000 Models

Another popular set of standards related to software quality is the International Organization for Standardization’s (ISO) 9000. ISO is an international standards organization that sets standards for everything from nuts and bolts to, in the case of ISO 9000, quality management and quality assurance.

You may have heard of ISO 9000 or noticed it in advertisements for a company’s products or services. Often it’s a little logo or note next to the company name. It’s a big deal to become ISO 9000 certified, and a company that has achieved it wants to make that fact known to its customers – especially if its competitors aren’t certified.

ISO 9000 is a family of standards on quality management and quality assurance that defines a basic set of good practices to help a company consistently deliver products (or services) that meet its customers’ quality requirements. It doesn’t matter whether the company is run out of a garage or is a multibillion-dollar corporation, or whether it makes software, makes fishing lures, or delivers pizza. Good management practices apply equally to all of them.

ISO 9000 works well for two reasons:

It targets the development process, not the product. It’s concerned with the way an organization goes about its work, not with the results of the work. It doesn’t attempt to define the quality level of the widgets coming off the assembly line or of the software on the CD. As you’ve learned, quality is relative and subjective. A company’s goal should be to create the level of quality that its customers want, and having a quality development process will help achieve that.

ISO 9000 dictates only what the process requirements are, not how they are to be achieved. For example, the standard says that a software team should plan and perform product design reviews (see chapters 4 and 6), but it doesn’t say how that requirement should be accomplished. Performing design reviews is a good exercise that a responsible design team should do (which is why it’s in ISO 9000), but exactly how the design review is to be organized and run is up to the individual team creating the product. ISO 9000 tells you what to do but not how to do it.

The sections of the ISO 9000 standard that deal with software are ISO 9001 and ISO 9000-3. ISO 9001 is for businesses that design, develop, produce, install, and service products. ISO 9000-3 is for businesses that develop, supply, install, and maintain computer software.

The following list will give you an idea of what type of criteria the standard contains. It will also, hopefully, make you feel a little better, knowing that there’s an international initiative to help companies have a better software development process and to help them build better quality software.

Some of the requirements in ISO 9000-3 include:

● Develop detailed quality plans and procedures to control configuration management, product verification and validation (testing), nonconformance (bugs), and corrective action (fixes).

● Prepare and receive approval for a software development plan that includes a definition of the project, a list of the project’s objectives, a project schedule, a project specification, a description of how the project is organized, a discussion of risks and assumptions, and strategies for controlling it.

● Communicate the specification in terms that make it easy for the customer to understand and to validate during testing.

● Plan, develop, document, and perform software design review procedures.

● Develop procedures that control software design changes made over the product’s life cycle.

● Develop and document software test plans.

● Develop methods to test whether the software meets the customer’s requirements.

● Perform software validation and acceptance tests.

● Maintain records of the test results.

● Control how software bugs are investigated and resolved.

● Prove that the product is ready before it’s released.

● Develop procedures to control the software’s release process.

● Identify and define what quality information should be collected.

● Use statistical techniques to analyze the software development process.

● Use statistical techniques to evaluate product quality.

These requirements should all sound pretty fundamental and common sense to you by now. You may even be wondering how a software company could even create software without having these processes in place. It’s amazing that it’s even possible, but it does explain why much of the software on the market is so full of bugs. Hopefully, over time, competition and customer demand will compel more companies in the software industry to adopt ISO 9000 as the means by which they do business.

Key takeaway:

● ISO 9000 is a family of standards on quality management and quality assurance that defines a basic set of good practices.

● ISO 9000 dictates only what the process requirements are, not how they are to be achieved.

● The ISO 9000 standards that deal with software are ISO 9001 and ISO 9000-3.

SEI Capability Maturity Model (CMM)

The SEI approach provides a measure of the global effectiveness of a company’s software engineering practices and establishes five process maturity levels, defined as follows:

Level 1: Initial.

The software process is characterized as ad hoc and occasionally even chaotic. Few processes are defined, and success depends on individual effort.

Level 2: Repeatable.

Basic project management processes are established to track cost, schedule, and functionality. The necessary process discipline is in place to repeat earlier successes on projects with similar applications.

Level 3: Defined.

The software process for both management and engineering activities is documented, standardized, and integrated into an organization-wide software process. All projects use a documented and approved version of the organization’s process for developing and supporting software. This level includes all characteristics defined for level 2.

Level 4: Managed.

Detailed measures of the software process and product quality are collected. Both the software process and products are quantitatively understood and controlled using detailed measures. This level includes all characteristics defined for level 3.

Level 5: Optimizing.

Continuous process improvement is enabled by quantitative feedback from the process and from testing innovative ideas and technologies. This level includes all characteristics defined for level 4.

The five levels defined by the SEI were derived as a consequence of evaluating responses to the SEI assessment questionnaire that is based on the CMM. The results of the questionnaire are distilled to a single numerical grade that provides an indication of an organization’s process maturity.

The SEI has associated key process areas (KPAs) with each of the maturity levels. The KPAs describe those software engineering functions (e.g., software project planning, requirements management) that must be present to satisfy good practice at a particular level. Each KPA is described by identifying the following characteristics:

- Goals: The overall objectives that the KPA must achieve.

- Commitments: Requirements (imposed on the organization) that must be met to achieve the goals or provide proof of intent to comply with the goals.

- Abilities: Those things that must be in place (organizationally and technically) to enable the organization to meet the commitments.

- Activities: The specific tasks required to achieve the KPA function.

- Methods for monitoring implementation: The manner in which the activities are monitored as they are put into place.

- Methods for verifying implementation: The manner in which proper practice for the KPA can be verified.

Eighteen KPAs (each described using these characteristics) are defined across the maturity model and mapped into different levels of process maturity.

The following KPAs should be achieved at each process maturity level:

i. Process maturity level 2

- Software configuration management

- Software quality assurance

- Software subcontract management

- Software project tracking and oversight

- Software project planning

- Requirements management

ii. Process maturity level 3

- Peer reviews

- Intergroup coordination

- Software product engineering

- Integrated software management

- Training program

- Organization process definition

- Organization process focus

iii. Process maturity level 4

- Software quality management

- Quantitative process management

iv. Process maturity level 5

- Process change management

- Technology change management

- Defect prevention
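As a simplified illustration only (an actual SEI assessment is based on the CMM questionnaire, not on a lookup like this), the Python sketch below treats an organization as being at the highest level for which it satisfies every KPA of that level and of all lower levels. The KPA names follow the list above; the `maturity_level` function and its input set are hypothetical.

```python
# KPAs per maturity level, as listed above (level 1 has none).
KPAS = {
    2: {"Software configuration management", "Software quality assurance",
        "Software subcontract management", "Software project tracking and oversight",
        "Software project planning", "Requirements management"},
    3: {"Peer reviews", "Intergroup coordination", "Software product engineering",
        "Integrated software management", "Training program",
        "Organization process definition", "Organization process focus"},
    4: {"Software quality management", "Quantitative process management"},
    5: {"Process change management", "Technology change management",
        "Defect prevention"},
}

def maturity_level(satisfied):
    """Highest level whose KPAs, and those of every lower level, are all satisfied."""
    level = 1
    for lvl in sorted(KPAS):
        if KPAS[lvl] <= satisfied:   # subset test: all KPAs of this level met
            level = lvl
        else:
            break                    # a gap at this level caps the rating
    return level

print(sum(len(kpas) for kpas in KPAS.values()))  # 18
print(maturity_level(KPAS[2] | KPAS[4]))         # 2
```

The early `break` reflects the staged nature of the model: satisfying level 4 KPAs does not count while level 3 KPAs are missing.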

Each of the KPAs is defined by a set of key practices that contribute to satisfying its goals. The key practices are policies, procedures, and activities that must occur before a key process area has been fully instituted. The SEI defines key indicators as “those key practices or components of key practices that offer the greatest insight into whether the goals of key process areas have been achieved”.

Key takeaway:

- The five levels defined by the SEI were derived as a consequence of evaluating responses to the SEI assessment questionnaire that is based on the CMM.

- The SEI has associated key process areas (KPAs) with each of the maturity levels.

- The KPAs describe those software engineering functions that must be present to satisfy good practice at a particular level.
