Unit III

Bivariate Distributions

A bivariate distribution, put simply, gives the probability that a particular event occurs when two random variables are involved in the scenario. For example, take two bowls, each filled with two different kinds of candies, and draw one candy from each bowl. The two draws give two independent random variables, one for each candy. Because one candy is pulled from each bowl at the same time, the probability of ending up with a specific pair of candy types is described by a bivariate distribution.

Bivariate discrete random variables-

Let X and Y be two discrete random variables defined on the sample space S of a random experiment. Then the function (X, Y) defined on the same sample space is called a two-dimensional discrete random variable. In other words, (X, Y) is a two-dimensional discrete random variable if the possible values of (X, Y) are finite or countably infinite. Here, each value of (X, Y) is represented as a point (x, y) in the xy-plane.

Joint probability mass function-

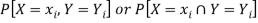

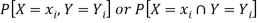

Let (X, Y) be a two-dimensional discrete random variable. With each possible outcome $(x_i, y_j)$ we associate a number $p(x_i, y_j)$ representing

$$p(x_i, y_j) = P(X = x_i,\ Y = y_j)$$

and satisfying the following conditions:

$$p(x_i, y_j) \ge 0 \ \text{ for all } i, j, \qquad \sum_i \sum_j p(x_i, y_j) = 1.$$

The function p defined for all $(x_i, y_j)$ is called the joint probability mass function of X and Y.
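The two conditions on a joint probability mass function can be checked mechanically. The sketch below is only an illustration: the table `joint_pmf` and the helper names are hypothetical and do not come from the notes. It stores a small joint pmf as a dictionary, checks non-negativity and total mass, and sums out one variable to obtain a marginal pmf.

```python
from fractions import Fraction

# Hypothetical joint pmf of (X, Y): keys are (x, y), values are P(X = x, Y = y).
joint_pmf = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4),
    (1, 0): Fraction(3, 8), (1, 1): Fraction(1, 4),
}

def is_valid_joint_pmf(pmf):
    """Check that every probability is non-negative and that they sum to 1."""
    return all(p >= 0 for p in pmf.values()) and sum(pmf.values()) == 1

def marginal(pmf, axis):
    """Marginal pmf of X (axis=0) or Y (axis=1): sum out the other variable."""
    out = {}
    for point, p in pmf.items():
        out[point[axis]] = out.get(point[axis], 0) + p
    return out

print(is_valid_joint_pmf(joint_pmf))
print(marginal(joint_pmf, 0))  # marginal pmf of X
print(marginal(joint_pmf, 1))  # marginal pmf of Y
```

Exact fractions are used so that the "sums to 1" check is not affected by floating-point rounding.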

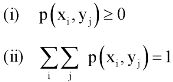

Conditional probability mass function-

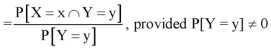

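Let (X, Y) be a discrete two-dimensional random variable. Then the conditional probability mass function of X, given Y = y, is defined as

$$p_{X|Y}(x|y) = \frac{P(X = x,\ Y = y)}{P(Y = y)}, \qquad \text{provided } P(Y = y) \neq 0,$$

because, for any two events A and B with $P(B) \neq 0$,

$$P(A|B) = \frac{P(A \cap B)}{P(B)}.$$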
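As a small numerical illustration of the conditional probability mass function, the sketch below divides the joint probabilities by the probability of the conditioning value Y = y. The joint table and the function name `conditional_pmf_x_given_y` are hypothetical and chosen only for this example.

```python
from fractions import Fraction

# Hypothetical joint pmf of (X, Y): values are P(X = x, Y = y).
joint_pmf = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4),
    (1, 0): Fraction(3, 8), (1, 1): Fraction(1, 4),
}

def conditional_pmf_x_given_y(pmf, y):
    """Return {x: P(X = x | Y = y)} = P(X = x, Y = y) / P(Y = y)."""
    p_y = sum(p for (_, yy), p in pmf.items() if yy == y)
    if p_y == 0:
        raise ValueError("conditioning event has probability zero")
    return {x: p / p_y for (x, yy), p in pmf.items() if yy == y}

cond = conditional_pmf_x_given_y(joint_pmf, 0)
print(cond)  # conditional pmf of X given Y = 0
```

The result is itself a valid pmf: its values are non-negative and add up to 1.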


Properties:


Property-1

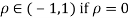

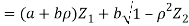

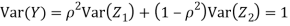

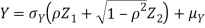

Two random variables X and Y are said to be bivariate normal, or jointly normal, if $aX + bY$ has a normal distribution for all $a, b \in \mathbb{R}$.

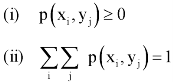

Properties 2:

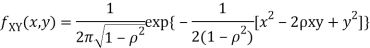

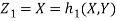

Two random variables X and Y are said to have the standard bivariate normal distribution with correlation coefficient $\rho$ if their joint PDF is given by

$$f_{XY}(x, y) = \frac{1}{2\pi\sqrt{1-\rho^2}} \exp\left\{-\frac{1}{2(1-\rho^2)}\left[x^2 - 2\rho x y + y^2\right]\right\}$$

where $\rho \in (-1, 1)$. If $\rho = 0$, then we just say X and Y have the standard bivariate normal distribution.

Properties 3:

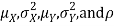

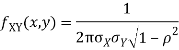

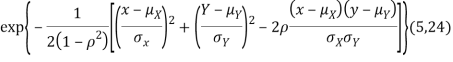

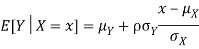

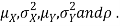

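Two random variables X and Y are said to have a bivariate normal distribution with parameters $\mu_X, \sigma_X^2, \mu_Y, \sigma_Y^2$ and $\rho$ if their joint PDF is given by

$$f_{XY}(x, y) = \frac{1}{2\pi\sigma_X\sigma_Y\sqrt{1-\rho^2}} \exp\left\{-\frac{1}{2(1-\rho^2)}\left[\left(\frac{x-\mu_X}{\sigma_X}\right)^2 + \left(\frac{y-\mu_Y}{\sigma_Y}\right)^2 - 2\rho\,\frac{(x-\mu_X)(y-\mu_Y)}{\sigma_X\sigma_Y}\right]\right\}$$

where $\mu_X, \mu_Y \in \mathbb{R}$, $\sigma_X, \sigma_Y > 0$ and $\rho \in (-1, 1)$ are all constants.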
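The density can be evaluated directly. The sketch below assumes the usual form of the bivariate normal PDF with means `mu_x`, `mu_y`, standard deviations `sigma_x`, `sigma_y` and correlation `rho`; the function names are illustrative. It also checks one known special case: when `rho` is 0 the joint density factors into the product of the two one-dimensional normal densities.

```python
import math

def bivariate_normal_pdf(x, y, mu_x, sigma_x, mu_y, sigma_y, rho):
    """Joint PDF of a bivariate normal; needs sigma_x, sigma_y > 0 and -1 < rho < 1."""
    zx = (x - mu_x) / sigma_x
    zy = (y - mu_y) / sigma_y
    quad = (zx * zx - 2.0 * rho * zx * zy + zy * zy) / (1.0 - rho * rho)
    norm = 2.0 * math.pi * sigma_x * sigma_y * math.sqrt(1.0 - rho * rho)
    return math.exp(-0.5 * quad) / norm

def normal_pdf(x, mu, sigma):
    """PDF of a one-dimensional normal distribution N(mu, sigma^2)."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

# With rho = 0 the joint density equals the product of the two marginals.
joint = bivariate_normal_pdf(0.5, -1.0, 1.0, 1.0, 0.0, 2.0, 0.0)
product = normal_pdf(0.5, 1.0, 1.0) * normal_pdf(-1.0, 0.0, 2.0)
print(math.isclose(joint, product))
```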

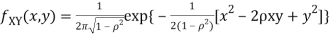

Properties 4:

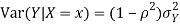

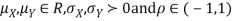

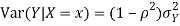

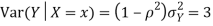

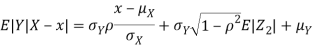

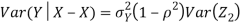

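Suppose X and Y are jointly normal random variables with parameters $\mu_X, \sigma_X^2, \mu_Y, \sigma_Y^2$ and $\rho$. Then given X = x, Y is normally distributed with

$$E[Y|X=x] = \mu_Y + \rho\,\sigma_Y\,\frac{x-\mu_X}{\sigma_X}, \qquad \mathrm{Var}(Y|X=x) = (1-\rho^2)\,\sigma_Y^2.$$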
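The conditional parameters are easy to compute once the five parameters are known. The sketch below assumes the standard result for jointly normal variables, namely conditional mean $\mu_Y + \rho\sigma_Y (x-\mu_X)/\sigma_X$ and conditional variance $(1-\rho^2)\sigma_Y^2$. The function name and the numbers in the usage line are hypothetical.

```python
def conditional_normal_params(x, mu_x, sigma_x, mu_y, sigma_y, rho):
    """Mean and variance of Y given X = x for jointly normal X and Y."""
    cond_mean = mu_y + rho * sigma_y * (x - mu_x) / sigma_x
    cond_var = (1.0 - rho * rho) * sigma_y ** 2
    return cond_mean, cond_var

# Hypothetical parameters: standard normal marginals with correlation 0.8.
mean, var = conditional_normal_params(1.0, 0.0, 1.0, 0.0, 1.0, 0.8)
print(mean, var)
```

Note that the conditional variance does not depend on the observed value x, only on the correlation and on the variance of Y.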

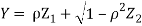

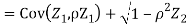

Example. Let    Where  |

b. Show that X and Y are bivariate normal.

c. Find the joint PDF of X and Y.

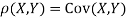

d. Find  (X,Y)


Solution.

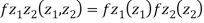

First note that since  are normal and independent they are jointly normal with the joint PDF


Which is the linear combination of

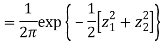

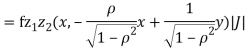

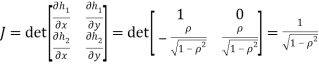

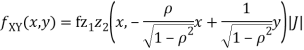

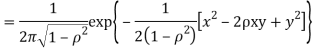

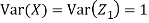

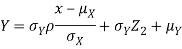

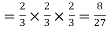

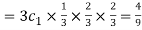

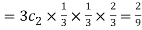

We have   Where,  Thus we conclude that    b. To find    Therefore,

|

Example:

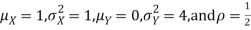

Let X and Y be jointly normal random variables with parameters

- Find P (2X+ Y≤3)

- Find

- Find

Solution.

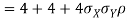

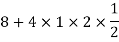

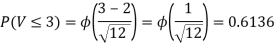

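a. Since X and Y are jointly normal, the random variable V = 2X + Y is normal. Computing its mean and variance from the given parameters gives V ~ N(2, 12). Therefore,

$$P(2X + Y \le 3) = P(V \le 3) = \Phi\left(\frac{3-2}{\sqrt{12}}\right) = \Phi\left(\frac{1}{\sqrt{12}}\right) \approx 0.6136.$$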
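The probability P(V ≤ 3) for V ~ N(2, 12) can be checked numerically. The sketch below is one possible check using only the standard library: it writes the normal CDF in terms of the error function `math.erf`. The helper name `normal_cdf` is an assumption made for this illustration.

```python
import math

def normal_cdf(x, mean, variance):
    """CDF of N(mean, variance), expressed through the error function."""
    return 0.5 * (1.0 + math.erf((x - mean) / math.sqrt(2.0 * variance)))

p = normal_cdf(3.0, 2.0, 12.0)  # P(V <= 3) for V ~ N(2, 12)
print(round(p, 4))
```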

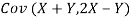

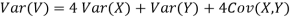

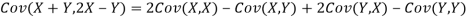

b. Note that $\mathrm{Cov}(X, Y) = \sigma_X \sigma_Y\, \rho(X, Y) = 1$. We have

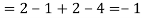

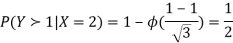

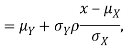

c. Using properties we conclude that given X =2, Y is normally distributed with

Example:

Let X and Y be jointly normal random variables with parameters  Find the conditional distribution of Y given X =x.

Find the conditional distribution of Y given X =x.

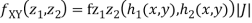

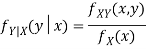

Sol. One way to solve this problem is by using the joint PDF formula since X

Sol. One way to solve this problem is by using the joint PDF formula since X N

N  we can use

we can use

Thus given X=x, we have

And,

Since  are independent, knowing

are independent, knowing  does not provide any information on

does not provide any information on  . We have shown that given X=x,Y is a linear function of

. We have shown that given X=x,Y is a linear function of  , thus it is normal. Ln particular

, thus it is normal. Ln particular

We conclude that given X=x,Y is normally distributed with mean  and variance

and variance

Key takeaways-

Given random variables X and Y that are defined on a probability space, the joint probability distribution for X and Y is a probability distribution that gives the probability that each of X and Y falls in any particular range or discrete set of values specified for that variable. In the case of only two random variables, this is called a bivariate distribution, but the concept generalizes to any number of random variables, giving a multivariate distribution.

The joint probability distribution can be expressed either in terms of a joint cumulative distribution function or in terms of a joint probability density function (in the case of continuous variables) or joint probability mass function (in the case of discrete variables). These in turn can be used to find two other types of distributions: the marginal distribution giving the probabilities for any one of the variables with no reference to any specific ranges of values for the other variables, and the conditional probability distribution giving the probabilities for any subset of the variables conditional on particular values of the remaining variables.

Example. A die is tossed thrice. A success is getting 1 or 6 on a toss. Find the mean and variance of the number of successes.

Solution

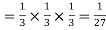

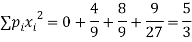

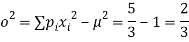

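Probability of success $p = \frac{2}{6} = \frac{1}{3}$, probability of failure $q = 1 - \frac{1}{3} = \frac{2}{3}$

Probability of no success = probability of all three failures $= \left(\frac{2}{3}\right)^3 = \frac{8}{27}$

Probability of one success and two failures $= {}^3C_1 \cdot \frac{1}{3}\left(\frac{2}{3}\right)^2 = \frac{4}{9}$

Probability of two successes and one failure $= {}^3C_2 \left(\frac{1}{3}\right)^2 \cdot \frac{2}{3} = \frac{2}{9}$

Probability of three successes $= \left(\frac{1}{3}\right)^3 = \frac{1}{27}$

| x | 0 | 1 | 2 | 3 |
| --- | --- | --- | --- | --- |
| P(x) | 8/27 | 4/9 | 2/9 | 1/27 |

Mean $\mu = \sum x\,P(x) = 0 + \frac{4}{9} + \frac{4}{9} + \frac{1}{9} = 1$

Variance, $\sigma^2 = \sum x^2 P(x) - \mu^2 = 0 + \frac{4}{9} + \frac{8}{9} + \frac{1}{3} - 1 = \frac{2}{3}$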
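The die example can be verified with exact arithmetic. The sketch below treats the number of successes in three tosses as a binomial count with success probability 2/6 (the die shows 1 or 6) and computes the distribution, mean and variance from first principles. The variable names are illustrative.

```python
from fractions import Fraction
from math import comb

n = 3                    # number of tosses
p = Fraction(2, 6)       # success: the die shows 1 or 6
q = 1 - p                # failure

# P(k successes) = C(n, k) * p^k * q^(n - k)
pmf = {k: comb(n, k) * p**k * q**(n - k) for k in range(n + 1)}

mean = sum(k * pk for k, pk in pmf.items())
variance = sum(k * k * pk for k, pk in pmf.items()) - mean**2

print(pmf)
print(mean, variance)  # 1 2/3
```

The results agree with the binomial shortcuts mean = np and variance = npq.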

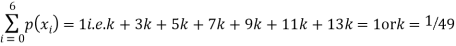

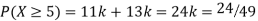

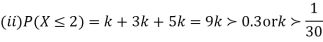

Example. The probability density function of a variate X is

| X | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| P(X) | k | 3k | 5k | 7k | 9k | 11k | 13k |

Solution. (I) If X is a random variable then

Thus minimum value of k = 1/30

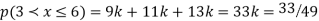

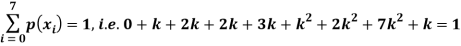

Example. Random variable X has the following probability function

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| P(x) | 0 | k | 2k | 2k | 3k |  |  |  |

(i) Find the value of the k

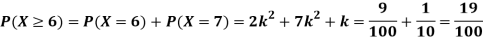

(ii) Evaluate P (X < 6), P (X≥6)

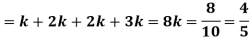

(iii)

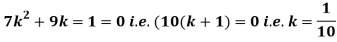

Solution. (i) if X is a random variable then

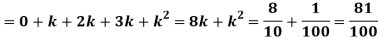

(ii) P(X < 6) = P(X = 0) + P(X = 1) + P(X = 2) + P(X = 3) + P(X = 4) + P(X = 5)

(iv)

In probability theory, given two jointly distributed random variables X and Y, the conditional probability distribution of Y given X is the probability distribution of Y when X is known to take a particular value. In some cases the conditional probabilities may be expressed as functions containing the unspecified value x of X as a parameter. When both X and Y are categorical variables, a conditional probability table is typically used to represent the conditional probability. The conditional distribution contrasts with the marginal distribution of a random variable, which is its distribution without reference to the value of the other variable.

If the conditional distribution of Y given X is a continuous distribution, then its probability density function is known as the conditional density function. The properties of a conditional distribution, such as the moments, are often referred to by corresponding names such as the conditional mean and conditional variance.

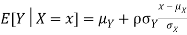

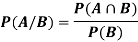

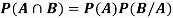

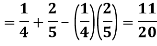

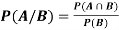

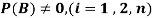

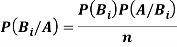

Let A and B be two events in a sample space S with P(B) ≠ 0. Then the probability that event A occurs given that B has already occurred, called the conditional probability of A given B, is defined as

$$P(A|B) = \frac{P(A \cap B)}{P(B)}.$$

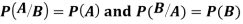

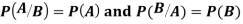

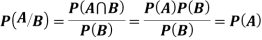

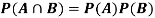

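Note- If two events A and B are independent then-

$$P(A \cap B) = P(A)\,P(B)$$

Theorem. If the events A and B defined on a sample space S of a random experiment are independent (with P(A) ≠ 0 and P(B) ≠ 0), then

$$P(A|B) = P(A) \quad \text{and} \quad P(B|A) = P(B).$$

Proof. A and B are given to be independent events, so $P(A \cap B) = P(A)\,P(B)$. Hence

$$P(A|B) = \frac{P(A \cap B)}{P(B)} = \frac{P(A)\,P(B)}{P(B)} = P(A),$$

and similarly $P(B|A) = P(B)$.

Multiplication theorem for conditional probability-

$$P(A \cap B) = P(A)\,P(B|A) = P(B)\,P(A|B)$$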
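Independence and the multiplication theorem can be checked by enumerating a small sample space. The sketch below uses a hypothetical experiment that is not part of the notes: two fair dice are rolled, A is the event "the first die is even" and B is the event "the sum is 7". The helper `prob` simply counts favourable outcomes.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes of rolling two fair dice.
sample_space = list(product(range(1, 7), repeat=2))

def prob(event):
    """Probability of an event given as a predicate on outcomes."""
    favourable = sum(1 for s in sample_space if event(s))
    return Fraction(favourable, len(sample_space))

A = lambda s: s[0] % 2 == 0        # first die shows an even number
B = lambda s: s[0] + s[1] == 7     # the two dice sum to 7

p_a, p_b = prob(A), prob(B)
p_ab = prob(lambda s: A(s) and B(s))
p_a_given_b = p_ab / p_b           # conditional probability P(A|B)

print(p_a, p_b, p_ab, p_a_given_b)
```

For this pair of events the joint probability equals the product of the individual probabilities, so the conditional probability of A given B equals the unconditional probability of A.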

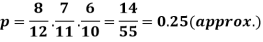

Example: A bag contains 12 pens of which 4 are defective. Three pens are picked at random from the bag one after the other.

Then find the probability that all three are non-defective.

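Sol. Here the probability that the first pen is non-defective = 8/12

By the multiplication theorem of probability, if we draw the pens one after the other without replacement, each non-defective draw leaves one fewer good pen and one fewer pen in the bag, so the required probability will be-

$$\frac{8}{12} \times \frac{7}{11} \times \frac{6}{10} = \frac{14}{55}$$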
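The product of conditional probabilities in the pen example can be reproduced with exact fractions. The sketch below assumes the pens are drawn without replacement, as the phrase "one after the other" suggests, and cross-checks the answer by counting combinations. The variable names are illustrative.

```python
from fractions import Fraction
from math import comb

good, total, draws = 8, 12, 3   # 12 pens, 4 defective, so 8 non-defective

# Multiplication theorem: P(all good) = 8/12 * 7/11 * 6/10
prob_all_good = Fraction(1)
for i in range(draws):
    prob_all_good *= Fraction(good - i, total - i)

# Cross-check by counting: choose 3 of the 8 good pens out of all 3-pen subsets.
by_counting = Fraction(comb(good, draws), comb(total, draws))

print(prob_all_good, by_counting)  # 14/55 14/55
```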

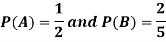

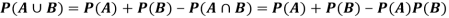

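Example: The probability that A hits the target is 1/4 and the probability that B hits the target is 2/5. If both shoot at the target, find the probability that at least one of them hits the target.

Sol.

Here it is given that-

$$P(A) = \frac{1}{4}, \qquad P(B) = \frac{2}{5}$$

Now we have to find-

$$P(A \cup B)$$

The two events are independent, so that-

$$P(A \cup B) = P(A) + P(B) - P(A)\,P(B) = \frac{1}{4} + \frac{2}{5} - \frac{1}{10} = \frac{11}{20}$$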
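The "at least one hits" probability can be computed in two equivalent ways for independent events: through the addition rule, or through the complement "both miss". The sketch below checks that both routes agree. The variable names are illustrative.

```python
from fractions import Fraction

p_a = Fraction(1, 4)   # A hits the target
p_b = Fraction(2, 5)   # B hits the target

# Addition rule with independence: P(A or B) = P(A) + P(B) - P(A) P(B)
via_addition = p_a + p_b - p_a * p_b

# Complement: 1 - P(both miss)
via_complement = 1 - (1 - p_a) * (1 - p_b)

print(via_addition, via_complement)  # 11/20 11/20
```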

Key takeaways-

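- If two events A and B are independent then $P(A \cap B) = P(A)\,P(B)$.

- If the events A and B defined on a sample space S of a random experiment are independent then $P(A|B) = P(A)$ and $P(B|A) = P(B)$.

- Multiplication theorem for conditional probability: $P(A \cap B) = P(A)\,P(B|A) = P(B)\,P(A|B)$.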

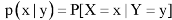

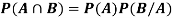

If  ,

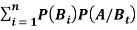

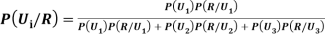

,  are mutually exclusive events with

are mutually exclusive events with  of a random experiment then for any arbitrary event

of a random experiment then for any arbitrary event  of the sample space of the above experiment with

of the sample space of the above experiment with  , we have

, we have

|

Example1: An urn  contains 3 white and 4 red balls and an urn lI contains 5 white and 6 red balls. One ball is drawn at random from one ofthe urns and isfound to be white. Find the probability that it was drawn from urn 1.

contains 3 white and 4 red balls and an urn lI contains 5 white and 6 red balls. One ball is drawn at random from one ofthe urns and isfound to be white. Find the probability that it was drawn from urn 1.

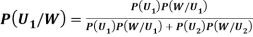

Solution: Let  : the ball is drawn from urn I

: the ball is drawn from urn I

: the ball is drawn from urn II

: the ball is drawn from urn II

: the ball is white.

: the ball is white.

We have to find

By Bayes Theorem

|

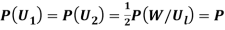

Since two urns are equally likely to be selected,  (a white ball is drawn from urn

(a white ball is drawn from urn  )

)

(a white ball is drawn from urn II)

(a white ball is drawn from urn II)

From(1),

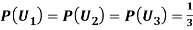

Example2: Three urns contain 6 red, 4 black, 4 red, 6 black; 5 red, 5 black balls respectively. One of the urns is selected at random and a ball is drawn from it. Lf the ball drawn is red find the probability that it is drawn from the first urn.

Solution:

Let  : the ball is drawn from urn 1.

: the ball is drawn from urn 1.

: the ball is drawn from urn lI.

: the ball is drawn from urn lI.

: the ball is drawn from urn 111.

: the ball is drawn from urn 111.

: the ball is red.

: the ball is red.

We have to find  .

.

By Baye’s Theorem,

... (1)

... (1)

Since the three urns are equally likely to be selected

Also  (a red ball is drawn from urn

(a red ball is drawn from urn  )

)

(R/

(R/ )

)  (a red ball is drawn from urn II)

(a red ball is drawn from urn II)

(a red ball is drawn from urn III)

(a red ball is drawn from urn III)

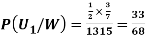

From (1), we have

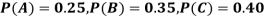

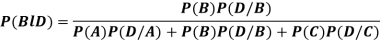

Example3: ln a bolt factory machines  and

and  manufacturerespectively 25%, 35% and 40% of the total. Lf their output 5, 4 and 2 per cent are defective bolts. A bolt is drawn at random from the product and is found to be defective. What is the probability that it was manufactured by machine B.

manufacturerespectively 25%, 35% and 40% of the total. Lf their output 5, 4 and 2 per cent are defective bolts. A bolt is drawn at random from the product and is found to be defective. What is the probability that it was manufactured by machine B. ?

?

Solution: bolt is manufactured by machine

: bolt is manufactured by machine

: bolt is manufactured by machine

: bolt is manufactured by machine

: bolt is manufactured by machine

The probability ofdrawing a defective bolt manufactured by machine  is

is  (D/A)

(D/A)

Similarly,  (D/B)

(D/B)  and

and  (D/C)

(D/C)

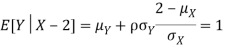

By Baye’s theorem

|
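All three Bayes' theorem examples follow the same pattern: multiply each prior by its likelihood, then divide the term of interest by the sum of all terms. The sketch below is a minimal illustration of that pattern using the numbers stated in the three problems. The function name `posterior` and its argument layout are assumptions made for this example.

```python
from fractions import Fraction

def posterior(priors, likelihoods, index):
    """P(E_index | A) = P(E_index) P(A|E_index) / sum_j P(E_j) P(A|E_j)."""
    terms = [p * l for p, l in zip(priors, likelihoods)]
    return terms[index] / sum(terms)

half, third = Fraction(1, 2), Fraction(1, 3)

# Example 1: two urns, white ball drawn, probability it came from urn I.
ex1 = posterior([half, half], [Fraction(3, 7), Fraction(5, 11)], 0)

# Example 2: three urns, red ball drawn, probability it came from the first urn.
ex2 = posterior([third] * 3,
                [Fraction(6, 10), Fraction(4, 10), Fraction(5, 10)], 0)

# Example 3: machines A, B, C; defective bolt, probability it came from B.
ex3 = posterior([Fraction(25, 100), Fraction(35, 100), Fraction(40, 100)],
                [Fraction(5, 100), Fraction(4, 100), Fraction(2, 100)], 1)

print(ex1, ex2, ex3)  # 33/68 2/5 28/69
```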
