Unit - 5

Wireless and Mobile Technologies and Protocols and their performance evaluation

The history of wireless communication follows the timeline below. After the Second World War, many national and international projects in the area of wireless communications were launched.

In ancient times, light switched ON and OFF in a pattern was used for wireless communication. Flags were used to signal code words, and smoke signals were used for wireless communication as early as 150 BC.

- In 1982, the European Conference of Postal and Telecommunications Administrations (CEPT) founded the Groupe Spécial Mobile (GSM) working group.

- In 1987, the Global System for Mobile Communications (GSM) standard was available.

- In 1991, the first devices were presented in Switzerland.

- In 1995, SMS became available.

- In 1998, the Universal Mobile Telecommunications System (UMTS) was developed in Europe.

- In 1999, IEEE 802.11b and Bluetooth were standardized.

- In 2000, General Packet Radio Service (GPRS) and IEEE 802.11a were developed.

- In 2001, International Mobile Telecommunications-2000 (IMT-2000) was standardized.

- In 2007, fourth-generation (4G), Internet-based systems emerged.

Key takeaway

The development of wireless systems is traced above. In ancient times, light switched ON and OFF was used for wireless communication.

Mobile computing is human-computer interaction in which a computer is expected to be transported during normal usage. Mobile computing involves software, hardware and mobile communication. Mobile software deals with the requirements of mobile applications. Hardware includes the components and devices needed for mobility. Communication issues include ad hoc and infrastructure networks, protocols, communication properties, data encryption and concrete technologies.

Mobile Computing is a technology that allows transmission of data, voice and video via a computer or any other wireless enabled device without having to be connected to a fixed physical link.

In the last 10 years, the rise of mobile phones and laptops has dramatically increased the availability of mobile devices to businesses and home users. More recently, smaller portable devices such as PDAs, and especially embedded devices (e.g., washing machines, sensors), have slowly changed the way humans live and think of computers.

Mobile computing is associated with the mobility of hardware, data and software in computer applications. The study of this new area of computing has prompted a careful rethinking of the way mobile networks and systems are conceived. Mobile and traditional distributed systems may appear closely related, but several factors differentiate them. These factors concern the type of device (fixed/mobile), the network connection (permanent/intermittent) and the execution context (static/dynamic).

A cellular or mobile phone is a long-range, portable electronic device for communication over long distances. Current mobile phones support many services, such as SMS, GPRS, MMS, email, packet switching, WAP and Bluetooth. Most mobile phones connect to cellular networks, which are in turn connected to the PSTN (Public Switched Telephone Network). Besides mobile phones, a wide range of mobile products is available, such as mobile scanners, mobile printers and mobile labellers.

Conceptually, computing can be seen as: USER ⇔ COMP.SYS ⇔ ENVIRONMENT

Key takeaway

A cellular or mobile phone is a long-range, portable electronic device for communication over long distances.

Mobile computing is human-computer interaction in which a computer is expected to be transported during normal usage.

Long Term Evolution (LTE)

LTE is currently the dominant cellular transmission technology. Superseding 3G, LTE is a 4G technology that uses the GSM software infrastructure but different hardware interfaces. LTE/4G will coexist with 5G for some time.

Download speeds in the U.S. range from roughly 5 to 85 Mbps. LTE was standardized in 2008, and the first LTE smartphones appeared in 2011.

LTE provides global interoperability in more than three dozen frequency bands worldwide. However, no single phone supports all of them. Speed and other enhancements were later made to the original LTE standard.

LTE Is Based on IP Packets

In 3G and all earlier cellular networks, voice was handled by the traditional circuit-switched network, and only data used the packet-switched architecture of the Internet. LTE's Evolved Packet System (EPS), however, transmits both voice and data in IP packets. EPS comprises the OFDMA-based E-UTRAN air interface and the Evolved Packet Core (EPC).

In 2010, the ITU defined LTE, WiMAX and HSPA+ as 4G technologies. Previously, only LTE Advanced (LTE-A) was considered to be 4G.

5G is the newest technology in the field of cellular networks, with many advantages over older generations. It is designed to increase speed, reduce errors and make wireless communication more flexible. It can offer speeds of up to 2 Gbps.

Working of 5G

5G has an improved architecture and also uses spectrum that was unused in 4G. It employs multiple-input multiple-output (MIMO) technology. MIMO uses many transmitters and receivers so that large amounts of data can be transferred at once. 5G is not limited to new radio spectrum. It also provides a software platform for networking and brings advances in virtualization and cloud-based technology. Business process automation makes 5G flexible enough to give users easy access at any time. 5G networks can create software-defined subnetwork constructs known as network slices. These slices enable network administrators to dictate network functionality based on users and devices.
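Shannon's capacity formula, C = B log2(1 + SNR), shows why MIMO lets large amounts of data be transferred. The sketch below is a simplification and is not how 5G throughput is actually computed. It assumes the MIMO channel splits into min(Nt, Nr) independent spatial streams that each see the same SNR. The function names, the 100 MHz bandwidth and the 20 dB SNR are illustrative assumptions.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon capacity C = B * log2(1 + SNR) of a single channel."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)


def idealized_mimo_capacity_bps(bandwidth_hz: float, snr_db: float,
                                n_tx: int, n_rx: int) -> float:
    """Idealized MIMO capacity (assumption, not a 5G formula).

    Assumes the channel decomposes into min(n_tx, n_rx) independent
    spatial streams with equal SNR. Real channels give less, because
    streams differ in gain and transmit power is shared among them.
    """
    snr_linear = 10 ** (snr_db / 10)   # convert dB to a power ratio
    streams = min(n_tx, n_rx)          # number of usable spatial streams
    return streams * shannon_capacity_bps(bandwidth_hz, snr_linear)


if __name__ == "__main__":
    bw = 100e6  # 100 MHz channel (illustrative value)
    for antennas in (1, 2, 4, 8):
        c = idealized_mimo_capacity_bps(bw, snr_db=20, n_tx=antennas, n_rx=antennas)
        print(f"{antennas}x{antennas} MIMO: {c / 1e9:.2f} Gbit/s")
```

Under these assumptions, capacity grows linearly with the number of spatial streams. Raising the SNR increases it only logarithmically, which is why adding antennas is attractive.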

Architecture of 5G

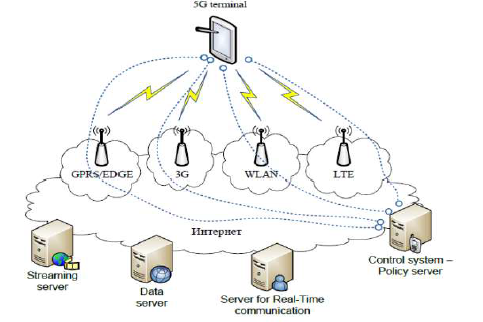

The 5G model is entirely IP-based. It consists of a main user terminal and various independent radio access technologies. To the outside Internet world, each radio technology appears as an IP link. The IP technology is designed to provide sufficient control for the proper routing of the IP packets of a given application. In addition, packet routing should follow the user's policies.

Fig. 1 Architecture
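The idea that each radio access technology appears as an IP link, with packets routed according to the user's policy, can be sketched as a simple interface-selection function. Everything here is hypothetical and for illustration only. The `IpLink` class and its attributes (`available`, `bandwidth_mbps`, `cost_per_mb`), the link values and the two policy names are assumptions, not part of any 5G specification.

```python
from dataclasses import dataclass


@dataclass
class IpLink:
    """One radio access technology, seen by the terminal as an IP link (hypothetical model)."""
    name: str
    available: bool
    bandwidth_mbps: float
    cost_per_mb: float


def select_link(links: list[IpLink], policy: str) -> IpLink:
    """Pick the outgoing IP link according to a (hypothetical) user policy.

    "fastest"  -> highest bandwidth among available links
    "cheapest" -> lowest cost per MB among available links
    """
    candidates = [link for link in links if link.available]
    if not candidates:
        raise RuntimeError("no radio access technology is currently available")
    if policy == "fastest":
        return max(candidates, key=lambda link: link.bandwidth_mbps)
    if policy == "cheapest":
        return min(candidates, key=lambda link: link.cost_per_mb)
    raise ValueError(f"unknown policy: {policy}")


# Illustrative values only
links = [
    IpLink("5G NR", available=True, bandwidth_mbps=900.0, cost_per_mb=0.02),
    IpLink("LTE", available=True, bandwidth_mbps=60.0, cost_per_mb=0.01),
    IpLink("WLAN", available=False, bandwidth_mbps=300.0, cost_per_mb=0.0),
]

print(select_link(links, "fastest").name)   # 5G NR
print(select_link(links, "cheapest").name)  # LTE (WLAN is unavailable)
```

Each application can be given its own policy. A video call could then leave over one radio technology while a background transfer uses another.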

The master core technology is used in present wireless network systems. This design makes it easy to operate in a parallel multimode that includes all IP networks and the 5G network mode. Network technologies such as the RAN and DAT (Different Access Network) can be controlled easily through this mode. This mode is less complicated, more efficient and more powerful, as it manages all new deployments. World Combination Service Mode is preferred because any service mode can be opened under it. A very basic example illustrates this technology: when a teacher writes on a board in one country, the writing can be displayed on another board in any other country, in addition to conversation and video.

Advantages of 5G

- It can utilise large bandwidth and offers high resolution.

- It is very efficient.

- Provides subscriber supervision tools for quick action.

- Is likely to provide huge broadcasting data capacity (in the gigabit range), supporting more than 60,000 connections.

- Easily manageable alongside previous generations.

- Technologically sound enough to support heterogeneous services (including private networks).

- Possible to provide uniform, uninterrupted, and consistent connectivity across the world.

The field of computer networks has grown significantly in the last three decades. An interesting use of computer networks is in offices and educational institutions. There, tens (sometimes hundreds) of personal computers (PCs) are interconnected to share resources (e.g., printers) and exchange information over a high-bandwidth medium such as Ethernet. These privately owned networks are known as local area networks (LANs). They fall under the category of small-scale networks, which cover a single building or a campus a few kilometres in size.

To do away with the wiring associated with interconnecting PCs in LANs, researchers have explored the use of radio waves and infrared light for interconnection. This has led to wireless LANs (WLANs), which use wireless transmission at the physical layer of the network. Wireless personal area networks (WPANs) are the next step down from WLANs. They cover smaller areas with low-power transmission and network portable and mobile computing devices. Such devices include PCs, personal digital assistants (PDAs), cell phones, printers, speakers, microphones and other consumer electronics. PDAs are essentially very small computers designed to consume as little power as possible, so as to increase the lifetime of their batteries.

FUNDAMENTALS OF WLANS

The terms "node," "station," and "terminal" are used interchangeably. While both portable terminals and mobile terminals can move from one place to another, portable terminals are accessed only when they are stationary. Mobile terminals (MTs), on the other hand, are more powerful, and can be accessed when they are in motion. WLANs aim to support truly mobile work stations.

Differences Between Wireless and Wired Transmission

- Address is not equivalent to physical location: In a wireless network, address refers to a particular station and this station need not be stationary. Therefore, address may not always refer to a particular geographical location.

- Dynamic topology and restricted connectivity: The mobile nodes may often go out of reach of each other. This means that network connectivity is partial at times.

- Medium boundaries are not well-defined: The exact reach of wireless signals cannot be determined accurately. It depends on various factors such as signal strength and noise levels. This means that the precise boundaries of the medium cannot be determined easily.

- Error-prone medium: Transmissions by a node in the wireless channel are affected by simultaneous transmissions by neighboring nodes that are located within the direct transmission range of the transmitting node. This means that the error rates are significantly higher in the wireless medium. We need to build a reliable network on top of an inherently unreliable channel. This is realized in practice by having reliable protocols at the MAC layer, which hide the unreliability that is present in the physical layer.

Uses of WLANs

Wireless computer networks are capable of offering versatile functionalities. WLANs are very flexible and can be configured in a variety of topologies based on the application. Some possible uses of WLANs are mentioned below.

- Users would be able to surf the Internet, check e-mail, and receive Instant Messages on the move.

- In areas affected by earthquakes or other such disasters, no suitable infrastructure may be available on the site. WLANs are handy in such locations to set up networks on the fly.

- There are many historic buildings where there has been a need to set up computer networks. In such places, wiring may not be permitted or the building design may not be conducive to efficient wiring. WLANs are very good solutions in such places.

Design Goals

The following are some of the goals which have to be achieved while designing WLANs:

- Operational simplicity: Design of wireless LANs must incorporate features to enable a mobile user to quickly set up and access network services in a simple and efficient manner.

- Power-efficient operation: The power-constrained nature of mobile computing devices such as laptops and PDAs makes it an important requirement that WLANs operate with minimal power consumption. Therefore, the design of a WLAN must incorporate power-saving features and use appropriate technologies and protocols to achieve this.

- License-free operation: One of the major factors that affects the cost of wireless access is the license fee for the spectrum in which a particular wireless access technology operates. Low cost of access is an important aspect of popularizing a WLAN technology. Hence, the design of a WLAN should consider operating in those parts of the frequency spectrum (e.g., the ISM band) that do not require explicit licensing.

- Tolerance to interference: The proliferation of different wireless networking technologies both for civilian and military applications and the use of the microwave frequency spectrum for non-communication purposes (e.g., microwave ovens) have led to a significant increase in the interference level across the radio spectrum. The WLAN design should account for this and take appropriate measures by way of selecting technologies and protocols to operate in the presence of interference.

- Global usability: The design of the WLAN, the choice of technology, and the selection of the operating frequency spectrum should take into account the prevailing spectrum restrictions in countries across the world. This ensures the acceptability of the technology across the world.

- Security: The inherent broadcast nature of the wireless medium makes it necessary to include security features in the design of WLAN technology.

- Safety requirements: The design of WLAN technology should follow the safety requirements that can be classified into the following: (i) interference to medical and other instrumentation devices and (ii) increased power level of transmitters that can lead to health hazards. A well-designed WLAN should follow the power emission restrictions that are applicable in the given frequency spectrum.

- Quality of service requirements: Quality of service (QoS) refers to the provisioning of designated levels of performance for multimedia traffic. The design of WLAN should take into consideration the possibility of supporting a wide variety of traffic, including multimedia traffic.

- Compatibility with other technologies and applications: Interoperability among different LANs (wired or wireless) is important for efficient communication between hosts operating with different LAN technologies. In addition, interoperability with existing WAN protocols, such as TCP/IP of the Internet, is essential to provide seamless communication across WANs.

Network Architecture

Infrastructure Based Versus Ad Hoc LANs

WLANs can be broadly classified into two types based on the underlying architecture: infrastructure networks and ad hoc LANs. Infrastructure networks contain special nodes called access points (APs), which are connected via existing networks. APs are special in the sense that they can interact with wireless nodes as well as with the existing wired network. The other wireless nodes, also known as mobile stations, communicate via APs. The APs also act as bridges with other networks.

Ad hoc LANs do not need any fixed infrastructure. These networks can be set up on the fly at any place. Nodes communicate directly with each other or forward messages through other nodes that are directly accessible.
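The two architectures differ in how a frame reaches its destination. The sketch below assumes a hypothetical unit-disk radio model, in which two nodes can talk directly only if they are within a fixed range `RANGE` of each other. In infrastructure mode, every frame is relayed by the AP. In ad hoc mode, a route is found by breadth-first search over directly reachable nodes, so intermediate nodes forward the message. The node names, coordinates, range and function names are illustrative assumptions.

```python
import math
from collections import deque
from typing import Optional

RANGE = 10.0  # hypothetical radio range (same units as the coordinates)

# Hypothetical node positions (x, y)
positions = {
    "A": (0.0, 0.0),
    "B": (8.0, 0.0),
    "C": (16.0, 0.0),
    "D": (24.0, 0.0),
    "AP": (12.0, 5.0),
}


def neighbours(node: str, nodes: dict[str, tuple[float, float]]) -> list[str]:
    """Nodes within radio range of `node` (unit-disk model)."""
    x, y = nodes[node]
    return [other for other, (ox, oy) in nodes.items()
            if other != node and math.hypot(x - ox, y - oy) <= RANGE]


def adhoc_route(src: str, dst: str,
                nodes: dict[str, tuple[float, float]]) -> Optional[list[str]]:
    """Fewest-hop route via breadth-first search; None if dst is unreachable."""
    previous: dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        current = queue.popleft()
        if current == dst:
            path = []
            node: Optional[str] = current
            while node is not None:          # walk back to the source
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in neighbours(current, nodes):
            if nxt not in previous:
                previous[nxt] = current
                queue.append(nxt)
    return None


def infrastructure_route(src: str, dst: str,
                         nodes: dict[str, tuple[float, float]],
                         ap: str = "AP") -> Optional[list[str]]:
    """Every frame is relayed by the AP, so both ends must be in the AP's range."""
    in_range = neighbours(ap, nodes)
    if src in in_range and dst in in_range:
        return [src, ap, dst]
    return None


adhoc_nodes = {n: p for n, p in positions.items() if n != "AP"}
print(adhoc_route("A", "D", adhoc_nodes))          # ['A', 'B', 'C', 'D']
print(infrastructure_route("B", "C", positions))   # ['B', 'AP', 'C']
print(infrastructure_route("A", "D", positions))   # None (A and D are out of AP range)
```

A and D are outside the AP's coverage, so in this layout they can reach each other only by forwarding through B and C.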

Key takeaway

The terms "node," "station," and "terminal" are used interchangeably. While both portable terminals and mobile terminals can move from one place to another, portable terminals are accessed only when they are stationary. Mobile terminals (MTs), on the other hand, are more powerful, and can be accessed when they are in motion. WLANs aim to support truly mobile work stations.

Network simulation is a technique in which a model of the network is executed on a computer. The behaviour of the network is calculated from mathematical models of its components. Simulation allows researchers to develop, test and diagnose network scenarios that are difficult to reproduce in the real world. It is mainly used to test new networking protocols, or changes to existing protocols, in a controlled and reproducible environment. Different topologies can be designed using various types of nodes (hosts, bridges, routers, hubs, mobile units, etc.).

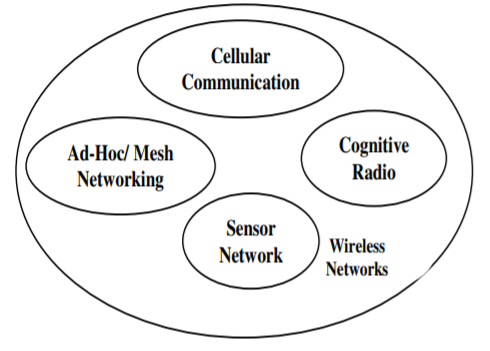

Nowadays the information society continues to emerge and demands the growth of wireless communication. Future-generation wireless networks must therefore be designed with these increasing needs in mind. Such networks are characterized by a distributed, dynamic, self-organizing architecture. They are mainly classified into types based on specific characteristics, viz. Cognitive Radio Networks, Ad-Hoc/Mesh Networks and Sensor Networks, as shown in Fig. 2. These wireless networks are used in various applications, viz. business, healthcare, military and gaming. The spread of wireless networking has also created many open research issues in network design. Many researchers are working on the design of future-generation wireless networks.

Fig. 2 Different kinds of Wireless Networks

Three techniques are used to analyse the performance of wireless as well as wired networks: analytical methods, computer simulation, and test bed (physical) measurement. The analytical method attempts to find an analytical solution to the problem from some initial conditions and a set of parameters. This is very complex, however, because extensive mathematical analysis is needed at each layer. The result amounts to a comprehensive model of the wireless ad hoc network. Building a test bed for a given network, on the other hand, is very costly and requires a lot of effort.

Computer simulation is therefore the most common method. It has proven to be a valuable tool for developing and testing new wireless network protocols where the analytical method is neither applicable nor feasible. Researchers commonly use simulation to analyse the performance of a system. Simulators are used for many reasons, such as low cost, ease of implementation and the practicality of testing large-scale networks. The main aim of a simulator is to reproduce real situations as closely as possible, so that the results obtained are realistic as well as adaptable.
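The analytical and simulation approaches can be compared on slotted ALOHA, a simple wireless MAC protocol chosen here only as an illustration. The sketch assumes N stations, each transmitting in a slot independently with probability q. A slot is successful only if exactly one station transmits. The analytical throughput is then S = N q (1 - q)^(N-1) successful frames per slot. The simulation estimates the same quantity by counting. The function names, parameter values and seed are illustrative assumptions.

```python
import random


def aloha_throughput_analytical(n_stations: int, q: float) -> float:
    """Probability that exactly one of n stations transmits in a slot."""
    return n_stations * q * (1 - q) ** (n_stations - 1)


def aloha_throughput_simulated(n_stations: int, q: float,
                               n_slots: int, seed: int = 1) -> float:
    """Monte Carlo estimate: fraction of slots with exactly one transmitter."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    successes = 0
    for _ in range(n_slots):
        transmitters = sum(1 for _ in range(n_stations) if rng.random() < q)
        if transmitters == 1:  # 0 = idle slot, more than 1 = collision
            successes += 1
    return successes / n_slots


if __name__ == "__main__":
    n, q = 10, 0.1
    print("analytical:", round(aloha_throughput_analytical(n, q), 4))  # analytical: 0.3874
    print("simulated: ", round(aloha_throughput_simulated(n, q, n_slots=100_000), 4))
```

For a model this simple, the closed form is easy to derive. For realistic protocol stacks no such formula usually exists, and only the simulation half of the comparison remains available.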

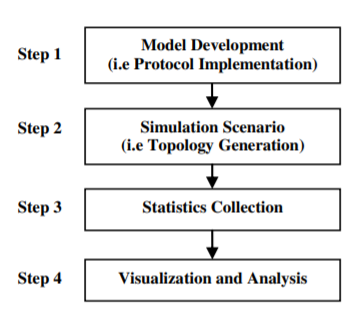

Three important points must be considered in wireless network simulation. First, the protocols and algorithms should be free from errors and implemented in detail. Second, the simulation environment should be realistic. Third, a sound method should be used to analyse the collected data. Even so, simulation still has some potential pitfalls. To avoid them, it is necessary to know the available tools and their benefits and drawbacks. The basic steps to run a simulation are shown in Fig. 3.

Fig. 3 Simulation Steps

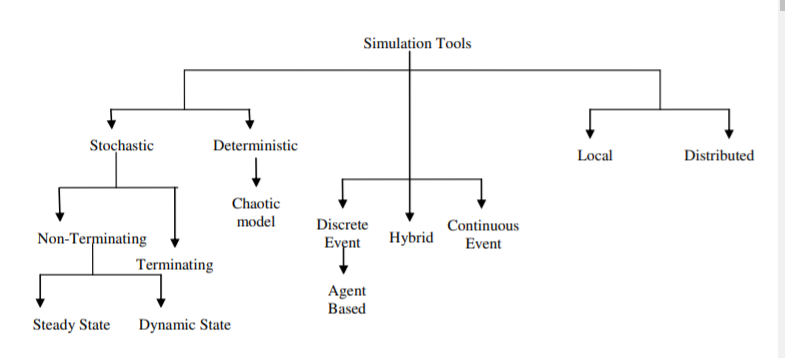

Running a simulation involves four steps:

1. Implement the protocol (model development).
2. Create a simulation scenario (design the network topology and traffic scenario).
3. Collect statistics.
4. Visualize and analyse the simulation results, during or after the execution of the simulation.

The main problem with this method is that no one can tell in advance how many times the process should be repeated to obtain a minimal error. If the error turns out to be large, the process must be repeated. Simulation tools are classified into several categories, as shown in Fig. 4.

Fig. 4 Classification of Simulation Tools
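The four simulation steps, and the question of how many times to repeat a run, can be sketched as follows. The "protocol" in step 1 is a hypothetical stop-and-wait retransmission scheme over a link that loses each frame independently with probability `loss_prob`. The stopping rule keeps adding independent replications until the 95% confidence-interval half-width falls below a target. It uses a normal approximation with z = 1.96. This rule is one common heuristic, not a method prescribed by the text. All names and values are illustrative assumptions.

```python
import random
import statistics


# Step 1: model development - hypothetical stop-and-wait ARQ over a lossy link
def transmissions_needed(rng: random.Random, loss_prob: float) -> int:
    """Number of attempts until one frame gets through."""
    attempts = 1
    while rng.random() < loss_prob:  # frame lost -> retransmit
        attempts += 1
    return attempts


# Step 2: scenario - link quality and number of frames per run (illustrative)
SCENARIO = {"loss_prob": 0.3, "frames_per_run": 1_000}


# Step 3: statistics collection - one replication returns mean attempts per frame
def run_replication(seed: int, loss_prob: float, frames_per_run: int) -> float:
    rng = random.Random(seed)  # independent seed per replication
    total = sum(transmissions_needed(rng, loss_prob) for _ in range(frames_per_run))
    return total / frames_per_run


# Step 4: analysis - repeat until the 95% CI half-width is below the target
def estimate(target_half_width: float, min_reps: int = 5, max_reps: int = 1_000):
    results: list[float] = []
    half_width = float("inf")
    for seed in range(max_reps):
        results.append(run_replication(seed, **SCENARIO))
        if len(results) >= min_reps:
            half_width = 1.96 * statistics.stdev(results) / len(results) ** 0.5
            if half_width < target_half_width:
                break
    return statistics.mean(results), half_width, len(results)


if __name__ == "__main__":
    mean, hw, reps = estimate(target_half_width=0.005)
    print(f"mean attempts = {mean:.4f} +/- {hw:.4f} after {reps} replications")
    print(f"analytical 1 / (1 - p) = {1 / (1 - SCENARIO['loss_prob']):.4f}")
```

The number of replications is found at run time instead of being guessed in advance. The analytical mean for this particular model, 1 / (1 - p), is a sanity check on the result.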

A. Stochastic simulation: Stochastic simulation is one of the most realistic kinds of simulation. It includes some randomness along with time-dependent elements. Typical applications are models that observe traffic patterns in a particular grid, customer service centres, and many more.

B. Deterministic simulation: Deterministic simulations use fixed, non-random values to define the model of the system under investigation. Because there is no randomness, the output of such a system is fully determined by its inputs. Example: a chaotic model.

C. Terminating simulation: Terminating systems have a natural event that marks their end, with established starting and terminating conditions. For example, a working day in an office starts at 9 am and ends at 5 pm. In such systems, the established conditions generally affect some performance measures. The basic purpose of this kind of simulation is therefore to understand the transient behaviour of the system.

D. Non-Terminating simulation: Non-terminating systems are those that do not have a finite duration, for example, the Internet. Such simulations are used to explore the long-term behaviour of systems. Of course, such a simulation must stop at some point, but determining the proper stopping time is a non-trivial problem.
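For a non-terminating system, a common practice is to discard an initial warm-up period. The estimate then reflects long-run behaviour rather than the empty starting state. The sketch below illustrates this with an M/M/1 queue, a standard single-server model chosen here for illustration and not taken from the text. Waiting times are generated with Lindley's recursion, W(n+1) = max(0, W(n) + S(n) - A(n+1)). Here S(n) is the service time of customer n and A(n+1) is the gap before the next arrival. For this model, the long-run mean queueing delay is known to be λ / (μ (μ - λ)), which gives a check. The rates, run length, warm-up length and seed are assumptions.

```python
import random


def mm1_waiting_times(arrival_rate: float, service_rate: float,
                      n_customers: int, seed: int = 7) -> list[float]:
    """Queueing delays of successive customers in an M/M/1 FIFO queue (Lindley recursion)."""
    rng = random.Random(seed)
    waits = [0.0]  # the first customer finds an empty system
    for _ in range(n_customers - 1):
        service = rng.expovariate(service_rate)       # S(n)
        interarrival = rng.expovariate(arrival_rate)  # A(n+1)
        waits.append(max(0.0, waits[-1] + service - interarrival))
    return waits


def steady_state_mean(waits: list[float], warmup: int) -> float:
    """Average after discarding the first `warmup` observations (initial transient)."""
    kept = waits[warmup:]
    return sum(kept) / len(kept)


if __name__ == "__main__":
    lam, mu = 0.5, 1.0
    waits = mm1_waiting_times(lam, mu, n_customers=200_000)
    print("estimate:", round(steady_state_mean(waits, warmup=10_000), 3))
    print("theory:  ", lam / (mu * (mu - lam)))  # 1.0
```

Choosing the warm-up length and the total run length is the non-trivial part mentioned above. Here both were simply picked generously.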

E. Steady State simulation: Steady-state simulation models use equations to define the relationships between the elements of a system and the state in which the system reaches equilibrium.

F. Dynamic simulation: Dynamic simulation models are those in which the state of the system changes as the input signals change.

G. Discrete event simulation: Discrete event simulations organize events on a time basis. The simulator maintains a queue of events ordered by the time at which they occur. It reads the event queue one event at a time, and new events are entered as earlier ones are executed. Most simulation tools fall into this category, including computer, fault-tree and logic-test simulators. Agent-based simulation is a special case of this type, in which the mobile entities are known as agents. In a traditional discrete event model, entities have only attributes. Agents have both attributes and methods, which include rules for interacting with other agents.
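The event-queue mechanism described above can be sketched in a few lines with a priority queue ordered by event time. This is a minimal illustration, not the design of any particular simulator. The `Simulator` class, the packet-arrival scenario and the fixed 1.5-unit transmission time are hypothetical. A sequence counter breaks ties, so events scheduled for the same time run in insertion order.

```python
import heapq
import itertools
from typing import Callable


class Simulator:
    """Minimal discrete-event scheduler: events are executed in time order."""

    def __init__(self) -> None:
        self.now = 0.0
        self._queue: list[tuple[float, int, Callable[[], None]]] = []
        self._seq = itertools.count()  # tie-breaker for equal timestamps

    def schedule(self, delay: float, action: Callable[[], None]) -> None:
        heapq.heappush(self._queue, (self.now + delay, next(self._seq), action))

    def run(self, until: float) -> None:
        while self._queue and self._queue[0][0] <= until:
            time, _, action = heapq.heappop(self._queue)
            self.now = time  # the clock jumps straight to the next event
            action()         # an action may schedule new events


# Hypothetical scenario: packets arrive at a link; each takes TX_TIME to send
sim = Simulator()
log: list[str] = []
TX_TIME = 1.5
link_free_at = 0.0


def arrival(pkt: int) -> None:
    global link_free_at
    start = max(sim.now, link_free_at)  # wait if the link is still busy
    link_free_at = start + TX_TIME
    log.append(f"t={sim.now:.1f} packet {pkt} arrives")
    sim.schedule(link_free_at - sim.now,
                 lambda: log.append(f"t={sim.now:.1f} packet {pkt} sent"))


for i, t in enumerate([0.0, 1.0, 4.0]):
    sim.schedule(t, lambda i=i: arrival(i))

sim.run(until=10.0)
print("\n".join(log))
```

Packet 1 arrives while packet 0 is still being sent, so its completion event is scheduled for t = 3.0 rather than t = 2.5. The printed log is:

```
t=0.0 packet 0 arrives
t=1.0 packet 1 arrives
t=1.5 packet 0 sent
t=3.0 packet 1 sent
t=4.0 packet 2 arrives
t=5.5 packet 2 sent
```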

H. Continuous simulation: Continuous simulators are the opposite of discrete simulators. They solve differential equations that describe the continuous evolution of the system. Such a simulation proceeds continuously and smoothly rather than in discrete steps. For example, a continuous simulator can describe the movement of water through a chain of pipes and reservoirs.
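On a computer, a continuous simulator integrates its differential equations numerically in small time steps. The sketch below uses the simplest such method, forward Euler integration, for the water example. It models two reservoirs in a chain. The flow through each pipe is assumed (hypothetically) to be proportional to the volume in the reservoir feeding it: dV1/dt = -k1 V1 and dV2/dt = k1 V1 - k2 V2. The rate constants, initial volume and step size are illustrative. Production tools use more accurate integrators.

```python
import math


def simulate_reservoirs(v1: float, v2: float, k1: float, k2: float,
                        dt: float, t_end: float) -> tuple[float, float]:
    """Forward-Euler integration of two reservoirs connected in series."""
    steps = round(t_end / dt)
    for _ in range(steps):
        flow_12 = k1 * v1   # pipe from reservoir 1 into reservoir 2
        flow_out = k2 * v2  # pipe draining reservoir 2
        v1 += -flow_12 * dt
        v2 += (flow_12 - flow_out) * dt
    return v1, v2


if __name__ == "__main__":
    v1, v2 = simulate_reservoirs(v1=100.0, v2=0.0, k1=0.5, k2=0.2, dt=0.001, t_end=4.0)
    exact_v1 = 100.0 * math.exp(-0.5 * 4.0)  # closed-form solution for reservoir 1
    print(f"V1 = {v1:.3f} (exact {exact_v1:.3f}), V2 = {v2:.3f}")
```

Halving `dt` roughly halves the integration error, at the cost of twice as many steps.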

I. Hybrid simulation: Hybrid simulators combine the features of continuous and discrete simulators. They solve differential equations for the continuous part of the system and superimpose discrete events on it.

J. Local simulation: Local simulator models run on an individual machine or within a single local network.

K. Distributed simulation: Distributed simulator models run over a network of interconnected computers, typically through the Internet. A simulation spread across many host computers is therefore known as a distributed simulation.
