Unit - 2

Data Link Layer (DLL)

It is the second layer from the bottom of the OSI model. It is required for the following reasons.

The physical layer takes care of transmitting information over a communication channel. The transmitted information may be affected by noise or distortion in the channel. Hence, transmission over the communication channel is not reliable.

Data transfer is also affected by delay and has a finite rate of transmission. This reduces the efficiency of transmission.

The data link layer is designed to take care of these problems, i.e., the data link layer improves the reliability and efficiency of the channel.

We can also say that the services provided by the physical layer are not reliable. Hence, we require a layer above the physical layer that can take care of these problems. The layer above the physical layer is the Data Link Layer (DLL).

The following are some of the functions of the data link layer.

Error Control: The physical layer is error-prone. Errors introduced in the channel need to be detected and corrected.

Flow Control: There might be a mismatch between the rate at which the sender transmits and the rate at which the receiver can receive. This mismatch must be taken care of.

Addressing: In a network with multiple terminals, the terminal to which the data is to be sent has to be specified.

Frame Synchronization: In the physical layer, information is in the form of bits. These bits are grouped into blocks called frames at the data link layer. To identify the beginning and end of frames, some identification mark is put before and/or after each frame.

Link Management: To manage coordination and cooperation among terminals in the network, initiation, maintenance and termination of the link must be done properly. These procedures are handled by the data link layer. The control signals required for this purpose use the same channels on which data is exchanged. Hence, distinguishing control information from data is another task of the data link layer.

Data Link Layer Design Issues

The data link layer uses the services of the physical layer to send and receive bits over communication channels. It has a number of functions, including:

• Dealing with transmission errors.

• Regulating the flow of data so that slow receivers are not swamped by fast senders.

• Providing a well-defined service interface to the network layer.

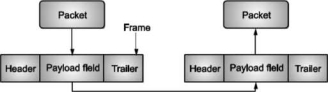

To accomplish these functions, the data link layer takes the packets it gets from the network layer and encapsulates them into frames for transmission. Each frame has a frame header, a payload field for holding the packet, and a frame trailer, as shown in the following figure. Frame management is the heart of what the data link layer does.

Fig. Frame Management (sending machine and receiving machine)

Services Provided to Network Layer

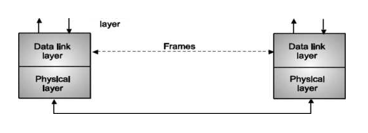

The data link layer provides services to the layer above it, viz. the network layer. The basic service is transferring packets from the network layer on the source machine to the network layer on the destination machine, as shown in Fig.

Fig. Service provided to network layer

The service model describes the service provided by a protocol.

There are two categories of service models:

Connection-oriented service.

Connectionless service.

In connection-oriented service, a connection is first established between the peer entities and then data transfer begins. Connection setup, data transfer and connection release procedures must be carried out.

Connectionless services do not require a connection setup procedure. Information blocks are transmitted using the address information in each Protocol Data Unit (PDU).

Acknowledged connectionless services provide an acknowledgement for each PDU, so that data transfer is reliable.

Unacknowledged connectionless services do not provide an acknowledgement for each PDU. This is also called best-effort service. In such a case, the network layer has to provide reliable service, i.e., acknowledged service.

The service model specifies the Quality of Service (QoS), which includes the expected performance level in the transfer of information. Examples of some QoS parameters are:

> Probability of error.

> Probability of loss.

> Transfer delay.

Framing

When the bits of information are received from the physical layer, the data link layer entity identifies the beginning and end of each block of information, i.e., each frame, with the help of a special pattern placed by the peer entity. Frames may be of fixed length or variable length, and the requirements of the framing method vary accordingly.

In the case of fixed-length frames, a frame consists of a single bit followed by a sequence of a particular, fixed length.

Variable-length frames require additional information for frame identification.

For example:

• Special characters to identify the beginning and end of a frame.

• Starting and ending flags.

• Character counts.

CRC Checking Methods (Checksum)

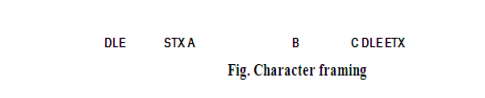

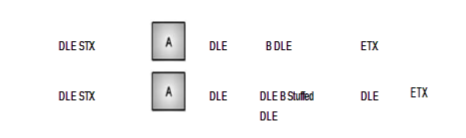

• The first framing method uses the ASCII characters DLE and STX at the start of each frame and DLE and ETX at the end of the frame. This is shown in Fig. 2.3 (a), where DLE is Data Link Escape, STX is Start of Text and ETX is End of Text.

However, this framing method has a problem. If the data to be transmitted contains the character sequence DLE STX, the receiver will wrongly identify it as the start of a frame. Similarly, if DLE ETX occurs in the data, it will be taken as the end of the frame.

This problem can be solved by stuffing (adding) another DLE whenever DLE occurs in the data sequence.

This technique is called character stuffing. The stuffed DLE is destuffed (deleted) by the receiving DLL entity.

Fig- Character stuffing

This method is suitable only for data made up of ASCII or printable characters, not for characters of arbitrary size.
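The character-stuffing rule can be sketched in Python. This is a minimal illustration, assuming the standard ASCII codes DLE = 0x10, STX = 0x02 and ETX = 0x03 and a receiver that is handed one complete frame; the function names `char_stuff` and `char_destuff` are hypothetical.

```python
DLE, STX, ETX = 0x10, 0x02, 0x03  # ASCII control codes


def char_stuff(payload: bytes) -> bytes:
    """Frame a payload as DLE STX <data> DLE ETX, doubling every DLE in the data."""
    stuffed = payload.replace(bytes([DLE]), bytes([DLE, DLE]))
    return bytes([DLE, STX]) + stuffed + bytes([DLE, ETX])


def char_destuff(frame: bytes) -> bytes:
    """Check the delimiters and remove the stuffed DLEs to recover the payload."""
    if frame[:2] != bytes([DLE, STX]) or frame[-2:] != bytes([DLE, ETX]):
        raise ValueError("missing DLE STX / DLE ETX delimiters")
    body = frame[2:-2]
    out = bytearray()
    i = 0
    while i < len(body):
        out.append(body[i])
        # a data DLE is always followed by its stuffed copy: skip the copy
        i += 2 if body[i] == DLE else 1
    return bytes(out)


data = b"A" + bytes([DLE, ETX]) + b"B"  # data that contains DLE ETX
frame = char_stuff(data)
print(frame.hex(" "))  # 10 02 41 10 10 03 42 10 03
assert char_destuff(frame) == data
```

Because every data DLE is doubled, a receiver that reads DLE pairs from left to right treats DLE DLE as one data DLE, so only an undoubled DLE ETX can end the frame.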

The second technique, bit stuffing, allows an arbitrary number of bits per character. A special bit pattern 01111110, called a flag, is used at the beginning and end of each frame. Here too, the flag pattern may occur in the data. The technique used to avoid this problem is bit stuffing: whenever there are five consecutive 1s in the data sequence, a 0 is stuffed after them, and at the receiving end it is destuffed.

Bit stuffing is shown below.

ORIGINAL PATTERN (data):

11111111111101111110111

AFTER BIT STUFFING:

11111011111011011111010111

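At the receiver, a 0 that follows five consecutive 1s is removed. Since stuffed data never contains six consecutive 1s, five 1s followed by 10 mark a flag, and five 1s followed by 11 indicate an error.

If the receiver loses synchronization, all it has to do is scan for the flag pattern.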
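Bit stuffing and destuffing can be sketched the same way. This sketch represents bits as Python strings of '0' and '1' for readability (a real implementation works on a bit stream in hardware or firmware); `bit_stuff`, `bit_destuff` and `make_frame` are hypothetical names.

```python
FLAG = "01111110"  # HDLC-style flag


def bit_stuff(data: str) -> str:
    """Insert a 0 after every run of five consecutive 1s."""
    out, ones = [], 0
    for bit in data:
        out.append(bit)
        ones = ones + 1 if bit == "1" else 0
        if ones == 5:
            out.append("0")  # stuffed bit
            ones = 0
    return "".join(out)


def bit_destuff(stuffed: str) -> str:
    """Remove the 0 that follows every run of five 1s."""
    out, ones, i = [], 0, 0
    while i < len(stuffed):
        bit = stuffed[i]
        out.append(bit)
        ones = ones + 1 if bit == "1" else 0
        if ones == 5:
            if i + 1 < len(stuffed) and stuffed[i + 1] == "1":
                raise ValueError("six consecutive 1s: flag or error, not data")
            i += 1  # skip the stuffed 0
            ones = 0
        i += 1
    return "".join(out)


def make_frame(data: str) -> str:
    """Delimit the stuffed data with flags at both ends."""
    return FLAG + bit_stuff(data) + FLAG


print(bit_stuff("01111110"))  # 011111010: the flag pattern in data is broken up
```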

The character count method places a count of the number of characters in the frame at the beginning of each frame.

The receiver reads the character count, extracts that many characters from the stream, and hence also knows where the frame ends. The problem comes when the count is changed by an error in transmission: synchronization is then completely lost. Even if a checksum reveals that the frame is bad, there is no way of identifying the start of the next frame. Hence, this method is rarely used on its own.

Character count (a) without error:

∣ 4 ∣ 1 ∣ 2 ∣ 3 ∣ 4 ∣ 4 ∣ 4 ∣ 6 ∣ 7 ∣ 8 ∣ 5 ∣ 0 ∣ 1 ∣ 2 ∣ 3 ∣ 4 ∣ 7 ∣ 5 ∣ 6 ∣ 7 ∣ 8 ∣ 9 ∣ 0 ∣ 1 ∣

(b) with an error:

∣ 4 ∣ 1 ∣ 2 ∣ 3 ∣ 4 ∣ 3 ∣ 4 ∣ 6 ∣ 7 ∣ 8 ∣ 6 ∣ 0 ∣ 1 ∣ 2 ∣ 3 ∣ 4 ∣ 8 ∣ 5 ∣ 6 ∣ 7 ∣ 8 ∣ 9 ∣ 0 ∣ 1 ∣
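The loss of synchronization can be reproduced with a short deframer. This is a sketch under an assumption: each count gives the number of characters that follow it, which is the convention under which the error-free stream above splits cleanly into four frames; `deframe_by_count` is a hypothetical name.

```python
from typing import List, Tuple


def deframe_by_count(stream: List[int]) -> Tuple[List[List[int]], List[int]]:
    """Split a character stream into frames. Each frame begins with a count of
    the characters that follow it. Returns (frames, unparsed tail)."""
    frames: List[List[int]] = []
    i = 0
    while i < len(stream):
        end = i + 1 + stream[i]       # the count says where this frame ends
        if end > len(stream):         # not enough characters left
            break
        frames.append(stream[i + 1:end])
        i = end
    return frames, stream[i:]


good = [4, 1, 2, 3, 4, 4, 4, 6, 7, 8, 5, 0, 1, 2, 3, 4, 7, 5, 6, 7, 8, 9, 0, 1]
bad = good.copy()
bad[5] = 3                            # one transmission error in a count field
print(deframe_by_count(good))
print(deframe_by_count(bad))
```

Changing only the second count from 4 to 3 makes the receiver cut every later frame at the wrong place, which is the loss of synchronization described above.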

In the CRC-based framing method, a CRC of the count field is placed along with the character count. The receiver therefore examines four bytes at a time to check whether the CRC computed over the first two bytes equals the contents of the next two bytes.

Many data link protocols use a combination of the character count with other methods, to make doubly sure that proper synchronization is achieved.

For example, a character count is placed at the beginning of the frame, a flag is placed at the end of the frame, and a checksum may also be used. The count field is used to locate the end of the frame, and the frame is accepted only if the appropriate flag is present at that position and the checksum is correct.

Automatic Repeat Request (ARQ), also known as Automatic Repeat Query, is an error control strategy used in two-way communication systems. It is a group of error control protocols for achieving reliable data transmission over an unreliable channel or service.

Flow Control:

In a communication network, the two communicating entities may have different speeds of transmission and reception. There will be a problem if the sender is faster than the receiver.

A fast sender will swamp a slow receiver, so the “flow” of information between sender and receiver has to be “controlled”. The technique used for this is called flow control. Also, the receiver needs time to process incoming data.

This processing time is often more than the transmission time. Incoming data must be checked and processed before it can be used. Hence, we require a buffer at the receiver to store the received data. Since this buffer is limited, the sender has to be told to halt transmission temporarily before the buffer becomes full.

A set of procedures is required to restrict the amount of data the sender can send before waiting for an acknowledgement. This is called flow control.

Error Control:

When data is transmitted, it may be corrupted. We have seen how to tackle this problem by adding redundancy, but errors are still bound to occur. When such an error occurs, the receiver can detect it and, in some cases, even correct it.

If an error is detected by the receiver, it can ask the sender to retransmit the data. This process is called Automatic Repeat Request (ARQ). Thus, error control is based on ARQ, i.e., retransmission of data.

Protocols:

The functions of the data link layer, viz. framing, error control and flow control, are implemented in software. Different protocols are used depending on the channel.

For noiseless channels, there are two protocols: (1) Simplest, (2) Stop-and-wait. For noisy channels, there are three protocols: (1) Stop-and-wait ARQ, (2) Go-Back-N ARQ, (3) Selective repeat ARQ.

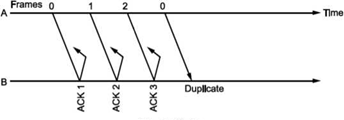

Stop and Wait ARQ (Simplex Protocol)

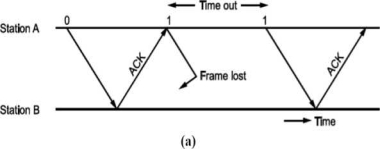

In this technique, the transmitter (A) transmits a frame to the receiver (B) and waits for an acknowledgement from B.

When the acknowledgement from B is received, A transmits the next frame.

Now consider the case where the frame is lost, i.e., not received by B. B will not send an acknowledgement, and A would wait forever. To avoid this, A starts a timer when it transmits a frame.

If the acknowledgement for a frame is not received before the timer expires, A retransmits the frame, as shown in Fig.

The same thing happens when a frame arrives in error and B does not send an acknowledgement: after A times out, it retransmits the frame.

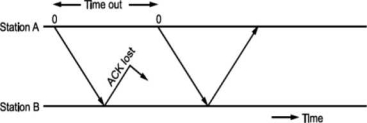

• There is another situation in which a frame is transmitted but its acknowledgement is lost, as shown in Fig.

After the time-out, A sends the same frame again, and B would accept it as a duplicate frame. To handle this, we introduce sequence numbers for frames. When a duplicate frame is received due to the loss of an ACK, it can be discarded.

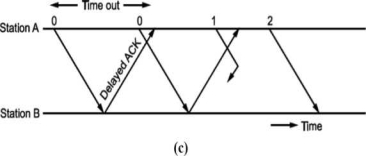

A second ambiguity will arise due to delayed acknowledgement as shown in Fig.

Fig: Stop and wait ARQ

As shown in the figure, an acknowledgement that arrives after frame 1 has been transmitted would be taken as acknowledging frame 1, which was actually lost. We can give sequence numbers to acknowledgements so that the transmitter knows which frame is being acknowledged.

The acknowledgement number is the number of the next frame expected, i.e., when frame 0 is received properly, acknowledgement number 1 is sent, as frame 1 is expected next.

The next question is what sequence numbers should be given to frames and acknowledgements. We cannot use large sequence numbers, because they occupy space in the frame header. Hence, the sequence number should use the minimum number of bits.

In the stop-and-wait ARQ (simplex) protocol, a one-bit sequence number is sufficient. Suppose frame 0 is transmitted; the receiver receives it and sends acknowledgement number 1. Now frame 1 is transmitted, and the receiver acknowledges it with acknowledgement number 0. Since frame 0 has already been received, the number 0 can be reused for the next frame, as shown in Fig.

Fig. Stop-and-wait ARQ with a one-bit sequence number (frame 0 arrives at B and an ACK is sent; frame 1 arrives at B and an ACK is sent)

• This ARQ technique is used in IBM's Binary Synchronous Communication (BISYNC) protocol and XMODEM, a file transfer protocol for modems.
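A small simulation shows why a one-bit sequence number is enough. This is a sketch, assuming no reordering and no delayed ACKs, and modelling losses by listing which transmission numbers lose their frame or their ACK; `stop_and_wait` and its parameters are hypothetical.

```python
from typing import Iterable, List, Tuple


def stop_and_wait(packets: List[str],
                  lost_frames: Iterable[int] = (),
                  lost_acks: Iterable[int] = ()) -> Tuple[List[str], int]:
    """Simulate stop-and-wait ARQ with a 1-bit sequence number.

    lost_frames / lost_acks: transmission numbers (1, 2, 3, ...) whose frame
    or whose ACK is dropped by the channel. Returns the packets delivered to
    B's network layer and the total number of frame transmissions by A."""
    lost_frames, lost_acks = set(lost_frames), set(lost_acks)
    delivered: List[str] = []
    seq = 0        # A: sequence number of the frame currently being sent
    expected = 0   # B: sequence number of the next new frame
    tx = 0
    i = 0          # index of the packet A is currently trying to send
    while i < len(packets):
        tx += 1
        if tx in lost_frames:
            continue               # no ACK comes back: A times out, resends
        if seq == expected:        # new frame: deliver it, flip expected bit
            delivered.append(packets[i])
            expected ^= 1
        # a duplicate is discarded, but B still sends an ACK
        ack = expected             # ACK number = next frame expected
        if tx in lost_acks:
            continue               # A times out and resends the same frame
        if ack != seq:             # ACK names the next frame: move on
            seq ^= 1
            i += 1
    return delivered, tx


print(stop_and_wait(["a", "b", "c"], lost_acks={1}))  # (['a', 'b', 'c'], 4)
```

With `lost_acks={1}`, frame a is sent twice but delivered once: the receiver sees sequence number 0 again while expecting 1, discards the duplicate, and still re-sends the ACK.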

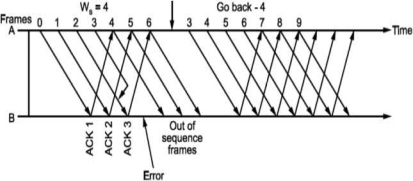

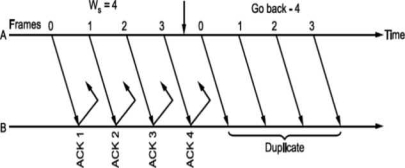

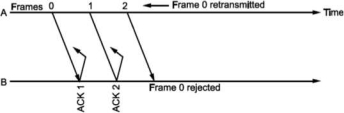

Go-Back N ARQ

Unlike stop-and-wait ARQ, in this technique the transmitter continues sending frames without waiting for an acknowledgement.

The transmitter keeps the transmitted frames in a buffer, called the sending window, until their acknowledgements are received.

Let the number of frames the transmitter can keep in its buffer be Ws. It is called the size of the sender's window.

The window size is selected on the basis of the delay-bandwidth product, so that the channel does not remain idle and efficiency is higher.

The transmitter keeps transmitting the frames in the window (buffer) until the acknowledgement for the first frame in the window is received.

When frames 0 to Ws - 1 have been transmitted, the transmitter waits for the acknowledgement of frame 0. When it is received, the next frame is taken from the network layer into the buffer, i.e., the window slides forward by one frame.

If the acknowledgement for the expected frame (i.e., the first frame in the window) does not come back and a time-out occurs for that frame, all the frames in the buffer are transmitted again. Since N = Ws frames are waiting in the buffer, this technique is called Go-Back-N ARQ.

Thus, Go back N ARQ pipelines the processing of frames to keep the channel busy.

The figure shows Go-Back-N ARQ.

It can be seen that the receiver window size is 1, since only the one frame that is next in order is accepted.

Also, the expected frame number at the receiver end is always less than or equal to the number of the most recently transmitted frame.
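The go-back behaviour can be simulated in a few lines. This is a simplified, round-based sketch rather than a timing-accurate model: the sender transmits its whole window, then learns the receiver's cumulative ACK; sequence numbers are unbounded integers, ACKs are never lost, and `go_back_n` and its parameters are hypothetical.

```python
from typing import Iterable, List, Tuple


def go_back_n(num_frames: int, window: int,
              lost: Iterable[Tuple[int, int]] = ()) -> List[int]:
    """Return the order in which frames are transmitted.

    lost holds (frame, attempt) pairs that the channel drops, e.g. (2, 1)
    means the first transmission of frame 2 is lost."""
    lost_set = set(lost)
    attempts = [0] * num_frames
    base = 0        # sender: oldest unacknowledged frame
    expected = 0    # receiver (window size 1): next in-order frame
    sent: List[int] = []
    while base < num_frames:
        # send every frame currently in the window [base, base + window)
        for seq in range(base, min(base + window, num_frames)):
            attempts[seq] += 1
            sent.append(seq)
            if (seq, attempts[seq]) in lost_set:
                continue            # lost in the channel
            if seq == expected:
                expected += 1       # in order: accepted and delivered
            # any other frame is out of order and discarded by the receiver
        # the cumulative ACK tells the sender which frame the receiver expects;
        # after a timeout everything from that frame onwards is sent again
        base = expected
    return sent


print(go_back_n(6, 4, lost={(2, 1)}))  # [0, 1, 2, 3, 2, 3, 4, 5]
```

Losing the first copy of frame 2 in a window of 4 forces frame 3 to be resent too, even though it arrived correctly, because the receiver window of size 1 discarded it.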

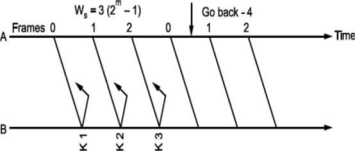

What should be the maximum window size $W_s$ at the transmitter? It depends on the number of bits m used in the sequence number field of the frame. It might seem that the maximum window size could be $W_s = 2^m$, i.e., $W_s = 8$ if 3 bits are reserved for the sequence number, but this does not work. To see why, consider the situation shown in Fig., where m = 2, so the sequence numbers are 0 to 3 and $W_s = 2^2 = 4$.

If all the transmitted frames are received but all their acknowledgements are lost, the transmitter will retransmit the frames in its buffer, and the receiver, which is now expecting frame 0 again, will accept them as if they were new frames! To avoid this problem, we reduce the window size by 1, i.e., $W_s = 2^2 - 1 = 3$, making it Go-Back-3, while the sequence numbers are still maintained from 0 to 3. Now consider Fig. (c), where the acknowledgements of all the received frames 0, 1, 2 are lost and the receiver is expecting frame 3. Even if frames 0, 1, 2 are transmitted again, they will not be accepted, as their sequence numbers do not match the expected one. Hence, the window size should be $W_s = 2^m - 1$ for Go-Back-N ARQ.
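The window-size argument can be checked mechanically. This sketch encodes only the worst case described above (all frames of a full window arrive, all ACKs are lost, frame 0 is resent); `gbn_accepts_duplicate` and `max_gbn_window` are hypothetical helpers.

```python
def gbn_accepts_duplicate(m: int, ws: int) -> bool:
    """Worst case from the text: frames numbered 0 .. ws-1 (modulo 2**m) all
    arrive, every ACK is lost, and the sender times out and resends frame 0.
    Returns True if the receiver would wrongly accept that old frame as new."""
    expected = ws % (2 ** m)  # receiver now waits for the frame after ws-1
    return expected == 0      # the resent frame carries sequence number 0


def max_gbn_window(m: int) -> int:
    """Largest window size that avoids the duplicate in this scenario."""
    return max(ws for ws in range(1, 2 ** m + 1)
               if not gbn_accepts_duplicate(m, ws))


for m in (2, 3):
    print(m, max_gbn_window(m))  # prints "2 3" and then "3 7"
```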

Go-Back-N can be implemented in both directions, i.e., information and acknowledgements can be sent together, which is called piggybacking. This improves bandwidth utilization. Fig. (c) shows the scheme.

Fig -GoBackN ARQ

Note that both the transmitter and the receiver need sending and receiving windows. Go-Back-N ARQ is implemented in the HDLC protocol and the V.42 modem standard.

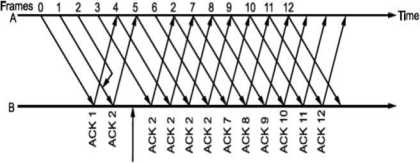

Selective Repeat ARQ

Go Back N ARQ is inefficient when channels have high error rates.

Instead of retransmitting all the frames in the buffer, we can retransmit only the frame in error.

For this, we have to increase the window size of the receiver so that it can accept frames that are error-free but out of order (not in sequence).

Normally, when the acknowledgement for the first frame in the transmit window is received, the transmit window advances. Similarly, the receive window advances whenever the acknowledgement for the first frame in it is sent.

Whenever a frame is lost or in error and no acknowledgement is sent for it, the transmitter retransmits the frame when its timer expires. When the receiver accepts a subsequent frame that is out of sequence, it sends a negative acknowledgement (NAK) carrying the number of the frame it is expecting. Until that frame arrives, the receiver keeps accumulating the frames received in its window. Then it sends the acknowledgement for the retransmitted frame that was in error. This is shown in the figure below.
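The receiver side of selective repeat can be sketched as follows. This is an illustration under simplifying assumptions: sequence numbers are unbounded integers, only error-free frames are passed in, and one NAK is sent per missing frame; `selective_repeat_receiver` is a hypothetical name.

```python
from typing import Iterable, List, Optional, Tuple


def selective_repeat_receiver(arrivals: Iterable[int],
                              window: int) -> Tuple[List[int], List[int]]:
    """Buffer error-free but out-of-order frames inside the receive window and
    deliver them in order. Returns (frames delivered in order, NAKs sent)."""
    base = 0                       # first frame of the receive window
    buffered = set()
    delivered: List[int] = []
    naks: List[int] = []
    nak_sent_for: Optional[int] = None
    for seq in arrivals:
        if not base <= seq < base + window:
            continue               # duplicate or beyond the window: discard
        if seq != base and nak_sent_for != base:
            naks.append(base)      # out of order: ask once for the missing frame
            nak_sent_for = base
        buffered.add(seq)
        while base in buffered:    # slide the window over consecutive frames
            buffered.remove(base)
            delivered.append(base)
            base += 1
    return delivered, naks


print(selective_repeat_receiver([0, 1, 3, 4, 2, 5], window=4))
```

Frames 3 and 4 arrive before 2, so the receiver NAKs frame 2, buffers 3 and 4, and delivers 2, 3, 4 in order once frame 2 arrives.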

To calculate the window size for sequence numbers of m bits, consider the situation shown in Fig.

Let us select the window size for m = 2 as $W_s = 2^m - 1 = 3$, for both the transmitter and the receiver. Let frames 0, 1, 2 be in the buffer and be transmitted. They are received correctly, but their acknowledgements are lost. The timer for frame 0 expires, hence frame 0 is retransmitted. The receiver window (now covering frames 3, 0, 1) is expecting frame 0, which it accepts as a new frame, but actually it is a duplicate!

Fig. Selective Repeat ARQ

Thus, the window sizes at the transmitter and receiver are too large. Hence, we select $W_s = W_r = 2^m / 2 = 2^{m-1}$. In the above case, $W_s = W_r = 2^{2-1} = 2$. Sequence numbers for frames will be 0, 1, 2, 3, as shown in Fig. 2.19 (c). The transmitter transmits frames 0, 1, but because of lost acknowledgements, the timer for frame 0 expires and frame 0 is retransmitted. At the receiver, the expected frames are {2, 3}. Hence, frame 0 is rejected, as it is a duplicate and not part of the receiver window.
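The same worst case can be checked for selective repeat, where the receive window is larger than 1. This sketch assumes sequence numbers modulo 2^m and the all-ACKs-lost scenario from the text; `sr_accepts_duplicate` and `max_sr_window` are hypothetical helpers.

```python
def sr_accepts_duplicate(m: int, ws: int, wr: int) -> bool:
    """Worst case from the text: frames numbered 0 .. ws-1 (modulo 2**m) all
    arrive, every ACK is lost, and the sender resends them. Returns True if
    any old sequence number falls inside the receiver's advanced window."""
    modulus = 2 ** m
    receive_window = {(ws + k) % modulus for k in range(wr)}  # next wr numbers
    old_numbers = {seq % modulus for seq in range(ws)}
    return bool(receive_window & old_numbers)


def max_sr_window(m: int) -> int:
    """Largest equal window (ws = wr) that avoids the duplicate."""
    return max(w for w in range(1, 2 ** m + 1)
               if not sr_accepts_duplicate(m, w, w))


print(sr_accepts_duplicate(2, 3, 3))        # True: old frame 0 is in {3, 0, 1}
print(max_sr_window(2), max_sr_window(3))   # 2 4
```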

The selective repeat ARQ is used in TCP (Transmission Control Protocol) and SSCOP (Service Specific Connection Oriented Protocol).

The protocols discussed here assume that data flows in only one direction, from sender to receiver. In practice, however, the flow is bidirectional. Hence, when the flow is bidirectional, we use piggybacking, i.e., sending the acknowledgement (positive or negative) along with any data to be sent to the other end.

Error Detection and Correction

Whenever bits flow from one point to another, they are subject to unpredictable changes because of interference. This interference can change the shape of signals. In a single-bit error, a 0 is changed to a 1 or a 1 to a 0. In a burst error, multiple bits are changed. For example, a 1/100 s burst of impulse noise on a transmission with a data rate of 1200 bps might change all or some of the 12 bits of information.

Single Bit Error: It means that only 1 bit of a given data unit (as a byte, character or packet) is changed from 1 to 0 or 0 to 1.

The figure below shows the effect of a single-bit error on a data unit. To understand the impact of the change, imagine that each group of 8 bits is an ASCII character with a 0 bit added to the left. In Fig. 2.7, 00000010 (ASCII STX) was sent, meaning start of text, but 00001010 (ASCII LF) was received, meaning line feed.

Single-bit errors are the least likely type of error in serial data transmission. To understand why, imagine data sent at 1 Mbps. This means that each bit lasts only 1/1,000,000 s, or 1 μs. For a single-bit error to occur, the noise must have a duration of only 1 μs, which is very rare. Noise normally lasts much longer than this.

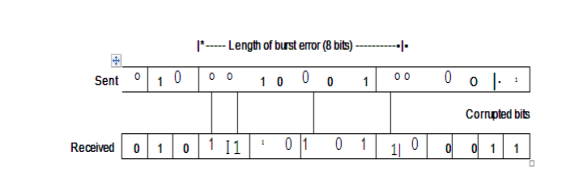

Burst Error: The term means that 2 or more bits in the data unit have changed from 1 to 0 or from 0 to 1.

In this case 0100010001000011 was sent, but 0101110101100011 was received. A burst error does not necessarily mean that the errors occur in consecutive bits. The length of the burst is measured from the first corrupted bit to the last corrupted bit. Some bits in between may not have been corrupted.
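Burst length, as defined above, can be computed directly. A minimal sketch (the helper name `burst_length` is hypothetical), applied to the sent and received patterns from this example:

```python
def burst_length(sent: str, received: str) -> int:
    """Bits from the first corrupted bit to the last corrupted bit, inclusive
    (0 if nothing was corrupted)."""
    if len(sent) != len(received):
        raise ValueError("bit strings must have the same length")
    errors = [i for i, (s, r) in enumerate(zip(sent, received)) if s != r]
    return errors[-1] - errors[0] + 1 if errors else 0


print(burst_length("0100010001000011", "0101110101100011"))  # 8
```

Only 4 of the 8 bits in this burst (positions 3, 4, 7 and 10, counting from 0 on the left) are actually corrupted.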

A burst error is more likely to occur than a single-bit error. The duration of noise is normally longer than the duration of 1 bit, which means that when noise affects data, it affects a set of bits. The number of bits affected depends on the data rate and the duration of the noise. For example, if we are sending data at 1 kbps, a noise of 1/100 s can affect 10 bits; if we are sending data at 1 Mbps, the same noise can affect 10,000 bits.
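The arithmetic behind these figures is the data rate multiplied by the noise duration (the symbols $N$ for bits affected, $R$ for data rate and $T$ for noise duration are introduced here for illustration):

$$N = R \times T: \qquad 10^3\ \text{bps} \times \tfrac{1}{100}\ \text{s} = 10\ \text{bits}, \qquad 10^6\ \text{bps} \times \tfrac{1}{100}\ \text{s} = 10^4\ \text{bits}$$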

Redundancy:

The main concept in detecting errors is redundancy. To detect errors, we need to send some extra bits with our data. These redundant bits are added by sender and removed by the receiver. Their presence allows the receiver to detect corrupted bits.

Detection Versus Correction:

The correction of error is more difficult than detection. In error detection, we are looking only to see if any error has occurred. The answer is a simple yes or no. We are not even interested in the number of errors. A single bit error is the same for us as a burst error.

In error correction, we need to know the exact number of bits that are corrupted and, more importantly, their location in the message. The number of errors and the size of the message are important factors. If we need to correct a single error in an 8-bit data unit, we need to consider eight possible error locations; if we need to correct two errors in a data unit of the same size, we need to consider 28 possibilities. You can imagine the receiver's difficulty in finding 10 errors in a data unit of 1000 bits.
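The counts in this paragraph are binomial coefficients: the number of ways of choosing which k of n bits are in error. A short check with Python's standard `math.comb` (available from Python 3.8); `error_patterns` is a hypothetical helper name:

```python
from math import comb


def error_patterns(n: int, k: int) -> int:
    """Number of ways k corrupted bits can be placed in an n-bit data unit."""
    return comb(n, k)


for n, k in [(8, 1), (8, 2), (1000, 10)]:
    print(f"{k} error(s) in {n} bits: {error_patterns(n, k)} possibilities")
```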

Forward Error Correction Versus Retransmission:

There are two main methods of error correction. Forward error correction is the process in which the receiver tries to guess the message by using redundant bits. This is possible, as we see later, if the number of errors is small.

Correction by retransmission is a technique in which the receiver detects the occurrence of an error and asks the sender to resend the message. Resending is repeated until a message arrives that the receiver believes is error-free.

Parity bits are used for error detection; a single parity bit detects single-bit errors in computer network transmission.

Parity Check Codes: These are the simplest possible block codes. They are generated by adding one bit to the message bits. They can be even parity check codes or odd parity check codes. An even parity check code appends a 1 to the message if the number of 1s in the message is odd, and a 0 if it is even, so that every codeword has an even number of 1s. An odd parity check code does the opposite, so that every codeword has an odd number of 1s.

Example:

| Message | Code | Parity bit |
|---|---|---|
| 100101 | 1001011 | 1 |
| 110101 | 1101010 | 0 |

Similarly,

Odd Parity Check Codes:

| Message | Code | Parity bit |
|---|---|---|
| 010011 | 0100110 | 0 |
| 010001 | 0100011 | 1 |

If a single error occurs in these codewords, it can be detected at the receiver end.
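The parity rule and the examples above can be reproduced with a short sketch; `parity_bit`, `encode` and `check` are hypothetical names, and bits are represented as strings of '0' and '1'.

```python
def parity_bit(message: str, even: bool = True) -> str:
    """Bit that makes the total count of 1s even (even=True) or odd (even=False)."""
    ones_is_odd = message.count("1") % 2 == 1
    if even:
        return "1" if ones_is_odd else "0"
    return "0" if ones_is_odd else "1"


def encode(message: str, even: bool = True) -> str:
    """Append the parity bit to the message bits."""
    return message + parity_bit(message, even)


def check(codeword: str, even: bool = True) -> bool:
    """True if the received codeword still has the agreed parity."""
    ones_is_odd = codeword.count("1") % 2 == 1
    return ones_is_odd != even


print(encode("100101"), encode("010001", even=False))  # 1001011 0100011
```

Flipping any one bit of 1001011 makes the count of 1s odd, so `check` reports the error; flipping two bits (for example, 0101011) keeps the count even, and the error goes undetected.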

Weight of a Codeword: The number of non-zero symbols in a codeword is called weight of the codeword.

Hamming Codes

Hamming codes are error-correcting codes that can be used to detect and correct bit errors that can occur when computer data is moved or stored.

Hamming Distance: It is the number of symbols in which two codewords differ.

C1 = 101111

C2 = 000010

The Hamming distance between C1 and C2 is 4, denoted as d(C1, C2) = 4.

The minimum Hamming distance between any two codewords of a code is called the minimum Hamming distance of that code. It is denoted as dmin.

A linear code: It is a code that has the following properties.

• The sum (modulo-2) of any two codewords in the code yields another codeword of that code.

• The all-zero codeword is always present.

• The minimum Hamming distance between any two codewords is equal to the minimum weight of any non-zero codeword.

Example

Consider the following code. C = {000, 111}

Solution:

It consists of two codewords.

Weight of 000 is 0.

Weight of 111 is 3.

Hamming distance between the two codewords = 3.

Minimum Hamming distance of the code = 3.

It is a linear code, since the sum of the two codewords yields one of the codewords, 111.

Example

Consider a code. C = {000, 010, 001, 111}

| Codeword | Weight |
|---|---|
| 000 | 0 |
| 010 | 1 |
| 001 | 1 |
| 111 | 3 |

Solution:

Minimum Hamming distance = 1

It is not a linear code, as the sum of 001 and 010 does not yield a valid codeword, i.e., 011 is not a codeword of this code.

Minimum Hamming Distance (dmin): The minimum Hamming distance of a linear code is equal to the minimum weight of the non-zero codewords in that code.

Consider a code C = {000, 010, 101, 111}.

| Codeword | Weight |
|---|---|
| 000 | 0 |
| 010 | 1 |
| 101 | 2 |
| 111 | 3 |

Since the minimum weight of the non-zero codewords is 1, the minimum Hamming distance is dmin = 1.
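The definitions of weight, Hamming distance, dmin and linearity can be checked on the three example codes. This is a sketch with hypothetical helper names, treating codewords as equal-length bit strings and code addition as bitwise modulo-2 (XOR) addition.

```python
from itertools import combinations
from typing import Iterable


def weight(c: str) -> int:
    """Number of non-zero symbols in the codeword."""
    return c.count("1")


def hamming_distance(a: str, b: str) -> int:
    """Number of positions in which two codewords differ."""
    if len(a) != len(b):
        raise ValueError("codewords must have the same length")
    return sum(x != y for x, y in zip(a, b))


def d_min(code: Iterable[str]) -> int:
    """Minimum Hamming distance over all pairs of distinct codewords."""
    return min(hamming_distance(a, b) for a, b in combinations(code, 2))


def xor(a: str, b: str) -> str:
    """Modulo-2 sum of two codewords."""
    return "".join("1" if x != y else "0" for x, y in zip(a, b))


def is_linear(code: Iterable[str]) -> bool:
    """The sum of any two codewords (including a word with itself) is a codeword."""
    words = set(code)
    return all(xor(a, b) in words for a in words for b in words)


for code in (["000", "111"], ["000", "010", "001", "111"], ["000", "010", "101", "111"]):
    print(code, "dmin =", d_min(code), "linear =", is_linear(code))
```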

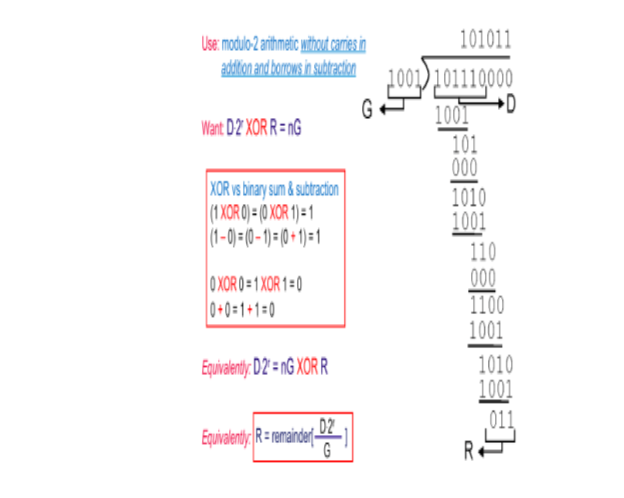

CRC

It is an error detection method that is widely used for detecting single-bit as well as multi-bit errors during data transmission over any transmission channel.

In CRC, the data bits for error checking are considered to be a binary number D. A generator bit pattern G of length r + 1 bits is used to generate a CRC bit pattern R of length r bits, which is appended to D in the frame. R is the remainder of the division $D \cdot 2^r / G$ in modulo-2 arithmetic (without carries in addition and borrows in subtraction).

The generated CRC bit pattern R satisfies the following condition: <D, R> (the binary number formed by concatenating D and R) is exactly divisible by G in modulo-2 arithmetic. The receiving side uses the same generator bit pattern G to compute the division <D, R>/G. If the remainder of this division is zero, the frame passes the CRC check and its content is delivered to the layer above. If not, an error is detected.

While parity bit checking performs very badly for burst errors, CRC can detect all bursts of length less than or equal to r.
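The modulo-2 division can be sketched as long division in which subtraction is XOR. This is an illustration on bit strings (real implementations use shift registers or lookup tables); the generator 10011 and data 1101011011 are example values chosen here, not taken from the figure, and the function names are hypothetical.

```python
def mod2_remainder(dividend: str, generator: str) -> str:
    """Remainder of dividend / generator in modulo-2 arithmetic (XOR, no carries)."""
    r = len(generator) - 1
    bits = list(dividend)
    for i in range(len(bits) - r):
        if bits[i] == "1":  # leading bit is 1: "subtract" (XOR) the generator here
            for j, g in enumerate(generator):
                bits[i + j] = "0" if bits[i + j] == g else "1"
    return "".join(bits[-r:])


def crc_bits(data: str, generator: str) -> str:
    """R = remainder of D * 2^r / G: append r zeros to D, then divide."""
    r = len(generator) - 1
    return mod2_remainder(data + "0" * r, generator)


def crc_ok(frame: str, generator: str) -> bool:
    """Receiver check: <D, R> must leave a zero remainder when divided by G."""
    return "1" not in mod2_remainder(frame, generator)


def flip_bits(bits: str, positions: set) -> str:
    """Simulate channel errors by inverting the bits at the given positions."""
    return "".join(("1" if b == "0" else "0") if i in positions else b
                   for i, b in enumerate(bits))


G, D = "10011", "1101011011"
R = crc_bits(D, G)
print(R)                                          # 1110
print(crc_ok(D + R, G))                           # True
print(crc_ok(flip_bits(D + R, {2, 3, 4, 5}), G))  # False: burst of length 4 = r
```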

Working of CRC

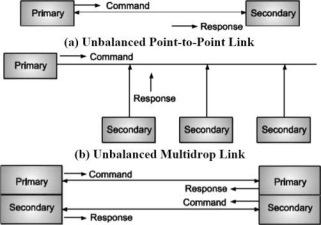

High Level Data Link Control (HDLC)

It is the most widely used DLL protocol. It has a set of functions that provide communication service to the network layer.

HDLC supports a variety of applications, for which it defines three types of stations, two link configurations and three data transfer modes.

Types of Stations:

Primary Station: It controls operation of link. It issues commands.

Secondary Station: It operates under the control of the primary station, which issues command frames to it. The frames transmitted by a secondary station are called responses.

Combined Station: It has the features of both primary and secondary stations, i.e., it issues both commands and responses.

Types of Configurations:

Unbalanced Configuration: It has one primary and one or more secondary stations. It supports both full-duplex and half-duplex transmission.

Balanced Configuration: It consists of two combined stations and supports half-duplex and full-duplex transmission.

These configurations are shown in Fig.

Types of Data Transfer Modes:

Normal Response Mode (NRM): Used with unbalanced configuration. Primary can initiate data transfer to a secondary. Secondary can only transfer data in response to command from primary.

Asynchronous Balanced Mode (ABM): Used with balanced configuration. Any one of the combined stations can initiate transmission without the permission of other station.

Asynchronous Response Mode (ARM): Used with unbalanced configuration. A secondary can initiate transmission without permission from the primary, but the primary retains control of the link.

NRM can be used on multi drop lines and point-to-point links. ABM is most widely used. ARM is rarely used.

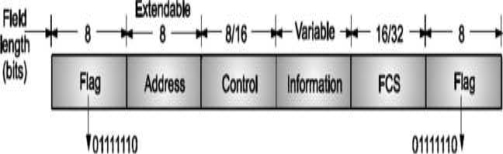

HDLC Frame Format

The functionality of a protocol depends on the control fields that are defined in the header and trailer.

The various data transfer modes are determined by the frame structure.

The information field is enclosed by a header consisting of flag, address and control fields, and a trailer consisting of a checksum (frame check sequence) and a flag.

The flag fields, which are 8 bits long, delimit the frame at both ends with the pattern 01111110, as discussed before. Bit stuffing is used to achieve data transparency.

The addressing function of the DLL is to identify the station that transmitted the frame and the station that will receive it. The address field specifies this. It can be extended beyond 8 bits in multiples of 8 bits. If this field is all 1s, the frame is broadcast to all secondaries.

Point-To-Point Protocol (PPP)

The Point-to-Point Protocol (PPP) originally emerged as an encapsulation protocol for transporting IP traffic over point-to-point links. PPP also established a standard for the assignment and management of IP addresses, asynchronous (start/stop) and bit-oriented synchronous encapsulation, network protocol multiplexing, link configuration, link quality testing, error detection, and option negotiation for such capabilities as network layer address negotiation and data-compression negotiation. PPP supports these functions by providing an extensible Link Control Protocol (LCP) and a family of Network Control Protocols (NCPs) to negotiate optional configuration parameters and facilities.

PPP Components

PPP provides a method for transmitting datagrams over serial point-to-point links. PPP contains three main components:

A method for encapsulating datagrams over serial links. PPP uses the High-Level Data Link Control (HDLC) protocol as a basis for encapsulating datagrams over point-to-point links.

An extensible LCP to establish, configure, and test the data link connection.

A family of NCPs for establishing and configuring different network layer protocols. PPP is designed to allow the simultaneous use of multiple network layer protocols.

PPP Frame Format

The PPP frame format appears as shown in Fig.

| Field | Flag | Address | Control | Protocol | Data | FCS |
|---|---|---|---|---|---|---|
| Length (bytes) | 1 | 1 | 1 | 2 | Variable | 2 or 4 |

Fig. Six fields make up the PPP frame

The following descriptions summarize the PPP frame fields illustrated in Fig.

Flag: A single byte that indicates the beginning or end of a frame. The flag field consists of the binary sequence 01111110.

Address: A single byte that contains the binary sequence 11111111, the standard broadcast address. PPP does not assign individual station addresses.

Control: A single byte that contains the binary sequence 00000011, which calls for transmission of user data in an unsequenced frame. A connectionless link service similar to that of Logical Link Control (LLC) Type 1 is provided.

Protocol: Two bytes that identify the protocol encapsulated in the information field of the frame. The most up-to-date values of the protocol field are specified in the most recent Assigned Numbers Request for Comments (RFC).

Data: Zero or more bytes that contain the datagram for the protocol specified in the protocol field. The end of the information field is found by locating the closing flag sequence and allowing 2 bytes for the FCS field. The default maximum length of the information field is 1,500 bytes. By prior agreement, consenting PPP implementations can use other values for the maximum information field length.

Frame check sequence (FCS): Normally 16 bits (2 bytes). By prior agreement, consenting PPP implementations can use a 32-bit (4-byte) FCS for improved error detection.

The LCP can negotiate modifications to the standard PPP frame structure. Modified frames, however, always will be clearly distinguishable from standard frames.
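A PPP frame with the field values described above can be assembled in a few lines. This sketch assumes the 16-bit FCS algorithm specified in RFC 1662 (reflected CRC-CCITT, initial value 0xFFFF, complemented, sent least significant byte first) and omits the stuffing a real link applies for transparency; 0x0021 is the protocol-field value assigned to IPv4, and the function names are hypothetical.

```python
import struct

FLAG, ADDRESS, CONTROL = 0x7E, 0xFF, 0x03  # 01111110, 11111111, 00000011
PROTO_IPV4 = 0x0021                        # protocol-field value for IPv4


def fcs16(data: bytes) -> int:
    """16-bit FCS: reflected CRC-CCITT (poly 0x8408), init 0xFFFF, complemented."""
    fcs = 0xFFFF
    for byte in data:
        fcs ^= byte
        for _ in range(8):
            fcs = (fcs >> 1) ^ 0x8408 if fcs & 1 else fcs >> 1
    return fcs ^ 0xFFFF


def ppp_frame(protocol: int, payload: bytes, max_len: int = 1500) -> bytes:
    """Build Flag | Address | Control | Protocol | Data | FCS | Flag.
    Stuffing for transparency is deliberately omitted in this sketch."""
    if len(payload) > max_len:
        raise ValueError("payload longer than the agreed maximum")
    body = bytes([ADDRESS, CONTROL]) + struct.pack(">H", protocol) + payload
    fcs = struct.pack("<H", fcs16(body))  # FCS covers address..data, LSB first
    return bytes([FLAG]) + body + fcs + bytes([FLAG])


frame = ppp_frame(PROTO_IPV4, b"hello")
print(frame.hex(" "))
```

The FCS is computed over the address, control, protocol and data fields only; the flags are excluded, matching the frame layout in the figure.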

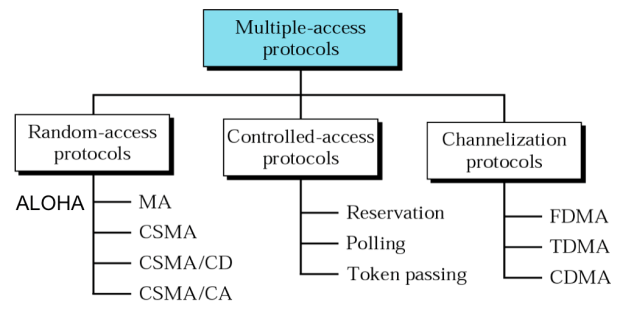

Multiple Access Protocols

Multiple access protocols regulate how different nodes sharing the same link gain transmission access to the link. MAC addresses are used in frame headers to identify the source and destination nodes on a single link. Because of this, the data link layer is also sometimes referred to as the MAC layer. The link layer uses the physical layer service to put bits on the medium.

It is between the network layer and the physical layer.

There are three classes of multiple-access protocols in the literature:

• Channel partitioning (channelization) protocols

• Random access protocols

• Controlled access protocols (taking turns)

ALOHA

It is a random-access scheme for transmitting information for terminals sharing the same channel.

It is simple in operation.

Information is transmitted over the shared channel as soon as it becomes available.

If there is a collision because more than one station transmits simultaneously, the stations wait for a random amount of time before transmitting the information again. This is called back-off.

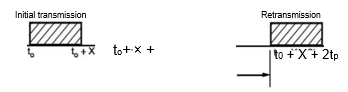

Efficiency of ALOHA:

Let L be the length of a frame in bits (constant) and R the rate of transmission. Then the frame time is

$$X = \frac{L}{R}$$

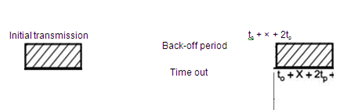

Let some frame arrive at time $t_0$ and end at $t_0 + X$.

This frame will collide if there is a transmission from another station between $t_0 - X$ and $t_0 + X$, as shown in Fig.

$$\text{Vulnerable time} = (t_0 + X) - (t_0 - X) = 2X$$

Let G be the total arrival rate of the system in frames per X seconds (i.e., per frame time), counting both new and retransmitted frames. G is also called the total load of the system.

Assuming that back-off spreads retransmissions so that new and repeated frame transmissions are equally likely to occur, the number of frames transmitted in a time interval has a Poisson distribution, with an average of 2G arrivals per 2X seconds.

Hence, the probability that k frames are generated during the vulnerable time of 2X seconds is

$$P[k \text{ transmissions in } 2X \text{ seconds}] = \frac{(2G)^k}{k!}\, e^{-2G}$$

The throughput S is therefore the total arrival rate G times the probability of a successful transmission:

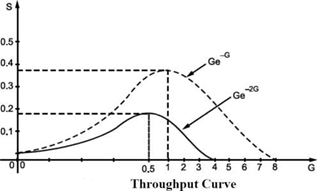

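$$
\begin{aligned}
S &= G \times P[\text{no collision}] \\
  &= G \times P[0 \text{ transmissions in } 2X \text{ seconds}] \\
  &= G \cdot \frac{(2G)^0}{0!}\, e^{-2G} \\
  &= G e^{-2G}
\end{aligned}
$$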

The plot of S versus G is shown in the figure.

Setting $\dfrac{dS}{dG} = e^{-2G}(1 - 2G) = 0$ shows that S is maximum at $G = 0.5$, where $S = \dfrac{1}{2e} \approx 0.184$. That is, pure ALOHA can achieve a throughput of only 18.4%.
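As a quick numerical check of this result, the sketch below evaluates $S = Ge^{-2G}$ on a grid of offered loads and picks the maximum. It only illustrates the formula above; the function name is hypothetical.

```python
import math

def pure_aloha_throughput(G: float) -> float:
    """S = G * e^(-2G): offered load times P[no other frame in the 2X vulnerable time]."""
    return G * math.exp(-2 * G)

# Scan the offered load G from 0 to 3 frames per frame time in steps of 0.01.
loads = [i / 100 for i in range(301)]
best_G = max(loads, key=pure_aloha_throughput)
print(best_G, round(pure_aloha_throughput(best_G), 3))  # 0.5 0.184
print(round(1 / (2 * math.e), 4))                        # 0.1839
```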

Slotted ALOHA: The performance of ALOHA can be improved by restricting when stations may transmit, i.e., stations transmit only at fixed, synchronized instants. This reduces the probability of collisions.

All stations keep track of the transmission time slots and are allowed to initiate transmission only at the beginning of a slot.

The vulnerable time is reduced to one slot, from $t_0 - X$ to $t_0$, i.e., X seconds.

There are, on average, G arrivals in X seconds (G is the total arrival rate).

$$
\begin{aligned}
S &= G \times P[\text{no collision}] \\
  &= G \times P[0 \text{ transmissions in } X \text{ seconds}] \\
  &= G e^{-G}
\end{aligned}
$$

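The above equation is plotted in Fig. 3.5. Setting $\dfrac{dS}{dG} = e^{-G}(1 - G) = 0$ gives the maximum at $G = 1$, so slotted ALOHA has a maximum throughput of $S = \dfrac{1}{e} \approx 36.8\%$ at $G = 1$.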
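The formula can also be checked by a small Monte Carlo sketch. It makes the same assumption as the derivation: the number of frames offered in each slot is Poisson with mean G. It counts the fraction of slots that carry exactly one frame. Function names are hypothetical. Knuth's simple method draws the Poisson samples, so the sketch needs only the standard library.

```python
import math
import random

def poisson(rng: random.Random, lam: float) -> int:
    """Knuth's method for a Poisson(lam) sample (adequate for small lam)."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1

def simulate_slotted_aloha(G: float, slots: int = 200_000, seed: int = 1) -> float:
    """Throughput S = fraction of slots in which exactly one frame is sent."""
    rng = random.Random(seed)
    successes = sum(1 for _ in range(slots) if poisson(rng, G) == 1)
    return successes / slots

for G in (0.5, 1.0, 2.0):
    # simulated throughput next to the formula G * e^(-G)
    print(G, round(simulate_slotted_aloha(G), 3), round(G * math.exp(-G), 3))
```

The simulated column should track $Ge^{-G}$ to within sampling noise, with the peak near 0.368 at G = 1.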

Carrier Sense Multiple Access Protocols

Protocols in which stations listen for a carrier (i.e., an ongoing transmission on the channel) and act accordingly are called Carrier Sense Protocols.

Following are some carrier sense protocols:

1-Persistent CSMA:

When a station has some data to send it listens to the channel.

If the channel is busy, the station keeps sensing it continuously until it becomes idle.

If the channel is idle, the station transmits the frame.

It is called 1-persistent because whenever channel is idle the station transmits with probability 1.

Non-Persistent CSMA:

When a station has data to send, it listens to the channel and, if the channel is idle, sends the data.

If the channel is busy, it waits until the channel becomes idle.

However, it does not sense the channel continuously as in 1-persistent CSMA. Instead, it waits for a random period and then senses the channel again.
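The two rules can be contrasted with a small sketch. It assumes a hypothetical channel model: time advances in integer ticks, and `is_busy(t)` reports whether another station's frame occupies the channel. The `max_wait` bound on the random wait is also an assumption, not part of the protocol description.

```python
import random
from typing import Callable

Channel = Callable[[int], bool]   # is_busy(t) -> True while another frame is on the channel

def one_persistent(is_busy: Channel, arrival: int) -> int:
    """1-persistent: sense every tick and transmit (probability 1) at the first idle tick."""
    t = arrival
    while is_busy(t):
        t += 1                          # keep sensing continuously
    return t

def non_persistent(is_busy: Channel, arrival: int, rng: random.Random,
                   max_wait: int = 5) -> int:
    """Non-persistent: if busy, stop sensing, wait a random time, then sense again."""
    t = arrival
    while is_busy(t):
        t += rng.randint(1, max_wait)   # random wait in ticks before the next sense
    return t

def busy(t: int) -> bool:
    return 0 <= t < 10                  # another station's frame occupies ticks 0..9

# Stations A and B get frames at ticks 3 and 6 while the channel is busy.
print(one_persistent(busy, 3), one_persistent(busy, 6))   # 10 10: both send at once, a collision
rng = random.Random(42)
print(non_persistent(busy, 3, rng), non_persistent(busy, 6, rng))
```

With 1-persistent CSMA, every station waiting on a busy channel transmits the moment it goes idle, so waiting stations collide. The random wait in non-persistent CSMA spreads those stations out, at the cost of sometimes leaving the channel idle.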

p-Persistent CSMA:

It applies to slotted channels.

When a station is ready to transmit data and the channel is idle, it transmits with probability p, or defers until the next slot with probability q = 1 - p. If that slot is also idle, it again transmits or defers with probabilities p and q. This process is repeated until either the frame is transmitted or another station has started transmitting.

If the channel is busy, it waits until next slot and repeats above step.
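The p-persistent rule can be sketched in the same style. It assumes a hypothetical `is_busy(slot)` channel model in which another station starting to transmit simply makes the following slots busy. The function returns the slot in which the station finally transmits.

```python
import random
from typing import Callable

def p_persistent(is_busy: Callable[[int], bool], slot: int, p: float,
                 rng: random.Random) -> int:
    """Return the slot in which the station transmits under p-persistent CSMA."""
    while True:
        if is_busy(slot):
            slot += 1               # busy: wait for the next slot and repeat
        elif rng.random() < p:
            return slot             # idle: transmit with probability p
        else:
            slot += 1               # idle: defer to the next slot with probability q = 1 - p

def busy(slot: int) -> bool:
    return slot < 4                 # assumed: channel busy during slots 0..3

rng = random.Random(1)
print(p_persistent(busy, 0, 1.0, rng))          # 4 (p = 1 behaves like 1-persistent)
delays = [p_persistent(busy, 0, 0.25, rng) - 4 for _ in range(10_000)]
print(sum(delays) / len(delays))                # close to (1 - p) / p = 3 deferred idle slots
```

A smaller p lowers the chance that several waiting stations pick the same idle slot. It also adds, on average, (1 - p)/p idle slots of delay.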

Controlled Access

In controlled access, the stations consult one another to find which station has the right to send. Three popular methods are reservation, polling and token passing; the reservation method is described below.

Reservation

In the reservation method, a station needs to make a reservation before sending data. Time is divided into intervals. In each interval, a reservation frame precedes the data frames sent in that interval.

If there are N stations in the system, there are exactly N reservation minislots in the reservation frame. Each minislot belongs to one station. When a station needs to send a data frame, it makes a reservation in its own minislot. The stations that have made reservations can send their data frames after the reservation frame.

Fig: Reservation access (reservation frames, each followed by the data frames of the stations that reserved)

The figure above shows a situation with five stations and a five-minislot reservation frame. In the first interval, only stations 1, 3 and 4 have made reservations. In the second interval, only station 1 has made a reservation.
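The following sketch turns the reservation bits of each interval into the resulting sequence of frames on the channel. Its assumptions: the stations that reserved send in minislot order, and `R[...]`/`D<n>` are hypothetical labels for the reservation frame and station n's data frame.

```python
def reservation_schedule(reservation_frames: list[list[int]]) -> list[str]:
    """Expand each interval into its reservation frame followed by the reserved data frames.

    reservation_frames[i][s] is 1 if station s + 1 set its minislot in interval i.
    """
    timeline: list[str] = []
    for bits in reservation_frames:
        timeline.append("R[" + "".join(str(b) for b in bits) + "]")  # reservation frame
        for station, reserved in enumerate(bits, start=1):
            if reserved:
                timeline.append(f"D{station}")   # data frame of a station that reserved
    return timeline

# The two intervals described in the text: five stations, five minislots each.
intervals = [[1, 0, 1, 1, 0],   # interval 1: stations 1, 3 and 4 reserve
             [1, 0, 0, 0, 0]]   # interval 2: only station 1 reserves
print(" ".join(reservation_schedule(intervals)))
# R[10110] D1 D3 D4 R[10000] D1
```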

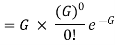

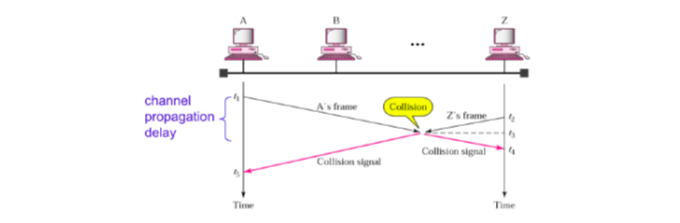

Note: The figure below shows why a collision is not detected by CSMA.

Carrier Sense Multiple Access with Collision Detection (CSMA/CD)

A Shared Medium

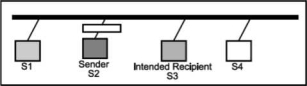

An Ethernet network may be used to give a group of attached nodes shared access to the physical medium that connects them. These nodes are said to form a collision domain. All frames sent on the medium are physically received by all receivers; however, the Medium Access Control (MAC) header contains a MAC destination address, which ensures that only the specified destination actually passes the received frame up to its higher layers (the other computers all discard frames that are not addressed to them).

Consider a LAN with four computers each with a Network Interface Card (NIC) connected by a common Ethernet cable.

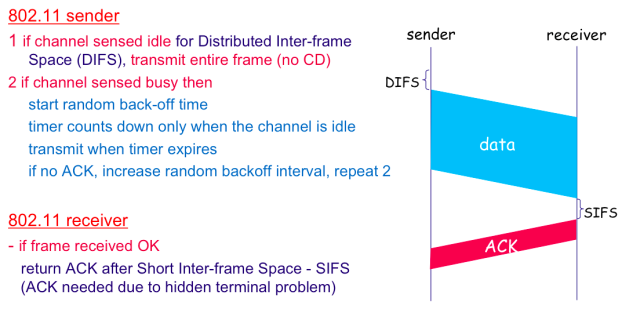

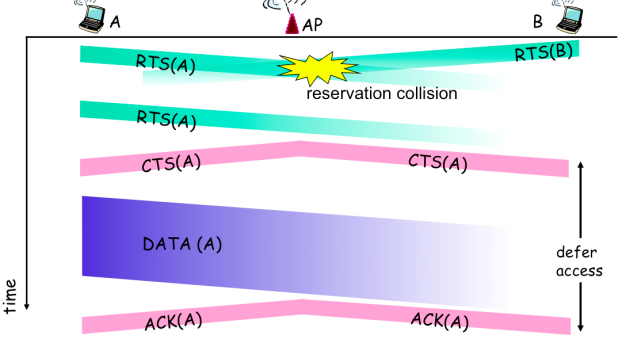

CSMA with Collision Avoidance (CSMA/CA) does not employ collision detection. CSMA/CA is especially useful in wireless networks, where collision detection is very difficult. There are two difficulties with collision detection in wireless networks. The first is that the power of the received signal is usually very weak in comparison to the power of the transmitted signal, so a transmitting station cannot reliably hear a collision. The second is the so-called hidden terminal problem: two stations that cannot hear each other may both transmit to a third station that hears both, and neither sender can detect the resulting collision.

One computer (S2) uses its NIC to send a frame onto the shared medium, with a destination address corresponding to the address of the NIC in computer S3.
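The destination-address filtering in the four-computer example can be sketched in a few lines. The MAC addresses are hypothetical. The filter accepts only frames addressed to the NIC itself or to the broadcast address; real NICs also accept subscribed multicast groups, which this sketch omits.

```python
BROADCAST = bytes.fromhex("ffffffffffff")

def nic_accepts(dst: bytes, own_mac: bytes) -> bool:
    """Keep a frame only if it is addressed to this NIC or to everyone (broadcast)."""
    return dst == own_mac or dst == BROADCAST

# Hypothetical MAC addresses for the four computers on the shared cable.
stations = {f"S{i}": bytes.fromhex(f"02000000000{i}") for i in range(1, 5)}

# S2 puts a frame addressed to S3 on the medium; every NIC physically receives it.
frame_dst = stations["S3"]
for name, mac in stations.items():
    print(name, "accepts" if nic_accepts(frame_dst, mac) else "discards")
```

Every NIC sees the frame, but only S3 passes it up; S1, S2 and S4 discard it.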

Note: The figure below shows CSMA/CA in IEEE 802.11.

A node that wants to transmit first sends a short Request-To-Send (RTS) message, and the access point answers with a Clear-To-Send (CTS) message. The CTS message indicates which node is allowed to transmit next. Upon receiving the CTS broadcast, the node that is cleared for transmission transmits its frame while the other nodes wait. Note that RTS messages can still collide with each other and with CTS messages of the access point. However, since these are tiny packets, the loss due to such collisions is minimal compared to the gain from reducing the number of collisions of large frames. This is the CSMA/CA operation with channel reservation (collision avoidance).

Note- CSMA/CA with channel reservation

Wavelength Division Multiple Access (WDMA)

WDMA is a technique that manages multiple transmissions on a fiber-optic cable system using wavelength division multiplexing (WDM). WDMA divides each channel into a set of time slots using time-division multiplexing, and data from different sources is assigned to a repeating set of time slots.

Binary Exponential Back-off Algorithm

CSMA/CD

In networks where the propagation delay is small relative to the packet transmission time, the CSMA scheme and its variants can result in smaller average delays and higher throughput than with the ALOHA protocols. This performance improvement is due primarily to the fact that carrier sensing reduces the number of collisions and, more important, the length of the collision interval. The main drawback of CSMA-based schemes, however, is that contending stations continue transmitting their data packets even when collision occurs. For long data packets, the amount of wasted bandwidth is significant compared with the propagation time. Furthermore, nodes may suffer unnecessarily long delays waiting for the transmission of the entire packet to complete before attempting to transmit the packet again.

To overcome the shortcomings of CSMA-based schemes and further reduce the collision interval, networks using CSMA/CD extend the capabilities of a communicating node to listen while transmitting. This allows the node to monitor the signal on the channel and detect a collision when it occurs. More specifically, if a node has data to send, it first listens to determine if there is an ongoing transmission over the communication channel. In the absence of any activity on the channel, the node starts transmitting its data and continues to monitor the signal on the channel while transmitting. If an interfering signal is detected over the channel, the transmitting station immediately aborts its transmission. This reduces the amount of bandwidth wasted due to collision to the time it takes to detect a collision. When a collision occurs, each contending station involved in the collision waits for a time period of random length before attempting to retransmit the packet. The length of time that a colliding node waits before it schedules packet retransmission is determined by a probabilistic algorithm, referred to as the truncated binary exponential back-off algorithm.

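The algorithm derives the waiting time after a collision from the slot time and the current number of retransmission attempts. In IEEE 802.3, after the nth collision of a frame, a station picks a random integer K between 0 and $2^{\min(n, 10)} - 1$ and waits K slot times; after 16 unsuccessful attempts the frame is discarded.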

The major drawback of CSMA/CD in wireless sensor networks (WSNs) is the need to provision sensor nodes with collision detection capabilities. Sensor nodes have very limited storage, processing power, and energy resources, and these limitations impose severe constraints on the design of the MAC layer. Collision detection in WSNs is not possible without additional circuitry. In particular, wireless transceivers are typically half-duplex, whereas to detect a collision a sensor node must be capable of “listening” while “talking.” The complexity and cost of sensor nodes, however, are intended to be low and scalable to enable broad adoption of the technology in cost-sensitive applications where a large number of sensors is expected to be deployed. Consequently, the design of the physical layer must be optimized to keep the cost low.

Fast Ethernet, Gigabit Ethernet

IEEE 802.3 Standards and Frame Formats

Ethernet protocols refer to the family of local-area network (LAN) technologies covered by IEEE 802.3. The Ethernet standard defines two modes of operation: half-duplex and full-duplex. In half-duplex mode, data are transmitted using the popular Carrier-Sense Multiple Access/Collision Detection (CSMA/CD) protocol on a shared medium. The main disadvantages of half-duplex mode are its efficiency and distance limitations: the link distance is limited by the minimum MAC frame size, because a sender must still be transmitting when a collision from the far end of the link reaches it. This restriction reduces efficiency drastically at high transmission rates. Therefore, the carrier extension technique is used in Gigabit Ethernet to ensure a minimum frame size of 512 bytes, which achieves a reasonable link distance.
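The link between minimum frame size and distance can be shown with a back-of-the-envelope calculation. It requires the round-trip time $2d/v$ to be no longer than the minimum frame time $L_{min}/R$, which gives $d \le L_{min} v / (2R)$. The sketch assumes a signal speed of $2 \times 10^8$ m/s and ignores repeater, transceiver and jam-signal delays, so the standards specify shorter limits than it computes.

```python
PROPAGATION_SPEED = 2e8   # m/s, assumed signal speed in the cable (about 2/3 of c)

def max_collision_distance_m(min_frame_bytes: int, rate_bps: float,
                             speed: float = PROPAGATION_SPEED) -> float:
    """Distance d such that the round trip 2d/speed equals the minimum frame time.

    Ignores repeater, transceiver and jam-signal delays, so real limits are smaller.
    """
    frame_time_s = min_frame_bytes * 8 / rate_bps
    return frame_time_s * speed / 2

print(round(max_collision_distance_m(64, 10e6)))    # 10 Mbps, 64-byte minimum frame
print(round(max_collision_distance_m(64, 1e9)))     # 1 Gbps, 64-byte minimum frame
print(round(max_collision_distance_m(512, 1e9)))    # 1 Gbps, carrier extension to 512 bytes
```

Under these assumptions the 64-byte minimum allows about 5,120 m at 10 Mbps but only about 51 m at 1 Gbps. Carrier extension to 512 bytes restores roughly 410 m.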

Four data rates are currently defined for operation over optical fiber and twisted-pair cables:

10 Mbps - 10Base-T Ethernet (IEEE 802.3)

100 Mbps - Fast Ethernet (IEEE 802.3u)

1000 Mbps - Gigabit Ethernet (IEEE 802.3z)

10 Gbps - 10-Gigabit Ethernet (IEEE 802.3ae)

The Ethernet system consists of three basic elements:

The physical medium used to carry Ethernet signals between computers,

A set of medium access control rules embedded in each Ethernet interface that allow multiple computers to fairly arbitrate access to the shared Ethernet channel, and

An Ethernet frame that consists of a standardized set of bits used to carry data over the system.

As with all IEEE 802 protocols, the ISO data link layer is divided into two IEEE 802 sublayers, the Media Access Control (MAC) sublayer and the MAC-client sublayer. The IEEE 802.3 physical layer corresponds to the ISO physical layer.

The MAC sub-layer has two primary responsibilities:

Data encapsulation, including frame assembly before transmission, and frame parsing/error detection during and after reception.

Media access control, including initiation of frame transmission and recovery from transmission failure.
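The data encapsulation duty (frame assembly before transmission, parsing and error detection after reception) can be sketched in Python. The assumptions are:

- Ethernet II framing (destination, source, EtherType, payload, FCS).
- The preamble and start delimiter are left to the physical layer.
- `zlib.crc32` computes the same CRC-32 as the 802.3 FCS, which is appended least significant byte first.

The function names are hypothetical.

```python
import struct
import zlib

MIN_PAYLOAD = 46   # pad so that the frame (without preamble) is at least 64 bytes

def build_frame(dst: bytes, src: bytes, ethertype: int, payload: bytes) -> bytes:
    """Frame assembly: header + (padded) payload + CRC-32 FCS."""
    if len(payload) < MIN_PAYLOAD:
        payload += bytes(MIN_PAYLOAD - len(payload))      # zero padding
    header = dst + src + struct.pack("!H", ethertype)     # 6 + 6 + 2 bytes
    fcs = zlib.crc32(header + payload)                     # IEEE 802.3 CRC-32
    return header + payload + struct.pack("<I", fcs)       # FCS sent low byte first

def parse_frame(frame: bytes) -> tuple[bytes, bytes, int, bytes]:
    """Frame parsing with error detection: discard the frame if the FCS does not match."""
    body, fcs_bytes = frame[:-4], frame[-4:]
    if struct.unpack("<I", fcs_bytes)[0] != zlib.crc32(body):
        raise ValueError("FCS mismatch: frame discarded")
    (ethertype,) = struct.unpack("!H", body[12:14])
    return body[0:6], body[6:12], ethertype, body[14:]   # payload still includes padding

dst = bytes.fromhex("ffffffffffff")     # broadcast
src = bytes.fromhex("020000000001")     # hypothetical locally administered address
frame = build_frame(dst, src, 0x0800, b"hi")
print(len(frame))                       # 64
print(parse_frame(frame)[2] == 0x0800)  # True
```

The receiver cannot tell padding from data by itself. Removing the padding relies on a length field in the upper-layer header (for example, the IP total length).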

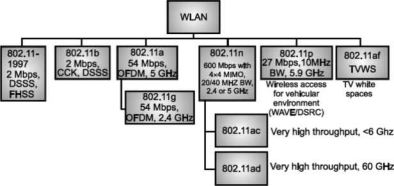

IEEE 802.11a/b/g/n

IEEE 802.11 standard is a group of specifications developed by the Institute of Electrical and Electronics Engineers (IEEE) for wireless local area networks (WLANs).

In 1997, the Institute of Electrical and Electronics Engineers (IEEE) created the first WLAN standard. They called it 802.11 after the name of the group formed for its development.

Unfortunately, 802.11 supported a maximum network bandwidth of only 2 Mbps, too slow for most applications.

For this reason, ordinary 802.11 wireless products are no longer manufactured.

Much of the current work on IEEE 802.11 centers on increasing transmission speeds and range, improving Quality of Service (QoS), and adding new capabilities.

DSRC = Dedicated short-range communications

802.11, or “Wi-Fi” as it is popularly known, came into existence as a result of a decision in 1985 by the United States Federal Communications Commission (FCC) to open several bands of the wireless spectrum for use without a government license.

These so-called “garbage bands” were allocated to equipment such as microwave ovens, which use radio waves to heat food. To operate in these bands, though, devices were required to use “spread spectrum” technology.

This technology spreads a radio signal out over a wide range of frequencies, making the signal less susceptible to interference and difficult to intercept.

In 1990, a new IEEE committee called IEEE 802.11 was set up to look into getting an open standard started.

Demand for wireless devices was already high while the new standard was being developed under the rules of the IEEE (commonly pronounced “I triple E”).

The IEEE 802.11 standard defines an over-the-air interface between a wireless client and a base station (or access point), or between two or more wireless clients.

As capabilities are added to IEEE 802.11, some become known by the name of the improvement. For example, many people recognize IEEE 802.11b, IEEE 802.11g and IEEE 802.11n as popular wireless solutions for connecting to networks.

Each of these improvements defines a maximum speed of operation, the radio frequency band of operation, how data is encoded for transmission, and the characteristics of the transmitter and receiver.

The first two variants were IEEE 802.11b, which operates in the 2.4 GHz industrial, scientific and medical (ISM) band, and IEEE 802.11a, which operates in the available 5 GHz bands (5.15-5.35 GHz, 5.47-5.725 GHz, and 5.725-5.825 GHz).

A third variant, IEEE 802.11g, was published in June 2003. Both IEEE 802.11a and IEEE 802.11g use a more advanced form of modulation called orthogonal frequency-division multiplexing (OFDM). Using OFDM in the 2.4 GHz band, IEEE 802.11g achieves speeds of up to 54 Mbps.

Now that IEEE 802.11n, the latest version of IEEE 802.11, is shipping in volume, the focus is on even faster solutions, specifically IEEE 802.11ac and IEEE 802.11ad. These improvements aim to provide gigabit-speed WLAN.

The difference is their frequencies. IEEE 802.11ac will deliver its throughput over the 5 GHz band, affording easy migration from IEEE 802.11n, which also uses the 5 GHz band (as well as the 2.4 GHz band).

IEEE 802.11ad, targeting shorter-range transmissions, will use the unlicensed 60 GHz band. Through range improvements and faster wireless transmissions, IEEE 802.11ac and 802.11ad will:

Improve the performance of high-definition TV (HDTV) and digital video streams in the home and advanced applications in enterprise networks

Help businesses reduce capital expenditures by freeing them from the cost of laying and maintaining Ethernet cabling

Increase the reach and performance of hotspots

Allow connections to handle more clients

Improve overall user experience where and whenever people are connected

IEEE 802.15 Standards and frame format

Bluetooth over IEEE 802.15.1

Bluetooth, also known as the IEEE 802.15.1 standard, is based on a wireless radio system designed for short-range, low-cost devices to replace cables for computer peripherals such as mice, keyboards, joysticks, and printers. This range of applications is known as a wireless personal area network (WPAN). Two connectivity topologies are defined in Bluetooth: the piconet and the scatternet. A piconet is a WPAN formed by a Bluetooth device serving as the master and one or more Bluetooth devices serving as slaves. Each piconet is defined by a frequency-hopping channel based on the address of the master. All devices participating in communications in a given piconet are synchronized using the clock of the master. Slaves communicate only with their master, in a point-to-point fashion under the control of the master. The master's transmissions may be either point-to-point or point-to-multipoint.

Besides the active mode, a slave device can also be in the parked or standby mode to reduce power consumption. A scatternet is a collection of operational Bluetooth piconets overlapping in time and space. Two piconets can be connected to form a scatternet. A Bluetooth device may participate in several piconets at the same time, thus allowing information to flow beyond the coverage area of a single piconet. A device in a scatternet could be a slave in several piconets, but master in only one of them.

Bluetooth is an emerging wireless communication technology that allows devices, within 10–100-meter proximity, to communicate with each other.

The primary goal of this technology is to enable devices to communicate without physical cables.

It is a worldwide specification for a small low-cost radio.

It links mobile computers, mobile phones, other portable handheld devices, and provides Internet connectivity.

It is developed, published and promoted by the Bluetooth Special Interest Group (SIG).

Its key features are robustness, low complexity, low power and low cost.

Bluetooth does not require direct line of sight and can also support multipoint communication in addition to point-to-point communication.

The short-range transceivers that are built into mobile gadgets to provide Bluetooth compatibility are designed to operate in the 2.45 GHz unlicensed radio band.

It provides data rate up to 721 kbps as well as three 64 kbps voice channels.

Through the use of frequency hopping, a Bluetooth transceiver can minimize the effect of interference from other signals by hopping to a new frequency after transmitting or receiving a packet. (Bluetooth hop frequency is 1600 hops/second).
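The hop rate fixes the slot length: 1600 hops per second gives 1/1600 s = 625 µs per hop. The sketch below is only a toy. It assumes the 79 one-megahertz channels of classic Bluetooth at 2402 + k MHz and replaces the real hop-selection kernel with a seeded pseudo-random generator. It shows only that devices sharing the master's address and clock compute the same hop sequence. All names and the address value are hypothetical.

```python
import random

NUM_CHANNELS = 79             # classic Bluetooth: 79 RF channels of 1 MHz
BASE_FREQ_MHZ = 2402          # channel k is at 2402 + k MHz
HOPS_PER_SECOND = 1600
SLOT_US = 1_000_000 / HOPS_PER_SECOND   # 625 microseconds per hop (one slot)

def toy_hop_sequence(master_addr: int, master_clock: int, hops: int) -> list[int]:
    """Toy hop pattern driven by the master's address and clock.

    NOT the real Bluetooth hop-selection kernel: a seeded PRNG stands in for it,
    only to show that devices sharing the master's address and clock hop in step.
    """
    rng = random.Random(master_addr ^ master_clock)
    return [BASE_FREQ_MHZ + rng.randrange(NUM_CHANNELS) for _ in range(hops)]

master = 0x123456789ABC       # hypothetical 48-bit master address
master_view = toy_hop_sequence(master, master_clock=1000, hops=8)
slave_view = toy_hop_sequence(master, master_clock=1000, hops=8)
print(SLOT_US)                      # 625.0
print(slave_view == master_view)    # True: same piconet, same hop sequence
print(slave_view)                   # carrier frequency in MHz for each 625 us slot
```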

Each Bluetooth gadget has a unique 48-bit address. In order for Bluetooth gadget A to connect with Bluetooth gadget B, gadget A must know the (48-bit) address of gadget B.

Bluetooth supports gadget authentication and communications encryption.

Bluetooth uses GFSK (Gaussian frequency shift keying) modulation technique.

IEEE 802.16 Standards, Frame formats

WiMAX (Worldwide Interoperability for Microwave Access) is an emerging wireless communication system that is expected to provide high data rate communications in metropolitan area networks (MANs). In the past few years, the IEEE 802.16 working group has developed a number of standards for WiMAX. The first standard was published in 2001, which supports communications in the 10-66 GHz frequency band. In 2003, IEEE 802.16a was introduced to provide additional physical layer specifications for the 2-11 GHz frequency band.

These two standards were further revised in 2004 (IEEE 802.16-2004). Recently, IEEE 802.16e has also been approved as the official standard for mobile applications.


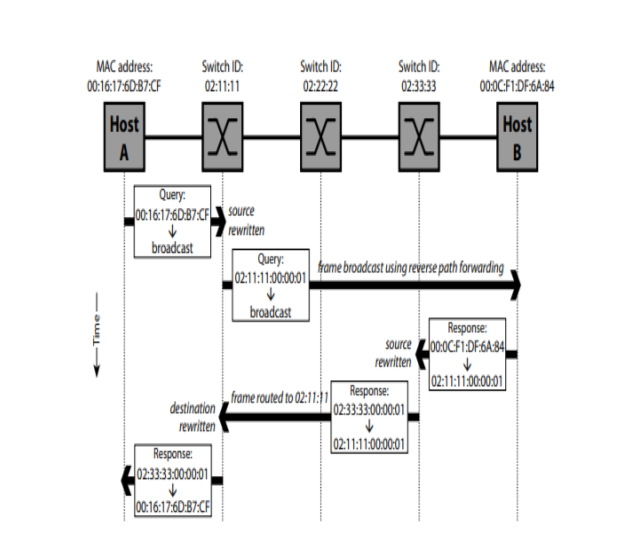

Simulation

Because of the large number of hosts, switches and links we aim to model in order to demonstrate the weaknesses of Ethernet, we will use simulation instead of implementing the topology physically.

While simulators offer an enormous advantage in the time it takes to change the topology, implement new protocols and explore larger topologies, they also have a number of disadvantages: it takes time to program a simulator, which may approach the difficulty of creating a real network; execution can be computationally expensive; and statistical analysis of the simulation to verify the results can be difficult.

There are several different types of simulator, the main distinction being between discrete-event and continuous simulation. The most suitable for simulating a packet-based network is a discrete-event simulator, as each movement of a packet may be viewed as an event in the system.
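The core of a discrete-event simulator is a clock plus a time-ordered queue of pending events. The sketch below is not the existing simulator the project builds upon. It is a hypothetical minimal engine. The link rate and propagation delay are assumed values, and the example does not model queueing at the sender.

```python
import heapq
import itertools
from typing import Any, Callable

class DiscreteEventSimulator:
    """Minimal discrete-event engine: a clock plus a time-ordered queue of pending events."""

    def __init__(self) -> None:
        self.now = 0.0
        self._queue: list[tuple[float, int, Callable[..., Any], tuple]] = []
        self._seq = itertools.count()      # tie-breaker keeps equal-time events in FIFO order

    def schedule(self, delay: float, action: Callable[..., Any], *args: Any) -> None:
        heapq.heappush(self._queue, (self.now + delay, next(self._seq), action, args))

    def run(self, until: float = float("inf")) -> None:
        while self._queue and self._queue[0][0] <= until:
            self.now, _, action, args = heapq.heappop(self._queue)
            action(*args)                  # the handler may schedule further events

# Hypothetical single link: each frame is one "start" event and one "received" event.
LINK_RATE_BPS = 10e6       # assumed link rate
PROP_DELAY_S = 5e-6        # assumed one-way propagation delay
log: list[tuple[float, str, int]] = []

def send(sim: DiscreteEventSimulator, frame_id: int, size_bits: int) -> None:
    log.append((sim.now, "start tx", frame_id))
    sim.schedule(size_bits / LINK_RATE_BPS + PROP_DELAY_S, receive, sim, frame_id)

def receive(sim: DiscreteEventSimulator, frame_id: int) -> None:
    log.append((sim.now, "received", frame_id))

sim = DiscreteEventSimulator()
sim.schedule(0.0, send, sim, 1, 512)       # 64-byte frame at t = 0
sim.schedule(1e-4, send, sim, 2, 12_000)   # 1500-byte frame at t = 100 us
sim.run()
for t, what, frame_id in log:
    print(f"{t * 1e6:8.1f} us  {what:8}  frame {frame_id}")
```

The clock jumps directly from one event to the next, so idle time costs nothing to simulate. This is why a discrete-event engine suits packet networks.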

In order to ensure that the goals of the project are achievable, and the results are repeatable and useful to others, we will be building upon an existing network simulator. Some time was spent investigating the different network simulators available.

Fig- It is showing simulation of DLL.
