Unit-1

Measurement Systems and Performance

1.1.1 Configuration of a Measuring System

Introduction to Measurement and Instrumentation

Measurement

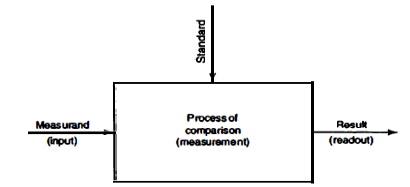

In the field of engineering there are several types of quantities that need to be measured and expressed in day-to-day work, for example physical, chemical and mechanical quantities. Measurement is the process of obtaining a quantitative comparison between a predefined standard and an unknown quantity, the measurand (figure 1). The measurand is the physical parameter being observed and quantified, i.e. the input quantity of the measuring process. Mechanical measurement means the determination of measurands related to mechanical quantities. The act of measurement produces a result.


Figure 1: Fundamental measuring process

The following quantities are typically within the scope of mechanical measurement.

Fundamental quantities: mass, length, time.

Derived quantities: Pressure, displacement, stress and strain, temperature, acoustics, fluid flow, etc.

To observe and compare the magnitudes of quantities, some magnitude of each kind must be taken as a basis, or unit. Well-defined units are needed to measure any quantity. A dimension is a characteristic of the measurand, and the dimensional unit is the basis for quantifying it. For example, length is a dimension and the centimetre is one of its units; time is a dimension and the second is its unit. A unit is therefore a standard measure of a fundamental quantity, and the numerical value of a measurement is the number of times the unit is contained in the quantity. For example, in 100 metres the metre is the unit of length and the number of units of length is one hundred; the physical quantity length is thus expressed in terms of the unit metre.

Fundamental and derived unit

Units that are independent of and unrelated to each other are known as fundamental units. These units do not vary with time, temperature, pressure, etc. There are seven fundamental (base) units, one for each of the base quantities: mass, length, time, electric current, temperature, luminous intensity and amount of substance. Derived units are expressed in terms of fundamental units and originate from some physical law defining the unit. For example, the area of a rectangle is proportional to its length (l) and breadth (b), A = l × b. If the metre is taken as the unit of length, then the unit of area is m × m = m². The derived unit for area (A) is therefore the square metre (m²).

International system of units (SI units)

The International System of Units (SI) is the modern form of the metric system. It is built around seven base units and the convenience of the number ten (decimal multiples and submultiples), and it is the most widely used system of measurement in both commerce and engineering. The following table shows the fundamental units with their classical standard definitions; in 2019 the kilogram, ampere, kelvin and mole were redefined in terms of fixed values of physical constants.

| Physical quantity | Standard unit | Definition | Symbol |
|---|---|---|---|
| Length | metre | The length of the path travelled by light in vacuum during an interval of 1/299 792 458 second | m |
| Mass | kilogram | The mass of a platinum-iridium cylinder kept at the International Bureau of Weights and Measures, Sèvres, near Paris | kg |
| Time | second | The duration of 9 192 631 770 (9.192 631 770 × 10⁹) cycles of the radiation from vaporized caesium-133 (an accuracy of 1 in 10¹², or about 1 second in 36 000 years) | s |
| Temperature | kelvin | The temperature difference between absolute zero and the triple point of water is defined as 273.16 kelvin | K |
| Electric current | ampere | The current which, flowing through two infinitely long parallel conductors of negligible cross-section placed 1 metre apart in a vacuum, produces a force of 2 × 10⁻⁷ newton per metre length of conductor | A |
| Luminous intensity | candela | The luminous intensity, in a given direction, of a source emitting monochromatic radiation at a frequency of 540 terahertz (540 × 10¹² Hz) with a radiant intensity in that direction of 1/683 watt per steradian (about 1.4641 mW/steradian). (1 steradian is the solid angle which, having its vertex at the centre of a sphere, cuts off an area of the sphere surface equal to that of a square with sides of length equal to the sphere radius.) | cd |
| Amount of substance | mole | The amount of substance containing as many elementary entities as there are atoms in 0.012 kg of carbon-12 | mol |

Two supplementary units were added to the SI: the radian (plane angle) and the steradian (solid angle). They are now classed as derived units.

The following table shows some derived units.

| Quantity | Standard unit | Symbol |
|---|---|---|
| Area | square metre | m² |
| Volume | cubic metre | m³ |
| Velocity | metre per second | m/s |
| Acceleration | metre per second squared | m/s² |
| Angular velocity | radian per second | rad/s |
| Density | kilogram per cubic metre | kg/m³ |
| Force | newton | N |
| Pressure | newton per square metre (pascal) | N/m² (Pa) |
| Torque | newton metre | N·m |
| Work, energy, heat | joule | J |
| Power | watt | W |
| Thermal conductivity | watt per metre kelvin | W/(m·K) |
| Electric charge | coulomb | C |
| Voltage, e.m.f., potential difference | volt | V |

Dimensions

Dimension is the unique quality of every quantity that distinguishes it from all other quantities. In mechanics there are three fundamental quantities: length, mass and time. Their dimensional symbols are: Length = [L], Mass = [M], Time = [T]. Some further quantities are also treated as fundamental: charge, temperature and current, with Charge = [Q], Temperature = [K], Current = [I].
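Dimensional formulas of derived quantities follow from the base dimensions by adding exponents when quantities are multiplied and subtracting them when they are divided. The short Python sketch below illustrates this bookkeeping for mechanical quantities; the (M, L, T) exponent-tuple representation and the helper names `mul`, `div` and `fmt` are assumptions made for illustration, not a standard library.

```python
from typing import Tuple

# Hypothetical representation: a dimensional formula [M^a L^b T^c]
# stored as the exponent tuple (a, b, c).
Dim = Tuple[int, int, int]

MASS: Dim = (1, 0, 0)
LENGTH: Dim = (0, 1, 0)
TIME: Dim = (0, 0, 1)


def mul(a: Dim, b: Dim) -> Dim:
    """Multiplying quantities adds the exponent of each base dimension."""
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def div(a: Dim, b: Dim) -> Dim:
    """Dividing quantities subtracts the exponent of each base dimension."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def fmt(d: Dim) -> str:
    """Format exponents as e.g. [M L T^-2], omitting zero exponents."""
    parts = []
    for sym, exp in zip("MLT", d):
        if exp == 1:
            parts.append(sym)
        elif exp != 0:
            parts.append(f"{sym}^{exp}")
    return "[" + " ".join(parts) + "]" if parts else "[1]"


# Derived quantities built up from the base dimensions
AREA = mul(LENGTH, LENGTH)            # [L^2]
VELOCITY = div(LENGTH, TIME)          # [L T^-1]
ACCELERATION = div(VELOCITY, TIME)    # [L T^-2]
FORCE = mul(MASS, ACCELERATION)       # [M L T^-2]
PRESSURE = div(FORCE, AREA)           # [M L^-1 T^-2]
ENERGY = mul(FORCE, LENGTH)           # [M L^2 T^-2]

if __name__ == "__main__":
    for name, d in [("force", FORCE), ("pressure", PRESSURE), ("energy", ENERGY)]:
        print(name, fmt(d))
```

The same tuples can be used to check dimensional homogeneity: both sides of a valid physical equation must reduce to identical exponent tuples.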

Standards

The physical representation of a unit of measurement is known as a standard of measurement. A unit is realized by reference to an arbitrary material standard or to natural phenomena, including physical and atomic constants. For example, the fundamental unit of mass in the International System (SI), the kilogram, was originally defined as the mass of one cubic decimetre of water at its temperature of maximum density (about 4 °C). It is important to establish a relationship between the standards and the readout scale of any measuring system; this relationship is established through a process known as calibration.

Classification of standards

Standards are classified according to their function and application as follows:

International standards

International standards are defined by international agreement. They represent units of measurement to the closest possible accuracy that measurement technology allows. These standards are periodically checked by absolute measurements in terms of the fundamental units. They are maintained at the International Bureau of Weights and Measures (BIPM) at Sèvres, near Paris.

Primary standards

Primary standards are maintained by the national standards laboratories in different parts of the world. The function of primary standards is the calibration and verification of secondary standards. These standards are not available for use outside the national laboratories.

Secondary standards

These standards are the basic reference standards used in industrial laboratories. They are checked locally against other reference standards in the area. Secondary standards are periodically sent to the national standards laboratories for calibration and comparison against primary standards, and are then returned to the industrial laboratories with a certificate of their measured value in terms of the primary standard.

Working standards

These standards are used to check laboratory instruments for accuracy and performance. For example, manufacturers of components such as capacitors use working standards to check the values of the components being manufactured, e.g. a standard capacitor for checking the capacitance of manufactured capacitors.

Significance of measurements

Measurements provide quantitative information about physical variables and processes. Measurement is the basis of all research, design and development.

All mechanical design involves three elements: an experience element, which depends on the engineer's experience and common sense; a rational element, based on quantitative engineering principles, the laws of physics and so on; and an experimental element, based on measurement, i.e. measurement of the performance or operation of the device being designed and developed.

Measurement provides a comparison between what is desired and what is achieved.

Measurement is a fundamental element of any control process. A control process requires a measure of the difference between actual and desired performance, and the controller must know the magnitude and direction of this difference in order to keep the process at the desired performance.

Many daily operations require measurement to maintain proper performance. For example, in a central power station, temperatures, flows, pressures and vibration amplitudes must be constantly monitored by measurement to ensure proper performance of the system.

Methods of measurements

Direct method

In this method the measurand is compared directly with a primary or secondary standard. For example, to measure the length of a bar we use a measuring tape or scale, which acts as a secondary standard; i.e. we compare the measured quantity directly with the secondary standard. The result is expressed as a numerical value and a unit.

Indirect method

In this method the measurand is converted into some other measurable quantity, which is then measured. The indirect method uses a transducing device coupled to a chain of connecting apparatus, which we call a measuring system. This chain of devices converts the basic form of the input into an equivalent analogous form, which is then processed and presented at the output as a known function of the original input. For example, the strain in a bar due to an applied load cannot be measured directly, and it is hard for a human to sense it. Assistance is therefore required to sense it, convert it and finally present an analogous output in the form of a displacement on a scale or chart, or a digital readout.

Generalized measuring system

Generally, most measuring systems consist of three stages (figure 2):

Stage 1: Sensor-transducer stage

The primary function of the first stage is to sense the measurand and provide an analogous output. At the same time, it should be insensitive to every other possible input. For example, a pressure-measuring device should be insensitive to other inputs such as changes in temperature or acceleration, and a linear accelerometer should be insensitive to angular acceleration. In practice it is impossible to build such perfectly selective sensors. Unwanted sensitivity is a measuring error, known as noise when it varies rapidly and as drift when it varies slowly.

Stage 2: Signal conditioning stage

The purpose of this stage is to modify the output signal from stage 1 so that it is suitable for the readout-recording stage. The output of stage 1 is sometimes of low magnitude, so signal conditioning may also amplify the signal to the level required to drive the final terminating device. In addition, this stage can filter out unwanted signals that would otherwise cause errors in the readout-recording stage.

Stage 3: Readout-recording stage

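This stage provides an indication or record in a form that can be evaluated by the human senses or by a controller. The output can be indicated in two forms: analogue, e.g. the displacement of a pointer on a scale or a trace on a chart, and digital, i.e. a numerical readout.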


Figure 2: Block diagram of generalized measuring system

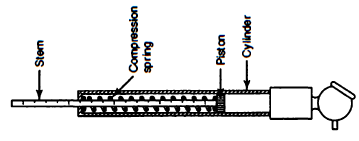

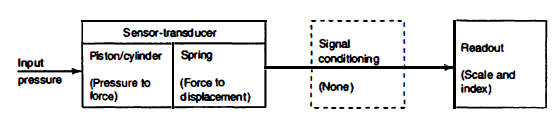

To illustrate the measuring system, consider the tyre gauge used to measure the air pressure in an automobile tyre, shown in figure 3.


Figure 3: A gage for measuring pressure in an automobile tyre

It consists of a cylinder and piston, a spring resisting the piston movement, and a stem with scale divisions. As the air pressure bears against the piston, the resulting force compresses the spring until the spring and air forces balance. The calibrated stem, which remains in place after the spring returns the piston, indicates the applied pressure.

The piston-cylinder combination constitutes a force-summing apparatus, sensing and transducing pressure to force. As a secondary transducer, the spring converts the force to a displacement. Finally, the transduced input is transferred without signal conditioning to the scale and index for readout. The block diagram of the gage is shown in figure 4.


Figure 4: Block diagram of a gage for measuring pressure in an automobile tyre.
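The chain in this block diagram can be written as two transfer steps, pressure to force (F = pA) and force to displacement (x = F/k), with the calibrated stem inverting them for readout. The Python sketch below assumes an ideal frictionless piston and a linear spring; the piston area, spring stiffness and function names are hypothetical values chosen only to make the numbers concrete.

```python
# Sketch of the tyre-gauge measuring chain. AREA and K are hypothetical.

def pressure_to_force(p_pa: float, piston_area_m2: float) -> float:
    """Primary transducer: the piston-cylinder converts pressure to force, F = p * A."""
    return p_pa * piston_area_m2


def force_to_displacement(force_n: float, spring_k_n_per_m: float) -> float:
    """Secondary transducer: the spring converts force to displacement, x = F / k."""
    return force_n / spring_k_n_per_m


def readout(displacement_m: float, piston_area_m2: float, spring_k_n_per_m: float) -> float:
    """Readout: the calibrated stem maps displacement back to pressure, p = k * x / A.
    There is no signal-conditioning stage in this gauge."""
    return spring_k_n_per_m * displacement_m / piston_area_m2


AREA = 1.0e-4   # piston area in m^2 (1 cm^2), hypothetical
K = 2000.0      # spring stiffness in N/m, hypothetical

p = 200e3                               # 200 kPa gauge pressure
f = pressure_to_force(p, AREA)          # about 20 N on the piston
x = force_to_displacement(f, K)         # about 0.01 m (10 mm) of stem travel
print(f"force = {f:.1f} N, stem travel = {x * 1000:.1f} mm, "
      f"indicated = {readout(x, AREA, K) / 1000:.1f} kPa")
```

Keeping each stage as a separate function mirrors the block diagram, so a signal-conditioning stage could be inserted between transduction and readout without changing the other steps.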

Instruments

An instrument serves as an extension of human faculties and enables a person to determine the value of an unknown quantity that cannot be measured with the human senses alone. Measuring instruments provide information about the measurand.

Classification of instruments

Instruments are classified into the following types:

1. Electrical and electronic instruments:

Electrical measuring instruments use the mechanical movement of an electromagnetic meter to measure voltage, current, power, etc.; these instruments use the d'Arsonval meter. Measuring instruments that use a d'Arsonval meter together with amplifiers to increase the sensitivity of measurement are called electronic instruments.

2. Analogue and digital instruments:

Measuring instruments that use an analogue signal to display the magnitude of the measurand are called analogue instruments. Digital instruments use a digital signal to display the magnitude of the measurand.

3. Absolute and secondary instruments:

An absolute instrument gives the magnitude of the measurand in terms of the physical constants of the instrument and the deflection of one part of the instrument; no calibration is needed for this type of instrument. In secondary instruments, the value of the measured quantity is obtained by observing the output indicated by the instrument, which must first be calibrated against an absolute instrument or a standard.

Characteristics of measuring instruments

The characteristics of measuring instruments are classified into two categories: static characteristics and dynamic characteristics.

Static characteristics

The static characteristics of an instrument are its characteristics when the parameter of interest, i.e. the input, is constant or varies slowly with time. They include range and span, linearity, loading effect, accuracy, precision, resolution, repeatability, reproducibility, static error, sensitivity and drift.

Range and span

Range is the region between the upper and lower limits within which an instrument is designed to operate for measuring, indicating or recording a physical quantity. For example, if a thermometer has a scale from −40°C to 100°C, its range is −40°C to 100°C.

Span is the algebraic difference between the upper and lower limits. For a thermometer with a scale from −40°C to 100°C, the span is 100 − (−40) = 140°C.
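Range and span are simple enough to capture in a small data type. The Python sketch below is illustrative only; `ScaleRange` and its `contains` method are hypothetical names, not taken from the text.

```python
from dataclasses import dataclass


@dataclass
class ScaleRange:
    """Lower and upper limits of an instrument scale, in the same unit."""
    lower: float
    upper: float

    @property
    def span(self) -> float:
        # Span is the algebraic difference: upper limit minus lower limit
        return self.upper - self.lower

    def contains(self, value: float) -> bool:
        # True if the value lies inside the range the instrument is designed for
        return self.lower <= value <= self.upper


thermometer = ScaleRange(-40.0, 100.0)
print(thermometer.span)            # 140.0
print(thermometer.contains(120))   # False: outside the range
```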

Linearity

It is normally desirable that the output reading of an instrument be linearly proportional to the quantity being measured. An instrument is said to be linear if the relationship between its input and output can be fitted by a straight line. A nonlinear instrument should not, however, be concluded to be inaccurate on that basis alone.

Loading effect

A change in a circuit's parameters, characteristics or behaviour caused by the operation of the measuring instrument is known as loading effect.

Accuracy

Accuracy is the closeness with which an instrument reading approaches the true value of the variable under measurement. If the difference between the reading and the true value is very small, the instrument is said to have high accuracy; i.e. accuracy refers to how close the measured value is to its corresponding true value. The manufacturer specifies accuracy as the maximum error that will not be exceeded, so accuracy can be termed conformity to truth. For example, if an accuracy of ±2% is specified for a 100 V voltmeter, then for a full-scale reading the true voltage lies between 98 V and 102 V, and the error in any observed reading does not exceed ±2 V. The accuracy of an instrument can be specified in any of the following ways.

Point accuracy: the accuracy of the instrument is specified only at a particular point on its scale. Point accuracy gives no information about the general accuracy of the instrument.

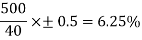

Percentage of scale range accuracy: the accuracy of a uniform-scale instrument is expressed in terms of its scale range. For example, consider a thermometer with a range of 500°C and an accuracy of ±0.5% of scale range. The maximum error is then 0.005 × 500 = ±2.5°C at every reading. For a reading of 500°C this is ±0.5% of the reading, but for a reading of 40°C the same ±2.5°C is ±6.25% of the reading, a much greater relative error.


Percentage of true value accuracy: the accuracy is defined in terms of the true value of the quantity being measured. Errors are therefore proportional to the reading, i.e. the smaller the reading, the smaller the error. This is the best way to specify the accuracy of an instrument.
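The difference between the percentage-of-scale-range and percentage-of-true-value specifications is easiest to see numerically. The Python sketch below uses the 100 V voltmeter and 500°C thermometer figures from the text; the function names are hypothetical.

```python
def max_error_percent_of_scale(percent: float, full_scale: float) -> float:
    """Error band for an accuracy quoted as % of scale range: the same at every reading."""
    return percent / 100.0 * full_scale


def max_error_percent_of_reading(percent: float, reading: float) -> float:
    """Error band for an accuracy quoted as % of true value: proportional to the reading."""
    return percent / 100.0 * abs(reading)


# 100 V voltmeter, +/-2 %: at full scale the true value lies between 98 V and 102 V
e_v = max_error_percent_of_scale(2.0, 100.0)
print(f"voltmeter: {100.0 - e_v:.1f} V to {100.0 + e_v:.1f} V")

# 500 degC thermometer, +/-0.5 % of scale range: +/-2.5 degC anywhere on the scale
e_t = max_error_percent_of_scale(0.5, 500.0)
for reading in (500.0, 40.0):
    rel = 100.0 * e_t / reading
    print(f"reading {reading:.0f} degC: +/-{e_t:.1f} degC = +/-{rel:.2f} % of reading")

# The same 0.5 % quoted on the reading gives a much smaller band at 40 degC
print(f"percent-of-reading spec at 40 degC: "
      f"+/-{max_error_percent_of_reading(0.5, 40.0):.1f} degC")
```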

Precision

Precision means that two or more readings of the same input quantity, taken under the same conditions, are close to each other. A precise reading need not be an accurate one. Consider a voltmeter with a very high degree of precision, having a sharp pointer and a mirror-backed scale that removes parallax error. If its zero adjustment has not been made properly, its readings will be highly precise but not accurate. Conformity and the number of significant figures are two characteristics of precision, and conformity is a necessary but not a sufficient condition for precision. For example, suppose the true value of a resistance is 2,496,692 Ω and an ohmmeter consistently shows it as 2.5 MΩ. Because of the limitation of its scale, the instrument causes a precision error. This example shows that, owing to the lack of significant figures, the measurement is not precise, even though its closeness to the true value implies that it is accurate.
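The resistance example can be reproduced by rounding the true value to the number of significant figures the display can show. This is a minimal sketch assuming a display limited to two significant figures; `round_sig` is a hypothetical helper, not part of any instrument specification.

```python
import math


def round_sig(x: float, sig: int) -> float:
    """Round x to `sig` significant figures, mimicking a display with limited digits."""
    if x == 0:
        return 0.0
    # Number of decimal places to keep (negative means rounding to tens, hundreds, ...)
    digits = sig - 1 - math.floor(math.log10(abs(x)))
    return round(x, digits)


true_value = 2_496_692.0            # ohm
shown = round_sig(true_value, 2)    # what a two-significant-figure display can show
print(shown)                        # 2500000.0, i.e. 2.5 Mohm
print(shown - true_value)           # 3308.0 ohm lost to the lack of significant figures
```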

Resolution

Resolution is defined as the smallest change in the measurand to which the instrument will respond. It is also known as discrimination. For example, consider a 500 V voltmeter whose needle deflects from zero only when the input is at least 1 V. This instrument cannot be used to measure 50 mV, because its resolution is 1 V; i.e. any input change smaller than 1 V produces no response.
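One way to picture resolution is a reading that changes only in whole steps of the resolution. The sketch below assumes that idealised step behaviour (real instruments also show noise and hysteresis); `displayed_reading` is a hypothetical name.

```python
import math


def displayed_reading(true_input: float, resolution: float) -> float:
    """Hypothetical meter that responds only in whole steps of its resolution:
    any part of the input smaller than one step produces no change in reading."""
    steps = math.floor(abs(true_input) / resolution)
    return math.copysign(steps * resolution, true_input)


print(displayed_reading(0.050, 1.0))   # 50 mV on a 1 V-resolution meter -> 0.0
print(displayed_reading(237.6, 1.0))   # -> 237.0
```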

Repeatability

Repeatability is the closeness with which a given input value can be measured repeatedly. It is also called the inherent precision of the measuring equipment. If an input of constant magnitude is applied intermittently, the output reading should be the same each time; otherwise the instrument is said to have poor repeatability. Figure 5 shows the relation between input and output with ± repeatability.


Figure 5: Graph showing repeatability.

Reproducibility

Reproducibility is similar to repeatability: it measures the closeness with which a given input can be measured repeatedly when the input is applied under the same conditions. The difference is that reproducibility is assessed over a period of time. An instrument has good reproducibility when its output reading remains the same while an input of constant magnitude is applied continuously over a period of time; otherwise it is said to have poor reproducibility.

Static error

Error is defined as the difference between the measured value and the true value. Since it is sometimes impossible to know the exact true value, the best measured value is used in its place. When this error is constant, it is known as static error.

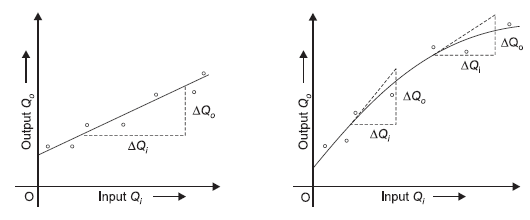

Sensitivity

The ratio of the change in output to the change in input of an instrument is known as its sensitivity. For example, if the input voltage of a voltmeter changes by 2 V and the output reading also changes by 2 V, the sensitivity is

$$S = \frac{\Delta\,\text{output}}{\Delta\,\text{input}} = \frac{2\ \text{V}}{2\ \text{V}} = 1$$

Sensitivity therefore represents how the instrument responds to a change in input. It can be determined graphically as the slope of the calibration curve, i.e. the input-output relationship of the instrument.


Figure 6: Graphical representation of sensitivity

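For a linear instrument the calibration curve is a straight line, and the sensitivity is its constant slope,

$$S = \frac{\Delta q_o}{\Delta q_i}$$

where $\Delta q_o$ is the change in output and $\Delta q_i$ is the corresponding change in input. If the calibration curve is not a straight line, the sensitivity is not constant but varies with the input, as shown in figure 6.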
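In practice the sensitivity is estimated from calibration data. The Python sketch below fits a least-squares straight line, whose slope is the sensitivity, and also computes the slope between successive calibration points, which stays constant only for a linear instrument. The calibration data and function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Least-squares straight line y = slope * x + intercept through calibration points."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sxy / sxx
    return slope, my - slope * mx


def local_sensitivity(x: Sequence[float], y: Sequence[float]) -> List[float]:
    """Change in output / change in input between successive calibration points."""
    return [(y[i + 1] - y[i]) / (x[i + 1] - x[i]) for i in range(len(x) - 1)]


# Hypothetical linear voltmeter: a 2 V input change gives a 2 V output change
vin = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]
vout = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]
s, c = fit_line(vin, vout)
print(s, c)                           # slope (sensitivity) and intercept
print(local_sensitivity(vin, vout))   # constant for a straight calibration curve

# Hypothetical nonlinear sensor with output = input**2 / 10: sensitivity grows with input
xin = [0.0, 2.0, 4.0, 6.0]
yout = [v ** 2 / 10 for v in xin]
print(local_sensitivity(xin, yout))
```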

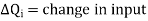

Drift

A gradual shift in the indication of an instrument over a period of time, during which the true value of the quantity does not change, is known as drift. Drift is categorized into three types:

Zero drift

If the whole calibration shifts by the same amount, the drift is known as zero drift, also called calibration drift (figure 7a). The most common causes of zero drift are slippage, undue warming up of electronic tube circuits, and failure to make an initial zero adjustment of the instrument.

Span drift


Drift that increases gradually with the deflection of the pointer is known as span drift, or sensitivity drift; it is not constant over the scale (figure 7b). A combination of zero drift and span drift is shown in figure 7c.

Figure 7: Types of drift

Zonal drift

Drift that occurs only over a particular zone of the instrument's scale is known as zonal drift. Changes in temperature, thermal emfs, mechanical vibration, wear and tear, stray electric and magnetic fields, and high mechanical stresses developed in some parts of instruments and systems are among the causes of zonal drift.
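Zero drift and span drift can be modelled as an offset and a gain change applied to the true value. The Python sketch below assumes this simple linear model; zonal drift, which affects only part of the scale, is not represented, and the parameter names are hypothetical.

```python
def drifted_reading(true_value: float, zero_drift: float = 0.0, span_drift: float = 0.0) -> float:
    """Hypothetical linear drift model.

    zero_drift: constant offset added to every reading (the whole calibration shifts).
    span_drift: fractional change in sensitivity, so the error grows with deflection.
    """
    return (1.0 + span_drift) * true_value + zero_drift


for v in (0.0, 50.0, 100.0):
    print(v,
          drifted_reading(v, zero_drift=1.0),    # zero drift: error of +1 everywhere
          drifted_reading(v, span_drift=0.02),   # span drift: error 0 at zero, about +2 at 100
          drifted_reading(v, 1.0, 0.02))         # combined zero and span drift
```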

Dynamic characteristics

The characteristics which indicate the response of instruments that measure time-varying quantities in which the input varies with time and so does the output are known as dynamic characteristics. The dynamic characteristics of an instrument include measuring lag, fidelity, speed of response, and dynamic error.

Errors in measurement

Various factors cause errors in measurement: some are due to the instruments themselves and some to improper handling of the instruments. Errors can be categorized into different types: gross errors, systematic errors, absolute and relative errors, and random errors.

Gross errors

Gross errors occur mainly because of human mistakes, such as misreading measurements, handling instruments improperly, or making mistakes in recording and calculating measurements. Gross errors cannot be completely eliminated, but they can be controlled to some extent by implementing the following measures:

Systematic errors

These errors occur because of shortcomings in the measuring instrument, such as defective or worn-out parts, or because of environmental effects on the instrument. They are also known as bias. A constant, uniform deviation in the operation of an instrument is known as systematic error. Systematic errors are classified as instrumental, environmental and observational errors.

Instrumental error

Instrumental errors are inherent in measuring instruments because of their mechanical structure. For example, in the d'Arsonval movement they arise from friction in the bearings of the moving components, irregular spring tension, and stretching of the spring or reduction in its tension due to improper handling or overloading of the instrument.

These errors can be avoided by

Environmental error

These errors occur because of the effect of the surrounding environment on the instrument, such as changes in temperature, humidity or pressure, vibration, and electrostatic or magnetic fields. For example, as the surrounding temperature changes, the elasticity of the spring in a moving-coil instrument changes, and so does the reading. These errors can be minimized by

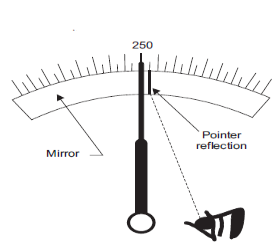

Observational error

These errors occur because of incorrect observations made by the observer. The most common observational errors are parallax error in reading a meter scale and the error of estimation when obtaining a reading from a meter scale. These errors are caused by the habits of individual observers. For example, an observer can cause an error by holding his head too far to the left while reading a needle against a scale, as shown in figure 8.


Figure 8: Parallax error caused by the line of vision not being in line with the pointer.

When two observers read the same measurement, their readings may differ because of their different sensing capabilities, which affects the accuracy of the measurement. For example, the observers may note the reading at different times (one too early and the other at the specified time), causing an error. These errors can be minimized by using digital instruments instead of deflection-type instruments. Parallax errors can be minimized by using highly accurate meters provided with mirrored scales and sharp pointers, so that readings are taken with the eye directly in line with the pointer, at which point the pointer hides its own reflection in the mirror.

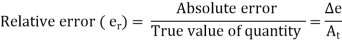

Absolute and relative errors

Absolute error is defined as the amount of physical error in a measurement. It is denoted by $\Delta A$. The unit of absolute error is the same as the unit of the quantity being measured. For example, a 400 Ω resistor may be said to have a possible error of ±30 Ω; here ±30 Ω is the absolute error, expressed in the same unit, the ohm (Ω). Mathematically, absolute error can be expressed as

$$\Delta A = A_m - A_t \qquad (1)$$

where $A_m$ = measured value of the quantity and $A_t$ = true value of the quantity.

Relative error is defined as the ratio of the absolute error to the true value of the quantity being measured. It is denoted by $\varepsilon_r$ and is also known as fractional error. It is usually expressed as a percentage, a ratio, or in parts per thousand or parts per million of the total quantity. Mathematically, relative error can be expressed as

$$\varepsilon_r = \frac{\Delta A}{A_t} = \frac{A_m - A_t}{A_t} \qquad (2)$$

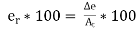

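Percentage relative error ($\%\varepsilon_r$) is the relative error expressed as a percentage:

$$\%\varepsilon_r = \frac{A_m - A_t}{A_t} \times 100 \qquad (3)$$

where $A_m$ is the measured value and $A_t$ the true value.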
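As a quick numerical check of these definitions, the sketch below assumes a hypothetical reading of 430 Ω on a resistor whose true value is 400 Ω, consistent with the ±30 Ω example in the text; the function names are illustrative only.

```python
def absolute_error(measured: float, true: float) -> float:
    """Absolute error = measured value - true value (same unit as the quantity)."""
    return measured - true


def relative_error(measured: float, true: float) -> float:
    """Relative (fractional) error = absolute error / true value (dimensionless)."""
    return absolute_error(measured, true) / true


m, t = 430.0, 400.0                    # hypothetical measured and true values, ohm
print(absolute_error(m, t))            # 30.0 ohm
print(relative_error(m, t))            # 0.075
print(100.0 * relative_error(m, t))    # percentage relative error, about 7.5 %
```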

Random error

Errors that occur because of unknown factors are known as random errors. They occur even after gross and systematic errors have been corrected, or at least accounted for. For example, suppose a voltage is observed with a voltmeter and readings are taken at intervals of 20 minutes. Even if the instrument is operated under ideal environmental conditions and has been accurately calibrated before the measurement, its readings still vary slightly over the period of observation. This variation cannot be corrected by any method of calibration or any other known method of control.

Sources of errors

The sources of error, other than the inability of a piece of hardware to provide a true measurement, are as follows:

Statistical analysis of data allows an analytical determination of the uncertainty of the final test result. For the statistical analysis to be meaningful, a large number of measurements should be taken. Statistical analysis helps to determine the deviation of a measurement from its true value when the cause of a specific error is unpredictable. However, statistical analysis cannot remove a fixed bias that is present in all readings; hence, when performing statistical analysis, systematic errors should be small compared with random errors.

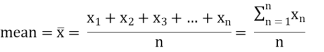

Arithmetic mean

The arithmetic mean is used to find the most probable value of a measured variable from a large number of readings of the same quantity that are not exactly equal to each other. The arithmetic mean of n readings $x_1, x_2, \ldots, x_n$ is given as:

$$\bar{x} = \frac{x_1 + x_2 + \cdots + x_n}{n} = \frac{1}{n}\sum_{i=1}^{n} x_i$$

where

$\bar{x}$ = arithmetic mean

$x_n$ = nth reading taken

n = total number of readings

Deviation from the mean

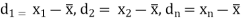

This is the departure of a given reading from the arithmetic mean of the group of readings. If the deviation of the first reading, $x_1$, is called $d_1$, that of the second reading, $x_2$, is called $d_2$, and so on, the deviations from the mean can be expressed as

$$d_1 = x_1 - \bar{x}, \quad d_2 = x_2 - \bar{x}, \quad \ldots, \quad d_n = x_n - \bar{x}$$

The deviation may be positive or negative. The algebraic sum of all the deviations must be zero.
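The mean and the deviations can be computed directly from their definitions. The Python sketch below uses a hypothetical set of ten voltmeter readings and confirms that the deviations sum to zero, up to floating-point rounding; the function names are illustrative.

```python
from typing import List, Sequence


def arithmetic_mean(readings: Sequence[float]) -> float:
    """Most probable value: the sum of the readings divided by their number n."""
    return sum(readings) / len(readings)


def deviations(readings: Sequence[float]) -> List[float]:
    """Deviation d_i = x_i - mean for every reading (positive or negative)."""
    mean = arithmetic_mean(readings)
    return [x - mean for x in readings]


# Hypothetical repeated voltmeter readings, in volts
readings = [101.2, 101.4, 101.7, 101.3, 101.3, 101.2, 101.0, 101.3, 101.5, 101.1]
d = deviations(readings)
print(arithmetic_mean(readings))   # about 101.3 V
print(sum(d))                      # zero apart from floating-point rounding
```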

Average deviations

The average deviation is an indication of the precision of the instrument used in measurement. Average deviation is defined as the sum of the absolute values of the deviation divided by the number of readings. The absolute value of the deviation is the value without respect to the sign. Highly precise instruments yield a low average deviation between readings. Average deviation may be expressed as

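$$D = \frac{|d_1| + |d_2| + \cdots + |d_n|}{n} = \frac{1}{n}\sum_{i=1}^{n} |d_i|$$

where D = average deviation and n = total number of readings.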

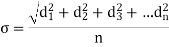

Standard deviation

The standard deviation of an infinite number of data is the square root of the sum of all the individual deviations squared, divided by the number of readings. It may be expressed as

$$\sigma = \sqrt{\frac{d_1^2 + d_2^2 + \cdots + d_n^2}{n}} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} d_i^2}$$

where σ = standard deviation, $d_i$ = deviation of the ith reading from the arithmetic mean, and n = number of readings.

The standard deviation is also known as the root-mean-square deviation and is the most important factor in the statistical analysis of measurement data. Reducing this quantity effectively means improving the measurement. For a small number of readings (n < 30), the denominator is frequently taken as (n − 1) to obtain a more accurate value of the standard deviation:

$$s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n} d_i^2}$$
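Both spread measures can be computed in a few lines. The Python sketch below uses the same kind of hypothetical voltmeter readings, implements the average deviation and the standard deviation (dividing by n, or by n − 1 for a small number of readings), and cross-checks the latter against `statistics.pstdev` and `statistics.stdev` from the Python standard library.

```python
import math
import statistics
from typing import Sequence


def average_deviation(readings: Sequence[float]) -> float:
    """Sum of |x_i - mean| divided by the number of readings n."""
    mean = sum(readings) / len(readings)
    return sum(abs(x - mean) for x in readings) / len(readings)


def standard_deviation(readings: Sequence[float], small_sample: bool = False) -> float:
    """Root-mean-square deviation from the mean.

    Divides by n by default; with small_sample=True divides by (n - 1),
    the form recommended in the text when n < 30.
    """
    n = len(readings)
    mean = sum(readings) / n
    ss = sum((x - mean) ** 2 for x in readings)
    return math.sqrt(ss / (n - 1 if small_sample else n))


# Hypothetical repeated voltmeter readings, in volts
readings = [101.2, 101.4, 101.7, 101.3, 101.3, 101.2, 101.0, 101.3, 101.5, 101.1]
print(average_deviation(readings))                      # about 0.14 V
print(standard_deviation(readings))                     # divide by n, about 0.19 V
print(standard_deviation(readings, small_sample=True))  # divide by n - 1, about 0.20 V

# Cross-check against the standard library (population and sample forms)
print(statistics.pstdev(readings), statistics.stdev(readings))
```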

Limiting Errors

Most manufacturers of measuring instruments specify accuracy within a certain percentage of the full-scale reading. For example, the manufacturer of a voltmeter may specify the instrument to be accurate within ±2% of full-scale deflection. This specification is called the limiting error. It means that a full-scale reading is guaranteed to be within ±2% of a perfectly accurate reading. For a reading less than full scale, however, the limiting error expressed as a percentage of the reading increases, because the same absolute error band applies to a smaller reading.
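Because the limiting error is a fixed band in measured units, its size relative to the reading grows as the reading falls. The Python sketch below assumes a hypothetical 100 V voltmeter guaranteed to ±2% of full scale; the function name is illustrative.

```python
def limiting_error_percent_of_reading(fs_percent: float, full_scale: float, reading: float) -> float:
    """Re-express a limiting error quoted as % of full scale as a % of the actual reading."""
    band = fs_percent / 100.0 * full_scale   # fixed error band in measured units (V here)
    return 100.0 * band / reading


# Hypothetical 100 V voltmeter, +/-2 % of full scale -> +/-2 V anywhere on the scale
for v in (100.0, 50.0, 20.0):
    pct = limiting_error_percent_of_reading(2.0, 100.0, v)
    print(f"{v:5.1f} V reading: limiting error +/-{pct:.1f} % of reading")
```

At half scale the relative limiting error doubles, and at one-fifth of full scale it is five times the quoted figure, which is why readings are best taken near the upper part of the scale.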
