6.1.1 Fundamental Concept

Virtual reality mimics the real world beyond the flat monitor, giving the user a 3D visual experience.

The virtual experience can be similar to the real world or entirely different from it.

It is used in various fields such as medicine, entertainment, education, design, and training.

The term is also often used as a marketing buzzword for compelling interactive video games, 3D movies, and television programs.

6.1.2 Three I’s Of Virtual Reality and Classic Components Of VR Systems

Three I’s of virtual reality

Virtual reality is based on the following three I’s:

Immersion

Immersion makes the audience feel that the virtual environment is real.

In visualization and branded projects, immersion offers an exciting way to take people into a new world.

Immersion gives the real-time perception of being physically present in a virtual environment.

It also helps the user interact with the environment without disruption.

This translates directly into increased comfort.

Immersion also builds sympathy towards the brand that provides such a realistic experience.

Immersion is produced by computer graphics simulation.

It makes the virtual world realistic and interactive.

It is the part of the screen where the action takes place.

Interaction

Interaction is the action performed in response to the user’s input.

Interaction is provided through inputs such as gestures, verbal commands, and head movement tracking.

For example, a product in a virtual clothing store can be handled and manipulated using controllers or by gazing at certain points in the created environment.

Interaction takes the experience to the next level.

It moves the user from passive observation to active participation.

The device tracks the user’s interactions in the virtual world, from which the tool understands the user’s needs and can influence the user’s buying decisions.

With interaction, the experience is synthesized in response to the user rather than static.

Imagination

In virtual reality, imagination is used to perceive non-existent things and to create the illusion of them being real.

Virtual reality is a medium for telling a story and experiencing it.

It also helps in marketing by showing a range of possibilities.

The human imagination is engaged in the virtual environment.

It creates the virtual world in the human mind.

As imagination is engaged, problem-solving ability in the virtual environment also increases.


Summary:

The three I’s of virtual reality are as follows:

- Interaction

- Imagination

- Immersion

6.1.3 Classic Components of VR Systems

This section gives an overview of a VR system, from hardware to software to human perception, to explain how the entire system works.

The following components are used in a VR system.

- Hardware

- VR device

- Software

- Audio

- Human perception

Hardware

VR hardware responds to human motion and produces stimuli that override the user’s senses.

This is done with sensors that track the user’s motions, such as button presses, controller movements, and movements of the eyes and other body parts.

The physical surrounding world must also be considered, because the engineered hardware and software alone do not constitute the complete VR system.

The user and the user’s interaction with the hardware are equally important.

A VR sensor converts the energy it receives into a signal for an electrical circuit. Hence it acts as a transducer.

A sensor has a receptor that collects the energy for conversion; the user’s eyes and ears have sense receptors that serve the same purpose.

The user moves through the physical world, which has its own configuration space that is transformed or configured correspondingly.

VR Device

A VR device is a hardware product that makes VR technology possible.

Basically, inputs are received from the user and the surroundings, and an appropriate view of the world is rendered to the display for the VR experience.

Some key components of a VR system are given below:

- Personal computer/console/smartphone

A PC, console, or smartphone is required to create content and to supply significant computing power.

The computer collects the inputs and produces the outputs sequentially.

Therefore, PCs are important for the VR system.

The VR content is what the user views and perceives, so it is as important as the other hardware.

- Input device

Input devices help give the user a sense of immersion.

They determine how the user communicates with the computer.

Input devices help the user interact with the VR environment in a way that is as intuitive and natural as possible.

However, current input devices are not yet advanced enough to fully support this.

The following are some input devices used in VR systems.

- Joystick

- Force balls/tracking balls

- Controller wands

- Data gloves

- Track pad

- On-device control buttons

- Motion tracker

- Bodysuits

- Treadmills

- Motion platforms (Virtuix Omni)

- Output device

An output device is a device that stimulates the sense organs.

It creates an immersive feeling in the VR environment.

It is used to present the VR world to the user.

It uses visual, auditory, or haptic displays to present the VR world.

Output devices are currently also underdeveloped, because state-of-the-art VR systems cannot yet stimulate the human senses perfectly.

Besides visual displays, some output devices present audio or haptic information.

Software

Besides the coordination of input and output devices, the underlying software is equally important.

It manages the I/O devices, analyzes incoming data, and generates proper feedback.

Because VR applications are complex and time-critical, software is needed to manage them.

Input must be handled in a timely manner and sent to the output display to maintain the feeling of immersion in the VR system.

Developers may start with a Software Development Kit (SDK) from a VR headset vendor and build their own Virtual World Generator (VWG) from scratch.

The SDK includes the basic drivers, an interface to access tracking data, and calls to graphical rendering libraries.

Alternatively, a ready-made VWG can be used for particular VR experiences, with the option of adding high-level scripts.

Audio

Audio is equally important because it engages the user’s senses and helps achieve immersion.

Technically, it may not be as complex as the visual components.

Most VR headsets give users the option of using their own headphones in conjunction with the headset.

Audio in VR works as positional or multi-speaker audio, which gives the feeling of immersion in a 3D world.

Positional audio is a way of seeing with the ears; it is used in VR to draw the user’s attention and to convey information that cannot be shown visually.
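
As a minimal sketch of positional audio, the following Python example computes left and right channel gains for a sound source placed around the listener. The function name `stereo_gains`, the coordinate convention (listener at the origin, +x to the right, +z straight ahead), and the choice of constant-power panning with inverse-distance attenuation are assumptions made for illustration. Real VR audio engines typically use more elaborate models, such as head-related transfer functions.

```python
import math

def stereo_gains(source_x, source_z):
    """Return (left_gain, right_gain) for a source on the horizontal plane.

    Assumed convention: listener at the origin, +x to the right, +z ahead.
    """
    distance = math.hypot(source_x, source_z)
    # Azimuth: 0 means straight ahead, +pi/2 means fully to the right.
    azimuth = math.atan2(source_x, source_z)
    pan = math.sin(azimuth)                # -1 (left) .. +1 (right)
    angle = (pan + 1.0) * math.pi / 4.0    # 0 .. pi/2
    # Inverse-distance attenuation, clamped so nearby sources are not amplified.
    attenuation = 1.0 / max(distance, 1.0)
    # Constant-power panning: left^2 + right^2 stays constant for a given distance.
    return math.cos(angle) * attenuation, math.sin(angle) * attenuation

left, right = stereo_gains(1.0, 0.0)   # a source one metre to the right
```

A source directly to the right puts almost all of its energy in the right channel, which is what lets the listener locate it without seeing it.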

Human Perception

To design for human perception, one needs to understand the physiology of the human body.

Understanding optical illusions is important for achieving maximum human perception without side effects.

Each human sense has its own stimulus, receptor, and sense organ.

In VR, fooling the human senses is important for creating immersion and simulating the real world for the user.

The human brain combines touch, hearing, and the other senses with vision, which provides most of the information.

In VR, synchronizing all stimuli with the user’s actions is essential for the system to function properly.

Summary:

The following components are used in a VR system.

- Hardware

- VR device

- Software

- Audio

- Human perception

6.1.4 Applications of VR Systems

Virtual reality is described as a computer-generated environment that the user can explore and interact with.

The user is immersed in that world, and the brain is tricked into believing that what is seen in the virtual world is real.

The following are some fields in which VR is applied:

- Automotive industry

- Healthcare

- Retail

- Tourism

- Real estate

- Architecture

- Gambling

- Learning and development

- Recruitment

- Entertainment

- Education

- Sports

- Arts and design

6.2.1 Input: 3D Position Trackers and Their Types

Positional tracking detects the precise position of the head-mounted display, controllers, and other objects or body parts within space.

Because the purpose of VR is to emulate the perception of reality, positional tracking must be accurate and precise so that the illusion of three-dimensional space is not broken.

Several methods of tracking the position and orientation of the display have been developed to achieve this.

Sensors record signals from transmitters on or near the tracked objects and send the data to the computer, which maintains an approximation of their physical locations.

The physical location can be identified and defined using one or more coordinate systems, such as the Cartesian rectilinear system, the spherical polar system, and the cylindrical system.
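
The relationship between these coordinate systems can be sketched in a few lines of Python. The function names and the angle convention (polar angle `theta` measured from the +z axis, azimuth `phi` measured in the x-y plane) are assumptions chosen for this example; tracking systems may use other conventions.

```python
import math

def cartesian_to_spherical(x, y, z):
    """Return (r, theta, phi): radius, polar angle from +z, azimuth in the x-y plane."""
    r = math.sqrt(x * x + y * y + z * z)
    theta = math.acos(z / r) if r > 0 else 0.0
    phi = math.atan2(y, x)
    return r, theta, phi

def spherical_to_cartesian(r, theta, phi):
    """Inverse of cartesian_to_spherical."""
    x = r * math.sin(theta) * math.cos(phi)
    y = r * math.sin(theta) * math.sin(phi)
    z = r * math.cos(theta)
    return x, y, z

def cartesian_to_cylindrical(x, y, z):
    """Return (rho, phi, z): distance from the z axis, azimuth, height."""
    return math.hypot(x, y), math.atan2(y, x), z

tracker = (1.0, 1.0, 1.0)                       # a tracked point in metres
r, theta, phi = cartesian_to_spherical(*tracker)
round_trip = spherical_to_cartesian(r, theta, phi)
```

Converting to spherical coordinates and back recovers the original point up to floating-point error, which is a convenient sanity check when mixing conventions.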

Many interfaces have been designed to monitor and control movement.

The positional tracking system works to provide a seamless user experience.

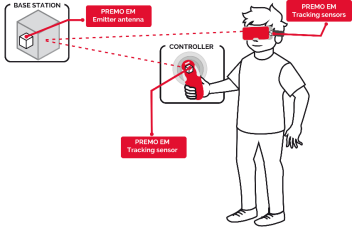


Fig. no. 6.2.1

The following are the types of 3D positional tracking used in VR.

Wireless Tracking

A wireless tracking system is similar to GPS but works both indoors and outdoors.

It uses a set of anchors placed around the perimeter of the tracking space and one or more tags that are tracked.

It is also known as indoor GPS.
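
One common way to locate a tag from anchors is trilateration. The sketch below assumes that each anchor can measure its distance to the tag (for example from signal travel time) and that the problem is restricted to a 2D floor plan with exactly three anchors; the function name `trilaterate_2d` is hypothetical. It subtracts the circle equations pairwise to obtain a 2x2 linear system and solves it with Cramer's rule.

```python
import math

def trilaterate_2d(anchors, distances):
    """Estimate a tag's (x, y) from three anchor positions and measured distances."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = distances
    # Subtracting circle 1 from circles 2 and 3 removes the quadratic terms.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 - x1**2 + x2**2 - y1**2 + y2**2
    b2 = d1**2 - d3**2 - x1**2 + x3**2 - y1**2 + y3**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors must not be collinear")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y

anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]   # anchor positions in metres
true_tag = (1.0, 2.0)
measured = [math.dist(true_tag, a) for a in anchors]
estimate = trilaterate_2d(anchors, measured)
```

With noise-free distances the estimate matches the true tag position. Real systems usually have noisy measurements and more anchors, and then solve the same equations in a least-squares sense.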

Optical Tracking

Optical tracking uses computer vision algorithms.

Cameras placed on or around the headset are used to track position and orientation.

It works on the same principle as stereoscopic human vision.
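
The stereoscopic principle can be illustrated with the standard depth-from-disparity relation for two parallel cameras, Z = f · B / d, where f is the focal length in pixels, B is the distance between the cameras, and d is the horizontal shift of a feature between the two images. The function name and the numeric values below are assumptions for illustration only.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Return the distance (in metres) to a point seen by two parallel cameras."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px

# Hypothetical rig: 800 px focal length, cameras 10 cm apart.
near_marker = depth_from_disparity(800.0, 0.10, 40.0)
far_marker = depth_from_disparity(800.0, 0.10, 10.0)
```

A larger disparity means the marker is closer, which is the same cue the two human eyes provide.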

Inertial Tracking

Inertial tracking systems use accelerometers and gyroscopes.

The accelerometer measures linear acceleration.

The gyroscope measures angular velocity.

Modern inertial tracking systems use MEMS technology.

It can track fast movements relative to other sensors.

It can provide updates at high rates.
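
To turn these measurements into a pose, the readings are integrated over time. The following sketch is deliberately simplified to a single axis: it integrates angular velocity once to get an angle, and linear acceleration twice to get a position. The function name `integrate_imu` and the sample values are assumptions; a real tracker works in 3D, removes gravity, and corrects the drift that accumulates from integration.

```python
def integrate_imu(samples, dt):
    """Integrate (acceleration, angular_velocity) samples taken every dt seconds.

    Returns (position, velocity, yaw) along a single axis, starting from rest.
    """
    position = velocity = yaw = 0.0
    for accel, gyro in samples:
        yaw += gyro * dt           # angular velocity -> angle
        velocity += accel * dt     # acceleration -> velocity
        position += velocity * dt  # velocity -> position
    return position, velocity, yaw

# One second of data at 100 Hz: constant 1 m/s^2 and 0.5 rad/s.
samples = [(1.0, 0.5)] * 100
position, velocity, yaw = integrate_imu(samples, dt=0.01)
```

Because every sample is added to the running totals, any sensor bias is integrated as well, which is why inertial tracking is usually combined with another tracking method.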

Acoustic Tracking

Acoustic tracking systems use the echolocation technique.

It is similar to the way animals that use echolocation naturally locate objects.

This technique identifies the position of an object or device by echolocation.

It gives accurate measurements of coordinates and angles.

It is easy to produce and cheap to buy.

It is not affected by electromagnetic interference.
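
The distance measurement behind echolocation is a time-of-flight calculation. The sketch below assumes a speed of sound of about 343 m/s (air at roughly 20 °C) and a pulse that travels to the object and back; the names are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees Celsius

def distance_from_echo(round_trip_s):
    """Return the one-way distance for an echo that took round_trip_s seconds."""
    if round_trip_s < 0:
        raise ValueError("time of flight cannot be negative")
    # The pulse covers the distance twice: out to the object and back.
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0

distance = distance_from_echo(0.010)  # a 10 ms round trip
```

Distances measured this way from several emitters or receivers can then be combined to obtain coordinates and angles.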

Magnetic Tracking

It works on a principle similar to that of a theremin.

Electromagnetic sensors measure the intensity of inhomogeneous magnetic fields.

It is suitable for fully immersive virtual reality displays.

The user needs to stay close to the base emitter.

It is prone to errors and jitter and requires frequent calibration.

Summary:

The following are the types of 3D positional tracking used in VR.

- Wireless Tracking

- Optical Tracking

- Inertial Tracking

- Acoustic Tracking

- Magnetic Tracking

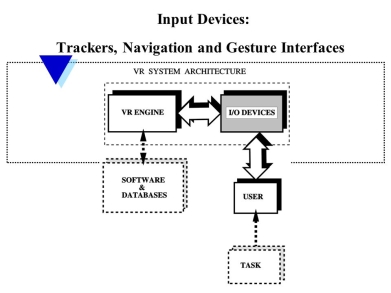

6.2.2 Navigation And Manipulation Interfaces

Navigation is moving from one place to another within the environment.

Navigation combines travel with wayfinding and travel without wayfinding.

Manipulation interfaces allow relative position control of virtual objects.

Direct manipulation uses shadows, perspective, occlusion, and other 3D techniques.

A good interface minimizes the number of navigation steps users need to accomplish their tasks.

It avoids unnecessary visual clutter, distraction, contrast shifts, and reflections.

Navigation and manipulation interfaces enable the user to construct visual groups to support spatial recall.

The following figure shows the input devices used in a VR system.


Fig. no. 6.2.2

6.2.3 Gesture Interfaces

Users usually interact with a computer using the keyboard and mouse.

Conventional keyboard and mouse interfaces decrease the level of immersion, because the manipulation method does not resemble the actual action in reality.

A gesture interface is used for navigation in 3D space.

It is suitable for HMD-based virtual environments.

The Kinect depth camera can be used to capture and recognize gestures in real time.

A gesture interface is preferable to the conventional keyboard and mouse for obtaining a high level of immersion.

6.2.4 Graphics Displays –HMD And CAVE, Sound Displays, Haptic Feedback

HMD And CAVE

HMD

An HMD requires distortion and color correction.

An HMD is completely immersive.

An HMD offers a field of view of about 100 degrees.

The sense of presence in an HMD is disembodied and isolating.

Only a single user can use an HMD at a time.

In an HMD, movement is limited by isolation, the tether, and the small tracked volume.

CAVE

A CAVE has perfect pixels.

It is immersive, but not as immersive as an HMD.

It has a field of view of about 170 degrees, wider than that of an HMD.

The sense of presence is excellent, and users can see their own bodies.

The CAVE is completely wireless and offers precise tracking.

The user can move around freely within the tracked volume.

A CAVE supports multiple viewers but only one tracked user.

It uses lightweight, wireless glasses.

Sound Displays

Sound displays play a role in interactivity, immersion, and perceived image quality.

Sound is equally important in movies and video games.

Sound dimensions include mono, stereo, and occlusion.

Dedicated sound display hardware, such as the Convolvotron and Audigy, offloads the computational load.

Haptic Feedback

Haptic feedback is the use of touch to communicate with users.

Examples include a mobile phone vibrating on touch and the rumble in a game controller.

Devices communicate with us predominantly through sight and hearing, although humans have many senses with which to communicate.

Haptic feedback is used to stimulate the sense of touch.

Just as a human can touch the computer, the computer can touch the user through haptic feedback.

It is a mode of communication rather than a technology or an application.

Summary:

The following displays and feedback interfaces were covered.

- HMD

- CAVE

- Sound Displays

- Haptic Feedback

6.3.1 Graphics rendering pipeline

The graphics rendering pipeline describes the steps performed to render a 3D scene onto a 2D screen.

It is also known as the graphics pipeline or rendering pipeline.

Once a 3D model has been created for a video game or any other 3D animation, the graphics pipeline is the process of turning that 3D model into what the computer displays.

The steps performed in the graphics pipeline depend on the software, the hardware, and the display characteristics used.

There is no universal graphics pipeline suitable for all cases.

Application programming interfaces (APIs) such as Direct3D and OpenGL are used to unify similar steps and to control the graphics pipeline of a given hardware accelerator.

This model is usually used in real-time rendering.
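
The core idea of turning a 3D point into a 2D screen position can be sketched as three steps: a view transform, a perspective projection, and a viewport transform. The function `project_vertex`, the camera placement, and the default parameters below are assumptions for illustration. Real pipelines use 4x4 matrices and add further stages such as clipping, rasterization, and shading.

```python
def project_vertex(vertex, camera_z=5.0, focal_length=1.0, width=800, height=600):
    """Map a 3D world-space vertex to 2D pixel coordinates, or None if not visible."""
    x, y, z = vertex
    # View transform: the assumed camera sits at (0, 0, camera_z) looking down -z.
    depth = camera_z - z
    if depth <= 0:
        return None  # the vertex is behind the camera
    # Perspective projection: distant points move towards the image centre.
    x_ndc = focal_length * x / depth
    y_ndc = focal_length * y / depth
    # Viewport transform: map [-1, 1] to pixels, with the y axis pointing down.
    pixel_x = (x_ndc + 1.0) * 0.5 * width
    pixel_y = (1.0 - y_ndc) * 0.5 * height
    return pixel_x, pixel_y

center = project_vertex((0.0, 0.0, 0.0))
corner = project_vertex((1.0, 1.0, 0.0), camera_z=2.0)
```

A vertex on the camera axis lands in the middle of the screen, and the same vertex appears further from the centre as the camera moves closer.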

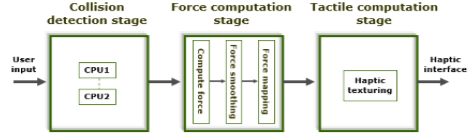

6.3.2 Haptic rendering pipeline in virtual reality

Haptic rendering is the process of computing forces and applying them to the user through a force-feedback device.

The difference between a haptic device and input interfaces such as the mouse and keyboard is that the haptic device returns force feedback from the virtual environment to the user.

Haptic rendering enables the user to touch, feel, and manipulate virtual objects.

It also enhances the user’s virtual experience.

The following figure shows the haptic rendering pipeline, which contains these stages:

- Collision detection stage

- Force computation stage

- Tactile computation stage
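
The first two stages can be sketched with a penalty-based approach, a common simplification: detect whether the haptic probe has penetrated a virtual object, then push back with a spring force proportional to the penetration depth. The function name `haptic_force`, the spherical object, and the stiffness value are assumptions for illustration, and the tactile computation stage is not modeled here.

```python
import math

def haptic_force(probe, center, radius, stiffness):
    """Return the (fx, fy, fz) feedback force for a point probe touching a sphere."""
    offset = [p - c for p, c in zip(probe, center)]
    distance = math.sqrt(sum(o * o for o in offset))
    # Collision detection stage: the probe is inside if it is closer than the radius.
    penetration = radius - distance
    if penetration <= 0 or distance == 0:
        return (0.0, 0.0, 0.0)
    # Force computation stage: spring force along the outward surface normal.
    normal = [o / distance for o in offset]
    return tuple(stiffness * penetration * n for n in normal)

# Probe 10 cm inside a unit sphere, with an assumed stiffness of 500 N/m.
force = haptic_force((0.0, 0.0, 0.9), (0.0, 0.0, 0.0), 1.0, 500.0)
no_contact = haptic_force((0.0, 0.0, 1.5), (0.0, 0.0, 0.0), 1.0, 500.0)
```

The deeper the probe sinks into the object, the stronger the force that pushes it back out, which the user feels as a solid surface.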


Summary:

The haptic rendering pipeline contains the following stages.

- Collision detection stage

- Force computation stage

- Tactile computation stage

The following are some modeling concepts used in the haptic rendering pipeline.

6.3.3 Geometric Modeling

Geometric modeling can be based on CAD files.

It uses software such as AutoCAD.

The files need to be converted to formats compatible with the VR toolkit.

This approach makes use of preexisting models in manufacturing applications.

Each part is stored as a separate file.

6.3.4 Kinematic Modeling

Kinematic modeling uses homogeneous transformation matrices.

A homogeneous transformation matrix relates the object system of coordinates to the world system of coordinates.

If the virtual object moves, the transformation matrix becomes a function of time.

If the aligned virtual object translates, all its vertices are translated.

If the virtual object translates back to its initial position, all its vertices translate by an equal but negative amount.

Kinematic modeling allows models to be partitioned into a hierarchy and become dynamic.
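
These statements can be checked with a small example. The sketch below builds a 4x4 homogeneous translation matrix, makes it a function of time for an object moving at constant velocity, and applies it to a vertex. The helper names `translation`, `apply`, and `object_to_world`, as well as the velocity value, are assumptions for illustration.

```python
def translation(tx, ty, tz):
    """Return a 4x4 homogeneous translation matrix as nested lists."""
    return [
        [1.0, 0.0, 0.0, tx],
        [0.0, 1.0, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def apply(matrix, vertex):
    """Transform a 3D vertex by a 4x4 homogeneous matrix."""
    v = (vertex[0], vertex[1], vertex[2], 1.0)
    out = [sum(matrix[row][col] * v[col] for col in range(4)) for row in range(4)]
    return (out[0], out[1], out[2])

def object_to_world(t, velocity=(1.0, 0.0, 0.0)):
    """Object-to-world matrix at time t for an object moving at constant velocity."""
    return translation(velocity[0] * t, velocity[1] * t, velocity[2] * t)

vertex = (0.0, 1.0, 0.0)
moved = apply(object_to_world(2.0), vertex)            # position after 2 seconds
restored = apply(translation(-2.0, 0.0, 0.0), moved)   # equal but negative amount
```

Applying the negative translation returns the vertex to its initial position, as described above. In a hierarchy, the matrix of a child part would be multiplied by the matrix of its parent.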

6.3.5 Physical Modeling

Physical modeling uses bounding box collision detection for fast response.

There are two types of bounding boxes: fixed size and variable size.

Fixed-size boxes are computationally faster but less precise.

For more precise detection, a two-stage approach can be used: a fast bounding box test followed by a slower exact collision detection stage.
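
A two-stage test can be sketched as follows. For simplicity the objects are assumed to be spheres, so that the exact stage is a short distance check; with real meshes the exact stage would compare polygons and be far more expensive. All function names are hypothetical.

```python
def sphere_aabb(center, radius):
    """Return the (min_corner, max_corner) of the box enclosing a sphere."""
    return (tuple(c - radius for c in center), tuple(c + radius for c in center))

def aabb_overlap(box_a, box_b):
    """Stage 1: fast approximate test on axis-aligned bounding boxes."""
    (a_min, a_max), (b_min, b_max) = box_a, box_b
    return all(a_min[i] <= b_max[i] and b_min[i] <= a_max[i] for i in range(3))

def spheres_collide(c1, r1, c2, r2):
    """Stage 2: exact test for two spheres."""
    dist_sq = sum((a - b) ** 2 for a, b in zip(c1, c2))
    return dist_sq <= (r1 + r2) ** 2

def two_stage_collision(c1, r1, c2, r2):
    # Only run the exact test when the cheap box test reports a possible hit.
    if not aabb_overlap(sphere_aabb(c1, r1), sphere_aabb(c2, r2)):
        return False
    return spheres_collide(c1, r1, c2, r2)

origin = (0.0, 0.0, 0.0)
boxes_touch = aabb_overlap(sphere_aabb(origin, 1.0), sphere_aabb((1.5, 1.5, 0.0), 1.0))
false_alarm = two_stage_collision(origin, 1.0, (1.5, 1.5, 0.0), 1.0)
real_hit = two_stage_collision(origin, 1.0, (1.0, 0.0, 0.0), 1.0)
```

In the diagonal case the boxes overlap although the spheres do not, which shows why the bounding box stage alone is less precise and why the exact stage is needed to confirm a collision.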

6.3.6 Behavior Modeling

In behavior modeling, the level of autonomy (LOA) of the simulation is a function of the autonomy of its components.

There are three levels of autonomy: guided, programmed, and autonomous.

A behavior model gives virtual objects behavior that is independent of the user’s input.

This is needed in large virtual environments, where it is impossible for the user to provide all the required inputs.
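
One possible reading of the three levels is sketched below for an object moving along a line. It assumes that a guided object follows the user’s input, a programmed object follows a predefined script, and an autonomous object chooses its own step towards a goal. These interpretations, the enum, and the function name are assumptions for illustration rather than definitions from the text.

```python
from enum import Enum

class LevelOfAutonomy(Enum):
    GUIDED = "guided"
    PROGRAMMED = "programmed"
    AUTONOMOUS = "autonomous"

def next_position(level, position, user_input=0.0, script_step=0.0, goal=0.0):
    """Return the object's next 1D position for the given level of autonomy."""
    if level is LevelOfAutonomy.GUIDED:
        return position + user_input       # driven directly by the user
    if level is LevelOfAutonomy.PROGRAMMED:
        return position + script_step      # driven by a predefined script
    # Autonomous: move towards the goal by at most one unit per update.
    step = max(-1.0, min(1.0, goal - position))
    return position + step

guided = next_position(LevelOfAutonomy.GUIDED, 0.0, user_input=2.0)
scripted = next_position(LevelOfAutonomy.PROGRAMMED, 0.0, script_step=0.5)
autonomous = next_position(LevelOfAutonomy.AUTONOMOUS, 0.0, goal=5.0)
```

Only the guided case needs input from the user on every update, which is why higher levels of autonomy scale better to large environments.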

Summary:

The haptic rendering pipeline involves the following kinds of modeling.

- Geometric modeling

- Kinematic modeling

- Physical modeling

- Behavior modeling
