Unit - 4

Database Design & Query Processing

4.1.1 Purpose of Normalization

Normalization is the method of structuring and maintaining the data relationship to eliminate duplication in the relational table and prevent the database's unwanted anomaly properties, such as insertion, update and delete. This helps to break large database tables into smaller tables and to create a link between them. Redundant data can be removed and table fields can be easily added, manipulated or deleted.

For the relational table, a normalization sets out rules as to whether it follows the normal form. A normal form is a method that evaluates each relationship against specified criteria and eliminates from a relationship the multivalued, joined, functional and trivial dependence. If any data is modified, removed or added, database tables do not create any issues and help improve the consistency and performance of the relational table.

Objectives of Normalization

● It is used to delete redundant information and anomalies in the database from the relational table.

● Normalization improves by analyzing new data types used in the table to reduce consistency and complexity.

● Dividing the broad database table into smaller tables and connecting them using a relationship is helpful.

● This avoids duplicating data into a table or not repeating classes.

● It decreases the probability of anomalies in a database occurring.

Key takeaway:

- Normalization is a method of arranging the database data to prevent data duplication, anomaly of addition, anomaly of update & anomaly of deletion.

- Standard types are used in database tables to remove or decrease redundancies.

4.1.2 Data Redundancy and Update Anomalies

Data redundancy

❏ Data redundancy is a situation that is generated inside a database or data storage technology in which two different locations hold the same piece of data.

❏ Within a single database, this may mean two different areas, or two different spots in various software environments or platforms. It effectively constitutes data redundancy if data is replicated.

❏ Data redundancy can occur by mistake, but it is often done purposely for the purposes of backup and recovery.

❏ There are various classifications under the general concept of data redundancy, depending on what is considered acceptable in database management and what is considered unnecessary or inefficient. Wasteful data duplication normally happens when, due to inefficient coding or process sophistication, a given piece of data does not need to be repeated but ends up being duplicated.

For instance, when inconsistent duplicates of the same entry are found on the same database, wasteful data redundancy may occur. Due to inefficient coding or overcomplicated data storage processes, accidental data duplication may happen and pose a problem in terms of efficiency and cost.

Anomalies

A relation that has redundant data may have update anomalies. These anomalies are

Classified as: -

1. Insertion Anomalies.

2. Deletion Anomalies.

3. Modification Anomalies.

Insertion Anomalies

These can be differentiated into two types: -

To insert new employee tuples in Emp_dept, we must enter values for dept or NULL if the employee does not work for the department.

Case I: -

To insert new employee record into Emp_Dept:

Ename=Kunal, Ssn=777, bdate=6/6/93, Address=Pune, Dno=21.

Now, Dno=21 is already existing in dept relation so, while inserting this into

Emp_dept

Dname must be IT.

Dmgr_Ssn must be 123.

If these values are incorrect, these will be consistency problem.

Case II: -

This problem will not occur if we consider employee and department relationship. Because for employee we will enter only dno=21, Remaining information of department will be recorded in dept relation only once. So, there will be no problem of consistency

To insert new department tuple in Emp_Dept relation this has

No employees as yet:

Dno=51, Dname=Civil, Dmgr_Ssn=321

Case I: -

To insert this into Emp_Dept, we’ve to set:

Ename=NULL

Ssn=NULL

Address=NULL

Dno=51

Dname=Civil

Dmgr_Ssn=321

But we cannot set Ssn=NULL as it is primary key of relation.

Case II: -

This problem will not occur if we consider Employee and department relation. Because dept is entered in department relation whether /not any employee works for it.

Deletion Anomalies

Case I: -

Consider, first row of Emp_Dept, where Dno=21. Digvijay is the only employee working for Dno=21. So, if we delete this line, the information regarding Dno=21 will get lost.

Case II: -

This is not the case for relation employees Department. If we delete record from

Employee (Dno=21) Digvijay, the dept info will remain in table department separately.

Modification Anomalies

In Emp_Dept, if we change the value of one of the attributes of a particular dept_say

Manager for dept_no.31 i.e. we change Dmgr_Ssn(456 to 765), then we must update the tuples of all employees who work in that dept, otherwise database will become

Inconsistent.

A database should be designed so that it has no insertion, deletion or modification

Anomalies. A database without any anomaly will work correctly and it will be consistent.

Key takeaway:

- Data redundancy can occur by mistake, but it is often done purposely for the purposes of backup and recovery.

- A relation that has redundant data may have update anomalies.

- A database without any anomaly will work correctly and it will be consistent.

4.1.3 Functional Dependencies

Functional Dependency (FD) is a constraint in a database management system system that specifies the relationship of one attribute to another attribute (DBMS). Functional Dependency helps to maintain the database's data quality. Finding the difference between good and poor database design plays a critical role.

The arrow "→" signifies a functional dependence. X→ Y is defined by the functional dependence of X on Y.

Rules of functional dependencies

The three most important rules for Database Functional Dependence are below:

● Reflexive law—. X holds a value of Y if X is a set of attributes and Y is a subset of X.

● Augmentation rule: If x -> y holds, and c is set as an attribute, then ac -> bc holds as well. That is to add attributes that do not modify the fundamental dependencies.

● Transitivity law: If x -> y holds and y -> z holds, this rule is very similar to the transitive rule in algebra, then x -> z also holds. X -> y is referred to as functionally evaluating y.

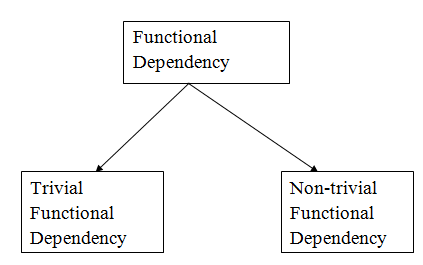

Types of functional dependency

Fig 1: Types of functional dependency

1. Trivial functional dependency

● A → B has trivial functional dependency if B is a subset of A.

● The following dependencies are also trivial like: A → A, B → B

Example

Consider a table with two columns Employee_Id and Employee_Name.

{Employee_id, Employee_Name} → Employee_Id is a trivial functional dependency as

Employee_Id is a subset of {Employee_Id, Employee_Name}.

Also, Employee_Id → Employee_Id and Employee_Name → Employee_Name are trivial dependencies too.

2. Non - trivial functional dependencies

● A → B has a non-trivial functional dependency if B is not a subset of A.

● When A intersection B is NULL, then A → B is called as complete non-trivial.

Example:

ID → Name,

Name → DOB

Key takeaway:

- The arrow "→" signifies a functional dependence.

- Functional Dependency helps to maintain the database's data quality.

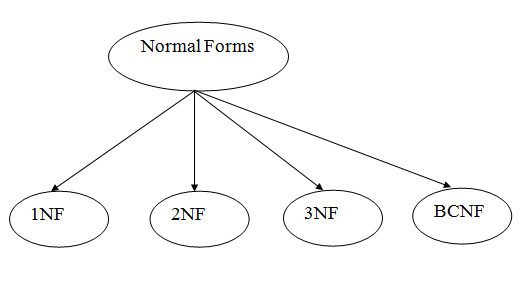

4.1.4 The process of Normalization

Normalization is often executed as a series of different forms. Each normal form has its own properties. As normalization proceeds, the relations become progressively more restricted in format, and also less vulnerable to update anomalies. For the relational data model, it is important to bring the relation only in first normal form (1NF) that is critical in creating relations. All the remaining forms are optional.

A relation R is said to be normalized if it does not create any anomaly for three basic operations: insert, delete and update.

Fig 2: Normalization Forms

❖ First Normal Form (1NF):

A relation r is in 1NF if and only if every tuple contains only atomic attributes means exactly one value for each attribute. As per the rule of first normal form, an attribute of a table cannot hold multiple values. It should hold only atomic values.

Example: suppose Company has created a employee table to store name, address and mobile number of employees.

Emp_id | Emp_name | Emp_address | Emp_mobile |

101 | Rachel | Mumbai | 9817312390 |

102 | John | Pune | 7812324252, 9890012244 |

103 | Kim | Chennai | 9878381212 |

104 | Mary | Bangalore | 9895000123, 7723455987 |

In above table, two employees John, Mary has two mobile numbers. So, the attribute,

Emp_mobile is Multivalued attribute.

So, this table is not in 1NF as the rule says, “Each attribute of a table must have atomic

Values”. But in above table the the attribute, emp_mobile, is not atomic attribute as it

Contains multiple values. So, it violates the rule of 1 NF.

Following solution brings employee table in 1NF.

Emp_id | Emp_name | Emp_address | Emp_mobile |

101 | Rachel | Mumbai | 9817312390 |

102 | John | Pune | 7812324252 |

102 | John | Pune | 9890012244 |

103 | Kim | Chennai | 9878381212 |

104 | Mary | Bangalore | 9895000123 |

104 | Mary | Bangalore | 7723455987 |

❖ Second Normal Form (2NF)

A table is said to be in 2NF if both of the following conditions are satisfied:

● Table is in 1 NF.

● No non-prime attribute is dependent on the proper subset of any candidate key of table. It means non-prime attribute should fully functionally dependent on whole candidate key of a table. It should not depend on part of key.

An attribute that is not part of any candidate key is known as non-prime attribute.

Example: Suppose a school wants to store data of teachers and the subjects they teach. Since a teacher can teach more than one subjects, the table can have multiple rows for the same teacher.

Teacher_id | Subject | Teacher_age |

111 | DSF | 28 |

111 | DBMS | 28 |

222 | CNT | 35 |

333 | OOPL | 38 |

333 | FDS | 38 |

For above table:

Candidate Keys: {Teacher_Id, Subject}

Non prime attribute: Teacher_Age

The table is in 1 NF because each attribute has atomic values. However, it is not in 2NF because non-prime attribute Teacher_Age is dependent on Teacher_Id alone which is a proper subset of candidate key. This violates the rule for 2NF as the rule says “no non-prime attribute is dependent on the proper subset of any candidate key of the table”.

To bring above table in 2NF we can break it in two tables (Teacher_Detalis and

Teacher_Subject) like this:

Teacher_Details table:

Teacher_id | Teacher_age |

111 | 28 |

222 | 35 |

333 | 38 |

Teacher_Subject table:

Teacher_id | Subject |

111 | DSF |

111 | DBMS |

222 | CNT |

333 | OOPL |

333 | FDS |

Now these two tables are in 2NF.

❖ Third Normal Form (3NF)

A table design is said to be in 3NF if both the following conditions hold:

● Table must be in 2NF.

● Transitive functional dependency from the relation must be removed.

So it can be stated that, a table is in 3NF if it is in 2NF and for each functional dependency

P->Q at least one of the following conditions hold:

● P is a super key of table

● Q is a prime attribute of table

An attribute that is a part of one of the candidate keys is known as prime attribute.

Transitive functional dependency:

A functional dependency is said to be transitive if it is indirectly formed by two functional dependencies.

For example:

P->R is a transitive dependency if the following three functional dependencies hold true:

1) P->Q and

2) Q->R

Example: suppose a company wants to store information about employees. Then the table Employee_Details looks like this:

Emp_id | Emp_name | Manager_id | Mgr_Dept | Mgr_Name |

E1 | Hary | M1 | IT | William |

E2 | John | M1 | IT | William |

E3 | Nil | M2 | SALES | Stephen |

E4 | Mery | M3 | HR | Johnson |

E5 | Steve | M2 | SALSE | Stephen |

Super keys: {emp_id}, {emp_id, emp_name}, {emp_id, emp_name, Manager_id}

Candidate Key: {emp_id}

Non-prime attributes: All attributes except emp_id are non-prime as they are not subpart part of any candidate keys.

Here, Mgr_Dept, Mgr_Name dependent on Manager_id. And, Manager_id is dependent on emp_id that makes non-prime attributes (Mgr_Dept, Mgr_Name) transitively dependent on super key (emp_id). This violates the rule of 3NF.

To bring this table in 3NF we have to break into two tables to remove transitive dependency.

Employee_Details table:

Emp_id | Emp_name | Manager_id |

E1 | Hary | M1 |

E2 | John | M1 |

E3 | Nil | M2 |

E4 | Mery | M3 |

E5 | Steve | M2 |

Manager_Details table:

Manager_id | Mgr_Dept | Mgr_Name |

M1 | IT | William |

M2 | SALSE | Stephen |

M3 | HR | Johnson |

❖ Boyce Codd normal form (BCNF)

It is an advance version of 3NF. BCNF is stricter than 3NF. A table complies with BCNF if it is in 3NF and for every functional dependency X->Y, X should be the super key of the table.

Example: Suppose there is a company wherein employees work in more than one

Department. They store the data like this:

Emp_id | Emp_nationality | Emp_dept | Dept_type | Dept_no_of_emp |

101 | Indian | Planning | D01 | 100 |

101 | Indian | Accounting | D01 | 50 |

102 | Japanese | Technical support | D14 | 300 |

102 | Japanese | Sales | D14 | 100 |

Functional dependencies in the table above:

Emp_id ->emp_nationality

Emp_dept -> {dept_type, dept_no_of_emp}

Candidate key: {emp_id, emp_dept}

The table is not in BCNF as neither emp_id nor emp_dept alone are keys. To bring this table in BCNF we can break this table in three tables like:

Emp_nationality table:

Emp_id | Emp_nationality |

101 | Indian |

102 | Japanese |

Emp_dept table:

Emp_dept | Dept_type | Dept_no_of_emp |

Planning | D01 | 100 |

Accounting | D01 | 50 |

Technical support | D14 | 300 |

Sales | D14 | 100 |

Emp_dept_mapping table:

Emp_id | Emp_dept |

101 | Planning |

101 | Accounting |

102 | Technical support |

102 | Sales |

Functional dependencies:

Emp_id ->; emp_nationality

Emp_dept -> {dept_type, dept_no_of_emp}

Candidate keys:

For first table: emp_id

For second table: emp_dept

For third table: {emp_id, emp_dept}

This is now in BCNF as in both functional dependencies left side is a key.

❖ Fourth Normal Form (4NF)

A relation is in the 4NF if it is in BCNF and has no multi valued dependencies.

It means relation R is in 4NF if and only if whenever there exist subsets A and B of the

Attributes of R such that the Multi valued dependency AààB is satisfied then all attributes of R are also functionally dependent on A.

Multi-Valued Dependency

● The multi-valued dependency X -> Y holds in a relation R if whenever we have two tuples of R that agree (same) in all the attributes of X, then we can swap their Y components and get two new tuples that are also in R.

● Suppose a student can have more than one subject and more than one activity.

❖ Fifth Normal Form (5NF)

A table is in the 5NF if it is in 4NF and if for all Join dependency (JD) of (R1, R2, R3, ..., Rm) in R, every Ri is a super key for R. It means:

● A table is in the 5NF if it is in 4NF and if it cannot have a lossless decomposition in to any number of smaller tables (relations).

● It is also known as Project-join normal form (PJ/NF)

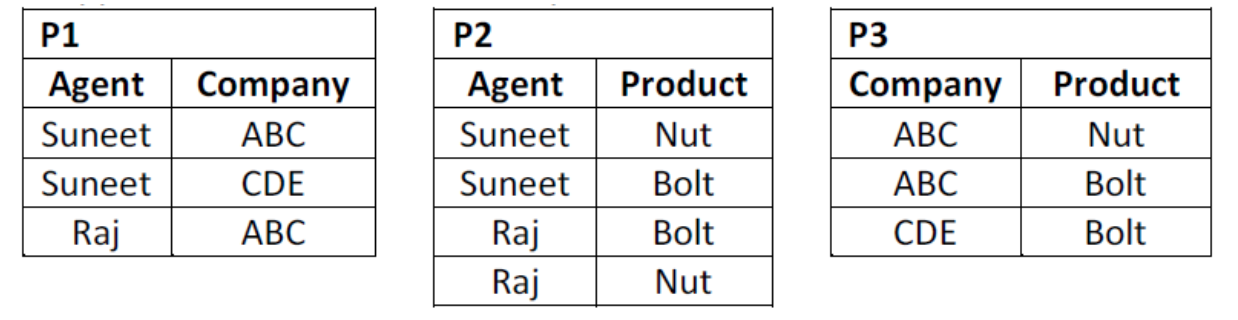

Example

i. AGENT_COMPANY_PRODUCT (Agent, Company, Product)

Ii. This table lists agents, the companies they work for and the products they sell for those companies. ‘The agents do not necessarily sell all the products supplied by the companies they do business with.

An example of this table might be:

AGENT_COMPANY_PRODUCT | ||

Agent | Company | Product |

Suneet | ABC | Nut |

Raj | ABC | Bolt |

Raj | ABC | Nut |

Suneet | CDE | Bolt |

Suneet | ABC | Bolt |

1. The table is in 4NF because it contains no multi-valued dependency.

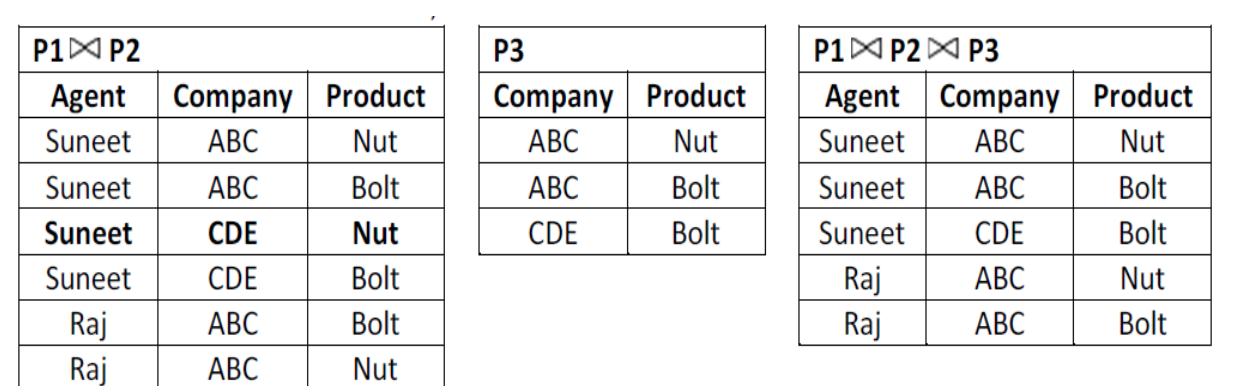

2. Suppose that the table is decomposed into its three relations, P1, P2 and P3.

i. But if we perform natural join between the above three relations then no spurious (extra) rows are added so this decomposition is called lossless decomposition.

Ii. So above three tables P1, P2 and P3 are in 5 NF

Key takeaway:

- A relation r is in 1NF if and only if every tuple contains only atomic attributes means exactly one value for each attribute.

- A relation is in the 4NF if it is in BCNF and has no multi valued dependencies.

- A table is in the 5NF if it is in 4NF and if it cannot have a lossless decomposition in to any number of smaller tables (relations).

- BCNF is stricter than 3NF.

- a table is in 3NF if it is in 2NF and for each functional dependency

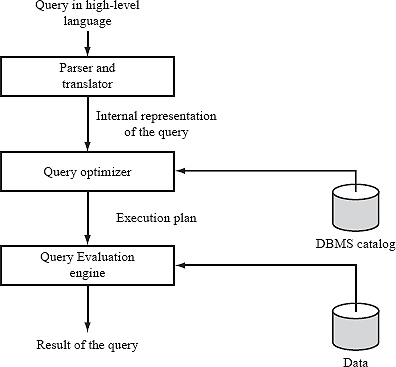

4.2.1 Overview

Query processing is nothing but steps involved in retrieving data from database. These activities include translation of queries written high level database language into expressions that can be understood at the physical level of the file system. It also includes a variety of query optimizing transformations and actual evaluations of queries.

Query processing procedure

Fig 3: Query processing steps

Basic steps in query processing are:

1. Parsing and translation

2. Optimization

3. Evaluation

At the beginning of query processing, the queries must be translated into a form which could be understood at the physical level of the system. A language like SQL is not suitable for the internal representation of a query. Relational algebra is the good option for internal representation of query.

So, the first step in query processing is to translate a given query into its internal

Representation. In generating internal form of a query, parser checks the syntax of the query written by user, verifies that the relation names appearing in the query really exists in the database and so on.

The system constructs a parse-tree of the query. Then this tree is translated into relational- algebra expressions. If the query was expressed in terms of a view, the translation phase replaces all uses of the view by the relational-algebra expression that defines the view.

One query can be answered in different ways. Relational-algebra representation of a query specifies only partially how to evaluate a query. There are different ways to evaluate relational-algebra expressions. Consider the query:

Select acc_balance from account where acc_balance<5000

This query can be translated into one of the following relational-algebra expressions:

1. σ acc_balance<5000 (Π acc_balance (account))

2. Πacc_balance (σ acc_balance <5000 (account))

Different algorithms are available to execute relational-algebra operation. To solve the above query, we can search every tuple in account to find the tuples having balance less than 5000. If a B + tree index is available on the balance attribute, we can use this index instead to search for the required tuples.

To specify how to evaluate a query, we need not only to provide the relational-algebra expression, but also to explain it with instructions specifying the procedure for evaluation of each operation.

Account_id | Cust_id | Acc_Balance |

Account Relation

Cust_id | Cust_Name |

Customer Relation

Πacc_balance

↓

σ acc_balance <5000; use index 1

↓

Account

Fig 4: procedure for evaluation

A relational-algebra operation along with instructions on how to evaluate it is called an evaluation primitive. A sequence of primitive operations that can be used in the evaluation of a query is called a query execution plan or query evaluation plan. Above figure illustrates an evaluation plan for our example query, in which a particular index is specified for the selection operation from database. The query execution engine takes a query evaluation plan, executes that plan, and returns the answer to the query.

Different evaluation plans have different costs. It is the responsibility of the system to

Construct a query evaluation plan that minimizes the cost of query evaluation; this task is called Query Optimization. Once the query plan with minimum cost is chosen, the query is evaluated with the plan, and the result of the query is the output. In order to optimize a query, a query optimizer must know the cost of each operation.

Although the exact cost is hard to compute, it is possible to get a rough estimate of

Execution cost for each operation. Exact cost is hard for computation because it depends on many parameters such as actual memory available to execute the operation.

Key takeaway:

- Query processing is nothing but steps involved in retrieving data from database.

- It also includes a variety of query optimizing transformations and actual evaluations of queries.

- The first step in query processing is to translate a given query into its internal

Representation.

4.2.2 Measures of Query cost

The cost of query evaluation can be measured in terms of number of different resources used, including disk accesses, CPU time to execute given query, and, in a distributed database or parallel database system. In large database systems, however, the cost to access data from disk is usually the most important cost, since disk accesses are slow compared to in-memory operations.

Moreover, CPU speeds have been improving much faster than have disk speeds. The CPU time taken for a task is harder to estimate since it depends on low-level details of the execution.

The time taken by CPU is negligible in most systems when compared with the number of disk accesses.

If we consider the number of block transfers as the main component in calculating the cost of a query, it would include more sub-components. Those are as below:

Rotational latency – time taken to bring and turn the required data under the read-write head of the disk.

Seek time – It is the time taken to position the read-write head over the required track or cylinder.

Sequential I/O – reading data that are stored in contiguous blocks of the disk

Random I/O – reading data that are stored in different blocks of the disk that are not

Contiguous.

From these sub-components, we would list the components of a more accurate measure as follows;

● The number of seek operations performed

● The number of block read operations performed

● The number of blocks written operations performed

Hence, the total query cost can be written as follows;

Query cost = (Number of seek operations X average seek time) +

(Number of blocks read X average transfer time for reading a block) +

(Number of blocks written X average transfer time for writing a block).

Key takeaway:

- Cost is normally calculated as the total time spent responding to a question.

- We only use the number of block transfers from the disc as the cost measure for simplicity.

4.2.3 Selection and Join operations

Selection Operation includes following steps:

File scan – search algorithms that locate and retrieve records that fulfill a selection

Condition.

Selection Operation:

Algorithm A1 (linear search):

It Scans each file block and test all records to see whether they satisfy the selection

Condition. Following factors are considered in this algorithm:

● Cost estimate (number of disk blocks scanned) = br. Br denotes number of blocks containing records from relation r.

● If selection is on a key attribute, cost = (br /2) “stop on finding record.

● Linear search can be applied regardless of "selection condition” or “ordering of records in the file”, or “availability of indices”

Algorithm A2 (binary search):

It is applicable if selection is an equality comparison on the attribute on which file is ordered.

Assume that the blocks of a relation are stored contiguously. Following factors are

Considered in this algorithm:

Cost estimate (number of disk blocks to be scanned): log2(br ) — cost of locating the first tuple by a binary search on the blocks plus number of blocks containing records that satisfy selection condition.

Join Operations:

There are several different algorithms that can be used to implement joins (natural-, equi-, condition-join) like:

● Nested-Loop Join

● Block Nested-Loop Join

● Index Nested-Loop Join

● Sort-Merge Join

● Hash-Join

Choice of a particular algorithm is based on cost estimate. For this, join size estimates are required and in particular cost estimates for outer-level operations in a relational algebra expression.

Example:

Assume the query CUSTOMERS ✶ ORDERS (with join attribute only being CName)

NCUSTOMERS = 5,000 tuples

FCUSTOMERS = 20

BCUSTOMERS = 5,000/20 = 250 blocks

NORDERS = 10,000 tuples

FORDERS = 25, i.e., BORDERS = 400 blocks

V(CName, ORDERS) = 2,500, meaning that in this relation, on average, each customer has four orders – Also assume that CName in ORDERS is a foreign key on CUSTOMERS

1 Estimating the Size of Joins:

● The Cartesian product R × S results in NR ∗ NS tuples; each tuple requires SR + SS Bytes.

● If schema(R) ∩ schema(S) = primary key for R, then a tuple of S will match with at most one tuple from R. Therefore, the number of tuples in R✶S is not greater than NS If schema(R) ∩ schema(S) = foreign key in S referencing R, then the number of tuples in R✶S is exactly NS. Other cases are symmetric.

● In the example query CUSTOMERS ✶ ORDERS, CName in ORDERS is a foreign key of CUSTOMERS; the result thus has exactly NORDERS = 10,000 tuples

● If schema(R) ∩ schema(S) = {A} is not a key for R or S; assume that every tuple in R produces tuples in R ✶ S. Then the number of tuples in R ✶ S is estimated to be: NR ∗ NS V(A, S) If the reverse is true, the estimate is NR ∗ NS V(A, R) and the lower of the two estimates is probably the more accurate one.

Size estimates for CUSTOMERS ✶ ORDERS without using information about foreign keys:

– V(CName, CUSTOMERS) = 5,000, and V(CName, ORDERS) = 2,500 – The two

Estimates are 5,000*10,000/2,500=20,000 and 5,000*10,000/5,000=10,000.

Key takeaway:

- It Scans each file block and test all records to see whether they satisfy the selection condition.

- It is applicable if selection is an equality comparison on the attribute on which file is ordered.

- Join the necessary size estimates, particularly for cost estimates in a relational-algebra expression for outer-level operations.

4.2.4 Evaluation of Expressions

For evaluation of an expression two ways can be used. One way is used is materialization and other is pipelining.

In materialization, in evaluation of an expression, one operation is evaluated at a time, in an appropriate order. The result of each evaluation is materialized in a temporary relation for further use. An alternative approach is to evaluate several operations simultaneously in a pipeline, with the result of one operation passed on to the next operation, without the need to store intermediate values in a temporary relation.

- Materialization:

This is one method of query evaluation. In this method queries are divided into sub queries and then the results of sub-queries are combined to get the final result.

Suppose, consider the example, we want to find the names of customers who have balance less than 5000 in the bank account. The relational algebra for this query can be written as:

Πcust_name ( σ acc_balance<5000 (account) ∞ customer)

Here we can observe that the query has two sub-queries: one is to select the number of accounts having balance less than 5000. Another is to select the customer details of the account whose id is retrieved in the first query. The database system also performs the same task. It breaks the query into two sub-queries as mentioned above. Once it is divided into two subparts, it evaluates the first sub-query and stores result in the temporary table in the memory. This temporary table data will be then used to evaluate the second sub-part of query.

Π cust_name

↓

∞

↙ ↘

σ acc_balance<5000 Customer

↓

Account

Fig 5 : Representation of query

This is the example of query which can be subdivided into two levels in materialization method. In this method, we start from lowest level operations in the expression. In our example, there is only one selection operation on account relation.

The result of this operation is stored in a temporary table. We can use this temporary relation to execute the operations at the next level up in the database. Inputs for the join operation are the customer relation and the temporary relation created by the selection on account.

The join can be evaluated, creating another temporary relation.

Although this method looks simply, the cost of this type of evaluation is always more.

Because it involves write operation in temporary table. It takes the time to evaluate and write into temporary table, then retrieve from this temporary table and query to get the next level of result and so on. Hence cost of evaluation in this method is:

Cost = cost of individual SELECT operation + cost of write operation into temporary

Table

2. Pipelining algorithm

As seen in the example of materialization, the query evaluation cost is increased due to temporary relations. This increased cost can be minimized by reducing the number of temporary files that are produced. This reduction can be achieved by combining several relational operations into a pipeline of operations, in which the results of one operation are passed along to next operation in the pipeline. This kind of evaluation is called as a pipelined evaluation.

For example, in the previous expression tree, no need to store the result of intermediate select operation in temporary relation; instead, pass tuples directly to the join operation.

Similarly, do not store the result of join, but pass tuples directly to project operation.

So the benefits of pipelining are:

● Pipelining is less costly than materialization.

● No need to store a temporary relation to disk.

For pipelining to be effective, use evaluation algorithms that generate output tuples even as tuples are received for inputs to the operation Pipelines can be executed in two ways:

● demand driven

● producer driven

Demand Driven:

In a demand driven pipeline, the system makes repeated requests for the tuples from the operation at the top of the pipeline. Each time that an operation receives a request for tuples, it computes the next tuple to be returned, and then returns that tuple. If the inputs of the operations are not pipelined, the next tuple to be returned can be computed from the input relations. If it has some pipelined inputs, the operation also makes requests for tuples from its pipelined inputs. Using the tuples received from its pipelined inputs, the operation computes tuples for its output, and passes them up to parent.

Produced Driven:

In a produced driven pipeline method, operations do not wait for requests to produce tuples, but instead generate the tuples eagerly. Each operation at the bottom of a pipeline continually generates output tuples, and puts them in its output buffer, until buffer is full. An operation at any other level of a pipeline generates output tuples when it gets input tuples from lower down in the pipeline, until its output buffer is full. Once the operation uses a tuple from a pipelined input, it removes the tuples from its input buffer. In either case, once the output buffer is full, the operation waits until its parent operation removes tuples from the buffer, so that the buffer has space for more tuples. At this point, the operation generates more tuples, until the buffer is full again. The operation repeats this process until all the

Output tuples have been generated.

Key takeaway:

- For evaluation of an expression two ways can be used.

- In materialization, in evaluation of an expression, one operation is evaluated at a time, in an appropriate order.

- In a pipeline, with the result of one operation passed on to the next operation, without the need to store intermediate values in a temporary relation.

4.3.1 Estimation

A single query can be executed using different algorithms or re-written in different forms and structures. Hence, the question of query optimization comes into the picture. The query optimizer helps to determine the most efficient way to execute a given query by considering the possible query plans.

The optimizer tries to generate the most optimal execution plan for a SQL statement. It selects the plan with lowest execution cost among all other available plans. The optimizer uses available statistics to calculate cost. For a specific query in a given environment, the most computation accounts for factors of query execution such as I/O, CPU, and communication.

For example, consider table storing information about employees who are managers. If the optimizer statistics indicate that 80% of employees are managers, then the optimizer may decide that a full table scan is most efficient. However, if statistics indicate that very few employees are managers, then reading an index followed by a table access by row id may be more efficient than full table scan.

Because the database has many internal statistics and tools at its disposal, the optimizer is usually in a better position than the user to determine the optimal method of statement execution. For this reason, all SQL statements use the optimizer.

There are broadly two ways a query can be optimized:

1. Analyze and transform equivalent relational expressions: Try to minimize the tuple and column counts of the intermediate and final query processes.

2. Using different algorithms for each operation: These underlying algorithms determine how tuples are accessed from the data structures they are stored in, indexing, hashing, data retrieval and hence influence the number of disk and block accesses.

Advantages of Query Optimization as:

➔ First, it provides the user with faster results, which makes the application seem faster to the user.

➔ Secondly, it allows the system to service more queries in the same amount of time, because each request takes less time than un-optimized queries.

➔ Thirdly, query optimization ultimately reduces the amount of wear on the hardware (e.g., disk drives), and allows the server to run more efficiently (e.g. Lower power consumption, less memory usage).

Cost estimation:

❏ The cost must be calculated for every strategy considered:

➢ Costs of each operation must be calculated in the plan tree.

● It depends on the cardinal inputs.

● Operations expense (sequential scan, index scan, joins, etc.)

➢ The result size must also be calculated for each operation in the tree!

● Using data about input relationships.

● Assume freedom of predicates for choices and entries.

Estimating result size:

❏ Reduction factor

➢ The ratio of the size of the (expected) result to the size of the input, considering Just the selection that the word reflects.

❏ How to estimate factors for reduction:

➢ Column = value

1/Nkeys(I) – I:

- index on column

1/10: random

➢ Column1 = column2

1/Max (Nkeys(I1), Nkeys(I2)): both columns have indexes

1/Nkeys(I): either column1 or column 2 has index I

1/10: no index

Size Estimation:

❏ How to estimate factors for reduction:

➢ Column>value

● (High(I)-value)/(High(I)-Low(I)): with index

● Less than half: no index

➢ Column IN (list of values)

● RF (column=value) * number of items

● At most half

❏ Reduction factor for projection

Key takeaway:

- A single query can be executed using different algorithms or re-written in different forms and structures.

- The optimizer tries to generate the most optimal execution plan for a SQL statement.

- The query optimizer helps to determine the most efficient way to execute a given query by considering the possible query plans.

4.3.2 Transformation of Relational Expression

Generating a query-evaluation plan for an expression of the relational algebra involves two steps:

1. Generate logically equivalent expressions

2. Annotate these evaluation plans by specific algorithms and access structures to get

Alternative query plans Use equivalence rules to transform a relational algebra expression into an equivalent one. Based on estimated cost, the most cost-effective annotated plan is selected for evaluation.

The process is called cost-based query optimization. Equivalence of Expressions Result relations generated by two equivalent relational algebra expressions have the same set of attributes and contain the same set of tuples, although their attributes may be ordered differently.

➢ Equivalence Rules (for expressions E, E1, E2, conditions Fi)

Applying distribution and commutativity of relational algebra operations

1. σF1 (σF2 (E)) ≡ σF1∧F2 (E)

2. σF(E1 [∪, ∩, −] E2) ≡ σF(E1) [∪, ∩, −] σF(E2)

3. σF (E1 × E2) ≡ σF0(σF1(E1) × σF2(E2)); F ≡ F0 ∧ F1 ∧ F2,

Fi contains only attributes of Ei, i = 1, 2.

4. σA=B (E1 × E2) ≡ E1 ✶ A=B E2

5. πA (E1 [∪, ∩, −] E2) 6≡ πA(E1) [∪, ∩, −] πA(E2)

6. πA (E1 × E2) ≡ πA1(E1) × πA2(E2), with Ai = A ∩ {attributes in Ei}, i = 1, 2.

7. E1 [∪, ∩] E2 ≡ E2 [∪, ∩] E1 (E1 ∪ E2) ∪ E3 ≡ E1 ∪ (E2 ∪ E3) (the analogous holds for ∩)

8. E1 × E2 ≡ πA1, A2(E2 × E1) (E1 × E2) × E3 ≡ E1 × (E2 × E3) (E1 × E2) × E3 ≡ π ((E1 ×E3) × E2) 9. E1 ✶ E2 ≡ E2 ✶ E1 (E1 ✶ E2) ✶ E3 ≡ E1 ✶ (E2 ✶ E3)

The application of equivalence rules to a relational algebra expression is also sometimes called algebraic optimization.

Examples:

Selection: –

Find the name of all customers who have ordered a product for more than $5,000 from a supplier located in Davis.

πCName (σSAddress like 0%Davis%0 ∧ Price>;5000 (CUSTOMERS ✶ (ORDERS ✶

(OFFERS ✶ SUPPLIERS))))

Perform selection as early as possible (but take existing indexes on relations into account)

πCName (CUSTOMERS ✶ (ORDERS ✶ (σPrice>5000(OFFERS) ✶ (σSAddress like

0%Davis%0(SUPPLIERS))))) •

Projection: –

πCName, account (CUSTOMERS ✶ σProdname=0CD−ROM0(ORDERS))

Reduce the size of argument relation in join πCName, account (CUSTOMERS ✶

πCName(σProdname=0CD−ROM0(ORDERS)))

Projection should not be shifted before selections, because minimizing the number of tuples in general leads to more efficient plans than reducing the size of tuples.

Join Ordering

• For relations R1, R2, R3, (R1 ✶ R2) ✶ R3 ≡ R1 ✶ (R2 ✶ R3)

• If (R2 ✶ R3) is quite large and (R1 ✶ R2) is small, we choose (R1 ✶ R2) ✶ R3 so that a smaller temporary relation is computed and materialized.

Example:

List the name of all customers who have ordered a product from a supplier located in Davis.

πCName (σSAddress like 0%Davis%0 (SUPPLIERS ✶ ORDERS ✶ CUSTOMERS))

ORDERS ✶ CUSTOMERS is likely to be a large relation. Because it is likely that only a small fraction of suppliers is from Davis, we compute the join σSAddress like

0%Davis%0(SUPPLIERS ✶ ORDERS) first.

Key takeaway:

- The optimizer's first step is to enforce certain expressions that are logically equivalent to the phrase given.

- An example of a legal database refers to a database system that meets all the integrity limitations stated in the database schema.

References:

- G. K. Gupta “Database Management Systems”, Tata McGraw Hill

- Rab P., Coronel C. “Database Systems Design, Implementation and Management”, 5th edition, Thomson Course Technology, 2002

- Elmasri R., Navathe S. “Fundamentals of Database Systems”, 4th edition, Pearson Education, 2003