Unit 1

Introduction to Software Engineering

The nature of the software medium has many implications for the systems engineering (SE) of software-intensive systems. Taken together, four properties distinguish software from other kinds of engineered objects.

These four properties are:

➢ Complexity

➢ Changeability

➢ Conformity

➢ Invisibility

Brooks states:

“Software entities are more complex for their size than perhaps any other human construct because no two parts are alike (at least above the statement level). If they are, we make the two similar parts into a subroutine — open or closed. In this respect, software systems differ profoundly from computers, buildings, or automobiles, where repeated elements abound.”
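Brooks's remark that two similar parts are folded into a subroutine can be illustrated with a minimal Python sketch. Everything in it is hypothetical (the function names, the 20% tax rate and the flat shipping fee are invented for illustration). The repeated tax calculation is written once as a closed subroutine, and what remains in each caller is only the part that genuinely differs.

```python
from typing import Iterable

TAX_RATE = 0.2      # hypothetical rate, for illustration only
SHIPPING_FEE = 5.0  # hypothetical flat fee, for illustration only


# Before: two "similar parts", each carrying its own copy of the tax logic.
def invoice_total_duplicated(prices: Iterable[float]) -> float:
    total = 0.0
    for p in prices:
        total += p * (1 + TAX_RATE)
    return round(total + SHIPPING_FEE, 2)


def quote_total_duplicated(prices: Iterable[float]) -> float:
    total = 0.0
    for p in prices:
        total += p * (1 + TAX_RATE)
    return round(total, 2)


# After: the shared logic is written once as a closed subroutine...
def total_with_tax(prices: Iterable[float], rate: float = TAX_RATE) -> float:
    return sum(p * (1 + rate) for p in prices)


# ...and each caller keeps only what makes it different.
def invoice_total(prices: Iterable[float]) -> float:
    return round(total_with_tax(prices) + SHIPPING_FEE, 2)


def quote_total(prices: Iterable[float]) -> float:
    return round(total_with_tax(prices), 2)
```

Both versions compute the same results (for example, 41.0 for an invoice and 36.0 for a quote on the prices [10.0, 20.0]), but after the refactoring no two parts of the code are alike.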

Complexity:

The complexity of software arises from the large number of unique components in a software system and the many ways in which they interact with each other.

The parts are unique because repeated elements are encapsulated as functions, subroutines, or objects and invoked as needed, rather than being duplicated. Software modules interact in many different ways, including serial and concurrent invocations, state transformations, data couplings, and interfaces to databases and external devices.

Depicting a software entity therefore requires many different design representations to capture the various static structures, dynamic couplings, and modes of interaction that occur in computer software. Complexity within components and in the relations among them requires significant design rigour, as well as regression testing whenever modifications are made. Software provides functionality for components that are embedded, distributed, and data-centric.

Software can implement anything from simple control loops to complicated algorithms and heuristics.

Complexity can hide defects that are not easily detected, and these defects then require considerable additional, unplanned rework.

Changeability:

Software coordinates the operation of physical components and, in software-intensive systems, provides most of the functionality. Because software is the most malleable (easily changed) element of a software-intensive system, it is also the element that is changed most frequently.

This is particularly true during the late stages of a development project and during system maintenance. This does not mean, however, that software is easy to modify: complexity and the need for conformity can make updating software an extremely difficult task.

Modifying one part of a software system often produces undesired side effects in other parts of the system, requiring further adjustments before the software works as intended again.

Conformity:

Unlike a physical product, software is not governed by fundamental natural laws, such as Newton's laws of motion. However, software must conform to exacting specifications in the representation of its components, in its interfaces to other internal parts, and in its connections to the environment in which it operates.

A compiler can detect a missing semicolon or another syntactic error, but a defect in the program logic or a timing error may be difficult to detect until it is encountered during operation.
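The gap between what a compiler checks and what a program is supposed to do can be seen in a short Python sketch. Python's built-in compile() stands in for the compiler here, and the average function and both source strings are hypothetical examples. The first string contains a syntax error and is rejected before it runs; the second compiles cleanly even though its divisor is wrong, so the defect only shows up when the code is executed.

```python
# A syntax error (missing colon) is rejected before the program ever runs.
BROKEN_SYNTAX = "def average(xs)\n    return sum(xs) / len(xs)\n"

# A logic error: the divisor is wrong, but the code is syntactically valid.
WRONG_LOGIC = "def average(xs):\n    return sum(xs) / (len(xs) + 1)\n"


def compiles(source: str) -> bool:
    """Return True if Python can compile the source, False on a SyntaxError."""
    try:
        compile(source, "<example>", "exec")
        return True
    except SyntaxError:
        return False


def load_average(source: str):
    """Execute the source in a fresh namespace and return its `average` function."""
    namespace: dict = {}
    exec(source, namespace)
    return namespace["average"]


if __name__ == "__main__":
    print(compiles(BROKEN_SYNTAX))  # False: caught at compile time
    print(compiles(WRONG_LOGIC))    # True: the compiler cannot see the defect
    average = load_average(WRONG_LOGIC)
    print(average([2, 4]))          # 2.0, although the correct average is 3.0
```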

Lack of conformity causes problems when an existing software component cannot be reused as designed because it does not meet the needs of the product under development. This usually requires an unplanned allocation of resources, which can delay completion of the project.

Invisibility:

Because software has no physical properties, it is said to be invisible. Although the results of executing software on a digital device are observable, the software itself cannot be seen, tasted, smelled, touched, or heard. Software is intangible because it cannot be sensed directly by the five human senses.

Work products such as requirements specifications, design documents, source code, and object code are representations of software, but they are not the software itself.

At the most elementary level, software exists as magnetization and current flow in an enormous number of electronic elements inside a digital system. Because software has no physical presence, software engineers must use various representations at different levels of abstraction in an effort to visualize this inherently intangible object.

Uniqueness:

Uniqueness is another aspect of the nature of software that Brooks alludes to but does not explicitly call out. Software and software projects are unique for the following reasons:

● Software has no physical properties;

● Software is the product of intellect-intensive teamwork;

● The productivity of software developers varies more widely than productivity in other engineering disciplines;

● Software project risk management is primarily process-oriented;

● Software alone is useless, because it is often one part of a larger system; and

● Software is the most frequently changed element in software-intensive systems.

Key takeaways

- Software systems differ profoundly from computers, buildings, or automobiles, where repeated elements abound.

- The complexity of software emerges from the large number of specific components in a software system that communicate with each other.

- Because software is the most easily changed element of a software-intensive system, it is also the most frequently changed.

Software engineering practice consists of a set of concepts, principles, methods, and tools that a software engineer calls upon on a daily basis.

Practice equips managers to manage software projects and software engineers to build computer programs, providing the strategies and management know-how needed to get the job done.

A. Understand the problem (communication and analysis)

● Who has a stake in the problem's solution?

● What are the unknowns? What data, functions, and behaviour are required to solve the problem properly?

● Can the problem be graphically represented? Can a model be developed for analysis?

● Is it possible to partition the problem? Can it be represented as smaller problems that may be easier to understand?

B. Plan a solution (planning, modeling and software design)

● Have you seen similar problems before?

● Has a similar problem been solved? If so, are elements of the solution reusable?

● Is it possible to identify subproblems, and are solutions available for those subproblems?

C. Carry out the plan (construction, code generation)

● Does the solution comply with the plan? Can the source code be traced back to the design?

● Is each part of the solution correct? Have the design and code been reviewed and tested?

D. Examine the results for accuracy (testing and quality assurance)

● Can each part of the solution be tested? Has a sound plan for testing been implemented?

● Does the solution produce results that conform to the required data, functions, and behaviour?

● Was the program validated against the demands of all stakeholders?

When you work to build a product or system, it is important to go through a series of predictable steps: a road map that helps you create a timely, high-quality result.

The road map that you follow is called a "software process".

Technical definition:

The software process is a framework for the activities, actions, and tasks required to build high-quality software.

The term software refers to the set of computer programs, procedures, and associated documents (flowcharts, manuals, etc.) that describe the programs and how they are to be used.

A software process is the set of activities and associated outcomes that produce a software product. These activities are mostly carried out by software engineers. There are four key process activities, which are common to all software processes:

1. Software specification: The functionality of the software and the constraints on its operation must be defined.

2. Software development: Software that meets the requirements must be produced.

3. Software validation: The software must be validated to ensure that it does what the customer wants.

4. Software evolution: The software must evolve to meet changing customer needs.

Key takeaways

- A software process is the set of activities and associated outcomes that produce a software product.

- The software process is a structure for the operations, activities and tasks needed to create high-quality software.

Software myths are erroneous beliefs that have caused serious problems for managers and technical people alike. Myths about software spread misinformation and confusion. There are three kinds of software myths:

1. Management Myths

Managers with software responsibility are often under pressure to maintain budgets, keep schedules from slipping, and improve efficiency. The management myths are below:

Myth: We already have a book full of standards and procedures for building software. Won't that give my people everything they need to know?

Reality: The book of standards may very well exist, but it is often not used, and most software practitioners are not even aware of its existence. It may also fail to reflect current software engineering practice, and it is rarely complete.

Myth: If we get behind schedule, we can add more programmers and catch up (sometimes called the Mongolian horde concept).

Reality: Software development is not a mechanistic process like manufacturing. When new people are added, those who were already working must spend time educating the newcomers, which reduces the time spent on productive development. People can be added, but only in a planned and well-coordinated manner.
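A common way to quantify this coordination overhead, following Brooks's argument in The Mythical Man-Month, is that n people who must all coordinate have n(n-1)/2 pairwise communication paths, so coordination effort grows much faster than head count. The small function below simply counts those paths; it assumes the worst case, in which every pair of people needs to communicate.

```python
def communication_paths(team_size: int) -> int:
    """Count the pairwise communication paths among team_size people: n(n-1)/2."""
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return team_size * (team_size - 1) // 2


if __name__ == "__main__":
    # Growing a team from 8 to 10 people adds 25% more staff
    # but raises the number of paths from 28 to 45.
    for n in (3, 5, 8, 10):
        print(n, communication_paths(n))
```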

Myth: If I decide to outsource the software project to a third party, I can just relax and let that firm build it.

Reality: If an organization does not understand how to manage and control software projects internally, it will invariably struggle when it outsources software projects.

2. Developer’s Myths

Developers have these myths:

Myth: Until I get the program "running", I have no way of assessing its quality.

Reality: One of the most effective software quality assurance mechanisms, the technical review, can be applied from the very beginning of a project. Software reviews are a "quality filter" that has been found to be more effective than testing for finding certain classes of software defects.

Myth: Software engineering will make us create voluminous and unnecessary documentation and will invariably slow us down.

Reality: Software engineering is not about creating documents. It is about creating quality. Better quality leads to reduced rework, and reduced rework results in faster delivery times.

Myth: Once we write the program and get it to work, our job is done.

Reality: Someone once said that "the sooner you begin writing code, the longer it will take you to get done." Industry data indicate that between 60 and 80 percent of all effort expended on software is expended after it is delivered to the customer for the first time.

3. Customer’s Myths

Customer misconceptions lead to false expectations and, ultimately, to the customer's dissatisfaction with the developer. The customer myths are below:

Myth: Project requirements change continually, but because software is modular, changes can easily be accommodated.

Reality: It is true that software requirements change, but the impact of a change varies with the time at which it is introduced. The cost impact grows rapidly when changes are requested during software design, because resources have already been committed and a design framework has been established, so the change can cause heavy additional costs. Changes requested after the software has been developed may be far more costly than the same change requested earlier.

Myth: A general statement of objectives is sufficient to begin writing programs; we can fill in the details later.

Reality: A poor up-front definition is a major cause of failed software efforts. A formal and detailed description of the functions, behaviour, performance, interfaces, design constraints, and validation criteria is essential.

Key takeaway

Myths about software spread misinformation and confusion.

Process Models

A process is defined as a collection of activities, actions, and tasks that are performed when some work product is to be created. Each of these activities, actions, and tasks resides within a framework or model that defines its relationship with the process and with one another.

A process model (also termed a software life cycle model) is a pictorial and diagrammatic representation of the software life cycle. A life cycle model represents all the activities required to make a software product transit through its life cycle stages. It also captures the order in which these activities are to be undertaken.

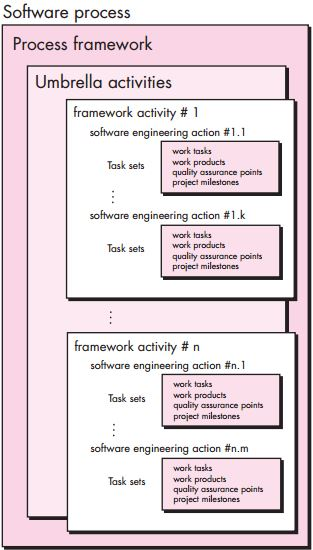

The software process is depicted schematically, with a collection of software engineering activities populating each framework activity.

Each software engineering action is defined by a task set that identifies the work tasks to be completed, the work products to be produced, the quality assurance points that will be required, and the milestones that will be used to indicate progress.

A generic process framework for software engineering consists of the following framework activities:

➢ Communication

➢ Planning

➢ Modeling

➢ Construction

➢ Deployment

Five framework activities (communication, planning, modelling, construction, and deployment) describe a generic process framework for software engineering. In addition, a set of umbrella activities is applied throughout the process: project tracking and control, risk management, quality assurance, configuration management, technical reviews, and others. The way these activities, and the actions and tasks within each, are organized with respect to sequence and time is called the process flow.

Fig 1: Generic process model

Key takeaways

- The actions and tasks of each activity reside within a framework or model that defines their relationship with the process and with one another.

- A process model (also termed as software life cycle model) is a pictorial and diagrammatic representation of the software life cycle.

The linear sequential model is often referred to as the classic life cycle or waterfall model. It implies a sequential approach to software development that begins at the system level and progresses through analysis, design, coding, testing, and support.

In this model, each of the five framework activities is carried out in a linear process flow, through the following phases:

1) Information/System Engineering and Modeling

Because software is part of a larger system or organization, work begins by establishing requirements for all system elements and then allocating some subset of these requirements to software. This system-level modelling is important because software must interact with the other elements of the system.

2) Design

In reality, software design is a multi-step process that focuses on distinct attributes of a program: data structure, software architecture, interface representations, and procedural detail. The design process translates requirements into a representation of the software that can be checked for consistency before coding begins.

3) Code generation

The design must be translated into a machine-readable form, and the code generation step performs this task. If design has been carried out in sufficient detail, code generation can be accomplished almost mechanistically, and the resulting code is expected to implement the design exactly.

4) Testing

Program testing begins once code has been generated. The testing process focuses on the logical internals of the software, ensuring that all statements have been tested, and on the functional externals, that is, conducting tests to uncover errors and to ensure that defined input produces actual results that agree with the required results. Unit testing is the process by which individual units of source code (sets of one or more program modules together with associated control data) are tested to determine whether they are fit for use.
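A unit test in this sense can be sketched with Python's standard unittest module. The average function is a hypothetical unit under test. The test cases check that defined input produces the required result and that the error-handling branch is exercised, so every statement in the unit runs at least once.

```python
import unittest


def average(values):
    """Hypothetical unit under test: the arithmetic mean of a non-empty list."""
    if not values:
        raise ValueError("average() requires at least one value")
    return sum(values) / len(values)


class AverageTest(unittest.TestCase):
    def test_typical_input(self):
        # Defined input must produce the required result.
        self.assertEqual(average([2, 4]), 3.0)

    def test_single_value(self):
        self.assertEqual(average([7]), 7.0)

    def test_empty_input_is_rejected(self):
        # Exercises the error-handling branch as well as the normal path.
        with self.assertRaises(ValueError):
            average([])
```

Saved as a file such as test_average.py (a hypothetical name), the tests can be run with `python -m unittest test_average`.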

5) Support

After delivery to the client, the program will undergo change. Changes occur because errors have been found or because the software must be adapted to changes in its external environment, such as a new operating system or new peripheral devices.

Disadvantages of the model:

This is the oldest software engineering paradigm. However, several problems have been encountered when this model is applied. Some of them are listed below.

● Real projects rarely follow the sequential flow that the model proposes. Although the linear model can accommodate iteration, it does so only indirectly.

● It is often difficult for customers to state all requirements explicitly. The model requires this and has difficulty accommodating the natural uncertainty that exists at the beginning of many projects.

● Clients must be patient. A working version of the program will not be available until late in the project time span. A major error, if it remains undetected until the working program is reviewed, can be disastrous.

Today, software work is fast-paced and subject to a never-ending stream of changes, and the linear sequential model is often inappropriate for such work. It can still be a useful process model, however, when requirements are fixed and work is to proceed to completion in a linear manner.

Key takeaways

- The linear sequential model is often referred to as the classic life cycle or waterfall model.

- This model implies a sequential approach to software development that starts at the system level.

- Each of the above five framework activities is carried out by a linear process flow.

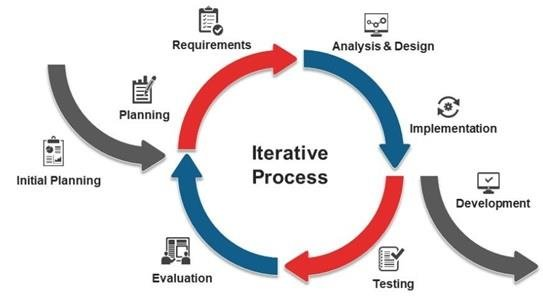

The Software Development Life Cycle (SDLC) is extremely broad and full of diverse practices, methodologies, methods, tools, and more for development and testing. It involves intensive planning and management, estimation, and training. Only after all these efforts of the software engineers are integrated is a program or software product successfully developed. The Iterative Model is also a part of the software development life cycle.

It is a particular implementation of the software development life cycle that starts with an initial, simplified implementation, which then progressively gains more complexity and a broader feature set until the final system is complete. In brief, iterative development is a way of breaking down the software development of a large application into smaller pieces.

An iterative life cycle model does not start with a complete specification of requirements. Instead, development begins by specifying and implementing just part of the software, which is then reviewed to identify further requirements. The iterative process thus starts with a simple implementation of a small set of software requirements and iteratively enhances the evolving versions until the complete system is implemented and ready for deployment. Each release of the Iterative Model is developed within a specific, fixed time period called an iteration.

The iterative model is used in the following scenarios:

● The requirements of the complete system are clearly defined and understood.

● The major requirements are defined, although some features and requested enhancements evolve during the development process.

● The development team is using a new technology and is learning it while working on the project.

● There are some high-risk features and goals that may change in the future.

● Resources with the needed skill sets are not available and are planned to be used on a contract basis for specific iterations.

Process of iterative model:

In contrast to more conventional models that follow a systematic, step-by-step development process, the Iterative Model process is cyclic. Once the initial planning is complete, a handful of steps are repeated again and again, with each cycle incrementally refining and extending the program.

The steps of the iterative model are as follows:

1. Planning Phase

This is the first step of the iterative model, in which the team carries out proper planning that helps them map out the specification documents, establish software or hardware requirements, and generally prepare for the upcoming phases of the cycle.

Fig 2: iterative process

2. Analysis and Design Phase

Once planning for the cycle is complete, an analysis is carried out to determine the required business logic and database models and to understand any other requirements of this particular stage. The design phase also takes place in this step of the iterative model: here the technical requirements that will be used to meet the needs identified in the analysis are established.

3. Implement Phase

This is the third and most critical step of the iterative model. Here, the actual implementation and coding are performed. In the first iteration of the project, everything planned, specified, and designed up to this stage is coded and implemented.

4. Testing Phase

After the current build version is coded and implemented, testing begins in order to detect and locate any potential bugs or issues in the program.

5. Evaluation Phase

The final stage of the iterative life cycle is the evaluation step, in which the entire team, along with the client, reviews the status of the project and validates whether it meets the proposed requirements.
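The cyclic flow of these phases can be sketched in Python as a loop in which each iteration plans, builds, tests, and evaluates one more piece of the system, and the evaluation may uncover requirements that were not known at the start. This is a hypothetical model rather than a tool: the discovered mapping stands in for the feedback a client gives when reviewing a build, and the testing step is only marked by a comment.

```python
from typing import Dict, List


def iterative_development(initial: List[str],
                          discovered: Dict[str, List[str]],
                          max_iterations: int = 10) -> List[List[str]]:
    """Return the feature set of each successive build.

    initial:    the small set of requirements the first iteration starts from.
    discovered: hypothetical stand-in for the evaluation phase; maps a feature
                to the further requirements the client identifies after
                reviewing a build that contains it.
    """
    backlog = list(initial)          # requirements known so far
    build: List[str] = []            # the evolving system
    releases: List[List[str]] = []   # snapshot of the build after each iteration
    while backlog and len(releases) < max_iterations:
        # Planning: choose the next requirement for this iteration.
        feature = backlog.pop(0)
        # Analysis, design and implementation: extend the existing build.
        build.append(feature)
        # Testing would run here against the whole build (omitted in this sketch).
        releases.append(list(build))
        # Evaluation: reviewing the build may reveal new requirements.
        for req in discovered.get(feature, []):
            if req not in build and req not in backlog:
                backlog.append(req)
    return releases
```

For example, starting from ["login"] with discovered = {"login": ["password reset", "profile page"], "profile page": ["avatar upload"]} yields four releases, the last containing all four features, even though "avatar upload" was not known when development began.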

Advantages:

Before incorporating the Iterative Model into the Software Development Life Cycle (SDLC), it is important to consider its benefits. The main advantage of this model is that it is applied from the early stages of the software development process, enabling developers and testers to find functional or design-related defects as early as possible, so that corrective action can be taken on a small budget.

Other benefits of this model are:

➢ It can easily be tailored to the ever-changing needs of both the project and the customer.

➢ It is ideally suited to agile organizations.

➢ In the iterative model, changing the scope or requirements is less costly.

➢ Parallel development can be planned.

➢ Testing and debugging are easy during smaller iterations.

➢ Risks are identified and resolved during the iterations, and each iteration is easily managed.

➢ In the iterative model, less time is spent on documentation and more time is given to design.

Disadvantages:

Although the iterative model is extremely beneficial, it has a few drawbacks, such as the rigidity of each phase of an iteration, with no overlap between phases. System architecture or design problems may also arise because not all requirements are gathered at the start of the entire life cycle. Additional drawbacks of the iterative model include:

➢ More attention from management is needed.

➢ It is not suitable for smaller projects.

➢ Risk analysis requires highly qualified personnel.

➢ Project success depends heavily on the risk analysis process.

➢ Defining increments may require the whole system to be defined.

➢ More resources may be required.

Key takeaways

- The Iterative Model allows earlier stages, in which changes were made, to be revisited.

- At the end of each iteration of the Software Development Life Cycle (SDLC) process, the output of the project is renewed.

- In this model, you can begin with some of the software's requirements and build the first version of the software.

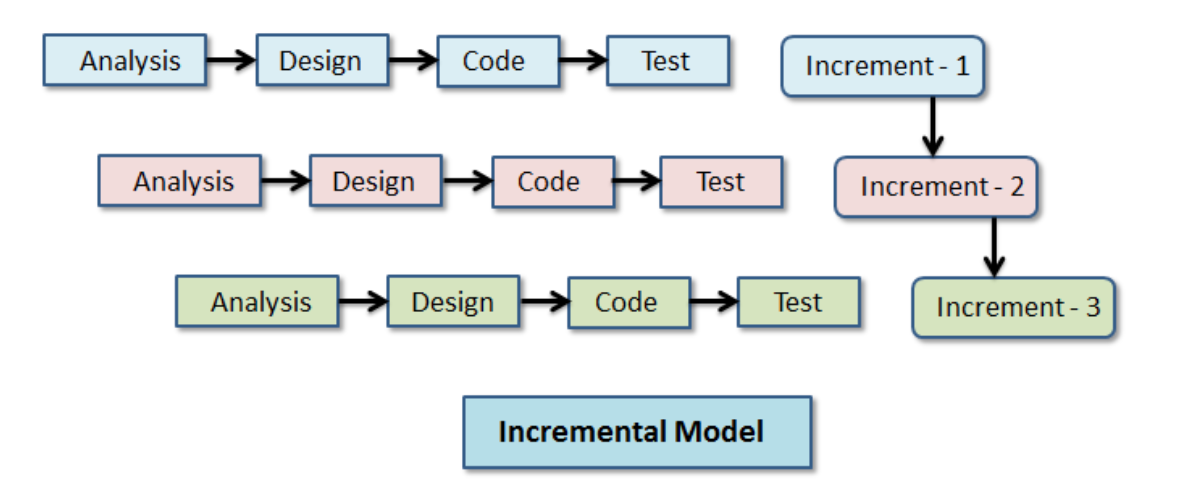

The incremental model is a commonly implemented software development process. Several models have been developed in software development to achieve different goals. These models define how the software is developed through each phase of iteration and process.

The choice of model depends entirely on the organization and the purpose of its software, and this choice also strongly influences the methodologies used. Popular models include Agile, Waterfall, Incremental, RAD, Iterative, and Spiral. In this section, we will look at one such model, known as the incremental model.

The Incremental Model is one of the most common software development models, in which the software requirements are broken down into several standalone modules during the software development life cycle. Once the modules are divided, incremental development is carried out in steps covering analysis, design, implementation, all required testing or verification, and maintenance.

At each increment there is a review, on the basis of which the decision about the next step is made.

The phases and progress of each increment are outlined in the diagram below:

Fig 3: Incremental model

As the diagram shows, each increment goes through requirement analysis, design, code, and test, which ensures that the successive increments are consistent and cumulative in helping to achieve the software's required goals.

Phases of the incremental model:

Let us look at each phase within an increment.

1. Requirement analysis

The requirement is reviewed, along with how to ensure that it is consistent with previously defined requirements.

2. Design

Once the requirements for this particular increment are clearly understood, a design is drawn up showing how the requirement can be implemented and achieved.

3. Code

Coding is now carried out in accordance with the requirements to achieve the objective. All coding standards are followed, without defaults or unnecessary hard-coded values.

4. Test

This is the last step of the increment, in which rigorous testing of the developed code is carried out and defects are identified and fixed.
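In the incremental model the requirements are split into standalone modules up front, and each increment carries one module through analysis, design, code, and test. The Python sketch below is hypothetical: each module is a name paired with a check that stands in for its test suite, and the test step re-runs the checks of every module delivered so far, which is one way to keep the increments consistent and cumulative.

```python
from typing import Callable, List, Tuple

# A module is (name, test); the test is a stand-in for that module's test suite.
Module = Tuple[str, Callable[[], bool]]


def deliver_increments(modules: List[Module]) -> List[List[str]]:
    """Deliver modules one increment at a time and return each delivered system."""
    delivered: List[Module] = []
    releases: List[List[str]] = []
    for name, test in modules:
        # Requirement analysis: the new module must not clash with earlier ones.
        if any(name == existing for existing, _ in delivered):
            raise ValueError(f"duplicate requirement: {name}")
        # Design and code: add the module to the system.
        delivered.append((name, test))
        # Test: regression-test the whole system, not just the new module.
        failures = [n for n, t in delivered if not t()]
        if failures:
            raise RuntimeError(f"increment '{name}' failed tests: {failures}")
        # Review: the increment is delivered and the next one can be planned.
        releases.append([n for n, _ in delivered])
    return releases
```

With three passing modules, such as ("orders", lambda: True), ("invoices", lambda: True) and ("reports", lambda: True), the function returns three releases that grow by one module each; a failing check stops delivery at the increment that broke it.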

Advantages of incremental model:

Let's look at a few of the benefits of this model:

➢ Because testing takes place at every increment, the program is tested many times, which leads to better quality and fewer bugs.

➢ Following this strategy lowers the initial delivery cost.

➢ The model is flexible, and changing the requirements or scope is less costly.

➢ The customer can give feedback on each increment, which helps avoid wasted effort and sudden changes in requirements.

Key takeaways

- This model tends to be easier on the user's pocket than other models.

- Errors can be detected quickly by following this model.

- This model is also preferred when the project has a long development timeline.

- This approach is often preferred when a new technology is being introduced, because the developers are still learning it.

Agile Software Development

The Agile Manifesto is a document that sets out the core values and principles behind the Agile methodology, with the aim of helping development teams work more effectively and sustainably.

The Agile Manifesto was written in 2001 by 17 software developers.

The Agile Manifesto consists of four core values and 12 supporting principles that guide the Agile approach to software development. Each Agile methodology applies the four values in different ways, but all of them rely on these values to guide the development and delivery of high-quality, working software.

The manifesto is summarized by four core values:

1. Individuals and interactions over processes and tools

The first value in the Agile Manifesto is "Individuals and interactions over processes and tools." Valuing people more highly than processes or tools is easy to understand, because it is people who respond to business needs and drive the development process. If the process or the tools drive development, the team is less responsive to change and less able to meet customer needs. Communication illustrates the difference between valuing individuals and valuing process: between individuals, communication is fluid and happens whenever a need arises, whereas under a process, communication is scheduled and requires specific content.

2. Working software over comprehensive documentation

Historically, enormous amounts of time were spent documenting the product for development and eventual delivery: technical specifications, technical requirements, technical prospectus, interface design documents, test plans, documentation plans, and approvals for each.

The list was extensive and was a cause of long development delays. Agile does not eliminate documentation, but it streamlines it in a form that gives the developer what is needed to do the work without getting bogged down in minutiae. Agile documents requirements as user stories, which are sufficient for a software developer to begin building a new function. The Agile Manifesto values documentation, but it values working software more.
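User stories are often written with the widely used "As a (role), I want (goal) so that (benefit)" template. The template is a community convention rather than part of the Agile Manifesto, and the class below is a hypothetical sketch of how such a story might be captured as lightweight, structured data instead of a long specification document.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class UserStory:
    """A lightweight requirement: who wants what, and why."""
    role: str
    goal: str
    benefit: str
    # Optional conditions the team can use to decide when the story is done.
    acceptance_criteria: Tuple[str, ...] = ()

    def __str__(self) -> str:
        return f"As a {self.role}, I want {self.goal} so that {self.benefit}."


if __name__ == "__main__":
    story = UserStory(
        role="customer",
        goal="to save items to a wish list",
        benefit="I can buy them later",
        acceptance_criteria=("Saved items persist after logging out",),
    )
    print(story)
```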

3. Customer collaboration over contract negotiation

Negotiation is the period in which the customer and the product manager work out the details of a delivery, with points along the way at which the details may be renegotiated. Collaboration is a different creature entirely. With development models such as Waterfall, customers negotiate the requirements for the product, often in great detail, before any work starts.

The Agile Manifesto describes a customer who is engaged and collaborates throughout the development process. This makes it far easier for development to meet the customer's needs. Agile methods may involve the customer at intervals for periodic demonstrations, but a project could just as easily include an end user as a regular member of the team who attends all meetings, ensuring that the product meets the customer's business needs.

4. Responding to change over following a plan

Traditional software development regarded change as an expense, so it was to be avoided. The intention was to develop detailed, elaborate plans with a defined set of features, in which everything generally had as high a priority as everything else and had many dependencies on being delivered in a certain order so that the team could move on to the next piece of the puzzle.

Key takeaways

- The Agile Manifesto consists of four core values and 12 supporting principles that direct the Agile approach to the development of software.

- The Agile Manifesto is a document that lays out the core concepts and principles behind the Agile methodology and aims to work more effectively and sustainably with development teams.

The Twelve Principles are the guiding principles for the methodologies grouped under the title "The Agile Movement." They describe a culture in which change is welcome and the customer is the focus of the work. As Alistair Cockburn, one of the signatories to the Agile Manifesto, points out, they also demonstrate the movement's intent to bring development into alignment with business needs.

The twelve principles of agile development are:

1. Customer satisfaction through early and continuous software delivery –

Customers are happier when they receive working software at regular intervals, rather than waiting long periods between releases.

2. Accommodate changing requirements throughout the development process –

When a requirement or feature request changes, the change is accommodated in a way that avoids delays.

3. Frequent delivery of working software –

Scrum follows this principle, since the team works in software sprints or iterations that ensure regular delivery of working software.

4. Collaboration between the business stakeholders and developers throughout the project -

When the company and technical staff are aligned, better decisions are made.

5. Support, trust, and motivate the people involved –

Motivated teams are more likely than dissatisfied teams to perform their best work.

6. Enable face-to-face interactions –

When development teams are co-located, communication is more effective.

7. Working software is the primary measure of progress –

Delivering usable software to the customer is the ultimate measure of success.

8. Agile processes to support a consistent development pace –

Teams set a repeatable and maintainable rate at which they will produce working software, and with each release they repeat it.

9. Attention to technical detail and design enhances agility –

The right skills and good design ensure that the team can maintain the pace, enhance the product consistently, and manage change.

10. Simplicity –

Create just enough for the moment to get the job done.

11. Self-organizing teams encourage great architectures, requirements, and designs –

Skilled, empowered team members who have decision-making authority take ownership, communicate frequently with the rest of the team, and share ideas that lead to quality products.

12. Regular reflections on how to become more effective –

Through self-improvement, process improvement, skill development, and better techniques, team members learn to work more effectively.

Key takeaways

- They define a community in which change is welcome, and the work focuses on the client.

- For the methodologies used under the title "The Agile Movement," the Twelve Principles are the guiding principles.

The iterative waterfall model was once widely used to complete projects. Today, however, developers who use it to build software face several problems. The key challenges during development are handling change requests from customers and the high cost and time needed to implement those changes. To counter these limitations of the waterfall model, the Agile Software Development model was proposed in the mid-1990s.

The Agile model was designed primarily to help a project adapt quickly to change requests. Its main aim is therefore to enable quick project completion, and agility is necessary to achieve this. Agility is achieved by tailoring the process to the project and removing activities that may not be essential for that particular project. Anything that wastes time and effort is avoided.

The term Agile actually refers to a group of development processes. These processes share certain fundamental characteristics, but there are subtle differences among them. A few Agile SDLC models are listed below:

● Crystal

● Atern

● Feature-driven development

● Scrum

● Extreme programming (XP)

● Lean development

● Unified process

In the Agile model, the requirements are decomposed into many small parts that can be developed incrementally. The Agile model adopts iterative development: each incremental part is developed over one iteration. Each iteration is intended to be small and easy to manage, and can be completed within a few weeks. Only one iteration is planned, developed, and deployed to the customer at a time; long-term plans are not made.

The Agile model is a combination of the iterative and incremental process models. The steps involved in agile SDLC models are:

● Requirement gathering

● Requirement Analysis

● Design

● Coding

● Unit testing

● Acceptance testing

The time allotted to complete an iteration is known as a time box. The time box is the maximum amount of time available to deliver an iteration to the customer, so the end date of an iteration does not change. If necessary to deliver on time, however, the development team may reduce the functionality delivered within a time box.
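The time-box rule can be sketched in Python. This is a minimal illustration, not a prescribed planning algorithm: it assumes features arrive already sorted by priority and carry a hypothetical effort estimate in days. The length of the time box is fixed, so features that do not fit are deferred instead of the end date being moved.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str             # hypothetical feature label
    estimate_days: float  # hypothetical effort estimate


def plan_time_box(features, time_box_days):
    """Fill a fixed-length time box with features in priority order.

    The end date (time_box_days) never moves; features that do not fit
    are deferred to a later iteration instead.
    """
    planned, deferred = [], []
    used = 0.0
    for feature in features:  # assumed to be sorted by priority already
        if used + feature.estimate_days <= time_box_days:
            planned.append(feature)
            used += feature.estimate_days
        else:
            deferred.append(feature)  # reduce scope, keep the date
    return planned, deferred


backlog = [Feature("login", 4), Feature("search", 5), Feature("export", 3)]
planned, deferred = plan_time_box(backlog, time_box_days=10)
print([f.name for f in planned])   # ['login', 'search']
print([f.name for f in deferred])  # ['export']
```

The loop keeps scanning after a feature is deferred, so a smaller, lower-priority item may still fill the remaining time; stopping at the first feature that does not fit would be an equally reasonable choice.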

Key takeaway:

● The basic idea of the Agile model is that the customer receives an increment after each time box.

● The term Agile actually refers to a group of development processes.

● Agility is achieved by tailoring the process to the project and removing activities that may not be essential for that particular project.

As with any system or process, myths and misunderstandings about agile can gain credence over time and come to be accepted as common knowledge.

Myth 1 - Agile is new

Agile is certainly not new; agile processes have been around for a long time. Most of the frameworks now collectively known as agile emerged in the late 1980s and 1990s, so agile is a mature approach with which many people are already familiar.

In essence, agile is about enabling inspection and adaptation in complex environments where there is uncertainty. Evolutionary theory, for instance, rests on the same idea. It is also how human beings interact with the world day to day. In reality, it is the only way that human beings can act effectively in a complex world.

Myth 2 - Implementing agile is easy

Typically, changing a complex system delivery lifecycle to a simple one is not easy. It is usually easier for organizations to make things more complicated than to simplify them.

Unfortunately, some companies attempt to adopt an agile operating model or a single agile framework 'by the book', without recognizing the complexities of change.

Inevitably, such companies struggle to achieve the true advantages of agile. By reading a book, you can potentially learn to fly a plane, but don't expect me to sit next to you on your first take-off!

Myth 3 - Agile gives instant benefit

While a transition to agile can offer tremendous advantages, most transitions go through a learning curve. While individuals and organisations are learning, delivery capacity will actually drop before it makes the step-change upwards and begins to reach the enhanced level.

Myth 4 - Agile means no documentation

This misconception most likely stems from a misreading of the Agile Manifesto, which states that we value "working software over comprehensive documentation."

It is important to note that the manifesto does not say documentation is unnecessary. It explicitly states that, while there is value in the items on the right (such as comprehensive documentation), the items on the left (such as working software) are valued more. The emphasis is on producing working software rather than on spending excessive time writing comprehensive documentation up front.

Every effective agile delivery should produce value-driven documentation that benefits the business: documentation that helps the organization use the product efficiently and helps the technical team support and maintain it.

Myth 5 - Agile means “hacking” code together with little thought of architecture or design

"Hacking" here means cobbling together an IT system with little or no architectural thought or design.

The Agile Manifesto states that "continuous attention to technical excellence and good design enhances agility," and several agile frameworks give the team practices and techniques for producing very high-quality code. In extreme programming, for instance, several practices, such as test-first development, pair programming, and refactoring, are aimed primarily at ensuring that the product being delivered is fit for purpose.

Myth 6 - Agile is a silver bullet

Agile is not the solution to every IT problem. There is no single answer to all IT problems; instead, several frameworks must be combined, each of which provides part of the solution. Delivery and management frameworks such as agile must be adopted deliberately: the real-world context in which a method will be applied must be understood, and the best combination of agile and non-agile approaches for that environment must be chosen. No single framework is a silver bullet.

Myth 7 - Agile: just read a book

Understanding agile cannot be achieved simply by reading a book. It is a very good idea to read some books by leading agile practitioners (the BCS/Radtac book Agile Foundations makes many suggestions), but reading alone does not replace the practical experience needed to develop an agile mindset and to transform an organization or team effectively.

Myth 8 - Agile only relates to software delivery

It is true that the Agile Manifesto defines agile in the context of software delivery, but agile can be applied effectively in business environments that are not strictly software-related. Agile suits any dynamic business environment that experiences variability, such as marketing or business change.

Key takeaways

Myths about agile spread misinformation and uncertainty.

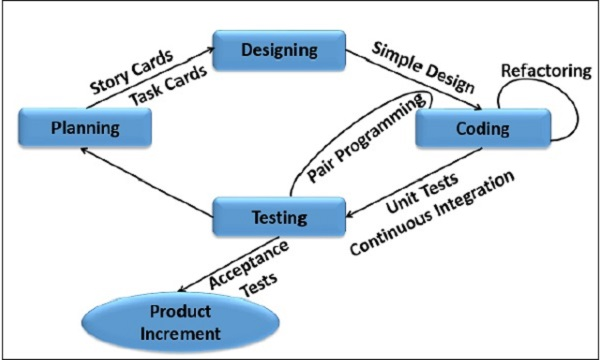

1.13.1 Extreme programming

Extreme Programming builds on these activities and on coding. Coding is treated as the detailed (not the only) design activity, with many tight feedback loops through effective implementation, testing, and continuous refactoring.

Extreme Programming is based on the values below:

● Communication

● Simplicity

● Feedback

● Courage

● Respect

XP is a lightweight, efficient, low-risk, flexible, predictable, scientific, and fun way to develop software.

eXtreme Programming (XP) was conceived and developed to address the specific needs of software development by small teams in the face of vague and changing requirements.

Extreme Programming is one of the Agile software development methodologies. It provides values and principles to guide the team's behaviour, and the team is expected to self-organize. Extreme Programming provides specific core practices, where:

● Each activity is easy and complete in itself.

● More complex and changing behaviour is created by a combination of techniques.

Extreme Programming involves −

● Writing unit tests before programming and keeping all of the tests running at all times. The unit tests are automated, and defects are eliminated early, which reduces costs.

● Starting with a simple design, just enough to code the features at hand, and redesigning when required.

● Programming in pairs (called pair programming), with two programmers at one computer who take turns at the keyboard. While one of them is at the keyboard, the other continuously reviews the work and offers suggestions.

● Integrating and testing the whole system several times a day.

● Putting a minimal working system into production quickly and upgrading it whenever appropriate.

● Keeping the customer involved at all times and obtaining constant feedback.

Iterating makes it possible to accommodate changes as the software evolves with changing requirements.
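The test-first practice in the first bullet can be illustrated with Python's standard unittest module. The function word_count is a hypothetical example chosen for brevity: the tests are written first and fail until the simplest implementation that satisfies them is added, which also reflects the "simple design, redesign when required" bullet.

```python
import unittest


# Step 1 (test first): these tests are written before word_count exists,
# so they fail until the implementation below is added.
class WordCountTest(unittest.TestCase):
    def test_empty_string_has_no_words(self):
        self.assertEqual(word_count(""), 0)

    def test_words_are_separated_by_any_whitespace(self):
        self.assertEqual(word_count("pair  programming\nworks"), 3)


# Step 2: the simplest code that makes the tests pass.
# str.split() with no argument splits on runs of whitespace.
def word_count(text: str) -> int:
    return len(text.split())
```

Running the file with a test runner such as python -m unittest executes both tests; in XP they would be re-run on every integration, so a later redesign that breaks them is caught immediately.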

Fig 4: extreme programming

1.13.2 Scrum

Scrum is a lightweight agile framework used mainly for managing software development. Scrum is:

❏ Lightweight, because it prescribes only a few elements:

● Three roles: Team, Scrum Master (often a Project Manager), Product Owner (often a Product Manager)

● Three meetings: Sprint Planning, Daily Scrum, Retrospective

● Three artifacts: Product Backlog, Sprint Backlog, Burndown chart

❏ Agile, because it maximizes responsiveness to customers' changing needs

❏ A framework, because it is not a process but a set of practices and principles from which a process can be built.

Scrum is a process framework that has been used to manage work on complex products since the early 1990s. Scrum is not a process, technique, or definitive method. Rather, it is a framework within which various processes and techniques can be employed. Scrum makes clear the relative effectiveness of product management and work techniques so that the product, the team, and the working environment can be continually improved.

The Scrum framework consists of Scrum Teams and their associated roles, events, artifacts, and rules. Each component within the framework serves a specific purpose and is essential to Scrum's success and use.

Scrum's rules bind together the roles, events, and artifacts, governing the relationships and interactions between them.
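The burndown chart, one of the three Scrum artifacts listed above, plots the work remaining in a sprint against time, usually next to an "ideal" straight line. The Python sketch below computes both series. It assumes work is measured in story points and that completed points are recorded once per day; both are illustrative assumptions, not rules stated in the text, and the function names are hypothetical.

```python
def ideal_burndown(total_points, sprint_days):
    """Ideal line: remaining work falls linearly from the total to zero."""
    return [total_points - total_points * day / sprint_days
            for day in range(sprint_days + 1)]


def actual_burndown(total_points, completed_per_day):
    """Actual line: remaining work after each day's completed points."""
    remaining = [total_points]
    for done in completed_per_day:
        remaining.append(remaining[-1] - done)
    return remaining


def days_behind(ideal, actual):
    """Days on which more work remains than the ideal line allows."""
    return [day for day, (i, a) in enumerate(zip(ideal, actual)) if a > i]


ideal = ideal_burndown(20, 5)
actual = actual_burndown(20, [3, 5, 0, 6, 6])
print(ideal)                       # [20.0, 16.0, 12.0, 8.0, 4.0, 0.0]
print(actual)                      # [20, 17, 12, 12, 6, 0]
print(days_behind(ideal, actual))  # [1, 3, 4]
```

Plotting the two lists against the day index gives the familiar chart; a gap above the ideal line is an early signal for the team to discuss at the Daily Scrum.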

Benefits of scrum:

Different stakeholders want different things from a software development process:

● Developers want to write code, not documents.

● Quality Assurance engineers want to create test plans that ensure product quality, and to receive high-quality code to test.

● Project managers want a process that is simple to schedule, implement, and control.

● Product Managers want features that are easily implemented, with no bugs.

Key takeaways

- XP is a lightweight, efficient, low-risk, flexible, predictable, scientific, and fun way to develop software.

- Extreme Programming is one of the Agile software development methodologies.

- It provides values and principles to guide the team's behaviour.

- Scrum is not a process, technique, or definitive method.

- The Scrum framework consists of Scrum Teams and their associated roles, events, artifacts, and rules.

Agile Practices

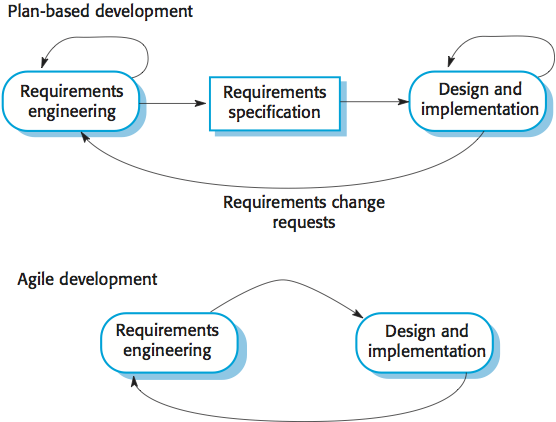

Plan-driven processes are processes in which all of the process activities are planned in advance, and progress is measured against this plan. Different types of system need different software processes.

Agile approaches to software development consider design and implementation to be the central activities in the software process. They incorporate other activities, such as requirements elicitation and testing, into design and implementation.

By contrast, a plan-driven approach to software engineering identifies separate stages in the software process, with outputs associated with each stage. The outputs from one stage are used as a basis for planning the following process activity.

In an agile approach, iteration occurs across activities.

Therefore, the requirements and the design are developed together rather than separately. A plan-driven software process is not necessarily a waterfall model; plan-driven, incremental development and delivery is possible.

It is perfectly feasible to allocate requirements and to plan the design and development phase as a series of increments. An agile process is not inevitably code-focused, and it may produce some design documentation.

Fig 5: Plan - driven and agile development

Most projects include elements of both plan-driven and agile processes. Deciding on the balance depends on technical, human, and organizational issues:

1. Is it important to have a very detailed specification and design before moving to implementation? If so, a software engineer probably needs to use a plan-driven approach.

2. Is an incremental delivery strategy realistic, where the software is delivered to customers and rapid feedback is obtained from them? If so, consider using agile methods.

3. What technologies are available to support system development? Agile methods rely on good tools to keep track of an evolving design.

4. What type of system is being developed? Plan-driven approaches may be required for systems that need a lot of analysis before implementation (e.g. a real-time system with complex timing requirements).

5. What is the expected system lifetime? Long-lifetime systems may require more design documentation to communicate the original intentions of the system developers to the support team.

6. How large is the system being developed? Agile methods are most effective when the system can be developed with a small, co-located team that can communicate informally. This may not be possible for large systems that require larger development teams, so a plan-driven approach may have to be used.

Key takeaways

- Agile approaches to software development consider design and implementation to be the central activities in the software process.

- An agile process is not inevitably code-focused, and it may produce some design documentation.

- In an agile approach, iteration occurs across activities.

Pair programming is a programming style in which two programmers work side by side on one computer, share one screen, keyboard, and mouse, and collaborate on the same concept, algorithm, code, or test on an ongoing basis.

One programmer, called the driver, has control of the keyboard/mouse and actively implements the code or writes a test. The other programmer, called the navigator, continuously observes the driver's work to spot defects and also thinks strategically about the direction of the work.

When needed, the two programmers brainstorm on any challenging problem. They periodically switch roles and work together as equals to develop the software.

Advantages of pair programming

The main benefits of pair programming are −

❏ Many mistakes are caught as they are typed, rather than in QA testing or in the field.

❏ Statistically, the final product contains fewer defects.

❏ The designs are better and the code is shorter.

❏ The team solves problems faster.

❏ People learn significantly more about the system and about software development.

❏ By the end of the project, many people understand each piece of the system.

❏ People learn to work together and communicate together more frequently, creating a smoother flow of knowledge and team dynamics.

❏ People appreciate their job more.

The use of pair programming has been shown to increase the productivity and quality of software products.

Studies of pair programming show that:

● Pairs use no more man-hours than singles.

● Pairs create fewer defects.

● Pairs create fewer lines of code.

● Pairs enjoy their work more.

Key takeaways

- One programmer, called the driver, has control of the keyboard/mouse and actively implements the code or writes a test.

- The two programmers brainstorm on any challenging issue when needed.

Continuous integration in DevOps, referred to as 'CI' for short, is an essential process, or collection of processes, defined and performed as part of a pipeline called the 'Build Pipeline' or 'CI Pipeline'.

In DevOps practice, the Development and Operations teams share a single version control tool in which everyone's code is stored in a master code base, enabling the team to work in parallel.

In DevOps, therefore, continuous integration is simply the merging of each developer's code into the master copy on the main branch, where version control is maintained. There is no limit on the number of times code can be merged in a day.

As soon as a developer checks code into version control, the CI process is triggered.

The CI process includes:

- Merging all the developers' code into the main line,

- Triggering a build,

- Compiling the code and creating a build, and lastly

- Carrying out the unit tests.

Continuous Integration is therefore a method of integrating all the developers' code in a central location and validating each merge with an automated build and test.

Technically, the following happens during CI:

A continuous integration server hosts the CI tool, which continuously monitors the version control tool for code check-ins. As soon as a check-in is detected, it triggers automatic compilation and build, and runs unit tests along with static code analysis and basic automated security testing.

All of these checks are incorporated into the CI pipeline using different tools, for example Jenkins as the CI server, TestNG or NUnit for unit testing, Sonar for static code analysis, and Fortify for security testing.

The full CI pipeline is therefore an automated process that requires no manual intervention and runs within a few seconds or minutes.
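The CI steps described above (merge, build, unit test, then analysis and security checks) can be sketched as an ordered pipeline that stops at the first failing stage. This Python sketch is illustrative only: the stage names and lambda stand-ins are hypothetical placeholders for real tools such as a compiler, TestNG/NUnit, Sonar, or a security scanner, and a real CI server such as Jenkins would run something like run_pipeline whenever it detects a check-in.

```python
from typing import Callable, List, Tuple

# A stage is a (name, check) pair; check() returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure."""
    log: List[str] = []
    for name, check in stages:
        ok = check()
        log.append(f"{name}: {'passed' if ok else 'FAILED'}")
        if not ok:
            # Later stages are skipped and the check-in is reported as broken.
            return False, log
    return True, log


# Hypothetical stand-ins for real tools; here the unit tests fail.
stages = [
    ("merge to main line", lambda: True),
    ("build", lambda: True),
    ("unit tests", lambda: False),
    ("static analysis", lambda: True),
]
ok, log = run_pipeline(stages)
print(ok)   # False
print(log)  # ['merge to main line: passed', 'build: passed', 'unit tests: FAILED']
```

Failing fast is the design choice that gives developers the quick feedback described below: the log pinpoints the first broken stage without waiting for slower checks to run.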

Major benefits:

1. CI runs as soon as the developer checks in code and reports the results within seconds, so developers know immediately whether their code built successfully or broke the build.

2. It also tells developers whether their code merged successfully with changes another team member made to the same part of the code base, or broke it. CI thus gives faster feedback on code and makes later merges easier and less error-prone.

Key takeaways

- DevOps can be seen as an extension of Agile principles.

- Continuous integration (CI), a technique first named by Grady Booch in which source code changes from all developers on a team are continuously merged into a common mainline, is a cornerstone of DevOps.

Refactoring consists of improving the internal structure of an existing program's source code while preserving its external behaviour.

The noun "refactoring" also refers to one specific behaviour-preserving transformation, such as "Extract Method" or "Introduce Parameter."

Refactoring does not mean:

● rewriting code

● fixing bugs

● improving observable aspects of software such as its interface

Refactoring is risky in the absence of safeguards against introducing defects (i.e. violating the 'behaviour preserving' condition). Safeguards include aids to regression testing, such as automated unit tests or automated acceptance tests, and aids to formal reasoning, such as type systems.

Code refactoring is the process of clarifying and simplifying the design of existing code without changing its behaviour. Agile teams maintain and extend their code a great deal from iteration to iteration, and this is hard to do without continuous refactoring, because un-refactored code tends to rot. Rot takes several forms: unhealthy dependencies between classes or packages, poor allocation of class responsibilities, too many responsibilities per method or class, duplicate code, and many other forms of confusion and clutter.

Refactoring code ruthlessly removes rot, keeping the code easy to maintain and extend. This extensibility is the reason for refactoring and the measure of its success. Note, however, that refactoring code this extensively is only "safe" if we have comprehensive unit test suites of the kind we get when we work test-first. Without being able to run those tests after each small refactoring step, we run the risk of introducing bugs.
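A small "Extract Method" refactoring illustrates the behaviour-preserving idea. In this Python sketch the invoice functions and the 10% discount rule are hypothetical; the before and after versions produce identical output, and the assertion loop at the end stands in for the regression tests that make refactoring safe.

```python
# Before: one function both computes the total and formats the report.
def invoice_before(items):
    total = 0
    for price, qty in items:
        total += price * qty
    if total > 100:
        total = total * 0.9  # hypothetical 10% discount on large orders
    return f"Total: {total:.2f}"


# After "Extract Method": the calculation and the discount rule each get
# their own named function. External behaviour is unchanged.
def order_total(items):
    return sum(price * qty for price, qty in items)


def apply_discount(total):
    return total * 0.9 if total > 100 else total


def invoice_after(items):
    return f"Total: {apply_discount(order_total(items)):.2f}"


# Safeguard: a regression check that both versions agree on sample inputs.
for sample in ([], [(10, 2)], [(60, 2), (5, 1)]):
    assert invoice_before(sample) == invoice_after(sample)
```

Each extracted function is now small enough to test and reuse on its own, which is the extensibility the text describes as the goal of refactoring.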

Benefits:

The following benefits of refactoring are claimed:

● Refactoring improves objective attributes of code (length, duplication, coupling and cohesion, cyclomatic complexity) that correlate with ease of maintenance.

● Refactoring improves code comprehension.

● Refactoring helps each developer think about and understand design decisions, particularly in the context of collective code ownership.

● Refactoring favours the emergence of reusable design elements (such as design patterns) and code modules.

Key takeaways

- Refactoring is risky in the absence of safeguards against introducing defects.

- Code refactoring ruthlessly removes rot, keeping the code easy to maintain and extend.

- This extensibility is the reason for refactoring and the measure of its success.

References

- Roger Pressman, “Software Engineering: A Practitioner’s Approach”, McGraw Hill, ISBN 0-07-337597-7

- Ian Sommerville, “Software Engineering”, Addison-Wesley, ISBN 0-13-7035152

- Joseph Phillips, “IT Project Management: On Track from Start to Finish”, Tata McGraw-Hill, ISBN-13 978-0-07106727-0, ISBN-10 0-07-106727-2

- Pankaj Jalote, “Software Engineering: A Precise Approach”, Wiley India, ISBN 9788-1265-2311-5

- Marchewka, “Information Technology Project Management”, Wiley India, ISBN 9788-1265-4394-6

- Rajib Mall, “Fundamentals of Software Engineering”, Prentice Hall India, ISBN-13 9788-1203-4898-1