Unit 3

Matrices

Symmetric matrix: In linear algebra, a symmetric matrix is a square matrix that is equal to its transpose, that is, $A = A^{T}$. Because equal matrices have equal dimensions, only square matrices can be symmetric. The entries of a symmetric matrix are symmetric with respect to the main diagonal: if $a_{ij}$ denotes the entry in the i-th row and j-th column, then $a_{ij} = a_{ji}$ for all indices i and j. Every square diagonal matrix is symmetric, since all off-diagonal elements are zero.

A square matrix is said to be a symmetric matrix if its transpose is equal to the matrix itself.

For example:

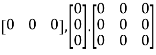

&

&

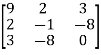

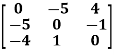

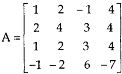

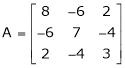

Example: Check whether the following matrix A is symmetric.

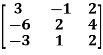

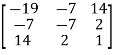

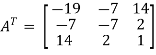

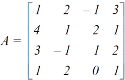

A =

Sol. A matrix is called symmetric if its transpose is the same as the matrix itself.

So we first find its transpose.

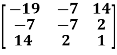

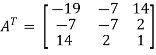

Taking the transpose of matrix A, we find that

$A' = A$

Hence the matrix A is symmetric.

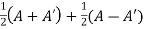

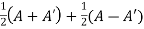

Example: Show that any square matrix can be expressed as the sum of a symmetric matrix and an anti-symmetric matrix.

Sol. Suppose A is any square matrix.

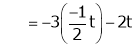

Then,

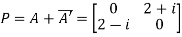

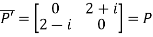

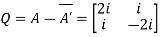

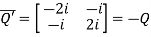

$$A = \tfrac{1}{2}(A + A') + \tfrac{1}{2}(A - A')$$

Now,

$(A + A')' = A' + A = A + A'$

so $A + A'$ is a symmetric matrix.

Also,

$(A - A')' = A' - A = -(A - A')$

so $A - A'$ is an anti-symmetric matrix.

Hence,

Square matrix = symmetric matrix + anti-symmetric matrix
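The decomposition above can be tried out numerically. The following is a minimal sketch in Python, assuming matrices are stored as lists of rows; the function names and the 3 × 3 input matrix are illustrative choices and are not taken from the text.

```python
from fractions import Fraction


def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def split_symmetric_skew(a):
    """Return (S, K) with S = (A + A')/2 and K = (A - A')/2."""
    at = transpose(a)
    n = len(a)
    s = [[Fraction(a[i][j] + at[i][j], 2) for j in range(n)] for i in range(n)]
    k = [[Fraction(a[i][j] - at[i][j], 2) for j in range(n)] for i in range(n)]
    return s, k


# Hypothetical integer example matrix (not from the text).
A = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
S, K = split_symmetric_skew(A)

print(S == transpose(S))                                   # S equals its transpose
print(K == [[-x for x in row] for row in transpose(K)])    # K equals minus its transpose
```

Exact fractions are used so that the halves do not introduce rounding; adding S and K entry by entry gives back A.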

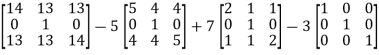

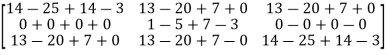

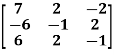

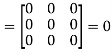

Example 1: Let us test whether the given matrix is symmetric or not, i.e., we check whether

$A = A^{T}$

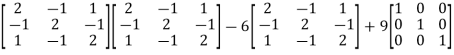

(1) A =

Now

Now

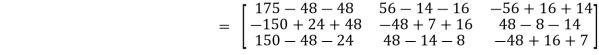

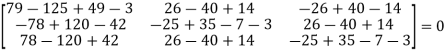

=

=

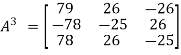

A =

A =

Hence the given matric symmetric

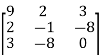

Example 2: Let A be a real symmetric matrix whose diagonal entries are all positive real numbers.

Is it true that all of the diagonal entries of the inverse matrix $A^{-1}$ are also positive? If so, prove it. Otherwise, give a counterexample.

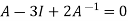

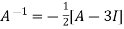

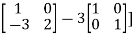

Solution: The statement is false, so we give a counterexample.

Let us consider the following 2 × 2 matrix

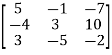

$$A = \begin{bmatrix} 1 & 2 \\ 2 & 1 \end{bmatrix}$$

The matrix A satisfies the required conditions, that is A is symmetric and its diagonal entries are positive.

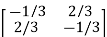

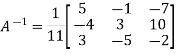

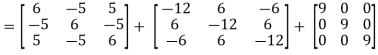

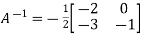

The determinant is det(A) = (1)(1) - (2)(2) = -3, and the inverse of A is given by

$$A^{-1} = \frac{1}{-3}\begin{bmatrix} 1 & -2 \\ -2 & 1 \end{bmatrix} = \begin{bmatrix} -1/3 & 2/3 \\ 2/3 & -1/3 \end{bmatrix}$$

by the formula for the inverse of a 2 × 2 matrix.

This shows that the diagonal entries of the inverse matrix $A^{-1}$ are negative.
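The counterexample can be checked with a few lines of Python. This is only a sketch: it assumes the matrix has rows (1, 2) and (2, 1), which is consistent with the determinant (1)(1) - (2)(2) = -3 quoted above, and the function name is an illustrative choice.

```python
from fractions import Fraction


def inverse_2x2(m):
    """Inverse of [[a, b], [c, d]] by the formula (1/det) * [[d, -b], [-c, a]]."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]


# Assumed counterexample: symmetric, with positive diagonal entries.
A = [[1, 2], [2, 1]]
A_inv = inverse_2x2(A)

# Both diagonal entries of the inverse are -1/3, i.e. negative.
print(A_inv[0][0], A_inv[1][1])
```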

Skew-symmetric:

A skew-symmetric matrix is a square matrix that is equal to the negative of its own transpose. Skew-symmetric matrices are also commonly called anti-symmetric matrices.

In other words-

Skew-symmetric matrix-

A square matrix A is said to be a skew-symmetric matrix if –

1. A’ = -A, [ A’ is the transpose of A]

2. As a consequence, all the main diagonal elements are zero.

For example-

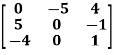

A =

This is a skew-symmetric matrix, because the transpose of matrix A is equal to -A.

Example: Check whether the following matrix A is skew-symmetric.

A =

Sol. This is not a skew-symmetric matrix, because the transpose of matrix A is not equal to -A.

-A ≠ A’

Example: Let A and B be n × n skew-symmetric matrices, namely $A^{T} = -A$ and $B^{T} = -B$.

(a) Prove that A + B is skew-symmetric.

(b) Prove that cA is skew-symmetric for any scalar c.

(c) Let P be an n × m matrix. Prove that $P^{T}AP$ is skew-symmetric.

Solution: (a) $(A+B)^{T} = A^{T} + B^{T} = (-A) + (-B) = -(A+B)$

Hence A + B is skew-symmetric.

(b) $(cA)^{T} = cA^{T} = c(-A) = -cA$

Thus, cA is skew-symmetric.

(c) Using the properties of the transpose, we get

$(P^{T}AP)^{T} = P^{T}A^{T}(P^{T})^{T} = P^{T}A^{T}P = P^{T}(-A)P = -(P^{T}AP)$

Thus $P^{T}AP$ is skew-symmetric.

Orthogonal matrix: An orthogonal matrix is the real specialization of a unitary matrix, and thus always a normal matrix. Although we consider only real matrices here, the definition can be used for matrices with entries from any field.

Suppose A is a square matrix of order n × n with real elements and $A^{T}$ is the transpose of A. Then, according to the definition, A is orthogonal if $A^{T} = A^{-1}$, which gives

$AA^{T} = I$

where I is the identity matrix, $A^{-1}$ is the inverse of matrix A, and n denotes the number of rows and columns.

Orthogonal matrix-

Any square matrix A is said to be an orthogonal matrix if the product of the matrix A and its transpose is an identity matrix.

Such that,

A. A’ = I

Matrix × transpose of matrix = identity matrix

Note- if |A| = 1, then the orthogonal matrix A is said to be proper.
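The condition A · A’ = I can be checked numerically. The sketch below is a minimal illustration in Python, assuming a matrix stored as a list of rows; the helper names, the tolerance, and the rotation matrix used as input are assumptions made for the example and do not come from the text.

```python
import math


def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def is_orthogonal(a, tol=1e-12):
    """True when A * A' equals the identity matrix up to the tolerance."""
    n = len(a)
    product = matmul(a, transpose(a))
    return all(abs(product[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))


# Hypothetical input: a plane rotation by 30 degrees.
theta = math.pi / 6
R = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta), math.cos(theta)]]

print(is_orthogonal(R))                  # rotation matrices satisfy A * A' = I
print(is_orthogonal([[1, 2], [3, 4]]))   # a generic matrix does not
```

A tolerance is used because the entries are floating-point numbers, so the product is only approximately the identity.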

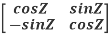

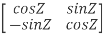

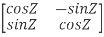

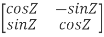

Example: The matrices shown here are orthogonal matrices.

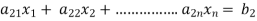

Example: Prove that Q =  is an orthogonal matrix.

Solution: Given Q =

So, $Q^{T}$ =  … (1)

Now, we have to prove $Q^{T} = Q^{-1}$.

Now we find $Q^{-1}$:

$Q^{-1}$ =  … (2)

Comparing (1) and (2), we get $Q^{T} = Q^{-1}$.

Therefore, Q is an orthogonal matrix.

Key takeaways-

- A square matrix is said to be a symmetric matrix if its transpose is equal to the matrix itself.

- Square matrix = symmetric matrix + anti-symmetric matrix

- A skew-symmetric matrix is a square matrix that is equal to the negative of its own transpose, A’ = -A. Skew-symmetric matrices are also commonly called anti-symmetric matrices.

- All the main diagonal elements of a skew-symmetric matrix are zero.

- A square matrix is orthogonal if matrix × transpose of matrix = identity matrix.

Definition:

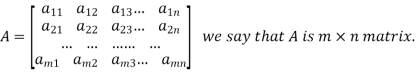

An arrangement of mn numbers in m rows and n columns is called a matrix of order m × n.

Generally, a matrix is denoted by a capital letter such as A, B, C, etc.

Types of matrices:

Row matrix:

A matrix with only one row and any number of columns is called a row matrix.

A =

Column matrix:

A matrix with only one column and any number of rows is called a column matrix.

A =

Square matrix:

A matrix in which the number of rows is equal to the number of columns is called a square matrix. Thus if an m × n matrix is a square matrix, then m = n and it is said to be of order n.

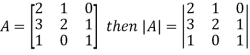

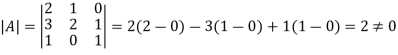

The determinant having the same elements as the square matrix A is called the determinant of the matrix A, denoted by |A|.

The diagonal elements of matrix A are 2, 2 and 1; this diagonal is called the leading or principal diagonal.

The sum of the diagonal elements of a square matrix A is called the trace of A.

A square matrix is said to be singular if its determinant is zero; otherwise it is non-singular.

Hence the square matrix A is non-singular.

Diagonal matrix

A square matrix is said to be diagonal matrix if all its non diagonal elements are zero.

Scalar matrix:

A diagonal matrix is said to be scalar matrix if its diagonal elements are equal.

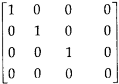

Identity matrix:

A square matrix in which elements in the diagonal are all 1 and rest are all zero is called an identity matrix or Unit matrix.

Null Matrix:

If all the elements of a matrix are zero, it is called a null or zero matrixes.

Ex:  etc.

etc.

Symmetric matrix:

A square matrix is said to be a symmetric matrix if its transpose is equal to the matrix itself.


Hermitian matrix:

A square matrix $A = [a_{ij}]$ is said to be a Hermitian matrix if every i-jth element of A is equal to the complex conjugate of the j-ith element of A.

It means, $a_{ij} = \bar{a}_{ji}$ for all i and j.

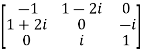

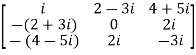

For example:

Necessary and sufficient condition for a matrix A to be Hermitian –

$A = (\bar{A})'$

Skew-Hermitian matrix-

A square matrix $A = [a_{ij}]$ is said to be a skew-Hermitian matrix if every i-jth element of A is equal to the negative of the complex conjugate of the j-ith element of A, that is, $a_{ij} = -\bar{a}_{ji}$.

Note- all the diagonal elements of a skew-Hermitian matrix are either zero or purely imaginary.

For example:

The necessary and sufficient condition for a matrix A to be skew-Hermitian is as follows-

$-A = (\bar{A})'$

Note: A Hermitian matrix is a generalization of a real symmetric matrix, and every real symmetric matrix is Hermitian.

Similarly, a skew-Hermitian matrix is a generalization of a real skew-symmetric matrix, and every real skew-symmetric matrix is skew-Hermitian.

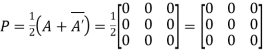

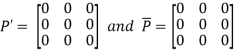

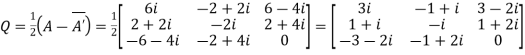

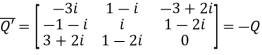

Theorem: Every square complex matrix can be uniquely expressed as the sum of a Hermitian matrix and a skew-Hermitian matrix.

In other words, if A is a given square complex matrix, then $\tfrac{1}{2}(A + \bar{A}')$ is Hermitian and $\tfrac{1}{2}(A - \bar{A}')$ is skew-Hermitian, and A is the sum of the two.
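The theorem can be illustrated with Python's built-in complex numbers. The sketch below assumes the usual splitting P = (A + Ā’)/2 and Q = (A - Ā’)/2; the function names and the 2 × 2 complex matrix are hypothetical and not taken from the examples in the text.

```python
def conj_transpose(m):
    """Return the conjugate transpose (A-bar)' of a matrix stored as a list of rows."""
    return [[m[j][i].conjugate() for j in range(len(m))] for i in range(len(m[0]))]


def hermitian_parts(a):
    """Return (P, Q) with P = (A + (A-bar)')/2 and Q = (A - (A-bar)')/2."""
    ah = conj_transpose(a)
    n = len(a)
    p = [[(a[i][j] + ah[i][j]) / 2 for j in range(n)] for i in range(n)]
    q = [[(a[i][j] - ah[i][j]) / 2 for j in range(n)] for i in range(n)]
    return p, q


# Hypothetical complex matrix.
A = [[2 + 1j, 3 - 2j],
     [1 + 4j, 5j]]
P, Q = hermitian_parts(A)

print(P == conj_transpose(P))                                  # P is Hermitian
print(Q == [[-x for x in row] for row in conj_transpose(Q)])   # Q is skew-Hermitian
```

For this input the diagonal of P is real and the diagonal of Q is purely imaginary, in line with the notes above.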

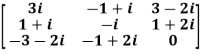

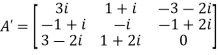

Example 1: Express the matrix A as the sum of a Hermitian and a skew-Hermitian matrix, where

A =

Therefore  and

Let

Again

Hence P is a hermitian matrix.

Let

Again

Hence Q is a skew- hermitian matrix.

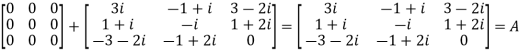

We Check

P +Q=

Hence proved.

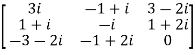

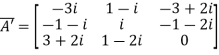

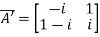

Example 2: If A =  then show that

(i)  is a Hermitian matrix.

(ii)  is a skew-Hermitian matrix.

Given A =

Then

Let

Also

Hence P is a Hermitian matrix.

Let

Also

Hence Q is a skew-hermitian matrix.

Key takeaways-

1. A square matrix $A = [a_{ij}]$ is said to be a Hermitian matrix if every i-jth element of A is equal to the complex conjugate of the j-ith element of A.

2. A square matrix $A = [a_{ij}]$ is said to be a skew-Hermitian matrix if every i-jth element of A is equal to the negative of the complex conjugate of the j-ith element of A.

3. A Hermitian matrix is a generalization of a real symmetric matrix, and every real symmetric matrix is Hermitian.

4. Every square complex matrix can be uniquely expressed as the sum of a Hermitian matrix and a skew-Hermitian matrix.

Rank of a matrix-

Rank of a matrix by echelon form-

A matrix A is said to have rank r if –

1. It has at least one non-zero minor of order r.

2. Every minor of A of order higher than r is zero.
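In practice the rank is usually found by reducing the matrix to echelon form and counting the non-zero rows, which is the method used in the examples of this section. The following is a minimal Python sketch of that procedure, assuming a matrix stored as a list of rows; the function name and the sample matrix are illustrative and not taken from the text.

```python
from fractions import Fraction


def rank(matrix):
    """Rank = number of non-zero rows after reduction to echelon form."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    r = 0  # index of the next pivot row
    for c in range(n_cols):
        # Look for a row at or below r with a non-zero entry in column c.
        pivot = next((i for i in range(r, n_rows) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]       # row interchange
        for i in range(r + 1, n_rows):                    # R_i - factor * R_r
            factor = rows[i][c] / rows[r][c]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
        if r == n_rows:
            break
    return r


# Hypothetical matrix: the second row is twice the first, so the rank is 2.
print(rank([[1, 2, 3], [2, 4, 6], [1, 0, 1]]))  # prints 2
```

Exact fractions avoid the rounding errors that could otherwise turn a zero row into a tiny non-zero one.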

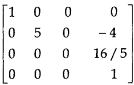

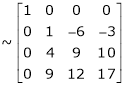

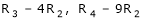

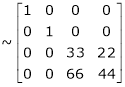

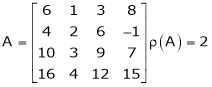

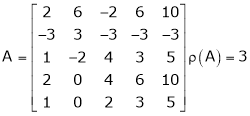

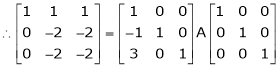

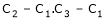

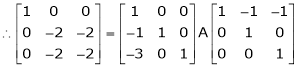

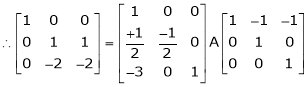

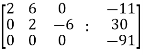

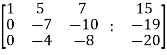

Example: Find the rank of a matrix M by echelon form.

M =

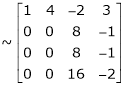

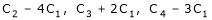

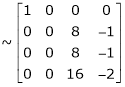

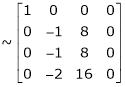

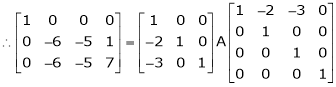

Sol. First, we will convert the matrix M into echelon form,

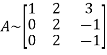

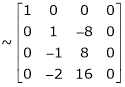

M =

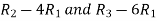

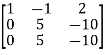

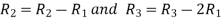

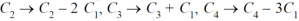

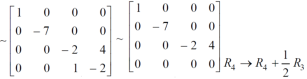

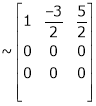

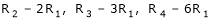

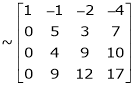

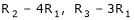

Apply,  , we get

, we get

M =

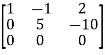

Apply  , we get

, we get

M =

Apply

M =

We can see that in this echelon form of the matrix, the number of non-zero rows is 3.

So the rank of matrix M is 3.

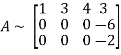

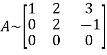

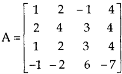

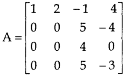

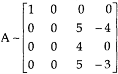

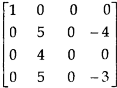

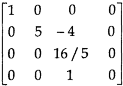

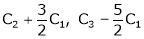

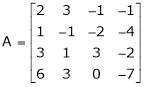

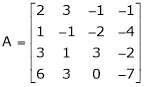

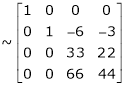

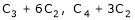

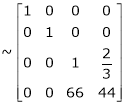

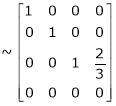

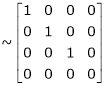

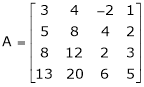

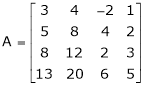

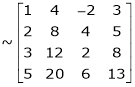

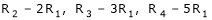

Example: Find the rank of a matrix A by echelon form.

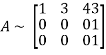

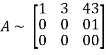

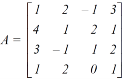

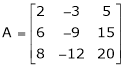

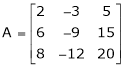

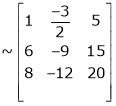

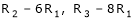

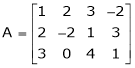

A =

Sol. Convert the matrix A into echelon form,

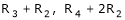

A =

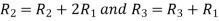

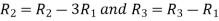

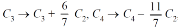

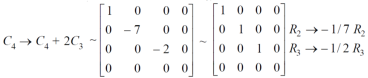

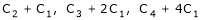

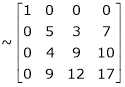

Apply

A =

Apply  , we get

A =

Apply  , we get

A =

Apply  ,

A =

Apply  ,

A =

Therefore the rank of the matrix will be 2.

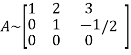

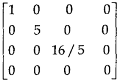

Example: Find the rank of a matrix A by echelon form.

A =

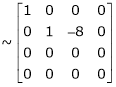

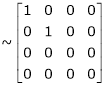

Sol. Transform the matrix A into echelon form, then find the rank,

We have,

A =

Apply,

A =

Apply  ,

A =

Apply

A =

Apply

A =

Hence the rank of the matrix will be 2.

Example: Find the rank of the following matrices by echelon form.

Let A =

Applying

A

Applying

A

Applying

A

Applying

A

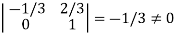

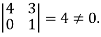

It is clear that the minor of order 3 vanishes, but a non-zero minor of order 2 exists, as

Hence the rank of the given matrix A is 2, denoted by

2.

Let A =

Applying

Applying

Applying

The minor of order 3 vanishes, but a minor of order 2 is non-zero, as

Hence the rank of matrix A is 2, denoted by

3.

Let A =

Apply

Apply

Apply

It is clear that the minor of order 3 vanishes, whereas a minor of order 2 is non-zero, as

Hence the rank of the given matrix is 2, i.e.

Rank of a matrix by normal form-

Any non-zero matrix A can be reduced to a normal form by using elementary transformations.

There are 4 types of normal forms –

$$I_r, \quad \begin{bmatrix} I_r & 0 \end{bmatrix}, \quad \begin{bmatrix} I_r \\ 0 \end{bmatrix}, \quad \begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}$$

The number r so obtained is known as the rank of matrix A.

Both row and column transformations may be used in order to find the rank of the matrix.

Note- Normal form is also known as canonical form.

Example: reduce the matrix A to its normal form and find rank as well.

Sol. We have,

We will apply elementary row operation,

We get,

Now apply column transformation,

We get,

Apply  , we get,

Apply  and

Apply

Apply  and

Apply  and

As we can see this is required normal form of matrix A.

Therefore the rank of matrix A is 3.

Example: Find the rank of a matrix A by reducing into its normal form.

Sol. We are given,

Apply

Apply

This is the normal form of matrix A.

So that the rank of matrix A = 3

Key takeaways-

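1. There are 4 types of normal forms: $I_r$, $\begin{bmatrix} I_r & 0 \end{bmatrix}$, $\begin{bmatrix} I_r \\ 0 \end{bmatrix}$ and $\begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}$.

2. Normal form is also known as canonical form.

Every m × n matrix of rank r can be reduced to the form

$$\begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}$$

by a finite sequence of elementary transformations. This form is called the normal form or the first canonical form of the matrix A.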

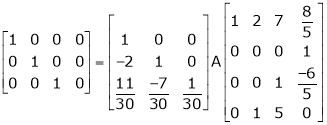

Ex. 1

Reduce the following matrix to normal form and hence find its rank,

Solution:

We have,

Apply

Rank of A = 1

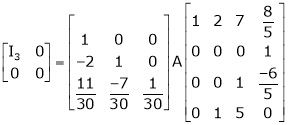

Ex. 2

Find the rank of the matrix

Solution:

We have,

Apply R12

Rank of A = 3

Ex. 3

Find the rank of the following matrices by reducing it to the normal form.

Solution:

Apply C14

H.W.

Reduce the following matrices to normal form and hence find their ranks.

a)

b)

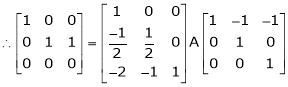

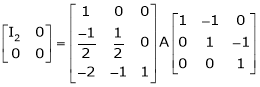

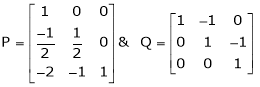

2. Reduction of a matrix A to the normal form PAQ.

If A is a matrix of rank r, then there exist non-singular matrices P and Q such that PAQ is in normal form,

i.e.

$$PAQ = \begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}$$

To obtain the matrices P and Q we use the following procedure.

Working rule:-

- If A is an m × n matrix, write $A = I_m A I_n$.

- Apply row transformations on A on the l.h.s. and the same row transformations on the prefactor $I_m$.

- Apply column transformations on A on the l.h.s. and the same column transformations on the postfactor $I_n$,

so that A on the l.h.s. reduces to normal form.
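The working rule can be sketched in code: every row operation applied to A is also applied to the prefactor (which starts as the identity of order m), and every column operation is also applied to the postfactor (which starts as the identity of order n). The Python below is one possible way to organise the steps, not the only one; the function names and the sample matrix are assumptions made for illustration.

```python
from fractions import Fraction


def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def normal_form(a):
    """Return (P, N, Q, r) with P*A*Q = N in normal form and r = rank of A."""
    m, n = len(a), len(a[0])
    N = [[Fraction(x) for x in row] for row in a]
    P, Q = identity(m), identity(n)
    r = 0
    while r < min(m, n):
        # Find any non-zero entry in the block that is not yet reduced.
        pos = next(((i, j) for i in range(r, m) for j in range(r, n)
                    if N[i][j] != 0), None)
        if pos is None:
            break
        i, j = pos
        # Row interchange on N and on the prefactor P.
        N[r], N[i] = N[i], N[r]
        P[r], P[i] = P[i], P[r]
        # Column interchange on N and on the postfactor Q.
        for M in (N, Q):
            for row in M:
                row[r], row[j] = row[j], row[r]
        # Scale the pivot row so that the pivot becomes 1.
        pivot = N[r][r]
        N[r] = [x / pivot for x in N[r]]
        P[r] = [x / pivot for x in P[r]]
        # Clear the rest of column r with row operations (mirrored on P).
        for k in range(m):
            if k != r and N[k][r] != 0:
                f = N[k][r]
                N[k] = [x - f * y for x, y in zip(N[k], N[r])]
                P[k] = [x - f * y for x, y in zip(P[k], P[r])]
        # Clear the rest of row r with column operations (mirrored on Q).
        for c in range(n):
            if c != r and N[r][c] != 0:
                f = N[r][c]
                for M in (N, Q):
                    for row in M:
                        row[c] -= f * row[r]
        r += 1
    return P, N, Q, r


# Hypothetical 3 x 3 matrix of rank 2.
A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]
P, N, Q, r = normal_form(A)
print(r, matmul(matmul(P, A), Q) == N)
```

The matrices P and Q are not unique; a different order of elementary operations gives a different but equally valid pair.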

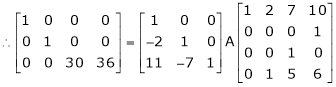

Example 1

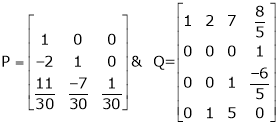

If A =  , find two matrices P and Q such that PAQ is in normal form.

Solution:

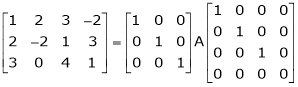

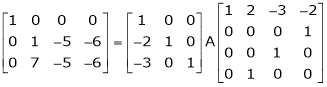

Here A is a square matrix of order 3 × 3. Hence we write,

$A = I_3 A I_3$

i.e.

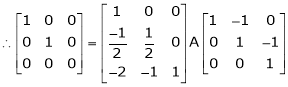

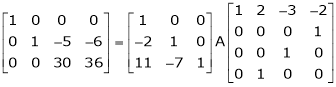

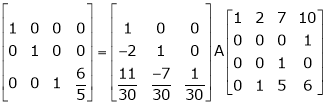

Example 2

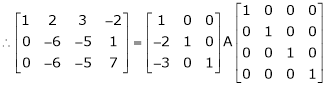

Find non-singular matrices P and Q such that PAQ is in normal form, where

Solution:

Here A is a matrix of order 3 × 4. Hence we write A as,

$A = I_3 A I_4$

i.e.

A system of linear equations is of one of two types-

1. Consistent 2. Inconsistent

Let us look at these two types.

Consistent –

If a system of equations has one or more solutions, it is said to be consistent.

There may be a unique solution or infinitely many solutions.

For example-

The system of linear equations

2x + 4y = 9

x + y = 5

has a unique solution, whereas

the system of linear equations

2x + y = 6

4x + 2y = 12

has infinitely many solutions.

Inconsistent-

If a system of equations has no solution, then it is called inconsistent.

Consistency of a system of linear equations-

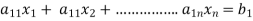

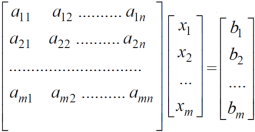

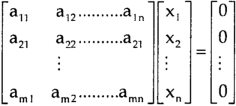

Suppose that a system of linear equations is given as-

This is of the form AX = B.

Its augmented matrix is-

[A:B] = C

(1) Consistent equations-

If Rank of A = Rank of C

Here, Rank of A = Rank of C = n (number of unknowns) – unique solution

and Rank of A = Rank of C = r, where r < n – infinitely many solutions

(2) Inconsistent equations-

If Rank of A ≠ Rank of C
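The rank test above translates directly into a small classifier. The sketch below is a minimal Python illustration, assuming the coefficient matrix is given as a list of rows and the right-hand side as a list; the function names are illustrative. The first two systems are the ones quoted earlier in this section, and the third is a hypothetical inconsistent variant.

```python
from fractions import Fraction


def rank(matrix):
    """Number of non-zero rows after forward elimination."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    r = 0
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][c] / rows[r][c]
            rows[i] = [x - f * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r


def classify(a, b):
    """Compare rank(A) with the rank of the augmented matrix C = [A:B]."""
    c = [row + [rhs] for row, rhs in zip(a, b)]
    rank_a, rank_c, n = rank(a), rank(c), len(a[0])
    if rank_a != rank_c:
        return "inconsistent"
    return "unique solution" if rank_a == n else "infinite solutions"


print(classify([[2, 4], [1, 1]], [9, 5]))     # 2x + 4y = 9, x + y = 5
print(classify([[2, 1], [4, 2]], [6, 12]))    # 2x + y = 6, 4x + 2y = 12
print(classify([[2, 1], [4, 2]], [6, 13]))    # hypothetical inconsistent system
```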

Key takeaways-

- If a system of equations has one or more solutions, it is said to be consistent.

- If a system of equations has no solution, then it is called inconsistent.

(1) Consistent equations-

If Rank of A = Rank of C

Here, Rank of A = Rank of C = n (number of unknowns) – unique solution

and Rank of A = Rank of C = r, where r < n – infinitely many solutions

(2) Inconsistent equations-

If Rank of A ≠ Rank of C

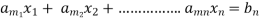

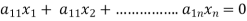

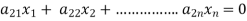

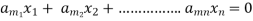

Solution of homogeneous system of linear equations-

A system of linear equations of the form AX = O is said to be homogeneous, where A denotes the coefficient matrix and O denotes the null vector.

Suppose the system of homogeneous linear equations is ,

It means ,

AX = O

Which can be written in the form of matrix as below,

Note- A system of homogeneous linear equations always has a solution, namely the trivial solution X = O:

1. If r(A) = n, then there is only the trivial solution, where n is the number of unknowns.

2. If r(A) < n, then there is an infinite number of solutions.

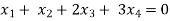

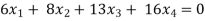

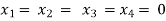

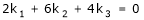

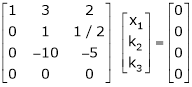

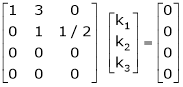

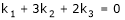

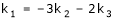

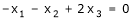

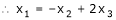

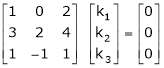

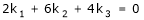

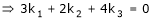

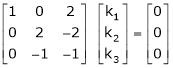

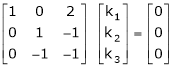

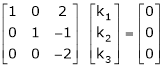

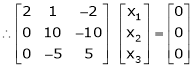

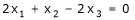

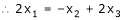

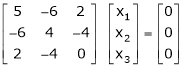

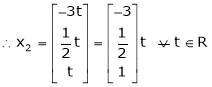

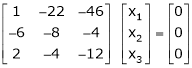

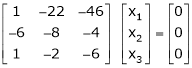

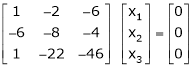

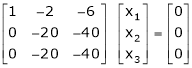

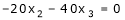

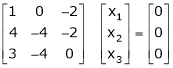

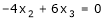

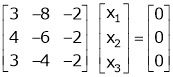

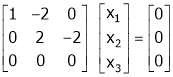

Example: Find the solution of the following homogeneous system of linear equations,

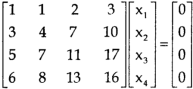

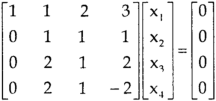

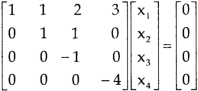

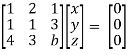

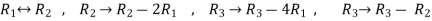

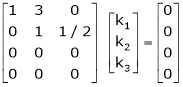

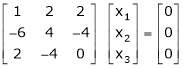

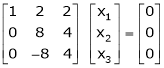

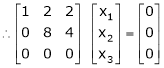

Sol. The given system of linear equations can be written in the form of matrix as follows,

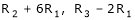

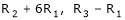

Apply the elementary row transformation,

, we get,

, we get

Here r(A) = 4, so the system has only the trivial solution,

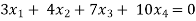

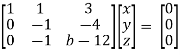

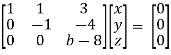

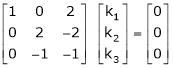

Example: Find the value of ‘b’ in the system of homogeneous equations-

2x + y + 2z = 0

x + y + 3z = 0

4x + 3y + bz = 0

which has

(1) trivial solution

(2) non-trivial solution

Sol. (1)

The trivial solution x = y = z = 0 satisfies the system for every value of ‘b’. It is the only solution whenever b ≠ 8, as the rank calculation in part (2) shows.

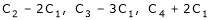

Now for non-trivial solution-

(2)

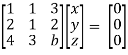

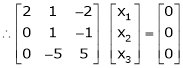

Convert the system of equations into matrix form-

AX = O

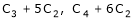

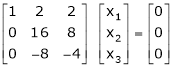

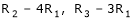

Applying  respectively, we get the following resultant matrices

For non-trivial solutions, r(A) = 2 < n = 3, which requires

b – 8 = 0

b = 8
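The value b = 8 can be cross-checked with the determinant of the coefficient matrix, since a homogeneous system of three equations in three unknowns has a non-trivial solution exactly when that determinant is zero. The following Python sketch uses the coefficients of the system above; the function names are illustrative.

```python
def det3(m):
    """Determinant of a 3 x 3 matrix by expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def coefficient_matrix(b):
    """Coefficients of 2x + y + 2z = 0, x + y + 3z = 0, 4x + 3y + bz = 0."""
    return [[2, 1, 2],
            [1, 1, 3],
            [4, 3, b]]


for b in (7, 8, 9):
    print(b, det3(coefficient_matrix(b)))   # the determinant equals b - 8
```

The determinant works out to b - 8, so it vanishes only at b = 8, in agreement with the rank argument.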

Solution of non-homogeneous system of linear equations-

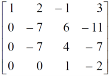

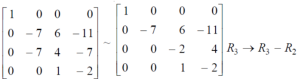

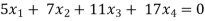

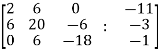

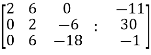

Example-1: Check whether the following system of linear equations is consistent or not.

2x + 6y = -11

6x + 20y – 6z = -3

6y – 18z = -1

Sol. Write the above system of linear equations in augmented matrix form,

Apply  , we get

Apply

Here the rank of C is 3 and the rank of A is 2.

Therefore the two ranks are not equal, so the given system of linear equations is not consistent.

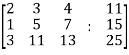

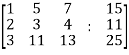

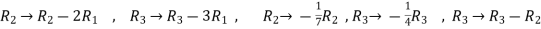

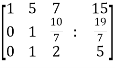

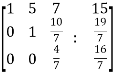

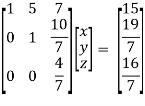

Example: Check the consistency and find the values of x , y and z of the following system of linear equations.

2x + 3y + 4z = 11

x + 5y + 7z = 15

3x + 11y + 13z = 25

Sol. Re-write the system of equations in augmented matrix form.

C = [A,B]

That will be,

Apply

Now apply ,

We get,

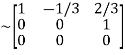

~

~ ~

~

Here rank of A = 3

And rank of C = 3, so that the system of equations is consistent,

So that we can can solve the equations as below,

That gives,

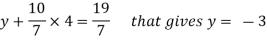

x + 5y + 7z = 15 ……………..(1)

y + 10z/7 = 19/7 ………………(2)

4z/7 = 16/7 ………………….(3)

From eq. (3),

z = 4,

From eq. (2),

y = 19/7 - 10(4)/7 = -3,

From eq. (1), we get

x + 5(-3) + 7(4) = 15

That gives,

x = 2

Therefore the values of x, y, z are 2, -3, 4 respectively.
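The same elimination and back substitution can be written as a short routine. The Python below is a minimal sketch for the unique-solution case only, assuming the coefficients are given as a list of rows; the function name is illustrative. It is applied to the system of this example.

```python
from fractions import Fraction


def solve(a, b):
    """Solve AX = B by forward elimination and back substitution."""
    n = len(a)
    # Build the augmented matrix C = [A:B] with exact fractions.
    c = [[Fraction(x) for x in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = next((i for i in range(col, n) if c[i][col] != 0), None)
        if pivot is None:
            raise ValueError("no unique solution")
        c[col], c[pivot] = c[pivot], c[col]
        for i in range(col + 1, n):
            f = c[i][col] / c[col][col]
            c[i] = [x - f * y for x, y in zip(c[i], c[col])]
    # Back substitution, starting from the last equation.
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        s = sum(c[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (c[i][n] - s) / c[i][i]
    return x


x, y, z = solve([[2, 3, 4], [1, 5, 7], [3, 11, 13]], [11, 15, 25])
print(x, y, z)   # prints 2 -3 4
```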

Key takeaways-

A system of homogeneous linear equations always has a solution, namely the trivial solution X = O:

1. If r(A) = n, then there is only the trivial solution, where n is the number of unknowns.

2. If r(A) < n, then there is an infinite number of solutions.

Linear Dependence

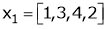

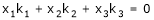

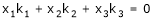

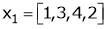

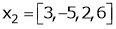

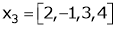

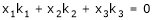

A set of r n-vectors x1, x2, …, xr is said to be linearly dependent if there exist scalars k1, k2, …, kr, not all zero, such that

x1 k1 + x2 k2 + … + xr kr = 0 … (1)

Linear Independence

A set of r vectors x1, x2, …, xr is said to be linearly independent if the relation

x1 k1 + x2 k2 + … + xr kr = 0

holds only when all the scalars are zero, i.e. k1 = k2 = … = kr = 0.

Note:-

- Equation (1) is known as a vector equation.

- If the vector equation has non – zero solution i.e. k1, k2, …., kr not all zero. Then the vector x1, x2, ………. xr are said to be linearly dependent.

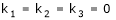

- If the vector equation has only trivial solution i.e.

k1 = k2 = …….= kr = 0. Then the vector x1, x2, ……, xr are said to linearly independent.

- Linear combination

A vector x can be written in the form.

x = x1 k1 + x2 k2 + ……….+xr kr

Where k1, k2, ………….., kr are scalars, then X is called linear combination of x1, x2, ……, xr.

Results:

- A set of two or more vectors are said to be linearly dependent if at least one vector can be written as a linear combination of the other vectors.

- A set of two or more vector are said to be linearly independent then no vector can be expressed as linear combination of the other vectors.
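The second result suggests a direct test: take the vectors one at a time and check whether each new vector can be reduced to zero using the ones already accepted. The Python below is a sketch of that idea under the assumption that vectors are given as equal-length lists of numbers; the function name and the sample vectors are hypothetical.

```python
from fractions import Fraction


def is_linearly_independent(vectors):
    """True when x1 k1 + ... + xr kr = 0 forces k1 = ... = kr = 0."""
    basis = []  # pairs (pivot index, reduced vector)
    for v in vectors:
        v = [Fraction(x) for x in v]
        # Remove the components of v along the vectors accepted so far.
        for p, b in basis:
            if v[p] != 0:
                f = v[p] / b[p]
                v = [x - f * y for x, y in zip(v, b)]
        p = next((i for i, x in enumerate(v) if x != 0), None)
        if p is None:
            return False  # v is a linear combination of the earlier vectors
        basis.append((p, v))
    return True


print(is_linearly_independent([[1, 1, 0], [0, 1, 1], [1, 0, 1]]))  # True
print(is_linearly_independent([[1, 1, 0], [0, 1, 1], [1, 2, 1]]))  # False
```

In the second call the third vector is the sum of the first two, so the set is linearly dependent.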

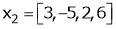

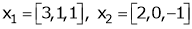

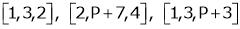

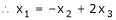

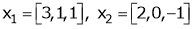

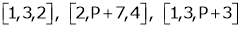

Example 1

Are the vectors  ,  ,  linearly dependent? If so, express x1 as a linear combination of the others.

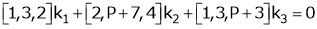

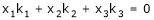

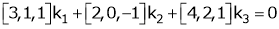

Solution:

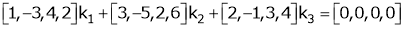

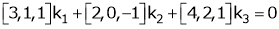

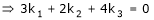

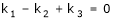

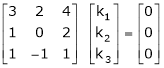

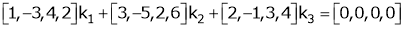

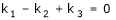

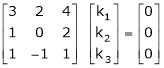

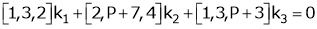

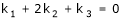

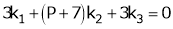

Consider a vector equation,

i.e.

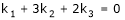

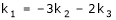

Which can be written in matrix form as,

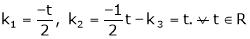

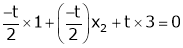

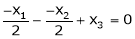

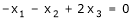

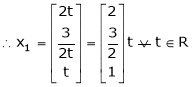

Here  and the number of unknowns is 3. Hence the system has infinitely many solutions. Now rewrite the equations as,

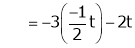

Put

and

Thus

i.e.

Since k1, k2, k3 are not all zero, the vectors x1, x2, x3 are linearly dependent.

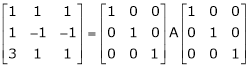

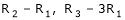

Example 2

Examine whether the following vectors are linearly independent or not.

and  .

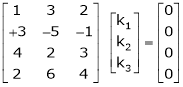

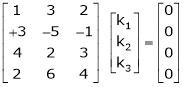

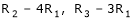

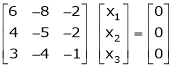

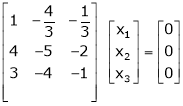

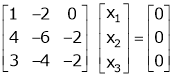

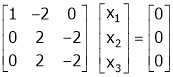

Solution:

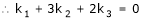

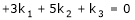

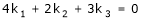

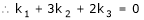

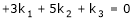

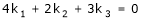

Consider the vector equation,

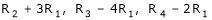

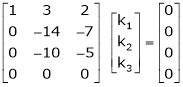

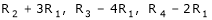

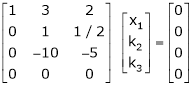

i.e.  … (1)

Which can be written in matrix form as,

R12

R2 – 3R1, R3 – R1

R3 + R2

Here the rank of the coefficient matrix is equal to the number of unknowns, i.e. r = n = 3.

Hence the system has the unique trivial solution,

i.e. k1 = k2 = k3 = 0,

i.e. vector equation (1) has only the trivial solution. Hence the given vectors x1, x2, x3 are linearly independent.

Example 3

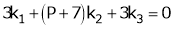

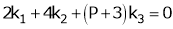

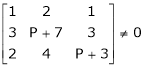

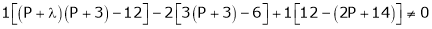

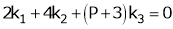

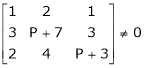

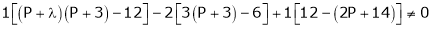

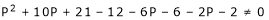

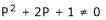

For what value of P are the following vectors linearly independent?

Solution:

Consider the vector equation.

i.e.

This is a homogeneous system of three equations in 3 unknowns, and it has a unique trivial solution if and only if the determinant of the coefficient matrix is non-zero.

Consider  .

i.e.

Thus for  the system has only the trivial solution, and hence the vectors are linearly independent.

Key takeaways-

- A set of r n-vectors x1, x2, …, xr is said to be linearly dependent if there exist scalars k1, k2, …, kr, not all zero, such that

x1 k1 + x2 k2 + … + xr kr = 0

- A set of r vectors x1, x2, …, xr is said to be linearly independent if

x1 k1 + x2 k2 + … + xr kr = 0

holds only when k1 = k2 = … = kr = 0.

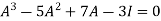

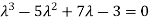

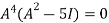

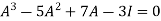

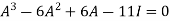

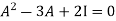

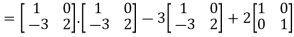

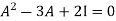

Statement-

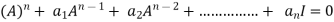

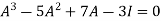

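Every square matrix satisfies its characteristic equation; that means, if for a square matrix A of order n,

$$|A - \lambda I| = (-1)^n\left[\lambda^n + a_1\lambda^{n-1} + a_2\lambda^{n-2} + \dots + a_n\right]$$

then the matrix equation

$$X^n + a_1X^{n-1} + a_2X^{n-2} + \dots + a_nI = 0$$

is satisfied by X = A.

That means

$$A^n + a_1A^{n-1} + a_2A^{n-2} + \dots + a_nI = 0$$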
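The statement can be checked numerically for a given matrix. The sketch below is one possible approach in Python, not the hand method used in the examples: it computes the coefficients of det(λI - A) with the Faddeev-LeVerrier recurrence (this polynomial differs from |A - λI| only by the factor (-1)^n, so it gives the same equation) and then substitutes A for λ. The function names and the test matrices are assumptions made for illustration.

```python
from fractions import Fraction


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def char_poly(a):
    """Coefficients [c0, c1, ..., cn] of det(lambda*I - A), with cn = 1,
    computed by the Faddeev-LeVerrier recurrence."""
    n = len(a)
    a = [[Fraction(x) for x in row] for row in a]
    coeffs = [Fraction(0)] * n + [Fraction(1)]
    m = [[Fraction(0)] * n for _ in range(n)]
    for k in range(1, n + 1):
        # M_k = A * M_(k-1) + c_(n-k+1) * I
        am = matmul(a, m)
        m = [[am[i][j] + (coeffs[n - k + 1] if i == j else 0) for j in range(n)]
             for i in range(n)]
        # c_(n-k) = -trace(A * M_k) / k
        am = matmul(a, m)
        coeffs[n - k] = -sum(am[i][i] for i in range(n)) / k
    return coeffs


def evaluate_at_matrix(coeffs, a):
    """Evaluate c0*I + c1*A + ... + cn*A^n by Horner's rule."""
    n = len(a)
    result = [[Fraction(0)] * n for _ in range(n)]
    for c in reversed(coeffs):
        result = matmul(result, a)
        result = [[result[i][j] + (c if i == j else 0) for j in range(n)]
                  for i in range(n)]
    return result


# Hypothetical 2 x 2 matrix with trace 5 and determinant 5.
A = [[2, 1], [1, 3]]
coeffs = char_poly(A)
print(coeffs)                          # constant term 5, then -5, then 1
print(evaluate_at_matrix(coeffs, A))   # the zero matrix
```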

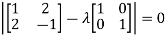

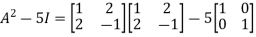

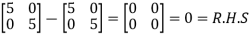

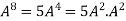

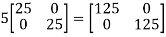

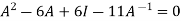

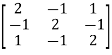

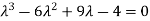

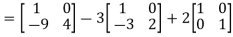

Example-1: Find the characteristic equation of the matrix A =  and verify the Cayley-Hamilton theorem.

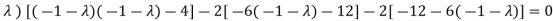

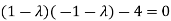

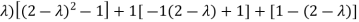

Sol. The characteristic equation of the matrix can be found as follows-

Which is,

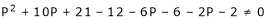

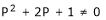

( 2 - , which gives

According to the Cayley-Hamilton theorem,

…………(1)

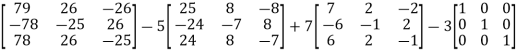

Now we will verify equation (1),

Putting the required values in equation (1), we get

Hence the Cayley-Hamilton theorem is verified.

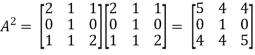

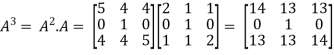

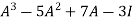

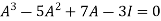

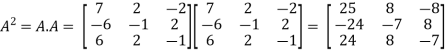

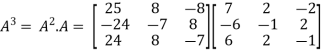

Example-2: Find the characteristic equation of the matrix A and verify the Cayley-Hamilton theorem as well.

A =

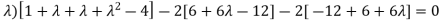

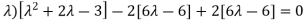

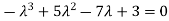

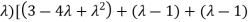

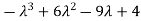

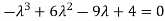

Sol. The characteristic equation will be-

= 0

( 7 -

(7-

Which gives,

Or

According to the Cayley-Hamilton theorem,

…………………….(1)

In order to verify the Cayley-Hamilton theorem, we will find the values of

So that,

Now

Putting these values in equation (1), we get

= 0

Hence the Cayley-Hamilton theorem is verified.

Example-3: Using the Cayley-Hamilton theorem, find  , if A =  ?

Sol. Let A =

The characteristic equation of A is

Or

Or

By Cayley-Hamilton theorem

L.H.S.

=

By Cayley-Hamilton theorem we have

Multiply both side by

.

.

Or

=

=

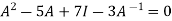

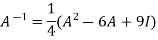

Key takeaways-

We can find the inverse of any non-singular matrix by multiplying the equation given by the Cayley-Hamilton theorem by A⁻¹.

For example, suppose we have the characteristic equation of A. Replace λ by A, as the Cayley-Hamilton theorem allows, and multiply the result by A⁻¹; the equation then contains A⁻¹.

Then we can find A⁻¹ by solving the above equation.
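As a minimal sketch of this idea, consider the simplest case of a 2x2 matrix, whose Cayley-Hamilton equation is A² - tr(A)·A + det(A)·I = 0. Multiplying by A⁻¹ gives A - tr(A)·I + det(A)·A⁻¹ = 0, so A⁻¹ = (tr(A)·I - A) / det(A). The function name `inverse_2x2_cayley_hamilton` and the sample matrix are hypothetical, introduced only for this illustration.

```python
from fractions import Fraction


def inverse_2x2_cayley_hamilton(M):
    """Inverse of a 2x2 integer matrix obtained from its Cayley-Hamilton
    equation A² - tr(A)·A + det(A)·I = 0, i.e. A⁻¹ = (tr(A)·I - A) / det(A)."""
    (a, b), (c, d) = M
    trace = a + d
    det = a * d - b * c
    if det == 0:
        # A zero determinant means a zero eigen value, so no inverse exists.
        raise ValueError("matrix is singular, so it has no inverse")
    # Entries of tr(A)·I - A, each divided by det(A); exact fractions.
    return [[Fraction(trace - a, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(trace - d, det)]]


# Hypothetical sample matrix: tr(A) = 10, det(A) = 10.
A = [[4, 7],
     [2, 6]]
A_inv = inverse_2x2_cayley_hamilton(A)
print(A_inv)  # entries 3/5, -7/10, -1/5, 2/5
```

Exact fractions are used instead of floating-point numbers so that the product of A and the computed inverse is exactly the identity matrix. The same steps apply to a 3x3 matrix, except that the equation is cubic and the multiplication by A⁻¹ leaves a term in A².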

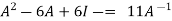

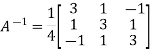

Example-1: Find the inverse of matrix A by using Cayley-Hamilton theorem.

A =

Sol. The characteristic equation will be,

|A - λI| = 0

Which gives,

(4-

According to Cayley-Hamilton theorem,

Multiplying by A⁻¹,

That means

On solving,

11

=

=

So that,

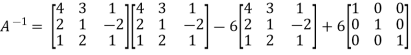

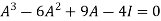

Example-2: Find the inverse of matrix A by using Cayley-Hamilton theorem.

A =

Sol. The characteristic equation will be,

|A - λI| = 0

=

= (2-

= (2 -

=

That is,

Or

We know that by the Cayley-Hamilton theorem,

…………………….(1)

Multiplying equation (1) by A⁻¹, we get

Or

Now we will find

=

=

Hence the inverse of matrix A is,

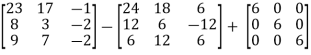

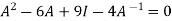

Example-3: Verify the Cayley-Hamilton theorem and find the inverse.

Sol. Let A =

The characteristic equation of A is

Or

Or

Or

By Cayley-Hamilton theorem

L.H.S:

=  = 0 = R.H.S

Multiplying both sides by A⁻¹,

Or

Or  [

[

Or

In this chapter we are going to study a very important theorem; but first we have to study eigen values and eigen vectors.

2. Vector

An ordered n-tuple of numbers is called an n-vector. Thus the 'n' numbers x1, x2, …, xn taken in order denote the vector x, i.e. x = (x1, x2, …, xn),

where the numbers x1, x2, …, xn are called the components or co-ordinates of the vector x. A vector may be written as a row vector or a column vector.

If A is an m × n matrix, then each row is an n-vector and each column is an m-vector.

3. Linear Dependence

A set of r n-vectors x1, x2, …, xr is said to be linearly dependent if there exist scalars k1, k2, …, kr, not all zero, such that

x1 k1 + x2 k2 + … + xr kr = 0 … (1)

4. Linear Independence

A set of r vectors x1, x2, …, xr is said to be linearly independent if the relation

x1 k1 + x2 k2 + … + xr kr = 0

holds only when the scalars k1, k2, …, kr are all zero.

Note:-

1. Equation (1) is known as a vector equation.

2. If the vector equation has a non-zero solution, i.e. k1, k2, …, kr are not all zero, then the vectors x1, x2, …, xr are said to be linearly dependent.

3. If the vector equation has only the trivial solution, i.e. k1 = k2 = … = kr = 0, then the vectors x1, x2, …, xr are said to be linearly independent.

5. Linear combination

If a vector x can be written in the form

x = x1 k1 + x2 k2 + … + xr kr

where k1, k2, …, kr are scalars, then x is called a linear combination of x1, x2, …, xr.

Results:

1. A set of two or more vectors is linearly dependent if at least one vector can be written as a linear combination of the other vectors.

2. If a set of two or more vectors is linearly independent, then no vector can be expressed as a linear combination of the other vectors.
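The definitions above lead to a practical test: r vectors are linearly independent exactly when the matrix formed from them has rank r. The following is a minimal sketch of that test, assuming the vectors are given as tuples of integers or fractions; the function names `rank` and `linearly_independent` and the sample vectors are hypothetical, chosen only for illustration.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix (list of rows), found by row reduction.
    Exact fractions avoid floating-point round-off."""
    M = [[Fraction(v) for v in row] for row in rows]
    r = 0                                  # number of pivots found so far
    ncols = len(M[0]) if M else 0
    for c in range(ncols):
        # Look for a non-zero entry in column c at or below row r.
        pivot = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]    # interchange two rows
        for i in range(r + 1, len(M)):     # clear the entries below the pivot
            f = M[i][c] / M[r][c]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == len(M):
            break
    return r


def linearly_independent(vectors):
    """True if the vector equation x1 k1 + ... + xr kr = 0 has only
    the trivial solution, i.e. rank equals the number of vectors."""
    return rank(vectors) == len(vectors)


print(linearly_independent([(1, 1, 1), (1, 2, 3), (2, 3, 8)]))  # True
print(linearly_independent([(1, 2, 3), (2, 4, 6)]))             # False
```

In the second call the second vector is twice the first, so one vector is a linear combination of the other and the set is dependent.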

Example 1

Are the vectors  ,  ,  linearly dependent? If so, express x1 as a linear combination of the others.

Solution:

Consider a vector equation,

i.e.

Which can be written in matrix form as,

Here  & the number of unknowns is 3. Hence the system has infinitely many solutions. Now rewrite the equations as,

Put

and

and

Thus

i.e.

i.e.

Since k1, k2, k3 are not all zero, the vectors x1, x2, x3 are linearly dependent.

Example 2

Examine whether the following vectors are linearly independent or not.

and  .

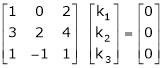

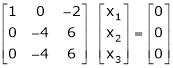

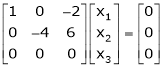

Solution:

Consider the vector equation,

i.e.  … (1)

Which can be written in matrix form as,

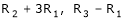

R12

R2 – 3R1, R3 – R1

R3 + R2

Here the rank of the coefficient matrix is equal to the number of unknowns, i.e. r = n = 3.

Hence the system has only the trivial solution,

i.e.

i.e. the vector equation (1) has only the trivial solution. Hence the given vectors x1, x2, x3 are linearly independent.

Example 3

For what values of P are the following vectors linearly independent?

Solution:

Consider the vector equation.

i.e.

This is a homogeneous system of three equations in 3 unknowns. It has only the trivial solution if and only if the determinant of the coefficient matrix is non-zero.

consider

consider  .

.

.

.

i.e.

Thus for  the system has only the trivial solution and hence the vectors are linearly independent.

Note:-

If the rank of the coefficient matrix is r, it contains r linearly independent vectors, and the remaining vectors (if any) can be expressed as linear combinations of these vectors.

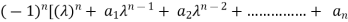

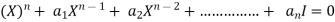

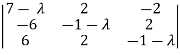

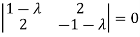

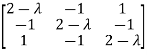

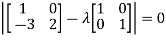

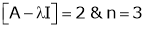

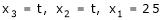

Characteristic equation:-

Let A be a square matrix and λ be any scalar; then |A - λI| = 0 is called the characteristic equation of the matrix A.

Note:

Let A be a square matrix and ‘λ’ be any scalar; then,

1) [A - λI] is called the characteristic matrix.

2) |A - λI| is called the characteristic polynomial.

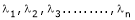

The roots of the characteristic equation are known as the characteristic roots, latent roots, eigen values or proper values of the matrix A.

Eigen vector:-

Suppose λ1 is an eigen value of a matrix A. Then there exists a non-zero vector x1 such that

[A - λ1 I] x1 = 0 … (1)

Such a vector ‘x1’ is called an eigen vector corresponding to the eigen value λ1.

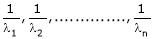

Properties of Eigen values:-

- The sum of the eigen values of a matrix A is equal to the sum of the diagonal elements of A.

- The product of all eigen values of a matrix A is equal to the value of its determinant.

- If λ1, λ2, …, λn are the n eigen values of a non-singular square matrix A, then 1/λ1, 1/λ2, …, 1/λn are the n eigen values of the matrix A⁻¹.

- The eigen values of a real symmetric matrix are all real.

- If all eigen values are non-zero then A⁻¹ exists, and conversely.

- The eigen values of A and A’ are the same.
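The first three properties can be checked numerically on a small case. The sketch below is illustrative only: it assumes a 2x2 matrix with real eigen values, for which the characteristic equation reduces to λ² - tr(A)·λ + det(A) = 0 and can be solved with the quadratic formula. The function name `eigenvalues_2x2` and the sample matrix are hypothetical.

```python
import math


def eigenvalues_2x2(M):
    """Roots of λ² - tr(M)·λ + det(M) = 0, the characteristic
    equation of a 2x2 matrix, returned largest first."""
    (a, b), (c, d) = M
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("complex eigen values are not handled in this sketch")
    root = math.sqrt(disc)
    return (trace + root) / 2, (trace - root) / 2


# Hypothetical real symmetric matrix: trace 4, determinant 3.
A = [[2, 1],
     [1, 2]]
l1, l2 = eigenvalues_2x2(A)
print(l1, l2)    # 3.0 1.0
print(l1 + l2)   # 4.0, the sum of the diagonal elements
print(l1 * l2)   # 3.0, the determinant

# Inverse of A, written out by hand as adj(A) / det(A).
A_inv = [[2 / 3, -1 / 3],
         [-1 / 3, 2 / 3]]
m1, m2 = eigenvalues_2x2(A_inv)  # approximately 1 and 1/3, the reciprocals
```

Because the sample matrix is real and symmetric, the discriminant is non-negative and both eigen values are real, in line with the fourth property.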

Properties of eigen vector:-

- Eigen vectors corresponding to distinct eigen values are linearly independent.

- If two or more eigen values are identical then the corresponding eigen vectors may or may not be linearly independent.

- The eigen vectors corresponding to distinct eigen values of a real symmetric matrix are orthogonal.
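The orthogonality property can be illustrated with a short check. This is only a sketch on a hypothetical 2x2 real symmetric matrix whose eigen values (3 and 1) and eigen vectors are assumed known; the helper names `matvec`, `dot` and `is_eigenpair` are made up for the illustration.

```python
def matvec(M, v):
    """Product of a matrix (list of rows) and a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def dot(u, v):
    """Scalar (dot) product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


def is_eigenpair(M, lam, v):
    """True if v is non-zero and M v = lam v, which is equivalent
    to [M - lam I] v = 0."""
    return any(x != 0 for x in v) and matvec(M, v) == [lam * x for x in v]


# Hypothetical real symmetric matrix with distinct eigen values 3 and 1.
A = [[2, 1],
     [1, 2]]
v1 = [1, 1]    # eigen vector for the eigen value 3
v2 = [1, -1]   # eigen vector for the eigen value 1

print(is_eigenpair(A, 3, v1))  # True
print(is_eigenpair(A, 1, v2))  # True
print(dot(v1, v2))             # 0, so the two eigen vectors are orthogonal
```

Since the two eigen vectors are orthogonal and non-zero, they are also linearly independent, which agrees with the first property.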

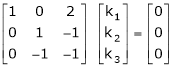

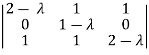

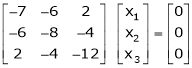

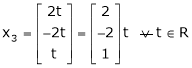

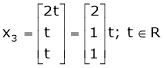

Example 1

Determine the eigen values and eigen vectors of the matrix.

Solution:

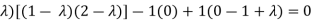

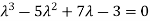

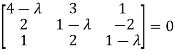

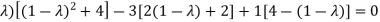

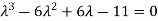

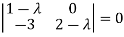

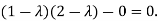

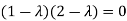

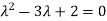

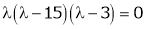

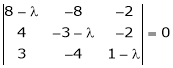

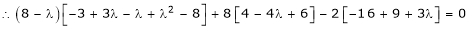

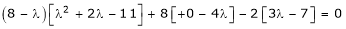

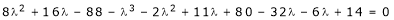

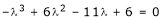

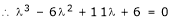

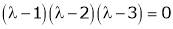

Consider the characteristic equation as,

i.e.

i.e.

i.e.

Which is the required characteristic equation.

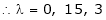

are the required eigen values.

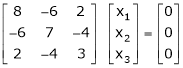

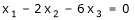

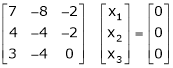

Now consider the equation

… (1)

Case I:

If  equation (1) becomes

R1 + R2

Thus

independent variable.

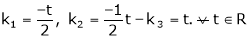

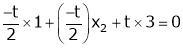

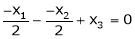

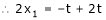

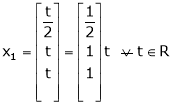

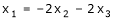

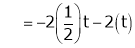

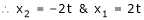

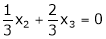

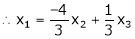

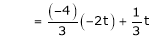

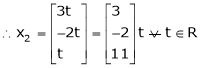

Now rewrite equation as,

Put x3 = t

&

Thus

is the eigen vector corresponding to  .

Case II:

If  equation (1) becomes,

Here

independent variables

Now rewrite the equations as,

Put

&

is the eigen vector corresponding to  .

Case III:

If  equation (1) becomes,

Here rank of

independent variable.

Now rewrite the equations as,

Put

Thus

is the eigen vector for  .

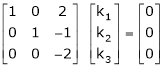

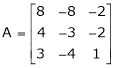

Example 2

Find the eigen values and eigen vectors of the matrix.

Solution:

Consider the characteristic equation as

i.e.

i.e.

are the required eigen values.

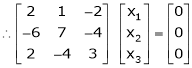

Now consider the equation

… (1)

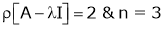

… (1)

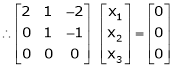

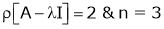

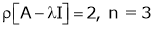

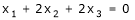

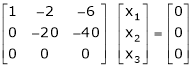

Case I:

Equation (1) becomes,

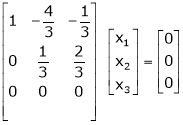

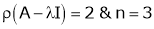

Thus  and n = 3

3 – 2 = 1 independent variable.

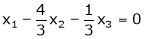

Now rewrite the equations as,

Put

,

,

i.e. the eigen vector for

Case II:

If  equation (1) becomes,

Thus

Independent variables.

Now rewrite the equations as,

Put

Is the eigen vector for

Now

Case III:

If  equation (1) gives,

R1 – R2

Thus

independent variables

Now

Put

Thus

is the eigen vector for  .

Key takeaways-

1. If a vector x can be written in the form

x = x1 k1 + x2 k2 + … + xr kr

where k1, k2, …, kr are scalars, then x is called a linear combination of x1, x2, …, xr.

2. If a set of two or more vectors is linearly independent, then no vector can be expressed as a linear combination of the other vectors. A set of two or more vectors is linearly dependent if at least one vector can be written as a linear combination of the other vectors.

3. If the rank of the coefficient matrix is r, it contains r linearly independent vectors, and the remaining vectors (if any) can be expressed as linear combinations of these vectors.

4. Let A be a square matrix and λ be any scalar; then |A - λI| = 0 is called the characteristic equation of the matrix A.
