Unit - 5

Statistics

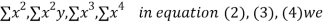

5.1 Fitting of curve- Least squares principle , fitting of straight line , fitting of second degree parabola, fitting of curves of the form y=  , y = a

, y = a , y =

, y =

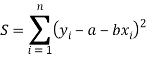

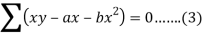

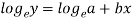

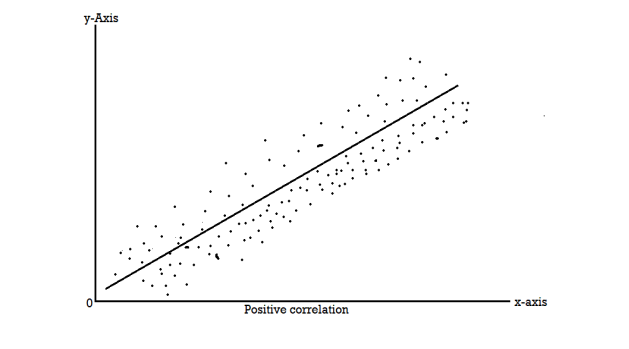

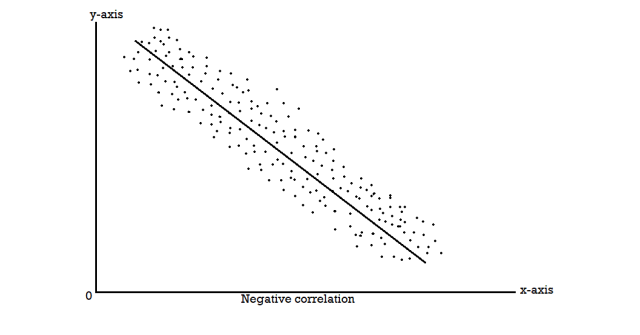

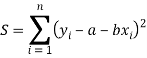

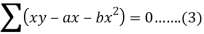

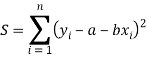

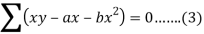

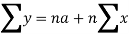

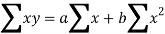

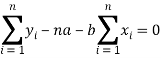

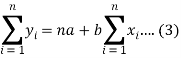

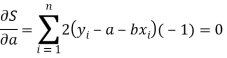

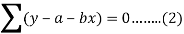

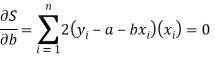

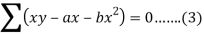

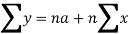

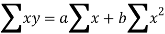

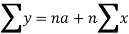

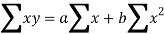

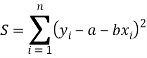

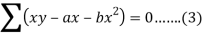

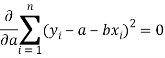

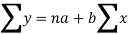

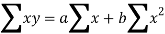

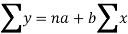

Method of least squares-

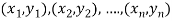

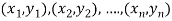

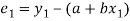

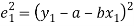

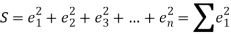

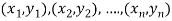

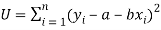

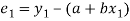

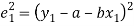

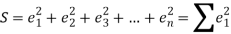

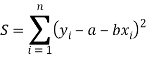

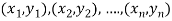

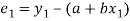

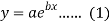

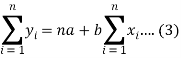

Suppose

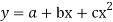

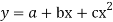

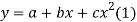

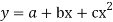

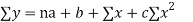

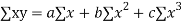

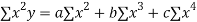

y = a + bx ………. (1)

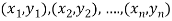

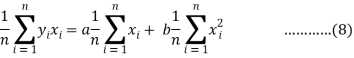

is the straight line to be fitted to the given data points.

Let $\hat{y}_i = a + bx_i$ be the theoretical value of $y$ for $x = x_i$.

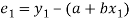

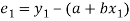

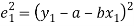

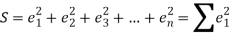

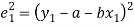

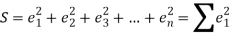

Now-

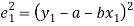

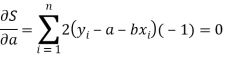

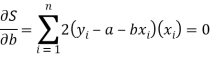

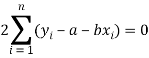

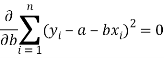

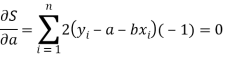

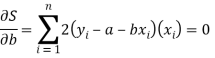

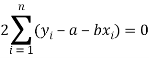

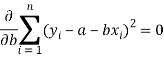

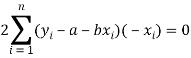

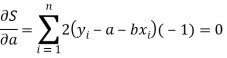

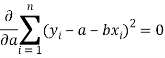

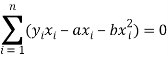

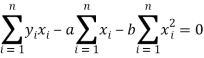

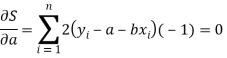

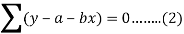

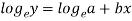

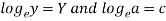

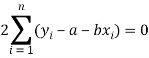

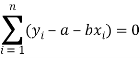

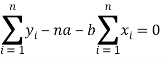

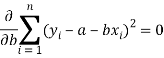

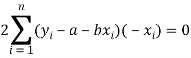

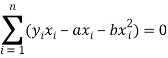

For the minimum value of S -

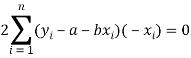

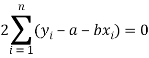

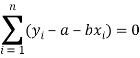

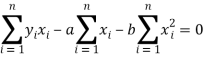

Or

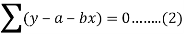

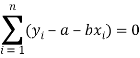

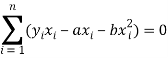

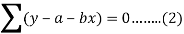

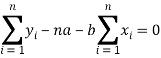

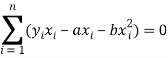

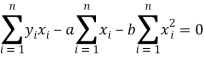

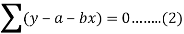

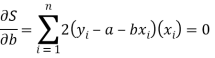

Now

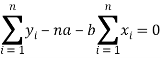

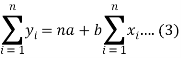

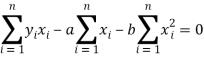

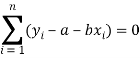

Or

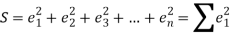

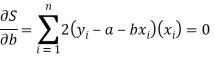

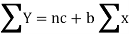

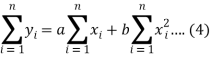

On solving equation (1) and (2), we get-

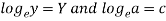

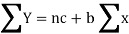

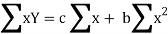

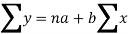

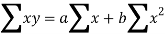

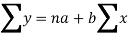

These two equations are known as the normal equations.

Now on solving these two equations we get the values of a and b.

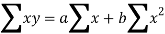

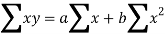

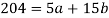

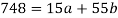

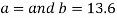

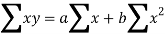

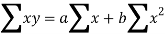

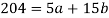

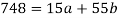

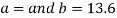

Example: Find the straight line that best fits the following data by using the method of least squares.

X | 1 | 2 | 3 | 4 | 5 |

y | 14 | 27 | 40 | 55 | 68 |

Sol.

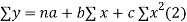

Suppose the straight line

y = a + bx…….. (1)

Fits the best-

Then-

x | y | xy | $x^2$ |

1 | 14 | 14 | 1 |

2 | 27 | 54 | 4 |

3 | 40 | 120 | 9 |

4 | 55 | 220 | 16 |

5 | 68 | 340 | 25 |

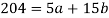

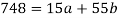

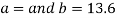

Sum = 15 | 204 | 748 | 55 |

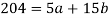

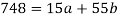

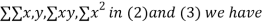

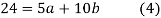

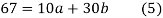

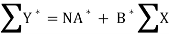

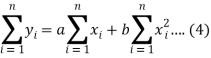

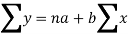

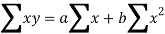

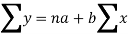

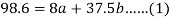

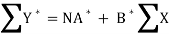

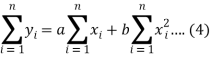

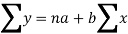

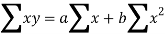

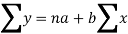

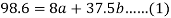

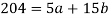

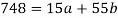

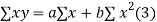

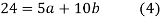

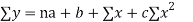

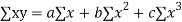

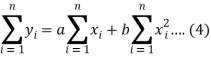

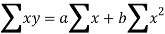

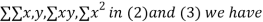

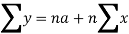

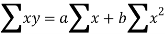

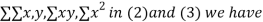

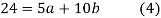

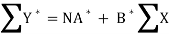

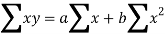

Normal equations are-

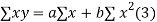

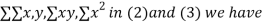

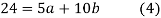

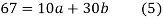

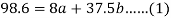

Put the values from the table, we get two normal equations-

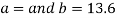

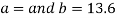

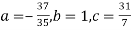

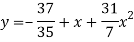

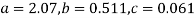

On solving the above equations, we get-

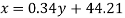

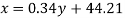

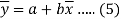

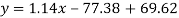

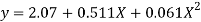

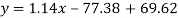

Putting the values of a and b in equation (1), the line of best fit is-

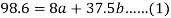
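The table arithmetic above can be checked with a short script. This is a minimal sketch, not part of the original notes: the function name `fit_line` is an assumption chosen for illustration, and it solves the two normal equations $\sum y = na + b\sum x$ and $\sum xy = a\sum x + b\sum x^2$ directly.

```python
def fit_line(xs, ys):
    """Least-squares line y = a + b*x from the two normal equations."""
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    det = n * sxx - sx * sx  # zero only when every x is the same
    if det == 0:
        raise ValueError("all x values are equal; the line is not determined")
    b = (n * sxy - sx * sy) / det
    a = (sy - b * sx) / n
    return a, b


# Data of the worked example: sums are 15, 204, 748 and 55.
xs = [1, 2, 3, 4, 5]
ys = [14, 27, 40, 55, 68]
a, b = fit_line(xs, ys)
print(f"a = {a:.4f}, b = {b:.4f}")
```

For this data the normal equations are 204 = 5a + 15b and 748 = 15a + 55b, so the script should report a close to 0 and b close to 13.6, up to floating-point rounding.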

Example: Find the best values of a and b so that y = a + bx fits the data given in the table

x | 0 | 1 | 2 | 3 | 4 |

y | 1.0 | 2.9 | 4.8 | 6.7 | 8.6 |

Solution.

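Let the line of best fit be

y = a + bx … (1)

| x | y | xy | $x^2$ |
|---|---|---|---|
| 0 | 1.0 | 0 | 0 |
| 1 | 2.9 | 2.9 | 1 |
| 2 | 4.8 | 9.6 | 4 |
| 3 | 6.7 | 20.1 | 9 |
| 4 | 8.6 | 34.4 | 16 |
| $\sum x = 10$ | $\sum y = 24.0$ | $\sum xy = 67.0$ | $\sum x^2 = 30$ |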

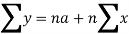

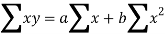

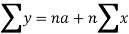

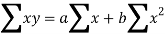

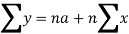

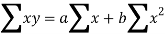

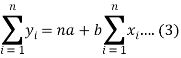

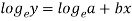

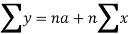

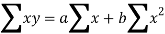

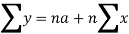

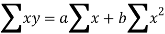

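The normal equations are

$\sum y = na + b\sum x$ … (2)

$\sum xy = a\sum x + b\sum x^2$ … (3)

On putting the values of $\sum x$, $\sum y$, $\sum xy$ and $\sum x^2$ with $n = 5$, we have

$24 = 5a + 10b$ … (4)

$67 = 10a + 30b$ … (5)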

On solving (4) and (5) we get,

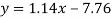

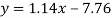

On substituting the values of a and b in (1) we get

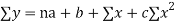

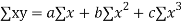

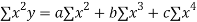

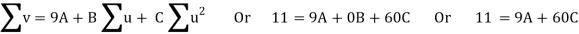

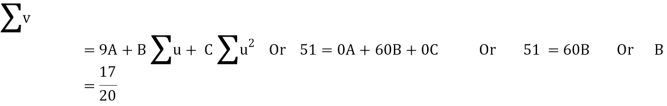

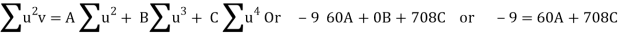

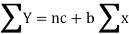

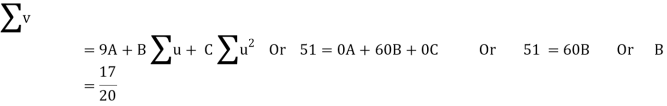

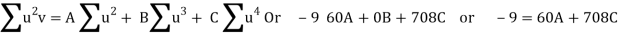

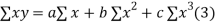

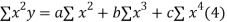

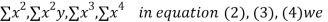

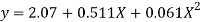

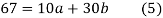

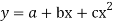

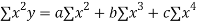

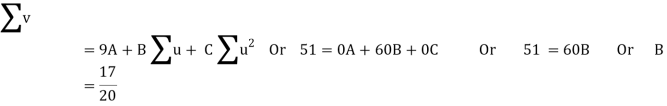

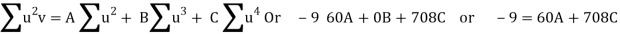

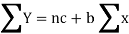

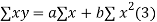

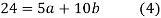

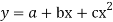

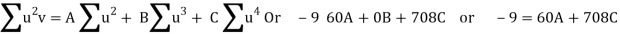

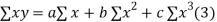

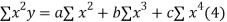

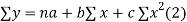

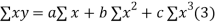

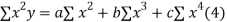

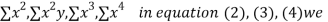

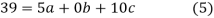

To fit the parabola

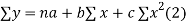

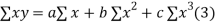

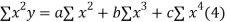

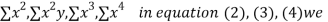

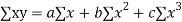

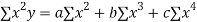

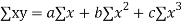

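The normal equations are

$\sum y = na + b\sum x + c\sum x^2$

$\sum xy = a\sum x + b\sum x^2 + c\sum x^3$

$\sum x^2y = a\sum x^2 + b\sum x^3 + c\sum x^4$

On solving these three normal equations we get the values of a, b and c.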

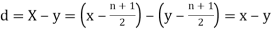

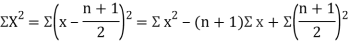

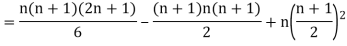

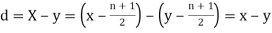

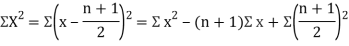

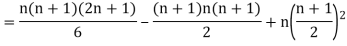

Note- Change of scale-

We change the scale if the data values are large and given at equal intervals.

As-

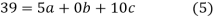

Example: Fit a second degree parabola to the following data by least squares method.

x | 1929 | 1930 | 1931 | 1932 | 1933 | 1934 | 1935 | 1936 | 1937 |

y | 352 | 356 | 357 | 358 | 360 | 361 | 361 | 360 | 359 |

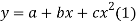

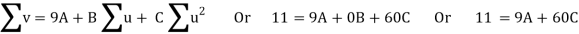

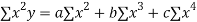

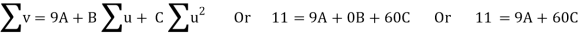

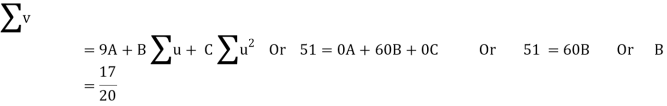

Solution: Taking x0 = 1933, y0 = 357

Taking u = x – x0, v = y – y0

u = x – 1933, v = y – 357

The equation $y = a + bx + cx^2$ is transformed to $v = A + Bu + Cu^2$ … (2)

| x | u = x - 1933 | y | v = y - 357 | uv | $u^2$ | $u^2v$ | $u^3$ | $u^4$ |
|---|---|---|---|---|---|---|---|---|
| 1929 | -4 | 352 | -5 | 20 | 16 | -80 | -64 | 256 |
| 1930 | -3 | 356 | -1 | 3 | 9 | -9 | -27 | 81 |
| 1931 | -2 | 357 | 0 | 0 | 4 | 0 | -8 | 16 |
| 1932 | -1 | 358 | 1 | -1 | 1 | 1 | -1 | 1 |
| 1933 | 0 | 360 | 3 | 0 | 0 | 0 | 0 | 0 |
| 1934 | 1 | 361 | 4 | 4 | 1 | 4 | 1 | 1 |
| 1935 | 2 | 361 | 4 | 8 | 4 | 16 | 8 | 16 |
| 1936 | 3 | 360 | 3 | 9 | 9 | 27 | 27 | 81 |
| 1937 | 4 | 359 | 2 | 8 | 16 | 32 | 64 | 256 |
| Total | 0 | | 11 | 51 | 60 | -9 | 0 | 708 |

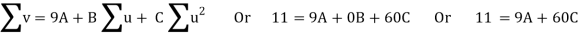

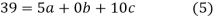

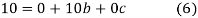

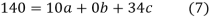

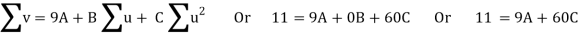

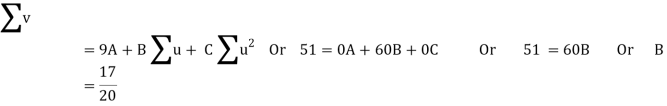

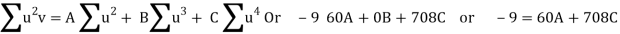

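The normal equations are

$\sum v = 9A + B\sum u + C\sum u^2$, i.e. $11 = 9A + 60C$

$\sum uv = A\sum u + B\sum u^2 + C\sum u^3$, i.e. $51 = 60B$

$\sum u^2v = A\sum u^2 + B\sum u^3 + C\sum u^4$, i.e. $-9 = 60A + 708C$

On solving these equations, we get A = 694/231, B = 17/20, C = -247/924

$v = \frac{694}{231} + \frac{17}{20}u - \frac{247}{924}u^2$

$y - 357 = \frac{694}{231} + \frac{17}{20}(x - 1933) - \frac{247}{924}(x - 1933)^2$

$= \frac{694}{231} + \frac{17x}{20} - \frac{32861}{20} - \frac{247}{924}\left(x^2 - 3866x + 1933^2\right)$

$y = 357 + \frac{694}{231} - \frac{32861}{20} - \frac{247}{924}(1933)^2 + \frac{17x}{20} + \frac{247 \times 3866}{924}x - \frac{247}{924}x^2$

y = 3 - 1643.05 - 998823.36 + 357 + 0.85x + 1033.44x - 0.267x²

y = -1000106.41 + 1034.29x - 0.267x²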
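The change of origin and the three normal equations can be reproduced exactly with rational arithmetic. The following is a sketch under stated assumptions: the helper names `power_sum`, `det3` and `solve3` are illustrative, and Cramer's rule is used only because the system is 3 by 3.

```python
from fractions import Fraction

years = [1929, 1930, 1931, 1932, 1933, 1934, 1935, 1936, 1937]
values = [352, 356, 357, 358, 360, 361, 361, 360, 359]

# Change of origin: u = x - 1933, v = y - 357.
u = [x - 1933 for x in years]
v = [y - 357 for y in values]
n = len(u)


def power_sum(p, q=0):
    """Return the sum of u**p * v**q over all data points."""
    return sum(ui ** p * vi ** q for ui, vi in zip(u, v))


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, rhs):
    """Solve a 3x3 linear system exactly by Cramer's rule."""
    d = Fraction(det3(m))
    solution = []
    for col in range(3):
        replaced = [row[:] for row in m]
        for r in range(3):
            replaced[r][col] = rhs[r]
        solution.append(det3(replaced) / d)
    return solution


# Normal equations for v = A + B*u + C*u**2.
matrix = [[n, power_sum(1), power_sum(2)],
          [power_sum(1), power_sum(2), power_sum(3)],
          [power_sum(2), power_sum(3), power_sum(4)]]
rhs = [power_sum(0, 1), power_sum(1, 1), power_sum(2, 1)]

A, B, C = solve3(matrix, rhs)
print(A, B, C)  # 694/231 17/20 -247/924
```

Keeping the fit in the shifted variable u is usually the safer form for evaluation: in the expanded equation the constant term is about a million while the coefficient of x² is rounded to three decimals, so small rounding errors are magnified heavily near x = 1933.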

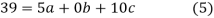

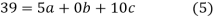

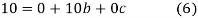

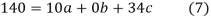

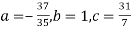

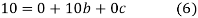

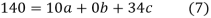

Example: Find the least squares approximation of second degree for the discrete data

x | -2 | -1 | 0 | 1 | 2 |

y | 15 | 1 | 1 | 3 | 19 |

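Solution. Let the equation of the second degree polynomial be

$y = a + bx + cx^2$ … (1)

| x | y | xy | $x^2$ | $x^2y$ | $x^3$ | $x^4$ |
|---|---|---|---|---|---|---|
| -2 | 15 | -30 | 4 | 60 | -8 | 16 |
| -1 | 1 | -1 | 1 | 1 | -1 | 1 |
| 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| 1 | 3 | 3 | 1 | 3 | 1 | 1 |
| 2 | 19 | 38 | 4 | 76 | 8 | 16 |
| $\sum x = 0$ | $\sum y = 39$ | $\sum xy = 10$ | $\sum x^2 = 10$ | $\sum x^2y = 140$ | $\sum x^3 = 0$ | $\sum x^4 = 34$ |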

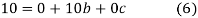

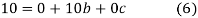

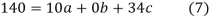

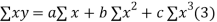

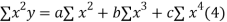

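The normal equations are

$\sum y = na + b\sum x + c\sum x^2$ … (2)

$\sum xy = a\sum x + b\sum x^2 + c\sum x^3$ … (3)

$\sum x^2y = a\sum x^2 + b\sum x^3 + c\sum x^4$ … (4)

On putting the values of $\sum x$, $\sum y$, $\sum xy$, $\sum x^2$, $\sum x^2y$, $\sum x^3$ and $\sum x^4$ with $n = 5$, we have

$39 = 5a + 10c$ … (5)

$10 = 10b$ … (6)

$140 = 10a + 34c$ … (7)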

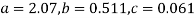

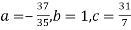

On solving (5),(6),(7), we get,

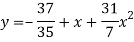

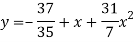

The required polynomial of second degree is $y = -\frac{37}{35} + x + \frac{31}{7}x^2$

Example: Fit a second degree parabola to the following data.

X = 1.0 | 1.5 | 2.0 | 2.5 | 3.0 | 3.5 | 4.0 |

Y = 1.1 | 1.3 | 1.6 | 2.0 | 2.7 | 3.4 | 4.1 |

Solution

We shift the origin to (2.5, 0) and take 0.5 as the new unit. This amounts to changing the variable x to X by the relation X = 2x – 5.

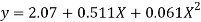

Let the parabola of fit be $y = a + bX + cX^2$. The values of $\sum X$ etc. are calculated as below:

| x | X | y | Xy | $X^2$ | $X^2y$ | $X^3$ | $X^4$ |
|---|---|---|---|---|---|---|---|
| 1.0 | -3 | 1.1 | -3.3 | 9 | 9.9 | -27 | 81 |
| 1.5 | -2 | 1.3 | -2.6 | 4 | 5.2 | -8 | 16 |
| 2.0 | -1 | 1.6 | -1.6 | 1 | 1.6 | -1 | 1 |
| 2.5 | 0 | 2.0 | 0.0 | 0 | 0.0 | 0 | 0 |
| 3.0 | 1 | 2.7 | 2.7 | 1 | 2.7 | 1 | 1 |
| 3.5 | 2 | 3.4 | 6.8 | 4 | 13.6 | 8 | 16 |
| 4.0 | 3 | 4.1 | 12.3 | 9 | 36.9 | 27 | 81 |
| Total | 0 | 16.2 | 14.3 | 28 | 69.9 | 0 | 196 |

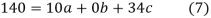

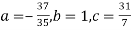

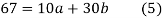

The normal equations are

7a + 28c = 16.2; 28b = 14.3; 28a + 196c = 69.9

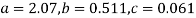

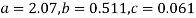

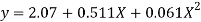

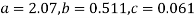

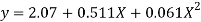

Solving these as simultaneous equations we get

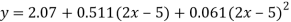

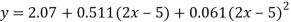

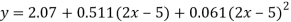

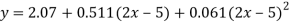

Replacing X by 2x – 5 in the above equation we get

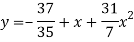

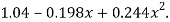

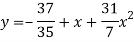

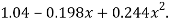

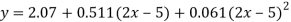

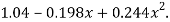

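Which simplifies to $y = 1.036 - 0.193x + 0.243x^2$ (coefficients to three decimal places, carrying the unrounded values of a, b and c; rounding a, b and c first changes the last digits slightly).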

This is the required parabola of the best fit.
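The two steps of this example, fitting in the shifted variable X and then substituting X = 2x - 5 back, can be sketched as follows. The function names are hypothetical, and `fit_centered_parabola` assumes, as holds for this data, that $\sum X = 0$ and $\sum X^3 = 0$ so that the normal equations decouple.

```python
def fit_centered_parabola(Xs, ys):
    """Fit y = a + b*X + c*X**2, assuming sum(X) == 0 and sum(X**3) == 0."""
    n = len(Xs)
    s2 = sum(X ** 2 for X in Xs)
    s4 = sum(X ** 4 for X in Xs)
    sy = sum(ys)
    sxy = sum(X * y for X, y in zip(Xs, ys))
    sx2y = sum(X * X * y for X, y in zip(Xs, ys))
    b = sxy / s2                      # the middle normal equation decouples
    det = n * s4 - s2 * s2
    a = (sy * s4 - s2 * sx2y) / det   # Cramer's rule on the remaining pair
    c = (n * sx2y - s2 * sy) / det
    return a, b, c


def to_original_variable(a, b, c, scale, shift):
    """Rewrite a + b*X + c*X**2 in terms of x, where X = scale*x + shift."""
    a0 = a + b * shift + c * shift ** 2
    b0 = b * scale + 2 * c * scale * shift
    c0 = c * scale ** 2
    return a0, b0, c0


xs = [1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [1.1, 1.3, 1.6, 2.0, 2.7, 3.4, 4.1]
Xs = [2 * x - 5 for x in xs]          # X = 2x - 5, as in the example

a, b, c = fit_centered_parabola(Xs, ys)
a0, b0, c0 = to_original_variable(a, b, c, scale=2, shift=-5)
print(f"in X: a = {a:.4f}, b = {b:.4f}, c = {c:.4f}")
print(f"in x: a = {a0:.4f}, b = {b0:.4f}, c = {c0:.4f}")
```

The substitution uses $(2x - 5)^2 = 4x^2 - 20x + 25$, so the constant term becomes a - 5b + 25c, the coefficient of x becomes 2b - 20c, and the coefficient of x² becomes 4c.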

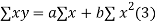

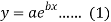

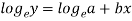

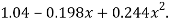

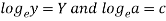

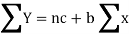

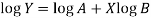

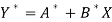

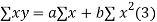

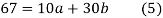

Example: Fit the curve $y = ae^{bx}$ by using the method of least squares.

x | 1 | 2 | 3 | 4 | 5 | 6 |

y | 7.209 | 5.265 | 3.846 | 2.809 | 2.052 | 1.499 |

Sol.

Here the curve is $y = ae^{bx}$. Taking natural logarithms, $\ln y = \ln a + bx$.

Now put $Y = \ln y$ and $c = \ln a$.

Then we get $Y = c + bx$ … (1)

| x | y | $Y = \ln y$ | xY | $x^2$ |
|---|---|---|---|---|
| 1 | 7.209 | 1.97533 | 1.97533 | 1 |
| 2 | 5.265 | 1.66108 | 3.32216 | 4 |
| 3 | 3.846 | 1.34703 | 4.04109 | 9 |
| 4 | 2.809 | 1.03283 | 4.13132 | 16 |
| 5 | 2.052 | 0.71881 | 3.59405 | 25 |
| 6 | 1.499 | 0.40480 | 2.4288 | 36 |

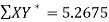

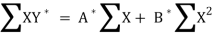

Sum: $\sum x = 21$ | $\sum Y = 7.13988$ | $\sum xY = 19.49275$ | $\sum x^2 = 91$ |

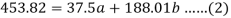

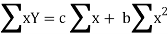

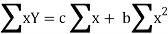

Normal equations are-

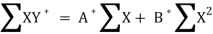

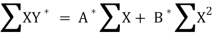

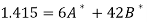

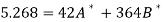

Putting the values from the table, we get-

7.13988 = 6c + 21b

19.49275 = 21c + 91b

On solving, we get-

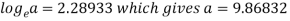

b = -0.3141 and c = 2.28933

Since $c = \ln a$, we have $a = e^{c} \approx 9.868$.

Now putting these values in equation (1), we get-

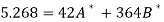

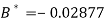

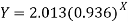

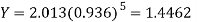

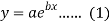

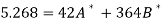

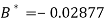

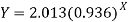

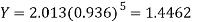

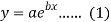

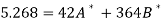

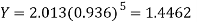

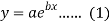

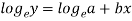

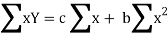

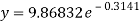

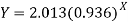

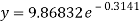

Example: Estimate the chlorine residual in a swimming pool 5 hours after it has been treated with chemicals by fitting an exponential curve of the form $Y = AB^X$ to the data given below-

Hours(X) | 2 | 4 | 6 | 8 | 10 | 12 |

Chlorine residuals (Y) | 1.8 | 1.5 | 1.4 | 1.1 | 1.1 | 0.9 |

Sol.

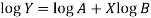

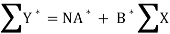

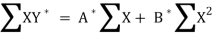

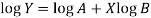

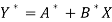

Taking logarithms of the non-linear curve $Y = AB^X$, we get

$\ln Y = \ln A + X\ln B$

Put $Y^* = \ln Y$, $a = \ln A$ and $b = \ln B$. Then

$Y^* = a + bX$

which is a linear equation in X.

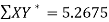

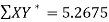

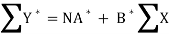

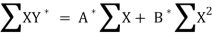

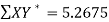

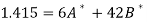

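Its normal equations are

$\sum Y^* = Na + b\sum X$

$\sum XY^* = a\sum X + b\sum X^2$

| X | Y | $Y^* = \ln Y$ | $X^2$ | $XY^*$ |
|---|---|---|---|---|
| 2 | 1.8 | 0.5878 | 4 | 1.1756 |
| 4 | 1.5 | 0.4055 | 16 | 1.622 |
| 6 | 1.4 | 0.3365 | 36 | 2.019 |
| 8 | 1.1 | 0.0953 | 64 | 0.7624 |
| 10 | 1.1 | 0.0953 | 100 | 0.953 |
| 12 | 0.9 | -0.10536 | 144 | -1.26432 |
| 42 | | 1.415 | 364 | 5.26752 |

The totals are computed from unrounded logarithms, so the last one differs slightly from the sum of the rounded entries.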

Here N = 6,

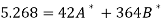

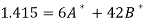

Thus the normal equations are-

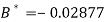

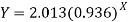

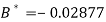

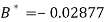

On solving, we get $\ln A = 0.6996$ and $\ln B = -0.0662$

Or

A = 2.013 and B = 0.936

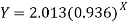

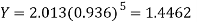

Hence the required least square exponential curve-

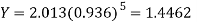

Prediction-

Chlorine content after 5 hours: $Y = 2.013\,(0.936)^5 \approx 1.45$
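The log-linear fit used in this example can be sketched in a few lines. This is an illustration, not the source's own computation: the name `fit_exponential` is an assumption, and the fit minimises squared errors in $\ln Y$, which is what the transformed normal equations do, rather than squared errors in Y itself.

```python
import math


def fit_exponential(xs, ys):
    """Fit y = A * B**x by least squares on ln(y) = ln(A) + x*ln(B)."""
    logs = [math.log(y) for y in ys]      # requires every y > 0
    n = len(xs)
    sx, sl = sum(xs), sum(logs)
    sxl = sum(x * l for x, l in zip(xs, logs))
    sxx = sum(x * x for x in xs)
    det = n * sxx - sx * sx
    ln_b = (n * sxl - sx * sl) / det
    ln_a = (sl - ln_b * sx) / n
    return math.exp(ln_a), math.exp(ln_b)


hours = [2, 4, 6, 8, 10, 12]
chlorine = [1.8, 1.5, 1.4, 1.1, 1.1, 0.9]

A, B = fit_exponential(hours, chlorine)
estimate = A * B ** 5                     # chlorine residual after 5 hours
print(f"A = {A:.3f}, B = {B:.3f}, estimate = {estimate:.2f}")
```

Run on the table's data, this should agree with A = 2.013 and B = 0.936 to three decimals and give an estimate of about 1.45 at 5 hours.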

Key takeaways-

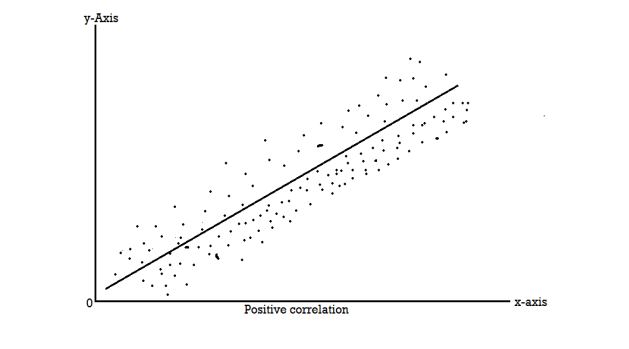

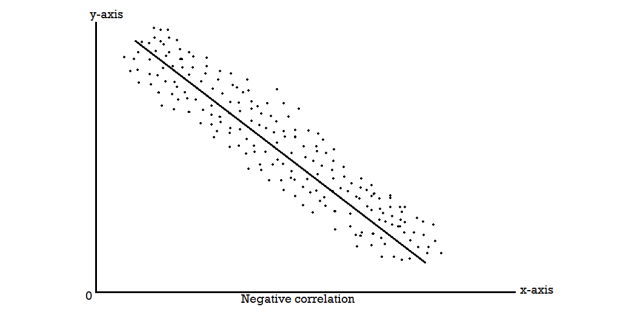

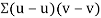

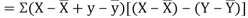

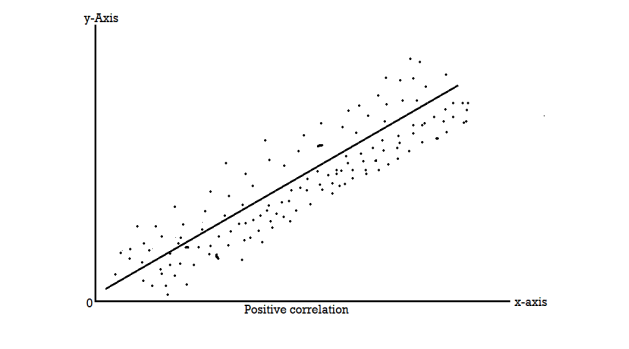

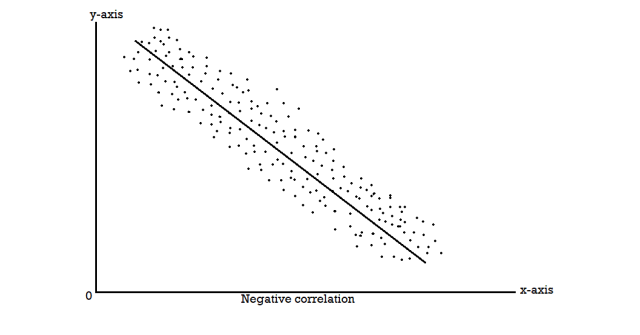

When two variables are related in such a way that change in the value of one variable affects the value of the other variable, then these two variables are said to be correlated and there is correlation between two variables.

Example- Height and weight of the persons of a group.

The correlation is said to be perfect correlation if two variables vary in such a way that their ratio is constant always.

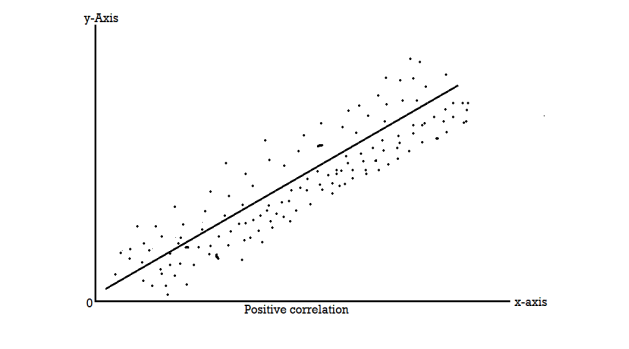

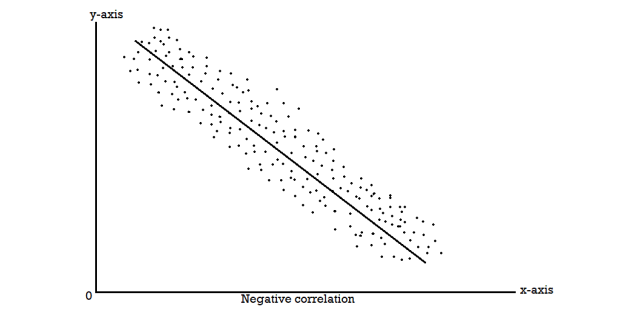

Scatter diagram-

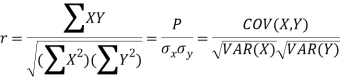

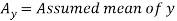

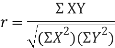

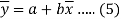

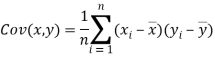

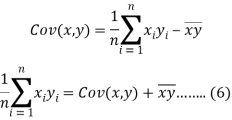

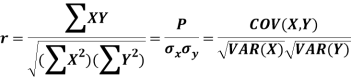

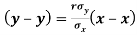

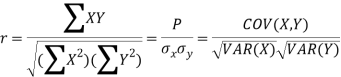

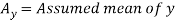

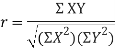

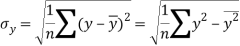

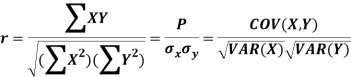

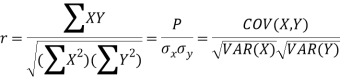

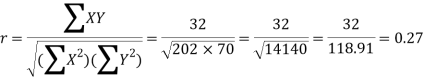

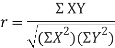

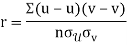

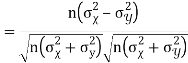

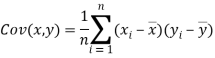

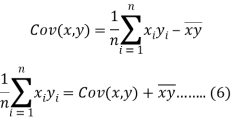

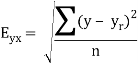

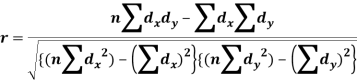

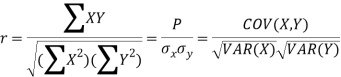

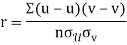

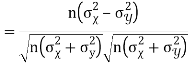

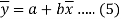

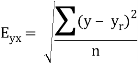

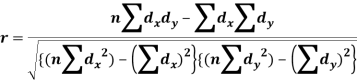

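Karl Pearson’s coefficient of correlation-

$$r = \frac{\sum (x - \bar{x})(y - \bar{y})}{\sqrt{\sum (x - \bar{x})^2 \sum (y - \bar{y})^2}}$$

Here $\bar{x} = \frac{\sum x}{n}$ and $\bar{y} = \frac{\sum y}{n}$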

Note-

1. Correlation coefficient always lies between -1 and +1.

2. Correlation coefficient is independent of change of origin and scale.

3. If the two variables are independent then correlation coefficient between them is zero.

Perfect Correlation: If two variables vary in such a way that their ratio is always constant, then the correlation is said to be perfect.

Correlation coefficient | Type of correlation |

+1 | Perfect positive correlation |

-1 | Perfect negative correlation |

0.25 | Weak positive correlation |

0.75 | Strong positive correlation |

-0.25 | Weak negative correlation |

-0.75 | Strong negative correlation |

0 | No correlation |

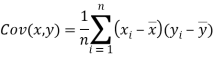

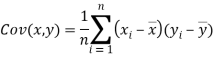

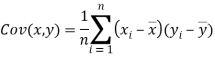

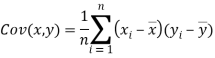

Example: Find the correlation coefficient between Age and weight of the following data-

Age | 30 | 44 | 45 | 43 | 34 | 44 |

Weight | 56 | 55 | 60 | 64 | 62 | 63 |

Sol.

| x | y | $x - \bar{x}$ | $(x - \bar{x})^2$ | $y - \bar{y}$ | $(y - \bar{y})^2$ | $(x - \bar{x})(y - \bar{y})$ |
|---|---|---|---|---|---|---|
| 30 | 56 | -10 | 100 | -4 | 16 | 40 |
| 44 | 55 | 4 | 16 | -5 | 25 | -20 |
| 45 | 60 | 5 | 25 | 0 | 0 | 0 |
| 43 | 64 | 3 | 9 | 4 | 16 | 12 |
| 34 | 62 | -6 | 36 | 2 | 4 | -12 |
| 44 | 63 | 4 | 16 | 3 | 9 | 12 |
| Sum = 240 | 360 | 0 | 202 | 0 | 70 | 32 |

Here $\bar{x} = 240/6 = 40$ and $\bar{y} = 360/6 = 60$.

Karl Pearson’s coefficient of correlation-

$$r = \frac{32}{\sqrt{202 \times 70}} \approx 0.27$$

Here the correlation coefficient is 0.27, which is a weak positive correlation. This indicates that weight tends to increase as age increases.
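The deviation-from-mean calculation in this example can be checked with a small function. It is a sketch only: `pearson_r` is an illustrative name, and the code follows the formula with sums of deviations rather than any particular library routine.

```python
import math


def pearson_r(xs, ys):
    """Karl Pearson's r from deviations about the two means."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)   # undefined if either variable is constant


age = [30, 44, 45, 43, 34, 44]
weight = [56, 55, 60, 64, 62, 63]
r = pearson_r(age, weight)
print(f"r = {r:.2f}")
```

With the table's sums (32, 202 and 70) the value is 32 / sqrt(14140), which rounds to 0.27.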

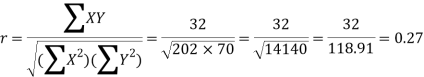

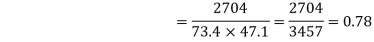

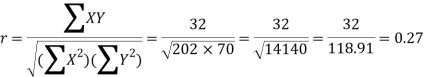

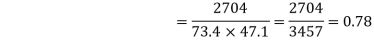

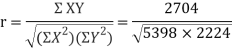

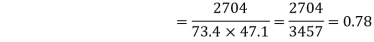

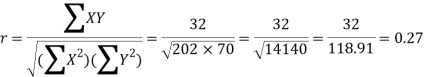

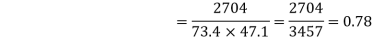

Example:

Ten students got the following percentage of marks in Economics and Statistics. Calculate the coefficient of correlation.

| Roll No. | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Marks in Economics | 78 | 36 | 98 | 25 | 75 | 82 | 90 | 62 | 65 | 39 |
| Marks in Statistics | 84 | 51 | 91 | 60 | 68 | 62 | 86 | 58 | 53 | 47 |

Solution:

Let the marks of the two subjects be denoted by $x$ and $y$ respectively.

Then the mean of the $x$ marks is $650/10 = 65$ and the mean of the $y$ marks is $660/10 = 66$.

If $X$ and $Y$ are the deviations of the $x$’s and $y$’s from their respective means, then the data may be arranged in the following form:

| x | y | X = x - 65 | Y = y - 66 | $X^2$ | $Y^2$ | XY |
|---|---|---|---|---|---|---|
| 78 | 84 | 13 | 18 | 169 | 324 | 234 |
| 36 | 51 | -29 | -15 | 841 | 225 | 435 |
| 98 | 91 | 33 | 25 | 1089 | 625 | 825 |
| 25 | 60 | -40 | -6 | 1600 | 36 | 240 |
| 75 | 68 | 10 | 2 | 100 | 4 | 20 |
| 82 | 62 | 17 | -4 | 289 | 16 | -68 |
| 90 | 86 | 25 | 20 | 625 | 400 | 500 |
| 62 | 58 | -3 | -8 | 9 | 64 | 24 |
| 65 | 53 | 0 | -13 | 0 | 169 | 0 |
| 39 | 47 | -26 | -19 | 676 | 361 | 494 |
| 650 | 660 | 0 | 0 | 5398 | 2224 | 2704 |

Here $\sum X^2 = 5398$, $\sum Y^2 = 2224$ and $\sum XY = 2704$, so that

$$r = \frac{\sum XY}{\sqrt{\sum X^2 \sum Y^2}} = \frac{2704}{\sqrt{5398 \times 2224}} \approx 0.78$$

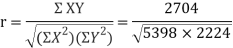

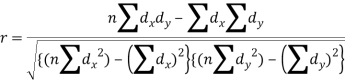

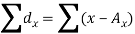

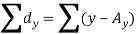

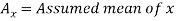

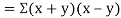

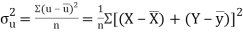

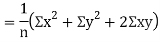

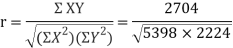

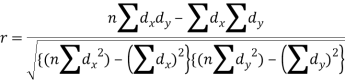

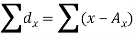

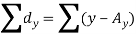

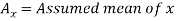

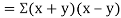

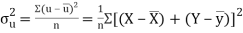

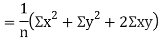

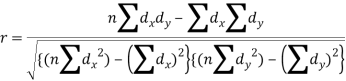

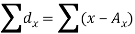

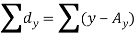

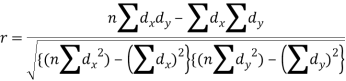

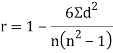

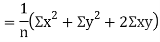

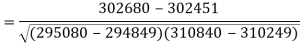

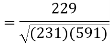

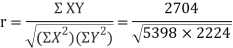

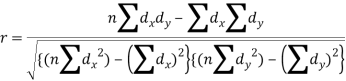

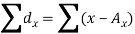

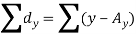

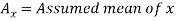

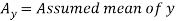

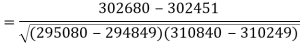

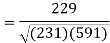

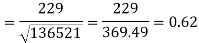

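Short-cut method to calculate correlation coefficient-

$$r = \frac{\sum d_x d_y - \frac{\sum d_x \sum d_y}{n}}{\sqrt{\left(\sum d_x^2 - \frac{(\sum d_x)^2}{n}\right)\left(\sum d_y^2 - \frac{(\sum d_y)^2}{n}\right)}}$$

Here, $d_x = x - A$ and $d_y = y - B$ are the deviations of x and y from assumed means A and B.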

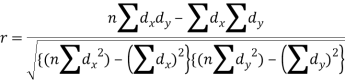

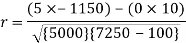

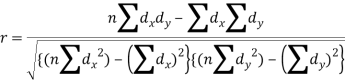

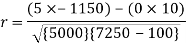

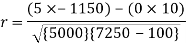

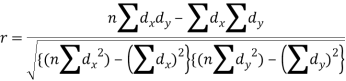

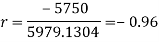

Example: Find the correlation coefficient between the values X and Y of the dataset given below by using short-cut method-

X | 10 | 20 | 30 | 40 | 50 |

Y | 90 | 85 | 80 | 60 | 45 |

Sol.

| X | Y | $d_x = X - 30$ | $d_x^2$ | $d_y = Y - 70$ | $d_y^2$ | $d_x d_y$ |
|---|---|---|---|---|---|---|
| 10 | 90 | -20 | 400 | 20 | 400 | -400 |
| 20 | 85 | -10 | 100 | 15 | 225 | -150 |
| 30 | 80 | 0 | 0 | 10 | 100 | 0 |
| 40 | 60 | 10 | 100 | -10 | 100 | -100 |
| 50 | 45 | 20 | 400 | -25 | 625 | -500 |
| Sum = 150 | 360 | 0 | 1000 | 10 | 1450 | -1150 |

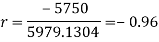

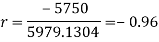

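By the short-cut method, with $n = 5$, the correlation coefficient is

$$r = \frac{-1150 - \frac{(0)(10)}{5}}{\sqrt{\left(1000 - \frac{0^2}{5}\right)\left(1450 - \frac{10^2}{5}\right)}} = \frac{-1150}{\sqrt{1000 \times 1430}} \approx -0.96$$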
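The short-cut formula with assumed means can be sketched as below. The function name and the parameter names `assumed_x` and `assumed_y` are illustrative assumptions; the point of the sketch is that the result does not depend on which assumed means are chosen.

```python
import math


def pearson_shortcut(xs, ys, assumed_x, assumed_y):
    """Correlation coefficient from deviations about assumed means."""
    n = len(xs)
    dx = [x - assumed_x for x in xs]
    dy = [y - assumed_y for y in ys]
    sum_dx, sum_dy = sum(dx), sum(dy)
    numerator = sum(p * q for p, q in zip(dx, dy)) - sum_dx * sum_dy / n
    var_x = sum(p * p for p in dx) - sum_dx ** 2 / n
    var_y = sum(q * q for q in dy) - sum_dy ** 2 / n
    return numerator / math.sqrt(var_x * var_y)


X = [10, 20, 30, 40, 50]
Y = [90, 85, 80, 60, 45]

r = pearson_shortcut(X, Y, assumed_x=30, assumed_y=70)   # values used in the table
r_other = pearson_shortcut(X, Y, assumed_x=0, assumed_y=0)
print(f"r = {r:.4f}, with other assumed means: {r_other:.4f}")
```

Both calls should give the same value, -1150 / sqrt(1000 × 1430), which is about -0.96. This reflects the note that the correlation coefficient is independent of a change of origin.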

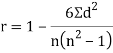

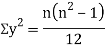

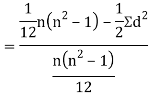

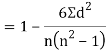

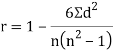

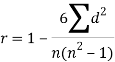

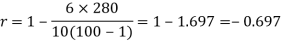

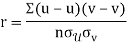

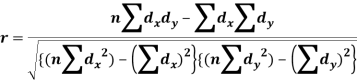

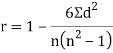

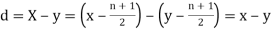

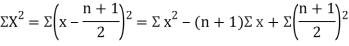

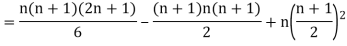

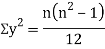

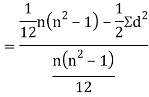

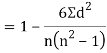

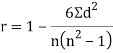

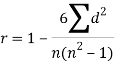

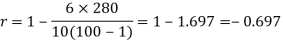

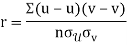

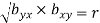

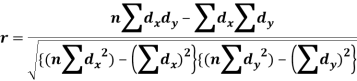

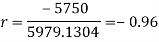

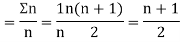

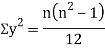

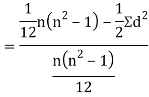

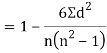

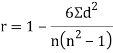

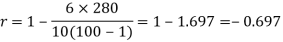

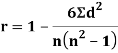

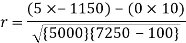

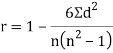

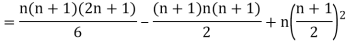

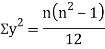

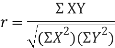

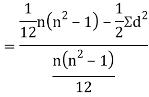

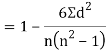

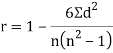

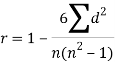

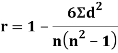

Spearman’s rank correlation-

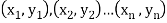

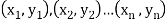

Solution. Let $x_i, y_i$ ($i = 1, 2, \dots, n$) be the ranks of $n$ individuals corresponding to two characteristics.

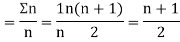

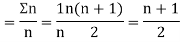

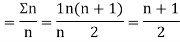

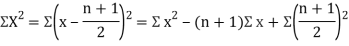

Assuming no two individuals are equal in either classification, each variable takes the values 1, 2, 3, …, n, and hence their arithmetic means are each $\frac{1 + 2 + \dots + n}{n} = \frac{n + 1}{2}$.

Let $X_1, X_2, \dots, X_n$ be the values of variable $X$ and $Y_1, Y_2, \dots, Y_n$ those of $Y$.

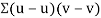

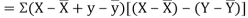

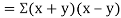

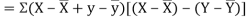

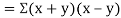

Then $d = X - Y = (X - \bar{X}) - (Y - \bar{Y}) = x - y$, since $\bar{X} = \bar{Y}$,

where $x$ and $y$ are deviations from the mean.

Clearly, $\sum x = 0$ and $\sum y = 0$.

SPEARMAN’S RANK CORRELATION COEFFICIENT:

$$r = 1 - \frac{6\sum d^2}{n(n^2 - 1)}$$

where $r$ denotes the rank coefficient of correlation and $d$ refers to the difference of ranks between paired items in the two series.

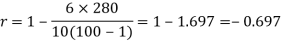

Example: Compute the Spearman’s rank correlation coefficient of the dataset given below-

Person | A | B | C | D | E | F | G | H | I | J |

Rank in test-1 | 9 | 10 | 6 | 5 | 7 | 2 | 4 | 8 | 1 | 3 |

Rank in test-2 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

Sol.

| Person | Rank in test-1 | Rank in test-2 | $d = R_1 - R_2$ | $d^2$ |
|---|---|---|---|---|
| A | 9 | 1 | 8 | 64 |
| B | 10 | 2 | 8 | 64 |
| C | 6 | 3 | 3 | 9 |
| D | 5 | 4 | 1 | 1 |
| E | 7 | 5 | 2 | 4 |
| F | 2 | 6 | -4 | 16 |
| G | 4 | 7 | -3 | 9 |
| H | 8 | 8 | 0 | 0 |
| I | 1 | 9 | -8 | 64 |
| J | 3 | 10 | -7 | 49 |
| Sum | | | | 280 |
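The rank-difference formula $r = 1 - \frac{6\sum d^2}{n(n^2 - 1)}$ applied to this table can be sketched as follows. The function name `spearman_rank` is an assumption for illustration, and the formula in this form is valid only when there are no tied ranks, as is the case here.

```python
from fractions import Fraction


def spearman_rank(rank1, rank2):
    """Spearman's rank correlation for untied ranks, as an exact fraction."""
    n = len(rank1)
    sum_d2 = sum((a - b) ** 2 for a, b in zip(rank1, rank2))
    return 1 - Fraction(6 * sum_d2, n * (n * n - 1))


test1 = [9, 10, 6, 5, 7, 2, 4, 8, 1, 3]
test2 = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

rho = spearman_rank(test1, test2)
print(rho, float(rho))
```

With $\sum d^2 = 280$ and $n = 10$ this gives 1 - 1680/990 = -23/33, about -0.70, a fairly strong negative association between the two rankings.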

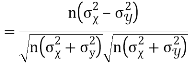

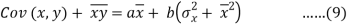

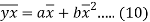

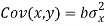

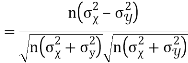

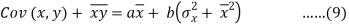

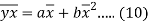

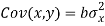

Example: If X and Y are uncorrelated random variables,  the

the  of correlation between

of correlation between  and

and

Solution.

Let  and

and

Then

Now

Similarly

Now

Also

(As

(As  and

and  are not correlated, we have

are not correlated, we have  )

)

Similarly

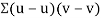

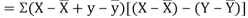

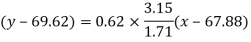

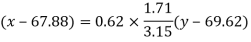

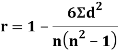

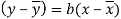

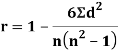

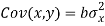

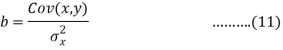

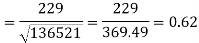

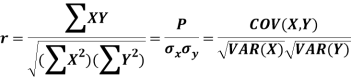

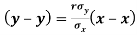

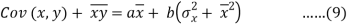

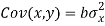

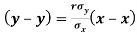

Regression-

If the scatter diagram indicates some relationship between two variables $x$ and $y$, then the dots of the scatter diagram will be concentrated around a curve. This curve is called the curve of regression. Regression analysis is the method used for estimating the unknown values of one variable corresponding to the known value of another variable.

In other words, regression is a measure of the average relationship between the independent and dependent variables.

Regression can be used for two or more than two variables.

There are two types of variables in regression analysis.

1. Independent variable

2. Dependent variable

The variable which is used for prediction is called independent variable.

It is known as predictor or regressor.

The variable whose value is predicted by independent variable is called dependent variable or regressed or explained variable.

If the scatter diagram shows a relationship between the independent and dependent variables, its points will be more or less concentrated around a curve, which is called the curve of regression.

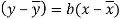

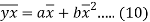

When we find the curve as a straight line then it is known as line of regression and the regression is called linear regression.

Note- regression line is the best fit line which expresses the average relation between variables.

LINE OF REGRESSION

When the curve is a straight line, it is called a line of regression. A line of regression is the straight line which gives the best fit in the least square sense to the given frequency.

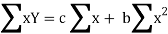

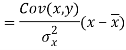

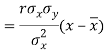

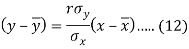

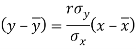

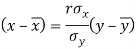

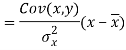

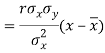

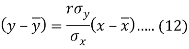

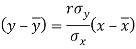

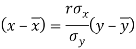

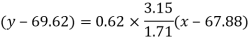

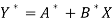

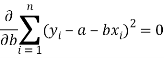

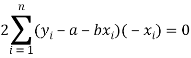

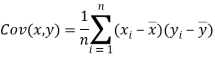

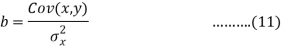

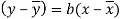

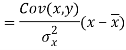

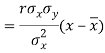

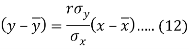

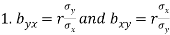

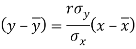

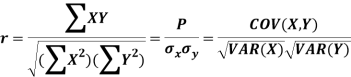

Equation of the line of regression-

Let

y = a + bx ………….. (1)

be the equation of the line of regression of y on x.

Let $\hat{y}_i$ be the estimated value of $y_i$ for the given value of $x = x_i$.

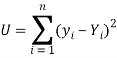

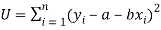

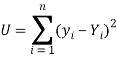

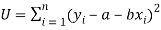

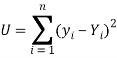

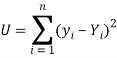

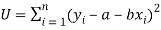

According to the principle of least squares, we have to determine ‘a’ and ‘b’ so that the sum of squares of deviations of the observed values of y from the expected values $\hat{y}_i = a + bx_i$,

that means

$U = \sum (y_i - \hat{y}_i)^2$

or

$U = \sum (y_i - a - bx_i)^2$ …….. (2)

is minimum.

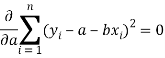

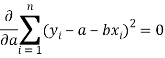

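From the concept of maxima and minima, we partially differentiate U with respect to ‘a’ and ‘b’ and equate to zero.

Which means

$\frac{\partial U}{\partial a} = -2\sum (y - a - bx) = 0$, i.e. $\sum y = na + b\sum x$ …….. (3)

And

$\frac{\partial U}{\partial b} = -2\sum x(y - a - bx) = 0$, i.e. $\sum xy = a\sum x + b\sum x^2$ …….. (4)

These equations (3) and (4) are known as the normal equations for a straight line.

Now dividing equation (3) by n, we get-

$\bar{y} = a + b\bar{x}$ …….. (5)

This indicates that the regression line of y on x passes through the point $(\bar{x}, \bar{y})$.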

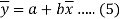

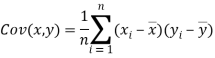

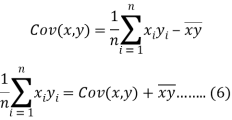

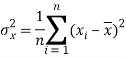

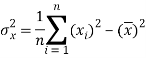

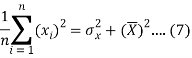

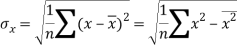

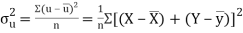

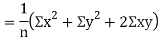

We know that-

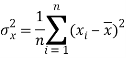

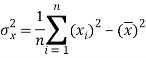

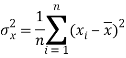

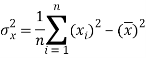

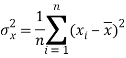

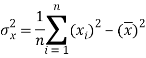

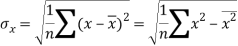

The variance of variable x can be expressed as-

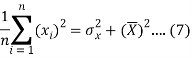

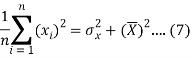

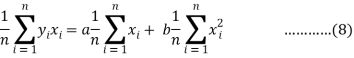

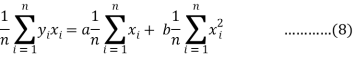

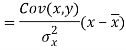

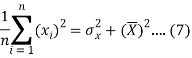

Dividing equation (4) by n, we get-

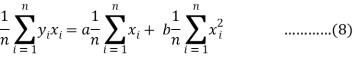

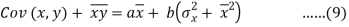

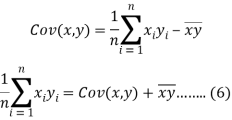

From the equation (6), (7) and (8)-

Multiplying (5) by $\bar{x}$, we get-

Subtracting equation (10) from equation (9), we get-

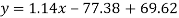

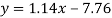

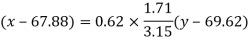

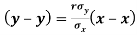

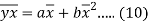

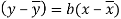

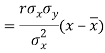

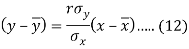

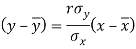

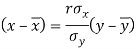

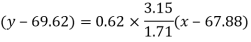

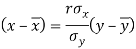

Since ‘b’ is the slope of the line of regression of y on x and the line passes through the point $(\bar{x}, \bar{y})$, the equation of the line of regression of y on x is-

$y - \bar{y} = b(x - \bar{x})$, that is, $y - \bar{y} = r\frac{\sigma_y}{\sigma_x}(x - \bar{x})$

This is known as the regression line of y on x.

Note-

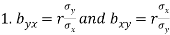

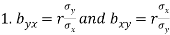

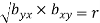

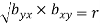

are the coefficients of regression.

2.

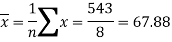

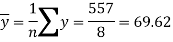

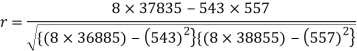

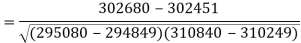

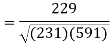

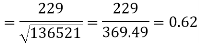

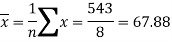

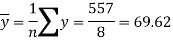

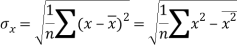

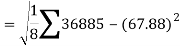

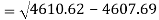

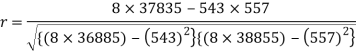

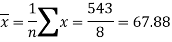

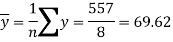

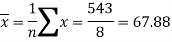

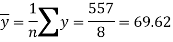

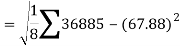

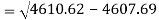

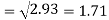

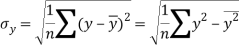

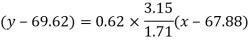

Example: Two variables X and Y are given in the dataset below. Find the two lines of regression.

| x | 65 | 66 | 67 | 67 | 68 | 69 | 70 | 71 |
|---|---|---|---|---|---|---|---|---|
| y | 66 | 68 | 65 | 69 | 74 | 73 | 72 | 70 |

Sol.

The two lines of regression can be expressed as-

$$y - \bar{y} = r\,\frac{\sigma_y}{\sigma_x}\,(x - \bar{x})$$

and

$$x - \bar{x} = r\,\frac{\sigma_x}{\sigma_y}\,(y - \bar{y})$$

| x | y | $x^2$ | $y^2$ | xy |
|---|---|---|---|---|
| 65 | 66 | 4225 | 4356 | 4290 |
| 66 | 68 | 4356 | 4624 | 4488 |
| 67 | 65 | 4489 | 4225 | 4355 |
| 67 | 69 | 4489 | 4761 | 4623 |
| 68 | 74 | 4624 | 5476 | 5032 |
| 69 | 73 | 4761 | 5329 | 5037 |
| 70 | 72 | 4900 | 5184 | 5040 |
| 71 | 70 | 5041 | 4900 | 4970 |
| Sum = 543 | 557 | 36885 | 38855 | 37835 |

Now-

And

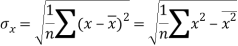

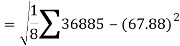

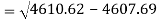

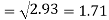

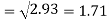

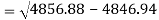

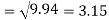

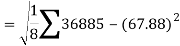

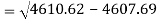

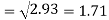

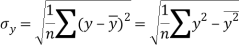

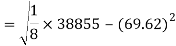

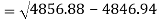

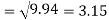

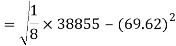

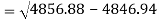

Standard deviation of x-

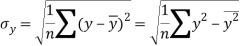

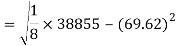

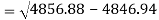

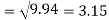

Similarly-

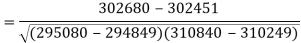

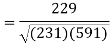

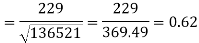

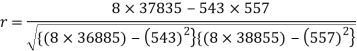

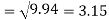

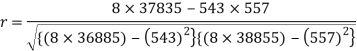

Correlation coefficient-

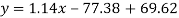

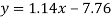

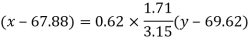

Putting these values into the regression line equations, we get-

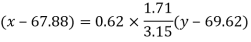

Regression line y on x-

Regression line x on y-

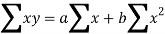
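The steps of this example (means, standard deviations, correlation coefficient, then the two regression lines) can be reproduced with a short Python sketch. It uses only the data given in the example; the variable names are illustrative, and printed values may differ slightly in the last digit from hand calculations that round intermediate results.

```python
from math import sqrt

# Data from the example.
x = [65, 66, 67, 67, 68, 69, 70, 71]
y = [66, 68, 65, 69, 74, 73, 72, 70]
n = len(x)

mean_x = sum(x) / n
mean_y = sum(y) / n

# Standard deviations and covariance, dividing by n.
sd_x = sqrt(sum(v * v for v in x) / n - mean_x ** 2)
sd_y = sqrt(sum(v * v for v in y) / n - mean_y ** 2)
cov_xy = sum(p * q for p, q in zip(x, y)) / n - mean_x * mean_y

# Karl Pearson's correlation coefficient.
r = cov_xy / (sd_x * sd_y)

# Regression coefficients of y on x and of x on y.
b_yx = r * sd_y / sd_x
b_xy = r * sd_x / sd_y

print(f"y on x: y - {mean_y:.3f} = {b_yx:.4f} (x - {mean_x:.3f})")
print(f"x on y: x - {mean_x:.3f} = {b_xy:.4f} (y - {mean_y:.3f})")
```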

The regression line can also be found by the following method-

Example: Find the regression line of y on x for the given dataset.

X | 4.3 | 4.5 | 5.9 | 5.6 | 6.1 | 5.2 | 3.8 | 2.1 |

Y | 12.6 | 12.1 | 11.6 | 11.8 | 11.4 | 11.8 | 13.2 | 14.1 |

Sol.

Let y = a + bx is the line of regression of y on x, where ‘a’ and ‘b’ are given as-

We will make the following table-

x | y | Xy |  |

4.3 | 12.6 | 54.18 | 18.49 |

4.5 | 12.1 | 54.45 | 20.25 |

5.9 | 11.6 | 68.44 | 34.81 |

5.6 | 11.8 | 66.08 | 31.36 |

6.1 | 11.4 | 69.54 | 37.21 |

5.2 | 11.8 | 61.36 | 27.04 |

3.8 | 13.2 | 50.16 | 14.44 |

2.1 | 14.1 | 29.61 | 4.41 |

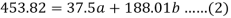

Sum = 37.5 | 98.6 | 453.82 | 188.01 |

Using the above equations with n = 8 and the sums from the table, we get-

$$98.6 = 8a + 37.5b$$

$$453.82 = 37.5a + 188.01b$$

On solving both these equations, we get-

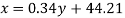

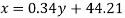

a = 15.53 and b = -0.684 (rounded)

So the regression line is-

y = 15.53 - 0.684x
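The coefficients can be checked by solving the two normal equations directly from the column sums in the table above. The following sketch uses Cramer's rule on the 2×2 system; it is one possible way to do the arithmetic, and the variable names are illustrative.

```python
# Column sums from the table above (n = 8 observations).
n = 8
sum_x, sum_y = 37.5, 98.6
sum_xy, sum_x2 = 453.82, 188.01

# Normal equations:
#   sum_y  = n * a     + b * sum_x
#   sum_xy = a * sum_x + b * sum_x2
# Solved here by Cramer's rule.
det = n * sum_x2 - sum_x ** 2
b = (n * sum_xy - sum_x * sum_y) / det
a = (sum_y * sum_x2 - sum_x * sum_xy) / det

print(f"a = {a:.3f}, b = {b:.3f}")

# Substitute back: both residuals should be approximately zero.
print(sum_y - (n * a + b * sum_x))
print(sum_xy - (a * sum_x + b * sum_x2))
```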

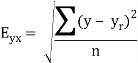

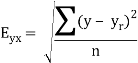

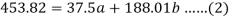

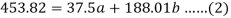

Note - The standard error of prediction can be found by the formula given below-

Difference between regression and correlation-

1. Correlation measures the strength of the linear relationship between two variables, while regression describes the average relationship between two or more variables.

2. Correlation has limited applications because it gives only the strength of the linear relationship, whereas regression is used to predict the value of the dependent variable for given values of the independent variables.

3. Correlation does not distinguish between dependent and independent variables, whereas regression treats one variable as dependent and the others as independent.
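The third point can be illustrated numerically: swapping the roles of x and y leaves the correlation coefficient unchanged but changes the regression coefficient. The sketch below uses the eight (x, y) pairs from the two-lines-of-regression example; the helper names `cov`, `pearson_r` and `slope` are assumptions made for this illustration. It also shows the standard identity that the product of the two regression coefficients equals the square of r.

```python
from math import sqrt


def mean(v):
    return sum(v) / len(v)


def cov(u, v):
    """Population covariance of two equally long sequences."""
    mu, mv = mean(u), mean(v)
    return sum((p - mu) * (q - mv) for p, q in zip(u, v)) / len(u)


def pearson_r(u, v):
    """Correlation coefficient; symmetric in its arguments."""
    return cov(u, v) / sqrt(cov(u, u) * cov(v, v))


def slope(dep, indep):
    """Regression coefficient of `dep` on `indep`; not symmetric."""
    return cov(dep, indep) / cov(indep, indep)


x = [65, 66, 67, 67, 68, 69, 70, 71]
y = [66, 68, 65, 69, 74, 73, 72, 70]

r_xy, r_yx = pearson_r(x, y), pearson_r(y, x)
b_yx, b_xy = slope(y, x), slope(x, y)

print("r(x, y) =", r_xy, " r(y, x) =", r_yx)   # identical
print("b_yx =", b_yx, " b_xy =", b_xy)          # different
print("b_yx * b_xy =", b_yx * b_xy, " r^2 =", r_xy ** 2)
```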

Key takeaways-

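1. Karl Pearson's coefficient of correlation-

$$r = \frac{\sum (x - \bar{x})(y - \bar{y})}{\sqrt{\sum (x - \bar{x})^2 \,\sum (y - \bar{y})^2}}$$

2. Perfect correlation: If two variables vary in such a way that their ratio is always constant, then the correlation is said to be perfect.

3. Short-cut method to calculate the correlation coefficient, where $d_x$ and $d_y$ are deviations of x and y from assumed means-

$$r = \frac{n\sum d_x d_y - \sum d_x \sum d_y}{\sqrt{\left[n\sum d_x^2 - \left(\sum d_x\right)^2\right]\left[n\sum d_y^2 - \left(\sum d_y\right)^2\right]}}$$

4. Spearman's rank correlation, where d is the difference of ranks between paired items and n is the number of pairs-

$$\rho = 1 - \frac{6\sum d^2}{n(n^2 - 1)}$$

5. The variable which is used for prediction is called the independent variable. It is also known as the predictor or regressor.

6. The regression line is the best-fit line which expresses the average relation between the variables.

7. Regression line of y on x-

$$y - \bar{y} = r\,\frac{\sigma_y}{\sigma_x}\,(x - \bar{x})$$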
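As a small illustration of the rank-correlation takeaway, the sketch below applies the untied-rank formula, one minus six times the sum of squared rank differences divided by n(n² - 1), to the ten rank pairs (persons A to J) used in this unit's Spearman example. The function name `spearman_rho` is an assumption for this sketch, and the formula is valid only when there are no tied ranks.

```python
def spearman_rho(rank1, rank2):
    """Spearman's coefficient for untied ranks: 1 - 6*sum(d^2) / (n*(n^2 - 1))."""
    n = len(rank1)
    sum_d2 = sum((p - q) ** 2 for p, q in zip(rank1, rank2))
    return 1 - 6 * sum_d2 / (n * (n * n - 1))


# Ranks of persons A to J in test-1 and test-2.
test1 = [9, 10, 6, 5, 7, 2, 4, 8, 1, 3]
test2 = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

rho = spearman_rho(test1, test2)
print(round(rho, 3))
```

A negative value here indicates that persons ranked high in one test tend to be ranked low in the other.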



Unit - 5

Statistics

5.1 Fitting of curve- Least squares principle , fitting of straight line , fitting of second degree parabola, fitting of curves of the form y=  , y = a

, y = a , y =

, y =

Method of least square-

Suppose

y = a + bx ………. (1)

Is the straight line has to be fitted for the data points given-

Let  be the theoretical value for

be the theoretical value for

Now-

For the minimum value of S -

Or

Now

Or

On solving equation (1) and (2), we get-

These two equation are known as the normal equations.

Now on solving these two equations we get the values of a and b.

Example: Find the straight line that best fits of the following data by using method of least square.

X | 1 | 2 | 3 | 4 | 5 |

y | 14 | 27 | 40 | 55 | 68 |

Sol.

Suppose the straight line

y = a + bx…….. (1)

Fits the best-

Then-

x | y | Xy |  |

1 | 14 | 14 | 1 |

2 | 27 | 54 | 4 |

3 | 40 | 120 | 9 |

4 | 55 | 220 | 16 |

5 | 68 | 340 | 25 |

Sum = 15 | 204 | 748 | 55 |

Normal equations are-

Put the values from the table, we get two normal equations-

On solving the above equations, we get-

So that the best fit line will be- (on putting the values of a and b in equation (1))

Example: Find the best values of a and b so that y = a + bx fits the data given in the table

x | 0 | 1 | 2 | 3 | 4 |

y | 1.0 | 2.9 | 4.8 | 6.7 | 8.6 |

Solution.

y = a + bx

x | y | Xy |  |

0 | 1.0 | 0 | 0 |

1 | 2.9 | 2.0 | 1 |

2 | 4.8 | 9.6 | 4 |

3 | 6.7 | 20.1 | 9 |

4 | 8.6 | 13.4 | 16 |

|  |  |  |

Normal equations,  y= na+ b

y= na+ b x (2)

x (2)

On putting the values of

On solving (4) and (5) we get,

On substituting the values of a and b in (1) we get

To fit the parabola

The normal equations are

On solving three normal equations we get the values of a,b and c.

Note- Change of scale-

We change the scale if the data is large and given in equal interval.

As-

Example: Fit a second degree parabola to the following data by least squares method.

| 1929 | 1930 | 1931 | 1932 | 1933 | 1934 | 1935 | 1936 | 1937 |

| 352 | 356 | 357 | 358 | 360 | 361 | 361 | 360 | 359 |

Solution: Taking x0 = 1933, y0 = 357

Taking u = x – x0, v = y – y0

u = x – 1933, v = y – 357

The equation y = a+bx+cx2 is transformed to v = A + Bu + Cu2 …(2)

| u = x – 1933 |  |  |  |  | u2v | u3 | u4 |

1929 | -4 | 352 | -5 | 20 | 16 | -80 | -64 | 256 |

1930 | -3 | 360 | -1 | 3 | 9 | -9 | -27 | 81 |

1931 | -2 | 357 | 0 | 0 | 4 | 0 | -8 | 16 |

1932 | -1 | 358 | 1 | -1 | 1 | 1 | -1 | 1 |

1933 | 0 | 360 | 3 | 0 | 0 | 0 | 0 | 0 |

1934 | 1 | 361 | 4 | 4 | 1 | 4 | 1 | 1 |

1935 | 2 | 361 | 4 | 8 | 4 | 16 | 8 | 16 |

1936 | 3 | 360 | 3 | 9 | 9 | 27 | 27 | 81 |

1937 | 4 | 359 | 2 | 8 | 16 | 32 | 64 | 256 |

Total |  |

|  |  |  |  |  |  |

Normal equations are

On solving these equations, we get A = 694/231, B = 17/20, C = - 247/924

V = 694/231 + 17/20 u – 247/924 u2V = 694/231 + 17/20 u – 247/924 u2

y – 357 = 694/231 + 17/20 (x – 1933) – 247/924 (x – 1933)2

= 694/231 + 17x/20 - 32861/20 – 247x2/924 (-3866x) – 247/924 + (1933)2

y = 694/231 – 32861/20 – 247/924 (1933)2 + 17x/20 + (247 3866)x/924 - 247 x2/924

y = 3 – 1643.05 – 998823.36 + 357 + 0.85 x + 1033.44 x – 0.267 x2

y = - 1000106.41 + 1034.29x – 0.267 x2

Example: Find the least squares approximation of second degree for the discrete data

x | 2 | -1 | 0 | 1 | 2 |

y | 15 | 1 | 1 | 3 | 19 |

Solution. Let the equation of second degree polynomial be

x | y | Xy |  |  |  |  |

-2 | 15 | -30 | 4 | 60 | -8 | 16 |

-1 | 1 | -1 | 1 | 1 | -1 | 1 |

0 | 1 | 0 | 0 | 0 | 0 | 0 |

1 | 3 | 3 | 1 | 3 | 1 | 1 |

2 | 19 | 38 | 4 | 76 | 8 | 16 |

|  |  |  |  |  |  |

Normal equations are

On putting the values of  x,

x,  y,

y, xy,

xy,  have

have

On solving (5),(6),(7), we get,

The required polynomial of second degree is

Example: Fit a second degree parabola to the following data.

X = 1.0 | 1.5 | 2.0 | 2.5 | 3.0 | 3.5 | 4.0 |

Y = 1.1 | 1.3 | 1.6 | 2.0 | 2.7 | 3.4 | 4.1 |

Solution

We shift the origin to (2.5, 0) antique 0.5 as the new unit. This amounts to changing the variable x to X, by the relation X = 2x – 5.

Let the parabola of fit be y = a + bX The values of

The values of  X etc. Are calculated as below:

X etc. Are calculated as below:

x | X | y | Xy |  |  |  |  |

1.0 | -3 | 1.1 | -3.3 | 9 | 9.9 | -27 | 81 |

1.5 | -2 | 1.3 | -2.6 | 4 | 5.2 | -5 | 16 |

2.0 | -1 | 1.6 | -1.6 | 1 | 1.6 | -1 | 1 |

2.5 | 0 | 2.0 | 0.0 | 0 | 0.0 | 0 | 0 |

3.0 | 1 | 2.7 | 2.7 | 1 | 2.7 | 1 | 1 |

3.5 | 2 | 3.4 | 6.8 | 4 | 13.6 | 8 | 16 |

4.0 | 3 | 4.1 | 12.3 | 9 | 36.9 | 27 | 81 |

Total | 0 | 16.2 | 14.3 | 28 | 69.9 | 0 | 196 |

The normal equations are

7a + 28c =16.2; 28b =14.3;. 28a +196c=69.9

Solving these as simultaneous equations we get

Replacing X bye 2x – 5 in the above equation we get

Which simplifies to y =

This is the required parabola of the best fit.

Example: Fit the curve  by using the method of least square.

by using the method of least square.

X | 1 | 2 | 3 | 4 | 5 | 6 |

Y | 7.209 | 5.265 | 3.846 | 2.809 | 2.052 | 1.499 |

Sol.

Here-

Now put-

Then we get-

x | Y |  | XY |  |

1 | 7.209 | 1.97533 | 1.97533 | 1 |

2 | 5.265 | 1.66108 | 3.32216 | 4 |

3 | 3.846 | 1.34703 | 4.04109 | 9 |

4 | 2.809 | 1.03283 | 4.13132 | 16 |

5 | 2.052 | 0.71881 | 3.59405 | 25 |

6 | 1.499 | 0.40480 | 2.4288 | 36 |

Sum = 21 |

| 7.13988 | 19.49275 | 91 |

Normal equations are-

Putting the values form the table, we get-

7.13988 = 6c + 21b

19.49275 = 21c + 91b

On solving, we get-

b = -0.3141 and c = 2.28933

c =

Now put these values in equations (1), we get-

Example: Estimate the chlorine residual in a swimming pool 5 hours after it has been treated with chemicals by fitting an exponential curve of the form

of the data given below-

of the data given below-

Hours(X) | 2 | 4 | 6 | 8 | 10 | 12 |

Chlorine residuals (Y) | 1.8 | 1.5 | 1.4 | 1.1 | 1.1 | 0.9 |

Sol.

Taking log on the curve which is non-linear,

We get-

Put

Then-

Which is the linear equation in X,

Its nomal equations are-

X Y Y* = ln Y X2 XY*

2 1.8 0.5878 4 0.1756

4 1.5 0.4055 16 1.622

6 1.4 0.3365 36 2.019

8 1.1 0.0953 64 0.7264

10 1.1 0.0953 100 0.953

12 0.9 -0.10536 144 -1.26432

42 1.415 364 5.26752

Here N = 6,

Thus the normal equations are-

On solving, we get

Or

A = 2.013 and B = 0.936

Hence the required least square exponential curve-

Prediction-

Chlorine content after 5 hours-

Key takeaways-

When two variables are related in such a way that change in the value of one variable affects the value of the other variable, then these two variables are said to be correlated and there is correlation between two variables.

Example- Height and weight of the persons of a group.

The correlation is said to be perfect correlation if two variables vary in such a way that their ratio is constant always.

Scatter diagram-

Karl Pearson’s coefficient of correlation-

Here-  and

and

Note-

1. Correlation coefficient always lies between -1 and +1.

2. Correlation coefficient is independent of change of origin and scale.

3. If the two variables are independent then correlation coefficient between them is zero.

Perfect Correlation: If two variables vary in such a way that their ratio is always constant, then the correlation is said to be perfect.

Correlation coefficient | Type of correlation |

+1 | Perfect positive correlation |

-1 | Perfect negative correlation |

0.25 | Weak positive correlation |

0.75 | Strong positive correlation |

-0.25 | Weak negative correlation |

-0.75 | Strong negative correlation |

0 | No correlation |

Example: Find the correlation coefficient between Age and weight of the following data-

Age | 30 | 44 | 45 | 43 | 34 | 44 |

Weight | 56 | 55 | 60 | 64 | 62 | 63 |

Sol.

x | y |  |  |  |  | (   |

30 | 56 | -10 | 100 | -4 | 16 | 40 |

44 | 55 | 4 | 16 | -5 | 25 | -20 |

45 | 60 | 5 | 25 | 0 | 0 | 0 |

43 | 64 | 3 | 9 | 4 | 16 | 12 |

34 | 62 | -6 | 36 | 2 | 4 | -12 |

44 | 63 | 4 | 16 | 3 | 9 | 12 |

Sum= 240 |

360 |

0 |

202 |

0 |

70

|

32 |

Karl Pearson’s coefficient of correlation-

Here the correlation coefficient is 0.27.which is the positive correlation (weak positive correlation), this indicates that the as age increases, the weight also increase.

Example:

Ten students got the following percentage of marks in Economics and Statistics

Calculate the  of correlation.

of correlation.

Roll No. |  |  |  |  |  |  |  |  |  |  |

Marks in Economics |  |  |  |  |  |  |  |  |  |  |

Marks in  |  |  |  |  |  |  |  |  |  |  |

Solution:

Let the marks of two subjects be denoted by  and

and  respectively.

respectively.

Then the mean for  marks

marks  and the mean ofy marks

and the mean ofy marks

and

and are deviations ofx’s and

are deviations ofx’s and  ’s from their respective means, then the data may be arranged in the following form:

’s from their respective means, then the data may be arranged in the following form:

x | y | X = x - 65 | Y = y - 66 | X2 | Y2 | X.Y |

78 36 98 25 75 82 90 62 65 39 650 | 84 51 91 60 68 62 86 58 53 47 660 | 13 -29 33 -40 10 17 25 -3 0 -26 0 | 18 -15 25 -6 2 -4 20 -8 -13 -19 0 | 169 841 1089 1600 100 289 625 9 0 676 5398

| 324 225 625 36 4 16 400 64 169 361 2224 | 234 435 825 240 20 -68 500 24 0 494 2704

|

|

|

|

|  |  |  |

Short-cut method to calculate correlation coefficient-

Here,

Example: Find the correlation coefficient between the values X and Y of the dataset given below by using short-cut method-

X | 10 | 20 | 30 | 40 | 50 |

Y | 90 | 85 | 80 | 60 | 45 |

Sol.

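Taking the assumed means A = 30 and B = 70:

X | Y | $d_x = X - 30$ | $d_x^2$ | $d_y = Y - 70$ | $d_y^2$ | $d_x d_y$ |

10 | 90 | -20 | 400 | 20 | 400 | -400 |

20 | 85 | -10 | 100 | 15 | 225 | -150 |

30 | 80 | 0 | 0 | 10 | 100 | 0 |

40 | 60 | 10 | 100 | -10 | 100 | -100 |

50 | 45 | 20 | 400 | -25 | 625 | -500 |

Sum = 150 | 360 | 0 | 1000 | 10 | 1450 | -1150 |

By the short-cut method, with n = 5-

$$r = \frac{5(-1150) - (0)(10)}{\sqrt{\left[5(1000) - 0^2\right]\left[5(1450) - 10^2\right]}} = \frac{-5750}{\sqrt{5000 \times 7150}} \approx -0.96$$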
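The short-cut method for the X, Y data above can be sketched in code as follows. The assumed means 30 and 70 are inferred from the deviation columns of the table, and the name `shortcut_r` is illustrative only.

```python
import math

def shortcut_r(xs, ys, a, b):
    """Correlation coefficient from deviations about assumed means a and b."""
    n = len(xs)
    dx = [x - a for x in xs]
    dy = [y - b for y in ys]
    s_dx, s_dy = sum(dx), sum(dy)
    s_dx2 = sum(d * d for d in dx)
    s_dy2 = sum(d * d for d in dy)
    s_dxdy = sum(p * q for p, q in zip(dx, dy))
    num = n * s_dxdy - s_dx * s_dy
    den = math.sqrt((n * s_dx2 - s_dx ** 2) * (n * s_dy2 - s_dy ** 2))
    return num / den

xs = [10, 20, 30, 40, 50]
ys = [90, 85, 80, 60, 45]
print(round(shortcut_r(xs, ys, 30, 70), 2))  # -0.96
```

Because the coefficient is independent of the choice of origin, any other assumed means give the same value; a convenient choice only keeps the arithmetic small.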

Spearman’s rank correlation-

Let $x_1, x_2, \ldots, x_n$ and $y_1, y_2, \ldots, y_n$ be the ranks of n individuals corresponding to two characteristics X and Y.

Assuming no two individuals are equal in either classification, each variable takes the values 1, 2, 3, …, n and hence their arithmetic means are each

$$\bar{x} = \bar{y} = \frac{1 + 2 + \cdots + n}{n} = \frac{n+1}{2}$$

Then

$$d = X - Y = \left(X - \frac{n+1}{2}\right) - \left(Y - \frac{n+1}{2}\right) = x - y$$

where x and y are deviations from the mean.

Clearly, $\sum x = 0$ and $\sum y = 0$, and

$$\sum x^2 = \sum y^2 = \frac{n(n+1)(2n+1)}{6} - n\left(\frac{n+1}{2}\right)^2 = \frac{n(n^2-1)}{12}$$

Since $\sum d^2 = \sum x^2 + \sum y^2 - 2\sum xy$, we get $\sum xy = \frac{n(n^2-1)}{12} - \frac{1}{2}\sum d^2$.

SPEARMAN’S RANK CORRELATION COEFFICIENT:

$$\rho = \frac{\sum xy}{\sqrt{\sum x^2 \sum y^2}} = 1 - \frac{6\sum d^2}{n(n^2-1)}$$

where $\rho$ denotes the rank coefficient of correlation and d refers to the difference of ranks between paired items in the two series.

Example: Compute the Spearman’s rank correlation coefficient of the dataset given below-

Person | A | B | C | D | E | F | G | H | I | J |

Rank in test-1 | 9 | 10 | 6 | 5 | 7 | 2 | 4 | 8 | 1 | 3 |

Rank in test-2 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

Sol.

Person | Rank in test-1 ($R_1$) | Rank in test-2 ($R_2$) | $d = R_1 - R_2$ | $d^2$ |

A | 9 | 1 | 8 | 64 |

B | 10 | 2 | 8 | 64 |

C | 6 | 3 | 3 | 9 |

D | 5 | 4 | 1 | 1 |

E | 7 | 5 | 2 | 4 |

F | 2 | 6 | -4 | 16 |

G | 4 | 7 | -3 | 9 |

H | 8 | 8 | 0 | 0 |

I | 1 | 9 | -8 | 64 |

J | 3 | 10 | -7 | 49 |

Sum | | | 0 | 280 |

$$\rho = 1 - \frac{6\sum d^2}{n(n^2-1)} = 1 - \frac{6 \times 280}{10 \times 99} \approx -0.697$$
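The rank-difference calculation for the ten persons above can be reproduced with a few lines of Python. This sketch assumes the ranks are already assigned and contain no ties; `spearman_rho` is an illustrative name.

```python
def spearman_rho(rank1, rank2):
    """Spearman's rank correlation from two lists of untied ranks."""
    n = len(rank1)
    # Sum of squared rank differences, the last column of the table
    d2 = sum((p - q) ** 2 for p, q in zip(rank1, rank2))
    return 1 - 6 * d2 / (n * (n * n - 1))

test1 = [9, 10, 6, 5, 7, 2, 4, 8, 1, 3]
test2 = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
print(round(spearman_rho(test1, test2), 3))  # -0.697
```

The negative value indicates that persons ranked high in test-1 tend to be ranked low in test-2.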

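Example: If X and Y are uncorrelated random variables, find the coefficient of correlation between X + Y and X - Y.

Solution.

Let $U = X + Y$ and $V = X - Y$.

Then $\bar{U} = \bar{X} + \bar{Y}$ and $\bar{V} = \bar{X} - \bar{Y}$.

Now

$$u = U - \bar{U} = (X - \bar{X}) + (Y - \bar{Y}) = x + y$$

Similarly

$$v = V - \bar{V} = x - y$$

Now

$$\sum uv = \sum (x + y)(x - y) = \sum x^2 - \sum y^2 = n\sigma_x^2 - n\sigma_y^2$$

Also

$$\sum u^2 = \sum (x + y)^2 = \sum x^2 + \sum y^2 + 2\sum xy = n\sigma_x^2 + n\sigma_y^2$$

(As X and Y are not correlated, we have $\sum xy = 0$.)

Similarly

$$\sum v^2 = \sum (x - y)^2 = n\sigma_x^2 + n\sigma_y^2$$

Hence

$$r = \frac{\sum uv}{\sqrt{\sum u^2 \sum v^2}} = \frac{\sigma_x^2 - \sigma_y^2}{\sigma_x^2 + \sigma_y^2}$$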

Regression-

If the scatter diagram indicates some relationship between two variables x and y, then the dots of the scatter diagram will be concentrated round a curve. This curve is called the curve of regression. Regression analysis is the method used for estimating the unknown values of one variable corresponding to the known value of another variable.

In other words, regression is the measure of the average relationship between the independent and dependent variables.

Regression can be used for two or more variables.

There are two types of variables in regression analysis.

1. Independent variable

2. Dependent variable

The variable which is used for prediction is called the independent variable. It is also known as the predictor or regressor.

The variable whose value is predicted from the independent variable is called the dependent variable, also known as the regressed or explained variable.

When the curve of regression is a straight line, it is known as the line of regression and the regression is called linear regression.

Note- The regression line is the best-fit line which expresses the average relation between the variables.

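LINE OF REGRESSION

When the curve is a straight line, it is called a line of regression. A line of regression is the straight line which gives the best fit, in the least squares sense, to the given data.

Equation of the line of regression-

Let

y = a + bx ………….. (1)

be the equation of the line of regression of y on x.

Let $\hat{y}_i = a + bx_i$ be the estimated value of $y_i$ for the given value of $x_i$.

According to the principle of least squares, we have to determine ‘a’ and ‘b’ so that the sum of squares of the deviations of the observed values of y from the estimated values of y is minimum.

That means-

$$U = \sum (y - \hat{y})^2$$

Or

$$U = \sum (y - a - bx)^2 \quad \ldots (2)$$

is minimum.

From the concept of maxima and minima, we partially differentiate U with respect to ‘a’ and ‘b’ and equate to zero:

$$\frac{\partial U}{\partial a} = -2\sum (y - a - bx) = 0, \qquad \frac{\partial U}{\partial b} = -2\sum x(y - a - bx) = 0$$

Which means

$$\sum y = na + b\sum x \quad \ldots (3)$$

And

$$\sum xy = a\sum x + b\sum x^2 \quad \ldots (4)$$

These equations (3) and (4) are known as the normal equations for the straight line.

Now divide equation (3) by n, we get-

$$\bar{y} = a + b\bar{x} \quad \ldots (5)$$

This indicates that the regression line of y on x passes through the point $(\bar{x}, \bar{y})$.

We know that-

$$\mathrm{cov}(x, y) = \frac{1}{n}\sum xy - \bar{x}\bar{y} \quad\Rightarrow\quad \frac{1}{n}\sum xy = \mathrm{cov}(x, y) + \bar{x}\bar{y} \quad \ldots (6)$$

The variance of variable x can be expressed as-

$$\sigma_x^2 = \frac{1}{n}\sum x^2 - \bar{x}^2 \quad\Rightarrow\quad \frac{1}{n}\sum x^2 = \sigma_x^2 + \bar{x}^2 \quad \ldots (7)$$

Dividing equation (4) by n, we get-

$$\frac{1}{n}\sum xy = a\bar{x} + b\,\frac{1}{n}\sum x^2 \quad \ldots (8)$$

From the equations (6), (7) and (8)-

$$\mathrm{cov}(x, y) + \bar{x}\bar{y} = a\bar{x} + b(\sigma_x^2 + \bar{x}^2) \quad \ldots (9)$$

Multiply (5) by $\bar{x}$, we get-

$$\bar{x}\bar{y} = a\bar{x} + b\bar{x}^2 \quad \ldots (10)$$

Subtracting equation (10) from equation (9), we get-

$$\mathrm{cov}(x, y) = b\sigma_x^2 \quad\Rightarrow\quad b = \frac{\mathrm{cov}(x, y)}{\sigma_x^2} = r\frac{\sigma_y}{\sigma_x}$$

Since ‘b’ is the slope of the line of regression of y on x and the line passes through the point $(\bar{x}, \bar{y})$, the equation of the line of regression of y on x is-

$$y - \bar{y} = r\frac{\sigma_y}{\sigma_x}(x - \bar{x})$$

This is known as the regression line of y on x.

Note-

1. $b_{yx} = r\dfrac{\sigma_y}{\sigma_x}$ and $b_{xy} = r\dfrac{\sigma_x}{\sigma_y}$ are the coefficients of regression.

2. The product of the two regression coefficients equals the square of the correlation coefficient: $b_{yx}\, b_{xy} = r^2$.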

Example: Two variables X and Y are given in the dataset below, find the two lines of regression.

x | 65 | 66 | 67 | 67 | 68 | 69 | 70 | 71 |

y | 66 | 68 | 65 | 69 | 74 | 73 | 72 | 70 |

Sol.

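The two lines of regression can be expressed as-

$$y - \bar{y} = r\frac{\sigma_y}{\sigma_x}(x - \bar{x})$$

And

$$x - \bar{x} = r\frac{\sigma_x}{\sigma_y}(y - \bar{y})$$

x | y | $x^2$ | $y^2$ | xy |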

65 | 66 | 4225 | 4356 | 4290 |

66 | 68 | 4356 | 4624 | 4488 |

67 | 65 | 4489 | 4225 | 4355 |

67 | 69 | 4489 | 4761 | 4623 |

68 | 74 | 4624 | 5476 | 5032 |

69 | 73 | 4761 | 5329 | 5037 |

70 | 72 | 4900 | 5184 | 5040 |

71 | 70 | 5041 | 4900 | 4970 |

Sum = 543 | 557 | 36885 | 38855 | 37835 |

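Now-

$$\bar{x} = \frac{\sum x}{n} = \frac{543}{8} = 67.875$$

And

$$\bar{y} = \frac{\sum y}{n} = \frac{557}{8} = 69.625$$

Standard deviation of x-

$$\sigma_x = \sqrt{\frac{\sum x^2}{n} - \bar{x}^2} = \sqrt{\frac{36885}{8} - (67.875)^2} = \sqrt{3.6094} \approx 1.90$$

Similarly-

$$\sigma_y = \sqrt{\frac{\sum y^2}{n} - \bar{y}^2} = \sqrt{\frac{38855}{8} - (69.625)^2} = \sqrt{9.2344} \approx 3.04$$

Correlation coefficient-

$$r = \frac{\frac{1}{n}\sum xy - \bar{x}\bar{y}}{\sigma_x \sigma_y} = \frac{\frac{37835}{8} - 67.875 \times 69.625}{1.90 \times 3.04} = \frac{3.5781}{5.7733} \approx 0.62$$

Putting these values in the regression line equations, we get

Regression line y on x-

$$y - 69.625 = 0.9913\,(x - 67.875), \quad \text{i.e.} \quad y = 0.9913x + 2.338$$

Regression line x on y-

$$x - 67.875 = 0.3875\,(y - 69.625), \quad \text{i.e.} \quad x = 0.3875y + 40.897$$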
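The two regression lines for this dataset can be recomputed from the column sums with a short script. This is a sketch under the same definitions as the worked solution (variances and covariance divided by n); `regression_lines` is an illustrative helper name.

```python
def regression_lines(xs, ys):
    """Return (x_bar, y_bar, b_yx, b_xy) for the two lines of regression."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    var_x = sum(x * x for x in xs) / n - x_bar ** 2
    var_y = sum(y * y for y in ys) / n - y_bar ** 2
    cov = sum(x * y for x, y in zip(xs, ys)) / n - x_bar * y_bar
    b_yx = cov / var_x  # slope of y on x, equal to r * sigma_y / sigma_x
    b_xy = cov / var_y  # slope of x on y, equal to r * sigma_x / sigma_y
    return x_bar, y_bar, b_yx, b_xy

xs = [65, 66, 67, 67, 68, 69, 70, 71]
ys = [66, 68, 65, 69, 74, 73, 72, 70]
x_bar, y_bar, b_yx, b_xy = regression_lines(xs, ys)
print(f"y - {y_bar} = {b_yx:.4f} (x - {x_bar})")
print(f"x - {x_bar} = {b_xy:.4f} (y - {y_bar})")
```

The product `b_yx * b_xy` equals the square of the correlation coefficient, which gives a quick consistency check on hand calculations.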

The regression line can also be found by the following method-

Example: Find the regression line of y on x for the given dataset.

X | 4.3 | 4.5 | 5.9 | 5.6 | 6.1 | 5.2 | 3.8 | 2.1 |

Y | 12.6 | 12.1 | 11.6 | 11.8 | 11.4 | 11.8 | 13.2 | 14.1 |

Sol.

Let y = a + bx be the line of regression of y on x, where ‘a’ and ‘b’ are given by the normal equations-

$$\sum y = na + b\sum x, \qquad \sum xy = a\sum x + b\sum x^2$$

We will make the following table-

x | y | xy | $x^2$ |

4.3 | 12.6 | 54.18 | 18.49 |

4.5 | 12.1 | 54.45 | 20.25 |

5.9 | 11.6 | 68.44 | 34.81 |

5.6 | 11.8 | 66.08 | 31.36 |

6.1 | 11.4 | 69.54 | 37.21 |

5.2 | 11.8 | 61.36 | 27.04 |

3.8 | 13.2 | 50.16 | 14.44 |

2.1 | 14.1 | 29.61 | 4.41 |

Sum = 37.5 | 98.6 | 453.82 | 188.01 |

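Using the above equations with n = 8, we get-

$$98.6 = 8a + 37.5b$$

$$453.82 = 37.5a + 188.01b$$

On solving these two equations, we get-

$$b = \frac{8(453.82) - (37.5)(98.6)}{8(188.01) - (37.5)^2} = \frac{-66.94}{97.83} \approx -0.684, \qquad a = \frac{98.6 - 37.5b}{8} \approx 15.53$$

So the regression line is -

y = 15.53 - 0.684x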

Note - The standard error of prediction can be found by the formula given below-

$$S_{yx} = \sqrt{\frac{\sum (y - \hat{y})^2}{n}} = \sigma_y\sqrt{1 - r^2}$$

Difference between regression and correlation-

1. Correlation measures the strength of the linear relationship between two variables, while regression describes the average relationship between two or more variables.

2. Correlation has limited applications because it only gives the strength of the linear relationship, while regression is used to predict the value of the dependent variable for given values of the independent variables.

3. Correlation does not distinguish between dependent and independent variables, while regression considers one dependent variable and one or more independent variables.

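Key takeaways-

1. Karl Pearson’s coefficient of correlation- $r = \dfrac{\sum (x-\bar{x})(y-\bar{y})}{\sqrt{\sum (x-\bar{x})^2 \sum (y-\bar{y})^2}}$

2. Perfect Correlation: If two variables vary in such a way that their ratio is always constant, then the correlation is said to be perfect.

3. Short-cut method to calculate correlation coefficient- $r = \dfrac{n\sum d_x d_y - \sum d_x \sum d_y}{\sqrt{\left[n\sum d_x^2 - (\sum d_x)^2\right]\left[n\sum d_y^2 - (\sum d_y)^2\right]}}$

4. Spearman’s rank correlation- $\rho = 1 - \dfrac{6\sum d^2}{n(n^2-1)}$

5. The variable which is used for prediction is called the independent variable. It is also known as the predictor or regressor.

6. The regression line is the best-fit line which expresses the average relation between the variables.

7. Regression line of y on x- $y - \bar{y} = r\dfrac{\sigma_y}{\sigma_x}(x - \bar{x})$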



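Method of least squares-

Suppose

y = a + bx ………. (1)

is the straight line to be fitted to the given data points $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$.

Let $\hat{y}_i = a + bx_i$ be the theoretical value for $x_i$, so that the error at that point is $e_i = y_i - \hat{y}_i$.

Now-

$$S = \sum e_i^2 = \sum (y_i - a - bx_i)^2$$

For the minimum value of S -

$$\frac{\partial S}{\partial a} = -2\sum (y - a - bx) = 0$$

Or

$$\sum y = na + b\sum x \quad \ldots (2)$$

Now

$$\frac{\partial S}{\partial b} = -2\sum x(y - a - bx) = 0$$

Or

$$\sum xy = a\sum x + b\sum x^2 \quad \ldots (3)$$

These two equations, (2) and (3), are known as the normal equations.

On solving these two equations we get the values of a and b.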

Example: Find the straight line that best fits the following data by using the method of least squares.

X | 1 | 2 | 3 | 4 | 5 |

y | 14 | 27 | 40 | 55 | 68 |

Sol.

Suppose the straight line

y = a + bx…….. (1)

fits the data best.

Then-

x | y | xy | $x^2$ |

1 | 14 | 14 | 1 |

2 | 27 | 54 | 4 |

3 | 40 | 120 | 9 |

4 | 55 | 220 | 16 |

5 | 68 | 340 | 25 |

Sum = 15 | 204 | 748 | 55 |

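Normal equations are-

$$\sum y = na + b\sum x, \qquad \sum xy = a\sum x + b\sum x^2$$

Putting the values from the table with n = 5, we get the two normal equations-

$$204 = 5a + 15b, \qquad 748 = 15a + 55b$$

On solving the above equations, we get-

$$a = 0, \qquad b = 13.6$$

So the best-fit line (on putting the values of a and b in equation (1)) is-

$$y = 13.6x$$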
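The normal equations for a straight line have a closed-form solution, which the following sketch applies to the data of this example. The function name `fit_line` is illustrative and is not part of the notes.

```python
def fit_line(xs, ys):
    """Least squares fit of y = a + b*x by solving the two normal equations."""
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    # Eliminate a from: sy = n*a + b*sx  and  sxy = a*sx + b*sxx
    b = (n * sxy - sx * sy) / (n * sxx - sx ** 2)
    a = (sy - b * sx) / n
    return a, b

a, b = fit_line([1, 2, 3, 4, 5], [14, 27, 40, 55, 68])
print(round(a, 6), round(b, 6))
```

The denominator `n * sxx - sx ** 2` is zero only when all x values are equal, in which case no unique line exists.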

Example: Find the best values of a and b so that y = a + bx fits the data given in the table

x | 0 | 1 | 2 | 3 | 4 |

y | 1.0 | 2.9 | 4.8 | 6.7 | 8.6 |

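Solution.

y = a + bx …….. (1)

x | y | xy | $x^2$ |

0 | 1.0 | 0 | 0 |

1 | 2.9 | 2.9 | 1 |

2 | 4.8 | 9.6 | 4 |

3 | 6.7 | 20.1 | 9 |

4 | 8.6 | 34.4 | 16 |

Sum = 10 | 24.0 | 67.0 | 30 |

Normal equations,

$$\sum y = na + b\sum x \quad \ldots (2)$$

$$\sum xy = a\sum x + b\sum x^2 \quad \ldots (3)$$

On putting the values of $\sum x$, $\sum y$, $\sum xy$ and $\sum x^2$ in (2) and (3), we have

$$24 = 5a + 10b \quad \ldots (4)$$

$$67 = 10a + 30b \quad \ldots (5)$$

On solving (4) and (5) we get,

$$a = 1, \qquad b = 1.9$$

On substituting the values of a and b in (1) we get

$$y = 1 + 1.9x$$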

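To fit the parabola

$$y = a + bx + cx^2$$

The normal equations are

$$\sum y = na + b\sum x + c\sum x^2$$

$$\sum xy = a\sum x + b\sum x^2 + c\sum x^3$$

$$\sum x^2 y = a\sum x^2 + b\sum x^3 + c\sum x^4$$

On solving the three normal equations we get the values of a, b and c.

Note- Change of scale-

We change the origin and scale if the data values are large and given at equal intervals.

As-

$$u = \frac{x - x_0}{h}, \qquad v = y - y_0$$

where $x_0$ and $y_0$ are conveniently chosen origins and h is the width of the interval.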

Example: Fit a second degree parabola to the following data by least squares method.

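x | 1929 | 1930 | 1931 | 1932 | 1933 | 1934 | 1935 | 1936 | 1937 |

y | 352 | 356 | 357 | 358 | 360 | 361 | 361 | 360 | 359 |

Solution: Taking $x_0 = 1933$, $y_0 = 357$

Taking $u = x - x_0$, $v = y - y_0$

u = x - 1933, v = y - 357

The equation $y = a + bx + cx^2$ is transformed to $v = A + Bu + Cu^2$ …(2)

x | u = x - 1933 | y | v = y - 357 | uv | $u^2$ | $u^2 v$ | $u^3$ | $u^4$ |

1929 | -4 | 352 | -5 | 20 | 16 | -80 | -64 | 256 |

1930 | -3 | 356 | -1 | 3 | 9 | -9 | -27 | 81 |

1931 | -2 | 357 | 0 | 0 | 4 | 0 | -8 | 16 |

1932 | -1 | 358 | 1 | -1 | 1 | 1 | -1 | 1 |

1933 | 0 | 360 | 3 | 0 | 0 | 0 | 0 | 0 |

1934 | 1 | 361 | 4 | 4 | 1 | 4 | 1 | 1 |

1935 | 2 | 361 | 4 | 8 | 4 | 16 | 8 | 16 |

1936 | 3 | 360 | 3 | 9 | 9 | 27 | 27 | 81 |

1937 | 4 | 359 | 2 | 8 | 16 | 32 | 64 | 256 |

Total | 0 | | 11 | 51 | 60 | -9 | 0 | 708 |

Normal equations are

$$\sum v = 9A + B\sum u + C\sum u^2 \quad\Rightarrow\quad 11 = 9A + 60C$$

$$\sum uv = A\sum u + B\sum u^2 + C\sum u^3 \quad\Rightarrow\quad 51 = 60B$$

$$\sum u^2 v = A\sum u^2 + B\sum u^3 + C\sum u^4 \quad\Rightarrow\quad -9 = 60A + 708C$$

On solving these equations, we get A = 694/231, B = 17/20, C = -247/924

$$v = \frac{694}{231} + \frac{17}{20}u - \frac{247}{924}u^2$$

$$y - 357 = \frac{694}{231} + \frac{17}{20}(x - 1933) - \frac{247}{924}(x - 1933)^2$$

$$= \frac{694}{231} + \frac{17x}{20} - \frac{32861}{20} - \frac{247}{924}x^2 + \frac{247 \times 3866}{924}x - \frac{247}{924}(1933)^2$$

$$y = 357 + \frac{694}{231} - \frac{32861}{20} - \frac{247}{924}(1933)^2 + \frac{17x}{20} + \frac{247 \times 3866}{924}x - \frac{247}{924}x^2$$

$$y = 3 - 1643.05 - 998823.36 + 357 + 0.85x + 1033.44x - 0.267x^2$$

$$y = -1000106.41 + 1034.29x - 0.267x^2$$

Because the coefficients in this last form are rounded and multiply very large values of x, the shifted form in u should be used for numerical evaluation.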

Example: Find the least squares approximation of second degree for the discrete data

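x | -2 | -1 | 0 | 1 | 2 |

y | 15 | 1 | 1 | 3 | 19 |

Solution. Let the equation of the second degree polynomial be

$$y = a + bx + cx^2 \quad \ldots (1)$$

x | y | xy | $x^2$ | $x^2 y$ | $x^3$ | $x^4$ |

-2 | 15 | -30 | 4 | 60 | -8 | 16 |

-1 | 1 | -1 | 1 | 1 | -1 | 1 |

0 | 1 | 0 | 0 | 0 | 0 | 0 |

1 | 3 | 3 | 1 | 3 | 1 | 1 |

2 | 19 | 38 | 4 | 76 | 8 | 16 |

Sum = 0 | 39 | 10 | 10 | 140 | 0 | 34 |

Normal equations are

$$\sum y = na + b\sum x + c\sum x^2 \quad \ldots (2)$$

$$\sum xy = a\sum x + b\sum x^2 + c\sum x^3 \quad \ldots (3)$$

$$\sum x^2 y = a\sum x^2 + b\sum x^3 + c\sum x^4 \quad \ldots (4)$$

On putting the values of $\sum x$, $\sum y$, $\sum xy$, $\sum x^2$, $\sum x^2 y$, $\sum x^3$ and $\sum x^4$ in (2), (3) and (4), we have

$$39 = 5a + 10c \quad \ldots (5)$$

$$10 = 10b \quad \ldots (6)$$

$$140 = 10a + 34c \quad \ldots (7)$$

On solving (5), (6), (7), we get,

$$a = -\frac{37}{35}, \qquad b = 1, \qquad c = \frac{31}{7}$$

The required polynomial of second degree is

$$y = -\frac{37}{35} + x + \frac{31}{7}x^2$$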
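The three normal equations for a parabola can be assembled and solved mechanically. The sketch below uses exact rational arithmetic from Python's standard `fractions` module and a small Gauss-Jordan elimination; the helper names `solve_linear` and `fit_parabola` are illustrative, not taken from the notes.

```python
from fractions import Fraction

def solve_linear(matrix, rhs):
    """Solve a small square linear system exactly by Gauss-Jordan elimination."""
    n = len(rhs)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(matrix, rhs)]
    for col in range(n):
        # Find a row with a non-zero pivot (raises StopIteration if singular)
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]

def fit_parabola(xs, ys):
    """Least squares fit of y = a + b*x + c*x^2 via the normal equations."""
    n = len(xs)
    def s(p):  # sum of x^p
        return sum(Fraction(x) ** p for x in xs)
    def t(p):  # sum of x^p * y
        return sum(Fraction(x) ** p * Fraction(y) for x, y in zip(xs, ys))
    matrix = [[n, s(1), s(2)],
              [s(1), s(2), s(3)],
              [s(2), s(3), s(4)]]
    return solve_linear(matrix, [t(0), t(1), t(2)])

a, b, c = fit_parabola([-2, -1, 0, 1, 2], [15, 1, 1, 3, 19])
print(a, b, c)  # -37/35 1 31/7
```

Exact fractions avoid the rounding drift that appears when the sums of powers become large, as in the example with calendar years.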

Example: Fit a second degree parabola to the following data.

X = 1.0 | 1.5 | 2.0 | 2.5 | 3.0 | 3.5 | 4.0 |

Y = 1.1 | 1.3 | 1.6 | 2.0 | 2.7 | 3.4 | 4.1 |

Solution

We shift the origin to (2.5, 0) and take 0.5 as the new unit. This amounts to changing the variable x to X, by the relation X = 2x - 5.

Let the parabola of fit be $y = a + bX + cX^2$. The values of $\sum X$ etc. are calculated as below:

x | X | y | Xy | $X^2$ | $X^2 y$ | $X^3$ | $X^4$ |

1.0 | -3 | 1.1 | -3.3 | 9 | 9.9 | -27 | 81 |

1.5 | -2 | 1.3 | -2.6 | 4 | 5.2 | -8 | 16 |

2.0 | -1 | 1.6 | -1.6 | 1 | 1.6 | -1 | 1 |

2.5 | 0 | 2.0 | 0.0 | 0 | 0.0 | 0 | 0 |

3.0 | 1 | 2.7 | 2.7 | 1 | 2.7 | 1 | 1 |

3.5 | 2 | 3.4 | 6.8 | 4 | 13.6 | 8 | 16 |

4.0 | 3 | 4.1 | 12.3 | 9 | 36.9 | 27 | 81 |

Total | 0 | 16.2 | 14.3 | 28 | 69.9 | 0 | 196 |

The normal equations are

7a + 28c = 16.2; 28b = 14.3; 28a + 196c = 69.9

Solving these as simultaneous equations we get

$$a = 2.07, \qquad b = 0.511, \qquad c = 0.061$$

so that $y = 2.07 + 0.511X + 0.061X^2$.

Replacing X by 2x - 5 in the above equation we get

$$y = 2.07 + 0.511(2x - 5) + 0.061(2x - 5)^2$$

Which simplifies to $y = 1.04 - 0.198x + 0.244x^2$

This is the required parabola of the best fit.

Example: Fit the curve $y = ae^{bx}$ by using the method of least squares.

x | 1 | 2 | 3 | 4 | 5 | 6 |

y | 7.209 | 5.265 | 3.846 | 2.809 | 2.052 | 1.499 |

Sol.

Here-

$$y = ae^{bx} \quad \ldots (1)$$

Taking natural logarithms of both sides, $\ln y = \ln a + bx$.

Now put-

$$Y = \ln y, \qquad c = \ln a$$

Then we get-

$$Y = c + bx$$

x | y | Y = ln y | xY | $x^2$ |

1 | 7.209 | 1.97533 | 1.97533 | 1 |

2 | 5.265 | 1.66108 | 3.32216 | 4 |

3 | 3.846 | 1.34703 | 4.04109 | 9 |

4 | 2.809 | 1.03283 | 4.13132 | 16 |

5 | 2.052 | 0.71881 | 3.59405 | 25 |

6 | 1.499 | 0.40480 | 2.4288 | 36 |

Sum = 21 | | 7.13988 | 19.49275 | 91 |

Normal equations are-

$$\sum Y = nc + b\sum x, \qquad \sum xY = c\sum x + b\sum x^2$$

Putting the values from the table, we get-

7.13988 = 6c + 21b

19.49275 = 21c + 91b

On solving, we get-

b = -0.3141 and c = 2.28933

Since c = ln a,

$$a = e^{c} = e^{2.28933} \approx 9.868$$

Now putting these values in equation (1), we get-

$$y = 9.868\,e^{-0.3141x}$$
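The log transformation used in this example reduces the exponential fit to a straight-line fit, which can be sketched as below. The code assumes the curve has the form y = a·e^(bx), as the values b and c = ln a in the solution suggest, and `fit_exponential` is an illustrative name.

```python
import math

def fit_exponential(xs, ys):
    """Fit y = a * exp(b*x) by least squares on ln y = ln a + b*x."""
    n = len(xs)
    logs = [math.log(y) for y in ys]  # Y = ln y; requires every y > 0
    sx, sl = sum(xs), sum(logs)
    sxl = sum(x * l for x, l in zip(xs, logs))
    sxx = sum(x * x for x in xs)
    b = (n * sxl - sx * sl) / (n * sxx - sx ** 2)
    c = (sl - b * sx) / n  # c = ln a
    return math.exp(c), b

xs = [1, 2, 3, 4, 5, 6]
ys = [7.209, 5.265, 3.846, 2.809, 2.052, 1.499]
a, b = fit_exponential(xs, ys)
print(round(a, 2), round(b, 3))
```

Note that this minimises the squared errors in ln y rather than in y itself, which is the standard simplification behind the transformed normal equations.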

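Example: Estimate the chlorine residual in a swimming pool 5 hours after it has been treated with chemicals by fitting an exponential curve of the form $Y = AB^X$ to the data given below-

Hours (X) | 2 | 4 | 6 | 8 | 10 | 12 |

Chlorine residuals (Y) | 1.8 | 1.5 | 1.4 | 1.1 | 1.1 | 0.9 |

Sol.

Taking logarithms of the curve, which is non-linear,

We get-

$$\ln Y = \ln A + X\ln B$$

Put

$$Y^* = \ln Y, \qquad a = \ln A, \qquad b = \ln B$$

Then-

$$Y^* = a + bX$$

Which is a linear equation in X,

Its normal equations are-

$$\sum Y^* = Na + b\sum X, \qquad \sum XY^* = a\sum X + b\sum X^2$$

X | Y | Y* = ln Y | $X^2$ | XY* |

2 | 1.8 | 0.5878 | 4 | 1.1756 |

4 | 1.5 | 0.4055 | 16 | 1.622 |

6 | 1.4 | 0.3365 | 36 | 2.019 |

8 | 1.1 | 0.0953 | 64 | 0.7624 |

10 | 1.1 | 0.0953 | 100 | 0.953 |

12 | 0.9 | -0.10536 | 144 | -1.26432 |

Sum = 42 | | 1.415 | 364 | 5.26752 |

Here N = 6,

Thus the normal equations are-

$$6a + 42b = 1.415, \qquad 42a + 364b = 5.26752$$

On solving, we get

$$a = 0.6996, \qquad b = -0.0662$$

Or

$A = e^{a} = 2.013$ and $B = e^{b} = 0.936$

Hence the required least squares exponential curve-

$$Y = 2.013\,(0.936)^X$$

Prediction-

Chlorine content after 5 hours-

$$Y = 2.013\,(0.936)^5 \approx 1.45$$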
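The chlorine estimate can be reproduced end to end with a short script. This sketch assumes the curve Y = A·B^X implied by the constants A = 2.013 and B = 0.936 in the solution; the names `fit_ab_curve` and `predict` are illustrative.

```python
import math

def fit_ab_curve(xs, ys):
    """Fit Y = A * B**X by least squares on ln Y = ln A + X * ln B."""
    n = len(xs)
    logs = [math.log(y) for y in ys]
    sx, sl = sum(xs), sum(logs)
    sxl = sum(x * l for x, l in zip(xs, logs))
    sxx = sum(x * x for x in xs)
    b = (n * sxl - sx * sl) / (n * sxx - sx ** 2)  # b = ln B
    a = (sl - b * sx) / n                          # a = ln A
    return math.exp(a), math.exp(b)

def predict(A, B, x):
    """Evaluate the fitted curve at x."""
    return A * B ** x

hours = [2, 4, 6, 8, 10, 12]
chlorine = [1.8, 1.5, 1.4, 1.1, 1.1, 0.9]
A, B = fit_ab_curve(hours, chlorine)
print(round(A, 3), round(B, 3))  # 2.013 0.936
print(round(predict(A, B, 5), 2))
```

Working with unrounded logarithms gives a prediction that differs slightly in the third decimal place from the value obtained with the rounded constants.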

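Key takeaways-

1. Normal equations for the straight line y = a + bx- $\sum y = na + b\sum x$ and $\sum xy = a\sum x + b\sum x^2$

2. Normal equations for the parabola $y = a + bx + cx^2$- $\sum y = na + b\sum x + c\sum x^2$, $\sum xy = a\sum x + b\sum x^2 + c\sum x^3$ and $\sum x^2 y = a\sum x^2 + b\sum x^3 + c\sum x^4$

3. Exponential curves are reduced to a straight-line fit by taking logarithms before applying the normal equations.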


When two variables are related in such a way that a change in the value of one variable affects the value of the other, the two variables are said to be correlated.

Example- Height and weight of the persons in a group.

The correlation is said to be perfect if the two variables vary in such a way that their ratio is always constant.

Scatter diagram-

A scatter diagram is obtained by plotting each pair of values (x, y) as a dot; the pattern of the dots indicates the type and strength of the correlation.

Karl Pearson’s coefficient of correlation-

$$r = \frac{\sum (x-\bar{x})(y-\bar{y})}{\sqrt{\sum (x-\bar{x})^2 \sum (y-\bar{y})^2}}$$

Here- $\bar{x} = \dfrac{\sum x}{n}$ and $\bar{y} = \dfrac{\sum y}{n}$

Note-

1. The correlation coefficient always lies between -1 and +1.

2. The correlation coefficient is independent of change of origin and scale.

3. If the two variables are independent then the correlation coefficient between them is zero (the converse need not hold).

Correlation coefficient | Type of correlation |

+1 | Perfect positive correlation |

-1 | Perfect negative correlation |

0.25 | Weak positive correlation |

0.75 | Strong positive correlation |

-0.25 | Weak negative correlation |

-0.75 | Strong negative correlation |

0 | No correlation |
