Module 5

Matrices

1. Definition:

A rectangular arrangement of mn numbers in m rows and n columns is called a matrix of order m × n.

A matrix is generally denoted by a capital letter such as A, B, C, etc.

2. Types of matrices:- (Review)

- Row matrix

- Column matrix

- Square matrix

- Diagonal matrix

- Trace of a matrix

- Determinant of a square matrix

- Singular matrix

- Non – singular matrix

- Zero/ null matrix

- Unit/ Identity matrix

- Scalar matrix

- Transpose of a matrix

- Triangular matrices

Upper triangular and lower triangular matrices,

- Conjugate of a matrix

- Symmetric matrix

- Skew-symmetric matrix

3. Operations on matrices:

- Equality of two matrices

- Multiplication of A by a scalar k.

- Addition and subtraction of two matrices

- Product of two matrices

- Inverse of a matrix

4. Elementary transformations

a) Elementary row transformation

There are three elementary row transformations:

- Interchanging the ith and jth rows, denoted by Rij.

- Multiplying all elements of the ith row by a non-zero constant k, denoted by kRi.

- Adding to the elements of the ith row k times the corresponding elements of the jth row, denoted by Ri + kRj.

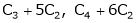

b) Elementary column transformations:

There are three elementary column transformations.

- Interchanging the ith and jth columns, denoted by Cij.

- Multiplying all elements of the ith column by a non-zero constant k, denoted by kCi.

- Adding to the elements of the ith column k times the corresponding elements of the jth column, denoted by Ci + kCj.
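The three elementary row transformations can be sketched in Python as below. This is only an illustration: the function names `swap_rows`, `scale_row` and `add_multiple` are made up for this sketch, rows are indexed from 0, and exact fractions are used to avoid rounding. The column transformations behave the same way when applied to the transpose.

```python
from fractions import Fraction

def swap_rows(M, i, j):
    """R_ij: interchange rows i and j (0-based indices)."""
    M[i], M[j] = M[j], M[i]

def scale_row(M, i, k):
    """kR_i: multiply every element of row i by a non-zero constant k."""
    if k == 0:
        raise ValueError("k must be non-zero")
    M[i] = [k * x for x in M[i]]

def add_multiple(M, i, j, k):
    """R_i + kR_j: add k times row j to row i."""
    M[i] = [a + k * b for a, b in zip(M[i], M[j])]

# Hypothetical 2 x 2 matrix used only to demonstrate the three operations.
A = [[Fraction(x) for x in row] for row in [[1, 2], [3, 4]]]
swap_rows(A, 0, 1)                 # rows become [3, 4] and [1, 2]
scale_row(A, 0, Fraction(1, 3))    # first row becomes [1, 4/3]
add_multiple(A, 1, 0, -1)          # second row becomes [0, 2/3]
```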

Rank of a matrix:

Let A be a given rectangular or square matrix. From this matrix select any r rows, and from these r rows select any r columns, thus obtaining a square matrix of order r × r. The determinant of this square matrix is called a minor of order r of A.

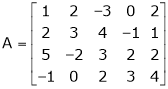

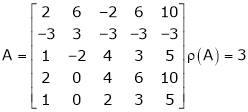

e.g.

If

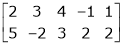

For example select 2nd and 3rd row. i.e.

Now select any two columns. Suppose, 1st and 2nd.

i.e.

Invariance of rank through elementary transformations.

1. The rank of a matrix remains unchanged under elementary transformations, i.e. if a matrix B is obtained from a matrix A by some elementary transformations, then

Rank of A = Rank of B

2. Equivalent matrices:

A matrix B obtained from a matrix A by a finite sequence of elementary transformations is said to be equivalent to A, and we write A ~ B.

Normal form or canonical form:

Every m × n matrix of rank r can be reduced to the form

$$\begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}$$

by a finite sequence of elementary transformations. This form is called the normal form or the first canonical form of the matrix A.

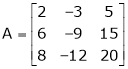

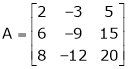
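As a rough illustration of finding the rank by elementary transformations, the sketch below reduces a matrix by row operations and counts the non-zero rows that remain. The names `rank` and `normal_form` and the sample matrix are assumptions for this sketch and do not come from the worked examples.

```python
from fractions import Fraction

def rank(matrix):
    """Rank obtained by elementary row transformations (Gaussian elimination)."""
    M = [[Fraction(x) for x in row] for row in matrix]
    rows, cols = len(M), len(M[0])
    r = 0  # number of pivots found so far
    for c in range(cols):
        if r == rows:
            break
        # look for a row at or below r with a non-zero entry in column c
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]          # interchange of two rows
        p = M[r][c]
        M[r] = [x / p for x in M[r]]             # scale the pivot row
        for i in range(rows):
            if i != r and M[i][c] != 0:
                k = M[i][c]
                M[i] = [a - k * b for a, b in zip(M[i], M[r])]  # R_i - k R_r
        r += 1
    return r

def normal_form(m, n, r):
    """The m x n normal form with I_r in the top-left corner and zeros elsewhere."""
    return [[1 if i == j and i < r else 0 for j in range(n)] for i in range(m)]

# Hypothetical example: the second row is twice the first, so the rank is 2.
A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]
r = rank(A)
N = normal_form(3, 3, r)
```

Column transformations are not needed to find the rank itself; they are only needed to clear the remaining entries and reach the normal form.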

Ex. 1

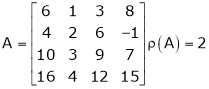

Reduce the following matrix to normal form and hence find its rank.

Solution:

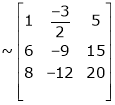

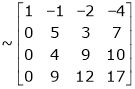

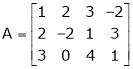

We have,

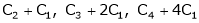

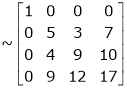

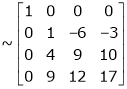

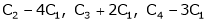

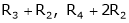

Apply

Rank of A = 1


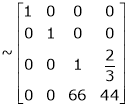

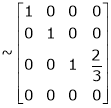

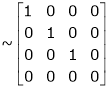

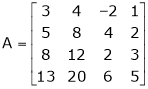

Ex. 2

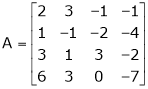

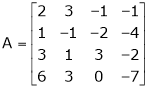

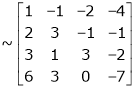

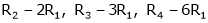

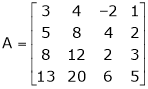

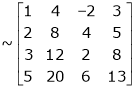

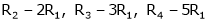

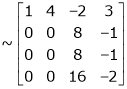

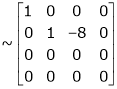

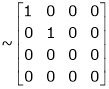

Find the rank of the matrix

Solution:

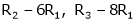

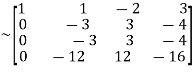

We have,

Apply R12

Rank of A = 3


Ex. 3

Find the rank of the following matrix by reducing it to normal form.

Solution:

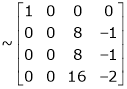

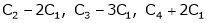

Apply C14

H.W.

Reduce the following matrices to normal form and hence find their ranks.

a)

b)

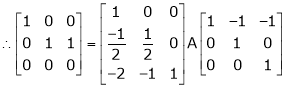

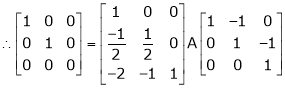

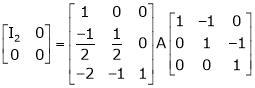

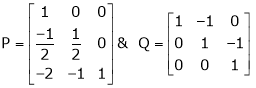

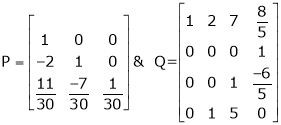

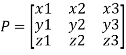

5. Reduction of a matrix A to the normal form PAQ.

If A is a matrix of rank r, then there exist non-singular matrices P and Q such that PAQ is in normal form,

i.e. $PAQ = \begin{bmatrix} I_r & 0 \\ 0 & 0 \end{bmatrix}$

To obtain the matrices P and Q we use the following procedure.

Working rule:-

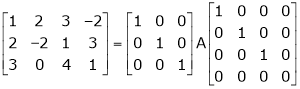

- If A is an m × n matrix, write A = Im A In.

- Apply row transformations to A on the l.h.s. and the same row transformations to the prefactor Im on the r.h.s.

- Apply column transformations to A on the l.h.s. and the same column transformations to the postfactor In on the r.h.s.

Continue until A on the l.h.s. reduces to normal form; the r.h.s. then reads PAQ.
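The working rule can be sketched as follows: every row transformation applied to A is also applied to the prefactor (which starts as Im), and every column transformation to the postfactor (which starts as In). The function names and the sample matrix are assumptions made for this sketch, and P and Q are not unique, so a hand computation may give different but equally valid matrices.

```python
from fractions import Fraction

def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]

def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def paq_normal_form(A):
    """Return (P, Q, r) with P*A*Q in normal form and r the rank of A."""
    m, n = len(A), len(A[0])
    M = [[Fraction(x) for x in row] for row in A]
    P, Q = identity(m), identity(n)
    r = 0
    while r < min(m, n):
        # locate a non-zero entry in the block that is not yet reduced
        pos = next(((i, j) for i in range(r, m) for j in range(r, n)
                    if M[i][j] != 0), None)
        if pos is None:
            break
        i, j = pos
        M[r], M[i] = M[i], M[r]              # row interchange on A ...
        P[r], P[i] = P[i], P[r]              # ... and on the prefactor
        for row in M + Q:                    # column interchange on A and postfactor
            row[r], row[j] = row[j], row[r]
        p = M[r][r]
        M[r] = [x / p for x in M[r]]         # make the pivot equal to 1
        P[r] = [x / p for x in P[r]]
        for i2 in range(m):                  # clear column r by row operations
            if i2 != r and M[i2][r] != 0:
                k = M[i2][r]
                M[i2] = [a - k * b for a, b in zip(M[i2], M[r])]
                P[i2] = [a - k * b for a, b in zip(P[i2], P[r])]
        for j2 in range(n):                  # clear row r by column operations
            if j2 != r and M[r][j2] != 0:
                k = M[r][j2]
                for row in M + Q:
                    row[j2] -= k * row[r]
        r += 1
    return P, Q, r

# Hypothetical 3 x 3 matrix of rank 2.
A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]
P, Q, r = paq_normal_form(A)
PAQ = matmul(matmul(P, A), Q)
```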

Example 1

For the given matrix A, find two non-singular matrices P and Q such that PAQ is in normal form.

Solution:

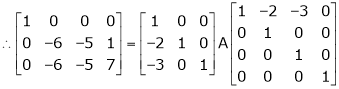

Here A is a square matrix of order 3 x 3. Hence we write,

A = I3 A I3

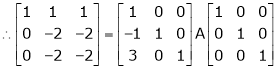

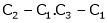

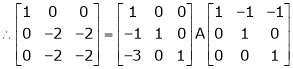

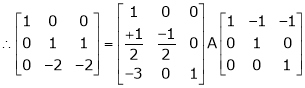

i.e.

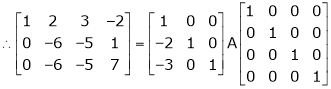

i.e.

Example 2

Find non-singular matrices P and Q such that PAQ is in normal form, where A is the matrix given below.

Solution:

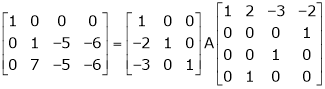

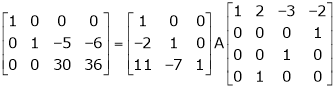

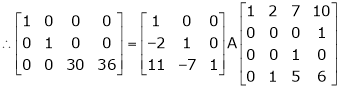

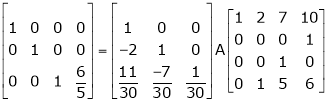

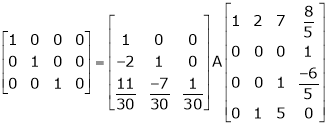

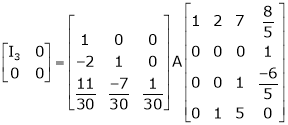

Here A is a matrix of order 3 x 4. Hence we write A as,

i.e.

i.e.

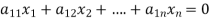

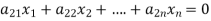

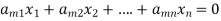

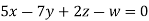

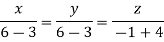

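The standard form of a system of homogeneous linear equations is

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= 0 \\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= 0 \\ \cdots\cdots\cdots\cdots\cdots\cdots\cdots\cdots & \\ a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= 0 \end{aligned} \qquad (1)$$

It has m equations and n unknowns.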

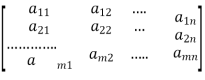

Let the coefficient matrix be A =

By elementary transformations we reduce the matrix A to triangular form and calculate its rank. Let the rank of A be r.

A homogeneous system is always consistent, since the zero solution always satisfies it. The following conditions determine the nature of the solutions of the above system:

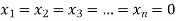

I. If r = n, i.e. the rank of the coefficient matrix is equal to the number of unknowns, then the system has only the trivial (zero) solution.

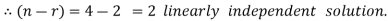

II. If r < n, i.e. the rank of the coefficient matrix is less than the number of unknowns, then the system has (n − r) linearly independent solutions.

In this case we assign arbitrary values to (n − r) of the variables and express the others in terms of them. The system has an infinite number of solutions.

III. If m < n, i.e. the number of equations is less than the number of unknowns, then the system has non-zero solutions, and infinitely many of them.

IV. If m = n, i.e. the number of equations is equal to the number of unknowns, then the system has a non-zero solution if and only if |A| = 0. The determinant |A| is called the eliminant of the equations.
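The conditions above can be checked mechanically. The sketch below (function names and matrices are illustrative assumptions) row-reduces the coefficient matrix, reads off the rank r, and builds n − r linearly independent solutions by setting one free variable to 1 at a time and the other free variables to 0.

```python
from fractions import Fraction

def rref(matrix):
    """Reduced row echelon form and the list of pivot columns."""
    M = [[Fraction(x) for x in row] for row in matrix]
    rows, cols = len(M), len(M[0])
    pivots, r = [], 0
    for c in range(cols):
        if r == rows:
            break
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        p = M[r][c]
        M[r] = [x / p for x in M[r]]
        for i in range(rows):
            if i != r and M[i][c] != 0:
                k = M[i][c]
                M[i] = [a - k * b for a, b in zip(M[i], M[r])]
        pivots.append(c)
        r += 1
    return M, pivots

def homogeneous_solutions(A):
    """Return (r, basis): the rank and n - r independent solutions of AX = 0."""
    n = len(A[0])
    M, pivots = rref(A)
    free = [c for c in range(n) if c not in pivots]
    basis = []
    for f in free:
        x = [Fraction(0)] * n
        x[f] = Fraction(1)                  # assumed value of one free variable
        for row_idx, pc in enumerate(pivots):
            x[pc] = -M[row_idx][f]          # pivot variables in terms of it
        basis.append(x)
    return len(pivots), basis

# r = n = 2: only the trivial solution, so the basis is empty.
r1, basis1 = homogeneous_solutions([[1, 1], [1, -1]])
# r = 2 < n = 3: one independent solution, hence infinitely many solutions.
r2, basis2 = homogeneous_solutions([[1, 2, 3], [2, 4, 6], [1, 0, 1]])
```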

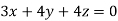

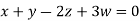

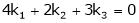

Example1: Solve the equations:

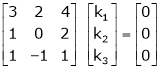

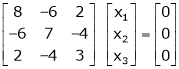

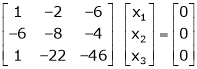

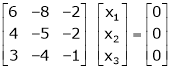

Let the coefficient matrix be A =

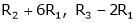

Apply

A

Apply

A

Since |A| ≠ 0, the rank of A is 3.

Also, the number of equations is m = 3 and the number of unknowns is n = 3.

Since the rank of the coefficient matrix A equals the number of unknowns n,

the system of equations is consistent and has only the trivial (zero) solution.

That is

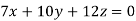

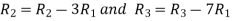

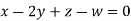

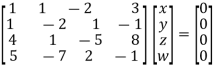

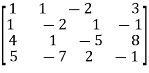

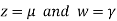

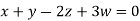

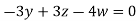

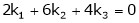

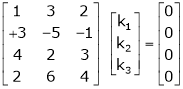

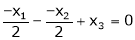

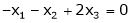

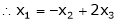

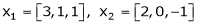

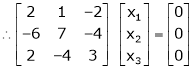

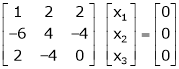

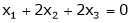

Example2: Solve completely the system of equations

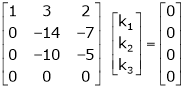

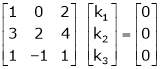

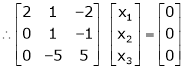

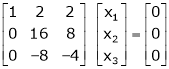

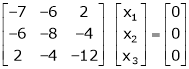

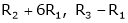

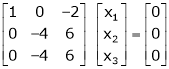

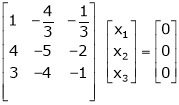

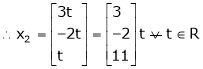

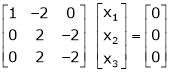

Solution: We can write the given system of equations as AX = 0

Or

Where coefficient matrix A =

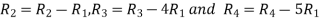

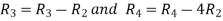

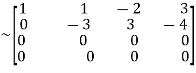

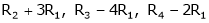

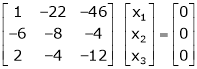

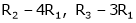

Apply

A

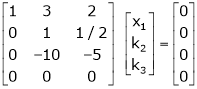

Apply

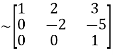

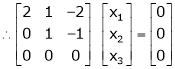

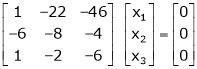

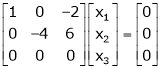

A … (i)

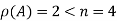

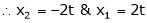

Since |A| = 0, the rank r of A is less than 4. The number of equations is m = 4 and the number of unknowns is n = 4.

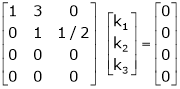

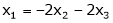

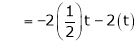

Here r < n, so the system has (n − r) linearly independent solutions.

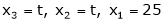

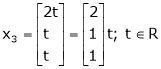

Let

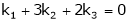

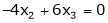

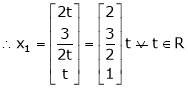

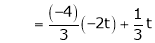

Then from equation (i) we get

Putting

We get the solution in terms of the assumed values; hence the system has an infinite number of solutions.

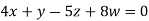

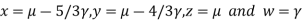

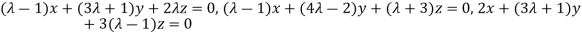

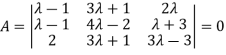

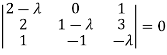

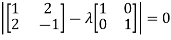

Example 3: Find the values of λ for which the equations

are consistent, and find the ratio x : y : z when λ has the smallest of these values. What happens when λ has the greatest of these values?

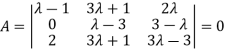

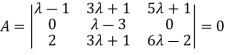

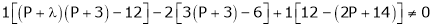

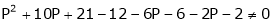

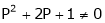

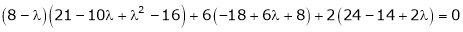

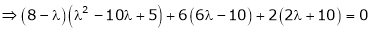

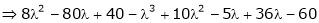

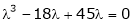

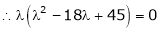

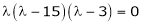

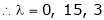

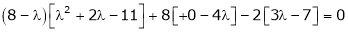

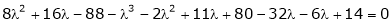

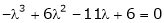

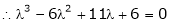

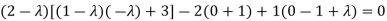

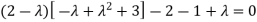

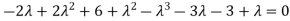

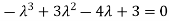

The system of equations has a non-trivial solution only if the determinant of the coefficient matrix is zero.

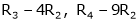

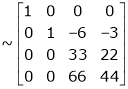

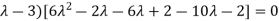

Apply

Apply

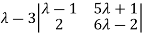

Or  =0

=0

Or

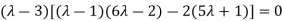

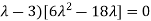

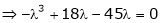

Or (

Or (

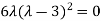

Or

Or … (i)

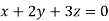

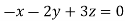

I. When λ = 0, the equations become

3z = 0

On solving we get

Hence x = y = z

II. When λ = 3, the equations become identical.

Introduction:

In this chapter we are going to study a very important theorem, viz. the Cayley-Hamilton theorem. First we have to study eigen values and eigen vectors.

1. Vector

An ordered n-tuple of numbers is called an n-vector. Thus the n numbers x1, x2, …, xn taken in order denote the vector x, i.e. x = (x1, x2, …, xn),

where the numbers x1, x2, …, xn are called the components or co-ordinates of the vector x. A vector may be written as a row vector or a column vector.

If A is an m × n matrix, then each row is an n-vector and each column is an m-vector.

2. Linear Dependence

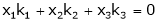

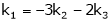

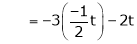

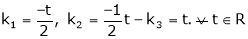

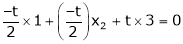

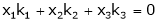

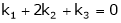

A set of r n-vectors x1, x2, …, xr is said to be linearly dependent if there exist scalars k1, k2, …, kr, not all zero, such that

x1 k1 + x2 k2 + … + xr kr = 0 … (1)

3. Linear Independence

A set of r vectors x1, x2, …, xr is said to be linearly independent if the relation

x1 k1 + x2 k2 + … + xr kr = 0

holds only when the scalars k1, k2, …, kr are all zero.

Note:-

- Equation (1) is known as a vector equation.

- If the vector equation has a non-zero solution, i.e. k1, k2, …, kr are not all zero, then the vectors x1, x2, …, xr are said to be linearly dependent.

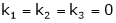

- If the vector equation has only the trivial solution, i.e.

k1 = k2 = … = kr = 0, then the vectors x1, x2, …, xr are said to be linearly independent.

4. Linear combination

If a vector x can be written in the form

x = x1 k1 + x2 k2 + … + xr kr

where k1, k2, …, kr are scalars, then x is called a linear combination of x1, x2, …, xr.

Results:

- A set of two or more vectors is linearly dependent if at least one vector can be written as a linear combination of the other vectors.

- If a set of two or more vectors is linearly independent, then no vector of the set can be expressed as a linear combination of the other vectors.
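One way to test a set of vectors for linear dependence is to compare the rank of the matrix formed from them with the number of vectors. The sketch below assumes this rank test; the function names and the sample vectors are chosen only for illustration and are not the vectors of the examples that follow.

```python
from fractions import Fraction

def rank(matrix):
    """Rank by elementary row transformations."""
    M = [[Fraction(x) for x in row] for row in matrix]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        if r == rows:
            break
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        p = M[r][c]
        M[r] = [x / p for x in M[r]]
        for i in range(rows):
            if i != r and M[i][c] != 0:
                k = M[i][c]
                M[i] = [a - k * b for a, b in zip(M[i], M[r])]
        r += 1
    return r

def is_linearly_independent(vectors):
    """True when the only solution of x1 k1 + ... + xr kr = 0 is k1 = ... = kr = 0."""
    # The vectors are used as rows; the rank equals the number of
    # vectors exactly when none of them depends on the others.
    return rank(vectors) == len(vectors)

# Hypothetical vectors: the third is the sum of the first two.
dependent = is_linearly_independent([[1, 2, 3], [0, 1, 1], [1, 3, 4]])
independent = is_linearly_independent([[1, 0, 0], [0, 1, 0], [0, 0, 1]])
```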

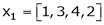

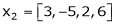

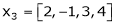

Example 1

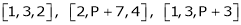

Are the following vectors x1, x2, x3 linearly dependent? If so, express x1 as a linear combination of the others.

Solution:

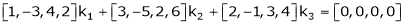

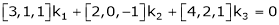

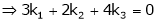

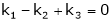

Consider a vector equation,

i.e.

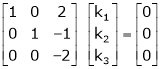

Which can be written in matrix form as,

Here the rank of the coefficient matrix is less than the number of unknowns, which is 3. Hence the system has infinitely many solutions. Now rewrite the equations as,

Put

and

and

Thus

i.e.

i.e.

Since k1, k2, k3 are not all zero, the vectors x1, x2, x3 are linearly dependent.

Example 2

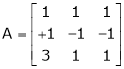

Examine whether the following vectors are linearly independent or not.

Solution:

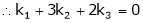

Consider the vector equation,

i.e.  … (1)

… (1)

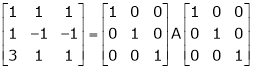

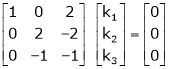

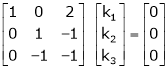

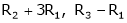

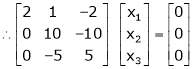

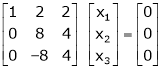

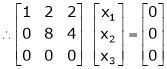

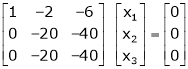

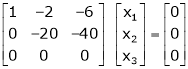

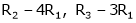

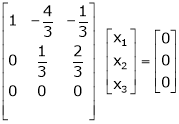

Which can be written in matrix form as,

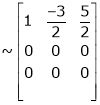

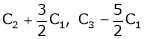

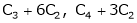

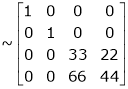

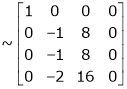

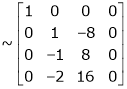

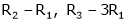

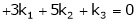

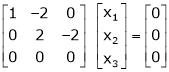

R12

R2 – 3R1, R3 – R1

R3 + R2

Here the rank of the coefficient matrix is equal to the number of unknowns, i.e. r = n = 3.

Hence the system has only the trivial solution,

i.e. k1 = k2 = k3 = 0,

i.e. vector equation (1) has only the trivial solution. Hence the given vectors x1, x2, x3 are linearly independent.

Example 3

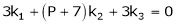

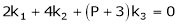

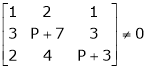

For what values of P are the following vectors linearly independent?

Solution:

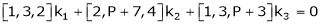

Consider the vector equation.

i.e.

This is a homogeneous system of three equations in 3 unknowns. It has only the trivial solution if and only if the determinant of the coefficient matrix is non-zero.

Consider the determinant of the coefficient matrix.

i.e.

Thus, for those values of P for which this determinant is non-zero, the system has only the trivial solution and hence the vectors are linearly independent.

Note:-

If the rank of the coefficient matrix is r, it contains r linearly independent vectors, and the remaining vectors (if any) can be expressed as linear combinations of these vectors.

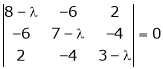

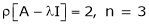

Characteristic equation:-

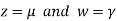

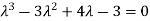

Let A be a square matrix and λ be any scalar. Then $|A - \lambda I| = 0$ is called the characteristic equation of the matrix A.

Note:

Let A be a square matrix and λ be any scalar. Then,

1) $[A - \lambda I]$ is called the characteristic matrix.

2) $|A - \lambda I|$ is called the characteristic polynomial.

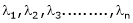

The roots of the characteristic equation are known as the characteristic roots, latent roots, eigen values or proper values of the matrix A.

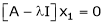

Eigen vector:-

Suppose $\lambda_1$ is an eigen value of a matrix A. Then there exists a non-zero vector x1 such that

$[A - \lambda_1 I]\,x_1 = 0$ … (1)

Such a vector x1 is called an eigen vector corresponding to the eigen value $\lambda_1$.

Properties of Eigen values:-

- The sum of the eigen values of a matrix A is equal to the sum of the diagonal elements (the trace) of A.

- The product of all eigen values of a matrix A is equal to the value of its determinant |A|.

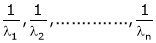

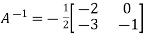

- If $\lambda_1, \lambda_2, \ldots, \lambda_n$ are the n eigen values of a non-singular square matrix A, then $1/\lambda_1, 1/\lambda_2, \ldots, 1/\lambda_n$ are the n eigen values of the matrix $A^{-1}$.

- The eigen values of a real symmetric matrix are all real.

- If all eigen values are non-zero then $A^{-1}$ exists, and conversely.

- The eigen values of A and A' are the same.
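Some of these properties can be checked numerically on a small case. The sketch below is restricted to 2 × 2 matrices with real eigen values, for which the characteristic equation is the quadratic λ² − (trace)λ + |A| = 0. The function name and the sample matrix are assumptions made for illustration.

```python
import math

def eigenvalues_2x2(A):
    """Real eigen values of a 2 x 2 matrix from l^2 - (trace) l + det = 0."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("eigen values are complex")
    s = math.sqrt(disc)
    return (trace - s) / 2, (trace + s) / 2

# Hypothetical symmetric matrix, so both eigen values are real.
A = [[2, 1], [1, 2]]
l1, l2 = eigenvalues_2x2(A)

trace_A = A[0][0] + A[1][1]
det_A = A[0][0] * A[1][1] - A[0][1] * A[1][0]
sum_ok = math.isclose(l1 + l2, trace_A)       # sum of eigen values = trace
product_ok = math.isclose(l1 * l2, det_A)     # product of eigen values = |A|

# Eigen values of the inverse are the reciprocals of those of A.
A_inv = [[A[1][1] / det_A, -A[0][1] / det_A],
         [-A[1][0] / det_A, A[0][0] / det_A]]
m1, m2 = eigenvalues_2x2(A_inv)
```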

Properties of eigen vector:-

- Eigen vectors corresponding to distinct eigen values are linearly independent.

- If two or more eigen values are identical, then the corresponding eigen vectors may or may not be linearly independent.

- The eigen vectors corresponding to distinct eigen values of a real symmetric matrix are orthogonal.

Example 1

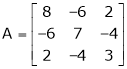

Determine the eigen values and eigen vectors of the matrix.

Solution:

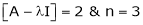

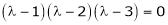

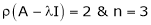

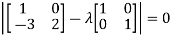

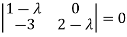

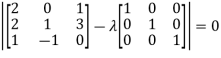

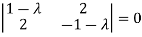

Consider the characteristic equation as,

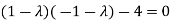

i.e.

i.e.

i.e.

Which is the required characteristic equation.

are the required eigen values.


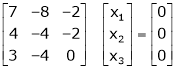

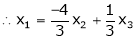

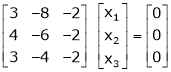

Now consider the equation

… (1)

… (1)

Case I:

If  Equation (1) becomes

Equation (1) becomes

R1 + R2

Thus

independent variable.

independent variable.

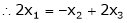

Now rewrite equation as,

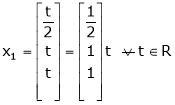

Put x3 = t

&

&

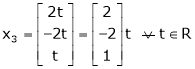

Thus  .

.

Is the eigen vector corresponding to  .

.

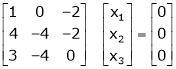

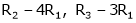

Case II:

If  equation (1) becomes,

equation (1) becomes,

Here

independent variables

independent variables

Now rewrite the equations as,

Put

&

&

.

.

Is the eigen vector corresponding to  .

.

Case III:

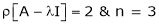

If  equation (1) becomes,

equation (1) becomes,

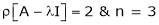

Here rank of

independent variable.

independent variable.

Now rewrite the equations as,

Put

Thus  .

.

Is the eigen vector for  .

.

Example 2

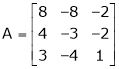

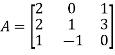

Find the eigen values and eigen vectors of the matrix.

Solution:

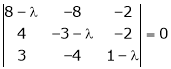

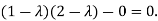

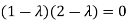

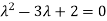

Consider the characteristic equation as

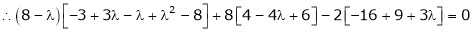

i.e.

i.e.

are the required eigen values.


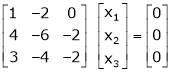

Now consider the equation

… (1)

… (1)

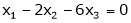

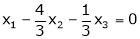

Case I:

Equation (1) becomes,

Equation (1) becomes,

Thus  and n = 3

and n = 3

3 – 2 = 1 independent variables.

3 – 2 = 1 independent variables.

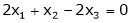

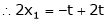

Now rewrite the equations as,

Put

,

,

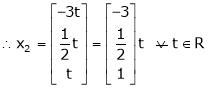

i.e. the eigen vector for

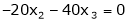

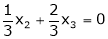

Case II:

If  equation (1) becomes,

equation (1) becomes,

Thus

Independent variables.

Now rewrite the equations as,

Put

Is the eigen vector for

Now

Case III:

If  equation (1) gives,

equation (1) gives,

R1 – R2

Thus

independent variables

independent variables

Now

Put

Thus

Is the eigen vector for  .

.

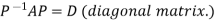

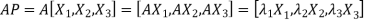

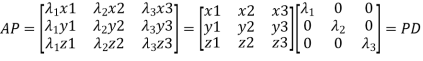

Let A be a square matrix of order n having n linearly independent eigen vectors. These vectors form the columns of a matrix P such that

$$P^{-1} A P = D$$

where P is called the modal matrix and D, the diagonal matrix of the eigen values, is known as the spectral matrix.

Procedure: let A be a square matrix of order 3.

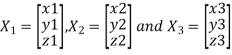

Let the three eigen vectors of A be $X_1, X_2, X_3$, corresponding to the eigen values $\lambda_1, \lambda_2, \lambda_3$.

Let

{by characteristics equation of A}

{by characteristics equation of A}

Or

Or

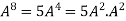

Note: The method of diagonalization is helpful in calculating powers of a matrix.

Since $D = P^{-1} A P$, we have $A = P D P^{-1}$. Then for a positive integer n we have

$$A^n = P D^n P^{-1}$$
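A small sketch of computing a power of a matrix through diagonalization, using the standard identity Aⁿ = P Dⁿ P⁻¹. The 2 × 2 matrix, its modal matrix and the helper names are assumptions chosen for illustration (eigen values 1 and 3 with eigen vectors (1, −1) and (1, 1)); they are not the matrices of the examples below.

```python
from fractions import Fraction

def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def inverse_2x2(M):
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def power_by_diagonalization(P, eigenvalues, n):
    """A^n = P D^n P^-1, where D is the diagonal matrix of the eigen values."""
    l1, l2 = eigenvalues
    D_n = [[Fraction(l1) ** n, 0], [0, Fraction(l2) ** n]]
    return matmul(matmul(P, D_n), inverse_2x2(P))

A = [[2, 1], [1, 2]]
P = [[1, 1], [-1, 1]]          # columns are the eigen vectors (1, -1) and (1, 1)
D = matmul(matmul(inverse_2x2(P), A), P)     # should be diag(1, 3)
A_cubed = power_by_diagonalization(P, (1, 3), 3)
```

Only the diagonal entries of D need to be raised to the power n, which is what makes this route cheaper than repeated multiplication for large n.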

We are using the example of 1.6*

Example1: Diagonalise the matrix

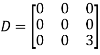

Let A=

The three Eigen vectors obtained are (-1,1,0), (-1,0,1) and (3,3,3) corresponding to Eigen values  .

.

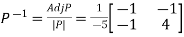

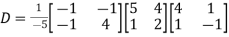

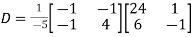

Then  and

and

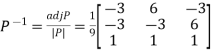

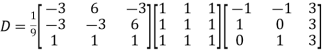

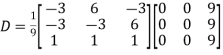

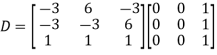

Also we know that

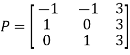

Example2: Diagonalise the matrix

Let A =

The Eigen vectors are (4,1),(1,-1) corresponding to Eigen values  .

.

Then  and also

and also

Also we know that

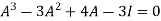

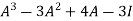

The Cayley-Hamilton theorem states that every square matrix A satisfies its own characteristic equation.

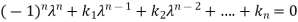

Let A be a square matrix of order n. The characteristic equation of the matrix A is

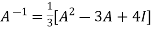

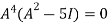

Then according to Cayley-Hamilton theorem

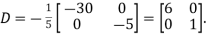

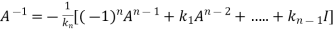

We can also find the inverse of A, provided A is non-singular.

Multiplying both sides of the above equation by $A^{-1}$, we get

Or

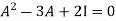
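For a 2 × 2 matrix the characteristic equation is λ² − (trace)λ + |A| = 0, so the theorem reads A² − (trace)A + |A| I = 0, and multiplying by A⁻¹ gives A⁻¹ = ((trace) I − A) / |A|. The sketch below checks both statements for a hypothetical matrix; the helper names are assumptions made for illustration.

```python
from fractions import Fraction

def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def cayley_hamilton_residual(A):
    """A^2 - (trace) A + det * I, which the theorem says is the zero matrix."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    A2 = matmul(A, A)
    I = [[1, 0], [0, 1]]
    return [[A2[i][j] - trace * A[i][j] + det * I[i][j] for j in range(2)]
            for i in range(2)]

def inverse_by_cayley_hamilton(A):
    """A^-1 = ((trace) I - A) / det, obtained by multiplying the equation by A^-1."""
    (a, b), (c, d) = A
    trace, det = a + d, Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("A is singular, so the inverse does not exist")
    I = [[1, 0], [0, 1]]
    return [[(trace * I[i][j] - A[i][j]) / det for j in range(2)] for i in range(2)]

# Hypothetical matrix with trace 5 and determinant -2.
A = [[1, 2], [3, 4]]
residual = cayley_hamilton_residual(A)
A_inv = inverse_by_cayley_hamilton(A)
```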

Example 1: Verify the Cayley-Hamilton theorem and find the inverse.

Let A =

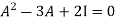

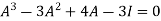

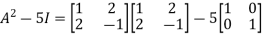

The characteristics equation of A is

Or

Or

Or

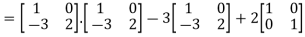

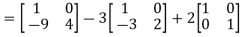

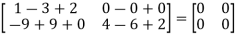

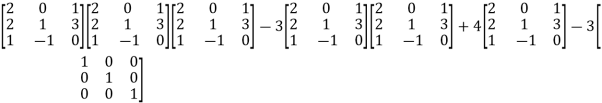

By Cayley-Hamilton theorem

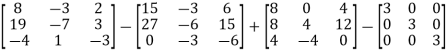

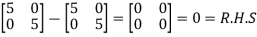

L.H.S:

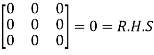

= 0 = R.H.S.

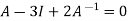

Multiplying both sides by $A^{-1}$, we get

Or

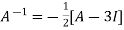

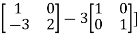

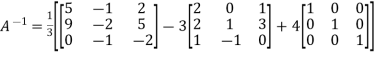

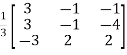

Or  [

[

Or

Example2: Verify the Cayley-Hamilton theorem and find the inverse.

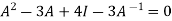

The characteristics equation of A is

Or

Or

Or

Or

Or

By Cayley-Hamilton theorem

L.H.S.

=

=

=

Multiplying both sides by $A^{-1}$, we get

Or

Or

=

Example3: Using Cayley-Hamilton theorem, find  , if A =

, if A =  ?

?

Let A =

The characteristics equation of A is

Or

Or

By Cayley-Hamilton theorem

L.H.S.

=

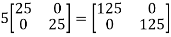

By Cayley-Hamilton theorem we have

Multiply both side by

.

.

Or

=

=
