Unit - 2

Relational Data Model

Relational data model is the primary data model, which is used widely around the world for data storage and processing. This model is simple and it has all the properties and capabilities required to process data with storage efficiency.

Concepts

Tables−In relational data model, relations are saved in the format of Tables. This format stores the relation among entities. A table has rows and columns, where rows represents records and columns represent the attributes.

Tuple− A single row of a table, which contains a single record for that relation is called a tuple.

Relation instance− A finite set of tuples in the relational database system represents relation instance. Relation instances do not have duplicate tuples.

Relation schema− A relation schema describes the relation name (table name), attributes, and their names.

Relation key−Each row has one or more attributes, known as relation key, which can identify the row in the relation (table) uniquely.

Attribute domain−Every attribute has some predefined value scope, known as attribute domain.

Constraints

Every relation has some conditions that must hold for it to be a valid relation. These conditions are called Relational Integrity Constraints. There are three main integrity constraints −

● Key constraints

● Domain constraints

● Referential integrity constraints

Key Constraints

There must be at least one minimal subset of attributes in the relation, which can identify a tuple uniquely. This minimal subset of attributes is called key for that relation. If there are more than one such minimal subsets, these are called candidate keys.

Key constraints force that −

- In a relation with a key attribute, no two tuples can have identical values for key attributes.

- A key attribute cannot have NULL values.

- Key constraints are also referred to as Entity Constraints.

Domain Constraints

Attributes have specific values in real-world scenario. For example, age can only be a positive integer. The same constraints have been tried to employ on the attributes of a relation. Every attribute is bound to have a specific range of values. For example, age cannot be less than zero and telephone numbers cannot contain a digit outside 0-9.

Referential integrity Constraints

Referential integrity constraints work on the concept of Foreign Keys. A foreign key is a key attribute of a relation that can be referred in other relation.

Referential integrity constraint states that if a relation refers to a key attribute of a different or same relation, then that key element must exist.

Key takeaway

Relational data model is the primary data model, which is used widely around the world for data storage and processing. This model is simple and it has all the properties and capabilities required to process data with storage efficiency.

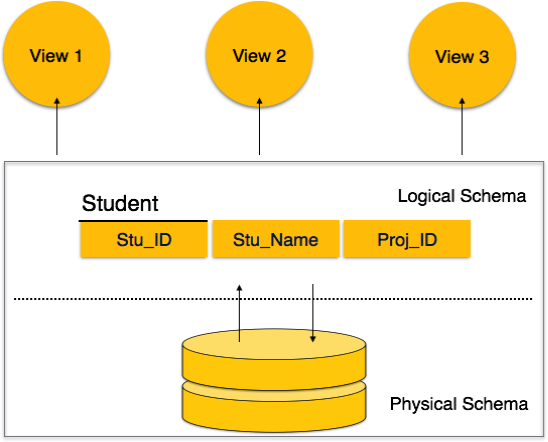

Database Schema

A database schema is the skeleton structure that represents the logical view of the entire database. It defines how the data is organized and how the relations among

Them are associated. It formulates all the constraints that are to be applied on the data.

A database schema defines its entities and the relationship among them. It contains a descriptive detail of the database, which can be depicted by means of schema diagrams. It’s the database designers who design the schema to help programmers understand the database and make it useful.

Fig1: Database schema

A database schema can be divided broadly into two categories −

● Physical Database Schema−This schema pertains to the actual storage of data and its form of storage like files, indices, etc. It defines how the data will be stored in a secondary storage.

● Logical Database Schema−This schema defines all the logical constraints that need to be applied on the data stored. It defines tables, views, and integrity constraints.

Database Instance

It is important that we distinguish these two terms individually. Database schema is the skeleton of database. It is designed when the database doesn't exist at all. Once the database is operational, it is very difficult to make any changes to it. A database schema does not contain any data or information.

A database instance is a state of operational database with data at any given time. It contains a snapshot of the database. Database instances tend to change with time. A DBMS ensures that its every instance (state) is in a valid state, by diligently following all the validations, constraints, and conditions that the database designers have imposed.

Key takeaway

A database schema is the skeleton structure that represents the logical view of the entire database. It defines how the data is organized and how the relations among them are associated. It formulates all the constraints that are to be applied on the data.

A database schema defines its entities and the relationship among them. It contains a descriptive detail of the database, which can be depicted by means of schema diagrams. It’s the database designers who design the schema to help programmers understand the database and make it useful.

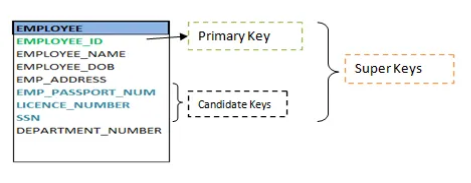

Keys are the entity's attributes, which define the entity's record uniquely.

Several types of keys exist.

These are listed underneath:

❏ Composite key

A composite key consists of two attributes or more, but it must be minimal.

❏ Candidate key

A candidate key is a key that is simple or composite and special and minimal. It is special since no two rows can have the same value at any time in a table. It is minimal since, in order to achieve uniqueness, every column is required.

❏ Super key

The Super Key is one or more of the entity's attributes that uniquely define the database record.

❏ Primary key

The primary key is a candidate key that the database designer chooses to be used as an identification mechanism for the entire set of entities. In a table, it must uniquely classify tuples and not be null.

In the ER model, the primary key is indicated by underlining the attribute.

● To uniquely recognise tuples in a table, the designer selects a candidate key. It must not be empty.

● A key is selected by the database builder to be used by the entire entity collection as an authentication mechanism. This is regarded as the primary key. In the ER model, this key is indicated by underlining the attribute.

Fig 2: Shows different keys

❏ Alternate key

Alternate keys are all candidate keys not chosen as the primary key.

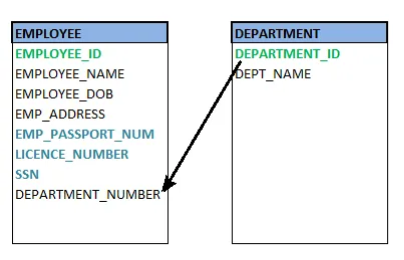

❏ Foreign key

A foreign key (FK) is an attribute in a table that, in another table, references the primary key OR it may be null. The same data type must be used for both international and primary keys.

Fig 3: Foreign key

❏ Secondary key

A secondary key is an attribute used exclusively (can be composite) for retrieval purposes, such as: phone and last name.

Key takeaway:

Keys are the entity's attributes, which define the entity's record uniquely. A candidate key is a key that is simple or composite and special and minimal. Primary key, uniquely recognised tuples in a table, the designer selects a candidate key. It must not be empty.

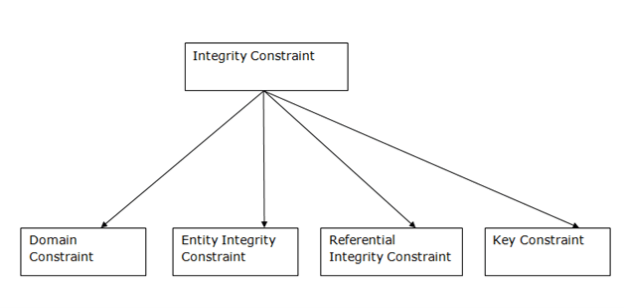

Integrity Constraints

● Integrity constraints are a set of rules. It is used to maintain the quality of information.

● Integrity constraints ensure that the data insertion, updating, and other processes have to be performed in such a way that data integrity is not affected.

● Thus, integrity constraint is used to guard against accidental damage to the database.

Types of Integrity Constraint

Fig 4: Integrity Constraint

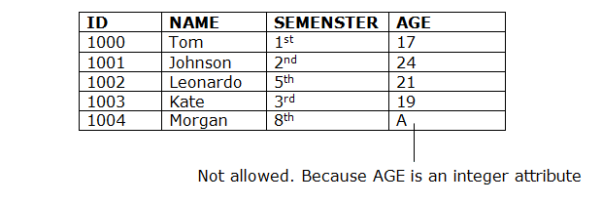

1. Domain constraints

- Domain constraints can be defined as the definition of a valid set of values for an attribute.

- The data type of domain includes string, character, integer, time, date, currency, etc. The value of the attribute must be available in the corresponding domain.

Example:

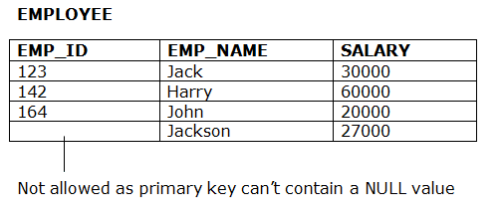

2. Entity integrity constraints

- The entity integrity constraint states that primary key value can't be null.

- This is because the primary key value is used to identify individual rows in relation and if the primary key has a null value, then we can't identify those rows.

- A table can contain a null value other than the primary key field.

Example:

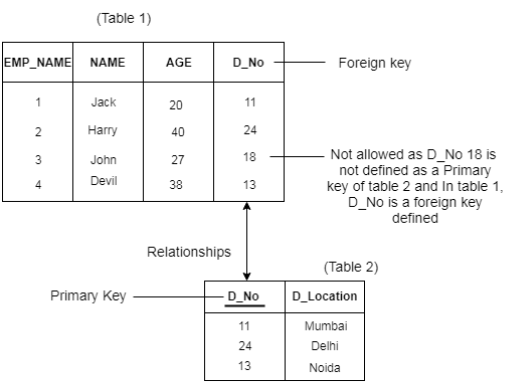

3. Referential Integrity Constraints

- A referential integrity constraint is specified between two tables.

- In the Referential integrity constraints, if a foreign key in Table 1 refers to the Primary Key of Table 2, then every value of the Foreign Key in Table 1 must be null or be available in Table.

Example:

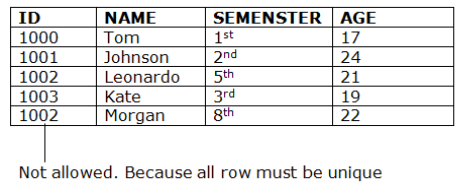

4. Key constraints

- Keys are the entity set that is used to identify an entity within its entity set uniquely.

- An entity set can have multiple keys, but out of which one key will be the primary key. A primary key can contain a unique and null value in the relational table.

Example:

Foreign Key in DBMS

A foreign key is different from a super key, candidate key or primary key because a foreign key is the one that is used to link two tables together or create connectivity between the two.

Here, in this section, we will discuss foreign key, its use and look at some examples that will help us to understand the working and use of the foreign key. We will also see its practical implementation on a database, i.e., creating and deleting a foreign key on a table.

What is a Foreign Key

A foreign key is the one that is used to link two tables together via the primary key. It means the columns of one table points to the primary key attribute of the other table. It further means that if any attribute is set as a primary key attribute will work in another table as a foreign key attribute. But one should know that a foreign key has nothing to do with the primary key.

Use of Foreign Key

The use of a foreign key is simply to link the attributes of two tables together with the help of a primary key attribute. Thus, it is used for creating and maintaining the relationship between the two relations.

Example of Foreign Key

Let’s discuss an example to understand the working of a foreign key.

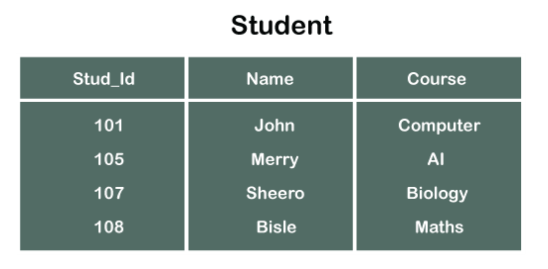

Consider two tables Student and Department having their respective attributes as shown in the below table structure:

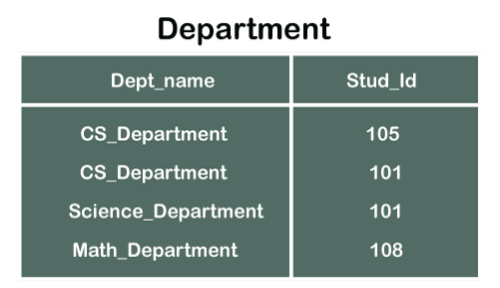

In the tables, one attribute, you can see, is common, that is Stud_Id, but it has different key constraints for both tables. In the Student table, the field Stud_Id is a primary key because it is uniquely identifying all other fields of the Student table. On the other hand, Stud_Id is a foreign key attribute for the Department table because it is acting as a primary key attribute for the Student table. It means that both the Student and Department table are linked with one another because of the Stud_Id attribute.

In the below-shown figure, you can view the following structure of the relationship between the two tables.

Note: Referential Integrity in DBMS is developed from the concept of the foreign key. It is clear that a primary key is an alone existing key and a foreign key always reference to a primary key in some other table, in which the table that contains the primary key is known as the referenced table or parent table for the other table that is having the foreign key.

Creating Foreign Key constraint

On CREATE TABLE

Below is the syntax that will make us learn the creation of a foreign key in a table:

- CREATE TABLE Department (

- Dept_name varchar (120) NOT NULL,

- Stud_Id int,

- FOREIGN KEY (Stud_Id) REFERENCES Student (Stud_Id)

- );

So, in this way, we can set a foreign key for a table in the MYSQL database.

In case of creating a foreign key for a table in SQL or Oracle server, the following syntax will work:

- CREATE TABLE Department (

- Dept_name varchar (120) NOT NULL,

- Stud_Id int FOREIGN KEY REFERENCES Student (Stud_Id)

- );

On ALTER TABLE

Following is the syntax for creating a foreign key constraint on ALTER TABLE:

- ALTER TABLE Department

- ADD FOREIGN KEY (Stud_Id) REFERENCES Student (Stud_Id);

Dropping Foreign Key

In order to delete a foreign key, there is a below-described syntax that can be used:

- ALTER TABLE Department

- DROP FOREIGN KEY FK_StudentDepartment;

So, in this way, we can drop a foreign key using the ALTER TABLE in the MYSQL database.

Point to remember

When you drop the foreign key, one needs to take care of the integrity of the tables which are connected via a foreign key. In case you make changes in one table and disturbs the integrity of both tables, it may display certain errors due to improper connectivity between the two tables.

Referential Actions

There are some actions that are linked with the actions taken by the foreign key table holder:

1) Cascade

When we delete rows in the parent table (i.e., the one holding the primary key), the same columns in the other table (i.e., the one holding a foreign key) also gets deleted. Thus, the action is known as Cascade.

2) Set NULL

Such referential action maintains the referential integrity of both tables. When we manipulate/delete a referenced row in the parent/referenced table, in the child table (table having foreign key), the value of such referencing row is set as NULL. Such a referential action performed is known as Set NULL.

3) Set DEFAULT

Such an action takes place when the values in the referenced row of the parent table are updated, or the row is deleted, the values in the child table are set to default values of the column.

4) Restrict

It is the restriction constraint where the value of the referenced row in the parent table cannot be modified or deleted unless it is not referred to by the foreign key in the child table. Thus, it is a normal referential action of a foreign key.

5) No Action

It is also a restriction constraint of the foreign key but is implemented only after trying to modify or delete the referenced row of the parent table.

6) Triggers

All these and other referential actions are basically implemented as triggers where the actions of a foreign key are much similar or almost similar to user-defined triggers. However, in some cases, the ordered referential actions get replaced by their equivalent user-defined triggers for ensuring proper trigger execution.

Key takeaway

Integrity constraints are a set of rules. It is used to maintain the quality of information.

Integrity constraints ensure that the data insertion, updating, and other processes have to be performed in such a way that data integrity is not affected.

Thus, integrity constraint is used to guard against accidental damage to the database.

Relational Algebra is procedural query language. It takes Relation as input and generates relation as output. Relational algebra mainly provides theoretical foundation for relational databases and SQL.

Basic Operations which can be performed using relational algebra are:

1. Projection

2. Selection

3. Join

4. Union

5. Set Difference

6. Intersection

7. Cartesian product

8. Rename

Consider following relation R (A, B, C)

A | B | C |

1 | 1 | 1 |

2 | 3 | 1 |

4 | 1 | 3 |

2 | 3 | 4 |

5 | 4 | 5 |

1. Projection (π):

This operation is used to select particular columns from the relation.

Π(AB) R:

It will select A and B column from the relation R. It produces the output like:

A | B |

1 | 1 |

2 | 3 |

4 | 1 |

5 | 4 |

Project operation automatically removes duplicates from the resultant set.

2. Selection (σ):

This operation is used to select particular tuples from the relation.

σ(C<4) R:

It will select the tuples which have a value of c less than 4.

But select operation is used to only select the required tuples from the relation. To display those tuples on screen, select operation must be combined with project operation.

Π (σ (C<4) R) will produce the result like:

A | B | C |

1 | 1 | 1 |

2 | 3 | 1 |

4 | 1 | 3 |

3. Join

A Cartesian product followed by a selection criterion is basically a joint process.

Operation of join, denoted by ⋈.

The JOIN operation often allows tuples from different relationships to join different tuples

Types of JOIN:

Various forms of join operation are:

Inner Joins:

- Theta join

- EQUI join

- Natural join

Outer join:

- Left Outer Join

- Right Outer Join

- Full Outer Join

4. Union

Union operation in relational algebra is the same as union operation in set theory, only the restriction is that both relations must have the same set of attributes for a union of two relationships.

Syntax: table_name1 ∪ table_name2

For instance, if we have two tables with RegularClass and ExtraClass, both have a student column to save the student name, then,

∏Student(RegularClass) ∪ ∏Student(ExtraClass)

The above operation will send us the names of students who attend both normal and extra classes, reducing repetition.

5. Set difference

Set Difference in relational algebra is the same operation of set difference as in set theory, with the limitation that the same set of attributes can have both relationships.

Syntax: A - B

Where the A and B relationships.

For instance, if we want to find the names of students who attend the regular class, but not the extra class, then we can use the following procedure:

∏Student(RegularClass) - ∏Student(ExtraClass)

6. Intersection

The symbol ∩ is the definition of an intersection.

A ∩ B

Defines a relationship consisting of a set of all the tuples in both A and B. A and B must be union-compatible, however.

7. Cartesian product

This is used to merge information into one from two separate relationships(tables) and to fetch information from the merged relationship.

Syntax: A X B

For example, if we want to find the morning Regular Class and Extra Class data, then we can use the following operation:

σtime = 'morning' (RegularClass X ExtraClass)

Both RegularClass and ExtraClass should have the attribute time for the above query to operate.

8.Rename

Rename is a unified operation that is used to rename relationship attributes.

ρ (a/b)R renames the 'b' component of the partnership to 'a'.

Syntax: ρ(RelationNew, RelationOld)

Key takeaway:

Relational Algebra is procedural query language.

It takes Relation as input and generates relation as output.

Data stored in a database can be retrieved using a query.

Relational Calculus

● Relational calculus is a non-procedural query language. In the non-procedural query language, the user is concerned with the details of how to obtain the end results.

● The relational calculus tells what to do but never explains how to do.

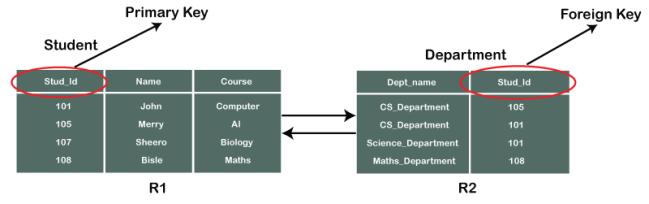

Types of Relational calculus:

Fig 5: Relational calculus

1. Tuple Relational Calculus (TRC)

- The tuple relational calculus is specified to select the tuples in a relation. In TRC, filtering variable uses the tuples of a relation.

- The result of the relation can have one or more tuples.

Notation:

{T | P (T)} or {T | Condition (T)}

Where

T is the resulting tuples

P(T) is the condition used to fetch T.

For example:

{ T.name | Author(T) AND T.article = 'database' }

OUTPUT: This query selects the tuples from the AUTHOR relation. It returns a tuple with 'name' from Author who has written an article on 'database'.

TRC (tuple relation calculus) can be quantified. In TRC, we can use Existential (∃) and Universal Quantifiers (∀).

For example:

{ R| ∃T ∈ Authors(T.article='database' AND R.name=T.name)}

Output: This query will yield the same result as the previous one.

2. Domain Relational Calculus (DRC)

- The second form of relation is known as Domain relational calculus. In domain relational calculus, filtering variable uses the domain of attributes.

- Domain relational calculus uses the same operators as tuple calculus. It uses logical connectives ∧ (and), ∨ (or) and ┓ (not).

- It uses Existential (∃) and Universal Quantifiers (∀) to bind the variable.

Notation:

{ a1, a2, a3, ..., an | P (a1, a2, a3, ... ,an)}

Where

a1, a2 are attributes

P stands for formula built by inner attributes

For example:

{< article, page, subject > | ∈ javatpoint ∧ subject = 'database'}

Output: This query will yield the article, page, and subject from the relational javatpoint, where the subject is a database.

Key takeaway

Relational calculus is a non-procedural query language. In the non-procedural query language, the user is concerned with the details of how to obtain the end results.

The relational calculus tells what to do but never explains how to do.

- Domain relational calculus is known as the second type of relationship. The filtering variable uses the domain attributes in the domain relational calculus.

- The same operators as the tuple calculus are used in domain relational calculus. It utilises logical relations ∧ (and), ∨ (or) and ┓ (not).

- To connect the variable, it uses Existential (∃) and Universal Quantifiers (∀).

Notation:

{ a1, a2, a3, ..., an | P (a1, a2, a3, ... ,an)}

Where,

a1,a2 - attributes

P - formula built by inner attributes

Example

{< article, page, subject > | ∈ CSE ∧ subject = 'database'}

Output: This query will result from the relational CSE, where the topic is a database, to the post, page, and subject.

Indexing in DBMS

● Indexing is used to optimize the performance of a database by minimizing the number of disk accesses required when a query is processed.

● The index is a type of data structure. It is used to locate and access the data in a database table quickly.

Index structure:

Indexes can be created using some database columns.

Fig 6: Structure of Index

● The first column of the database is the search key that contains a copy of the primary key or candidate key of the table. The values of the primary key are stored in sorted order so that the corresponding data can be accessed easily.

● The second column of the database is the data reference. It contains a set of pointers holding the address of the disk block where the value of the particular key can be found.

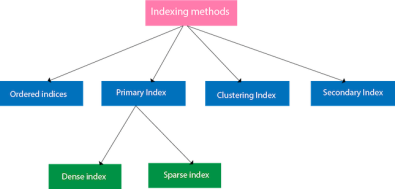

Indexing Methods

Fig 7: Indexing Methods

Ordered indices

The indices are usually sorted to make searching faster. The indices which are sorted are known as ordered indices.

Example: Suppose we have an employee table with thousands of records and each of which is 10 bytes long. If their IDs start with 1, 2, 3....and so on and we have to search student with ID-543.

● In the case of a database with no index, we have to search the disk block from starting till it reaches 543. The DBMS will read the record after reading 543*10=5430 bytes.

● In the case of an index, we will search using indexes and the DBMS will read the record after reading 542*2= 1084 bytes which are very less compared to the previous case.

Primary Index

● If the index is created on the basis of the primary key of the table, then it is known as primary indexing. These primary keys are unique to each record and contain 1:1 relation between the records.

● As primary keys are stored in sorted order, the performance of the searching operation is quite efficient.

● The primary index can be classified into two types: Dense index and Sparse index.

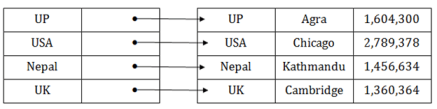

Dense index

● The dense index contains an index record for every search key value in the data file. It makes searching faster.

● In this, the number of records in the index table is same as the number of records in the main table.

● It needs more space to store index record itself. The index records have the search key and a pointer to the actual record on the disk.

Fig 8: Dense Index

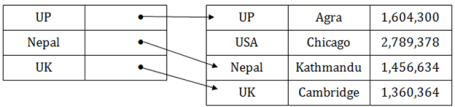

Sparse index

● In the data file, index record appears only for a few items. Each item points to a block.

● In this, instead of pointing to each record in the main table, the index points to the records in the main table in a gap.

Fig 9: Sparse Index

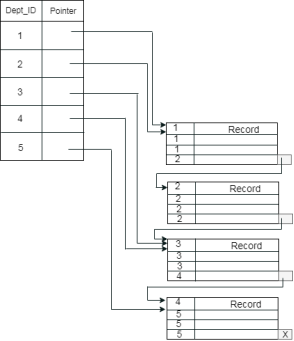

Clustering Index

● A clustered index can be defined as an ordered data file. Sometimes the index is created on non-primary key columns which may not be unique for each record.

● In this case, to identify the record faster, we will group two or more columns to get the unique value and create index out of them. This method is called a clustering index.

● The records which have similar characteristics are grouped, and indexes are created for these group.

Example: suppose a company contains several employees in each department. Suppose we use a clustering index, where all employees which belong to the same Dept_ID are considered within a single cluster, and index pointers point to the cluster as a whole. Here Dept_Id is a non-unique key.

The previous schema is little confusing because one disk block is shared by records which belong to the different cluster. If we use separate disk block for separate clusters, then it is called better technique.

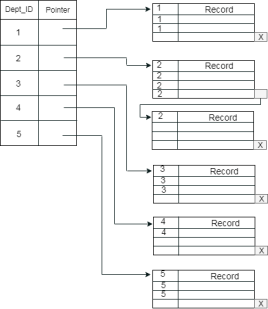

Secondary Index

In the sparse indexing, as the size of the table grows, the size of mapping also grows. These mappings are usually kept in the primary memory so that address fetch should be faster. Then the secondary memory searches the actual data based on the address got from mapping. If the mapping size grows then fetching the address itself becomes slower. In this case, the sparse index will not be efficient. To overcome this problem, secondary indexing is introduced.

In secondary indexing, to reduce the size of mapping, another level of indexing is introduced. In this method, the huge range for the columns is selected initially so that the mapping size of the first level becomes small. Then each range is further divided into smaller ranges. The mapping of the first level is stored in the primary memory, so that address fetch is faster. The mapping of the second level and actual data are stored in the secondary memory (hard disk).

Fig 10: Secondary Index

For example:

● If you want to find the record of roll 111 in the diagram, then it will search the highest entry which is smaller than or equal to 111 in the first level index. It will get 100 at this level.

● Then in the second index level, again it does max (111) <= 111 and gets 110. Now using the address 110, it goes to the data block and starts searching each record till it gets 111.

● This is how a search is performed in this method. Inserting, updating or deleting is also done in the same manner.

Key takeaway

● Indexing is used to optimize the performance of a database by minimizing the number of disk accesses required when a query is processed.

● The index is a type of data structure. It is used to locate and access the data in a database table quickly.

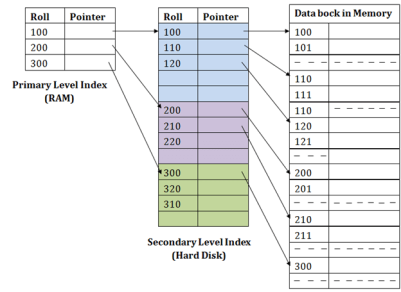

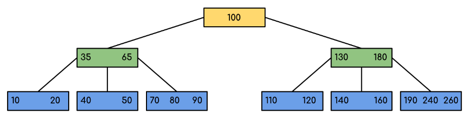

A self-balancing search tree is a B-Tree. It is presumed that everything is in the primary memory of most other self-balancing search trees (such as AVL and Red-Black Trees). We have to think of the vast amount of data that can not fit into the main memory to understand the use of B-Trees. When the number of keys is high, data in the form of blocks is read from the disc. Compared to the principal memory access time, disc access time is very high.

The key idea of using B-Trees is to decrease the amount of access to the disc. Most tree operations (search, insert, delete, max, min, ..etc) include access to the O(h) disc where the height of the tree is h. The B-tree is a tree with fat.

By placing the maximum possible keys into a B-Tree node, the height of the B-Trees is kept low. The B-Tree node size is usually held equal to the size of the disc block. Since the B-tree height is poor, total disc access is drastically reduced compared to balanced binary search trees such as AVL Tree, Red-Black Tree, etc. for most operations.

Time Complexity of B - tree

S.no. | Algorithm | Time complexity |

1 | Search | O ( log n) |

2 | Insert | O ( log n) |

3 | Delete | O ( log n) |

n is the total no. Of elements

All the properties of an M-way tree are found in the B tree of order m. Furthermore, it includes the properties below.

● In a B-Tree, each node contains a maximum of m children.

● With the exception of the root node and the leaf node, each node in the B-Tree contains at least m/2 kids.

● There must be at least 2 nodes for the root nodes.

● It is important to have all leaf nodes at the same level.

Fig 11: Example of B - tree

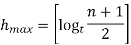

The minimum height of the B-Tree that can exist with n node numbers and m is the maximum number that a node can have of children is:

hmin = [logm (n+1)] - 1

The maximum height of the B-Tree that can exist with n node numbers and d is the minimum number of children that can have a non-root node:

And

Traversal in B - tree

Traversal is also similar to Binary Tree's Inorder Traversal. We start with the leftmost child, print the leftmost child recursively, then repeat the same method for the remaining children and keys. In the end, print the rightmost child recursively.

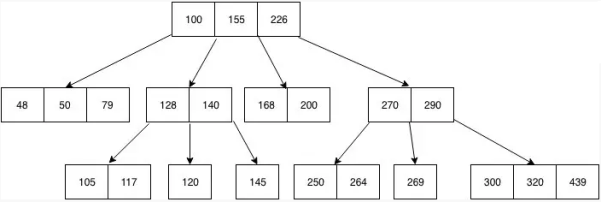

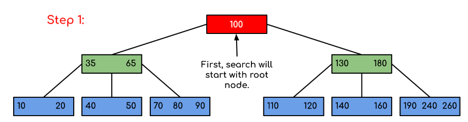

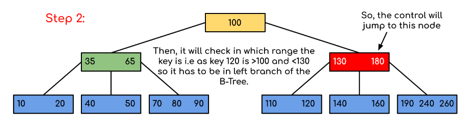

Search operation in B - tree

A search in the Binary Search Tree is equivalent to a search. Let the search key be k. We begin from the root and traverse down recursively. For any non-leaf node visited, we simply return the node if the node has the key. Otherwise, we return to the appropriate child of the node (the child who is just before the first big key). If a leaf node is reached, and k is not contained in the leaf node, then NULL is returned.

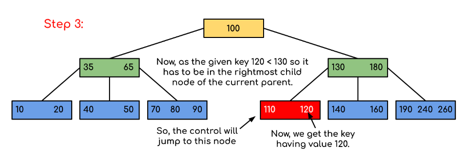

Example: Searching 120 in the given B-Tree.

Fig 12: Example for searching operation

Solution:

Fig 13: Steps of searching

Key takeaway:

● A self-balancing search tree is a B-Tree.

● The key idea of using B-Trees is to decrease the amount of access to the disc.

● The B-Tree node size is usually held equal to the size of the disc block.

A hash index is created by taking the prefix of a complete hash value. A depth number is assigned to each hash index to indicate how many bits are used to compute a hash function. These bits can be used to address up to 2n buckets. When all of these bits have been spent, the depth value is increased linearly, and the buckets are divided in half.

A hash index is a technique for expediting the search process. Traditional indexes require you to scan each row to ensure that your query is successful. This isn't the case with hash indexes, though!

Each index key comprises only one row of table data and uses the hashing indexing process to assign them a unique memory address, removing all other keys with duplicate values before locating what they're seeking for.

One of the various methods to organise data in a database is to use hash indexes. They work by collecting user input and using it as a key for disc storage. These keys, also known as hash values, might be anything from string lengths to input characters.

When querying specific inputs with specified features, hash indexes are most typically employed. It may be, for example, locating all A-letters that are higher than 10 cm. By constructing a hash index function, you can do it rapidly.

The PostgreSQL database system includes hash indexes. This technology was created to boost speed and efficiency. Hash indexes can be combined with other index types such as B-tree and GiST.

A hash index divides keys into smaller portions called buckets, each of which is given an integer ID number so that it may be conveniently retrieved when looking for a key's placement in the hash table. The buckets are stored sequentially on a disk so that the data they contain can be quickly accessed.

Advantages

The fundamental benefit of employing hash indexes is that they provide for quick access to records when searching by key value. It is frequently used in queries that have an equality condition. Hash benchmarks also don't take up a lot of storage space. As a result, it is a useful tool, although it is not without flaws.

Disadvantages

Hash indexes are a relatively new indexing mechanism that has the potential to improve performance significantly. You can think of them as a binary search tree with a twist (BSTs).

Hash indexes work by grouping data into buckets depending on their hash values, allowing for quick and efficient data retrieval. They will always be in good working order.

Duplicate keys, on the other hand, cannot be stored in a bucket. As a result, some overhead will always exist. However, thus far, the benefits of employing hash indexes exceed the disadvantages.

Key takeaway

A hash index is a technique for expediting the search process. Traditional indexes require you to scan each row to ensure that your query is successful.

In the past, using a function on an indexed column in the where clause of a query ensured that no index was utilised. To address this issue, developed Function - Based Indexes. Instead of indexing a column, you index the function on that column, which stores the function's product rather than the original column data. When a query that could benefit from that index is sent to the server, the query is changed to allow the index to be used.

The default index type is a b-tree index, which uses a tree-like structure to generate an index on one or more columns. A bitmap index is a two-dimensional map of the rows and values in a given column.

A function-based index, on the other hand, is built using the results of a function or expression.

You can establish an index based on a function or expression with function-based indexes. The person who creates the index specifies the function or expression's value, which is then put in the index. Multiple columns, arithmetic expressions, or a PL/SQL function or C callout can all be used in function-based indexes.

Syntax

The CREATE INDEX command is used.

CREATE INDEX index_name

ON table_name(function(column_name));

This command's parameters are as follows:

● index name - You can give your new index a name here.

● table name - The name of the table on which a new index will be created.

● function - This is the function that is being applied to the column.

● column name - This is the name of the column in the table on which you're conducting the index function.

Key takeaway

The result of a function applied to one or more columns of a single table is used to define a functional index. Functional indexes can be utilised to provide quick access to data based on function call results.

Bitmap indexing is a sort of database indexing in which bitmaps are used. This strategy is employed in large databases where columns have a low cardinality and are regularly used in queries.

It's a sort of indexing that's based on a single key. It is, however, designed to swiftly execute searches on numerous keys. Before we can use bitmap indexing, we need to arrange the records in a sequential order. It simplifies the process of retrieving a specific record from the block. It is also simple to allocate them in a file's block.

The bit is the lowest unit for representing data with values of 0 or 1. It's the bit arrays that perform bitwise logical operations on bitmaps to answer queries. Indexing is a data structure that arranges data according to a set of rules. When a column has a low cardinality and is frequently used in a query, this bitmap indexing approach is used in large databases. Low cardinality columns are those that have a small number of unique values. We will explore why it is necessary, as well as its applications, benefits, and drawbacks in today's IT world.

It is used in data warehousing applications with a large amount of data and complex queries but low-level transactions. It aids in reducing response time for complex queries, space reduction compared to other indexing techniques, increased hardware performance with fewer CPUs and memory, and effective maintenance for such applications. Low cardinality columns have multiple distinct values, either absolute or proportional to the amount of data entries. Low cardinality can also take on Boolean values, such as true or false.

The index gives you a list of all the rows in a table that have a specific key value. A conventional index keeps a list of row ids for each key in the same row as the key-value row, but a key-value index saves each key-value instead of its list of row ids.

Structure of Bitmap

The words 'bitmap' is made up of the words 'bit' and 'map.' In a computer system, a bit is the smallest unit of data. A map is a visual representation of how things are organised. As a result, a bitmap is essentially an array of bits that have been mapped. Each attribute in a relation has its own bitmap for its value. A bitmap has enough bits to number each record in a block.

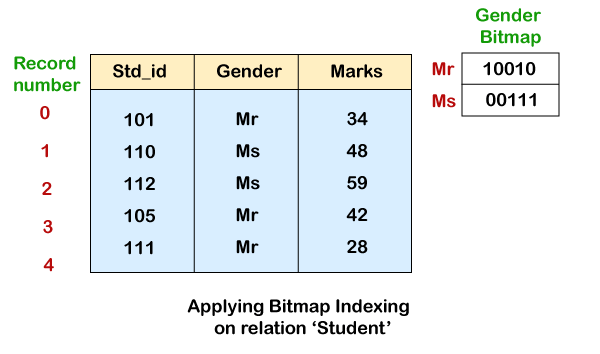

Consider the Student record relation, where we want to find out which female and male students have an English score of greater than 40. The gender bitmaps are shown in the figure below.

When the value of a record is set to Mr, the bitmap's ithbit will be set to 1. All remaining bits in the bitmap for Mr will be set to 0. In the case of female students, the identical procedure is followed. If the value is set to Ms for a particular j record, the jth bit in the bitmap will be set to 1. For Ms, all other bits will be set to 0. Now, a user just needs to read all the records in the relation to retrieve either a single or all records for female students or male students, i.e., value as Mr or Ms. Select the required records for Mr. Or Ms. After reading.

The bitmap indexing, on the other hand, makes it difficult to swiftly choose entries. However, it allows users to read and select only the records they need. As can be seen in the sample above, the user only picked the required records for female or male students.

Advantages

● When fewer cardinality columns are utilised in querying often, it aids in speedier data retrieval.

● Even if the table has a large number of records, it is efficient.

● Incorporating table columns into insertion, updating, and deletion actions would be far more efficient.

● When running a query, multiple indices can be combined.

● On a temporary table, a Bitmap join index can be built.

Disadvantages

● For databases with fewer records, bitmap indexing is ineffective because DBMSs demand a full scan of the table instead of using bitmap indexing.

● Multiple insertions, updates, and deletions from various users can result in a stalemate.

● Bitmap indexing costs time to perform DML operations because it takes time to complete the transaction and update the bitmap index.

● Maintaining a large number of records can be time consuming.

● If the user inputs a new record, it must be changed throughout, which is time-consuming and complicated.

● When utilising the bitmap join index, only one table can be modified by many transactions.

● The table's UNIQUE property prevents it from being created.

Key takeaway

Bitmap indexing is a sort of database indexing in which bitmaps are used. This strategy is employed in large databases where columns have a low cardinality and are regularly used in queries.

Functional Dependency (FD) is a constraint in a database management system that specifies the relationship of one attribute to another attribute (DBMS). Functional Dependency helps to maintain the database's data quality. Finding the difference between good and poor database design plays a critical role.

The arrow "→" signifies a functional dependence. X→ Y is defined by the functional dependence of X on Y.

Rules of functional dependencies

The three most important rules for Database Functional Dependence are below:

- Reflexive law: X holds a value of Y if X is a set of attributes and Y is a subset of X.

- Augmentation rule: If x -> y holds, and c is set as an attribute, then ac -> bc holds as well. That is to add attributes that do not modify the fundamental dependencies.

- Transitivity law: If x -> y holds and y -> z holds, this rule is very similar to the transitive rule in algebra, then x -> z also holds. X -> y is referred to as functionally evaluating y.

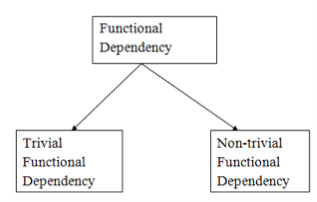

Types of functional dependency

Fig 14: Types of functional dependency

1. Trivial functional dependency

- A → B has trivial functional dependency if B is a subset of A.

- The following dependencies are also trivial like: A → A, B → B

Example

Consider a table with two columns Employee_Id and Employee_Name.

{Employee_id, Employee_Name} → Employee_Id is a trivial functional dependency as

Employee_Id is a subset of {Employee_Id, Employee_Name}.

Also, Employee_Id → Employee_Id and Employee_Name → Employee_Name are trivial dependencies too.

2. Non - trivial functional dependencies

- A → B has a non-trivial functional dependency if B is not a subset of A.

- When A intersection B is NULL, then A → B is called as complete non-trivial.

Example:

ID → Name,

Name → DOB

Key takeaway:

- The arrow "→" signifies a functional dependence.

- Functional Dependency helps to maintain the database's data quality.

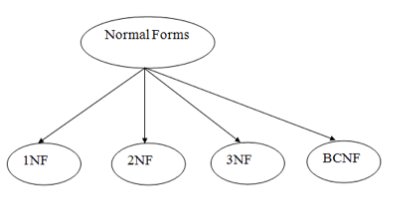

Normalization is often executed as a series of different forms. Each normal form has its own properties. As normalization proceeds, the relations become progressively more restricted in format, and also less vulnerable to update anomalies. For the relational data model, it is important to bring the relation only in first normal form (1NF) that is critical in creating relations. All the remaining forms are optional.

A relation R is said to be normalized if it does not create any anomaly for three basic operations: insert, delete and update.

Fig 15: Types of normal forms

1NF

If a relation has an atomic value, it is in 1NF.

2NF

If a relation is in 1NF and all non-key attributes are fully functioning and dependent on the primary key, it is in 2NF.

3NF

If a relation is in 2NF and there is no transition dependency, it is in 3NF.

4NF

If a relation is in Boyce Codd normal form and has no multivalued dependencies, it is in 4NF.

5NF

If a relation is in 4NF and does not contain any join dependencies, it is in 5NF, and joining should be lossless.

Objectives of Normalization

- It is used to delete redundant information and anomalies in the database from the relational table.

- Normalization improves by analyzing new data types used in the table to reduce consistency and complexity.

- Dividing the broad database table into smaller tables and connecting them using a relationship is helpful.

- This avoids duplicating data into a table or not repeating classes.

- It decreases the probability of anomalies in a database occurring.

Key takeaway:

- Normalization is a method of arranging the database data to prevent data duplication, anomaly of addition, anomaly of update & anomaly of deletion.

- Standard types are used in database tables to remove or decrease redundancies.

References:

- “Database System Concepts”, 6th Edition by Abraham Silberschatz, Henry F. Korth, S. Sudarshan, McGraw-Hill

- “Principles of Database and Knowledge – Base Systems”, Vol 1 by J. D. Ullman, Computer Science Press

- “Fundamentals of Database Systems”, 5th Edition by R. Elmasri and S. Navathe, Pearson Education