UNIT 1

Fundamentals of Programming

... Mastering more than one language is often a watershed in the career of a professional programmer. Once a programmer realizes that programming principles transcend the syntax of any specific language, the doors swing open to knowledge that truly makes a difference in quality and productivity. — Steve McConnell

Studying programming languages will help you be better at your job, make more money, and be a happier, more fulfilled and more informed citizen, because you’ll learn to:

- choose the best way of doing a given task

- utilize some of your language’s non-obvious, powerful features

- simulate useful (and powerful) features from other languages if your language lacks them

- write elegant code

- understand obscure features

- understand weird error messages

- understand and diagnose unexpected behaviour

- understand the performance implications of doing things a certain way

- actually use a debugger effectively

You may also find yourself designing a small language of your own, for example:

- A query language for database access

- A query language for a search engine

- A calculator

- A console interface to an adventure game


Ever since the invention of Charles Babbage’s difference engine in 1822, computers have required a means of instructing them to perform a specific task. This means is known as a programming language. Computer languages were first composed of a series of steps to wire a particular program; these morphed into a series of steps keyed into the computer and then executed; later these languages acquired advanced features such as logical branching and object orientation. The computer languages of the last fifty years have come in two stages, the first major languages and the second major languages, which are in use today.

In the beginning, Charles Babbage’s difference engine could only be made to execute tasks by changing the gears which executed the calculations. Thus, the earliest form of a computer language was physical motion. Eventually, physical motion was replaced by electrical signals when the US Government built the ENIAC, which was completed in 1945. It followed many of the same principles as Babbage’s engine and hence could only be “programmed” by presetting switches and rewiring the entire system for each new “program” or calculation. This process proved to be very tedious.

In 1945, John Von Neumann was working at the Institute for Advanced Study. He developed two important concepts that directly affected the path of computer programming languages. The first was known as “shared-program technique” (www.softlord.com). This technique stated that the actual computer hardware should be simple and not need to be hand-wired for each program. Instead, complex instructions should be used to control the simple hardware, allowing it to be reprogrammed much faster.

The second concept was also extremely important to the development of programming languages. Von Neumann called it “conditional control transfer” (www.softlord.com). This idea gave rise to the notion of subroutines, or small blocks of code that could be jumped to in any order, instead of a single set of chronologically ordered steps for the computer to take. The second part of the idea stated that computer code should be able to branch based on logical statements such as IF (expression) THEN, and to loop, as with a FOR statement. “Conditional control transfer” gave rise to the idea of “libraries,” which are blocks of code that can be reused over and over. (Updated Aug 1 2004: Around this time, Konrad Zuse, a German, was inventing his own computing systems independently and developed many of the same concepts, both in his machines and in the Plankalkül programming language. Alas, his work did not become widely known until much later.)

In 1949, a few years after Von Neumann’s work, the language Short Code appeared (www.byte.com). It was the first computer language for electronic devices and it required the programmer to change its statements into 0’s and 1’s by hand. Still, it was the first step towards the complex languages of today. In 1951, Grace Hopper wrote the first compiler, A-0 (www.byte.com). A compiler is a program that turns the language’s statements into 0’s and 1’s for the computer to understand. This led to faster programming, as the programmer no longer had to do the work by hand.

In 1957, the first of the major languages appeared in the form of FORTRAN. Its name stands for Formula Translating system. The language was designed at IBM for scientific computing. The components were very simple, and provided the programmer with low-level access to the computer’s innards. Today, this language would be considered restrictive as it only included IF, DO, and GOTO statements, but at the time, these commands were a big step forward. The basic types of data in use today got their start in FORTRAN; these included logical variables (TRUE or FALSE), and integer, real, and double-precision numbers.

Though FORTRAN was good at handling numbers, it was not so good at handling input and output, which mattered most to business computing. Business computing started to take off in 1959, and because of this, COBOL was developed. It was designed from the ground up as the language for businessmen. Its only data types were numbers and strings of text. It also allowed for these to be grouped into arrays and records, so that data could be tracked and organized better. It is interesting to note that a COBOL program is built in a way similar to an essay, with four major sections (called divisions) that build into an elegant whole. COBOL statements also have a very English-like grammar, making it quite easy to learn. All of these features were designed to make it easier for the average business to learn and adopt it.

(Updated Aug 11 2004) In 1958, John McCarthy of MIT created the LISt Processing (or LISP) language. It was designed for Artificial Intelligence (AI) research. Because it was designed for a specialized field, the original release of LISP had a unique syntax: essentially none. Programmers wrote code in parse trees, which are usually a compiler-generated intermediary between higher syntax (such as in C or Java) and lower-level code. Another obvious difference between this language (in original form) and other languages is that the basic and only type of data is the list; in the mid-1960’s, LISP acquired other data types. A LISP list is denoted by a sequence of items enclosed by parentheses. LISP programs themselves are written as a set of lists, so that LISP has the unique ability to modify itself, and hence grow on its own. The LISP syntax was known as “Cambridge Polish,” as it was very different from standard Boolean logic (Wexelblat, 177).

LISP remains in use today because of its highly specialized and abstract nature.
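To make the idea of programs written as lists concrete, here is a minimal sketch in Python rather than LISP: nested Python lists stand in for LISP lists, so `["+", 1, ["*", 2, 3]]` plays the role of the LISP expression `(+ 1 (* 2 3))`. The `evaluate` function and the small operator table are hypothetical helpers written only for this illustration; a real LISP system does far more.

```python
import operator

# A LISP-style expression (+ 1 (* 2 3)) written as nested Python lists:
# the first element names the operation, the rest are its arguments.
program = ["+", 1, ["*", 2, 3]]

OPERATORS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(expr):
    """Evaluate a nested-list expression written in prefix (Polish) order."""
    if not isinstance(expr, list):
        return expr                      # a number evaluates to itself
    op, *args = expr
    values = [evaluate(arg) for arg in args]
    result = values[0]
    for value in values[1:]:
        result = OPERATORS[op](result, value)
    return result


print(evaluate(program))  # 7

# The program is ordinary list data, so other code can rewrite it.
program[0] = "*"
print(evaluate(program))  # 6
```

Because the program is just data, changing its first element changes what it computes, a small-scale version of the self-modification described above.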

The Algol language was created by a committee for scientific use in 1958. Its major contribution is being the root of the tree that has led to such languages as Pascal, C, C++, and Java. It was also the first language with a formal grammar, known as Backus-Naur Form or BNF (McGraw-Hill Encyclopaedia of Science and Technology, 454). Though Algol implemented some novel concepts, such as recursive calling of functions, the next version of the language, Algol 68, became bloated and difficult to use (www.byte.com). This led to the adoption of smaller and more compact languages, such as Pascal.

Pascal was begun in 1968 by Niklaus Wirth. It was developed mainly out of the need for a good teaching tool. In the beginning, the language designers had no expectation that it would enjoy widespread adoption. Instead, they concentrated on developing good tools for teaching, such as a debugger and an editing system, and on support for the common early microprocessor machines that were in use in teaching institutions.

Pascal was designed in a very orderly way: it combined many of the best features of the languages in use at the time, COBOL, FORTRAN, and ALGOL. While doing so, it cleaned up many of the irregularities and oddball statements of these languages, which helped it gain users (Bergin, 100-101). The combination of features, input/output and solid mathematical features, made it a highly successful language. Pascal also improved the “pointer” data type, a very powerful feature of any language that implements it. It also added a CASE statement, which allowed instructions to branch like a tree in such a manner:

CASE expression OF
    possible-expression-value-1:
        statements to execute...
    possible-expression-value-2:
        statements to execute...
END

Pascal also helped the development of dynamic variables, which could be created while a program was being run, through the NEW and DISPOSE commands. However, Pascal did not implement dynamic arrays, or groups of variables, which proved to be needed and led to its downfall (Bergin, 101-102). Wirth later created a successor to Pascal, Modula-2, but by the time it appeared, C was gaining popularity and users at a rapid pace.

C was developed in 1972 by Dennis Ritchie while he was working at Bell Labs in New Jersey. The transition in usage from the first major languages to the major languages of today occurred with the transition between Pascal and C. Its direct ancestors are B and BCPL, but its similarities to Pascal are quite obvious. All of the features of Pascal, including the new ones such as the CASE statement (C’s switch statement), are available in C. C uses pointers extensively and was built to be fast and powerful at the expense of being hard to read. But because it fixed most of the mistakes Pascal had, it won over former Pascal users quite rapidly.

Ritchie developed C for the new Unix system being created at the same time. Because of this, C and Unix go hand in hand. Unix gives C such advanced features as dynamic variables, multitasking, interrupt handling, forking, and strong low-level input/output. Because of this, C is very commonly used to program operating systems such as Unix, Windows, Mac OS, and Linux.

In the late 1970’s and early 1980’s, a new programming method was being developed. It was known as Object Oriented Programming, or OOP. Objects are pieces of data that can be packaged and manipulated by the programmer. Bjarne Stroustrup liked this method and developed extensions to C known as “C with Classes.” This set of extensions developed into the full-featured language C++, which took that name in 1983.

C++ was designed to organize the raw power of C using OOP, but maintain the speed of C and be able to run on many different types of computers. C++ is often used in simulations, such as games. C++ provides an elegant way to track and manipulate hundreds of instances of people in elevators, or armies filled with different types of soldiers. For several years it was also the language used in AP Computer Science courses, which have since moved to Java.

In the early 1990’s, interactive TV was the technology of the future. Sun Microsystems decided that interactive TV needed a special portable language (one that can run on many types of machines). This language eventually became Java. In 1994, the Java project team changed their focus to the web, which was becoming “the cool thing” after interactive TV failed. The next year, Netscape licensed Java for use in its internet browser, Navigator. At this point, Java became the language of the future, and several companies announced applications which would be written in Java, none of which came into use.

Though Java has very lofty goals and is a textbook example of a good language, it may be the “language that wasn’t.” Its early implementations had serious optimization problems, meaning that programs written in it ran very slowly. And Sun hurt Java’s acceptance by engaging in political battles over it with Microsoft. But Java may wind up as the instructional language of tomorrow, as it is truly object-oriented and implements advanced techniques such as true portability of code and garbage collection.

Visual Basic, often taught as a first programming language today, is based on the BASIC language developed in 1964 by John Kemeny and Thomas Kurtz. BASIC is a very limited language and was designed for non-computer science people. Statements are chiefly run sequentially, but program control can change based on IF..THEN and GOSUB statements; GOSUB executes a certain block of code and then returns to the original point in the program’s flow.

Microsoft has extended BASIC in its Visual Basic (VB) product. The heart of VB is the form, or blank window on which you drag and drop components such as menus, pictures, and slider bars. These items are known as “widgets.” Widgets have properties (such as their color) and events (such as clicks and double-clicks) and are central to building any user interface today in any language. VB is most often used today to create quick and simple interfaces to other Microsoft products such as Excel and Access without needing a lot of code, though it is possible to create full applications with it.

Perl has often been described as the “duct tape of the Internet,” because it is most often used as the engine for a web interface or in scripts that modify configuration files. It has very strong text matching functions which make it ideal for these tasks. Perl was developed by Larry Wall in 1987 because the Unix sed and awk tools (used for text manipulation) were no longer strong enough to support his needs. Depending on whom you ask, Perl stands for Practical Extraction and Report Language or Pathologically Eclectic Rubbish Lister.

Programming languages have been under development for years and will remain so for many years to come. They got their start with a list of steps to wire a computer to perform a task. These steps eventually found their way into software and began to acquire newer and better features. The first major languages were characterized by the simple fact that they were intended for one purpose and one purpose only, while the languages of today are differentiated by the way they are programmed in, as they can be used for almost any purpose. And perhaps the languages of tomorrow will be more natural with the invention of quantum and biological computers.


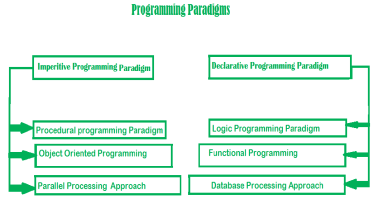

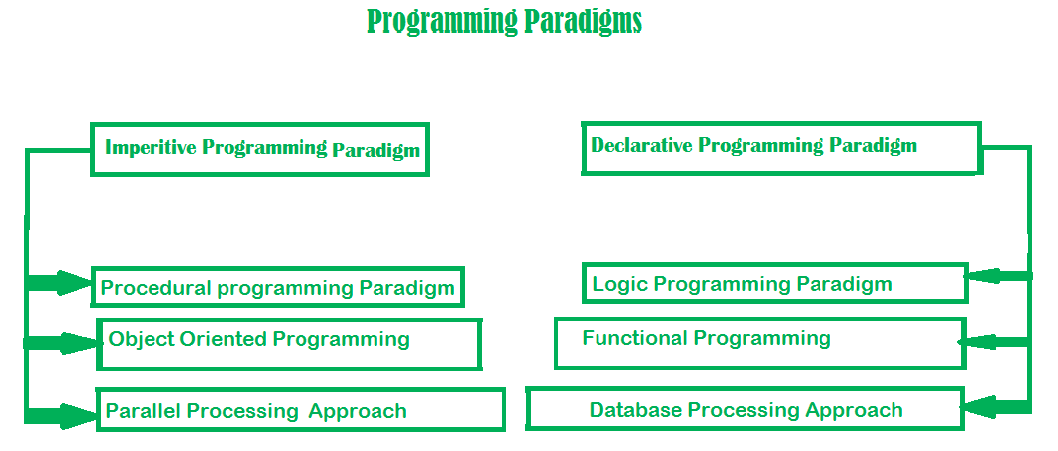

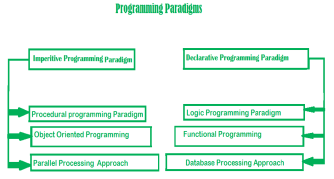

A paradigm can be described as a method for solving a problem or performing a task. A programming paradigm is an approach to solving a problem with a programming language; in other words, it is a method of solving a problem with the tools and techniques available to us, following a particular approach. Many programming languages are known, and every one of them follows some strategy when it is implemented; this methodology or strategy is its paradigm. Besides the variety of programming languages, there are many paradigms, each meeting different demands. They are discussed below:

Fig 1 – Programming paradigms

1. Imperative programming paradigm:

It is one of the oldest programming paradigms. It is closely related to machine architecture and is based on the Von Neumann architecture. It works by changing the program state through assignment statements, performing a task step by step. The main focus is on how to achieve the goal. A program consists of a sequence of statements, and the result is stored after all of them have executed.

Advantage:

Disadvantage:

Examples of Imperative programming paradigm:

C: developed by Dennis Ritchie (building on Ken Thompson’s B language)

Fortran: developed by John Backus for IBM

BASIC: developed by John G. Kemeny and Thomas E. Kurtz

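// Average of five numbers in C
#include <stdio.h>

int main(void) {
    int marks[5] = { 12, 32, 45, 13, 19 };
    int sum = 0;
    float average = 0.0f;

    for (int i = 0; i < 5; i++) {
        sum = sum + marks[i];      /* each assignment changes the program state */
    }
    average = sum / 5.0f;          /* divide by 5.0f so the fractional part is kept */
    printf("Average: %.2f\n", average);
    return 0;
}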

Imperative programming is divided into three broad categories: Procedural, OOP and parallel processing. These paradigms are as follows:

Procedural programming paradigm –

This paradigm emphasizes procedures in terms of the underlying machine model. It differs little from the imperative approach in general; its main addition is grouping steps into procedures that can be reused. This ability to reuse code was a great benefit at the time the paradigm came into use.

Examples of Procedural programming paradigm:

Pascal: developed by Niklaus Wirth

#include <iostream>
using namespace std;

// Factorial of a number, computed step by step inside main()
int main() {
    int i, fact = 1, num;
    cout << "Enter any Number: ";
    cin >> num;
    for (i = 1; i <= num; i++) {
        fact = fact * i;   // int overflows for num > 12
    }
    cout << "Factorial of " << num << " is: " << fact << endl;
    return 0;
}

Then comes OOP.

Object oriented programming –

The program is written as a collection of classes and objects that communicate with one another. The object is the smallest basic entity, and all computation is performed on objects. The emphasis is on data rather than procedure. This approach can model almost any kind of real-life problem encountered today.

Advantages:

Examples of Object Oriented programming paradigm:

Simula: first OOP language

Java: developed by James Gosling at Sun Microsystems

C++: developed by Bjarne Stroustrup

Objective-C: designed by Brad Cox

Visual Basic .NET: developed by Microsoft

Python: developed by Guido van Rossum

Ruby: developed by Yukihiro Matsumoto

Smalltalk: developed by Alan Kay, Dan Ingalls, Adele Goldberg

class GFG {
    public static void main(String[] args) {
        System.out.println("GfG!");
        Signup s1 = new Signup();
        s1.create(22, "riya", "riya2@gmail.com", 'F', 89002);
    }
}

class Signup {
    int userid;
    String name;
    String emailid;
    char sex;
    long mob;

    // Store the caller's values in this object's fields
    public void create(int userid, String name, String emailid, char sex, long mob) {
        System.out.println("Welcome to GeeksforGeeks\nLet's create your account\n");
        this.userid = userid;
        this.name = name;
        this.emailid = emailid;
        this.sex = sex;
        this.mob = mob;
        System.out.println("your account has been created");
    }
}

Parallel processing approach –

Parallel processing is the processing of program instructions by dividing them among multiple processors. A parallel processing system has many processors, with the objective of running a program in less time by dividing the work among them. This approach resembles divide and conquer. Examples include NESL (one of the oldest) and C/C++, which support parallelism through library functions.
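The divide-and-conquer idea can be sketched in Python with the standard `concurrent.futures` module: split the data into chunks, give each chunk to a worker, and combine the partial results. The function names are hypothetical. One caveat: in CPython, threads do not run Python bytecode on several processors at once, so this thread-based version shows the structure of the approach rather than a real speedup; for CPU-bound work, `ProcessPoolExecutor` would be the usual substitute.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_sum(chunk):
    """The work given to one worker: add up its share of the data."""
    return sum(chunk)


def parallel_sum(data, workers=4):
    """Divide data into chunks, sum the chunks concurrently, then combine the results."""
    if not data:
        return 0
    workers = max(1, workers)
    size = (len(data) + workers - 1) // workers   # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(chunk_sum, chunks))   # divide: one chunk per task
    return sum(partial_sums)                               # conquer: combine partial results


print(parallel_sum(list(range(1, 101))))   # 5050
```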

2. Declarative programming paradigm:

It is divided into logic, functional and database programming. In computer science, declarative programming is a style of building programs that expresses the logic of a computation without describing its control flow. It often treats programs as theories in some logic, and it may simplify writing parallel programs. The focus is on what needs to be done rather than how it should be done: the code declares the result we want rather than how it is to be produced. This is the key difference between the imperative (how to do) and declarative (what to do) programming paradigms. Going deeper, we look at logic, functional and database programming.
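A small Python sketch of the difference, using the marks from the earlier C example and a threshold of 15 chosen only for illustration: the first version spells out how to compute the result by updating a variable step by step, while the second only describes which values to add up. Python is not a purely declarative language, so this shows a declarative style rather than a declarative language.

```python
marks = [12, 32, 45, 13, 19]

# Imperative style: describe HOW, updating the state of `total` step by step.
total = 0
for mark in marks:
    if mark >= 15:
        total = total + mark

# Declarative style: describe WHAT is wanted, the sum of the marks of at least 15.
total_declarative = sum(mark for mark in marks if mark >= 15)

print(total, total_declarative)   # 96 96
```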

Logic programming paradigm –

It can be described as an abstract model of computation. It solves logical problems such as puzzles and series. In logic programming we have a knowledge base that is known in advance; given a question together with that knowledge base, the machine produces a result. Ordinary programming languages have no such concept of a knowledge base, but in artificial intelligence and machine learning some models, such as the Perception model, use the same mechanism.

In logic programming the main emphasis is on the knowledge base and the problem. The execution of a program is very much like the proof of a mathematical statement. Prolog is an example:

sum(0, 0).
sum(N, R) :- N > 0, N1 is N - 1, sum(N1, R1), R is R1 + N.
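The knowledge-base-plus-question idea can be imitated in Python, as a rough sketch only: a set of `parent` facts plays the role of the knowledge base, a rule derives `grandparent` facts from them, and a query asks which facts match. The names and facts are hypothetical, and a real logic language such as Prolog answers queries through unification and backtracking rather than by computing every derivable fact up front as this sketch does.

```python
# Knowledge base: facts parent(X, Y), stored as (X, Y) pairs.
FACTS = {("tom", "bob"), ("bob", "ann"), ("bob", "pat")}


def grandparent_pairs(parent_facts):
    """Rule: grandparent(X, Z) holds if parent(X, Y) and parent(Y, Z) hold."""
    return {
        (x, z)
        for (x, y) in parent_facts
        for (y2, z) in parent_facts
        if y == y2
    }


def query_grandchildren(person):
    """Question: for which Z does grandparent(person, Z) hold?"""
    return sorted(z for (x, z) in grandparent_pairs(FACTS) if x == person)


print(query_grandchildren("tom"))   # ['ann', 'pat']
```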

Functional programming paradigm –

The functional programming paradigm has its roots in mathematics and is language independent. Its key principle is the execution of a series of mathematical functions. The central model of abstraction is the function, meant for some specific computation, rather than the data structure. Data are loosely coupled to functions, and functions hide their implementation. A function call can be replaced with its value without changing the meaning of the program. Languages such as Perl and JavaScript support this style.
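A minimal Python sketch of this style, with hypothetical function names: small pure functions are combined with `filter`, `map` and `functools.reduce` instead of a loop that updates a variable. Python supports this style without being a purely functional language.

```python
from functools import reduce


def square(x):
    """Pure function: the result depends only on x, and no state is changed."""
    return x * x


def is_even(x):
    return x % 2 == 0


numbers = (1, 2, 3, 4, 5, 6)

# Keep the even numbers, square them, then fold the squares into one sum.
sum_of_even_squares = reduce(lambda acc, x: acc + x, map(square, filter(is_even, numbers)), 0)
print(sum_of_even_squares)   # 56

# Referential transparency: the call square(3) can be replaced by its value, 9.
assert square(3) + square(3) == 9 + 9
```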

Examples of Functional programming paradigm:

JavaScript: developed by Brendan Eich

Haskell: developed by Lennart Augustsson, Dave Barton

Scala: developed by Martin Odersky

Erlang: developed by Joe Armstrong, Robert Virding

Lisp: developed by John McCarthy

ML: developed by Robin Milner

Clojure: developed by Rich Hickey

The next kind of approach is the database approach.

Database/Data driven programming approach –

This programming methodology is based on data and its movement. Program statements are defined by data rather than by a hard-coded series of steps. A database program is the heart of a business information system and provides file creation, data entry, update, query and reporting functions. Several programming languages have been developed mostly for database applications, for example SQL. Such languages are applied to streams of structured data for filtering, transforming, aggregating (such as computing statistics) or calling other programs, so they have wide application.

CREATE DATABASE Address;

CREATE TABLE Addr (
    PersonID int,
    LastName varchar(200),
    FirstName varchar(200),
    Address varchar(200),
    City varchar(200),
    State varchar(200)
);
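To see the declarative side of database programming in action, the sketch below uses Python’s built-in `sqlite3` module with an in-memory database. SQLite has no `CREATE DATABASE` statement (each database is a file, or here a block of memory), so only the table is created; the sample rows are hypothetical. The `SELECT` query states which rows and columns are wanted and leaves it to the database engine to work out how to find them.

```python
import sqlite3

# In-memory database; the table mirrors the Addr table defined above.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE Addr (
        PersonID int,
        LastName varchar(200),
        FirstName varchar(200),
        Address varchar(200),
        City varchar(200),
        State varchar(200)
    )
""")

# Hypothetical sample rows, for illustration only.
rows = [
    (1, "Smith", "Ann", "12 Elm St", "Springfield", "IL"),
    (2, "Jones", "Raj", "4 Oak Ave", "Austin", "TX"),
    (3, "Lee", "Kim", "9 Pine Rd", "Chicago", "IL"),
]
conn.executemany("INSERT INTO Addr VALUES (?, ?, ?, ?, ?, ?)", rows)

# Declarative query: say WHICH data is wanted; the engine decides HOW to get it.
illinois = conn.execute(
    "SELECT FirstName, City FROM Addr WHERE State = ? ORDER BY LastName", ("IL",)
).fetchall()
print(illinois)   # [('Kim', 'Chicago'), ('Ann', 'Springfield')]
conn.close()
```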


A programming language is an artificial language that can be used to control the behaviour of a machine, particularly a computer. Programming languages, like human languages, are defined through the use of syntactic and semantic rules, to determine structure and meaning respectively.

Programming languages are used to facilitate communication about the task of organizing and manipulating information, and to express algorithms precisely. Some authors restrict the term "programming language" to those languages that can express all possible algorithms; sometimes the term "computer language" is used for more limited artificial languages.

Thousands of different programming languages have been created, and new ones are created every year.

Authors disagree on the precise definition, but traits often considered important requirements and objectives of the language to be characterized as a programming language:

Non-computational languages, such as markup languages like HTML or formal grammars like BNF, are usually not considered programming languages. A common approach is to embed a programming language in the non-computational (host) language to express templates for the host language.

A prominent purpose of programming languages is to provide instructions to a computer. As such, programming languages differ from most other forms of human expression in that they require a greater degree of precision and completeness. When using a natural language to communicate with other people, human authors and speakers can be ambiguous and make small errors, and still expect their intent to be understood. However, computers do exactly what they are told to do, and cannot understand the code the programmer "intended" to write. The combination of the language definition, the program, and the program's inputs must fully specify the external behaviour that occurs when the program is executed.

Many languages have been designed from scratch, altered to meet new needs, combined with other languages, and eventually fallen into disuse. Although there have been attempts to design one "universal" computer language that serves all purposes, all of them have failed to be accepted in this role. The need for diverse computer languages arises from the diversity of contexts in which languages are used:

One common trend in the development of programming languages has been to add more ability to solve problems using a higher level of abstraction. The earliest programming languages were tied very closely to the underlying hardware of the computer. As new programming languages have developed, features have been added that let programmers express ideas that are more removed from simple translation into underlying hardware instructions. Because programmers are less tied to the needs of the computer, their programs can do more computing with less effort from the programmer. This lets them write more programs in the same amount of time.

Natural language processors have been proposed as a way to eliminate the need for a specialized language for programming. However, this goal remains distant and its benefits are open to debate. Edsger Dijkstra took the position that the use of a formal language is essential to prevent the introduction of meaningless constructs, and dismissed natural language programming as "foolish." Alan Perlis was similarly dismissive of the idea.

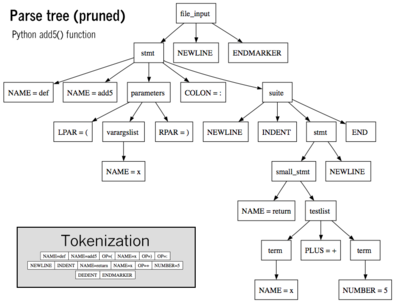

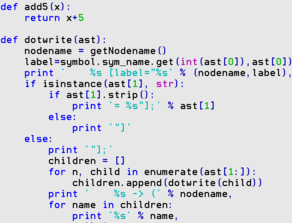

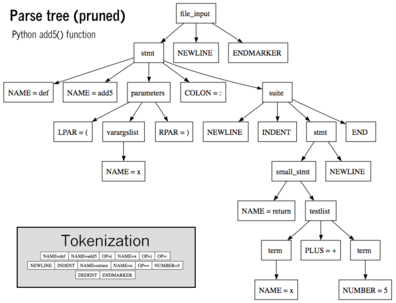

Fig 2 - Parse tree of Python code with inset tokenization

Syntax highlighting is often used to aid programmers in recognizing elements of source code. The language you see here is Python.

A programming language's surface form is known as its syntax. Most programming languages are purely textual; they use sequences of text including words, numbers, and punctuation, much like written natural languages. On the other hand, there are some programming languages which are more graphical in nature, using spatial relationships between symbols to specify a program.

The syntax of a language describes the possible combinations of symbols that form a syntactically correct program. The meaning given to a combination of symbols is handled by semantics. Since most languages are textual, this section discusses textual syntax.
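Python’s standard library exposes both levels of structure, so a short sketch can show them for one line of code: the `tokenize` module yields the stream of tokens (the lexical level), and the `ast` module builds a tree (the grammatical level). Note that `ast` produces an abstract syntax tree, a simplified form of the full parse tree; the sample source line is arbitrary.

```python
import ast
import io
import tokenize

source = "total = price * 2\n"

# Lexical level: the token strings, ignoring the end-of-line and end-of-input markers.
tokens = [
    tok.string
    for tok in tokenize.generate_tokens(io.StringIO(source).readline)
    if tok.type not in (tokenize.NEWLINE, tokenize.ENDMARKER)
]
print(tokens)   # ['total', '=', 'price', '*', '2']

# Grammatical level: the tree built from those tokens.
tree = ast.parse(source)
assign = tree.body[0]
print(type(assign).__name__)              # Assign
print(type(assign.value).__name__)        # BinOp
print(type(assign.value.op).__name__)     # Mult
print(ast.dump(assign.value))             # full structure of the right-hand side
```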

Programming language syntax is usually defined using a combination of regular expressions (for lexical structure) and Backus-Naur Form (for grammatical structure). Below is a simple grammar, based on Lisp:

expression ::= atom | list
atom ::= number | symbol
number ::= [+-]?['0'-'9']+
symbol ::= ['A'-'Z''a'-'z'].*
list ::= '(' expression* ')'

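This grammar specifies the following:

- an expression is either an atom or a list;
- an atom is either a number or a symbol;
- a number is an unbroken sequence of one or more decimal digits, optionally preceded by a plus or minus sign;
- a symbol is a letter followed by zero or more of any characters (in practice, excluding whitespace);
- a list is a matched pair of parentheses, with zero or more expressions inside it.

The following are examples of well-formed token sequences in this grammar: '12345', '()', '(a b c232 (1))'.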
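The grammar can be turned almost rule for rule into a recursive-descent parser. The Python sketch below is one possible reading of it, with two assumptions: a symbol ends at whitespace or a parenthesis (rather than matching `.*` literally), and numbers are converted to Python `int` values while lists become Python lists. The function names are hypothetical.

```python
import re

# Assumed token rules: "(" or ")", a signed integer, or a symbol that starts
# with a letter and runs until whitespace or a parenthesis.
TOKEN_RE = re.compile(r"\s*(?:(\()|(\))|([+-]?[0-9]+)|([A-Za-z][^\s()]*))")
NUMBER_RE = re.compile(r"[+-]?[0-9]+")


def tokenize(text):
    """Split text into tokens; raise SyntaxError on characters the grammar forbids."""
    text = text.strip()
    tokens, pos = [], 0
    while pos < len(text):
        found = TOKEN_RE.match(text, pos)
        if found is None:
            raise SyntaxError(f"unexpected character at position {pos}")
        tokens.append(found.group(found.lastindex))
        pos = found.end()
    return tokens


def parse_expression(tokens, i):
    """expression ::= atom | list. Returns (value, index of the next token)."""
    if i >= len(tokens):
        raise SyntaxError("unexpected end of input")
    token = tokens[i]
    if token == "(":
        # list ::= '(' expression* ')'
        items, i = [], i + 1
        while i < len(tokens) and tokens[i] != ")":
            item, i = parse_expression(tokens, i)
            items.append(item)
        if i >= len(tokens):
            raise SyntaxError("missing ')'")
        return items, i + 1
    if token == ")":
        raise SyntaxError("unexpected ')'")
    # atom ::= number | symbol
    if NUMBER_RE.fullmatch(token):
        return int(token), i + 1
    return token, i + 1


def parse(text):
    """Parse exactly one expression from text."""
    tokens = tokenize(text)
    value, i = parse_expression(tokens, 0)
    if i != len(tokens):
        raise SyntaxError("extra input after the expression")
    return value


for source in ["12345", "()", "(a b c232 (1))"]:
    print(source, "->", parse(source))
```

Each grammar rule becomes one branch of `parse_expression`, which is why recursive descent is a common first choice for small languages like this one.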

Not all syntactically correct programs are semantically correct. Many syntactically correct programs are nonetheless ill-formed, per the language's rules; and may (depending on the language specification and the soundness of the implementation) result in an error on translation or execution. In some cases, such programs may exhibit undefined behaviour. Even when a program is well-defined within a language, it may still have a meaning that is not intended by the person who wrote it.

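Using natural language as an example, it may not be possible to assign a meaning to a grammatically correct sentence, or the sentence may be false. For instance, "Colorless green ideas sleep furiously." is grammatically well formed but has no generally accepted meaning.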

The following C language fragment is syntactically correct, but performs an operation that is not semantically defined (because p is a null pointer, the operations p->real and p->im have no meaning):

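typedef struct { double real, im; } complex;   /* assumed definition of complex */
complex *p = NULL;
double abs_p = sqrt(p->real * p->real + p->im * p->im);   /* p is null: dereferencing it is undefined */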

A type system defines how a programming language classifies values and expressions into types, how it can manipulate those types and how they interact. This generally includes a description of the data structures that can be constructed in the language. The design and study of type systems using formal mathematics is known as type theory.

Internally, all data in modern digital computers are stored simply as zeros or ones (binary). The data typically represent information in the real world such as names, bank accounts and measurements, so the low-level binary data are organized by programming languages into these high-level concepts as data types. There are also more abstract types whose purpose is just to warn the programmer about semantically meaningless statements or verify safety properties of programs.

Languages can be classified with respect to their type systems.

A language is typed if operations defined for one data type cannot be performed on values of another data type. For example, "this text between the quotes" is a string. In most programming languages, dividing a number by a string has no meaning. Most modern programming languages will therefore reject any program attempting to perform such an operation. In some languages, the meaningless operation will be detected when the program is compiled ("static" type checking), and rejected by the compiler, while in others, it will be detected when the program is run ("dynamic" type checking), resulting in a runtime exception.

By contrast, an untyped language, such as most assembly languages, allows any operation to be performed on any data type. High-level languages that are untyped include BCPL and some varieties of Forth.

In practice, while few languages are considered typed from the point of view of type theory (verifying or rejecting all operations), most modern languages offer a degree of typing. Many production languages provide means to bypass or subvert the type system.

In static typing all expressions have their types determined prior to the program being run (typically at compile-time). For example, 1 and (2+2) are integer expressions; they cannot be passed to a function that expects a string, or stored in a variable that is defined to hold dates.

Statically-typed languages can be manifestly typed or type-inferred. In the first case, the programmer must explicitly write types at certain textual positions (for example, at variable declarations). In the second case, the compiler infers the types of expressions and declarations based on context. Most mainstream statically-typed languages, such as C++ and Java, are manifestly typed. Complete type inference has traditionally been associated with less mainstream languages, such as Haskell and ML. However, many manifestly typed languages support partial type inference; for example, Java and C# both infer types in certain limited cases.

Dynamic typing, also called latent typing, determines the type-safety of operations at runtime; in other words, types are associated with runtime values rather than textual expressions. As with type-inferred languages, dynamically typed languages do not require the programmer to write explicit type annotations on expressions. Among other things, this may permit a single variable to refer to values of different types at different points in the program execution. However, type errors cannot be automatically detected until a piece of code is actually executed, making debugging more difficult. Lisp, JavaScript, and Python are dynamically typed.
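A short Python sketch of both points, using hypothetical names: the same variable refers first to an `int` and then to a `str`, and a type error inside a branch goes unnoticed until that branch actually runs.

```python
x = 42
print(type(x).__name__)    # int
x = "forty-two"            # the same variable now refers to a str value
print(type(x).__name__)    # str


def add_one(value, enabled):
    """Add 1 to value, but only when enabled is true."""
    if enabled:
        return value + 1   # fails at run time if value is a str
    return value


print(add_one("text", enabled=False))   # text: the faulty branch never ran

try:
    add_one("text", enabled=True)
except TypeError as err:
    print("caught at run time:", type(err).__name__)   # caught at run time: TypeError
```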

Weak typing allows a value of one type to be treated as another, for example treating a string as a number. This can occasionally be useful, but it can also cause bugs; such languages are often termed unsafe. C, C++, and most assembly languages are often described as weakly typed.

Strong typing prevents such treatment: attempting to mix incompatible types raises an error. Strongly-typed languages are often termed type-safe or safe, but they do not make bugs impossible. Ada, Python, and ML are strongly typed.
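Python also illustrates strong typing. In the sketch below, adding a string to an integer raises an error rather than silently reinterpreting the string, so the programmer must convert explicitly. The helper `add_strict` is hypothetical.

```python
# Python is dynamically *and* strongly typed: it will not silently
# reinterpret a str as a number when the two are mixed.
def add_strict(a, b):
    return a + b


try:
    add_strict("1", 1)  # mixing str and int raises TypeError
except TypeError as err:
    print("refused:", err)

# Mixing types requires an explicit conversion chosen by the programmer.
print(add_strict(int("1"), 1))   # 2
print(add_strict("1", str(1)))   # 11 (string concatenation)
```

Strength is a matter of degree. Python still performs a few implicit numeric conversions: for example, `1 + 2.5` produces the float `3.5`. This is one reason usage of these terms varies.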

An alternative definition of "weakly typed" refers to languages, such as Perl, JavaScript, and C++, that permit a large number of implicit type conversions; Perl in particular can be characterized as a dynamically typed programming language in which type checking takes place at runtime. This capability is often useful but occasionally dangerous, as it permits operations whose operands can change type on demand.

Strong and static are generally considered orthogonal concepts, but usage in the literature differs. Some use the term strongly typed to mean strongly, statically typed, or, even more confusingly, to mean simply statically typed. Thus, C has been called both strongly typed and weakly, statically typed.

Once data has been specified, the machine must be instructed to perform operations on the data. The execution semantics of a language defines how and when the various constructs of a language should produce a program behaviour.

For example, the semantics may define the strategy by which expressions are evaluated to values, or the manner in which control structures conditionally execute statements.
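Python's execution semantics give a small example of such a rule. The language reference specifies that `x and y` evaluates `x` first, and evaluates `y` only if `x` is true (short-circuit evaluation). An `if` statement runs its body only when its condition is true. The sketch below records which operands are actually evaluated. The helper `check` is hypothetical.

```python
calls = []  # records which operands were actually evaluated


def check(name, result):
    calls.append(name)
    return result


# `and` evaluates its right operand only if the left operand is truthy.
value = check("left", False) and check("right", True)
print(value, calls)  # False ['left']

calls.clear()
# `if` executes its body only when the condition is truthy.
if check("cond", True):
    print("body ran")
print(calls)  # ['cond']
```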

Most programming languages have an associated core library (sometimes known as the 'Standard library', especially if it is included as part of the published language standard), which is conventionally made available by all implementations of the language. Core libraries typically include definitions for commonly used algorithms, data structures, and mechanisms for input and output.

A language's core library is often treated as part of the language by its users, although the designers may have treated it as a separate entity. Many language specifications define a core that must be made available in all implementations, and in the case of standardized languages this core library may be required. The line between a language and its core library therefore differs from language to language. Indeed, some languages are designed so that the meanings of certain syntactic constructs cannot even be described without referring to the core library. For example, in Java, a string literal is defined as an instance of the java.lang.String class; similarly, in Smalltalk, an anonymous function expression (a "block") constructs an instance of the library's BlockContext class. Conversely, Scheme contains multiple coherent subsets that suffice to construct the rest of the language as library macros, and so the language designers do not even bother to say which portions of the language must be implemented as language constructs, and which must be implemented as parts of a library.

A language's designers and users must construct a number of artifacts that govern and enable the practice of programming. The most important of these artifacts are the language specification and implementation.

The specification of a programming language is intended to provide a definition that language users and implementors can use to interpret the behaviour of programs when reading their source code.

A programming language specification can take several forms, including the following:

An implementation of a programming language provides a way to execute programs written in that language on one or more configurations of hardware and software. There are, broadly, two approaches to programming language implementation: compilation and interpretation. It is generally possible to implement a language using both techniques.

The output of a compiler may be executed by hardware or a program called an interpreter. In some implementations that make use of the interpreter approach there is no distinct boundary between compiling and interpreting. For instance, some implementations of the BASIC programming language compile and then execute the source a line at a time.

Programs that are executed directly on the hardware usually run much faster than those that are interpreted in software, often by an order of magnitude or more.

One technique for improving the performance of interpreted programs is just-in-time compilation. Here the virtual machine monitors which sequences of bytecode are frequently executed and translates them to machine code for direct execution on the hardware.


The first programming languages predate the modern computer. The 19th century had "programmable" looms and player piano scrolls, which implemented what are today recognized as examples of domain-specific programming languages. By the beginning of the twentieth century, punch cards encoded data and directed mechanical processing. In the 1930s and 1940s, the formalisms of Alonzo Church's lambda calculus and Alan Turing's Turing machines provided mathematical abstractions for expressing algorithms; the lambda calculus remains influential in language design.

In the 1940s, the first electrically powered digital computers were created. The computers of the early 1950s, notably the UNIVAC I and the IBM 701, used machine language programs. First-generation machine language programming was quickly superseded by a second generation of programming languages known as assembly languages. Later in the 1950s, assembly language programming, which had evolved to include the use of macro instructions, was followed by the development of three modern programming languages: FORTRAN, LISP, and COBOL. Updated versions of all of these are still in general use, and importantly, each has strongly influenced the development of later languages. At the end of the 1950s, the language formalized as Algol 60 was introduced, and most modern programming languages are, in many respects, descendants of Algol. The format and use of the early programming languages was heavily influenced by the constraints of the interface.

The period from the 1960s to the late 1970s brought the development of the major language paradigms now in use, though many aspects were refinements of ideas in the very first third-generation programming languages:

Each of these languages spawned an entire family of descendants, and most modern languages count at least one of them in their ancestry.

The 1960s and 1970s also saw considerable debate over the merits of structured programming, and whether programming languages should be designed to support it. Edsger Dijkstra, in a famous 1968 letter published in the Communications of the ACM, argued that GOTO statements should be eliminated from all "higher level" programming languages.

The 1960s and 1970s also saw the expansion of techniques that reduced the footprint of a program and improved the productivity of the programmer and user. The card deck for an early 4GL was much smaller than a 3GL deck expressing the same functionality.

The 1980s were years of relative consolidation. C++ combined object-oriented and systems programming. The United States government standardized Ada, a systems programming language intended for use by defence contractors. In Japan and elsewhere, vast sums were spent investigating so-called "fifth generation" languages that incorporated logic programming constructs. The functional languages community moved to standardize ML and Lisp. Rather than inventing new paradigms, all of these movements elaborated upon the ideas invented in the previous decade.

One important trend in language design during the 1980s was an increased focus on programming for large-scale systems through the use of modules, or large-scale organizational units of code. Modula-2, Ada, and ML all developed notable module systems in the 1980s. Module systems were often wedded to generic programming constructs.

The rapid growth of the Internet in the mid-1990s created opportunities for new languages. Perl, originally a Unix scripting tool first released in 1987, became common in dynamic Web sites. Java came to be used for server-side programming. These developments were not fundamentally novel; rather, they were refinements of existing languages and paradigms, largely based on the C family of programming languages.

Programming language evolution continues, in both industry and research. Current directions include security and reliability verification, new kinds of modularity (mixins, delegates, aspects), and database integration.

The 4GLs are examples of domain-specific languages, such as SQL, which manipulates and returns sets of data rather than the scalar values that are canonical to most programming languages. Perl, for example, with its 'here document' syntax, can hold multiple 4GL programs, as well as multiple JavaScript programs, within its own Perl code, and can use variable interpolation in the 'here document' to support multi-language programming.
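Python has no here document as such. A triple-quoted string serves a similar purpose, and an f-string adds variable interpolation. The sketch below uses hypothetical variable names to hold a short JavaScript program inside Python code and fill in values from the host program. It only builds the program text; it does not run it.

```python
import json

user_name = 'Ada "Countess" Lovelace'
visit_count = 3

# A triple-quoted f-string plays a role similar to a Perl here document:
# it holds a program written in another language (here JavaScript) and
# interpolates host-language values into it.
js_program = f"""
const name = {json.dumps(user_name)};
const visits = {visit_count};
console.log(name + " has visited " + visits + " times");
"""

print(js_program)
```

Quoting the interpolated string with `json.dumps` is a deliberate design choice. It produces a valid JavaScript string literal even when the value contains quotes. Pasting raw text into another language's source, by contrast, can break the program or open it to injection.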

There is no overarching classification scheme for programming languages. A given programming language does not usually have a single ancestor language. Languages commonly arise by combining the elements of several predecessor languages with new ideas in circulation at the time. Ideas that originate in one language will diffuse throughout a family of related languages, and then leap suddenly across familial gaps to appear in an entirely different family.

The task is further complicated by the fact that languages can be classified along multiple axes. For example, Java is both an object-oriented language (because it encourages object-oriented organization) and a concurrent language (because it contains built-in constructs for running multiple threads in parallel). Python is an object-oriented scripting language.

In broad strokes, programming languages divide into programming paradigms and a classification by intended domain of use. Paradigms include procedural programming, object-oriented programming, functional programming, and logic programming; some languages are hybrids of paradigms or multi-paradigmatic. An assembly language is not so much a paradigm as a direct model of an underlying machine architecture. By purpose, programming languages might be considered general purpose, system programming languages, scripting languages, domain-specific languages, or concurrent/distributed languages (or a combination of these). Some general purpose languages were designed largely with educational goals.

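A programming language can also be classified by its position in the Chomsky hierarchy. For example, the Thue programming language can recognize or define Type-0 languages in the Chomsky hierarchy. The syntax of most programming languages is largely described by Type-2 (context-free) grammars, although some rules, such as requiring a variable to be declared before it is used, go beyond what a context-free grammar can express.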
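To make the Type-2 claim concrete, here is a minimal recursive-descent parser in Python for a small, hypothetical arithmetic grammar. The rule `factor := '(' expr ')'` allows arbitrarily deep nesting of parentheses. A regular (Type-3) grammar cannot describe that, but a context-free grammar can. The grammar and the names `tokenize` and `Parser` are illustrative, not taken from any particular language.

```python
import re

# Hypothetical grammar for arithmetic expressions (context-free, Type-2):
#   expr   := term (('+' | '-') term)*
#   term   := factor (('*' | '/') factor)*
#   factor := NUMBER | '(' expr ')'
TOKEN = re.compile(r"\s*(\d+|[-+*/()])")


def tokenize(text):
    """Split the input into numbers and single-character operators."""
    tokens, pos, text = [], 0, text.rstrip()
    while pos < len(text):
        match = TOKEN.match(text, pos)
        if match is None:
            raise ValueError(f"unexpected character at position {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


class Parser:
    """Recursive-descent parser: one method per grammar rule."""

    def __init__(self, text):
        self.tokens = tokenize(text)
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def take(self):
        token = self.peek()
        self.pos += 1
        return token

    def parse(self):
        value = self.expr()
        if self.peek() is not None:
            raise ValueError(f"unexpected token {self.peek()!r}")
        return value

    def expr(self):
        value = self.term()
        while self.peek() in ("+", "-"):
            op, rhs = self.take(), self.term()
            value = value + rhs if op == "+" else value - rhs
        return value

    def term(self):
        value = self.factor()
        while self.peek() in ("*", "/"):
            op, rhs = self.take(), self.factor()
            value = value * rhs if op == "*" else value / rhs
        return value

    def factor(self):
        token = self.take()
        if token == "(":
            value = self.expr()  # recursion handles arbitrarily deep nesting
            if self.take() != ")":
                raise ValueError("expected ')'")
            return value
        if token is not None and token.isdigit():
            return int(token)
        raise ValueError(f"unexpected token {token!r}")


print(Parser("2 * (3 + 4)").parse())  # 14
```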

Key takeaway

A programming language is an artificial language that can be used to control the behaviour of a machine, particularly a computer. Programming languages, like human languages, are defined through the use of syntactic and semantic rules, to determine structure and meaning respectively.

Programming languages are used to facilitate communication about the task of organizing and manipulating information, and to express algorithms precisely. Some authors restrict the term "programming language" to those languages that can express all possible algorithms; sometimes the term "computer language" is used for more limited artificial languages.

Thousands of different programming languages have been created, and new ones are created every year.

Although environment setup is not an element of any programming language, it is the first step to take before setting out to write a program.

When we say environment setup, we simply mean a base on top of which we can do our programming. That is, we need the required software installed on our PC, which we will use to write, compile, and execute computer programs. For example, if you need to browse the Internet, then you need the following setup on your machine −

If you are a PC user, then you will recognize the following screenshot, which we took from Internet Explorer while browsing tutorialspoint.com.

Similarly, you will need the following setup to start programming in any programming language.

If you do not have sufficient exposure to computers, you may not be able to set up this software yourself. In that case, we suggest you ask a technical person near you to help set up the programming environment on your machine. Either way, it is important for you to understand what these items are.

A text editor is software that is used to write computer programs. Your Windows machine should already have Notepad, which can be used to type programs. You can launch it by following these steps −

Start Icon → All Programs → Accessories → Notepad → Mouse Click on Notepad

It will launch Notepad with the following window −

You can use this software to type your computer program and save it in a file at any location. You can download and install other good editors like Notepad++, which is freely available.

If you are a Mac user, then you will have TextEdit, or you can install a commercial editor such as BBEdit to start with.

You write your computer program using your favourite programming language and save it in a text file called the program file.

Now let us look in a little more detail at how the computer understands a program you have written in a programming language. The computer cannot directly understand a program given in text format, so we need to convert the program into a binary format that the computer can understand.

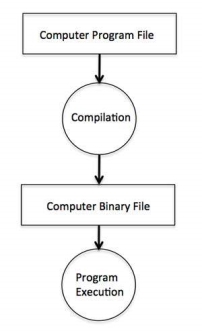

The conversion from a text program to a binary file is done by another piece of software called a compiler, and this process of converting a text-formatted program into a binary file is called program compilation. Finally, you can execute the binary file to perform the programmed task.
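Python is normally interpreted, but its built-in `compile()` function offers a small, observable analogue of the text-to-binary step. It turns program text into a code object containing bytecode for the CPython virtual machine. That bytecode is not native machine code. A C compiler, by contrast, produces native instructions for a specific processor, so treat this only as an illustration.

```python
import dis

source = "total = price * quantity"   # the program in text form

# compile() translates the text into a code object holding bytecode
# (bytes for CPython's virtual machine, not native machine code).
code = compile(source, "<example>", "exec")
print(type(code.co_code))   # <class 'bytes'>
dis.dis(code)               # human-readable listing of the bytecode

# Executing the translated form performs the programmed task.
namespace = {"price": 4, "quantity": 3}
exec(code, namespace)
print(namespace["total"])   # 12
```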

We will not go into the details of a compiler or the different phases of compilation here.

The following flow diagram gives an illustration of the process −

Fig 3 – Process state

So, if you are going to write your program in a language that needs compilation, such as C, C++, Java, or Pascal, you will need to install its compiler before you start programming.

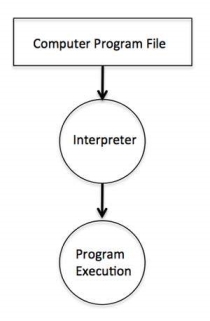

We have just discussed compilers and the compilation process. Compilers are required if you are going to write your program in a programming language that needs to be compiled into binary format before execution.

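There are other programming languages, such as Python, PHP, and Perl, that do not need to be compiled into a binary file before they run; instead, an interpreter reads such programs statement by statement and executes them directly, without a separate conversion step. (In practice, interpreters such as the standard Python implementation first translate the source into an internal bytecode, but this happens automatically.)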
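To show what an interpreter does, here is a minimal sketch of one for a tiny hypothetical language with three commands (`set`, `add`, `print`). Each line is read, decoded, and executed immediately; nothing is translated into a binary file first. The command names and the `run` function are invented for this illustration.

```python
def run(program):
    """Interpret a tiny, hypothetical language one line at a time.

    Supported statements:
        set NAME NUMBER   -> store a number in a variable
        add NAME NUMBER   -> add a number to a variable
        print NAME        -> print the variable and record its value
    """
    variables = {}
    output = []
    for line_no, line in enumerate(program.splitlines(), start=1):
        words = line.split()
        if not words:
            continue  # skip blank lines
        command, *args = words
        if command == "set":
            variables[args[0]] = int(args[1])
        elif command == "add":
            variables[args[0]] += int(args[1])
        elif command == "print":
            output.append(variables[args[0]])
            print(variables[args[0]])
        else:
            # A bad line is discovered only when the interpreter reaches it.
            raise ValueError(f"line {line_no}: unknown command {command!r}")
    return output


program = """
set x 5
add x 3
print x
"""
result = run(program)  # prints 8
```

An error in a line, such as an unknown command, is detected only when the interpreter reaches that line.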

Fig 4 – Flow Chart

So, if you are going to write your programs in PHP, Python, Perl, Ruby, etc., then you will need to install their interpreters before you start programming.

If you are not able to set up an editor, compiler, or interpreter on your machine, tutorialspoint.com provides a facility to compile and run almost all programs online with a single click.

So do not worry; let's proceed further and enjoy the experience of becoming a computer programmer in simple and easy steps.

Key takeaway

Although environment setup is not an element of any programming language, it is the first step to take before setting out to write a program.

When we say environment setup, we simply mean a base on top of which we can do our programming: the software installed on our PC that we use to write, compile, and execute computer programs.

Why Programming?

You may already have used software, perhaps for word processing or spreadsheets, to solve problems. Perhaps now you are curious to learn how programmers write software. A program is a set of step-by-step instructions that directs the computer to do the tasks you want it to do and produce the results you want.

There are at least three good reasons for learning programming:

A set of rules that provides a way of telling a computer what operations to perform is called a programming language. There is not, however, just one programming language; there are many. In this chapter you will learn about controlling a computer through the process of programming. You may even discover that you might want to become a programmer.

An important point before we proceed: You will not be a programmer when you finish reading this chapter or even when you finish reading the final chapter. Programming proficiency takes practice and training beyond the scope of this book. However, you will become acquainted with how programmers develop solutions to a variety of problems.

What Programmers Do

In general, the programmer's job is to convert problem solutions into instructions for the computer. That is, the programmer prepares the instructions of a computer program and runs those instructions on the computer, tests the program to see if it is working properly, and makes corrections to the program. The programmer also writes a report on the program. These activities are all done for the purpose of helping a user fill a need, such as paying employees, billing customers, or admitting students to college.

The programming activities just described could be done, perhaps, as solo activities, but a programmer typically interacts with a variety of people. For example, if a program is part of a system of several programs, the programmer coordinates with other programmers to make sure that the programs fit together well. If you were a programmer, you might also have coordination meetings with users, managers, systems analysts, and with peers who evaluate your work, just as you evaluate theirs.

Let us turn to the programming process.

The Programming Process

Developing a program involves steps similar to any problem-solving task. There are five main ingredients in the programming process:

Let us discuss each of these in turn.

1. Defining the Problem

Suppose that, as a programmer, you are contacted because your services are needed. You meet with users from the client organization to analyze the problem, or you meet with a systems analyst who outlines the project. Specifically, the task of defining the problem consists of identifying what it is you know (the input, or given data) and what it is you want to obtain (the output, or result). Eventually, you produce a written agreement that, among other things, specifies the kind of input, processing, and output required. This is not a simple process.

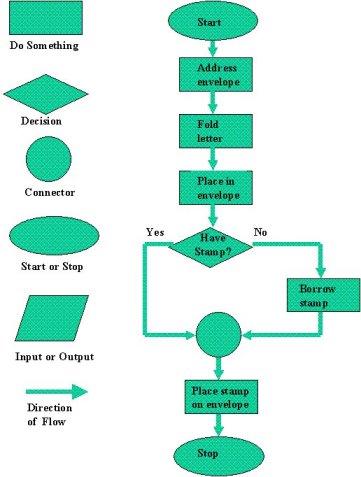

Figure 5: Flow Chart Symbols and Flow Chart For Mailing Letter

Two common ways of planning the solution to a problem are to draw a flowchart and to write pseudocode, or possibly both. Essentially, a flowchart is a pictorial representation of a step-by-step solution to a problem. It consists of arrows representing the direction the program takes and boxes and other symbols representing actions. It is a map of what your program is going to do and how it is going to do it. The American National Standards Institute (ANSI) has developed a standard set of flowchart symbols.

Figure 5 shows the symbols and how they might be used in a simple flowchart of a common everyday act: preparing a letter for mailing.

Pseudocode is an English-like nonstandard language that lets you state your solution with more precision than you can in plain English but with less precision than is required when using a formal programming language. Pseudocode permits you to focus on the program logic without having to be concerned just yet about the precise syntax of a particular programming language. However, pseudocode is not executable on the computer. We will illustrate these later in this chapter, when we focus on language examples.

3. Coding the Program

As the programmer, your next step is to code the program, that is, to express your solution in a programming language. You will translate the logic from the flowchart or pseudocode (or some other tool) to a programming language. As we have already noted, a programming language is a set of rules that provides a way of instructing the computer what operations to perform. There are many programming languages: BASIC, COBOL, Pascal, FORTRAN, and C are some examples. You may find yourself working with one or more of these. We will discuss the different types of languages in detail later in this chapter.

Although programming languages operate grammatically, somewhat like the English language, they are much more precise. To get your program to work, you have to follow exactly the rules (the syntax) of the language you are using. Of course, using the language correctly is no guarantee that your program will work, any more than speaking grammatically correct English means you know what you are talking about. The point is that correct use of the language is the required first step. Then your coded program must be keyed, probably using a terminal or personal computer, in a form the computer can understand.

One more note here: Programmers usually use a text editor, which is somewhat like a word processing program, to create a file that contains the program. However, as a beginner, you will probably want to write your program code on paper first.
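As a small illustration of this step, the sketch below takes a hypothetical payroll rule, states it in pseudocode, and then codes it in Python. Paying employees is one of the user needs mentioned earlier. The 40-hour threshold and the 1.5 overtime factor are illustrative assumptions, not rules from the text.

```python
# Pseudocode (hypothetical example):
#   READ hours, rate
#   IF hours > 40 THEN
#       pay = 40 * rate + (hours - 40) * rate * 1.5
#   ELSE
#       pay = hours * rate
#   END IF
#   WRITE pay

REGULAR_HOURS = 40
OVERTIME_FACTOR = 1.5


def gross_pay(hours, rate):
    """Return gross pay, paying overtime for hours beyond REGULAR_HOURS."""
    if hours > REGULAR_HOURS:
        overtime = hours - REGULAR_HOURS
        return REGULAR_HOURS * rate + overtime * rate * OVERTIME_FACTOR
    return hours * rate


print(gross_pay(38, 20.0))   # 760.0
print(gross_pay(45, 20.0))   # 950.0
```

Each pseudocode line maps onto one or two Python statements. The logic was settled before any syntax had to be chosen.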

4. Testing the Program

Some experts insist that a well-designed program can be written correctly the first time. In fact, they assert that there are mathematical ways to prove that a program is correct. However, the imperfections of the world are still with us, so most programmers get used to the idea that their newly written programs probably have a few errors. This is a bit discouraging at first, since programmers tend to be precise, careful, detail-oriented people who take pride in their work. Still, there are many opportunities to introduce mistakes into programs, and you, just as those who have gone before you, will probably find several of them.
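One everyday way programmers hunt for such errors is to write small automated tests. The sketch below uses Python's standard `unittest` module to check a hypothetical `average` function. It includes the easy-to-forget case of an empty list.

```python
import unittest


def average(numbers):
    """Return the arithmetic mean of a non-empty sequence of numbers."""
    if not numbers:
        raise ValueError("average() needs at least one number")
    return sum(numbers) / len(numbers)


class AverageTests(unittest.TestCase):
    def test_typical_values(self):
        self.assertEqual(average([2, 4, 6]), 4)

    def test_single_value(self):
        self.assertEqual(average([7]), 7)

    def test_empty_input_is_rejected(self):
        # Without the guard above, this case would divide by zero.
        with self.assertRaises(ValueError):
            average([])


# Run the tests and keep the result object for inspection.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(AverageTests)
outcome = unittest.TextTestRunner(verbosity=2).run(suite)
```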

Eventually, after coding the program, you must prepare to test it on the computer. This step involves these phases:

5. Documenting the Program

Documenting is an ongoing, necessary process, although, as many programmers are, you may be eager to pursue more exciting computer-centred activities. Documentation is a written detailed description of the programming cycle and specific facts about the program. Typical program documentation materials include the origin and nature of the problem, a brief narrative description of the program, logic tools such as flowcharts and pseudocode, data-record descriptions, program listings, and testing results. Comments in the program itself are also considered an essential part of documentation. Many programmers document as they code. In a broader sense, program documentation can be part of the documentation for an entire system.

The wise programmer continues to document the program throughout its design, development, and testing. Documentation is needed to supplement human memory and to help organize program planning. Also, documentation is critical to communicate with others who have an interest in the program, especially other programmers who may be part of a programming team. And, since turnover is high in the computer industry, written documentation is needed so that those who come after you can make any necessary modifications in the program or track down any errors that you missed.
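Comments and documentation strings are the part of documentation that lives in the program itself. The sketch below shows one way to document a small Python function. A docstring states the purpose, inputs, outputs, and an example, and a comment explains the formula. The function is a hypothetical example, and this docstring layout is only one common convention.

```python
def fahrenheit_to_celsius(degrees_f):
    """Convert a temperature from degrees Fahrenheit to degrees Celsius.

    Args:
        degrees_f: temperature in degrees Fahrenheit (int or float).

    Returns:
        The same temperature in degrees Celsius, as a float.

    Example:
        >>> fahrenheit_to_celsius(212)
        100.0
    """
    # Subtract the offset between the two scales' zero points, then rescale:
    # 180 Fahrenheit degrees span the same range as 100 Celsius degrees.
    return (degrees_f - 32) * 5 / 9


# The docstring is available at runtime to tools and to other programmers.
print(fahrenheit_to_celsius.__doc__)
```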

Programming as a Career

There is a shortage of qualified personnel in the computer field. Before you join their ranks, consider the advantages of the computer field and what it takes to succeed in it.

The Joys of the Field

Although many people make career changes into the computer field, few choose to leave it. In fact, surveys of computer professionals, especially programmers, consistently report a high level of job satisfaction. There are several reasons for this contentment. One is the challenge: most jobs in the computer industry are not routine. Another is security, since established computer professionals can usually find work. And that work pays well; you will probably not be rich, but you should be comfortable. The computer industry has historically been a rewarding place for women and minorities. And, finally, the industry holds endless fascination since it is always changing.

What It Takes

You need, of course, some credentials, most often a two- or four-year degree in computer information systems or computer science. The requirements and salaries vary by the organization and the region, so we will not dwell on these here. Beyond that, the person most likely to land a job and move up the career ladder is the one with excellent communication skills, both oral and written. These are also the qualities that can be observed by potential employers in an interview. Promotions are sometimes tied to advanced degrees (an M.B.A. or an M.S. in computer science).

Open Doors

The overall outlook for the computer field is promising. The Bureau of Labor Statistics shows, through the year 2010, a 72 percent increase in programmers and a 69 percent increase in system use today, and we will discuss the most popular ones later in the chapter. Before we turn to specific languages, however, we need to discuss levels of language.

Levels of Language

Programming languages are said to be "lower" or "higher," depending on how close they are to the language the computer itself uses (0s and 1s = low) or to the language people use (more English-like = high). We will consider five levels of language. They are numbered 1 through 5 to correspond to levels, or generations. In terms of ease of use and capabilities, each generation is an improvement over its predecessors. The five generations of languages are:

Let us look at each of these categories.

Machine Language

Humans do not like to deal in numbers alone; they prefer letters and words. But, strictly speaking, numbers are what machine language is. This lowest level of language, machine language, represents data and program instructions as 1s and 0s, binary digits corresponding to the on and off electrical states in the computer. Each type of computer has its own machine language. In the early days of computing, programmers had rudimentary systems for combining numbers to represent instructions such as add and compare. Primitive by today's standards, the programs were not convenient for people to read and use. The computer industry quickly moved to develop assembly languages.

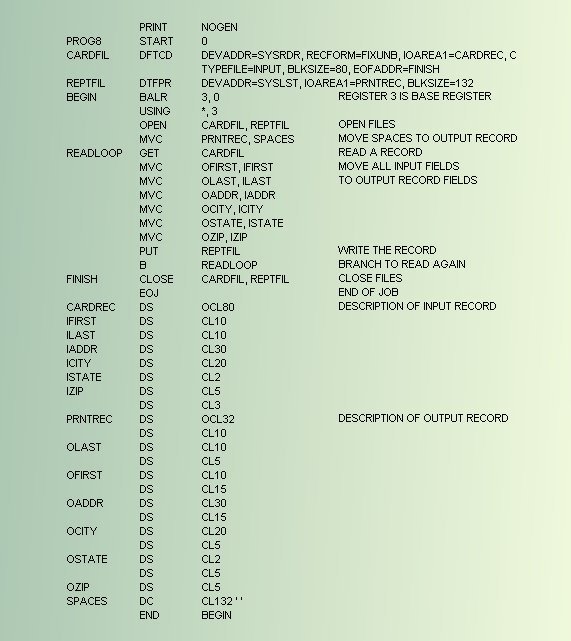

Assembly Languages

Figure 6: Example Assembly Language Program

Today, assembly languages are considered very low level; that is, they are not as convenient for people to use as more recent languages. At the time they were developed, however, they were considered a great leap forward. To replace the 1s and 0s used in machine language, assembly languages use mnemonic codes, abbreviations that are easy to remember: A for Add, C for Compare, MP for Multiply, STO for storing information in memory, and so on. Although these codes are not English words, they are still, from the standpoint of human convenience, preferable to numbers (0s and 1s) alone. Furthermore, assembly languages permit the use of names, perhaps RATE or TOTAL, for memory locations instead of actual address numbers. Just like machine language, each type of computer has its own assembly language.

The programmer who uses an assembly language requires a translator to convert the assembly language program into machine language. A translator is needed because machine language is the only language the computer can actually execute. The translator is an assembler program, also referred to simply as an assembler. It takes programs written in assembly language and turns them into machine language. Programmers need not worry about the translating aspect; they need only write programs in assembly language. The translation is taken care of by the assembler.
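To make the assembler's job concrete, here is a toy assembler in Python for an invented machine. It uses the mnemonics mentioned above (A, C, MP, STO, plus an assumed L for load) and symbolic names such as RATE and TOTAL. It translates each line into an 8-bit binary word. The instruction format, opcode values, and memory layout are all hypothetical. A real assembler targets the documented instruction set of a specific processor.

```python
# Hypothetical machine: 8-bit instructions made of a 3-bit operation code
# followed by a 5-bit memory address (so addresses 0-31).
OPCODES = {
    "L": 0b101,    # load a value from memory (assumed mnemonic)
    "A": 0b001,    # add
    "C": 0b010,    # compare
    "MP": 0b011,   # multiply
    "STO": 0b100,  # store into memory
}


def assemble(source, symbols):
    """Translate lines like 'MP RATE' into 8-character binary strings."""
    machine_code = []
    for line in source.strip().splitlines():
        mnemonic, name = line.split()
        opcode = OPCODES[mnemonic]        # mnemonic -> numeric operation code
        address = symbols[name]           # symbolic name -> memory address
        if not 0 <= address < 32:
            raise ValueError(f"address {address} does not fit in 5 bits")
        word = (opcode << 5) | address    # pack both fields into one byte
        machine_code.append(format(word, "08b"))
    return machine_code


SYMBOLS = {"HOURS": 0, "RATE": 1, "TOTAL": 2}  # hypothetical memory layout
program = """
L   HOURS
MP  RATE
STO TOTAL
"""

for line, word in zip(program.strip().splitlines(), assemble(program, SYMBOLS)):
    print(f"{line:<10} -> {word}")
```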

Although assembly languages represent a step forward, they still have many disadvantages. A key disadvantage is that assembly language is detailed in the extreme, making assembly programming repetitive, tedious, and error-prone. This drawback is apparent in the program in Figure 6. Assembly language may be easier to read than machine language, but it is still tedious.

High-Level Languages

The first widespread use of high-level languages in the early 1960s transformed programming into something quite different from what it had been. Programs were written in an English-like manner, thus making them more convenient to use. As a result, a programmer could accomplish more with less effort, and programs could now direct much more complex tasks.

These so-called third-generation languages spurred the great increase in data processing that characterized the 1960s and 1970s. During that time the number of mainframes in use increased from hundreds to tens of thousands. The impact of third-generation languages on our society has been enormous.

Of course, a translator is needed to translate the symbolic statements of a high-level language into computer-executable machine language; this translator is usually a compiler. A language may have many compilers, typically one for each type of computer. Since the machine language generated by one computer's COBOL compiler, for instance, is not the machine language of some other computer, it is necessary to have a COBOL compiler for each type of computer on which COBOL programs are to be run. Keep in mind, however, that even though a given program would be compiled to different machine language versions on different machines, the source program itself (the COBOL version) can be essentially identical on each machine.

Some languages are created to serve a specific purpose, such as controlling industrial robots or creating graphics. Many languages, however, are extraordinarily flexible and are considered to be general-purpose. In the past the majority of programming applications were written in BASIC, FORTRAN, or COBOL, all general-purpose languages. In addition to these three, another popular high-level language is C, which we will discuss later.

Very High-Level Languages

Languages called very high-level languages are often known by their generation number, that is, they are called fourth-generation languages or, more simply, 4GLs.

Definition

Will the real fourth-generation languages please stand up? There is no consensus about what constitutes a fourth-generation language. The 4GLs are essentially shorthand programming languages. An operation that requires hundreds of lines in a third-generation language such as COBOL typically requires only five to ten lines in a 4GL. However, beyond the basic criterion of conciseness, 4GLs are difficult to describe.

Characteristics

Fourth-generation languages share some characteristics. The first is that they make a true break with the prior generation: they are basically nonprocedural. A procedural language tells the computer how a task is done (add this, compare that, do this if something is true, and so forth) in a very specific step-by-step process. The first three generations of languages are all procedural. In a nonprocedural language, the concept changes. Here, users define only what they want the computer to do; the user does not provide the details of just how it is to be done. Obviously, it is a lot easier and faster just to say what you want rather than how to get it. This leads us to the issue of productivity, a key characteristic of fourth-generation languages.
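The contrast between procedural and nonprocedural styles can be sketched in Python with its built-in `sqlite3` module. Both halves compute total units per customer from the same hypothetical sales data. The loop spells out how to accumulate the totals step by step. The SQL query only states what result is wanted. SQL is the kind of set-oriented, domain-specific language this text groups with 4GLs.

```python
import sqlite3

sales = [("Acme", "bolts", 10), ("Acme", "nuts", 5), ("Zenith", "bolts", 7)]

# Procedural (third-generation style): spell out HOW to compute the totals.
totals = {}
for customer, _product, units in sales:
    if customer not in totals:
        totals[customer] = 0
    totals[customer] += units
print(sorted(totals.items()))   # [('Acme', 15), ('Zenith', 7)]

# Nonprocedural: state WHAT is wanted; the database engine decides how.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (customer TEXT, product TEXT, units INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", sales)
rows = conn.execute(
    "SELECT customer, SUM(units) FROM sales GROUP BY customer ORDER BY customer"
).fetchall()
print(rows)                     # [('Acme', 15), ('Zenith', 7)]
conn.close()
```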

Productivity

Folklore has it that fourth-generation languages can improve productivity by a factor of 5 to 50. Most experts put the average improvement factor at about 10; that is, you can be ten times more productive in a fourth-generation language than in a third-generation language. Consider this request: produce a report showing the total units sold for each product, by customer, in each month and year, with a subtotal for each customer. In addition, each new customer must start on a new page. A 4GL request looks something like this:

TABLE FILE SALES

SUM UNITS BY MONTH BY CUSTOMER BY PRODUCT

ON CUSTOMER SUBTOTAL PAGE BREAK

END

Although some training is needed even for this much, you can see that the request is simple. The third-generation language COBOL, however, typically requires over 500 statements to fulfil the same request. If we define productivity as producing equivalent results in less time, then fourth-generation languages clearly increase productivity.
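To see what the four-line request saves, here is a minimal Python sketch of the step-by-step logic a procedural program has to spell out for a comparable report. The record layout (month, customer, product, units), the sample data, and the function name build_report are hypothetical, and the page break is only simulated with a separator line; a real 4GL's output layout would differ.

```python
from collections import defaultdict

# Hypothetical sales records: (month, customer, product, units).
# "month" is a "YYYY-MM" string so it carries both month and year.
SALES = [
    ("2024-01", "Acme", "Racket", 5),
    ("2024-01", "Acme", "Balls", 12),
    ("2024-01", "Acme", "Racket", 3),
    ("2024-01", "Birch", "Balls", 7),
    ("2024-02", "Acme", "Balls", 4),
]


def build_report(sales):
    """Sum units by month, customer and product; return the report lines."""
    # Step 1: accumulate totals for every (month, customer, product) key.
    totals = defaultdict(int)
    for month, customer, product, units in sales:
        totals[(month, customer, product)] += units

    lines = []
    current = None  # (month, customer) group currently being printed
    subtotal = 0
    # Step 2: walk the keys in sorted order, mimicking BY MONTH BY CUSTOMER BY PRODUCT.
    for (month, customer, product) in sorted(totals):
        if (month, customer) != current:
            # Step 3: close the previous customer group with its subtotal.
            if current is not None:
                lines.append(f"  SUBTOTAL {current[1]}: {subtotal}")
                lines.append("---- new page ----")  # simulated page break
            current = (month, customer)
            subtotal = 0
        units = totals[(month, customer, product)]
        lines.append(f"{month} {customer} {product}: {units}")
        subtotal += units
    # Step 4: emit the subtotal for the last group.
    if current is not None:
        lines.append(f"  SUBTOTAL {current[1]}: {subtotal}")
    return lines


for line in build_report(SALES):
    print(line)
```

Even this stripped-down version needs explicit accumulation, sorting, and control-break logic; that bookkeeping is exactly what the nonprocedural request leaves to the language.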

Downside

Fourth-generation languages are not all peaches and cream and productivity. The 4GLs are still evolving, and what is still evolving cannot be fully defined or standardized. What is more, since many 4GLs are easy to use, they attract a large number of new users, who may then overcrowd the computer system. One of the main criticisms is that the new languages lack the control and flexibility needed to specify exactly how the output should look. A common perception of 4GLs is that they do not make efficient use of machine resources; however, the benefit of finishing a program more quickly can far outweigh the extra cost of running it.

Benefits

Fourth-generation languages are beneficial because

Not long ago, few people believed that 4GLs would ever be able to replace third-generation languages. 4GLs are being used, but only in limited ways.

Query Languages

Query languages, a variation on fourth-generation languages, can be used to retrieve information from databases. Data is usually added to databases according to a plan, and planned reports may also be produced. But what about a user who needs an unscheduled report, or a report that differs from the standard ones? A user can learn a query language fairly easily, then type in a request and receive the resulting report on his or her own terminal or personal computer. A standardized query language that can be used with several different commercial database programs is Structured Query Language, popularly known as SQL. Other popular query languages are Query-by-Example (QBE) and Intellect.
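As a rough illustration of an unscheduled SQL request, the sketch below uses Python's built-in sqlite3 module with an in-memory database. The sales table, its columns, and the data are hypothetical. The SQL statement states only what result is wanted (total units per customer for one month); the database engine decides how to find it.

```python
import sqlite3

# In-memory database holding a hypothetical sales table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (month TEXT, customer TEXT, product TEXT, units INTEGER)"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?)",
    [
        ("2024-01", "Acme", "Racket", 5),
        ("2024-01", "Acme", "Balls", 12),
        ("2024-01", "Birch", "Balls", 7),
        ("2024-02", "Acme", "Balls", 4),
    ],
)

# An ad hoc request: total units per customer for January 2024.
# The query describes WHAT to retrieve, not HOW to loop over the rows.
query = """
    SELECT customer, SUM(units) AS total_units
    FROM sales
    WHERE month = ?
    GROUP BY customer
    ORDER BY customer
"""
rows = conn.execute(query, ("2024-01",)).fetchall()
for customer, total in rows:
    print(customer, total)
conn.close()
```

Changing the question (a different month, or grouping by product instead of customer) means editing one short statement rather than rewriting loop logic.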

Natural Languages

The word "natural" has become almost as popular in computing circles as it is in the supermarket. Fifth-generation languages are, as you may guess, even more ill-defined than fourth-generation languages. They are most often called natural languages because they resemble "natural" spoken English. And to the manager new to computers, at whom these languages are aimed, natural means human-like. Instead of being forced to key in correct commands and data names in the correct order, a manager tells the computer what to do by keying in his or her own words.

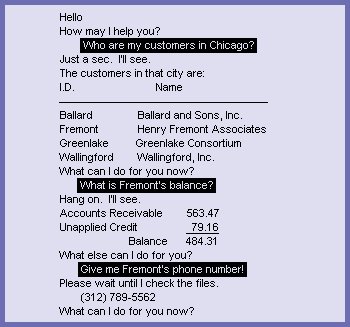

Figure 7: Example of Natural Language Interaction

A manager can say the same thing any number of ways. For example, "Get me tennis racket sales for January" works just as well as "I want January tennis racket revenues." Such a request may contain misspelled words, lack articles and verbs, and even use slang. The natural language translates human instructions (bad grammar, slang, and all) into code the computer understands. If it is not sure what the user has in mind, it politely asks for further explanation.

Natural languages are sometimes referred to as knowledge-based languages, because they are used to interact with a base of knowledge on some subject. A system that uses a natural language to access a knowledge base is called a knowledge-based system.

Consider this request that could be given in the 4GL Focus: "SUM ORDERS BY DATE BY REGION." If we alter the request and, still in Focus, say something like "Give me the dates and the regions after you've added up the orders," the computer will spit back the user-friendly version of "You've got to be kidding" and give up. But some natural languages can handle such a request. Users can relax the structure of their requests and increase the freedom of their interaction with the data.

Here is a typical natural language request:

REPORT THE BASE SALARY, COMMISSIONS AND YEARS OF SERVICE BROKEN DOWN BY STATE AND CITY FOR SALESCLERKS IN NEW JERSEY AND MASSACHUSETTS.

You can hardly get closer to conversational English than that.

An example of a natural language is shown in Figure 7. Natural languages excel at easy data access; indeed, the most common application for natural languages is interacting with databases.

Choosing a Language

How do you choose the language with which to write your program?

There are several possibilities:

Major Programming Languages

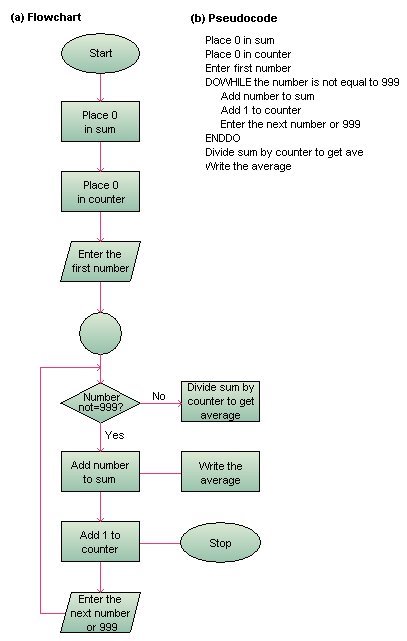

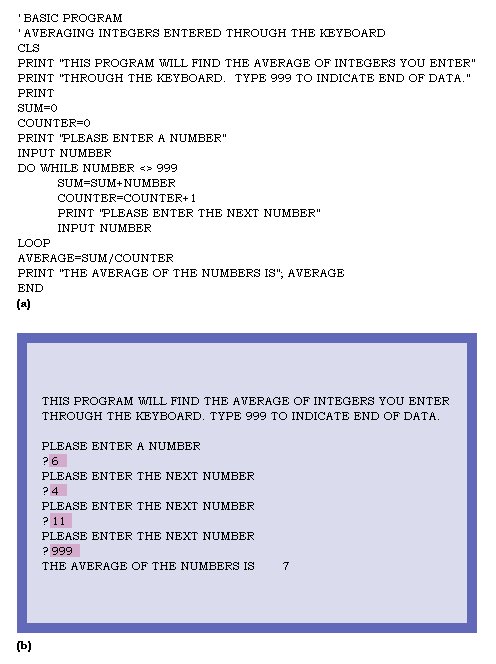

Figure 8: Flow Chart For Averaging Numbers

The following sections on individual languages give you an overview of the third-generation languages in common use today: FORTRAN (a scientific language), COBOL (a business language), BASIC (a simple language used for education and business), Pascal (education), Ada (military), and C (general purpose).

This chapter presents programs written in some of these languages, along with the output each program produces. Each program is designed to find the average of three numbers; the resulting average is shown in the sample output accompanying each program. Since all the programs perform the same task, you will see some of the differences and similarities among the languages. We do not expect you to understand these programs; they are here merely to give you a glimpse of each language. Figure 8 presents the flowchart and pseudocode for the task of averaging numbers. As we discuss each language, we will provide a program for averaging numbers that follows the logic shown in this figure.
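The flowchart itself is not reproduced in this text, so the following Python sketch assumes the usual logic for this task: obtain three numbers, add them, divide the sum by three, and output the result. The function name average_of_three and the sample values are illustrative only; the textbook programs read their numbers at run time.

```python
def average_of_three(a: float, b: float, c: float) -> float:
    """Return the average of three numbers."""
    total = a + b + c  # add the three numbers
    return total / 3   # divide the sum by three


# Fixed sample input stands in for the values a program would read.
x, y, z = 10.0, 20.0, 60.0
print("The average is", average_of_three(x, y, z))
```

Keeping the calculation in its own function separates the arithmetic from input and output, which makes the same logic easy to compare with the FORTRAN, COBOL, and BASIC versions.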

FORTRAN: The First High-Level Language

Figure 9: Example Fortran Program To Average Numbers

Developed by IBM beginning in 1954 and first released in 1957, FORTRAN (Formula Translator) was the first widely used high-level language. FORTRAN is a scientifically oriented language; in the early days, computers were used primarily for engineering, mathematical, and scientific research tasks.

FORTRAN is noted for its brevity, and this characteristic is part of the reason why it remains popular. This language is very good at serving its primary purpose, which is execution of complex formulas such as those used in economic analysis and engineering. Although in the past it was considered limited in regard to file processing or data processing, its capabilities have been greatly improved.

Not all programs are organized in the same way; organization varies according to the language used. In many languages (such as COBOL), programs are divided into a series of parts. FORTRAN programs are not composed of different parts (although it is possible to link FORTRAN programs together); a FORTRAN program consists of statements one after the other. Different types of data are identified as the data is used. Descriptions of data records appear in FORMAT statements that accompany the READ and WRITE statements. Figure 9 shows a FORTRAN program and a sample output from the program.

COBOL: The Language of Business

Figure 10: Example COBOL Program to Average Numbers

By the late 1950s FORTRAN had been developed, but there was still no accepted high-level programming language appropriate for business. The U.S. Department of Defense in particular was interested in creating such a standardized language, so it called together representatives from government and various industries, including the computer industry. These representatives formed CODASYL, the Conference on Data Systems Languages. In 1959 CODASYL introduced COBOL, short for Common Business Oriented Language.

The U.S. government offered encouragement by insisting that anyone attempting to win government contracts for computer-related projects had to use COBOL. The American National Standards Institute first standardized COBOL in 1968 and, in 1974, issued standards for another version known as ANSI-COBOL. After more than seven controversial years of industry debate, the standard known as COBOL 85 was approved, making COBOL a more usable modern-day software tool. The principal benefit of standardization is that COBOL is relatively machine independent; that is, a program written for one type of computer can be run with only slight modifications on another type for which a COBOL compiler has been developed.

COBOL is very good for processing large files and performing relatively simple business calculations, such as payroll or interest. A noteworthy feature of COBOL is that it is English-like, far more so than FORTRAN or BASIC. Its statements and variable names read so much like English that, even if you know nothing about programming, you can often understand what the program does. For example:

IF SALES-AMOUNT IS GREATER THAN SALES-QUOTA

COMPUTE COMMISSION = MAX-RATE * SALES-AMOUNT

ELSE

COMPUTE COMMISSION = MIN-RATE * SALES-AMOUNT.
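For comparison, here is roughly the same decision written in Python. The rate values and the function name commission are hypothetical; the point is that the logic is identical while the notation is terser and less English-like than the COBOL version.

```python
MAX_RATE = 0.10  # hypothetical commission rate when the quota is exceeded
MIN_RATE = 0.05  # hypothetical commission rate otherwise


def commission(sales_amount: float, sales_quota: float) -> float:
    """Mirror the COBOL IF/ELSE: the higher rate applies when sales exceed the quota."""
    if sales_amount > sales_quota:
        return MAX_RATE * sales_amount
    return MIN_RATE * sales_amount


print(commission(12000, 10000))
print(commission(8000, 10000))
```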

Once you understand programming principles, it is not too difficult to add COBOL to your repertoire. COBOL can be used for just about any task related to business programming; indeed, it is especially suited to processing alphanumeric data such as street addresses, purchased items, and dollar amounts: the data of business. However, the feature that makes COBOL so useful, its English-like appearance and easy readability, is also a weakness, because a COBOL program can be incredibly verbose. A programmer seldom knocks out a quick COBOL program. In fact, there is hardly such a thing as a quick COBOL program; there are just too many program lines to write, even to accomplish a simple task. For speed and simplicity, BASIC, FORTRAN, and Pascal are probably better bets.

As you can see in Figure 10, a COBOL program is divided into four parts called divisions. The identification division identifies the program by name and often contains helpful comments as well. The environment division describes the computer on which the program will be compiled and executed. It also relates each file of the program to the specific physical device, such as a tape drive or printer, that will read or write the file. The data division contains details about the data processed by the program, such as type of characters (whether numeric or alphanumeric), number of characters, and placement of decimal points. The procedure division contains the statements that give the computer specific instructions to carry out the logic of the program.

It has been fashionable for some time to criticize COBOL as old-fashioned, cumbersome, and inelegant. In fact, some companies devoted to fast, nimble program development are converting to the trendier language C. But COBOL, with decades of staying power, is still famous for its clear code, which is easy to read and debug.

BASIC: For Beginners and Others

Figure 11: Example Basic Program to Average Numbers

BASIC (Beginners' All-purpose Symbolic Instruction Code) is a common language that is easy to learn. Developed at Dartmouth College, BASIC was introduced by John Kemeny and Thomas Kurtz in 1964 and was originally intended for use by students in an academic environment. In the late 1960s it became widely used in interactive time-sharing environments in universities and colleges. Its use has since extended to business and personal computer systems.

The primary feature of BASIC is one that may be of interest to many readers of this book: BASIC is easy to learn, even for a person who has never programmed before. Thus, the language is often used to train students in the classroom. BASIC is also used by nonprogrammers, such as engineers, who find it useful in problem solving. For many years, BASIC was looked down on by "real programmers," who complained that it had too many limitations and was not suitable for complex tasks. Newer versions, such as Microsoft's QuickBasic, include substantial improvements. An example of a BASIC program and its output is shown in Figure 11.

Pascal: The Language of Simplicity

Named for Blaise Pascal, the seventeenth-century French mathematician, Pascal was developed as a teaching language by a Swiss computer scientist, Niklaus Wirth, and first became available in 1971. Since that time it has become quite popular, first in Europe and now in the United States, particularly in universities and colleges offering computer science programs.

The foremost feature of Pascal is that it is simpler than other languages: it has fewer features and is less wordy than most. In addition to the popularity of Pascal in college computer science departments, the language has also made large inroads in the personal computer market as a simple yet sophisticated alternative to BASIC. Over the years new versions have improved on the original capabilities of Pascal. Today, Borland's Turbo Pascal leads the Pascal world because its designers eliminated most of the drawbacks of the original Pascal. Turbo Pascal is used by the business community and is often the choice of nonprofessional programmers who need to write their own programs.

Ada: Named for the Countess