Unit - 5

Synchronization and Concurrency Control

Concurrency is the simultaneous execution of multiple instruction sequences. When numerous process threads are running in parallel in the operating system, this occurs. The threads of a running process always communicate with one another via shared memory or message forwarding. Concurrency causes resource sharing, which leads to issues like as deadlocks and resource scarcity.

It aids with approaches such as process coordination, memory allocation, and execution scheduling in order to maximize throughput.

Principles

Interleaved and overlapping processes are both forms of concurrent processes, and they both have the same issues.

It is impossible to estimate the relative pace of execution. It is determined by the following factors:

● Processes that are involved in other processes.

● Interrupt handling in the operating system.

● The operating system's scheduling policies.

Issues of concurrency

Non-atomic –

Non-atomic operations that are interrupted by several processes can cause issues.

Race condition

When the outcome is determined by which of numerous processes arrives at a place first, a race situation occurs.

Blocking

Waiting for resources might be stifled by processes. A process may be halted for an extended amount of time while it waits for input from a terminal. This would be particularly bad if the procedure was required to update some data on a regular basis.

Starvation

It occurs when a procedure is unable to advance due to a lack of service.

Deadlocks

It happens when two processes are blocked, and neither of them can execute.

Key takeaway

Concurrency is the simultaneous execution of multiple instruction sequences.

When numerous process threads are running in parallel in the operating system, this occurs.

To apply mutual exclusion in any place, a facility must fulfil some of the basic requirement which is as follows:

- Mutual exclusion is something which must be enforced that means at a time only single process can access the critical section, rest of the process will wait for their turn.

- In case a process stops execution in its non-critical section, then it must halt without disturbing other process.

- There should not be a situation of deadlock or starvation among processes.

- If the critical section is free at any time, then any new process that want to access the critical section will be granted permission.

- There should be any assumptions about how many numbers of processors are there and what is the process speeds.

- There should be a limited amount of time for a process to be in its critical section.

To satisfy these requirements of mutual exclusion, various approaches are there such as:

- First approach explains that it should be the responsibility of process to decide whether to execute concurrently or not.

- The second approach makes use of the machine instructions since that can reduce the system load but it is not attractive in general use.

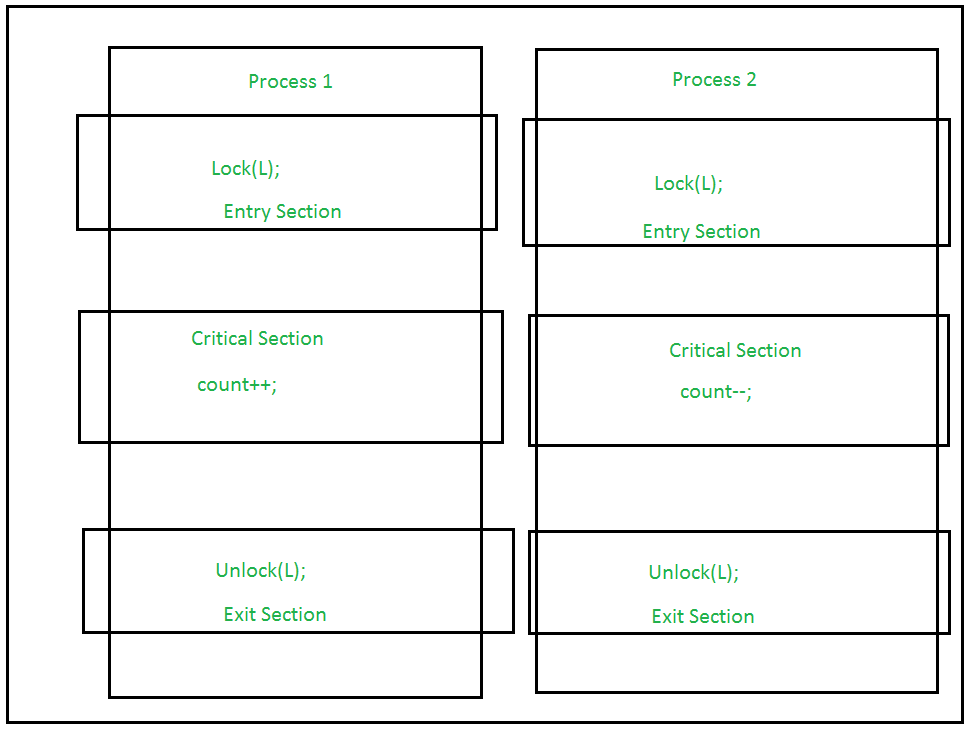

The Lock and Unlock approach can be used to implement the Hardware Approach to synchronization. The process is locked in the Entry Section so that only one process can enter the Critical Section. Once it has completed its execution, the process is transported to the Exit Section, where the process is unlocked so that another process in the Lock Section can repeat the procedure. This procedure is set up in such a way that it meets all three of the Critical Sections' requirements.

Fig 1: Image of lock

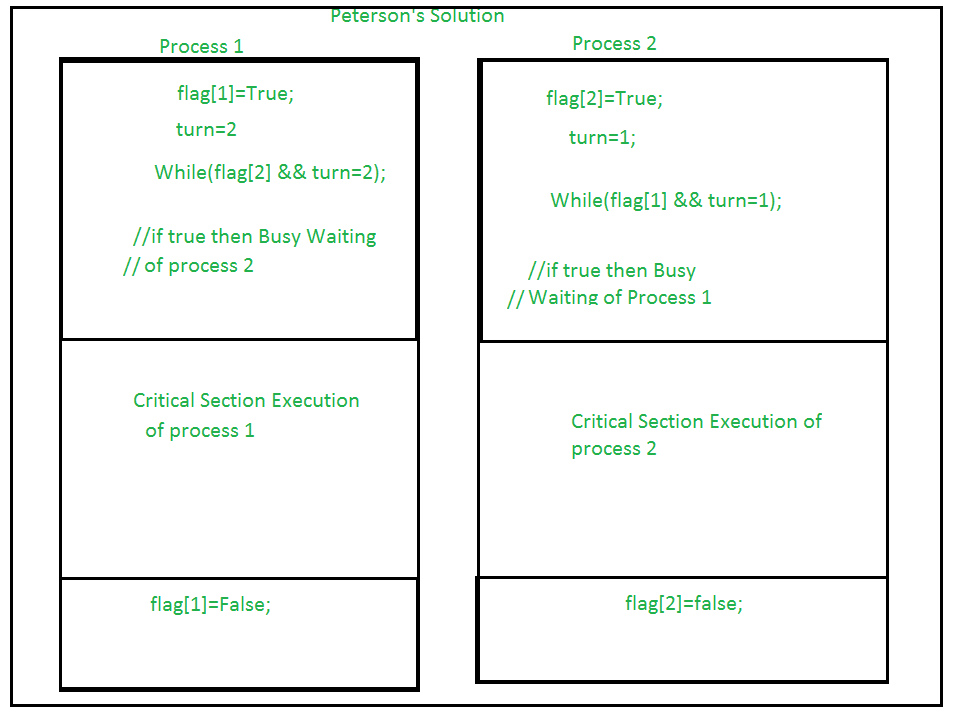

Some unique Algorithm technique is employed in the Software Approach to preserve data synchronization. For a number of two processes, a temporary variable like (turn) or Boolean variable (flag) value is used to hold the data, much like in Approach One or Approach Two. When the condition is True, the process enters the Busy Waiting State, which is a state of waiting. This does not meet all of the standards of the Critical Section.

For synchronization, another software solution called as Peterson's Solution is best. In order to ensure consistency, it uses two variables in the Entry Section: Flag (Boolean variable) and Turn variable (storing the process states). It satisfies all the three Critical Section requirements.

Fig 2: Image of Peterson’s algorithm

Semaphores square measure number variables that square measure accustomed to solve the important section downside by exploitation 2 atomic operations, wait and signal that square measure used for method synchronization.

The definitions of wait and signal square measure as follows −

● Wait

The wait operation decrements the worth of its argument S if it's positive. If S is negative or zero, then no operation is performed.

Wait(S)

Whereas (S<=0);

S--;

}

● Signal

The signal operation increments the worth of its argument S.

Signal(S)

Types of Semaphores

There square measure 2 main kinds of semaphores i.e., reckoning semaphores and binary semaphores. Details concerning these square measure given as follows –

● Counting Semaphores

These square measure number worth semaphores Associate in Nursing has an unrestricted worth domain. These semaphores square measure accustomed coordinate the resource access, wherever the semaphore count is that the range of accessible resources. If the resources square measure extra, the semaphore count is mechanically incremented and if the resources square measure is removed, the count is decremented.

● Binary Semaphores

The binary semaphores square measure like reckoning semaphores however their worth is restrict-ed to zero and one. The wait operation solely works once the semaphore is one and therefore the signal operation succeeds once the semaphore is zero. It's typically easier to implement binary semaphores than reckoning semaphores.

Advantages of Semaphores

Some of the benefits of semaphores square measure as follows −

● Semaphores permit only 1 method into the important section. They follow the mutual Pauli exclusion principle strictly and square measure far more economical than another way of synchronization.

● There isn't any resource wastage as a result of busy waiting in semaphores as processor time isn't wasted unnecessarily to ascertain if a condition is consummated to permit a method to access the important section.

● Semaphores square measure enforced within the machine freelance code of the microkernel. So that they square measure machine freelance.

Disadvantages of Semaphores

Some of the disadvantages of semaphores square measure as follows −

● Semaphores square measure difficult therefore the wait and signal operations should be implemented within the correct order to stop deadlocks.

● Semaphores square measure impractical for last scale use as their use ends up in the loss of modularity. This happens as a result of the wait and signal operations stop the creation of a structured layout for the system.

● Semaphores might cause a priority inversion wherever low priority processes might access the important section 1st and high priority processes later.

Key takeaway

Semaphores square measure number variables that square measure accustomed to solve the important section downside by exploitation 2 atomic operations, wait and signal that square measure used for method synchronization.

Mutual Exclusion is a term used to describe a situation Mutex is a short name for object. We can deduce from the term mutual exclusion that only one process at a time has access to a given resource. The mutex object allows many application threads to access the same resource at the same time, but only one at a time.

When a program starts, it asks the operating system to create a mutex object for a specific resource. The system assigns a unique name or ID to the mutex object. When a program thread wishes to use a resource, it takes the mutex object's lock, uses the resource, and then releases the mutex object's lock. The next process is then authorized to obtain the mutex object's lock.

Meanwhile, a process has taken control of a mutex object, and no other thread or process can access it. If the mutex object is already locked, the process that wants to acquire the lock must wait until the mutex object is unlocked, and the system will queue it up.

A mutex is a binary variable that serves as a locking mechanism. It's used to give a portion of code mutual exclusion, which means that only one process can work on it at a time.

There is a common misconception that a binary semaphore is the same as a mutex variable, but they are not. Binary semaphores, in addition to providing locking mechanisms, also provide two atomic operations: signal and wait, which means that after releasing a resource, the semaphore will provide signaling for processes that are waiting for it.

Monitor

Monitors are a type of synchronization device designed to solve difficulties produced by semaphores, such as timing errors.

Monitors are data types that are abstract in nature and contain common data variables and operations. A process cannot directly access the shared data variables, hence procedures are needed to allow a single process to access the shared data variables at a time.

As an example, consider the following:

Monitor monitorName

{

Data variables;

Procedure P1(....)

{

}

Procedure P2(....)

{

}

Procedure Pn(....)

{

}

Initialization Code(....)

{

}

}

In a monitor, only one process can be active at a time. Other processes that require access to shared variables in a monitor must queue and are granted access only after the prior process releases the shared variables.

The readers-writers issue concerns a shared object, such as a file, that is used by numerous programs. Some of these processes are readers, in the sense that they simply want to read data from the object, while others are writers, in the sense that they want to write data into the object.

The readers-writers problem is used to maintain synchronization and ensure that the object data is not corrupted. There is no difficulty, for example, if two readers access the object at the same time. However, there may be issues if two writers or a reader and writer access the item at the same time.

To address this issue, a writer should have exclusive access to an object, meaning that no other writer or reader should be able to access it while he or she is working on it. Multiple readers, on the other hand, can use the object at the same time.

Semaphores can be used to do this. The following are the codes for the reader and writer processes in the reader-writer problem:

Reader process

Below is the code that defines the reader process.

Wait (mutex);

Rc ++;

If (rc == 1)

Wait (wrt);

Signal(mutex);

.

. READ THE OBJECT

.

Wait(mutex);

Rc --;

If (rc == 0)

Signal (wrt);

Signal(mutex);

The semaphores mutex and wrt are initialized to 1 in the code above. In addition, rc is a 0 initialized variable. The mutex semaphore maintains mutual exclusion, while wrt, which is shared by the reader and writer process code, manages the writing mechanism.

The number of readers who have accessed the object is shown by the variable rc. On wrt, the wait operation is used as soon as rc becomes 1. This signifies that the object is no longer accessible to the writer. Rc is decremented after the read operation is completed. On wrt, signal operation is used when re becomes 0. As a result, a writer can now access the object.

Writer process

The following is the code that defines the writer process:

Wait(wrt);

.

. WRITE INTO THE OBJECT

.

Signal(wrt);

A wait operation is performed on wrt if a writer wants to access the object. After that, no other writer will be able to write to the object. When a writer has finished writing into an object, the signal operation on wrt is performed.

The Producer-Consumer problem is a classic multi-process synchronization problem, which means we're attempting to synchronize many processes.

In the producer-consumer conundrum, there is only one Producer who produces some items, and there is only one Consumer who consumes the Producer's products. Both producers and consumers have access to the same fixed-size memory buffer.

The Producer's job is to make the item, store it in the memory buffer, and then start making more. The Consumer's responsibility is to consume the item from the memory buffer.

What is the problem?

The following are some of the issues that arise in the Producer-Consumer relationship:

● When the buffer isn't full, the producer should just produce data. The producer is not permitted to put any data into the memory buffer if the buffer is confirmed to be full.

● The consumer can only consume data if and only if the memory buffer is not empty. If the memory buffer is found to be empty, the consumer is not permitted to use any data from it.

● Producers and consumers should not be able to access the memory buffer at the same time.

This is a generalization of the producer consumer problem wherein access is managed by a shared group of buffers of a restricted size.

We expect that the pool comprises n buffers, each fit for holding one item.

The mutex semaphore gives mutual exclusion to gets to the buffer pool and is initialized to 1.

The empty and full semaphores count the quantity of empty and full buffers. The semaphore empty is introduced to the value n; the semaphore full is initialized to the value 0.

Do {

...

// produce an item in nextp

...

Wait(empty);

Wait(mutex);

...

// add nextp to buffer

...

Signal(mutex);

Signal(full);

} while (TRUE);

The structure of the producer process

Do {

Wait (full);

Wait (mutex);

...

// remove an item from buffer to nextc

...

Signal(mutex);

Signal(empty);

...

// consume the item in nextc

...

} while (TRUE);

The structure of the consumer process.

In the dining philosopher’s conundrum, five philosophers share a circular table and alternate between eating and thinking. Each of the philosophers has a bowl of rice and five chopsticks. To eat, a philosopher requires both their right and left chopsticks. Only if both chopsticks are accessible can a hungry philosopher eat. Otherwise, a philosopher will put down their chopstick and resume their thought process.

The dining philosopher is a classic synchronization problem since it exemplifies a broad category of concurrency control issues.

Solution of the Dining Philosopher problem

We symbolize a chopstick with a semaphore, which effectively solves the Dining Philosophers Problem. The Dining Philosophers Problem will be solved using wait and signal operations. A wait operation can be used to pick a chopstick, while a semaphore can be used to release a chopstick signal.

Semaphore: A semaphore is an integer variable in S that can be accessed using only two standard atomic operations: wait and signal, which are defined as follows:

1. Wait( S )

{

While (S <= 0);

S--;

}

2. Signal (S)

{

S++;

}

It is evident from the previous definitions of wait that if S = 0, the program will enter an infinite loop (because of the semicolon; after while loop). The signal's function is to increase the value of S.

The chopstick is made up of an array of semaphores, which are expressed as follows:

Semaphore C [5];

As the chopsticks are on the table and not picked up by any of the philosophers, each element of the semaphore C0, C1, C2, C3, and C4 is initially set to 1.

Using the semaphore methods wait and signal, we can make the desired code for the Dining Philosopher Problem look like this.

Void Philosopher

{

While(1)

{

Wait( take_chopstickC[i] );

Wait( take_chopstickC[(i+1) % 5] ) ;

. .

. EATING THE NOODLE

.

Signal( put_chopstickC[i] );

Signal( put_chopstickC[ (i+1) % 5] ) ;

.

. THINKING

}

}

The first wait operation is executed on take chopstickC[i] and take chopstickC [(i+1) percent 5] in the above code. This image depicts philosopher picking up chopsticks from its left and right sides. Following that, the eating function is carried out.

After philosopher I has finished eating, signal operations on take chopstickC[i] and take chopstickC [ (i+1) percent 5] are executed. This demonstrates that both the left and right chopsticks have been consumed and put down by the philosopher. Finally, the philosopher resumes his contemplation.

Difficulty with the solution

The approach outlined above ensures that no two philosophers can eat at the same time. However, this solution may result in a deadlock. If all of the philosophers pick their left chopstick at the same time, this could happen. Then neither of them can eat, resulting in an impasse.

The following are some suggestions for avoiding deadlock:

● On the table, there should be no more than four philosophers.

● An even philosopher should choose the right chopstick first, followed by the left, and an odd philosopher should choose the left chopstick first, followed by the right chopstick.

● Only if both chopsticks are available at the same moment should a philosopher be permitted to choose one.

Every process needs some resources to complete its execution. However, the resource is granted in a sequential order.

- The process requests for some resource.

- OS grant the resource if it is available otherwise let the process waits.

- The process uses it and release on the completion.

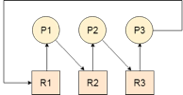

A Deadlock is a situation where each of the computer process waits for a resource which is being assigned to some another process. In this situation, none of the process gets executed since the resource it needs, is held by some other process which is also waiting for some other resource to be released.

Let us assume that there are three processes P1, P2 and P3. There are three different resources R1, R2 and R3. R1 is assigned to P1, R2 is assigned to P2 and R3 is assigned to P3.

After some time, P1 demands for R1 which is being used by P2. P1 halts its execution since it can't complete without R2. P2 also demands for R3 which is being used by P3. P2 also stops its execution because it can't continue without R3. P3 also demands for R1 which is being used by P1 therefore P3 also stops its execution.

In this scenario, a cycle is being formed among the three processes. None of the process is progressing and they are all waiting. The computer becomes unresponsive since all the processes got blocked.

Fig 3: Process cycle

If we model deadlock with a table standing on four legs, we can also simulate four legs with the four criteria that produce deadlock when they occur concurrently.

However, if one of the table's legs is broken, the table will undoubtedly fall. In the case of deadlock, we can avoid it if we can breach one of the four required conditions and do not allow them to occur simultaneously.

Let's look at how we may avoid each of these problems.

Mutual exclusion

Mutual section refers to the fact that a resource may never be accessed by more than one process at the same time, which is reasonable, but it is the main cause of the stalemate. If a resource could be used by multiple processes at the same time, the process would never have had to wait for anything.

However, the impasse can be avoided if we are able to violate resources that behave in mutually incompatible ways.

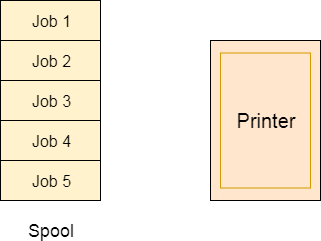

Spooling

Spooling can be useful for a device like a printer. The printer has a memory attached to it that stores tasks from each of the processes. Printer then gathers all of the tasks and prints each one according to FCFS. This method eliminates the need for the process to wait for the printer, allowing it to continue doing whatever it was doing before. It collects the output once it has been created.

Fig 4: Spooling

Although spooling can be a useful method for breaking mutual exclusion, it has two drawbacks.

● This does not apply to all resources.

● There may be a race condition between the processes to gain space in that spool once a certain amount of time has passed.

We cannot compel a resource to be used by multiple processes at the same time since it will not be equitable and may cause major performance issues. As a result, we cannot practically breach mutual exclusion for a process.

Hold and Wait

When a process holds a resource while waiting for another resource to do its work, it is called a hold and wait situation. Because more than one process can be holding one resource and waiting for another in a cyclic order, deadlock can arise.

However, we need to figure out a way for a process to either not hold any resources or not wait. That is, a process must be given all of the resources it requires before it can begin to execute. Once the execution has begun, a process must not wait for any resources.

!(Hold and wait) = !hold or !wait (negation of hold and wait is, either you don't hold or you don't wait)

This may be done in practice if a process declares all of the resources from the start. However, while this appears to be a very practical solution, it is not possible to implement in a computer system because a process cannot calculate the required resources from the outset.

The CPU executes a process, which is a series of instructions. Each instruction may necessitate the use of several resources at different moments. The OS will not be able to solve the problem.

The problem with this strategy is that:

● It's practically impossible.

● Because some processes may hold a resource for an extended period of time, the risk of starvation will increase.

No Preemption

Deadlock occurs when a process cannot be terminated once it has started. We can, however, avoid deadlock by diverting resources away from the process that is producing it.

This is not a good technique since removing a resource that the process is using will cause all of the work it has done so far to become inconsistent.

Any operation that uses a printer should be considered. If we remove the printer from one process and assign it to another, all of the data that has been printed may become inconsistent and ineffective, as well as the fact that the process will not be able to resume printing from where it left off, resulting in performance inefficiencies.

Circular wait

We can assign a priority number to each resource to break the cyclic wait. A process cannot request a resource with a lower priority. This assures that no one process can request a resource that is already in use by another process, preventing the formation of a loop.

Violation of Circular Wait is the only way that can be used in practice out of all the others.

Key takeaway

If we model deadlock with a table standing on four legs, we can also simulate four legs with the four criteria that produce deadlock when they occur concurrently.

Mutual section refers to the fact that a resource may never be accessed by more than one process at the same time, which is reasonable, but it is the main cause of the stalemate.

Spooling can be useful for a device like a printer.

We can assign a priority number to each resource to break the cyclic wait.

In a deadlock-avoidance system, any resource request will be granted if the system's ensuing state does not create deadlock. The system's state will be evaluated on a regular basis for safe and harmful conditions.

To avoid deadlocks, the process must inform the operating system of the maximum quantity of resources it can require to complete its execution.

The most straightforward and practical method is for the process to declare the maximum amount of resources of each type that it may ever require. The deadlock avoidance method monitors resource allocations to ensure that a circular wait state never occurs.

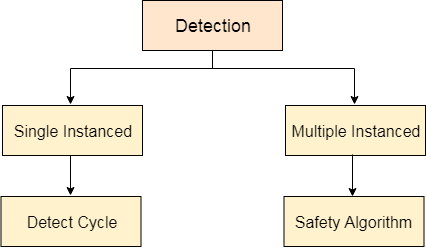

The OS does not use any mechanisms to avoid or prevent deadlocks in this way. As a result, the system assumes that a deadlock will inevitably occur. The OS periodically scans the system for any deadlocks in order to avoid them. If any deadlocks are discovered, the OS will attempt to restore the system using several ways.

The OS's primary responsibility is to detect deadlocks. With the help of the Resource allocation graph, the OS can detect deadlocks.

Fig 5: Deadlock detection

If a cycle forms in a system with single instanced resource types, there will undoubtedly be a deadlock. Detecting a cycle, on the other hand, isn't adequate in a graph with numerous instances of the same resource type. By turning the resource allocation graph into the allocation matrix and request matrix, we must apply the safety algorithm to the system.

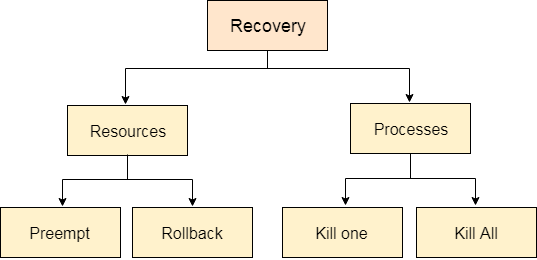

Either OS examines resources or processes in order to recover the system from deadlocks.

Fig 6: Deadlock recovery

Preempt the resource

We can take one of the resources from the resource owner (process) and give it to another process in the hopes that it will finish the execution and release the resource sooner. Choosing a resource that will be snatched, on the other hand, will be tough.

Rollback to a safe state

To reach the stalemate state, the system goes through a series of states. The operating system has the ability to restore the system to a previous safe state. The OS must implement check pointing at each state for this to work.

When we reach a deadlock, we shall reverse all allocations and return to the prior safe state.

Kill a process

Our problem can be solved by killing a process, however the bigger issue is deciding which process to kill. In most cases, the operating system kills a process that has done the least amount of work up to this point.

Kill all process

This is not a persuasion strategy, although it may be used if the problem becomes extremely critical. Killing all processes will result in system inefficiencies because all processes will re-start from the beginning.

Key takeaway

The OS does not use any mechanisms to avoid or prevent deadlocks in this way. As a result, the system assumes that a deadlock will inevitably occur. T

If a cycle forms in a system with single instanced resource types, there will undoubtedly be a deadlock.

Locking the objects involved in a composite operation is a natural approach to concurrency control (transaction). We will demonstrate that locking can achieve transaction serializability, but that deadlock must be considered. An alternative to locking is to assign a time stamp to each transaction and use this as the foundation for enforcing a single serializable order of execution of transaction operations. Concurrency control methods are considered in light of the possibility that conflict is extremely unlikely, and that putting a lot of mechanisms in place to resolve it would be a waste of time. Optimistic concurrency control is the final option we discuss because it may be the most appropriate method for particular application domains.

To begin, we show that concurrent invocation of composite operations in main memory suffers from some of the same issues as transaction systems. After that, we concentrate on transaction processing systems (where persistent memory must be used).

Concurrent composite operations in main memory only

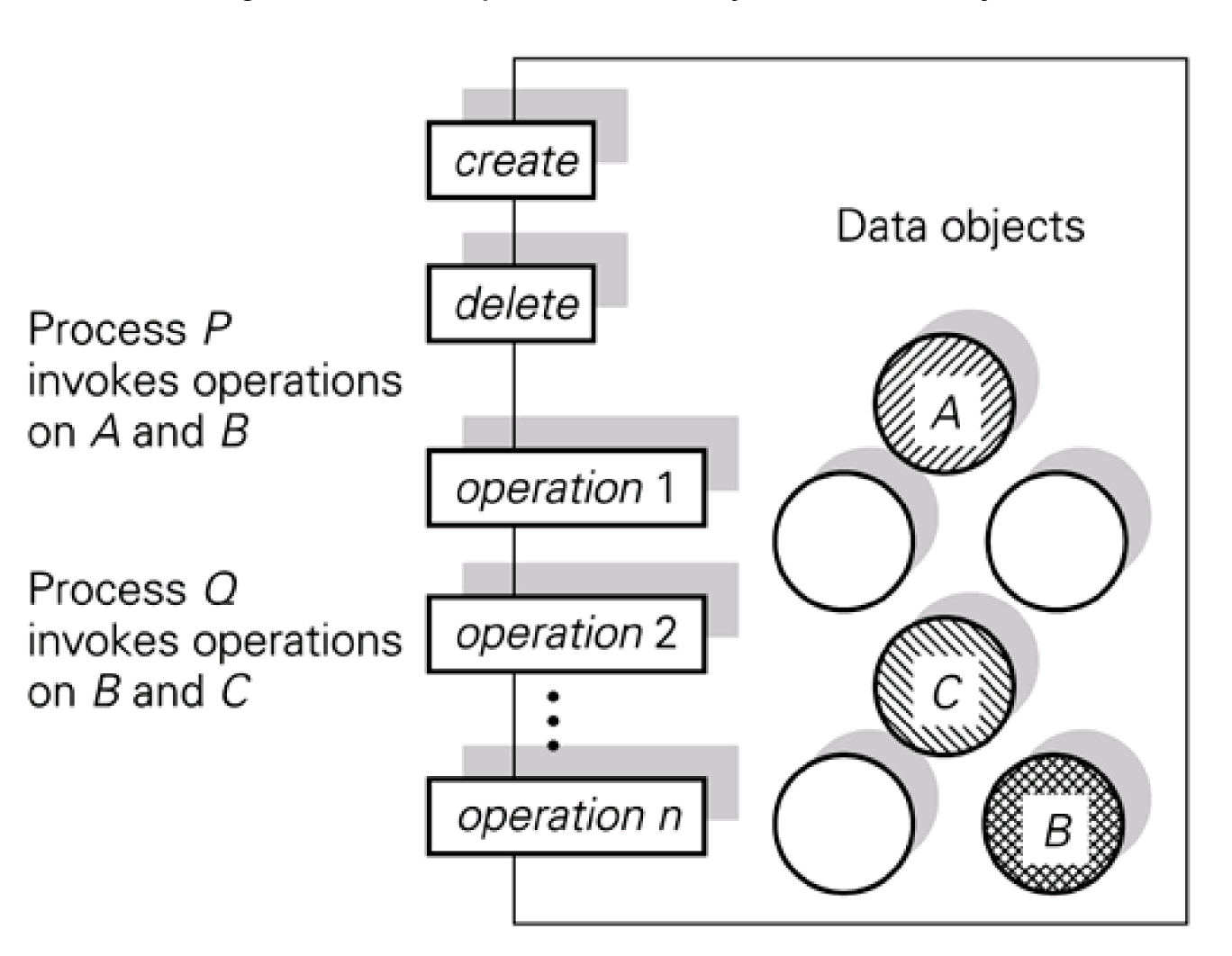

Assume that a concurrent program was built in a modular manner. Each shared data abstraction is assumed to be implemented as an abstract data type, as shown in Figure, and that a data object is locked for exclusive usage when any of its actions is invoked.

Fig: Related operations on data objects in main memory

This paradigm is affected by the issues of uncontrolled concurrent invocation of composite operations, which were explored in Part III. Assume that one concurrent process operates on both objects A and B in Figure; that is, a meaningful, high-level operation includes both an operation on object A and an operation on object B. Another composite action triggered by a different process also involves both item B and object C. We've already seen how improper interleaving’s of the sub operations of composite operations can lead to inaccurate values. This is true for both main memory and persistent memory data objects.

Objects in the main memory of a single computer

The attributes of transactions were established: atomicity, consistency, isolation, and durability (ACID). These features are useful for concurrent composite operation invocation in main memory only; that is, when no sub operation of a composite operation writes to persistent store. Let's start with a program that runs in a single computer's main memory.

Atomicity - If a crash occurs during a composite operation, all sub-operation effects are lost. The composite procedure will finish if there is no crash. Atomicity is self-contained and does not require any particular enforcement.

Consistency - A composite (high-level) operation is assumed to have significance. The state handled by the program is changed from one consistent value to another when a single composite action is invoked. We are unconcerned with crashes because a crash wipes out all state in main memory. When there are multiple invocations running at the same time, consistency must be guaranteed.

Isolation - The results of a composite operation's component operations should not be divulged until the process is finished. Isolation must be strictly enforced.

Durability - If a program is running in a single computer's main memory and the computer crashes during a composite operation invocation, the entire main memory is lost. The issue of durability is irrelevant.

The qualities of consistency and isolation are important in ensuring that programs with composite operation invocations run correctly. The primary challenge is the control of concurrent execution of composite operations.

Concurrency

Concurrency is the simultaneous execution of multiple instruction sequences. When numerous process threads are running in parallel in the operating system, this occurs. The threads of a running process always communicate with one another via shared memory or message forwarding. Concurrency causes resource sharing, which leads to issues like as deadlocks and resource scarcity.

It aids with approaches such as process coordination, memory allocation, and execution scheduling in order to maximize throughput.

Principles

Interleaved and overlapping processes are both forms of concurrent processes, and they both have the same issues.

It is impossible to estimate the relative pace of execution. It is determined by the following factors:

● Processes that are involved in other processes.

● Interrupt handling in the operating system.

● The operating system's scheduling policies.

Issues of concurrency

Non-atomic –

Non-atomic operations that are interrupted by several processes can cause issues.

Race condition

When the outcome is determined by which of numerous processes arrives at a place first, a race situation occurs.

Blocking

Waiting for resources might be stifled by processes. A process may be halted for an extended amount of time while it waits for input from a terminal. This would be particularly bad if the procedure was required to update some data on a regular basis.

Starvation

It occurs when a procedure is unable to advance due to a lack of service.

Deadlocks

It happens when two processes are blocked, and neither of them can execute.

Systematic approaches to concurrent program development

The following are some techniques to solve these difficulties in the context of a concurrent program:

- Adding a transaction specification to the programming language is a good idea. As an example.

Start transaction

Invoke operation 1 on object A

Invoke operation n on object B

End transaction;

2. Use formal program analysis techniques to ensure that the software system is free of deadlocks.

3. Incorporate a program with a universal object management. The manager could make use of data structures and algorithms to detect or avoid deadlock.

4. Consider the likelihood of deadlock when developing the concurrent program, and prevent calls between operations that could result in cycles (a systematic rather than formal approach).

To avoid cycles, the order in which locks are acquired for a given program could be defined statically. The reverse direction of call is not necessary in circumstances when large data objects are broken down into smaller components so that an operation on the enclosing object includes a nested call to the contained object. It may also be feasible to lock only the component in question, rather than the entire contained object. In general, avoiding the likelihood of cycles is challenging, and a methodical, unstructured approach is prone to error. Small difficulties, such as the example of the dining philosophers, can be solved on a case-by-case basis.

5. Consider time-stamp ordering as an alternative to locking for concurrency control.

References:

- Silberschatz, Galvin, Gagne, "Operating System Principles", 9th Edition, Wiley, ISBN 978- 1-118-06333-0

- Alfred V. Aho, Ravi Sethi, Reffrey D. Ullman, “Compilers Principles, Techniques, and Tools”, Addison Wesley, ISBN 981-235-885-4

- Leland Beck, “System Software: An Introduction to Systems Programming”, Pearson

- Dhamdhere D., "Systems Programming and Operating Systems", McGraw Hill, ISBN 0 - 07 - 463579 – 4