CN

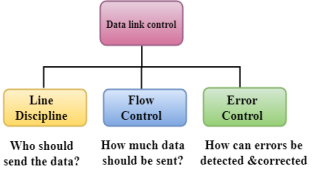

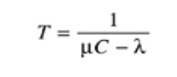

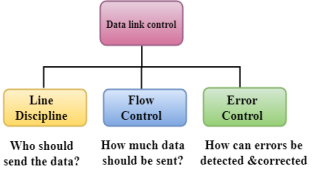

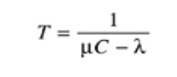

Unit - 2: Data Link Layer

2.1 DLC Services, HDLC

Data Link Control (DLC) is the service provided by the Data Link Layer for reliable data transfer over the physical medium. For example, in half-duplex transmission mode, only one device can transmit at a time. If the devices at both ends of the link transmit simultaneously, their transmissions collide and information is lost. The Data Link Layer coordinates the devices so that no collision occurs.

The three functions of the data link layer are:

- Line discipline
- Flow control
- Error control

HDLC

Short for High-level Data Link Control, HDLC is a transmission protocol used at the data link layer (layer 2) of the OSI seven-layer model for data communications. The HDLC protocol embeds information in a data frame that allows devices to control data flow and correct errors. HDLC is an ISO standard developed from the Synchronous Data Link Control (SDLC) standard proposed by IBM in the 1970s.

For any HDLC communications session, one station is designated primary and the other secondary. A session can use one of the following connection modes, which determine how the primary and secondary stations interact:

- Normal unbalanced (Normal Response Mode, NRM): The secondary station responds only to the primary station.
- Asynchronous (Asynchronous Response Mode, ARM): The secondary station can initiate a message.
- Asynchronous balanced (Asynchronous Balanced Mode, ABM): Both stations send and receive over their part of a duplex line. This mode is used for X.25 packet-switching networks.

The protocols used to determine who goes next on a multi-access channel belong to a sublayer of the data link layer called the MAC (Medium Access Control) sublayer, which is the bottom part of the data link layer. The MAC sublayer is especially important in LANs, particularly wireless ones, because wireless is naturally a broadcast channel. Broadcast channels are sometimes referred to as multi-access channels or random access channels.
The Channel Allocation Problem

The channel allocation problem is how to allocate a single broadcast channel among competing users.

Static Channel Allocation in LANs and MANs

The traditional way of allocating a single channel, such as a telephone trunk, among multiple competing users is Frequency Division Multiplexing (FDM). If there are N users, the bandwidth is divided into N equal-sized portions, each user being assigned one portion. Since each user has a private frequency band, there is no interference between users. When there is only a small and constant number of users, each of which has a heavy (buffered) load of traffic (e.g., carriers' switching offices), FDM is a simple and efficient allocation mechanism.

However, when the number of senders is large and continuously varying, or the traffic is bursty, FDM presents some problems. If the spectrum is cut up into N regions and fewer than N users are currently interested in communicating, a large piece of valuable spectrum will be wasted. If more than N users want to communicate, some of them will be denied permission for lack of bandwidth, even if some of the users who have been assigned a frequency band hardly ever transmit or receive anything.

Even assuming that the number of users could somehow be held constant at N, dividing the single available channel into static subchannels is inherently inefficient. The basic problem is that when some users are quiescent, their bandwidth is simply lost. They are not using it, and no one else is allowed to use it either. Furthermore, in most computer systems, data traffic is extremely bursty (peak traffic to mean traffic ratios of 1000:1 are common). Consequently, most of the channels will be idle most of the time. The poor performance of static FDM can easily be seen from a simple queuing theory calculation.
Let us start with the mean time delay, T, for a channel of capacity C bps, with an arrival rate of λ frames/sec, each frame having a length drawn from an exponential probability density function with mean 1/μ bits/frame. With these parameters the arrival rate is λ frames/sec and the service rate is μC frames/sec. From queuing theory it can be shown that, for Poisson arrival and service times,

$$T = \frac{1}{\mu C - \lambda}$$

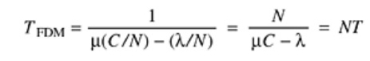


For example, if C is 100 Mbps, the mean frame length, 1/μ, is 10,000 bits, and the frame arrival rate, λ, is 5000 frames/sec, then T = 200 μsec. Note that if we ignored the queuing delay and just asked how long it takes to send a 10,000-bit frame on a 100-Mbps network, we would get the (incorrect) answer of 100 μsec. That result only holds when there is no contention for the channel.

Now let us divide the single channel into N independent subchannels, each with capacity C/N bps. The mean input rate on each of the subchannels will now be λ/N. Recomputing T we get

$$T_{\text{FDM}} = \frac{1}{\mu (C/N) - (\lambda/N)} = \frac{N}{\mu C - \lambda} = NT$$

The mean delay using FDM is N times worse than if all the frames were somehow magically arranged in order in a big central queue.

Precisely the same arguments that apply to FDM also apply to time division multiplexing (TDM). Each user is statically allocated every Nth time slot. If a user does not use the allocated slot, it just lies fallow. The same holds if we split up the networks physically. Using our previous example again, if we were to replace the 100-Mbps network with 10 networks of 10 Mbps each and statically allocate each user to one of them, the mean delay would jump from 200 μsec to 2 msec.

Since none of the traditional static channel allocation methods work well with bursty traffic, we will now explore dynamic methods.
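The delay figures quoted above (200 μsec for the single channel, 2 msec for ten static subchannels) can be reproduced with a short calculation. The following is a minimal sketch, assuming the queuing model described in the text; the function and parameter names are illustrative and not part of the original notes.

```python
def mean_delay(capacity_bps, mean_frame_bits, arrival_rate_fps):
    """Mean delay T = 1 / (mu*C - lambda) for one shared channel."""
    # mu*C: how many frames per second the channel can serve.
    service_rate_fps = capacity_bps / mean_frame_bits
    if arrival_rate_fps >= service_rate_fps:
        raise ValueError("arrival rate must be below the service rate")
    return 1.0 / (service_rate_fps - arrival_rate_fps)


def mean_delay_fdm(capacity_bps, mean_frame_bits, arrival_rate_fps, n_subchannels):
    """Mean delay when the channel is split into N static subchannels.

    Each subchannel gets capacity C/N and sees an arrival rate of lambda/N.
    """
    return mean_delay(capacity_bps / n_subchannels,
                      mean_frame_bits,
                      arrival_rate_fps / n_subchannels)


# Values from the text: C = 100 Mbps, 1/mu = 10,000 bits, lambda = 5000 frames/sec.
t_single = mean_delay(100e6, 10_000, 5000)
t_fdm = mean_delay_fdm(100e6, 10_000, 5000, 10)

print(f"single channel: {t_single * 1e6:.0f} usec")
print(f"10 subchannels: {t_fdm * 1e3:.1f} msec")
print(f"ratio: {t_fdm / t_single:.1f}")
```

The ratio printed on the last line equals the number of subchannels, which is the "N times worse" result stated in the text.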

2.2 Media Access Control: Random Access, Controlled Access, Channelization

The Data Link Layer is responsible for transmission of data between two nodes. Its main functions are:

- Data Link Control
- Multiple Access Control

Data Link Control –

Data link control is responsible for reliable transmission of messages over the transmission channel, using techniques such as framing, error control and flow control. For Data Link Control refer to – Stop and Wait ARQ

Multiple Access Control –

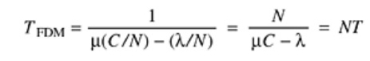

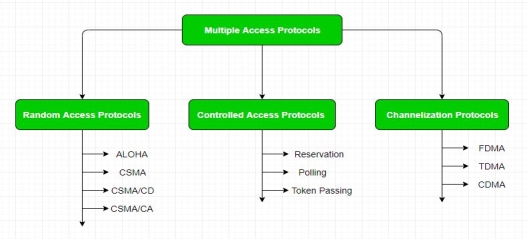

If there is a dedicated link between the sender and the receiver, then data link control is sufficient. However, if there is no dedicated link, multiple stations can access the channel simultaneously. Hence multiple access protocols are required to reduce collisions and avoid crosstalk. For example, in a classroom full of students, when a teacher asks a question and all the students (the stations) start answering simultaneously (send data at the same time), the result is chaos (data overlaps or is lost). It is then the job of the teacher (the multiple access protocol) to manage the students and make them answer one at a time.

Thus, protocols are required for sharing data on non-dedicated channels. Multiple access protocols can be subdivided further as –

1. Random Access Protocol: In this, all stations have equal priority; no station has more priority than another. Any station can send data depending on the medium's state (idle or busy). It has two features:

- There is no fixed time for sending data
- There is no fixed sequence of stations sending data

The random access protocols are further subdivided as:

(a) ALOHA – It was designed for wireless LANs but is also applicable to any shared medium. In this, multiple stations can transmit data at the same time, which can lead to collisions and garbled data.

Pure ALOHA:

When a station sends data, it waits for an acknowledgement. If the acknowledgement does not arrive within the allotted time, the station waits for a random amount of time, called the back-off time (Tb), and re-sends the data. Since different stations wait for different amounts of time, the probability of a further collision decreases.

- Vulnerable time = 2 × frame transmission time
- Throughput: $S = G e^{-2G}$, where G is the average number of frames generated by the whole system during one frame transmission time
- Maximum throughput = 0.184 at G = 0.5
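The pure ALOHA throughput formula above can be checked numerically. This is a minimal sketch, assuming only the formula given in the text; the function name and the list of sample loads are illustrative choices.

```python
import math


def pure_aloha_throughput(G):
    """Throughput S = G * exp(-2G) for offered load G (frames per frame time)."""
    return G * math.exp(-2 * G)


# Sample offered loads, chosen only for illustration.
loads = [0.1, 0.25, 0.5, 1.0, 2.0]
for G in loads:
    print(f"G = {G:4.2f}  S = {pure_aloha_throughput(G):.3f}")

# The load with the highest throughput among the samples.
best_load = max(loads, key=pure_aloha_throughput)
print("best sampled load:", best_load)
```

Among the sampled loads the peak is at G = 0.5, where S = 0.5/e ≈ 0.184, matching the maximum quoted above. Throughput falls off on both sides: at low load the channel is mostly idle, and at high load most frames collide.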

Slotted ALOHA: It is similar to pure ALOHA, except that time is divided into slots and a station may start sending only at the beginning of a slot. If a station misses the start of a slot, it must wait for the next one. This reduces the probability of collision.

- Vulnerable time = frame transmission time
- Throughput: $S = G e^{-G}$
- Maximum throughput = 0.368 at G = 1

For more information on ALOHA refer – LAN Technologies

(b) CSMA – Carrier Sense Multiple Access ensures fewer collisions, as a station is required to first sense the medium (idle or busy) before transmitting data. If the medium is idle, the station sends data; otherwise it waits until the channel becomes idle. However, there is still a chance of collision in CSMA due to propagation delay. For example, if station A wants to send data, it first senses the medium. If it finds the channel idle, it starts sending data. However, if station B senses the medium before the first bit from station A has reached it (because of propagation delay), B will also find the medium idle and will also send data. This results in a collision between the data from stations A and B.

CSMA access modes:

- 1-persistent: The node senses the channel. If it is idle, the node sends the data; otherwise it keeps checking the medium continuously and transmits unconditionally (with probability 1) as soon as the channel becomes idle.
- Non-persistent: The node senses the channel. If it is idle, the node sends the data; otherwise it checks the medium again after a random amount of time (not continuously) and transmits when it is found idle.
- p-persistent: The node senses the medium. If it is idle, the node sends the data with probability p. If the data is not transmitted (probability 1 − p), the node waits for some time and checks the medium again; if it is found idle, the node again sends with probability p. This repeats until the frame is sent. It is used in Wi-Fi and packet radio systems.
- O-persistent: The transmission order of the nodes is decided beforehand and transmission occurs in that order. If the medium is idle, a node waits for its time slot to send data.

(c) CSMA/CD – Carrier sense multiple access with collision detection. Stations can terminate transmission of data if a collision is detected. For more details refer – Efficiency of CSMA/CD

(d) CSMA/CA – Carrier sense multiple access with collision avoidance. Collision detection relies on the sender examining the signal it receives while transmitting. If there is just one signal (its own), the data has been sent successfully; if there are two signals (its own and the one it has collided with), a collision has occurred. To distinguish between these two cases, a collision must have a large impact on the received signal. This is not the case in wireless networks, where much of the signal energy is lost in transmission, so CSMA/CA is used there.

CSMA/CA avoids collisions by:

- Interframe space – The station waits for the medium to become idle, and when it is found idle the station does not send data immediately (to avoid a collision due to propagation delay). Instead it waits for a period of time called the interframe space (IFS). After this time it checks again whether the medium is idle. The IFS duration depends on the priority of the station.
- Contention window – This is an amount of time divided into slots. A sender that is ready to send data chooses a random number of slots as its wait time. The window from which this number is drawn doubles every time the medium is not found idle. If the medium is found busy during the wait, the station does not restart the entire process; it stops the timer and restarts it when the channel is found idle again.
- Acknowledgement – The sender re-transmits the data if an acknowledgement is not received before the time-out.
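The contention window behaviour described above for CSMA/CA can be sketched in a few lines. This is an illustrative sketch only, not an implementation of any particular standard: the function names, the starting window of one slot (`cw_min`), and the cap (`cw_max`) are assumptions made for the example.

```python
import random


def contention_window_slots(attempt, cw_min=1, cw_max=1024):
    """Window size in slots: starts at cw_min and doubles per failed attempt.

    cw_min and cw_max are hypothetical defaults chosen for illustration.
    """
    return min(cw_min * (2 ** attempt), cw_max)


def pick_backoff(attempt, rng):
    """Choose a random wait, in slots, inside the current contention window."""
    return rng.randint(0, contention_window_slots(attempt) - 1)


def count_down(backoff_slots, channel_busy_per_slot):
    """Run the backoff timer, pausing (not restarting) while the medium is busy.

    channel_busy_per_slot holds one boolean per elapsed slot.
    Returns (slots elapsed, slots still remaining on the timer).
    """
    remaining = backoff_slots
    elapsed = 0
    for busy in channel_busy_per_slot:
        if remaining == 0:
            break
        elapsed += 1
        if not busy:
            remaining -= 1  # the timer only advances while the medium is idle
    return elapsed, remaining


rng = random.Random(42)  # fixed seed so repeated runs pick the same waits
for attempt in range(4):
    window = contention_window_slots(attempt)
    print(f"attempt {attempt}: window = {window} slots, wait = {pick_backoff(attempt, rng)}")

# A 3-slot wait interrupted by two busy slots takes 5 slots in total.
print(count_down(3, [False, True, True, False, False, False]))
```

The last call returns `(5, 0)`: the two busy slots stretch the wait but do not reset it, which is the "does not restart the entire process" behaviour described in the text.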

2. Controlled Access: In this, data is sent by the station that has been approved by all other stations. For further details refer – Controlled Access Protocols

3. Channelization:

In this, the available bandwidth of the link is shared in time, frequency, or code so that multiple stations can access the channel simultaneously.

- Frequency Division Multiple Access (FDMA) – The available bandwidth is divided into equal bands so that each station can be allocated its own band. Guard bands are also added so that no two bands overlap, which avoids crosstalk and noise.
- Time Division Multiple Access (TDMA) – In this, the bandwidth is shared between multiple stations in time. To avoid collisions, time is divided into slots and stations are allotted these slots to transmit data. However, there is a synchronization overhead, as each station needs to know its time slot. This is resolved by adding synchronization bits to each slot. Another issue with TDMA is propagation delay, which is resolved by adding guard times.

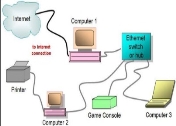

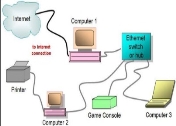

For more details refer – Circuit Switching

- Code Division Multiple Access (CDMA) – One channel carries all transmissions simultaneously. There is neither division of bandwidth nor division of time. For example, if there are many people in a room all speaking at the same time, perfect reception is still possible as long as only the two people in each conversation speak the same language. Similarly, data from different stations can be transmitted simultaneously in different code languages.

2.3 Wired LAN: Ethernet Protocol, Standard Ethernet, Fast Ethernet, Gigabit Ethernet, 10 Gigabit Ethernet

Ethernet is a very popular LAN technology in use today, covering the physical layer and the MAC sublayer. It defines the number of conductors required for a connection, the performance thresholds that can be expected, and, most importantly, the framework for data transmission. A standard Ethernet network can transmit data at a rate of up to 10 megabits per second (10 Mbps). Other LAN types include Token Ring, Fast Ethernet, Gigabit Ethernet, 10 Gigabit Ethernet, Fibre Distributed Data Interface (FDDI), Asynchronous Transfer Mode (ATM) and LocalTalk.

Ethernet is popular because it strikes a very good balance between speed, cost and ease of installation. These benefits, combined with wide acceptance in the computer marketplace and the ability to support virtually all popular network protocols, make Ethernet an ideal networking technology for most computer users today.

The Institute of Electrical and Electronics Engineers (IEEE) developed an Ethernet standard known as IEEE Standard 802.3. This standard defines the rules for configuring an Ethernet network and specifies how the elements in an Ethernet network interact with one another. By adhering to the IEEE standard, network equipment and network protocols can communicate efficiently.

Ethernet

It is a standard communication protocol that is embedded in software and hardware devices. Ethernet is used for building a LAN.
A local area network is a computer network that interconnects a group of computers and shares information through cables or wires.

Wired Ethernet Network

In a wired network, an Ethernet cable runs from each port, linking one device to another and thereby establishing a network connection. Even when there are multiple devices in different areas of a property, a cable connects to each component separately. Generally a hub, router, or switch is required to connect numerous PCs. CAT6 cable is commonly used, and many new homes are pre-wired during construction, which saves time and future-proofs the house for years to come.
