Unit - 1

Software Engineering Fundamentals

Software evolution is a concept that refers to the process of creating software and then upgrading it on a regular basis for different purposes, such as adding new features or removing obsolete functionality. Change analysis, release preparation, system implementation, and system release to customers are all part of the evolution process.

The cost and impact of the proposed changes are analysed to determine how much of the system will be affected by the change and how much it will cost to implement it. If the proposed changes are approved, a new release of the software system is planned. During release planning, all proposed changes are taken into account.

The Importance of Software Evolution

Software evolution is needed for the following reasons:

- Change in requirements over time: An organization's needs and ways of working change significantly over time, so the software tools it uses must also change to keep productivity high in a rapidly changing environment.

- Environment change: When the working environment changes, the tools that allow us to operate in that environment must change accordingly. Organisations must therefore reintroduce old applications with revised features and functionality so that they fit the new environment.

- Errors and bugs: As an organization's deployed software ages, it tends to become less accurate and reliable, and its ability to handle increasingly complex workloads continues to deteriorate. The software must therefore evolve to correct these errors.

- Security risks: Using obsolete software inside an enterprise exposes it to a variety of software-based cyberattacks and can reveal sensitive data handled by that software. Regular assessment of the security patches and modules used in the program is therefore needed to prevent such breaches.

Key takeaway:

Software evolution is a concept that refers to the process of creating software and then upgrading it on a regular basis for different purposes, such as adding new features or removing obsolete functionality.

Software myths are mistaken beliefs about software and the process used to build it, and they have caused serious problems for managers and technical staff alike. Software myths spread misinformation and confusion. There are three kinds of software myths:

1. Management Myths

Managers with software responsibility are often under pressure to control budgets, keep schedules from slipping, and improve efficiency. Common management myths include:

Myth: We already have a book full of standards and procedures for building software. Won't that teach my people everything they need to know?

Reality: The book of standards may very well exist, but it is often not used. Most software practitioners are not even aware of it. It often does not reflect current software engineering practice, and it is frequently incomplete.

Myth: We can add more programmers and catch up if we get behind schedule (sometimes called the Mongolian horde concept).

Reality: Software development is not a mechanistic process like manufacturing. When new people are added to a late project, the people who have been working on it must spend time training the newcomers, which reduces the time available for productive development. People can be added, but only in a planned and well-coordinated manner.

Myth: If I decide to outsource the software project to a third party, I can just relax and let that firm build it.

Reality: If an organization does not understand how to manage and control software projects internally, it will invariably struggle when it outsources software projects.

2. Developer’s Myths

Developers have these myths:

Myth: Until I get the program 'running', I have no way of assessing its quality.

Reality: One of the most effective software quality assurance mechanisms, the systematic technical review, can be applied from the very beginning of a project. Software reviews act as a "quality filter" and have been found to be more effective than testing at finding certain classes of software defects.

Myth: Software engineering will make us produce voluminous paperwork that is redundant and will invariably slow us down.

Reality: Software engineering is not about creating documents. It is about creating quality. Better quality leads to less rework, and less rework results in faster delivery times.

Myth: Once we write the program and get it to work, our job is done.

Reality: Someone once said, "The sooner you begin writing code, the longer it will take you to get done." Industry data indicate that between 60 and 80 percent of all effort spent on software is spent after it is delivered to the customer for the first time.

3. Customer’s Myths

Customer myths lead to false expectations on the customer's part and, ultimately, to dissatisfaction with the developer. Common customer myths include:

Myth: Project specifications change constantly, but since software is modular, adjustments can be easily accommodated.

Reality: It is true that software requirements change, but the impact of a change varies with the time at which it is introduced. When changes are requested during software design, the cost impact grows rapidly: resources have been committed and a design framework has been established, so the change can cause upheaval that brings heavy additional costs. Changes requested after the software has been developed may be far more costly than the same change requested earlier.

Myth: A general statement of objectives is enough to begin writing programs; we can fill in the details later.

Reality: A poor up-front definition is the major cause of failed software efforts. A formal and detailed description of the functions, behaviour, performance, interfaces, design constraints, and validation criteria is essential.

Key takeaway

Software myths spread misinformation and confusion.

The word "paradigm" comes from the Greek word for "example" or "pattern", and it refers to a group of things that have something in common. Software engineering paradigms are also known as software engineering models or software development models.

To reduce the potential chaos of developing software applications and systems, we use software process models and paradigms that describe the tasks required to develop high-quality software systems. The choice of process model or paradigm for a given system depends heavily on the nature of the target system. Using software paradigms to define software processes has several advantages, including support for a systematic approach and the application of standard techniques and procedures.

The software engineering paradigm, often known as a software process model or the Software Development Life Cycle (SDLC) model, is a development strategy that includes processes, methodologies, and tools. SDLC refers to the period of time that begins with the conceptualization of a software system and ends with its disposal after use.

The following are some of the goals of using software engineering paradigms:

● The software development process becomes more organised.

● The paradigm determines the order in which the stages of software development and evolution occur, and the criteria for moving on to the next stage.

● The paradigm guides the software engineer's work.

A paradigm describes a method or philosophy for designing, developing, and maintaining software. Each paradigm has its own set of benefits and drawbacks, making one paradigm more appropriate for designing a software system in a specific context than another.

The methodologies, tools, procedures, and methods used to design software systems are highly influenced by the paradigm chosen.

History of Software Paradigms

Since the 1950s and 1960s, when the first significant software projects were being developed, computer scientists have been developing Software Development Life Cycle (SDLC) models to regulate the entire development process. Over time, a number of process models have been developed. These models were created to serve as a guide and a conceptual framework for managing the system software project in a methodical, formal, and procedural manner. They also serve as a roadmap for developing software applications. The many duties of the software system project, such as planning, organising, staffing, coordinating, budgeting, and managing software development operations, are guided by software models.

Over time, a number of different process models have emerged and been refined. Each represents an attempt to bring order to an activity that is inherently chaotic. Each model is described in a way that makes it suitable for controlling and coordinating a software project.

Different software models emphasise different parts of the SDLC stages, which is one of the differences between them. Each focuses on a distinct part of the software life cycle, and each is appropriate for projects in which the highlighted aspects are critical.

These models were classic, traditional, and immature in the early phases of the evolution of software models.

Many descriptions of the classic software life cycle have been developed since the 1950s and 1960s, and the "waterfall" model was the first of these software models. Software models are commonly described using flowcharts, which show how large software systems are developed in a single visual representation. These flowcharts are also used to present and explain the corresponding software model to customers, business managers, and development programmers.

The Software Engineering Paradigms (Process Models)

The following are the four basic models:

- The standard software development life cycle (SDLC), generally known as the waterfall model.

- The spiral model

- Incremental process model

- Agile development model

Waterfall model

Winston Royce introduced the Waterfall Model in 1970. This model has five phases: requirements analysis and specification; design; implementation and unit testing; integration and system testing; and operation and maintenance. The phases always follow this order and do not overlap. The developer must complete each phase before the next phase begins. The model is called the “Waterfall Model” because its graphical representation resembles a cascading waterfall.

1. Requirements analysis and specification phase: The aim of this phase is to understand the exact requirements of the customer and to document them properly. It describes the “what” of the system to be produced, not the “how”. In this phase, a large document called the Software Requirement Specification (SRS) is created, which contains a detailed description, in natural language, of what the system will do.

2. Design Phase: This phase aims to transform the requirements gathered in the SRS into a suitable form which permits further coding in a programming language. It defines the overall software architecture together with high level and detailed design. All this work is documented as a Software Design Document (SDD).

3. Implementation and unit testing: During this phase, the design is implemented in code and each unit is tested. If the SDD is complete, the implementation or coding phase proceeds smoothly, because all the information software developers need is contained in the SDD.

4. Integration and System Testing: This phase is highly crucial, as the quality of the end product is determined by the effectiveness of the testing carried out. In this phase, the modules are integrated and tested for their interactions with each other and with the system as a whole.

5. Operation and maintenance phase: Maintenance comprises the tasks performed after the software has been delivered to the customer, installed, and made operational.

Fig 1: Waterfall model
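The strictly sequential flow of the waterfall model can be sketched as a pipeline in which each phase consumes the work product of the previous one and no phase starts until the one before it has finished. This is only a minimal illustration: the phase names come from the list above, while the function `run_waterfall`, the `do_phase` callback, and the starting input "customer needs" are hypothetical.

```python
from typing import Callable

# The five waterfall phases, in the order described above.
PHASES = [
    "Requirements analysis and specification",
    "Design",
    "Implementation and unit testing",
    "Integration and system testing",
    "Operation and maintenance",
]


def run_waterfall(
    phases: list[str], do_phase: Callable[[str, str], str]
) -> tuple[list[str], str]:
    """Run each phase exactly once, in order (hypothetical sketch)."""
    work_product = "customer needs"  # assumed input to the first phase
    completed: list[str] = []
    for phase in phases:
        # Each phase consumes the previous phase's output, so it cannot
        # begin until that phase has completed.
        work_product = do_phase(phase, work_product)
        completed.append(phase)
    # No loop back: a completed phase is never revisited.
    return completed, work_product


completed, final = run_waterfall(PHASES, lambda phase, prev: f"{phase} <- {prev}")
print(completed[-1])  # Operation and maintenance
```

The absence of any backward step in the loop is exactly what makes the model simple to manage, and also why late changes to requirements are hard to accommodate.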

Advantages of Waterfall model

- All phases are clearly defined.

- One of the most systematic methods for software development.

- Being the oldest, this is one of the most time-tested models.

- It is simple and easy to use.

Disadvantages of Waterfall Model

● Real projects rarely follow the sequential model.

● It is often difficult for the customer to state all requirements explicitly.

● Poor model for complex and object-oriented projects.

● Poor model for long and ongoing projects.

● High amount of risk and uncertainty.

● Poor model where requirements are at a moderate to high risk of changing.

● This model is suited to automating an existing manual system for which all requirements are known before design starts. For new systems, however, such fixed requirements are usually not available.

Key takeaway

● The developer must complete all stages before the start of the next phase.

● Simple and easy to understand and use.

● Because the model is rigid, it is easy to manage.

● Phases are processed and completed one at a time.

The quality of a software product is commonly described by the following characteristics, each of which has its own sub-characteristics:

Functionality: Refers to the degree to which the software performs its intended functions.

Its sub-characteristics include:

● Suitability

● Security

● Accuracy

● Interoperability

Reliability: Refers to the ability of software to perform a required function under given conditions for a specified period.

Its sub-characteristics include:

● Maturity

● Recoverability

Usability: Refers to the degree to which software is easy to use, that is, how easily the program can be used and how much time or effort it takes to learn to use it.

Its sub-characteristics include:

● Operability

● Understandability

● Learnability

Efficiency: Refers to the ability of software to use system resources in the most effective and efficient manner.

Its sub-characteristics include:

● Resource utilisation

● Time behaviour

Maintainability: Refers to the ease with which a software system can be modified to add capabilities, improve system performance, or correct errors.

Its sub-characteristics include:

● Testability

● Stability

● Changeability

Portability: Refers to the ease with which software developers can transfer software from one platform to another with no (or minimal) changes.

Its sub-characteristics include:

● Adaptability

● Installability

● Replaceability

Robustness: The ability of software to handle unexpected errors and faults gracefully.

Key takeaway:

Software is more than mere code for a programme.

On the other hand, engineering is all about product creation, using well-defined scientific concepts and methods.

Software engineering is a branch of engineering that uses well-defined scientific concepts, methods and procedures to create software products.

Linear Sequential Development Model

It is often referred to as the classic life cycle or the waterfall model. This model suggests a systematic, sequential approach to software development that begins at the system level and progresses through analysis, design, coding, testing, and support.

In this model, each of the following five framework activities is carried out in a linear process flow:

1) Information/System Engineering and Modeling

Software is always part of a larger system or organization, so work begins by establishing requirements for all system elements and then allocating some subset of these requirements to software. This system view is essential when software must interact with other elements such as hardware, people, and databases.

2) Design

Software design is actually a multi-step process that focuses on distinct program attributes: data structure, software architecture, interface representations, and procedural (algorithmic) detail. The design process translates the requirements into a representation of the software that can be checked for quality and consistency before coding begins.

3) Code generation

The design must be translated into a machine-readable form, and the code generation step performs this task. If design has been carried out in detail, code generation can be accomplished mechanistically, and the resulting code is expected to implement the design exactly.

4) Testing

Program testing begins once code has been generated. The testing process focuses on the logical internals of the software, ensuring that all statements have been tested, and on the functional externals, that is, conducting tests to uncover errors and to ensure that defined inputs produce actual results that agree with the required results. Unit testing is the process by which individual units of source code (sets of one or more program modules together with their associated control data) are tested to determine whether they are fit for use.
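As an illustration of unit testing, the sketch below tests one small unit in isolation using Python's standard `unittest` module, checking that specified inputs produce the required results and that invalid input is rejected. The function `average` is a hypothetical unit invented for this example, not part of any system described here.

```python
import unittest


def average(values: list[float]) -> float:
    """Hypothetical unit under test: the mean of a non-empty list."""
    if not values:
        raise ValueError("average() requires at least one value")
    return sum(values) / len(values)


class AverageTest(unittest.TestCase):
    """Each test method checks one expected behaviour of the unit."""

    def test_typical_input(self):
        self.assertEqual(average([2, 4, 6]), 4)

    def test_single_value(self):
        self.assertEqual(average([5]), 5)

    def test_empty_input_is_rejected(self):
        with self.assertRaises(ValueError):
            average([])
```

Saved in a file, these tests can be run with `python -m unittest <file>`. Because the unit is tested on its own, a failure points directly at `average` rather than at some interaction between modules, which is the concern of integration testing.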

5) Support

Software will almost certainly change after it is delivered to the client. Changes occur because errors are found, because the software must be adapted to changes in its external environment (for example, a new operating system or peripheral device), or because the customer requires functional or performance enhancements.

Disadvantages of the model:

The linear sequential model is the oldest software engineering paradigm. However, several problems have been encountered when it is applied, some of which are listed below.

● Real projects rarely follow the sequential flow that the model proposes. Although the linear model can accommodate iteration, it does so only indirectly.

● It is often difficult for customers to state all requirements explicitly. The model requires this and has difficulty accommodating the natural uncertainty that exists at the beginning of many projects.

● The customer must be patient. A working version of the program will not be available until late in the project time-span, and a major blunder that goes undetected until the working program is reviewed can be disastrous.

Software work today is fast-paced and subject to a never-ending stream of changes, and the linear sequential model is inappropriate for such work. However, it can be a useful process model when requirements are fixed and work can proceed to completion in a linear manner.

Key takeaways

- Often referred to as the classic life cycle or waterfall model.

- This model implies a sequential approach to software development that starts at the system level.

- Each of the above five framework activities is carried out by a linear process flow.

Prototyping Model

Fig 2: Prototyping model of software development

● Often, a customer defines a set of general objectives for software, but does not identify detailed input, processing, or output requirements. In other cases, the developer may be unsure of the efficiency of an algorithm, the adaptability of an operating system, or the form that human-machine interaction should take. In these and many other situations, a prototyping paradigm may offer the best approach.

The various phases of this model are:

● Listen to customer: This is the first step, in which the developer and the customer together define the overall objectives for the software and identify the known requirements. The analyst then outlines the factors and requirements that are not normally visible but are mandatory from a development point of view.

● Build prototype: After the problem has been identified, a quick design is done that focuses on the output the customer wants to see. This quick design leads to the development of a prototype (a temporary working model).

● Customer test drives the prototype: The customer runs and checks the prototype. The customer evaluates the prototype, and further improvements are made to it until the customer is satisfied.

● All the stages are repeated until the customer is satisfied. When the final prototype is fully accepted by the customer, the final development activities are carried out, such as proper coding for maintainability, proper documentation, and testing for robustness. Finally, the software is delivered to the customer.
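The build, evaluate, and refine cycle of the prototyping model can be sketched as a loop. This is a minimal sketch under simplifying assumptions: the customer is simulated by a hypothetical callback `customer_review` that returns the features still missing (an empty set means the prototype is accepted), the customer's real needs are treated as fixed, and `max_rounds` is an assumed safeguard that is not part of the model itself.

```python
from typing import Callable


def prototype_until_accepted(
    initial_features: set[str],
    customer_review: Callable[[set[str]], set[str]],
    max_rounds: int = 10,
) -> tuple[set[str], int]:
    """Refine a prototype until the simulated customer requests no changes.

    Returns the accepted feature set and the number of review rounds.
    """
    prototype = set(initial_features)  # quick design -> first prototype
    for round_no in range(1, max_rounds + 1):
        requested = customer_review(prototype)  # customer test-drives it
        if not requested:
            # Accepted: proper coding, documentation, and testing follow.
            return prototype, round_no
        prototype |= requested  # refine the prototype using the feedback
    raise RuntimeError("prototype not accepted within max_rounds")


wanted = {"login", "search", "report"}  # hypothetical customer needs
accepted, rounds = prototype_until_accepted({"login"}, lambda p: wanted - p)
print(rounds)  # 2
```

In the first round the customer asks for the two missing features; in the second round nothing is missing, so the prototype is accepted and final development can begin.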

Advantages

- It serves as the mechanism for identifying the requirements.

- Because the developer can reuse existing program fragments, software can be produced quickly.

- Continuous communication between developer and customer is maintained.

Disadvantages

- The customer may mistake the prototype for the final working version of the software.

- Developer makes implementation compromises in order to get the prototype working quickly.

- This model is time consuming.

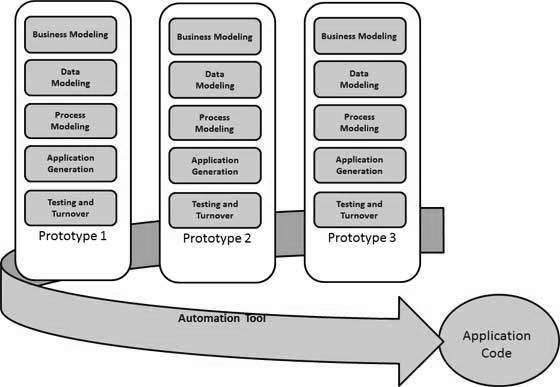

RAD model

The Rapid Application Development (RAD) paradigm is focused on prototyping and an iterative process with little (or no) planning ahead of time. In general, taking a RAD approach to software development means focusing less on planning and more on development and building prototypes. In contrast to the waterfall paradigm, which emphasizes rigorous specification and planning, the RAD approach allows requirements to evolve continuously as development proceeds.

RAD places a strong emphasis on prototyping as an alternative to detailed design specifications. As a result, RAD tends to perform well for applications in which the user interface matters most, and less well for non-GUI programs. Agile techniques and the spiral model are closely related to the RAD model.

Model Design

The steps of the rapid application development (RAD) model are as follows:

- Business modeling: The information flow between several corporate functions is discovered.

- Data modeling: The data items required by the company are defined using the information gathered from business modeling.

- Process modeling: Data objects created in data modeling are converted to create a business information flow in order to achieve a specific business goal. Process descriptions for adding, deleting, and modifying data items are provided.

- Application generation: Using automation tools, the actual system is built and the coding is completed. This turns the general concept, processes, and relevant data into a working product. Because it is still incomplete at this stage, this output is referred to as a prototype.

- Testing and turnover: The RAD methodology reduces total testing cycle time by testing prototypes independently during each cycle.

Fig 3: RAD model
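The data modeling and process modeling steps can be illustrated with a tiny example: a data object identified during data modeling, and process descriptions for adding, modifying, and deleting its instances. The `Customer` object and the `CustomerStore` class are hypothetical and chosen only for illustration; in an actual RAD project, the application generation step would typically produce such code with automated tools rather than by hand.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Customer:
    """Data object identified during data modeling (hypothetical example)."""
    customer_id: int
    name: str
    email: str


class CustomerStore:
    """Process modeling: add, modify, and delete operations on the data object."""

    def __init__(self) -> None:
        self._items: dict[int, Customer] = {}

    def add(self, customer: Customer) -> None:
        if customer.customer_id in self._items:
            raise KeyError(f"customer {customer.customer_id} already exists")
        self._items[customer.customer_id] = customer

    def modify(self, customer_id: int, **changes: str) -> Customer:
        # replace() returns a copy with only the given fields changed;
        # indexing raises KeyError if the customer does not exist.
        updated = replace(self._items[customer_id], **changes)
        self._items[customer_id] = updated
        return updated

    def delete(self, customer_id: int) -> None:
        del self._items[customer_id]

    def get(self, customer_id: int) -> Optional[Customer]:
        return self._items.get(customer_id)


store = CustomerStore()
store.add(Customer(1, "Asha", "asha@example.com"))
store.modify(1, email="asha@example.org")
store.delete(1)
```

Keeping the data object separate from the operations on it mirrors the split between the data modeling and process modeling steps.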

Advantages

- Adapting to changing demand is possible.

- It is possible to track progress.

- With the use of sophisticated RAD tools, iteration time can be cut in half.

- In a short period of time, productivity may be achieved with fewer workers.

- Development time is cut in half.

- Component reusability is improved.

- Initial reviews are conducted quickly.

- Encourages comments from customers.

- Many integration challenges can be avoided by integrating from the start.

Disadvantages

- Dependency on technically strong team members for identifying business requirements.

- Only systems that can be modularized can be built using RAD.

- Requires highly skilled developers/designers.

- High dependency on Modelling skills.

- Inapplicable to cheaper projects as cost of Modelling and automated code generation is very high.

- Management complexity is higher.

- Suitable only for systems that are component based and scalable.

- Requires user involvement throughout the life cycle.

- Suitable for projects requiring shorter development times.

Key takeaway

- The Rapid Application Development paradigm is focused on prototyping and an iterative process with little planning ahead of time.

- RAD places a strong emphasis on prototyping as an alternative to design requirements.

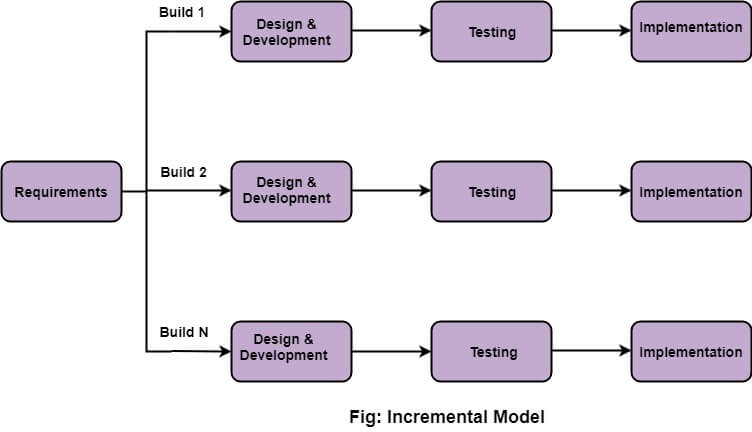

Incremental Process Models

The incremental model is a software development process in which the requirements are divided into multiple standalone modules of the software development cycle. In this model, each module passes through the requirements, design, implementation, and testing phases.

Each subsequent release of the system adds functionality to the previous release. The process continues until the complete system is achieved.

The various phases of the incremental model are as follows:

1. Requirement analysis: In the first phase of the incremental model, product analysis experts identify the requirements, and the requirements analysis team works to understand the system's functional requirements. This phase plays a crucial role in developing software under the incremental model.

2. Design & Development: In this phase of the incremental model, the design of the system functionality and the development method are completed. Whenever new functionality is developed, the incremental model goes through the design and development phase again.

3. Testing: In the incremental model, the testing phase checks the performance of each existing function as well as additional functionality. In the testing phase, various methods are used to test the behaviour of each task.

4. Implementation: The implementation phase covers the coding of the system. It involves the final coding of the design produced in the design and development phase, with the functionality verified in the testing phase. After each completion of this phase, the working product is enhanced and upgraded until it becomes the final system.

Fig 4: Incremental model
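How each release builds on the previous one can be sketched as follows. The function `plan_releases` and the example feature names are hypothetical; the sketch abstracts away the requirements, design, implementation, and testing work of each increment and only shows that every release contains all earlier functionality plus the next increment, until the complete system is delivered.

```python
def plan_releases(increments: list[list[str]]) -> list[list[str]]:
    """Return the cumulative feature list of each release (hypothetical sketch)."""
    releases: list[list[str]] = []
    delivered: list[str] = []
    for increment in increments:
        # New release = previous release + this increment. A new list is
        # created each time, so earlier releases are left unchanged.
        delivered = delivered + increment
        releases.append(delivered)
    return releases


releases = plan_releases([
    ["create document", "save document"],  # increment 1: core functions
    ["spell check"],                        # increment 2
    ["export to PDF"],                      # increment 3: completes the system
])
for number, features in enumerate(releases, start=1):
    print(number, features)
```

The first release is already usable, and the last release is the complete system.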

Key takeaway:

Each module goes through the requirements, design, implementation, and testing phases.

To develop the software under the incremental model, the requirements phase plays a crucial role.

Whenever new functionality is developed, the incremental model goes through the design and development phase.

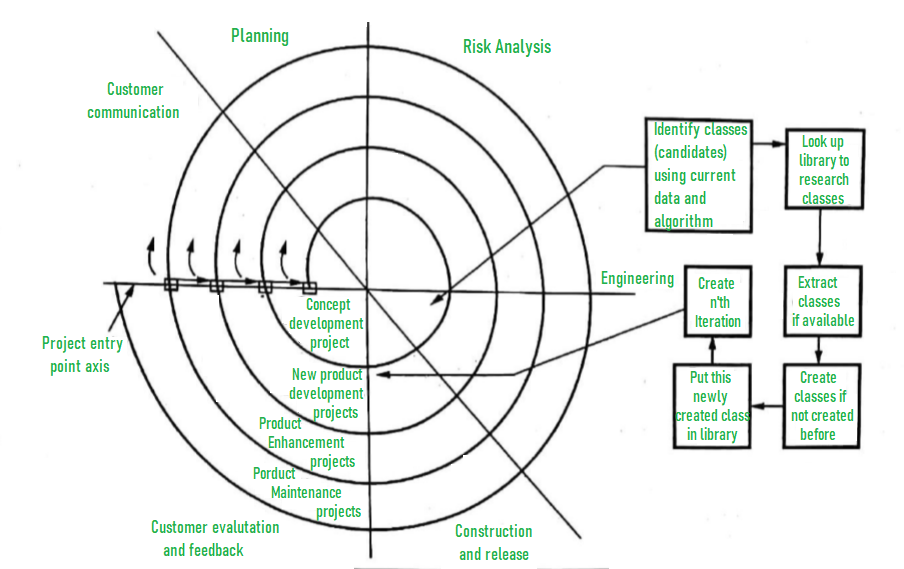

Spiral model

- An evolutionary software process model that couples the iterative nature of prototyping with the controlled and systematic aspects of the linear sequential model.

- It provides the potential for rapid development of incremental versions of the software.

- Using the spiral model, software is developed in a series of incremental releases. During early iterations, the incremental release might be a paper model or prototype. During later iterations, increasingly more complete versions of the engineered system are produced.

- A spiral model is divided into a number of framework activities, also called task regions.

- There are six task regions.

- Customer communication—tasks required to establish effective communication between developer and customer.

- Planning—tasks required to define resources, timelines, and other project related information.

- Risk analysis—tasks required to assess both technical and management risks.

- Engineering—tasks required to build one or more representations of the application.

- Construction and release—tasks required to construct, test, install, and provide user support (e.g., documentation and training).

- Customer evaluation—tasks required to obtain customer feedback based on evaluation of the software representations created during the engineering stage and implemented during the installation stage.

Fig 5: Spiral model of software development
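The repeated passes through the six task regions can be sketched as a loop in which each circuit around the spiral yields a more complete release. Only the six region names come from the text above; the `assess_risk` callback, the `risk_limit` threshold, and the rule that the project stops when risk analysis rates the risk too high are hypothetical simplifications for illustration.

```python
from typing import Callable

TASK_REGIONS = [
    "Customer communication",
    "Planning",
    "Risk analysis",
    "Engineering",
    "Construction and release",
    "Customer evaluation",
]


def run_spiral(
    circuits: int,
    assess_risk: Callable[[int], float],
    risk_limit: float = 0.8,
) -> list[str]:
    """Make up to `circuits` passes around the spiral (hypothetical sketch).

    Each pass visits every task region in order. If risk analysis rates the
    risk of continuing above `risk_limit`, the project stops at that point.
    """
    log: list[str] = []
    for circuit in range(1, circuits + 1):
        for region in TASK_REGIONS:
            log.append(f"circuit {circuit}: {region}")
            if region == "Risk analysis" and assess_risk(circuit) > risk_limit:
                log.append(f"circuit {circuit}: project stopped (risk too high)")
                return log
        # Early circuits may yield a paper model or prototype; later
        # circuits yield increasingly complete versions of the system.
        log.append(f"circuit {circuit}: release {circuit} produced")
    return log


for entry in run_spiral(2, lambda circuit: 0.1):
    print(entry)
```

Placing the risk check inside every circuit reflects the model's dependence on risk analysis: a major risk that is never assessed can derail the project.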

Advantages:

- It is a realistic approach for development of large-scale systems.

- High amount of risk analysis.

- Good for large and mission-critical projects.

- Software is produced early in the software life cycle.

Disadvantages:

- It is not widely used.

- It may be difficult to convince customers (particularly in contract situations) that the evolutionary approach is controllable.

- It demands considerable risk assessment expertise and relies on this expertise for success. If a major risk is not uncovered and managed, problems will undoubtedly occur.

- Can be a costly model to use.

- Risk analysis requires highly specific expertise.

- Project’s success is highly dependent on the risk analysis phase.

- Doesn’t work well for smaller projects.

Key takeaway

- It provides the potential for rapid development of incremental versions of the software.

- Using the spiral model, software is developed in a series of incremental releases.

Component Assembly Model

The Component Assembly Model is similar to the Prototype Model, in which a prototype is created based on the customer's requirements and then sent to the user for evaluation to obtain feedback on the changes that should be made, and the process is repeated until the software meets the needs of businesses and consumers. As a result, it's an iterative development model.

The Component Assembly model was created to address issues that arise during the Software Development Life Cycle (SDLC). Instead of writing new code from scratch, developers who adopt this model choose components that are already available and use them to create useful software. Like the Prototype model, it is an iterative development model that keeps refining a prototype based on user feedback until the prototype meets the customer's and company's specifications.

The Component Assembly methodology is also similar to the Rapid Application Development (RAD) model in that it builds a software program using readily available resources and GUIs. A variety of SDKs are now available, making it easier for developers to build software with fewer lines of code. This strategy leaves plenty of time to focus on aspects of the program other than the coding language, user input, and the graphical interaction between the user and the software.

In addition, a Component Assembly Model makes use of a number of previously created components and does not require an SDK to create a program; instead, it combines powerful existing components into effective and efficient software. As a result, one of the most advantageous aspects of the component assembly model is that it saves a significant amount of time throughout the software development process.

The developers merely need to understand the customer's needs, hunt for valuable components that will help them meet those needs, and then put them together to create a programme.

The following is how the model works:

Step 1: Using application data and algorithms, identify all of the required candidate components, i.e. classes.

Step 2: If these candidate components have been used in past software projects, they should already be stored in the library.

Step 3: Pre-existing components can be retrieved from the library and used in future development.

Step 4: If the requested component is not in the library, construct or create it according to the requirements.

Step 5: Save your new component in the library. This completes one system iteration.

Step 6: Repeat steps 1–5 until you have completed n iterations, where n is the number of iterations required to complete the application.

Fig 6: Component assembly model
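Steps 1 to 5 above amount to a lookup-or-build loop over a component library. The sketch below models the library as a dictionary; the names `ComponentLibrary`, `assemble`, and `build_component`, and the component names in the example, are hypothetical and used only to illustrate the reuse logic.

```python
from typing import Callable


class ComponentLibrary:
    """Repository of reusable components, keyed by name (hypothetical)."""

    def __init__(self) -> None:
        self._components: dict[str, object] = {}

    def has(self, name: str) -> bool:
        return name in self._components

    def get(self, name: str) -> object:
        return self._components[name]

    def store(self, name: str, component: object) -> None:
        self._components[name] = component


def assemble(
    required: list[str],
    library: ComponentLibrary,
    build_component: Callable[[str], object],
) -> tuple[dict[str, object], list[str]]:
    """One iteration: reuse library components, build and store missing ones.

    Returns the assembled components and the names that had to be built.
    """
    assembled: dict[str, object] = {}
    newly_built: list[str] = []
    for name in required:                      # Step 1: candidate components
        if library.has(name):                  # Steps 2-3: retrieve and reuse
            component = library.get(name)
        else:
            component = build_component(name)  # Step 4: build if missing
            library.store(name, component)     # Step 5: save for future reuse
            newly_built.append(name)
        assembled[name] = component
    return assembled, newly_built


library = ComponentLibrary()
library.store("Login", "login-component")  # built in a past project
parts, built = assemble(["Login", "Payment"], library, lambda n: f"{n.lower()}-component")
print(built)  # ['Payment']
```

Step 6 corresponds to calling `assemble` repeatedly: a component built in one iteration is stored in the library and simply reused in the next.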

Requirements Analysis

Requirements analysis is a well-defined stage in the Software Development Life Cycle model for determining client needs and expectations for a proposed system or application. Requirements describe how a system should behave or describe system properties or qualities; they state 'what' an application is supposed to do, not 'how' it does it. Requirements analysis is the software engineering task that bridges system-level requirements engineering and software design.

Software requirements analysis can be divided into five areas of effort:

● Problem recognition

● Evaluation and synthesis

● Modeling

● Specification

● Review

The tasks that go into identifying the needs of a new or revised system, taking into account the possibly conflicting requirements of multiple stakeholders, such as users, are referred to as requirements analysis. The success of a project hinges on the study of requirements. It's also known by other terms like requirements collection, requirements capture, and requirements specification.

The term "requirements analysis" can also be used to refer to the actual analysis.

Requirements must be measurable, testable, and related to identified business needs or opportunities, and they must be defined to a level of detail sufficient for system design.

Five common problems in requirements analysis:

● Customers are unsure of what they want.

● Requirements change during the course of the project.

● Customers set unrealistic deadlines.

● There are communication gaps between customers, engineers, and project managers.

● The development team is unfamiliar with the politics of the customer's organization.

Requirements Elicitation for Software

Requirements must be gathered through an elicitation process before they can be analysed, modelled, or specified.

● Initiating the Process

The first meeting between a software engineer and a customer can be as awkward as a first date between two adolescents. The best way to start the conversation is to ask context-free questions: a set of questions that lead to a basic understanding of the problem, the people who want a solution, the nature of the desired solution, and the effectiveness of the first meeting itself. For example:

● Who is the person who has requested this work?

● Who is going to use the solution?

● What are the financial advantages of a successful solution?

● Is there another source for the solution that you need?

The following collection of questions allows the analyst to better grasp the problem and the customer to express his or her thoughts on a solution:

● How would you describe the "good" outcome that a successful solution would produce?

● What problem(s) will be solved by this solution?

● Could you show me the environment where the solution will be implemented?

● Will the approach to the solution be influenced by special performance difficulties or constraints?

● Facilitated Application Specification Techniques

Customers and software engineers often have an unspoken "us versus them" mentality. To address this, several independent investigators have developed a team-oriented approach to requirements gathering that is applied during the early stages of analysis and specification. The approach is called facilitated application specification techniques (FAST). Its basic guidelines are:

● A meeting is held at a neutral location with both software engineers and customers in attendance.

● Rules for preparation and participation are established.

● An agenda is suggested that is formal enough to cover all important points but informal enough to encourage the free flow of ideas.

● A "facilitator" controls the meeting.

● A "definition mechanism" (such as work sheets, flip charts, or wall stickers) is used.

● The purpose is to define the problem, offer aspects of a solution, negotiate competing approaches, and specify a preliminary set of solution requirements in an environment that promotes goal achievement.

At the first meeting between the developer and the customer, basic questions and answers are used to establish the scope of the problem and the overall perception of a solution. The product request is distributed to all attendees before the FAST meeting. Marketing, software and hardware engineering, and manufacturing are all represented on the FAST team. The first topic of discussion at the FAST meeting is the need for, and justification of, the new product; everyone should agree that the product is justified. Once agreement is reached, each participant presents his or her own lists for discussion.

After the individual lists for a topic area have been presented, the group creates a combined list. The combined list eliminates duplicate entries and adds any new ideas that arise during the discussion, but nothing is deleted. In the discussion that follows, the combined list is shortened, lengthened, or reworded so that it correctly reflects the product or system to be built. The goal is a consensus list in each topic area. The team is then divided into subteams, each of which develops mini-specifications (mini-specs) for one or more entries on the lists. Each subteam presents its mini-specs to all FAST attendees for discussion. Once the mini-specs are complete, each FAST participant draws up a list of validation criteria for the product or system and presents it to the team.
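The rule for building a combined list (remove duplicate entries, add new ideas, delete nothing else) can be sketched in Python as an order-preserving merge. The function name `combine_lists` and the sample entries are hypothetical, and treating entries that differ only in letter case or surrounding spaces as duplicates is an assumption made for this sketch; in a real FAST meeting, deciding what counts as a duplicate is a judgement made by the group.

```python
from itertools import chain
from typing import Iterable, List


def combine_lists(individual_lists: Iterable[Iterable[str]],
                  new_ideas: Iterable[str] = ()) -> List[str]:
    """Merge participants' lists and new ideas, dropping only duplicates."""
    combined: List[str] = []
    seen = set()
    for item in chain(chain.from_iterable(individual_lists), new_ideas):
        entry = item.strip()
        key = entry.lower()  # assumption: case-only differences are duplicates
        if key not in seen:  # redundant entries are eliminated...
            seen.add(key)
            combined.append(entry)  # ...everything else is kept, in first-seen order
    return combined


# Hypothetical lists from two participants plus an idea raised in discussion.
lists = [["control panel", "smoke detectors"], ["Smoke detectors ", "window sensors"]]
combined = combine_lists(lists, new_ideas=["motion detectors"])
print(combined)
# ['control panel', 'smoke detectors', 'window sensors', 'motion detectors']
```

Shortening, lengthening, and rewording the list happens afterwards through discussion, so it is deliberately left out of the merge step.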

● Quality Function Deployment

Quality function deployment (QFD) is a quality management technique for translating customer needs into technical software requirements. Three categories of needs are identified by QFD:

● Normal requirements - The objectives and goals stated for a product or system during meetings with the customer. If these requirements are met, the customer is satisfied.

● Expected requirements - These specifications are built into the product or system and may be so basic that the customer does not express them explicitly. Their absence will be a major source of disappointment.

● Exciting requirements - When present, these elements go above and beyond the customer's expectations and prove to be extremely fulfilling.

Function deployment is used to determine the value of each function required of the system. Information deployment identifies the data objects and events that the system must consume and produce; these are tied to the functions. Finally, task deployment examines how the system or product behaves within its environment. A value analysis is then conducted to determine the relative priority of the requirements identified in each of the three deployments.
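QFD does not prescribe a single formula for value analysis, so the Python sketch below is only one hypothetical way to rank requirements gathered across the three deployments. The class names, the 1–5 customer rating, and the weights (expected requirements weighted highest because their absence causes the most disappointment) are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Kind(Enum):
    NORMAL = "normal"
    EXPECTED = "expected"
    EXCITING = "exciting"


class Deployment(Enum):
    FUNCTION = "function"        # value of each required function
    INFORMATION = "information"  # data objects and events consumed/produced
    TASK = "task"                # behaviour within the system's environment


@dataclass(frozen=True)
class QfdRequirement:
    name: str
    kind: Kind
    deployment: Deployment
    customer_value: int  # hypothetical 1-5 importance rating from the customer


# Assumed weights: a missing expected requirement disappoints most,
# so it is weighted highest; exciting requirements are treated as a bonus.
KIND_WEIGHT: Dict[Kind, int] = {Kind.EXPECTED: 3, Kind.NORMAL: 2, Kind.EXCITING: 1}


def score(req: QfdRequirement) -> int:
    return KIND_WEIGHT[req.kind] * req.customer_value


def value_analysis(reqs: List[QfdRequirement]) -> List[QfdRequirement]:
    """Rank requirements from all three deployments by descending score (ties by name)."""
    return sorted(reqs, key=lambda r: (-score(r), r.name))


reqs = [
    QfdRequirement("Arm and disarm the system", Kind.NORMAL, Deployment.FUNCTION, 5),
    QfdRequirement("Log every sensor event", Kind.EXPECTED, Deployment.INFORMATION, 4),
    QfdRequirement("Remote control from a phone", Kind.EXCITING, Deployment.TASK, 5),
]
print([r.name for r in value_analysis(reqs)])
# ['Log every sensor event', 'Arm and disarm the system', 'Remote control from a phone']
```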

● Use-Cases

As requirements are gathered in informal meetings, a software engineer can create a set of scenarios, each defining a thread of usage for the system to be built. To build a use-case, the analyst must first identify the different roles that different categories of people play while the system is in operation. More formally, an actor is anything that communicates with the system or product and is external to it.

It is important to understand that an actor and a user are not the same thing. A user may play several different roles when using a system, whereas an actor represents a class of external entities that plays just one role. Once the actors have been identified, use-cases can be developed. A use-case describes how an actor interacts with the system, and it should answer the following questions:

● What are the key tasks or functions of an actor?

● What system data will the actor obtain, produce, or modify?

● Will the actor be required to notify the system when the external environment changes?

● What information does the actor want the system to provide?

● Does the actor want to be informed about unexpected changes?

In general, a use-case is a written story that outlines an actor's function as they interact with the system.
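The use-case questions above map naturally onto a small data structure. The Python sketch below is a hypothetical illustration: the `Actor` and `UseCase` classes, their field names, and the home security example are invented for this sketch. It also shows the actor/user distinction, since one user ("Pat") maps to two actors, each playing a single role.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Actor:
    """A class of external entities that plays exactly one role."""
    role: str


@dataclass
class UseCase:
    """Answers to the use-case questions for one actor (field names are illustrative)."""
    name: str
    actor: Actor
    tasks: List[str]  # key tasks or functions of the actor
    data_touched: List[str] = field(default_factory=list)  # data obtained, produced, or modified
    info_wanted: List[str] = field(default_factory=list)   # information the actor wants
    reports_env_changes: bool = False  # must the actor tell the system about external changes?
    wants_change_alerts: bool = False  # does the actor want to hear about unexpected changes?

    def narrative(self) -> str:
        """Render the use-case as a short written story."""
        steps = " Then the ".join(f"{self.actor.role} {task}." for task in self.tasks)
        return f"{self.name}: The {steps}"


# One user may play several roles, and so map to several actors.
homeowner = Actor("homeowner")
installer = Actor("installer")
user_roles: Dict[str, List[Actor]] = {"Pat": [homeowner, installer]}

arm = UseCase(
    name="Arm the system",
    actor=homeowner,
    tasks=["enters a password", "selects 'arm'"],
    data_touched=["system status"],
    info_wanted=["confirmation that the system is armed"],
    wants_change_alerts=True,
)
print(arm.narrative())
# Arm the system: The homeowner enters a password. Then the homeowner selects 'arm'.
```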

Analysis Principles

All analysis methods are related by a set of operational principles:

● A problem's information domain must be represented and understood.

● It is necessary to define the functions that the software will execute.

● The software's behaviour must be depicted.

● Models of information, function, and behaviour must be partitioned in such a way that they reveal detail in layers.

● The analysis process should move from essential information toward implementation detail.

In addition to these operational principles, the following guiding principles for requirements engineering have been proposed:

● Before you start creating the analysis model, you should first understand the problem.

● Create prototypes that allow users to see how human-machine interaction will take place.

● Record the origin of each requirement and the reason for it.

● Use multiple requirements views.

● Rank (prioritise) the requirements.

● Work to eliminate ambiguity.
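Two of these principles, recording where each requirement came from and why, and ranking the requirements, can be supported with very simple tooling. The Python sketch below is a hypothetical illustration: the `TracedRequirement` class, its 1 = most important ranking scale, and the sample requirements are assumptions, not part of any particular method.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TracedRequirement:
    req_id: str
    text: str
    source: str     # where the requirement came from (person, meeting, document)
    rationale: str  # why the requirement exists
    rank: int       # 1 = most important (hypothetical ranking scale)


def ranked(reqs: List[TracedRequirement]) -> List[TracedRequirement]:
    """Rank requirements: most important first, ties broken by ID."""
    return sorted(reqs, key=lambda r: (r.rank, r.req_id))


def missing_trace(reqs: List[TracedRequirement]) -> List[str]:
    """IDs of requirements whose origin or reason has not been recorded."""
    return [r.req_id for r in reqs if not r.source.strip() or not r.rationale.strip()]


reqs = [
    TracedRequirement("R2", "Export monthly reports as PDF",
                      "Finance manager, FAST meeting 1", "Auditors need fixed-layout reports", 2),
    TracedRequirement("R1", "Users log in with a password",
                      "Security policy document", "Protect customer data", 1),
    TracedRequirement("R3", "Dark colour theme", "", "", 3),
]
print([r.req_id for r in ranked(reqs)])  # ['R1', 'R2', 'R3']
print(missing_trace(reqs))               # ['R3']
```

Flagging R3 prompts the analyst to find out who asked for it and why before it is accepted.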
