Unit - 3

Software Testing

Testing is the method of running a programme with the intention of discovering mistakes. It needs to be error-free to make our applications work well. It will delete all the errors from the programme if testing is performed successfully.

The method of identifying the accuracy and consistency of the software product and service under test is software testing. It was obviously born to verify whether the commodity meets the customer's specific prerequisites, needs, and expectations. At the end of the day, testing executes a system or programme to point out bugs, errors or faults for a particular end goal.

Software testing is a way of verifying whether the actual software product meets the expected specifications and ensuring that the software product is free of defects. It requires the execution of software/system components to test one or more properties of interest using manual or automated methods. In comparison to actual specifications, the aim of software testing is to find mistakes, gaps or missing requirements.

Some like to claim software testing as testing with a White Box and a Black Box. Software Testing, in simple words, means Program Verification Under Evaluation (AUT).

Benefits of software testing

There are some pros of using software testing:

- Cost - effective: It is one of the main benefits of software checking. Testing every IT project on time allows you to save the money for the long term. It costs less to correct if the bugs were caught in the earlier stage of software testing.

- Security: The most vulnerable and responsive advantage of software testing is that people search for trustworthy products. It helps to eliminate threats and concerns sooner.

- Product - quality: It is a necessary prerequisite for any software product. Testing ensures that consumers receive a reliable product.

- Customer - satisfaction: The main goal of every product is to provide its consumers with satisfaction. The best user experience is assured by UI/UX Checking.

Key takeaway:

- The method of identifying the accuracy and consistency of the software product and service under test is software testing.

- Some like to claim software testing as testing with a White Box and a Black Box.

- Software Testing, in simple words, means Program Verification Under Evaluation.

● In this testing technique the internal logic of software components is tested.

● It is a test case design method that uses the control structure of the procedural design test cases.

● It is done in the early stages of software development.

● Using this testing technique software engineer can derive test cases that:

● All independent paths within a module have been exercised at least once.

● Exercise true and false both the paths of logical checking.

● Execute all the loops within their boundaries.

● Exercise internal data structures to ensure their validity.

Advantages:

● As the knowledge of internal coding structure is prerequisite, it becomes very easy to find out which type of input/data can help in testing the application effectively.

● The other advantage of white box testing is that it helps in optimizing the code.

● It helps in removing the extra lines of code, which can bring in hidden defects.

● We can test the structural logic of the software.

● Every statement is tested thoroughly.

● Forces test developers to reason carefully about implementation.

● Approximate the partitioning done by execution equivalence.

● Reveals errors in "hidden" code.

Disadvantages:

● It does not ensure that the user requirements are fulfilled.

● As knowledge of code and internal structure is a prerequisite, a skilled tester is needed to carry out this type of testing, which increases the cost.

● It is nearly impossible to look into every bit of code to find out hidden errors, which may create problems, resulting in failure of the application.

● The tests may not be applicable in a real world situation.

● Cases omitted in the code could be missed out.

Key takeaway:

- It is a test case design method that uses the control structure of the procedural design test cases.

- It does not ensure that the user requirements are fulfilled.

It is also known as “behavioral testing” which focuses on the functional requirements of the software, and is performed at later stages of the testing process unlike white box which takes place at an early stage. Black-box testing aims at functional requirements for a program to derive sets of input conditions which should be tested. Black box is not an alternative to white-box, rather, it is a complementary approach to find out a different class of errors other than white-box testing.

Black-box testing is emphasizing on different set of errors which falls under following categories:

- Incorrect or missing functions

- Interface errors

- Errors in data structures or external database access

- Behavior or performance errors

- Initialization and termination errors.

- Boundary value analysis: The input is divided into higher and lower end values. If these values pass the test, it is assumed that all values in between may pass too.

- Equivalence class testing: The input is divided into similar classes. If one element of a class passes the test, it is assumed that all the class is passed.

- Decision table testing: Decision table technique is one of the widely used case design techniques for black box testing. This is a systematic approach where various input combinations and their respective system behaviour are captured in a tabular form. That’s why it is also known as a cause-effect table. This technique is used to pick the test cases in a systematic manner; it saves the testing time and gives good coverage to the testing area of the software application. Decision table technique is appropriate for the functions that have a logical relationship between two and more than two inputs.

Advantages:

● More effective on larger units of code than glass box testing.

● Testers need no knowledge of implementation, including specific programming languages.

● Testers and programmers are independent of each other.

● Tests are done from a user's point of view.

● Will help to expose any ambiguities or inconsistencies in the specifications.

● Test cases can be designed as soon as the specifications are complete.

Disadvantages:

● Only a small number of possible inputs can actually be tested, to test every possible input stream would take nearly forever.

● Without clear and concise specifications, test cases are hard to design.

● There may be unnecessary repetition of test inputs if the tester is not informed of test cases the programmer has already tried.

● May leave many program paths untested.

● Cannot be directed toward specific segments of code which may be very complex (and therefore more error prone).

● Most testing related research has been directed toward glass box testing.

Key takeaway:

- In Black box testing the main focus is on the information domain.

- This technique exercises the input and output domain of the program to uncover errors in program, function, behavior and performance.

Software Testing is a method of assessing a software application's performance to detect any software bugs. It checks whether the developed software meets the stated specifications and, in order to produce a better product, identifies any defects in the software.

In order to find any holes, errors or incomplete specifications contrary to the real requirements, this is effectively executing a method. It is also defined as a software product verification and validation process.

To ensure that every component, as well as the entire system, works without breaking down, an effective software testing or QA strategy involves testing at all technology stack levels. Some of the Techniques for Software Testing include:

Leave time for fixing: When problems are identified, it is necessary to fix the time for the developers to solve the problems. Often, the business also needs time to retest the fixes.

Discourage passing the buck: You need to build a community that will allow them to jump on the phone or have desk-side talk to get to the bottom of stuff if you want to eliminate back and forth interactions between developers and testers. Everything about teamwork is checking and repairing.

Manual testing has to be exploratory: If any problem can be written down or scripted in specific terms, it could be automated and it belongs to the automated test suite. The software's real-world use will not be programmed, and without a guide, the testers need to break stuff.

Encourage clarity: You need to create a bug report that does not create any uncertainty but offers clarification. It is also necessary for a developer, however, to step out of the way to interact effectively as well.

Test often: This helps to avoid the build-up and crushing of morale from massive backlogs of issues. The safest method is known to be frequent checking.

Key takeaway:

- Software Testing is a method of assessing a software application's performance to detect any software bugs.

- The software's real-world use will not be programmed, and without a guide, the testers need to break stuff.

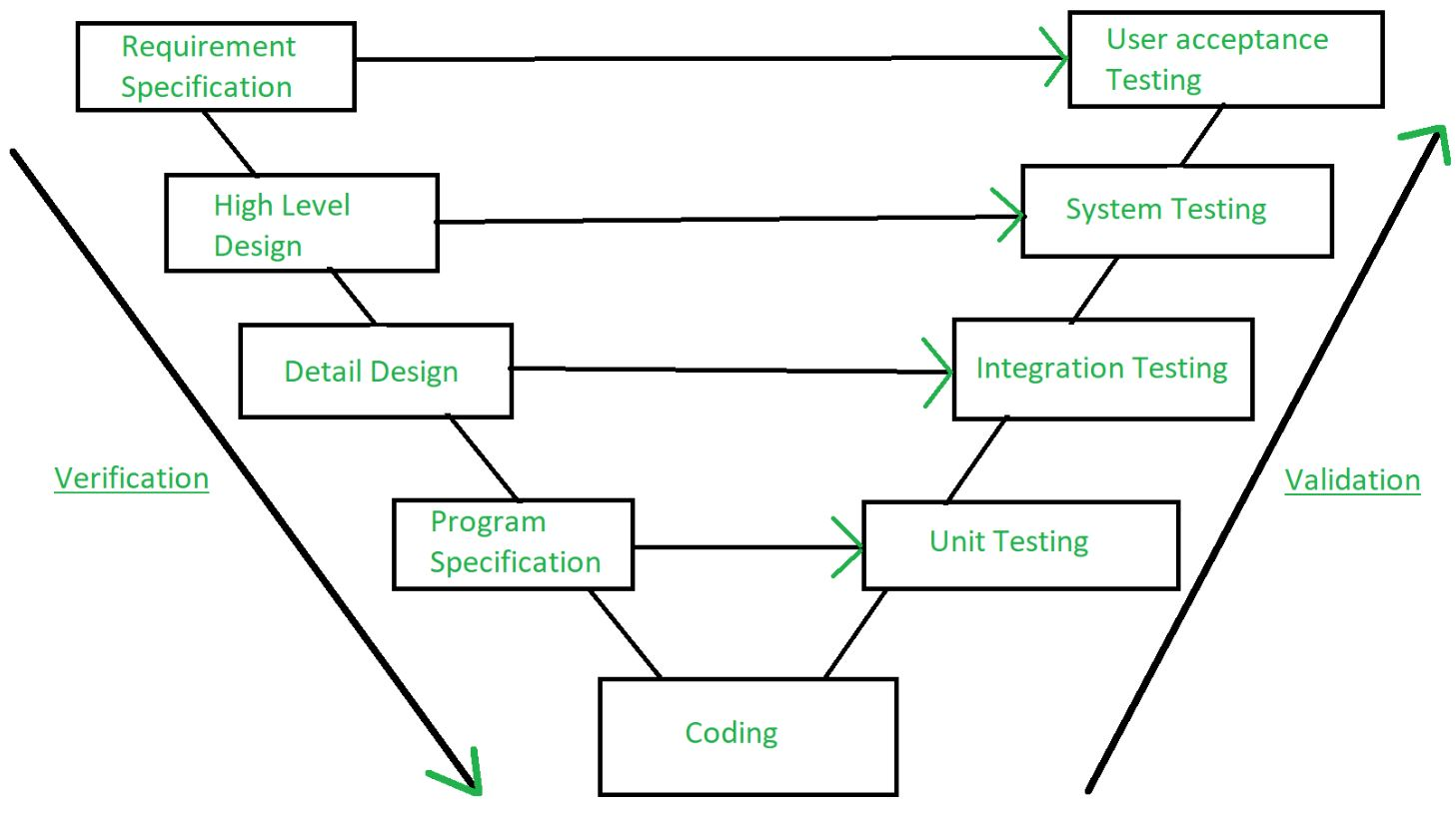

Verification and validation are the processes of investigating whether a software system meets requirements and specifications and fulfills the function necessary.

Barry Boehm defines verification and validation -

Verification: Are we building the product right?

Validation: Are we building the right product?

Fig 1: Verification and validation

Verification:

Verification is the method of testing without any bugs that a program achieves its target. It is the method of ensuring whether or not the product that is produced is right. This tests whether the established product meets the specifications we have. Verification is Static Testing.

Verification tasks involved:

- Inspections

- Reviews

- Walkthroughs

- Desk-checking

Validation: Validation is the process of testing whether the software product is up to the mark or, in other words, the specifications of the product are high. It is the method of testing product validation, i.e. it checks that the correct product is what we are producing. Validation of the real and expected product is necessary.

Validation is the Dynamic Testing

Validation tasks involved:

- Black box testing

- White-box testing

- Unit testing

- Integration testing

Key takeaway:

- Validation is preceded by verification.

- Verification is the method of testing without any bugs that a program achieves its target

- Validation is the process of testing whether the software product is up to the mark.

Software is the only one element of a larger computer-based system. Ultimately, software is incorporated with other system elements (e.g., hardware, people, information), and a series of system integration and validation tests are conducted. These tests fall outside the scope of the software process and are not conducted solely by software engineers. However, steps taken during software design and testing can greatly improve the probability of successful software integration in the larger system.

A classic system testing problem is "finger-pointing." This occurs when an error is uncovered, and each system element developer blames the other for the problem. Rather than indulging in such nonsense, the software engineer should anticipate potential interfacing problems and

● Design error-handling paths that test all information coming from other elements of the system,

● Conduct a series of tests that simulate bad data or other potential errors at the software interface,

● Record the results of tests to use as "evidence" if finger-pointing does occur, and

● Participate in planning and design of system tests to ensure that software is adequately tested.

System testing is actually a series of different tests whose primary purpose is to fully exercise the computer-based system. Although each test has a different purpose, all work to verify that system elements have been properly integrated and perform allocated functions.

Key takeaway:

- Software is the only one element of a larger computer-based system.

- Software is incorporated with other system elements (e.g., hardware, people, information), and a series of system integration and validation tests are conducted.

- System testing is actually a series of different tests whose primary purpose is to fully exercise the computer-based system.

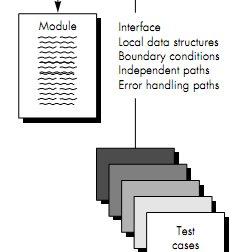

Unit testing focuses verification effort on the smallest unit of software design—the software component or module. Using the component- level design description as a guide, important control paths are tested to uncover errors within the boundary of the module. The relative complexity of tests and uncovered errors is limited by the constrained scope established for unit testing. The unit test is white-box oriented, and the step can be conducted in parallel for multiple components.

Unit Test Considerations

The tests that occur as part of unit tests are illustrated schematically in Figure below. The module interface is tested to ensure that information properly flows into and out of the program unit under test. The local data structure is examined to ensure that data stored temporarily maintains its integrity during all steps in an algorithm's execution. Boundary conditions are tested to ensure that the module operates properly at boundaries established to limit or restrict processing. All independent paths (basis paths) through the control structure are exercised to ensure that all statements in a module have been executed at least once. And finally, all error handling paths are tested.

Fig 2: Unit Test

Tests of data flow across a module interface are required before any other test is initiated. If data does not enter and exit properly, all other tests are moot. In addition, local data structures should be exercised and the local impact on global data should be ascertained (if possible) during unit testing.

Selective testing of execution paths is an essential task during the unit test. Test cases should be designed to uncover errors due to erroneous computations, incorrect comparisons, or improper control flow. Basis path and loop testing are effective techniques for uncovering a broad array of path errors.

Among the more common errors in computation are

● Misunderstood or incorrect arithmetic precedence,

● Mixed mode operations,

● Incorrect initialization,

● Precision inaccuracy,

● Incorrect symbolic representation of an expression.

Comparison and control flow are closely coupled to one another (i.e., change of flow frequently occurs after a comparison). Test cases should uncover errors such as

- Comparison of different data types,

- Incorrect logical operators or precedence,

- Expectation of equality when precision error makes equality unlikely,

- Incorrect comparison of variables,

- Improper or nonexistent loop termination,

- Failure to exit when divergent iteration is encountered, and

- Improperly modified loop variables.

Among the potential errors that should be tested when error handling is evaluated are

● Error description is unintelligible.

● Error noted does not correspond to error encountered.

● Error condition causes system intervention prior to error handling.

● Exception-condition processing is incorrect.

● Error description does not provide enough information to assist in the location of the cause of the error.

Boundary testing is the last (and probably most important) task of the unit test step. Software often fails at its boundaries. That is, errors often occur when the nth element of an n-dimensional array is processed, when the ith repetition of a loop with i passes is invoked, when the maximum or minimum allowable value is encountered. Test cases that exercise data structure, control flow, and data values just below, at, and just above maxima and minima are very likely to uncover errors.

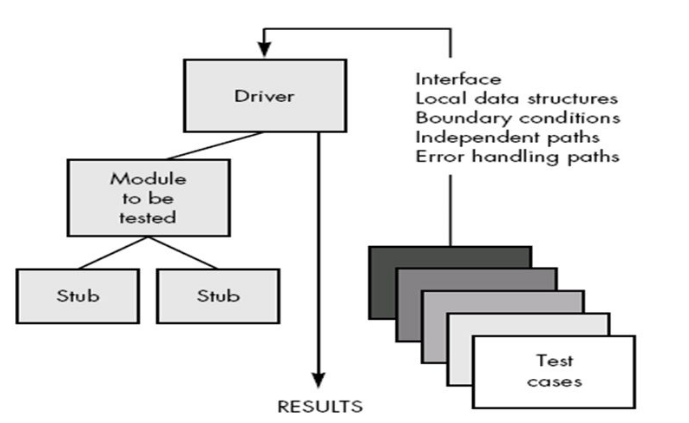

Unit Test Procedures

Unit testing is normally considered as an adjunct to the coding step. After source level code has been developed, reviewed, and verified for correspondence to component level design, unit test case design begins. A review of design information provides guidance for establishing test cases that are likely to uncover errors in each of the categories discussed earlier. Each test case should be coupled with a set of expected results.

Fig 3: Unit Test Environment

Because a component is not a stand-alone program, driver and/or stub software must be developed for each unit test. The unit test environment is illustrated in Figure above. In most applications a driver is nothing more than a "main program" that accepts test case data, passes such data to the component (to be tested), and prints relevant results. Stubs serve to replace modules that are subordinate (called by) the component to be tested.

A stub or "dummy subprogram" uses the subordinate module's interface, may do minimal data manipulation, prints verification of entry, and returns control to the module undergoing testing. Drivers and stubs represent overhead. That is, both are software that must be written (formal design is not commonly applied) but that is not delivered with the final software product. If drivers and stubs are kept simple, actual overhead is relatively low. Unfortunately, many components cannot be adequately unit tested with "simple" overhead software. In such cases, complete testing can be postponed until the integration test step (where drivers or stubs are also used).

Unit testing is simplified when a component with high cohesion is designed. When only one function is addressed by a component, the number of test cases is reduced and errors can be more easily predicted and uncovered.

Advantage of Unit Testing

- Can be applied directly to object code and does not require processing source code.

- Performance profilers commonly implement this measure.

Disadvantages of Unit Testing

- Insensitive to some control structures (number of iterations)

- Does not report whether loops reach their termination condition

- Statement coverage is completely insensitive to the logical operators (|| and &&).

Key takeaway:

- Unit testing focuses verification effort on the smallest unit of software design—the software component or module.

- The unit test is white-box oriented, and the step can be conducted in parallel for multiple components.

- Unit testing is normally considered as an adjunct to the coding step.

Integration testing is a systematic technique for constructing the program structure while at the same time conducting tests to uncover errors associated with interfacing. The objective is to take unit tested components and build a program structure that has been dictated by design.

There is often a tendency to attempt non incremental integration; that is, to construct the program using a "big bang" approach. All components are combined in advance. The entire program is tested as a whole. And chaos usually results! A set of errors is encountered.

Correction is difficult because isolation of causes is complicated by the vast expanse of the entire program. Once these errors are corrected, new ones appear and the process continues in a seemingly endless loop.

Incremental integration is the antithesis of the big bang approach. The program is constructed and tested in small increments, where errors are easier to isolate and correct; interfaces are more likely to be tested completely; and a systematic test approach may be applied.

Top-down Integration

Top-down integration testing is an incremental approach to construction of program structure. Modules are integrated by moving downward through the control hierarchy, beginning with the main control module (main program). Modules subordinate (and ultimately subordinate) to the main control module are incorporated into the structure in either a depth-first or breadth-first manner.

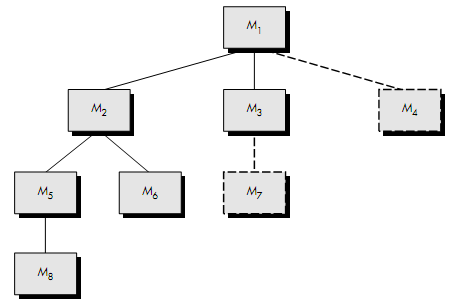

Fig 4: Top down integration

Referring to Figure above, depth-first integration would integrate all components on a major control path of the structure. Selection of a major path is somewhat arbitrary and depends on application-specific characteristics. For example, selecting the left-hand path, components M1, M2, M5 would be integrated first. Next, M8 or (if necessary for proper functioning of M2) M6 would be integrated.

Then, the central and right-hand control paths are built. Breadth-first integration incorporates all components directly subordinate at each level, moving across the structure horizontally. From the figure, components M2, M3, and M4 (a replacement for stub S4) would be integrated first. The next control level, M5, M6, and so on, follows.

The integration process is performed in a series of five steps:

- The main control module is used as a test driver and stubs are substituted for all components directly subordinate to the main control module.

- Depending on the integration approach selected (i.e., depth or breadth first), subordinate stubs are replaced one at a time with actual components.

- Tests are conducted as each component is integrated.

- On completion of each set of tests, another stub is replaced with the real component.

- Regression testing may be conducted to ensure that new errors have not been introduced. The process continues from step 2 until the entire program structure is built.

The top-down integration strategy verifies major control or decision points early in the test process. In a well-factored program structure, decision making occurs at upper levels in the hierarchy and is therefore encountered first. If major control problems do exist, early recognition is essential. If depth-first integration is selected, a complete function of the software may be implemented and demonstrated.

For example, consider a classic transaction structure in which a complex series of interactive inputs is requested, acquired, and validated via an incoming path. The incoming path may be integrated in a top-down manner. All input processing (for subsequent transaction dispatching) may be demonstrated before other elements of the structure have been integrated. Early demonstration of functional capability is a confidence builder for both the developer and the customer.

Top-down strategy sounds relatively uncomplicated, but in practice, logistical problems can arise. The most common of these problems occurs when processing at low levels in the hierarchy is required to adequately test upper levels. Stubs replace low-level modules at the beginning of top-down testing; therefore, no significant data can flow upward in the program structure. The tester is left with three choices:

- Delay many tests until stubs are replaced with actual modules,

- Develop stubs that perform limited functions that simulate the actual module, or

- Integrate the software from the bottom of the hierarchy upward.

The first approach (delay tests until stubs are replaced by actual modules) causes us to loose some control over correspondence between specific tests and incorporation of specific modules. This can lead to difficulty in determining the cause of errors and tends to violate the highly constrained nature of the top-down approach. The second approach is workable but can lead to significant overhead, as stubs become more and more complex.

Bottom-up Integration

Bottom-up integration testing, as its name implies, begins construction and testing with atomic modules (i.e., components at the lowest levels in the program structure). Because components are integrated from the bottom up, processing required for components subordinate to a given level is always available and the need for stubs is eliminated.

A bottom-up integration strategy may be implemented with the following steps:

- Low-level components are combined into clusters (sometimes called builds) that perform a specific software sub-function.

- A driver (a control program for testing) is written to coordinate test case input and output.

- The cluster is tested.

- Drivers are removed and clusters are combined moving upward in the program structure.

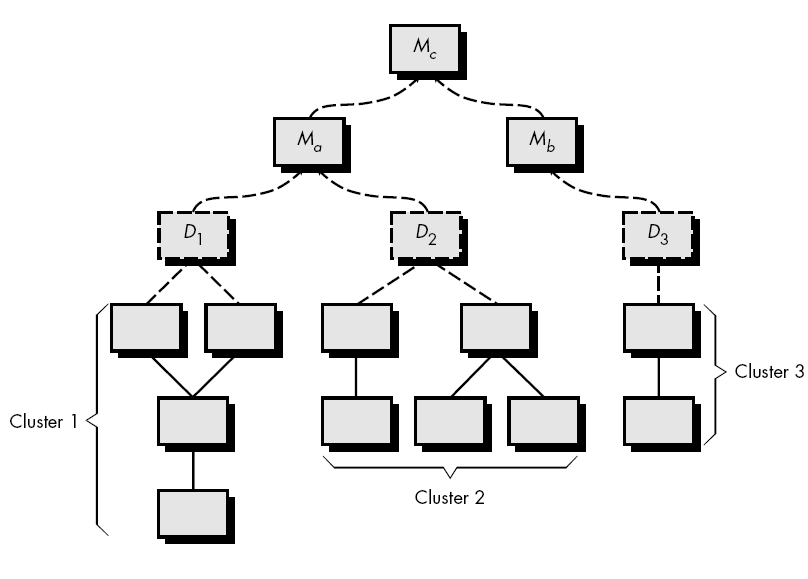

Fig 5: Bottom up integration

Integration follows the pattern illustrated in Figure above. Components are combined to form clusters 1, 2, and 3. Each of the clusters is tested using a driver (shown as a dashed block). Components in clusters 1 and 2 are subordinate to Ma. Drivers D1 and D2 are removed and the clusters are interfaced directly to Ma. Similarly, driver D3 for cluster 3 is removed prior to integration with module Mb. Both Ma and Mb will ultimately be integrated with component Mc, and so forth.

As integration moves upward, the need for separate test drivers lessens. In fact, if the top two levels of program structure are integrated top down, the number of drivers can be reduced substantially and integration of clusters is greatly simplified.

Key takeaway:

- Integration testing is a systematic technique for constructing the program structure while at the same time conducting tests to uncover errors associated with interfacing.

- Top-down integration testing is an incremental approach to construction of program structure.

- The top-down integration strategy verifies major control or decision points early in the test process.

- Bottom-up integration testing, as its name implies, begins construction and testing with atomic modules.

Software testing is a process that can be systematically planned and specified. Test case design can be conducted, a strategy can be defined, and results can be evaluated against prescribed expectations.

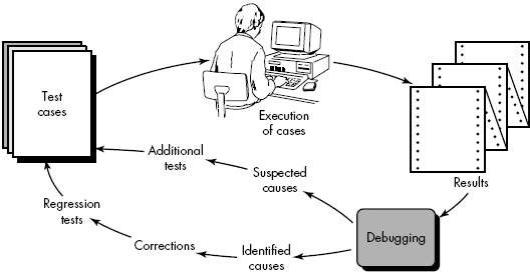

Debugging occurs as a consequence of successful testing. That is, when a test case uncovers an error, debugging is the process that results in the removal of the error. Although debugging can and should be an orderly process, it is still very much an art. A software engineer, evaluating the results of a test, is often confronted with a "symptomatic" indication of a software problem. That is, the external manifestation of the error and the internal cause of the error may have no obvious relationship to one another. The poorly understood mental process that connects a symptom to a cause is debugging.

The Debugging Process

Debugging is not testing but always occurs as a consequence of testing. Referring to Figure above, the debugging process begins with the execution of a test case. Results are assessed and a lack of correspondence between expected and actual performance is encountered. In many cases, the non corresponding data are a symptom of an underlying cause as yet hidden. The debugging process attempts to match symptom with cause, thereby leading to error correction.

Fig 6: Debugging Process

The debugging process will always have one of two outcomes:

● The cause will be found and corrected, or

● The cause will not be found.

In the latter case, the person performing debugging may suspect a cause, design a test case to help validate that suspicion, and work toward error correction in an iterative fashion.

During debugging, we encounter errors that range from mildly annoying (e.g., an incorrect output format) to catastrophic (e.g. The system fails, causing serious economic or physical damage). As the consequences of an error increase, the amount of pressure to find the cause also increases. Often, pressure sometimes forces a software developer to fix one error and at the same time introduce two more.

Debugging Approaches

In general, three categories for debugging approaches may be proposed:

● Brute force,

● Backtracking, and

● Cause elimination.

▪ The brute force category of debugging is probably the most common and least efficient method for isolating the cause of a software error. We apply brute force debugging methods when all else fails. Using a "let the computer find the error" philosophy, memory dumps are taken, run-time traces are invoked, and the program is loaded with WRITE statements. We hope that somewhere in the morass of information that is produced we will find a clue that can lead us to the cause of an error. Although the mass of information produced may ultimately lead to success, it more frequently leads to wasted effort and time. Thought must be expended first!

▪ Backtracking is a fairly common debugging approach that can be used successfully in small programs. Beginning at the site where a symptom has been uncovered, the source code is traced backward (manually) until the site of the cause is found. Unfortunately, as the number of source lines increases, the number of potential backward paths may become unmanageably large.

▪ The third approach to debugging – Cause elimination—is manifested by induction or deduction and introduces the concept of binary partitioning. Data related to the error occurrence are organized to isolate potential causes. A "cause hypothesis" is devised and the aforementioned data are used to prove or disprove the hypothesis. Alternatively, a list of all possible causes is developed and tests are conducted to eliminate each. If initial tests indicate that a particular cause hypothesis shows promise, data are refined in an attempt to isolate the bug.

The optimum approach for implementing a new system must be determined once it has been developed (or purchased). It can be difficult to persuade a group of individuals to learn and adopt a new system. The new software and the business processes that it generates can have far-reaching consequences within the company.

There are various approaches that a company can use to implement a new system. The following are four of the most popular.

Direct cutover: The organisation chooses a date when the old system will no longer be used in the direct-cutover deployment process. Users begin utilising the new system on that date, and the old system becomes unavailable. The benefits of employing this technology are that it is quick and inexpensive. This strategy, however, is also the riskiest. It could be detrimental for the organisation if the new system has an operating fault or is not properly equipped.

Pilot implementation: In this strategy, a small group of employees (called a pilot group) is the first to use the new system before the rest of the company. This has a lower financial impact on the organisation and allows the support team to focus on a smaller number of people.

Parallel operation: In parallel operation, the old and new systems are employed at the same time for a set amount of time. Because the old system is still in operation while the new system is being tested, this strategy is the least dangerous. However, because work is duplicated and complete support is required for both platforms, this is the most expensive method.

Phased implementation: Different functionalities of the new application are utilised while functions from the old system are turned off in a phased installation. This method allows a company to gradually transition from one system to another.

The complexity and relevance of the old and new systems determine the implementation approaches.

Software maintenance is a part of the Software Development Life Cycle. Its primary goal is to modify and update software application after delivery to correct errors and to improve performance. Software is a model of the real world. When the real world changes, the software require alteration wherever possible.

Software Maintenance is an inclusive activity that includes error corrections, enhancement of capabilities, deletion of obsolete capabilities, and optimization.

a) Corrective Maintenance

Corrective maintenance aims to correct any remaining errors regardless of where they may cause specifications, design, coding, testing, and documentation, etc.

b) Adaptive Maintenance

It contains modifying the software to match changes in the ever-changing environment.

c) Preventive Maintenance

It is the process by which we prevent our system from being obsolete. It involves the concept of reengineering & reverse engineering in which an old system with old technology is re-engineered using new technology. This maintenance prevents the system from dying out.

d) Perfective Maintenance

It defines improving processing efficiency or performance or restricting the software to enhance changeability. This may contain enhancement of existing system functionality, improvement in computational efficiency, etc.

Software maintenance phases

a) Identification Phase:

In this phase, all the requests for modifications in the software are identified and analysed.

b) Analysis Phase:

The feasibility, cost and estimation of each validated modification request is performed. The method to integrate changes in existing system is determined.

c) Design Phase:

The new modifications will be put and designed as per requirement specification.

Testing and test cases will also be done in order to check the efficiency of software. Test cases are created for the validation and verification of the system.

d) Implementation Phase

The new modification are implemented with proper planning and integrated in new system.

e) System Testing Phase

To ensure no errors are there in the system testing is done with regression and integration mode.

f) Acceptance Testing Phase:

This testing is done to ensure the modified system has amended as per given requirement of modification, it is done by end user or user or third party.

g) Delivery Phase:

This phase the user starts using the system as acceptance testing has been completed. Users are given manuals and other stuff to understand the changes and final testing will be done now by user.

Boehm maintenance process

In 1983, Boehm proposed a model for the maintenance process which was based upon the economic models and principles. Economics model is nothing new thing, economic decisions are a major building block of many processes and Boehm’s thesis was that economics model and principles could not only improve productivity in the maintenance but it also helps to understand the process very well.

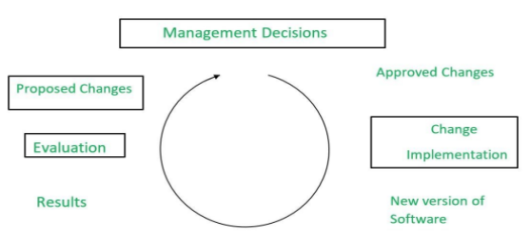

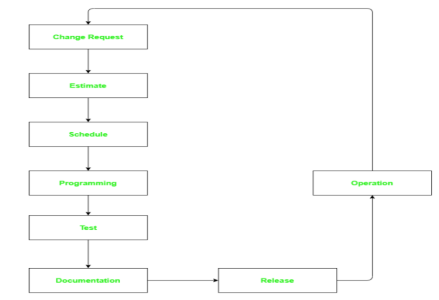

Boehm maintenance process model as a closed-loop cycle is shown below

Boehm had concluded that the maintenance manager’s task is one of the balancing and the pursuit of the objectives of maintenance against the constraint imposed by the environment in which maintenance work is carried out. The maintenance process should be driven by the maintenance manager’s decisions, which are typically based on the balancing of objectives against the constraint. Boehm proposed a formula for calculating the maintenance cost as it is a part of the COCOMO Model. All the collected data from the various projects, the formula was formed in terms of effort.

Taute Software Maintenance Model

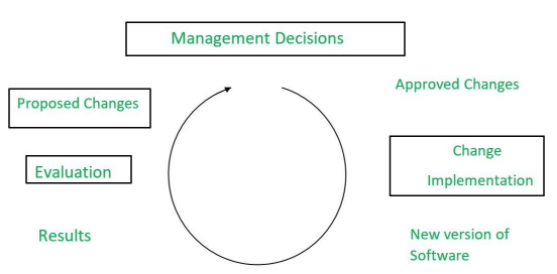

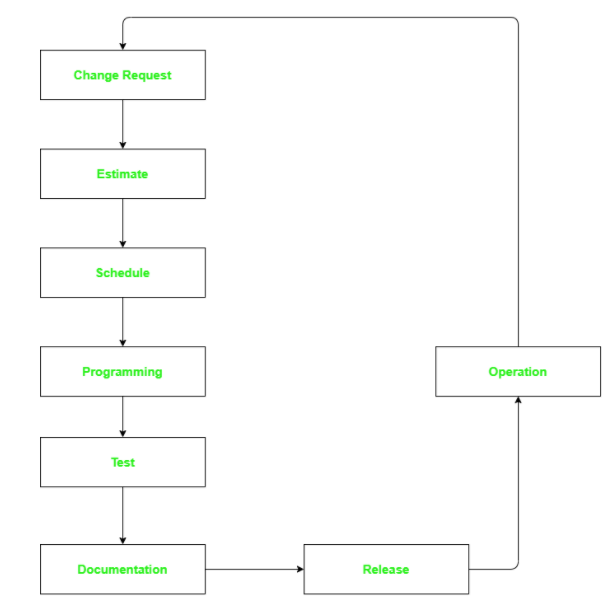

B.J. Taute coined a software maintenance model in 1983. It is very easy to understand and implement. The model depicts software maintenance process as closed loop cycle. Mostly used by developers after execution of software for updating or modification in software.

Taute Model has eight phases in cyclic fashion.

The phases are as following:

Change Request Phase –

In this phase customer request in prescribed format to maintenance team to apply some change to software.

This change can be:

(i) Corrective software maintenance.

(ii) Adaptive software maintenance.

(iii) Perfective software maintenance.

(iv) Preventive software maintenance.

Maintenance team assigns unique identification number to request and detects category of software maintenance,

Estimate Phase –

The maintenance team dedicates this phase for estimating time and effort required to apply requested change. And, to minimize ripple effect caused by change to system, Impact analysis on existing system is also done.

Schedule Phase –

In this phase, team identifies change requests for next scheduled release and may also prepare documents that are required for planning.

Programming Phase –

In this phase, the maintenance team modifies source code of software to implement requested change by customer and updates all relevant documents like design document, manuals, etc. accordingly. The final output of this stage is test version of source code.

Test Phase –

In this phase, maintenance team ensures that modification requested in software is correctly implemented. The source code is then tested with already available test cases. New test cases may also be designed to further test software. This type of testing is known as Regression testing.

Documentation Phase –

After the regression testing, team updates system and user documents before release of software. This helps to maintain co-relation between source code and documents.

Release Phase –

In this phase, the modified software product along with the updated documents are delivered to customer. Acceptance Testing is carried out by users of system.

Operation Phase –

After acceptance testing, software is put under normal operation. During usage, when another problem is identified or new functionality requirement is felt or enhancement of existing capability is desired, customer may again initiate ‘Change request’ process. And similarly, all phases will be repeated to implement this new change.

Quick-Fix Model

This is quick and easy but ad hoc approach used for maintenance. It quickly finds and fix the software without putting consideration on size of the software.

Iterative Enhancement Model

Iterative Enhancement Model is divided into three stages:

- Analysis of software system.

- Classification of requested modifications.

- Implementation of requested modifications.is divided into three stages:

Reuse Oriented Model

Existing system or model parts are analysed and understood so that they can be considered for reuse. Thereafter they go through modification and enhancement, which is done on the basis of new requirements. The final step is integration of modified old system into the new system.

Need for Maintenance

Software maintenance is becoming an important activity of a large number of software organizations. This is no surprise, given the rate of hardware obsolescence, the immortality of a software product per se, and the demand of the user community to see the existing software products run on newer platforms, run in newer environments, and/or with enhanced features. When the hardware platform is changed, and a software product performs some low-level functions, maintenance is necessary.

Also, whenever the support environment of a software product changes, the software product requires rework to cope up with the newer interface. For instance, a software product may need to be maintained when the operating system changes. Thus, every software product continues to evolve after its development through maintenance efforts.

It is necessary to perform software maintenance in order to:-

- Correct errors.

- The needs of the consumer evolve over time.

- Hardware and software specifications are changing.

- To increase the performance of the system.

- To make the code run more quickly.

- To make changes to the components

- To lessen any unfavorable side effects.

As a result, maintenance is needed to ensure that the system continues to meet the needs of its users.

Categories of Maintenance: Preventive, Corrective and Perfective Maintenance

There are basically three types of software maintenance. These are:

- Preventive: It's the method we use to save our system from being redundant. It entails the reengineering and reverse engineering concepts, in which an existing device of old technology is re-engineered with new technology. The machine will not die as a result of this maintenance.

- Corrective: Corrective maintenance of a software product is necessary to rectify the bugs observed while the system is in use.

- Adaptive: A software product might need maintenance when the customers need the product to run on new platforms, on new operating systems, or when they need the product to interface with new hardware or software.

- Perfective: A software product needs maintenance to support the new features that users want it to support, to change different functionalities of the system according to customer demands, or to enhance the performance of the system.

The following are some of the issues in software maintenance:

● Any software program's popular age is taken into account for ten to fifteen years. Because software programme renovation is an ongoing process that could last decades, it is quite costly.

● Older software, which was designed to run on slow machines with limited memory and storage, can't compete with newer, more powerful software on modern technology.

● Changes are typically left unreported, which might lead to increased dispute in the future.

● As time passes, preserving vintage software becomes increasingly expensive.

● Frequently, changes performed can easily affect the software program's original design, making subsequent revisions impossible.

Maintenance Activity

A framework for sequential maintenance process activities is provided by IEEE. It can be used in an iterative fashion and expanded to accommodate bespoke goods and procedures.

These activities complement each of the phases that follow:

Identification & Tracing

It entails activities related to determining the need for modification or maintenance. It is either generated by the user or reported by the system via logs or error messages. The type of maintenance is also classified here.

Analysis

The impact of the alteration on the system, including safety and security issues, is assessed. Alternative solutions are sought if the likely impact is significant. The required modifications are then manifested into specification requirements. The cost of modification and maintenance is calculated and estimated.

Design

New modules that need to be replaced or updated are designed according to the requirements established in the preceding stage. Validation and verification test cases are built.

Implementation

The new modules are coded using the structured design that was generated during the design phase. Unit testing is expected of every coder in parallel.

System Testing

Integration testing is done among newly built modules during system testing. New modules and the system are also subjected to integration testing. Finally, the entire system is tested using regressive testing methodologies.

Acceptance Testing

After internal testing, the system is put to the test with the help of users. If the user has any difficulties at this point, they will be fixed or documented for the future edition.

Delivery

After passing the acceptance test, the system is disseminated throughout the organisation, either as a tiny update package or as a new installation. After the software is delivered, the client does final testing.

In addition to the hard copy of the user handbook, a training facility is available if needed.

Maintenance management

Configuration management is an important aspect of system upkeep. It makes use of version control tools to manage versions, semi-versions, and patches.

References:

1. Roger S Pressman, Bruce R Maxim, “Software Engineering: A Practitioner’s Approach”, Kindle Edition, 2014.

2. Ian Sommerville,” Software engineering”, Addison Wesley Longman, 2014.

3. James Rumbaugh. Micheal Blaha “Object oriented Modeling and Design with UML”, 2004.

4. Ali Behforooz, Hudson, “Software Engineering Fundamentals”, Oxford, 2009.

5. Charles Ritcher, “Designing Flexible Object Oriented systems with UML”, TechMedia, 2008.