UNIT – 3

Data analysis and Interpretation

The investigator of any field is confronted with the issue of what to do with data after it has been gathered. The data mass may be so high that the researcher is unable to put all of it in a form that is gathered in his study.

Much of the data must be reduced to some form suitable for analysis in order to be able to report to a scientific audience a concise set of conclusions or findings.

In an attempt to analyze the information, we must first decide whether to

- Whether the data tabulation is carried out by hand or by computer,

- How the data can be translated into a form that will enable it to be processed effectively and

- What statistical tools or methods are used?

Computers have recently become an essential tool for tabulating and analyzing survey data.

Computer tabulation is encouraged for easy and flexible data handling, even in small-scale studies that employ relatively simple statistical procedures.

Tables of any dimension can be produced by micro and laptop computers and statistical operations can be performed much easier and usually with much less error than is possible manually. Assuming that the database is large and that computer processing of the data is carried out, we will address the following major issues in the data analysis task:

- Preparation of data which includes;

- editing,

- coding, and

- Data entry.

- Exploring, displaying, and examining information to search for meaningful descriptions, patterns, and relationships, including breaking down, examining, and rearranging data.

3.1.1 Editing

Editing the raw data is the usual first step in the analysis. Editing detects mistakes and omissions, corrects them whenever possible and certifies the achievement of minimum data quality standards.

The duty of the editor is to guarantee that information is;

1. Precise, precise,

2. In accordance with the intent of the question or with other information,

3. entered uniformly,

4. Complete, and

5. Arranged for coding and tabulation simplification.

Data editing can be achieved in two ways: field editing and in-house editing, also called central editing.

Field editing is a field supervisor's preliminary editing of data on the same day as the interview. Its purpose is to identify technical omissions, check legibility, and clarify responses that are logically or conceptually inconsistent.

When gaps from interviews are present, instead of guessing what the respondent "would probably have said," a call-back should be made.

A second important task of the supervisor is to re-interview a few respondents, at least on some pre-selected questions, as a validity check. All the questionnaires undergo thorough editing in central or in-house editing. It is a rigorous job done by employees of the central office.

3.1.2 Coding

Coding is the process of assigning responses to numbers or other symbols in order to group the responses into a limited number of classes or categories. Coding allows the investigator to reduce several thousand responses to a few categories containing the critical data intended for the question asked.

When the questionnaire itself is prepared, which we call pre-coding, or after the questionnaire has been administered, numerical coding can be incorporated. The questions, which we call post-coding, were answered.

Pre-coding is necessarily limited primarily to questions whose categories of responses are known beforehand.

These are mainly closed-ended questions (such as sex, religion) or questions that already have a number of answers and therefore do not need to be converted (such as age, number of children).

For data entry, pre-coding is particularly useful because it makes the intermediate step of completing a coding sheet unnecessary. The data is directly accessible from the questionnaire.

Depending on the method of data collection, a respondent interviewer field supervisor or researcher) can assign appropriate numerical responses to the instrument by checking or circling it in the correct coding location

The main advantage of post-coding over pre-coding is that post-coding allows the coder to determine before starting coding which responses are provided by the respondent.

This can contribute to great simplification. For each combination of responses given, post-coding also allows the researcher to code multiple responses to a single variable by writing a different code number.

Coding, whether pre or post, is a two-part method that includes;

1. For each possible answer category, the choice of a different number; and

2. Choosing the appropriate column or column to contain the code numbers for those variables on the computer card.

Some data detail is sacrificed by data coding, but it is necessary for efficient analysis. We could use the code "M" or "C." instead of asking the word Muslim or Christian for a question that asks for the identification of one's religion.

This variable would normally be coded as 1 for Muslims and 2 for Christians. "The "QI" or "VI" type codes are called alphanumeric codes. The codes are numeric when numbers are used exclusively (e.g., 1, 2, etc.).

The codebook is a type of booklet compiled by the survey staff that on a questionnaire tells the meaning of each code from each question.

For instance, the codebook might disclose that the male is coded 1 and the female 2 for question number 10.

The codebook is used as a guide by the investigator to make data entry less prone to error and more efficient. It is also the one that

During analysis, the definitive source for locating the positions of variables in the data file.

If a questionnaire can be pre-coded completely, with an edge-code indicating the location of the variable in the data file, then it is not necessary to have a separate codebook and a blank questionnaire can be used as a codebook.

However, there is not enough space on the questionnaire to identify all codes, especially for post-coding and for open-ended questions that receive many answers.

Coding Non-responses

As a result of failure to provide any answer to a question at all, non-response (or missing cases) occurs, and these are inevitable in any questionnaire.

In order to avoid non-responses, care should be taken, but if these occur, the researcher must devise a system for coding them, preferably a standard system, so that the same code can be used for non-responses irrespective of the specific question.

A numerical code should be assigned to a non-response.

0 and 9 are the numbers most often used for non-response. The number is merely repeated for each column for variables that require more than one column (e.g., 99, 999).

For non-response, any numerical code is satisfactory as long as it is not a number that might occur as a legitimate response.

For instance, if you were to ask the respondent to list the number of children in their family, because you could not distinguish a non-response from a family of nine children, you should not use 9 for non-response.

Code may also need to be assigned in addition to non-response items for "do not know" abbreviated "DK" responses and for "not applicable (NA)" responses, provided that the question does not apply to a particular respondent. Responses to 'Don't know' are often coded as 'O' or 'OO.'

Key Takeaways:

|

Data analysis

In order to discover useful information for business decision-making, data analysis is defined as a process of cleaning, transforming and modeling data. The purpose of Data Analysis is to extract useful data from the data and to make decisions based on the analysis of the data.

A simple example of data analysis is to think about what happened last time or what will happen by choosing that specific decision whenever we make any decision in our day-to-day life. This is nothing but to analyze and make decisions based on our past or future. We collect memories of our past or dreams of our future for that. So that's nothing but an analysis of data. Data Analysis is now the same thing an analyst does for business purposes.

Text Analysis

Data Mining is also referred to as Text Analysis. It is one of the data analysis methods to discover a pattern using databases or data mining tools in large data sets. It has been used to transform raw data into business data. In the market that is used to make strategic business decisions, Business Intelligence instruments are present. Overall, it provides a way of extracting and examining information and deriving patterns, and finally interpreting the data.

Statistical Analysis

Statistical analysis demonstrates "What happen?" in the form of dashboards using past data. Statistical analysis involves information gathering, analysis, interpretation, presentation, and modeling. It analyzes a set of information or a data sample. This type of analysis has two categories - descriptive analysis and inferential analysis.

Descriptive Analysis

Complete data analysis or a sample of summarized numerical data analysis. For continuous data, it shows mean and deviation, while percentage and frequency for categorical data.

Inferential Analysis

Sample analysis from complete data. In this type of analysis, by selecting different samples, you can find different conclusions from the same data.

Diagnostic Analysis

"Why did it happen?" is shown by Diagnostic Analysis by finding the cause from the insight found in Statistical Analysis. This analysis is useful for the determination of data behavior patterns. If your business process has a new problem, then you can look into this analysis to find similar patterns of the problem. And it may have opportunities for the new issues to use similar prescriptions.

Predictive Analysis

By using previous data, Predictive Analysis shows "what is likely to happen" The simplest example of data analysis is that if I bought two clothes based on my savings last year, and if my salary doubles this year, then I can buy four clothes But, of course, this is not easy because you have to think about other circumstances, such as this year's chances of increasing clothing prices, or maybe you want to buy a new bike instead of clothes, or you need to buy a house!

So here, based on existing or past data, this analysis makes predictions about future results. Forecasting is an estimate only. Its reliability is based on how much detailed data you have and how much you dig into it.

Prescriptive Analysis

In order to determine which action to take in a present problem or decision, Prescriptive Analysis combines the insight from all prior analysis. Prescriptive Analysis is used by most data-driven businesses because predictive and descriptive analytics are not sufficient to improve data performance. They analyze the data on the basis of current situations and problems and make decisions.

A broad term that encompasses many different types of data analysis is data analytics. To obtain insight that can be used to enhance things, any type of data can be subjected to data analytics techniques.

Manufacturing companies, for example, often record the runtime, downtime, and work queue for different machines and then analyze the data to plan the workloads better so that the machines operate closer to peak capacity.

In production, data analytics can do much more than point out bottlenecks. To set reward schedules for players who keep the majority of players active in the game, gaming companies use data analytics. Many of the same data analytics are used by content companies to keep you clicking, watching, or re-organizing content to get another view or another click.

There are several different steps involved in the process involved in data analysis:

- The first step is to determine the requirements for the data or how the information is grouped. Data may be segregated by age, population, income, or sex. The values of the data may be numeric or divided by category.

- The process of collecting it is the second step in data analytics. This can be done through a range of sources, such as computers, online sources, cameras, sources for the environment, or through staff.

- It must be organized once the data is collected so that it can be analyzed. On a spreadsheet or other form of software that can take statistical data, organization may take place.

- Before analysis, the information is then cleaned up. This implies that to ensure that there is no duplication or error, it is scrubbed and checked and that it is not incomplete. Before going on to a data analyst to be analyzed, this step helps correct any errors.

Key Takeaways:

|

With the advent of the digital age, data analysis and interpretation have now taken center stage... and the sheer amount of data can be frightening. In fact, a Digital Universe study found that the total data supply in 2012 was 2.8 trillion gigabytes!

Based on that amount of data alone, it is clear that the ability to analyze complex data, generate actionable insights and adapt to new market needs will be the calling card of any successful enterprise in today's global world... all at the speed of thought.

The Digital Age Tools for Big Data are business dashboards. Capable of showing key performance indicators (KPIs) for both quantitative and qualitative data analyses, they are ideal for making fast-paced and data-driven market choices that drive sustainable success for today's industry leaders.

Data dashboards allow companies to engage in real-time and informed decision making through the art of streamlined visual communication, and are key tools in data interpretation. Let's first find a definition to understand what lies behind the meaning of data interpretation.

Interpretation of data refers to the implementation of processes through which information is reviewed in order to arrive at an informed conclusion. Data interpretation assigns a meaning to the analyzed information and determines its meaning and implications.

The significance of the interpretation of data is obvious and this is why it needs to be done properly. Data is very likely to come from various sources and has a tendency to enter with haphazard ordering the analysis process. Analysis of data tends to be highly subjective. That is to say, the nature and objective of interpretation will vary from company to company, probably correlating to the type of information being analyzed. Although several different types of processes are implemented on the basis of the individual nature of data, "quantitative analysis" and "qualitative analysis" are the two broadest and most common categories.

However, it should be understood that visual presentations of data findings are irrelevant before any serious data interpretation inquiry can begin, unless a sound decision is made concerning measurement scales. The measurement scale must be decided for the data before any serious data analysis can begin, as this will have a long-term impact on ROI for data interpretation. The scales that vary include:

- Nominal Scale: non-numeric categories that cannot be quantitatively ranked or compared. Exclusive and exhaustive variables.

- Ordinal Scale: Exclusive categories that are unique and comprehensive but have a logical order. Examples of ordinary scales include quality ratings and agreement ratings (i.e., good, very good, fair, etc., OR agree, strongly agree, disagree, etc.).

- Interval: scale of measurement where information is grouped into categories with orderly and equal distances between the categories. An arbitrary zero point always exists.

- Ratio: Features of all three are included.

3.3.1 Significance of processing data

Easy to make reports – It can be used directly, because the information has been processed. It is possible to organize these processed facts and figures in such a way that they can help operators to quickly conduct analysis. Predefined data helps experts to produce faster reports.

Accuracy and speed - Digitization helps to quickly process data. It is possible to process thousands of files in a minute and save the necessary information from each file. The system will automatically check and process invalid or corrupted data in the process of processing business data. Such processes therefore help businesses ensure high accuracy in the management of information.

Reduce costs – The cost of digital processing is much less than the management and maintenance of paper documents. It decreases the cost of purchasing stationery to store paper documents with information. Therefore, through improving their data management systems, businesses can save millions of dollars each year.

Easy storage - helps to increase storage space, manage information and modify it. It minimizes clutter by eliminating unnecessary paperwork, and also improves search efficiency. A large number of businesses are in need of data processing outsourcing nowadays. For any company looking to improve its effectiveness, this data service is truly a premium service.

Suppose a project has been allocated to you to predict the company's sales. You can't just say that 'X' is the factor that will influence sales.

We understand that there are various elements or variables that will affect sales. Only multivariate analysis can be found to analyze the variables that will majorly affect sales. And it will not be just one variable in most cases.

As we know, sales will depend on the product category, production capacity, and geographical location, and marketing effort, market presence of the brand, analysis of competitors, product cost, and various other variables. Sales is only one example; in any section of most fields, this study can be implemented.

In many industries, like healthcare, multivariate analysis is widely used. In the recent COVID-19 case, a team of data scientists predicted that by the end of July 2020, Delhi would have more than 5lakh COVID-19 patients. This analysis was based on various variables such as government decision-making, public conduct, population, occupation, public transport, health services, and community overall immunity.

Multivariate analysis is also based on the Data Analysis study by Murtaza Haider of Ryerson University on the shore of the apartment, which leads to an increase in cost or a decrease in cost. As per that study, transport infrastructure was one of the major factors. People were thinking of buying a home at a location that offers better transport, and this is one of the least thought of variables at the beginning of the study, according to the analyzing team. But with analysis, this occurred in a few final variables that affected the result.

Exploratory data analysis involves multivariate analysis. The deeper insight of several variables can be visualized based on MVA.

To perform multivariate analysis, there are more than 20 different techniques and which method is best depends on the type of data and the problem you are trying to solve.

Multivariate analysis (MVA) is a statistical method for data analysis involving more than one measurement or observation type. It may also mean solving issues where more than one dependent variable is analyzed with other variables simultaneously.

Advantages and Disadvantages of Multivariate Analysis

- The main advantage of multivariate analysis is that the conclusion drawn is more precise because it considers more than one factor of independent variables affecting the variability of dependent variables.

- The conclusions are more realistic and closer to the situation in real life.

Disadvantages

- The main disadvantage of MVA is that a satisfactory conclusion requires rather complex calculations.

- It is necessary to collect and tabulate many observations for many variables; it is a rather time-consuming method.

Key Takeaways:

|

Testing of hypotheses is an act in statistics by which an analyst tests an assumption about a population parameter. The methodology employed by the analyst depends on the nature and reason for the analysis of the data used.

To evaluate the plausibility of a hypothesis by using sample data, hypothesis testing is used. Such data can come from a larger population, or from a process that generates data. In the following descriptions, the word 'population' will be used for both cases.

- To assess the plausibility of a hypothesis by using sample data, hypothesis testing is used.

- The test provides evidence, given the data, concerning the plausibility of the hypothesis.

- Statistical analysts test a hypothesis by measuring a random sample of the population being analyzed and examining it.

How Hypothesis Testing Works

An analyst tests a statistical sample in hypothesis testing, with the aim of providing evidence about the plausibility of the null hypothesis.

By measuring and examining a random sample of the population that is being analyzed, statistical analysts test a hypothesis. A random population sample is used by all analysts to test two separate hypotheses: the null hypothesis and the alternative hypothesis.

The null hypothesis is usually a population parameter equality hypothesis; e.g., a null hypothesis may state that the mean return of the population is equal to zero. The opposite of a null hypothesis is effectively the alternative hypothesis (e.g., the population mean return is not equal to zero). They are mutually exclusive, therefore, and only one can be true. One of the two hypotheses will always be true, however.

4 Steps of Testing of Hypotheses

A four-step process is used to test all hypotheses:

1. The first step is to state the two hypotheses for the analyst so that only one can be correct.

2. The next step is to formulate an analysis plan that outlines how to evaluate the information.

3. The third step is to execute the plan and to analyze the sample data physically.

4. The fourth and final step is to analyze the findings and either dismiss the null hypothesis or, given the data, state that the null hypothesis is plausible.

Real-world instance

If, for example, a person wants to test that a penny has exactly a 50 percent chance of landing on heads, the null hypothesis would be that 50 percent is correct, and the alternative hypothesis would be that 50 percent is not correct.

Mathematically, the null hypothesis would be represented as Ho: P = 0.5. The alternative hypothesis would be denoted as "Ha" and be identical to the null hypothesis, except with the equal sign struck-through, meaning that it does not equal 50 percent.

It takes a random sample of 100 coin flips, and then tests the null hypothesis. The analyst would assume that a penny does not have a 50 percent chance of landing on heads if it is found that the 100 coin flips were distributed as 40 heads and 60 tails, and would reject the null hypothesis and accept the alternative hypothesis.

If, on the other hand, 48 heads and 52 tails were present, then it is plausible that the coin might be fair and still produce such an outcome. The analyst states that the difference between the expected results (50 heads and 50 tails) and the observed results (48 heads and 52 tails) is "explainable by chance alone" in cases such as this where the null hypothesis is "accepted."

3.5.1 Chi square test

For testing relations between categorical variables, the Chi Square statistic is commonly used. The null hypothesis of the Chi-Square test is that the categorical variables in the population have no relationship; they are independent. An example question of research that could be answered using a Chi-Square analysis would be:

Is there a significant relationship between the intention of voting and the membership of the political party?

How does the Chi-Square statistic work?

The Chi-Square statistics are most commonly used when using cross tabulation to evaluate Independence Tests (also known as a bivariate table). The distributions of two categorical variables are presented simultaneously by Cross tabulation, with the intersections of the categories of the variables appearing in the table cells. By comparing the observed pattern of responses in the cells to the pattern that would be expected if the variables were truly independent of each other, the Independence Test evaluates whether an association exists between the two variables. The researcher can evaluate whether the observed cell counts differ significantly from the expected cell counts by calculating the Chi-Square statistic and comparing it against a critical value from the Chi-Square distribution.

The Chi-Square statistics calculation is fairly straightforward and intuitive:

|

Where fo = The frequency observed (the observed counts in the cells)

And fe = The expected frequency if there was no relationship between the variables

The Chi-Square statistic, as shown in the formula, is based on the difference between what is actually observed in the data and what would be expected if the variables were truly not related.

How is the Chi-Square statistic run in SPSS and how is the output interpreted?

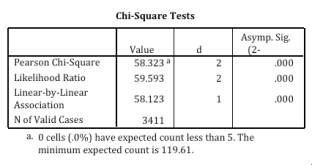

As an option when requesting a cross tabulation in SPSS, the Chi-Square statistic appears. Chi-Square Tests are labeled as the output; Pearson Chi-Square is the Chi-Square statistic used in the Independence Test. By comparing the actual value against a critical value found in a Chi-Square distribution (where degrees of freedom are calculated as # of rows-1 x # of columns-1), this statistic can be evaluated, but it is easier to simply examine the p-value provided by SPSS. The value is labeled as to conclude the hypothesis with 95 percent trust. Sig. Sec.

(Which is the Chi-Square statistic's p-value) should be less than .055 (which is the alpha level associated with a 95 percent confidence level).

Is less than .05 the p-value (labeled Asymp? Sig.)? If so, we can conclude that the variables are not independent of each other and that the categorical variables have a statistical relationship.

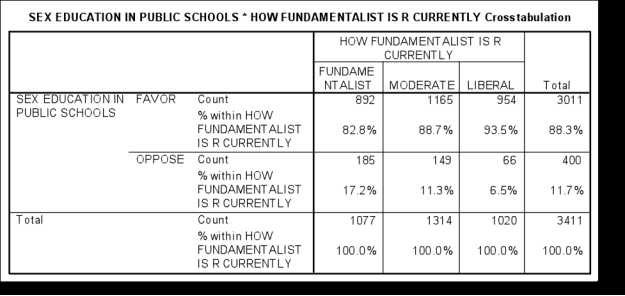

|

|

There is an association in this instance between fundamentalism and views in public schools on teaching sex education. While 17.2% of fundamentalist people are opposed to teaching sex education, only 6.5% of liberals are opposed. The p-value indicates that these variables are not independent of each other and that the categorical variables have a statistically significant relationship.

What are special concerns with regard to the Chi-Square statistic?

When using Chi-Square statistics to evaluate a crosstabulation, there are a number of significant considerations. It is extremely sensitive to sample size because of how the Chi-Square value is calculated; when the sample size is too large (~500), almost any small difference will appear statistically significant. It is also sensitive to the distribution within the cells, and if cells have fewer than 5 cases, SPSS gives a warning message. This can be addressed by always using categorical variables with a limited number of categories (for example, by combining categories to produce a smaller table if necessary).

3.5.2 Zandt-test (for large and small sample)

A z-test is a statistical test used when the variances are known and the sample size is large to determine whether two population meanings are different. The test statistics are assumed to have a normal distribution, and for an accurate z-test to be performed, nuisance parameters such as standard deviation should be known.

A z-statistic, or z-score, is a number representing how many standard deviations a score derived from a z-test is above or below the mean population.

- A z-test is a statistical test to determine if when the variances are known and the sample size is large, two population meanings are different.

- It can be used for testing hypotheses in which a normal distribution follows the z-test.

- A z-statistic, or z-score, is a number that represents the z-test outcome.

- Z-tests are closely related to t-tests, but when an experiment has a small sample size, t-tests are best conducted.

- T-tests also assume that the standard deviation is unknown, whereas z-tests presume that it is known.

How Z-Tests Work

A one-sample location test, a two-sample location test, a paired difference test, and a maximum likelihood estimate are examples of tests that can be conducted as z-tests. Z-tests are closely related to t-tests, but when an experiment has a small sample size, t-tests are best conducted. T-tests also presume that the standard deviation is unknown, whereas z-tests presume that it is known. The assumption of the sample variance equaling the population variance is made if the standard deviation of the population is unknown.

Hypothesis Test

The z-test is also a test of hypotheses in which a normal distribution follows the z-statistic. For greater-than-30 samples, the z-test is best used because, under the central limit theorem, the samples are considered to be approximately normally distributed as the number of samples gets larger. The null and alternative hypotheses, alpha and z-score, should be stated when conducting a z-test. Next, it is necessary to calculate the test statistics and state the results and conclusions.

One-Sample Z-Example Test

Assume that an investor wishes to test whether a stock's average daily return is greater than 1%. It calculates a simple random sample of 50 returns and has an average of 2 percent. Assume that the standard return deviation is 2.5 percent. The null hypothesis, therefore, is when the average, or mean, equals 3%.

The alternative hypothesis, on the other hand, is whether the average return is greater than 3% or less. Assume an alpha is selected with a two-tailed test of 0.05 percent. Therefore, in each tail, there is 0.025 percent of the samples, and the alpha has a critical value of 1.96 or -1.96. The null hypothesis is rejected if the value of z is greater than 1.96 or less than -1.96.

The z-value is calculated by subtracting from the observed average of the samples the value of the average daily return selected for the test, or 1 percent in this case. Next, divide the resulting value by the standard deviation of the number of values observed, divided by the square root. It is therefore calculated that the test statistic is 2.83, or (0.02 - 0.01) / (0.025 / (50)^(1/2)). Since z is greater than 1.96, the investor rejects the null hypothesis and concludes that the average daily return is greater than 1 percent.

Key Takeaways:

|

Reference Books:

- Research methodology in Social sciences, O.R.Krishnaswamy, Himalaya Publication

- Business Research Methods, Donald R Cooper, Pamela Schindler, Tata McGraw Hill