Measurement: Definition

First, you need to understand the basic ideas associated with measurement. Here we consider two main measurement concepts. The first is levels of measurement, which explains the meaning of the four main scales (nominal, ordinal, interval, and ratio). Next, we move on to the reliability of measurement, including true score theory and the examination of various reliability estimates.

Second, we need to understand the different types of measures that may be used in social research. We consider four broad categories of measurement. Survey research includes the design and implementation of interviews and questionnaires. Scaling covers the main ways of developing and implementing scales. Qualitative research provides a broad overview of non-numerical measurement approaches. Finally, unobtrusive measures offer a variety of measurement methods that do not intrude on or interfere with the context of the study.

Before you can use statistics to analyze your problem, you need to convert the basic material in question into data. This means that you need to establish or adopt a system that assigns values (mostly numbers) to the objects or concepts central to the problem you are investigating. This is not an esoteric process; it is something you do every day. For example, when you buy something in a store, the price you pay is a measurement: a number is assigned to the amount of currency exchanged for the goods received. Similarly, when you step on the bathroom scale in the morning, the number you see is a measurement of your weight. Depending on where you live, this number is expressed in pounds or kilograms, but the principle of assigning numbers to a physical quantity (weight) applies in both cases.

Not all data need to be numeric. For example, the categories male and female are commonly used to classify people in both science and everyday life, and these categories are not inherently numeric. Similarly, we often describe the colors of objects using broad classes such as "red" and "blue". These categories are a great simplification of the infinite variety of colors that exist in the world. This kind of categorization is very common and is rarely questioned.

How specific you want these categories to be (for example, is "garnet" a different color from "red"? Do you need separate categories for transgender individuals?) depends on your immediate goal. Graphic artists, for example, may use many more mental categories of color than the average person. Similarly, the level of detail used in classification for a study depends on the purpose of the study and the importance of capturing the nuances of each variable.

Measurement

Measurement is the process of systematically assigning numbers to objects and their properties to facilitate the use of mathematics in studying and describing objects and their relationships. Some types of measurement are fairly concrete: for example, weighing a person in pounds or kilograms, or measuring height in feet and inches or in meters. Keep in mind that the particular measurement system used is not as important as a consistent set of rules. For example, you can easily convert measurements in kilograms to pounds. The system of units may seem arbitrary (try keeping feet and inches straight if you grew up with the metric system!), but the results can be used in calculations as long as the system bears a consistent relationship to the property being measured.
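Because the conversion rule is fixed, switching between unit systems is a single arithmetic step. The minimal Python sketch below assumes the international definition 1 lb = 0.45359237 kg; the function names are hypothetical, chosen only for illustration.

```python
# International definition: 1 avoirdupois pound = 0.45359237 kg (exact).
KG_PER_LB = 0.45359237


def kg_to_lb(kilograms: float) -> float:
    """Convert a weight in kilograms to pounds."""
    return kilograms / KG_PER_LB


def lb_to_kg(pounds: float) -> float:
    """Convert a weight in pounds to kilograms."""
    return pounds * KG_PER_LB


if __name__ == "__main__":
    weight_kg = 70.0
    weight_lb = kg_to_lb(weight_kg)
    print(f"{weight_kg} kg = {weight_lb:.1f} lb")
    # Converting back recovers the original value (up to floating-point rounding).
    print(f"{weight_lb:.1f} lb = {lb_to_kg(weight_lb):.1f} kg")
```

The two readings carry the same information; only the unit label differs, which is why a consistent rule matters more than the choice of system.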

Measurement is not limited to physical qualities such as height and weight. Tests that measure abstractions such as intelligence and scholastic aptitude are commonly used in education and psychology, and the field of psychometrics is largely concerned with developing and refining methods for testing such abstract properties. Confirming that a particular measurement is meaningful is more difficult when the property cannot be observed directly. You can check the accuracy of a bathroom scale by comparing its readings with those of another scale known to be accurate, but there is no easy way to know whether an intelligence test is accurate, because there is no generally agreed-upon way to measure the abstraction "intelligence". In other words, you don't know what someone's actual intelligence is, because there is no definitive way to measure it; you may not even know exactly what "intelligence" is. This is a completely different situation from measuring a person's height or weight. These issues are particularly relevant to the social sciences and education, where much research focuses on such abstract concepts.

Designing and Writing Items

A study design has three main components:

Data collection, measurement, and analysis.

The type of research problem your organization faces determines your research design, not the other way around. During the design phase of a study, you decide which tools to use and how to use them.

Effective study designs usually minimize bias in the data and increase confidence in the accuracy of the collected data. In experimental studies, a design that yields a minimal margin of error is generally considered desirable. The key elements of study design are:

1. Accurate purpose statement.

2. Techniques to be implemented for collecting and analyzing the research data.

3. Methods applied to the analysis of the collected data.

4. Type of research methodology.

5. Possible objections to the research.

6. Research setting.

7. Timeline.

8. Measurement of analysis.

Proper research design sets your research up for success. Successful research studies provide accurate and unbiased insights, so you need to create a study that meets all the key characteristics of a good design.

Researchers need a clear understanding of the different types of study design in order to choose the model to implement in their study. Like research itself, study design can be broadly categorized as quantitative or qualitative.

1. Qualitative Research Design:

Quantitative research tests theories about naturally occurring phenomena using mathematical calculation and statistical methods; qualitative research, by contrast, examines non-numerical data such as interviews, observations, and open-ended responses. Researchers rely on qualitative design techniques to explore "why" a particular theory holds and what respondents have to say about it.

2. Quantitative Research Design:

Quantitative research is used when statistical conclusions are essential for gathering actionable insights. Numbers provide a clearer perspective for making important business decisions, so quantitative research design techniques are often central to organizational growth. Insights drawn from solid numerical data and analysis can be very effective in making decisions about the future of a business.

You can further categorize the types of research design into five categories:

1. Descriptive Study Design: In a descriptive design, researchers are interested only in describing the situation or case under study. It is a theory-based design method developed by collecting, analyzing, and presenting the collected data, which allows researchers to provide insights into the why and how of the research. A descriptive design helps others better understand the need for the research. If the problem statement is not clear, you can conduct exploratory research instead.

2. Experimental Study Design: An experimental study design establishes a cause-and-effect relationship in a situation. It is a causal model that observes the effect of an independent variable on a dependent variable. For example, researchers might monitor the impact of an independent variable such as price on dependent variables such as customer satisfaction and brand loyalty. The independent variable is manipulated and the resulting change in the dependent variable is monitored, which makes this a very practical design for solving the problem at hand.

In the social sciences, experimental designs are often used to compare two groups and observe human behavior. To better understand social psychology, for instance, researchers can induce some participants to change their behavior and study how the people around them react.
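The core logic of an experimental design (randomly assign participants, manipulate the independent variable for one group, then compare the dependent variable across groups) can be sketched in Python. This is a minimal illustration under stated assumptions, not a complete analysis: the participant IDs and outcome scores are hypothetical, and a real study would also test whether the observed difference is statistically significant.

```python
import random
import statistics


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into treatment and control groups."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def estimated_effect(outcomes, treatment, control):
    """Difference between mean outcomes (treatment minus control)."""
    treatment_mean = statistics.mean(outcomes[p] for p in treatment)
    control_mean = statistics.mean(outcomes[p] for p in control)
    return treatment_mean - control_mean


if __name__ == "__main__":
    participants = [f"P{i}" for i in range(1, 9)]  # hypothetical IDs
    treatment, control = randomly_assign(participants, seed=42)
    # Hypothetical satisfaction scores (dependent variable) recorded after the
    # treatment group was shown a lower price (independent variable).
    outcomes = {p: 7 if p in treatment else 5 for p in participants}
    print("treatment:", treatment)
    print("control:  ", control)
    print("estimated effect:", estimated_effect(outcomes, treatment, control))
```

Random assignment is what lets the difference in means be attributed to the manipulation rather than to pre-existing differences between the groups.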

3. Correlation Study Design: Correlational research is a non-experimental study design technique that helps researchers establish a relationship between two closely related variables. This type of research requires measuring two different variables on the same group of participants. No assumption is made about which variable causes the other; the relationship between them is calculated using statistical analysis techniques.

The strength and direction of the relationship between two variables are measured by the correlation coefficient, whose value ranges from -1 to +1. A coefficient of +1 indicates a perfect positive relationship between the variables, -1 indicates a perfect negative relationship, and 0 indicates no linear relationship.
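The most common correlation coefficient is Pearson's r. The sketch below computes it from its definition (the sum of cross-products of deviations divided by the square root of the product of the sums of squared deviations); the function name is ours. Python 3.10 and later also provide `statistics.correlation`, which computes the same quantity.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of the same length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products of deviations from the means.
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("r is undefined when a variable has no variation")
    return cross / math.sqrt(ss_x * ss_y)


if __name__ == "__main__":
    print(pearson_r([1, 2, 3, 4], [2, 4, 6, 8]))  # perfectly positive
    print(pearson_r([1, 2, 3, 4], [8, 6, 4, 2]))  # perfectly negative
```

A value of r near +1 or -1 describes a strong linear association only; as noted above, it says nothing about which variable causes the other.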

4. Diagnostic Study Design: In a diagnostic design, researchers try to evaluate the underlying cause of a specific topic or phenomenon. This technique helps you learn more about the factors that create a troublesome situation.

This design has three parts:

a) Problem occurrence

b) Diagnosis of problems

c) Solution to the problem

5. Explanatory Study Design: An explanatory design uses a researcher's ideas and thoughts on a subject to explore their theories further. The research explains unexplored aspects of the subject and details the what, how, and why of the research questions.

Uni-Dimensional and Multi-Dimensional Scales

Scaling is the branch of measurement that involves constructing an instrument that associates qualitative constructs with quantitative metric units. Scaling evolved from efforts in psychology and education to measure "unmeasurable" constructs such as authoritarianism and self-esteem. In many respects, scaling remains one of the most arcane and misunderstood aspects of social research measurement, because it attempts to measure abstract concepts, one of the most difficult tasks in research.

Most people don't even understand what scaling is. The basic ideas of scaling are covered under general scaling issues, including the important distinction between a scale and a response format. Scales generally fall into two broad categories: one-dimensional and multidimensional. The one-dimensional scaling methods were developed in the first half of the 20th century and are generally named after their inventors. This section describes the following three types of one-dimensional scaling methods:

a) Thurstone or equal-appearing interval scaling.

b) Likert or "summative" scaling.

c) Guttman or "cumulative" scaling.

From the late 1950s to the early 1960s, measurement theorists developed more advanced techniques for creating multidimensional scales. These techniques are not considered here, but to see the power of these multivariate techniques, it is advisable to consider a concept mapping technique that relies on that approach.

General Scaling Issues

S.S. Stevens came up with what I think is the simplest and most straightforward definition of scaling. He said:

Scaling is the assignment of objects to numbers according to rules.

But what does that mean? In most scaling, the object is a text statement, usually a statement of attitude or belief. An example is shown in the figure.

Here are three statements describing attitudes towards immigrants. To scale these statements, you need to assign numbers to them. In general, the result should be at least an interval scale (see Measurement Scales below), as indicated by the ruler in the figure. And what does "according to rules" mean? Notice that, as you read down the list, the statements become more restrictive in their attitude towards immigrants. Anyone who agrees with a statement in the list is likely to also agree with all the statements above it. In this case, the "rule" is cumulative. So what is scaling? It is a procedure for arriving at numbers that can be meaningfully assigned to objects: a series of steps, and there are several different approaches to carrying it out.
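The cumulative rule described above can be checked mechanically. The sketch below is a simplified illustration of Guttman-style (cumulative) scoring. It assumes the statements are ordered from least to most restrictive, as in the figure, and that each response is coded 1 for agree and 0 for disagree; the function names are hypothetical. Real data rarely fit perfectly, and Guttman scaling judges fit with statistics such as the coefficient of reproducibility, which this sketch omits.

```python
def is_cumulative(responses):
    """True if the agree (1) / disagree (0) pattern is a run of 1s followed by 0s.

    Items are assumed to be ordered from least to most restrictive, so
    agreeing with a later item should imply agreeing with every earlier one.
    """
    return all(earlier >= later for earlier, later in zip(responses, responses[1:]))


def cumulative_score(responses):
    """Score a cumulative pattern as the number of statements endorsed."""
    if not is_cumulative(responses):
        raise ValueError(f"response pattern {responses} is not cumulative")
    return sum(responses)


if __name__ == "__main__":
    print(cumulative_score([1, 1, 0]))  # agrees with the first two statements
    print(is_cumulative([1, 0, 1]))     # violates the cumulative rule
```

Under a perfect cumulative scale, a single number (how far down the list a respondent agrees) is enough to reconstruct the whole response pattern.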

But first, I want to address one of my pet peeves. People often confuse the idea of a scale with that of a response scale. A response scale is the way you collect responses from people on an instrument. You might use a dichotomous response scale such as agree/disagree, true/false, or yes/no. Alternatively, you might use an interval response scale such as a 1-to-5 or 1-to-7 rating. But attaching a response scale to an object or statement does not, by itself, make it a scale. As we will see, scaling involves procedures that are carried out independently of the respondent in order to arrive at a number for each object. In a true scaling study, the scaling procedure is used to develop the instrument (the scale), and the response scale is used to collect responses from participants. Simply assigning a 1-to-5 response scale to an item does not scale it. The differences are shown in the following table.

Purpose of Scaling

Why scale at all? Why not just write text statements or questions and use a response format to collect the answers? First, you may want to scale in order to test a hypothesis: you may want to know whether a construct or concept is one-dimensional or multidimensional (more on dimensionality below). Scaling may also be done as part of exploratory research, when you want to know which dimensions underlie a set of ratings. For example, when creating a set of questions, you can use scaling to determine how well the questions "hang together" and whether they measure one concept or several. But perhaps the most common reason for scaling is scoring. When a participant answers a set of items, you often want to assign a single number that represents their overall attitude or belief. In the figure above, for example, you would want one number that represents an individual's attitude towards immigrants.
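One common way to turn several item responses into a single number is Likert-style summative scoring. The sketch below is a minimal illustration, assuming 1-to-5 response codes and that some items are worded negatively and must be reverse-scored; the function and its parameters are hypothetical. Full Likert scale construction also involves selecting items through item analysis, which is not shown here.

```python
def summated_score(responses, reverse_items=(), scale_min=1, scale_max=5):
    """Sum item responses into a single attitude score.

    Items whose indexes appear in reverse_items are reverse-scored (on a
    1-to-5 scale, 5 becomes 1 and 4 becomes 2) so that a higher total always
    means a more favourable attitude.
    """
    total = 0
    for index, value in enumerate(responses):
        if not scale_min <= value <= scale_max:
            raise ValueError(f"item {index} has out-of-range value {value}")
        if index in reverse_items:
            value = scale_max + scale_min - value
        total += value
    return total


if __name__ == "__main__":
    # Hypothetical answers to three statements; the third is negatively worded.
    print(summated_score([5, 4, 2], reverse_items={2}))
```

Reverse-scoring keeps the items pointing in the same direction before they are added; without it, agreement with a negatively worded item would cancel out agreement with the others.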

Dimensionality

A scale can have any number of dimensions, although most scales we develop have only a few. What is a dimension? Think of a dimension as a number line. When measuring a construct, you need to decide whether you can measure it properly with a single number line or whether you need more lines.

For example, height is a unidimensional, or one-dimensional, concept. Height can be measured very well with a single number line (such as a ruler). Weight is also one-dimensional: we can measure it on a scale. Thirst might also be considered a one-dimensional concept: at any moment you are either more or less thirsty. It's easy to see that height and weight are one-dimensional. But what about a concept like self-esteem? If you think you can measure a person's self-esteem well with a single ruler that runs from low to high, you probably have a one-dimensional construct.

What would a two-dimensional concept look like? Many models of intelligence or achievement assume two major dimensions: mathematical and verbal ability. In this type of two-dimensional model, a person can be said to possess two types of achievement. Some people will have high verbal skills and low math skills; for others, the opposite is true. If a concept is truly two-dimensional, it is not possible to describe a person's level on that concept with a single number line. Instead, to describe a person's achievement, we need to locate them as a point in two-dimensional (x, y) space.

Let's take this one step further. What about a three-dimensional concept? Psychologists who studied the concept of meaning theorized that the meaning of a term can be described well in three dimensions. In other words, any object can be distinguished from any other along three dimensions. They labelled these three dimensions activity, evaluation, and potency, and they called this general approach the semantic differential. Their theory holds that essentially any object can be rated along these three dimensions. For example, think of the idea of "ballet". If you like ballet, you would probably rate it high on activity, favorable on evaluation, and powerful on potency. On the other hand, think of the concept of a "book" such as a novel. You might rate it low on activity (passive), favorable on evaluation (assuming you like it), and about average on potency. Now think of the idea of "going to the dentist". Most people would rate it low on activity (passive), unfavorable on evaluation, and powerless on potency (few routine activities make you feel as powerless!). The theorists who came up with the semantic differential thought that the meaning of any concept could be described well by rating it on these three dimensions. In other words, to describe the meaning of an object, you locate it as a point somewhere in a cube (three-dimensional space).

One-dimensional or multi-dimensional?

What are the advantages of using a one-dimensional model? One-dimensional concepts are generally easier to understand: you have more of it or less of it, and that's all. You're either taller or shorter, heavier or lighter. Understanding one-dimensional scales is also an important foundation for understanding more complex multidimensional concepts. But the best reason to use one-dimensional scaling is that you believe the concept you are measuring really is one-dimensional. As noted, many familiar concepts (height, weight, temperature) are in fact one-dimensional. But if the concept you are studying is truly multidimensional, a one-dimensional scale or number line will not describe it well. If you try to measure academic achievement on a single dimension, you place everyone on one line, from those with low achievement to those with high achievement. But how would you score someone who is excellent at math and terrible verbally, or vice versa? A one-dimensional scale cannot capture that kind of achievement.
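The Python sketch below, using hypothetical (math, verbal) scores out of 100, shows what a single line hides: students with opposite profiles receive the same total, while their positions in two-dimensional space are far apart. The names and data are illustrative only.

```python
import math

# Hypothetical achievement profiles: (math score, verbal score), each out of 100.
students = {
    "A": (90, 40),  # strong in math, weak verbally
    "B": (40, 90),  # the reverse
    "C": (65, 65),  # balanced
}


def total_score(profile):
    """Collapse a two-dimensional profile onto a single number line."""
    return sum(profile)


def distance(p, q):
    """Euclidean distance between two profiles in (math, verbal) space."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


if __name__ == "__main__":
    for name, profile in students.items():
        print(name, "total on one line:", total_score(profile))
    gap = distance(students["A"], students["B"])
    print("distance between A and B in 2D:", round(gap, 1))
```

All three students land on the same point of the one-dimensional line, even though A and B are about as different as two profiles on this test can be.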

Measurement Scales: Nominal, Ordinal, Interval, Ratio

To perform a statistical analysis of your data, it is important to first understand your variables and what they measure. There are different levels of measurement, and the data measured with them can be broadly divided into qualitative data and quantitative data.

First, let's understand what variables are. A measurable quantity whose value varies across a population is called a variable. For example, consider a population of employed individuals. Variables in this population include industry, location, gender, age, skills, and occupation, and the value of each variable varies from employee to employee.

For example, it is practically impossible to calculate the average hourly wage of every worker in the US. Instead, a random sample of workers is selected to represent the larger population, and the average hourly wage of this sample is calculated. Statistical tests can then be used to draw conclusions about the average hourly wage of the larger population.
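A minimal sketch of this idea in Python: draw a random sample, compute its mean, and attach an approximate 95% confidence interval for the population mean. The wage data are simulated, not real, and the interval uses the large-sample normal approximation (mean ± 1.96 × standard error); a small sample would call for a t critical value instead. The function name is hypothetical.

```python
import math
import random
import statistics


def estimate_mean(sample, z=1.96):
    """Return the sample mean and an approximate 95% confidence interval.

    Uses the normal approximation mean +/- z * s / sqrt(n), which is
    reasonable for large random samples.
    """
    n = len(sample)
    mean = statistics.mean(sample)
    standard_error = statistics.stdev(sample) / math.sqrt(n)
    return mean, (mean - z * standard_error, mean + z * standard_error)


if __name__ == "__main__":
    # Simulated (not real) population of hourly wages, for illustration only.
    rng = random.Random(1)
    population = [rng.uniform(10, 60) for _ in range(100_000)]
    sample = rng.sample(population, 400)
    mean, (low, high) = estimate_mean(sample)
    print(f"sample mean {mean:.2f}, 95% CI ({low:.2f}, {high:.2f})")
    print(f"true population mean {statistics.mean(population):.2f}")
```

Because the population is simulated, its true mean is known and can be compared with the estimate; in a real survey only the sample side of this comparison is available.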

The level of measurement of a variable determines which statistical tests can be used. The mathematical nature of a variable, that is, how it is measured, is called its level (or scale) of measurement.

What are nominal, ordinal, interval, and ratio scales?

Each scale is an incremental level of measurement: each scale fulfills the function of the previous scale and adds something to it. All survey question scales, such as the Likert, semantic differential, and dichotomous scales, are derivatives of these four basic scales. Before discussing all four levels of measurement in detail with examples, let's take a quick look at what these scales represent.

A nominal scale is a naming scale in which variables have no specific order and are simply "named" or labelled. An ordinal scale names the variables and, in addition, puts them in a particular order. An interval scale provides labels, an order, and a known, equal interval between each variable option. A ratio scale has all the characteristics of an interval scale and, in addition, has a true zero.
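Because each level includes everything the previous one offers, the four levels can be modelled as an ordered type. The sketch below encodes one conventional summary, following Stevens' classification, of the lowest level at which common summaries become meaningful; the mapping and function names are ours, and textbooks differ on some borderline cases (for example, whether Likert totals may be treated as interval data).

```python
from enum import IntEnum


class Level(IntEnum):
    """Levels of measurement, ordered so each level includes the previous one."""
    NOMINAL = 1
    ORDINAL = 2
    INTERVAL = 3
    RATIO = 4


# Lowest level at which each summary or operation becomes meaningful.
MINIMUM_LEVEL = {
    "mode": Level.NOMINAL,
    "frequency counts": Level.NOMINAL,
    "median": Level.ORDINAL,
    "rank order": Level.ORDINAL,
    "mean": Level.INTERVAL,
    "differences": Level.INTERVAL,
    "ratios": Level.RATIO,
    "coefficient of variation": Level.RATIO,
}


def permitted(level: Level) -> list[str]:
    """List the summaries that are meaningful for data at the given level."""
    return [name for name, minimum in MINIMUM_LEVEL.items() if level >= minimum]


if __name__ == "__main__":
    for level in Level:
        print(f"{level.name}: {', '.join(permitted(level))}")
```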

The four scales used in surveys and statistics, nominal, ordinal, interval, and ratio, are described in detail below.

Nominal Scale: 1st Level Scale

Nominal scales, also known as categorical variable scales, are defined as scales used to label variables into distinct classifications; they involve no quantitative value or order. This is the simplest of the four variable measurement scales.

This scale may be used for classification purposes. The numbers associated with the variables on this scale are only tags for classification or division, so calculations performed on these numbers are meaningless: they have no quantitative significance.

For example, a question such as the following uses a nominal scale:

Where do you live?

1-Suburbs

2-City

3-Town

Nominal scales are often used in surveys and polls where only the labels of the variables matter.

For example, a customer survey might ask, "Which brand of smartphone do you prefer?" with the options "Apple" = 1, "Samsung" = 2, and "OnePlus" = 3.

In this survey question, only the brand name matters to the researchers conducting the consumer survey; the brands do not need to be in any order. When capturing nominal data, researchers base their analysis on the associated labels.

In the example above, if a respondent selects Apple as their preferred brand, the data entered for that response will be "1". This makes it possible to quantify and answer the final question: how many respondents chose Apple, how many chose Samsung, how many chose OnePlus, and which brand was chosen most often.
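Counting responses and finding the most frequent one (the mode) is the main analysis nominal data support. A minimal Python sketch using `collections.Counter`, with hypothetical coded answers to the smartphone question above:

```python
from collections import Counter

# Codes from the survey question; the numbers are labels, not quantities.
BRANDS = {1: "Apple", 2: "Samsung", 3: "OnePlus"}

# Hypothetical coded answers from ten respondents.
responses = [1, 2, 1, 3, 1, 2, 2, 1, 3, 1]

counts = Counter(BRANDS[code] for code in responses)
top_brand, top_count = counts.most_common(1)[0]

if __name__ == "__main__":
    for brand, n in counts.most_common():
        print(f"{brand}: {n}")
    print(f"Most preferred: {top_brand} ({top_count} respondents)")
    # Averaging the codes (17 / 10 = 1.7 here) would be meaningless:
    # there is no brand "1.7".
```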

This is the basis of quantitative research, and the nominal scale is the most basic research scale.

Nominal Scale Examples

a) Political preference.

b) Place of residence.

Nominal Scale in SPSS

In SPSS, you can set a variable's measurement level to Scale (numerical data on an interval or ratio scale), Ordinal, or Nominal. Nominal and ordinal data can be either string (alphanumeric) or numeric.

When you import data into an SPSS file, numeric variables may be assigned the Scale measurement level by default because the data contain numbers. It is important to change the level to Nominal or Ordinal, or keep it as Scale, depending on what the data represent.

Ordinal Scale: 2nd Level Scale

The ordinal scale is defined as a variable measurement scale used simply to depict the order of variables, not the differences between them. These scales are generally used to represent non-mathematical ideas such as frequency, satisfaction, happiness, and degree of pain. "Ordinal" sounds like "order", which makes the purpose of this scale easy to remember: capturing order is exactly what it does.

An ordinal scale keeps the descriptive quality of the nominal scale and adds an intrinsic order, but the distances between values are unknown and not necessarily equal, so differences between them cannot be calculated. Descriptive quality refers to the labelling (tagging) property it shares with the nominal scale; in addition, the ordinal scale gives each value a relative position. The ordinal scale also has no fixed starting point or "true zero".

Ordinal Data and Analysis

Ordinal scale data can be presented in tables or graphs so that researchers can conveniently analyze the data they have collected. Ordinal data can also be analyzed with methods such as the Mann-Whitney U test and the Kruskal-Wallis H test, which are typically used to compare two or more ordinal groups.

The Mann-Whitney U test lets researchers assess whether values drawn at random from one group tend to be greater or smaller than values drawn at random from another group. The Kruskal-Wallis H test lets researchers analyze whether two or more ordinal groups come from the same distribution, which is often interpreted as having the same median.
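The U statistic itself only needs comparisons, which is why it suits ordinal data. The sketch below computes U by counting, over every cross-group pair, how often a value from the first group exceeds a value from the second (ties count as half). The ratings are hypothetical and the function name is ours. Turning U into a p-value requires tables or a normal approximation; SciPy provides this as `scipy.stats.mannwhitneyu`, and the Kruskal-Wallis test as `scipy.stats.kruskal`.

```python
def mann_whitney_u(group_a, group_b):
    """U statistic for group_a versus group_b.

    Counts the pairs (a, b) in which a > b, counting ties as half a pair.
    Only comparisons (> and ==) are used, so ordinal codes are sufficient.
    """
    u = 0.0
    for a in group_a:
        for b in group_b:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    return u


if __name__ == "__main__":
    # Hypothetical 1-to-5 satisfaction ratings from two store locations.
    store_a = [4, 5, 3, 4]
    store_b = [2, 3, 3, 1]
    u_a = mann_whitney_u(store_a, store_b)
    u_b = mann_whitney_u(store_b, store_a)
    # The two U values always sum to len(store_a) * len(store_b).
    print(u_a, u_b, u_a + u_b)
```

A U close to the maximum (here 4 × 4 = 16) means ratings in the first group almost always exceed those in the second; a U near half the maximum suggests no systematic difference.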

Ordinal scale example

Workplace status, tournament team rankings, order of product quality, and order of agreement or satisfaction are some of the most common examples of ordinal scales. These scales are widely used in market research to gather and evaluate relative feedback about product satisfaction, changes perceived after product upgrades, and more.

For example, consider the following satisfaction rating question:

How satisfied are you with our service?

Very dissatisfied – 1

Dissatisfied – 2

Neutral – 3

Satisfied – 4

Very satisfied – 5

The order of the options is of utmost importance here, and so is their labelling. "Very dissatisfied" will always be worse than "dissatisfied", and "satisfied" will always be worse than "very satisfied".

This is where the ordinal scale is one step above the nominal scale: the order matters to the result, and so do the labels.

Analyzing results based on order and name is a convenient process for researchers.

If you want to get more information than you collect using the nominal scale, you can use the ordinal scale.

This scale not only assigns values to variables, but also measures the rank or order of variables, such as:

a) Grades.

b) Satisfaction.

c) Happiness.

How satisfied are you with our service?

1-Very dissatisfied.

2-Dissatisfied.

3-Neutral.

4-Satisfied.

5-Very satisfied.

Interval Scale: 3rd Level Scale

The interval scale is defined as a numerical scale in which both the order of the variables and the differences between them are known. Variables that have familiar, constant, and computable differences are classified using the interval scale. The main role of this scale is easy to remember: "interval" means "distance between two entities", which is what the interval scale helps to measure.

These scales are effective because they open the door to statistical analysis of the data provided. You can use the mean, median, or mode to calculate the central tendency of this scale. The only drawback of this scale is that there is no pre-determined starting point or true zero value.

The interval scale has all the properties of the ordinal scale and, in addition, allows the differences between variables to be calculated. The main characteristic of this scale is that equal numerical differences represent equal distances between objects.

For example, consider the Celsius or Fahrenheit temperature scale:

80 degrees is always higher than 50 degrees, and the difference between these two temperatures is the same as the difference between 70 degrees and 40 degrees.

Also, the value 0 is arbitrary: 0 degrees does not mean an absence of temperature, which is why negative temperatures exist. This makes the Celsius and Fahrenheit temperature scales classic examples of an interval scale.
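A quick check in Python makes the interval property concrete: converting Celsius to Fahrenheit (F = C × 9/5 + 32) preserves equal differences but not ratios, because the zero point of each scale is arbitrary. The helper name is ours, for illustration.

```python
def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


# Equal differences in Celsius stay equal in Fahrenheit (interval property).
diff_f_upper = c_to_f(30) - c_to_f(20)
diff_f_lower = c_to_f(20) - c_to_f(10)

# Ratios are not preserved: 20 °C is "twice" 10 °C only in Celsius numbers.
ratio_c = 20 / 10
ratio_f = c_to_f(20) / c_to_f(10)

if __name__ == "__main__":
    print("differences in °F:", diff_f_upper, diff_f_lower)
    print("ratio in °C:", ratio_c, "ratio in °F:", round(ratio_f, 2))
```

Since the same physical temperatures give a ratio of 2 in one unit system and a different ratio in the other, statements such as "twice as hot" have no meaning on an interval scale.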

Interval scales are often chosen in studies where the differences between variables are essential, which cannot be achieved with nominal or ordinal scales. The interval scale quantifies the difference between two values, whereas the other two scales can only associate qualitative values with variables.

Unlike the previous two scales, the interval scale allows you to calculate the mean, in addition to the median and mode.

Interval scales are often used in statistics because you can not only assign numbers to variables, but also calculate based on those values.

However useful the interval scale is, it has no "true zero" value, which brings us to the next scale.

Interval Data and analysis

All the techniques applicable to nominal and ordinal data analysis can also be applied to interval data. In addition, several other analysis methods, such as descriptive statistics and correlation and regression analysis, are widely used to analyze interval data.

Descriptive statistics is the term for analyses of numerical data that describe, depict, or summarize data in a meaningful way, including the calculation of the mean, median, and mode.
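Python's standard `statistics` module covers these basic descriptive statistics. A minimal sketch with hypothetical interval-scale data (daily high temperatures in °C):

```python
import statistics

# Hypothetical interval-scale data: daily high temperatures (°C) for one week.
temperatures = [18, 21, 21, 19, 24, 21, 17]

summary = {
    "mean": statistics.mean(temperatures),
    "median": statistics.median(temperatures),
    "mode": statistics.mode(temperatures),
    "standard deviation": statistics.stdev(temperatures),  # sample SD
    "range": max(temperatures) - min(temperatures),
}

if __name__ == "__main__":
    for name, value in summary.items():
        print(f"{name}: {value:.2f}")
```

The mean and standard deviation rely on equal intervals between values, which is why they are reserved for interval and ratio data, while the median and mode also work for ordinal data.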

Interval scale example

In some situations, attitude scales are treated as interval scales.

Apart from temperature, time of day is also a very common example of an interval scale, because its values are already established, constant, and measurable.

Calendar years and times also fall into this category of measurement scales.

Likert scales, Net Promoter Scores, semantic differential scales, and bipolar matrix tables are among the most commonly used scales that are treated as interval scales.

The following questions fall into the interval scale category.

a) How Much Does Your Family Make?

b) What is the temperature in your city?

Ratio Scale: 4th Level Scale

The ratio scale is defined as a variable measurement scale that not only establishes the order of variables and makes the differences between them known, but also has a true zero. It is used when the variable can take a meaningful zero value, the differences between values are equal, and the options have a specific order.

The true zero allows a wide range of inferential and descriptive analysis techniques to be applied to the variables. In addition to doing everything that nominal, ordinal, and interval scales can do, the ratio scale can also establish an absolute zero value. The best examples of ratio scales are weight and height. In market research, ratio scales are used to calculate market share, annual sales, the price of an upcoming product, the number of consumers, and more.

The ratio scale provides the most detailed information, because researchers and statisticians can calculate central tendency using statistical methods such as the mean, median, and mode. Methods such as the geometric mean, the coefficient of variation, and the harmonic mean can also be used on this scale.
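All of the summaries named above are available in Python's `statistics` module (`geometric_mean` requires Python 3.8 or later). The sketch below applies them to hypothetical ratio-scale data; the coefficient of variation is computed by hand as the sample standard deviation divided by the mean.

```python
import statistics

# Hypothetical ratio-scale data: units sold last month by five stores.
sales = [120, 80, 150, 100, 50]

mean_sales = statistics.mean(sales)
median_sales = statistics.median(sales)
# Coefficient of variation: spread relative to the mean. It assumes a true
# zero, which is why it is reserved for ratio data.
cv = statistics.stdev(sales) / mean_sales
geometric = statistics.geometric_mean(sales)
harmonic = statistics.harmonic_mean(sales)

# With a true zero, ratios are meaningful: 150 units is three times 50 units.
ratio = sales[2] / sales[4]

if __name__ == "__main__":
    print(f"mean={mean_sales}, median={median_sales}, CV={cv:.2f}")
    print(f"geometric mean={geometric:.1f}, harmonic mean={harmonic:.1f}")
    print(f"store 3 sold {ratio:.0f} times as much as store 5")
```

For positive data, the harmonic mean never exceeds the geometric mean, which in turn never exceeds the arithmetic mean.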

The ratio scale has all the characteristics of the other three measurement scales: variables are labelled, their order matters, and the differences between them are computable (and usually equal).

Because the ratio scale has a true zero, it has no negative values.

To decide when to use a ratio scale, researchers must check whether the variable has all the characteristics of an interval scale plus a true zero.

The mean, mode, and median are often calculated using the ratio scale.

Ratio Data and Analysis

At a basic level, ratio scale data are quantitative, so any quantitative analysis technique, such as SWOT, TURF, cross-tabulation, or conjoint analysis, can be used to analyze ratio data. Some techniques, such as SWOT and TURF, analyze ratio data so that researchers can create a roadmap for improving their products and services, while cross-tabulation helps you understand whether new features will help the target market.

Example of ratio scale

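The following questions fall under the category of ratio scales, because height and weight have a true zero. (When the answers are collected in ranges, as below, the responses themselves are recorded only at the ordinal level.)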

How tall is your daughter now?

Less than 5 feet

5 feet – 5 feet 5 inches

5 feet 6 inches – 5 feet 11 inches

6 feet or more

What is your weight in kilograms?

Less than 50 kg

50–70 kg

71–90 kg

91–110 kg

More than 110 kg

Summary – Level of measurement

The four levels of measurement (nominal, ordinal, interval, and ratio) are often discussed in academic teaching. The following easy-to-remember chart may be useful when choosing statistical tests.

Rating and Ranking Scales

Definition of Rating Scale

Rating scales are defined as closed-ended survey questions used to represent respondent feedback in a comparative form for specific features, products, or services. This is one of the most established question types in online and offline surveys, where respondents are expected to rate attributes or features. A rating scale is a variant of the popular multiple-choice question and is widely used to collect information that gives relative insight into a particular topic.

Researchers use rating scales in their studies when they want to associate a qualitative measure with different aspects of a product or feature. These scales are commonly used to evaluate the performance of a product or service, employee skills, customer service performance, processes followed toward a specific goal, and more. Rating scale questions can be compared to checkbox questions, but rating scales provide more information than a simple yes or no.

Types of Rating Scales

The ordinal scale is a scale that represents answer options in an ordered manner. The difference between the two answer options may not be calculable, but the answer options are always in a particular unique order. Parameters such as attitude and feedback can be presented using the ordinal scale.

The interval scale is a scale that not only establishes the order of the answer options but also allows the magnitude of the difference between them to be calculated. Absolute or true zero values do not exist on the interval scale. Celsius and Fahrenheit temperatures are the most common examples of an interval scale. The Net Promoter Score, the Likert scale, and the bipolar matrix table are some of the most effective types of interval scales.

There are four main types of rating scales that can be used appropriately in online surveys:

a) Graphic rating scale.

b) Numerical rating scale.

c) Descriptive rating scale.

d) Comparative rating scale.

Graphic Rating Scale: The graphic rating scale presents answer options on a scale such as 1-3, 1-5, and so on. The Likert scale is a common example of a graphic rating scale. Respondents select a specific option on a line or scale to represent their rating. This rating scale is often used by HR managers to evaluate employees.

Numerical Rating Scale: The numerical rating scale uses numbers as answer options, and the numbers themselves do not carry a descriptive label or meaning. For example, a visual analogue scale or a semantic differential scale can be presented as a numerical rating scale.

Descriptive Rating Scale: The descriptive rating scale explains each response option to respondents in detail. Numerical values are not always associated with its response options. Some surveys call for this format; for example, a customer satisfaction survey should describe every response option in detail so that all customers fully understand what is being asked of them.

Comparative Rating Scale: As the name implies, the comparative rating scale asks respondents to answer questions from a comparative point of view, that is, by making relative judgments or by using other organizations, products, or features as a reference.

Advantages

Rating scales let respondents give several items the same value. However, this can also be a disadvantage: some respondents never choose the highest (or lowest) rating, while others automatically assign the same value to every question.

Ranking Pros and Cons

The weakness of the ranking scale is also its strength: consumers must prioritize one item over another. However, consumers may rate more than one item equally, and a ranking does not reveal that information.

Ranking is a great way to ask a question if you are trying to decide how specific products compare with each other. In addition to measuring a consumer's satisfaction with a single item, rankings show the relative value of several different items at once.

Do you remember the soda brand example? Several well-known soda brands have carried out rebrands that cost them millions in sales and public goodwill.

These mistakes can be avoided. Polling a consumer audience with surveys can have a dramatic impact on a new product rollout (or its potential). For example, suppose your soda company wants to introduce some new flavours. Once you have completed a rating survey to determine which flavours are most preferred, a ranking survey will help you find the best order in which to release them.

For example: “Rank these three flavours from the one you like most to the one you like least.” Because the rating survey has already shown which flavours are preferred, the ranking tells us which one to release first, starting with the highest-ranked flavour, and so reveals the most effective release order.
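One simple way to turn such ranking answers into a release order is to average each flavour's rank across respondents and sort ascending (a lower mean rank means more preferred). The flavour names and responses below are hypothetical, and mean rank is only one of several possible aggregation rules.

```python
from statistics import mean

# Hypothetical answers: each respondent ranks three flavours, 1 = liked most.
responses = [
    {"lime": 1, "cherry": 2, "mango": 3},
    {"lime": 2, "cherry": 1, "mango": 3},
    {"lime": 1, "cherry": 3, "mango": 2},
    {"lime": 1, "cherry": 2, "mango": 3},
]

def release_order(responses):
    """Return flavours sorted by mean rank (lowest mean rank first) and the means."""
    flavours = list(responses[0])
    mean_rank = {f: mean(r[f] for r in responses) for f in flavours}
    return sorted(flavours, key=mean_rank.get), mean_rank

order, mean_rank = release_order(responses)
print(order)  # ['lime', 'cherry', 'mango']
print(mean_rank)
```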

In some cases, ranking or comparison questions reduce cultural and language bias in survey responses. This can be especially helpful when deciding how consumers in different parts of the country, or in different regions, are likely to receive a product.

However, the ranking scale has its own set of problems. If there are too many categories, respondents carry a heavy mental burden: they may not answer the question at all, or their rankings may not be carefully considered.

Combined efforts

You can often get the best results by combining question types in your survey. A ranking is often a good way to narrow down your options before turning them into rating-scale questions.

For example, you can ask consumers to rank the 20 items available in a vending machine from lowest to highest preference. Based on the results, you can then ask them to rate how likely they are to purchase each of their favorite items.

Using the questions together gives you a more comprehensive understanding of what consumers like, what they want, and how they make decisions.

How to get the most out of your research

When it comes to R&D, the experience of top companies shows that consumer feedback is one of the most important factors in getting the most out of your budget and products.

How do you create a survey that gives you quick and useful insights?

The answer lies in the questions. You can get valuable consumer feedback in minutes by combining different types of questions and retargeting respondents with follow-up questions when you need more detailed data.

Customize your questions

Suzy™ makes it easy to create both rating and ranking questions using multiple-choice options. You can also add free-form questions to your survey to gain more insight into respondents and members.

When you're ready to rebrand your packaging, use a rating scale to ask members for feedback on the proposed package. Or, even better, offer several options and ask them to choose their top three or four.

The Suzy™ platform gives you room to customize your questions and get better answers from your members. You can randomize the answer choices so that members' answers are not affected by the order in which options appear, or allow users to select multiple options to obtain partial rankings of multiple items.
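The idea behind randomizing answer choices can be sketched in a few lines. This is not how Suzy™ implements it (its internals are not described here); it is a generic illustration, assuming a per-respondent seed so that each respondent sees a stable order while different respondents generally see different orders. The function name `randomized_choices` and the seed format are hypothetical.

```python
import random

def randomized_choices(choices, respondent_id, survey_seed="survey-1"):
    """Return a shuffled copy of `choices`, stable for a given respondent.

    Seeding with the survey and respondent id means the same respondent always
    sees the same order, while different respondents generally see different orders.
    """
    rng = random.Random(f"{survey_seed}:{respondent_id}")
    shuffled = list(choices)  # copy so the master list keeps its order
    rng.shuffle(shuffled)
    return shuffled

options = ["Design A", "Design B", "Design C", "Design D"]
for respondent in (101, 102, 103):
    print(respondent, randomized_choices(options, respondent))
```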

Thurstone, Likert and Semantic Differential scaling, Paired Comparison

Thurstone Scale: Definition

The Thurstone scale is defined as a one-dimensional scale used to track a respondent's behavior, attitude, or feelings towards a subject. The scale consists of statements about a particular issue or topic, and each statement has a numerical value that indicates how favourable or unfavourable the attitude it expresses is. Respondents indicate the statements they agree with, and the average value of those statements is taken as the respondent's attitude towards the topic.

This scale was developed by Louis Leon Thurstone to produce measurements that approximate the interval level. Although Thurstone scale questions use agree/disagree statements much like a Likert scale, this method of building an attitude scale weights each item by its value when computing the final attitude score, and it also accommodates neutral items. The Guttman scale and the Bogardus social distance scale are also one-dimensional scales, which allow items to be ordered hierarchically.

There are three methods for building Thurstone scale questions. The most commonly used is the method of equal-appearing intervals, which is why the Thurstone scale is also known as the equal-appearing interval scale. The other two, the method of successive intervals and the method of paired comparisons, are somewhat more complicated to develop but still use the same agree/disagree question type.

How to perform a Thurstone scale survey: an example

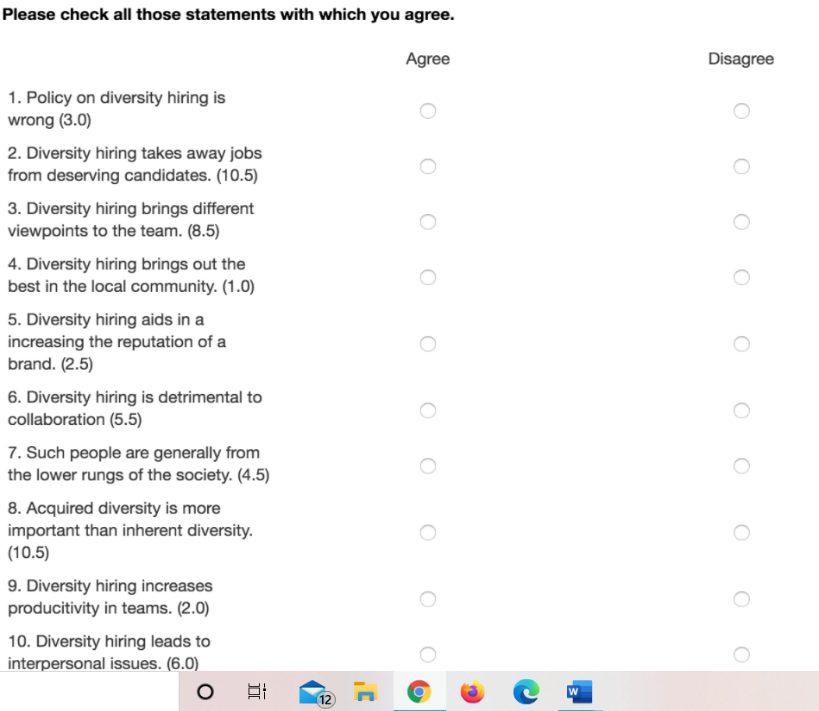

An example of a Thurstone scale survey is understanding the attitudes of employees within an organization towards diversity recruitment in that organization. Building a Thurstone scale question has two distinct stages: deriving the final questions, and then administering and analyzing them.

Deriving the final questions

There are five distinct steps to deriving the final questions:

Step 1 – Create statements: Write a number of agree/disagree statements on a particular topic. For example, if you want to know people's attitudes towards the diversity recruitment policy in your organization, you can use statements like these:

a) The policy on diversity recruitment is wrong.

b) Diversity recruitment takes jobs away from the right candidates.

c) Diversity recruitment gives the team different perspectives.

d) Diversity recruitment brings out the best in the community.

e) Diversity recruitment helps to enhance the brand's reputation.

Step 2 – Have judges rate each statement: Next, a panel of judges rates each statement on a scale of 1 to 11, where 1 means the statement expresses an extremely unfavourable attitude towards the topic (diversity recruitment) and 11 means it expresses an extremely favourable one. Note that the judges rate how favourable each statement is; they do not say whether they agree or disagree with it.

Step 3 – Calculate the median (or mean) and interquartile range (IQR): Analyze the ratings collected from all judges and calculate, for each statement, the median or mean and the IQR. Whether you use the median or the mean is a matter of choice; either can be used. If you have 50 statements, you need 50 medians (or means) and 50 IQRs.
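Step 3 can be sketched with Python's standard `statistics` module. The judges' ratings below are hypothetical, and the keys simply reuse the letters of statements a) and c) from Step 1. Quartile conventions differ between tools; this sketch assumes the `inclusive` method of `statistics.quantiles`, so other software may report slightly different IQRs.

```python
from statistics import median, quantiles

def summarize_judges(ratings_by_statement):
    """Map each statement to (median, IQR) of the judges' 1-11 ratings.

    Uses the 'inclusive' quartile method; other tools may use other conventions.
    Each statement needs at least two ratings.
    """
    summary = {}
    for statement, ratings in ratings_by_statement.items():
        q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
        summary[statement] = (median(ratings), q3 - q1)
    return summary

# Hypothetical ratings from five judges for two of the Step 1 statements.
ratings = {
    "a": [1, 1, 2, 2, 3],    # statement a), rated as unfavourable
    "c": [8, 9, 9, 10, 11],  # statement c), rated as favourable
}
print(summarize_judges(ratings))
```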

Step 4 – Sort the table: Sort the statements from the lowest to the highest median (or mean); where several statements share the same median, list them in descending order of IQR. The result looks like this:

| Statement | Median or Mean | IQR |
| --- | --- | --- |
| 43 | 1 | 1.25 |
| 21 | 1 | 1 |
| 16 | 1.5 | 1 |
| 3 | 3 | 2 |
| 6 | 5 | 2 |
| 28 | 6 | 1 |
| 37 | 7 | 3 |
| 9 | 7.5 | 1.5 |
| 18 | 9 | 2 |
| 26 | 11 | 2 |

Step 5 – Select the final statements: Select statements based on the table above, for example one statement for each median (or mean) value. You want the statements on which the judges agree most, which for each median is the statement with the lowest interquartile range. This is a rule of thumb, not a requirement: if you find that the wording of that statement is inadequate or ambiguous, select the one listed above it (the statement with the next-lowest IQR).
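Steps 4 and 5 can be sketched together using the rows of the Step 4 table. Following the rule of thumb in Step 5, the sketch keeps, for each median, the statement with the lowest IQR; it does not apply the manual wording check, which still needs human judgment. The function names are illustrative.

```python
# Rows from the Step 4 table: (statement, median or mean, IQR).
TABLE = [
    (43, 1, 1.25), (21, 1, 1), (16, 1.5, 1), (3, 3, 2), (6, 5, 2),
    (28, 6, 1), (37, 7, 3), (9, 7.5, 1.5), (18, 9, 2), (26, 11, 2),
]

def sort_table(rows):
    """Step 4: ascending median; within equal medians, descending IQR."""
    return sorted(rows, key=lambda row: (row[1], -row[2]))

def select_statements(rows):
    """Step 5 rule of thumb: for each median keep the statement with the lowest IQR."""
    best = {}
    for statement, med, iqr in rows:
        if med not in best or iqr < best[med][1]:
            best[med] = (statement, iqr)
    return [best[med][0] for med in sorted(best)]

print(sort_table(list(reversed(TABLE))) == TABLE)  # True
print(select_statements(TABLE))  # [21, 16, 3, 6, 28, 37, 9, 18, 26]
```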

Administering and analyzing Thurstone scale questions

Once the final statements are chosen, they are given to respondents, who indicate whether they agree or disagree with each one. In the example, the statements' scale values are shown in parentheses, but they are not shown to the actual respondents.

Mean or median analysis: The responses can be analyzed using either the mean or the median scale values, as follows. The values of the statements the respondent agreed with are summed and divided by the number of those statements. If the respondent agrees with statements 2, 5, 7, and 10, the attitude score is (10.5 + 2.5 + 4.5 + 6.0) / 4 = 23.5 / 4 ≈ 5.9. This score is just below the midpoint (6) of the 1-11 scale, which suggests a roughly neutral, very slightly unfavourable attitude towards diversity recruitment within the organization.

Simple count or percentage analysis: In the same example, if the statements are administered without their mean or median values, the result can be expressed as a simple count of agreements out of the 11 statements, or as a percentage. If the respondent agrees with statements 1, 4, 5, 6, 8, 9, and 11, the count is 7 out of 11, or about 63.6%, which suggests a favourable attitude towards diversity recruitment.
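Both scoring methods reduce to simple arithmetic. The sketch assumes that the four values listed in the mean/median example belong to statements 2, 5, 7, and 10 in that order (the text gives only the sum); the function names are hypothetical.

```python
def mean_scale_score(scale_values, agreed):
    """Average scale value of the statements the respondent agreed with."""
    values = [scale_values[s] for s in agreed]
    return sum(values) / len(values)

def percent_agreed(agreed, total_statements):
    """Simple count expressed as a percentage of all statements."""
    return 100 * len(agreed) / total_statements

# Assumed mapping of the example's values to statements 2, 5, 7 and 10.
scale_values = {2: 10.5, 5: 2.5, 7: 4.5, 10: 6.0}
score = mean_scale_score(scale_values, [2, 5, 7, 10])
print(score)  # 5.875

share = percent_agreed([1, 4, 5, 6, 8, 9, 11], total_statements=11)
print(round(share, 1))  # 63.6
```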

Features of Thurstone Scale Questions

Some of the distinctive features of the Thurstone scale question are:

They are built in two stages: Thurstone scale questions are not put to respondents until judges have rated the statements. This is an important feature, because the options respondents see are already weighted and have been included in the survey by judge consensus.

The mean or median is always calculated: Each option is weighted, so a mean or median is calculated for every option. This is also the basis for selecting the options used in the final survey.

Agree or disagree options only: Respondents answer only by agreeing or disagreeing with each statement.

Uses of Thurstone Scale Surveys

Thurstone scale surveys are used to measure respondents' attitudes towards a particular subject. This scale can be applied to a wide range of market research, including:

a) Opinion-measuring surveys: Thurstone scale questions generate quantifiable data on the strength of respondents' opinions.

b) Measures of attitudes and emotions: The scale can be used effectively to measure customer satisfaction and employee engagement, for example to help predict future buying trends and sales.

Likert

What is the Likert Scale?

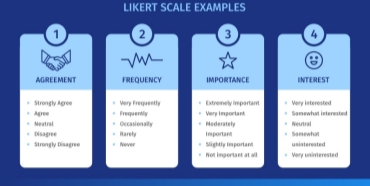

Definition: The Likert scale is a one-dimensional scale used by researchers to collect respondents' attitudes and opinions. Researchers often use this psychometric scale to understand respondents' views and perspectives on a brand, product, or target market.

Related one-dimensional scales that also focus directly on measuring people's opinions include the Guttman scale, the Bogardus scale, and the Thurstone scale. Psychologist Rensis Likert distinguished between the scale that emerges from the combined responses to a group of items (perhaps eight or more) and the format in which each response is measured, as a value within a range.

Likert scale example:

For example, to collect product feedback, a researcher might use a Likert-type question with dichotomous options: the statement "The product was a good purchase", with the options agree or disagree. Another way to frame this question is "State your satisfaction with the product", with choices ranging from very dissatisfied to very satisfied.

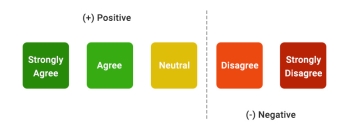

When responding to Likert scale items, respondents state their level of agreement or disagreement, which lets researchers determine how strongly they approve or disapprove. The Likert scale assumes that the strength or intensity of an experience is linear and, therefore, assuming that attitudes can be measured, that it runs on a continuum from strongly agree to strongly disagree.
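To analyze such answers, the response labels are usually coded as numbers and, for a multi-item Likert scale, summed into one score per respondent. The sketch below assumes a 5-point agreement format coded 1 to 5; the labels, the coding, and the function name are illustrative.

```python
# Assumed 5-point agreement format, coded 1 (strongly disagree) to 5 (strongly agree).
LABELS = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]
CODE = {label: position + 1 for position, label in enumerate(LABELS)}

def likert_scale_score(answers):
    """Sum the coded answers to a group of Likert items into one scale score."""
    return sum(CODE[answer] for answer in answers)

# One hypothetical respondent answering three items about a product.
answers = ["Agree", "Strongly agree", "Neutral"]
print([CODE[a] for a in answers])   # [4, 5, 3]
print(likert_scale_score(answers))  # 12
```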

Likert scale types and examples

Likert scales are popular among researchers for gathering opinions about customer satisfaction and employee experience. This scale can be divided into two main types.

a) Even-point Likert scales.

b) Odd-point Likert scales.

Even-point Likert scales

Researchers use even-point Likert scales to collect feedback at the extremes without offering a neutral option.

4-Point Likert Scale for Importance: This type of Likert scale gives respondents four options with no neutral choice. Here, different levels of importance are represented on a 4-point scale.

8-Point Likert Scale for Likelihood to Recommend: This is a variation of the 4-point Likert scale described above. The only difference is that this scale has eight options for collecting feedback on how likely respondents are to recommend something.

Odd-point Likert scales

Researchers use odd-point Likert scales to give respondents the option of answering neutrally.

a) 5-Point Likert Scale: With five answer options, this odd-point Likert scale lets researchers gather information about a topic while including a neutral option that respondents can choose if they do not want to pick either extreme.

b) 7-Point Likert Scale: The 7-point Likert scale adds two more answer options, one at each end of the 5-point scale.

c) 9-Point Likert Scale: The 9-point Likert scale is rare, but it can be built by adding two more answer options, one at each end of the 7-point scale.

Features of Likert scale

The Likert scale was introduced in 1932 in the form of a five-point scale and is now widely used. Likert items can range from general topics to very specific ones, asking respondents to indicate their level of agreement, approval, or belief. Some important features of the Likert scale are:

a) Related answers: Items should relate easily to the statement responses, even where the relationship between item and statement is not obvious.

b) Scale type: An item always requires two extreme positions and intermediate answer options that serve as graduated steps between them.

c) Number of answer options: The most common Likert scale has five points, but using more points can help improve the precision of the results.

d) Increased scale reliability: Researchers often extend a 5-point scale to 7 points by adding "very" to the top and bottom options. A 7-point scale has been reported to reach the upper limit of scale reliability.

e) Use of a wide scale: As a general rule, it is recommended to use the widest practical scale. If desired, answers can always be grouped into fewer categories for analysis.

f) Lack of a neutral option: On a "forced-choice" survey scale, the number of categories is reduced to an even number (usually 4) to eliminate the "neutral" option.

g) Underlying variable: Likert's original work makes clear that there may be an underlying variable whose value reflects the respondent's feedback or attitude. This underlying variable is, at best, measured at the interval level.
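Grouping answers from a wide scale into fewer categories for analysis (feature e) can be sketched as follows. The cut-points (1-3 disagree, 4 neutral, 5-7 agree) are an assumption for a 7-point agreement scale, and the responses are hypothetical.

```python
from collections import Counter

def collapse_7_to_3(code):
    """Group a 7-point agreement code into three analysis categories (assumed cut-points)."""
    if not 1 <= code <= 7:
        raise ValueError(f"expected a code from 1 to 7, got {code}")
    if code <= 3:
        return "disagree"
    if code == 4:
        return "neutral"
    return "agree"

# Hypothetical answers to one 7-point item.
responses = [1, 2, 4, 5, 6, 7, 7, 3]
summary = Counter(collapse_7_to_3(code) for code in responses)
print(summary)
```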

Likert Scale Data and Analysis

Researchers regularly use surveys to measure and analyze the quality of a product or service. The Likert scale is a standard rating format for this kind of research. Respondents give their opinions (data) on the quality of a product or service on a scale of 2, 4, 5, or 7 levels, running from high to low or from good to bad.

Researchers and evaluators typically classify the collected data into a hierarchy of four basic levels of measurement (nominal, ordinal, interval, and ratio) for further analysis.

Nominal data: Data in which answers are classified into categories that have no quantitative value or order is called nominal data.

Ordinal data: Data whose answers can be sorted and ranked, but whose distances cannot be measured, is called ordinal data.

Interval data: Data for which both order and distance can be measured is called interval data.

Ratio data: Ratio data is like interval data. The only difference is that it has an absolute "zero" as its origin, so the ratios between values are also meaningful.

Analyzing nominal, interval, and ratio data is generally transparent and straightforward. Ordinal data, especially from Likert scales and other survey measures, is harder to analyze. This is not a new issue: whether it is valid to treat ordinal data as interval data remains controversial in many fields of research. Here are some points to keep in mind:

Statistical tests: Researchers sometimes treat ordinal data as interval data because they argue that parametric statistical tests are more powerful than their nonparametric alternatives. In addition, inferences from parametric tests are easier to interpret and provide more information than nonparametric options.

Focus on Likert scales: However, treating ordinal data as interval data without examining the values in the dataset and the purpose of the analysis can mislead or misrepresent the findings. Even so, to make scale data easier to analyze, researchers often prefer to treat ordinal data as interval data, focusing on Likert scales.

Median or range for examining data: The general guideline is that for data on an ordinal scale, the mean and standard deviation are invalid descriptive statistics, as are parametric analyses based on the normal distribution. Nonparametric tests, which rely on the median or range, are appropriate for examining such data.
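A nonparametric summary of Likert codes can be computed with the standard library. The sketch assumes codes 1 to 5 and uses `median_low`, which always returns an observed category (the ordinary median of an even number of codes can fall between two categories); the responses are hypothetical.

```python
from statistics import median_low, mode

def ordinal_summary(codes):
    """Nonparametric summary of ordinal codes (for example Likert answers 1-5)."""
    return {
        "median": median_low(codes),  # always one of the observed codes
        "mode": mode(codes),
        "range": (min(codes), max(codes)),
    }

# Hypothetical answers to one 5-point Likert item.
codes = [1, 2, 2, 3, 4, 4, 4, 5]
print(ordinal_summary(codes))  # {'median': 3, 'mode': 4, 'range': (1, 5)}
```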

Best practices for analyzing Likert scale results

Because Likert item data is discrete, ordered, and limited in range, there has long been controversy over the most logical way to analyze it. The first choice is between parametric and nonparametric tests, each of which has its own commonly described strengths and weaknesses.

Benefits of Likert Scale

Using the Likert scale in market research has many advantages:

Likert scale surveys are a universal method for collecting feedback and information, and they are easy for respondents to understand and answer. Likert questions are important for measuring opinions and attitudes on a particular topic, and their results are very helpful in planning the next step of the research.


Semantic Differential Scaling

What is the Semantic Differential Scale?

The semantic differential scale is a survey or poll rating scale that asks people to rate a product, company, brand, or any "entity" on a multipoint rating scale. The response options are anchored by adjectives with opposite meanings, for example love/hate, satisfied/dissatisfied, and likely to return/unlikely to return, with intermediate options in between.

Surveys that use the semantic differential scale are considered one of the most reliable ways to get information about people's emotional attitudes towards a topic of interest.

Well-known American psychologist Charles Egerton Osgood invented the Semantic Differential Scale to record and effectively utilize this "meaning" of emotional attitudes towards the entity.

Osgood applied this approach across an extensive database and found that three scales are generally useful, regardless of race, culture, or language:

a) Evaluation: pairs such as good/bad.

b) Potency: pairs such as powerful/weak.

c) Activity: pairs such as active/passive.

Researchers can use these combinations to measure a variety of subjects, such as customers' expectations of future product launches and employee satisfaction.

Semantic Differential Scale Questions

Researchers use semantic differential scale questions to ask respondents to rate a product, organization, or service on multipoint questions anchored by polar adjectives at the extremes of the scale, such as likely/unlikely, happy/sad, or like/dislike.
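A common way to summarize such answers is a mean "profile": code each multipoint item symmetrically (here from -3 for the left-hand adjective to +3 for the right-hand one, an assumption) and average each adjective pair across respondents. The adjective pairs come from the text above; the responses are hypothetical.

```python
from statistics import mean

# Hypothetical answers on 7-point items coded -3 (left adjective) to +3 (right adjective).
responses = [
    {"unlikely/likely": 2, "sad/happy": 1, "dislike/like": 3},
    {"unlikely/likely": 1, "sad/happy": 0, "dislike/like": 2},
    {"unlikely/likely": 3, "sad/happy": -1, "dislike/like": 1},
]

def mean_profile(responses):
    """Average score per adjective pair; positive leans toward the right-hand adjective."""
    pairs = list(responses[0])
    return {pair: mean(r[pair] for r in responses) for pair in pairs}

print(mean_profile(responses))
```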

Semantic differential scale examples and question types

1. Slider Rating Scale: Questions with graphical sliders provide respondents with a more interactive way to answer questions on the Semantic Differential Scale.

2. Non-slider rating scale: Non-slider questions use radio buttons, giving the familiar look and feel of traditional surveys that respondents are accustomed to answering.

3. Free-form questions: These questions give users the freedom to express their feelings about an organization, product, or service.

4. Ordering: Ordering questions let respondents rank parameters from best to worst based on their personal experience.

5. Satisfaction Rating: The simplest and most eye-catching Semantic Differential Scale question is the Satisfaction Rating question.

Benefits of Semantic Differential

Things to consider when using semantic differentials

QuestionPro provides the resources you need to collect a wide variety of data of all kinds, including through its semantic differential survey feature. However, when looking for an alternative solution provider, consider the following:

Create: What do you need to get a free account? Can you sign up in just a few seconds? Is it easy to create a survey after logging in? Can you create your study within minutes, or are you overwhelmed by tabs, options, and windows that are difficult to manage? How about customization? Is it easy to edit the survey to suit your specific needs?

QuestionPro gives you the options you need to create a survey.

Distribution: How difficult is it to send the survey once it is complete? Does the solution let you edit and manage personal mailing lists for distribution? Does it provide a direct hyperlink so that you can easily share your survey on Facebook and LinkedIn? Does it provide embeddable HTML code for posting your survey on a website or blog?

QuestionPro provides all the solutions mentioned.

Analysis: How easy is it to report the results once responses have been collected? Can you view a snapshot during collection to get an overview of the results? How easy is it to filter the data? What if you need a more detailed analysis? Can you export the results to Excel for a more detailed evaluation?

With QuestionPro you can do all of the above.

Using the Semantic Differential Scale: The QuestionPro Process

1. Create a survey: QuestionPro gives you access to over 350 different templates that you can distribute, edit, or simply use to brainstorm new ideas. Customize the questions, question types, order, and colors to your exact needs.

2. Collect Answers: Once you've created your survey, you can distribute it by email, direct link, or embed HTML code in your website or blog. You can view a snapshot report of the current response in real time.

3. Analyze Survey Results: Once the survey is complete and the responses have been collected, you can customize and view detailed reports at your fingertips. You can apply filters, manipulate pivot tables, and view trend analysis.

Paired Comparison

Definition: Paired comparison scaling is a comparative scaling technique in which respondents are shown two objects at the same time and asked to choose one according to defined criteria. The resulting data is ordinal in nature.

Paired comparison scaling is often used when the stimulus objects are physical products. The comparison data obtained can be analyzed in two ways. First, researchers can calculate the percentage of respondents who prefer one object to another by summing the preference matrices of all respondents, dividing the total by the number of respondents, and multiplying by 100. In this way, all the stimulus objects can be evaluated at the same time.

Second, under the assumption of transitivity (if brand X is preferred to brand Y and brand Y is preferred to brand Z, then brand X is preferred to brand Z), paired comparison data can be converted into a rank order. To determine the order, researchers count the number of times each object is preferred by summing all the matrices.
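Both analyses can be sketched as follows. The sketch assumes one 0/1 matrix per respondent in which cell [i][j] is 1 when the row object i is preferred to the column object j (textbooks differ on whether rows or columns hold the preferred object); the three respondents' choices are hypothetical.

```python
OBJECTS = ["X", "Y", "Z"]

# One matrix per respondent: m[i][j] = 1 if row object i is preferred to column object j.
matrices = [
    [[0, 1, 1], [0, 0, 1], [0, 0, 0]],  # X > Y, X > Z, Y > Z
    [[0, 1, 1], [0, 0, 1], [0, 0, 0]],  # X > Y, X > Z, Y > Z
    [[0, 0, 1], [1, 0, 1], [0, 0, 0]],  # Y > X, X > Z, Y > Z
]

def percent_preference(matrices):
    """Method 1: sum the matrices, divide by the number of respondents, times 100."""
    n_resp, size = len(matrices), len(matrices[0])
    return [[100 * sum(m[i][j] for m in matrices) / n_resp for j in range(size)]
            for i in range(size)]

def rank_order(objects, matrices):
    """Method 2 (assumes transitivity): rank objects by total times preferred."""
    wins = {obj: sum(sum(m[i]) for m in matrices) for i, obj in enumerate(objects)}
    return sorted(objects, key=wins.get, reverse=True), wins

pct = percent_preference(matrices)
order, wins = rank_order(OBJECTS, matrices)
print(order, wins)  # ['X', 'Y', 'Z'] {'X': 5, 'Y': 4, 'Z': 0}
```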

The paired comparison method is effective when the number of objects is limited, because it requires a direct comparison of every pair: n objects require n(n - 1)/2 comparisons, so a large number of stimulus objects makes the comparisons cumbersome. Violations of the transitivity assumption can also occur, and the order in which the objects are presented can bias the results.
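The two practical limits mentioned above are easy to check in code: how fast the number of pairs grows, and whether one respondent's choices contain an intransitive cycle (X over Y, Y over Z, yet Z over X). Both helpers are illustrative sketches, not part of any standard tool.

```python
from itertools import combinations, permutations

def num_comparisons(n_objects):
    """Each unordered pair is compared once: n(n - 1) / 2 comparisons."""
    return n_objects * (n_objects - 1) // 2

def intransitive_triads(preferences):
    """Return triads (x, y, z) where x > y, y > z and yet z > x.

    `preferences` is one respondent's set of (winner, loser) pairs.
    """
    objects = sorted({obj for pair in preferences for obj in pair})
    cycles = []
    for triad in combinations(objects, 3):
        for x, y, z in permutations(triad):
            if (x, y) in preferences and (y, z) in preferences and (z, x) in preferences:
                cycles.append((x, y, z))
                break  # report each triad once
    return cycles

print([num_comparisons(n) for n in (3, 4, 10, 20)])  # [3, 6, 45, 190]
print(intransitive_triads({("X", "Y"), ("Y", "Z"), ("Z", "X")}))  # [('X', 'Y', 'Z')]
```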

Because all potential options are compared visually, the method gives an immediate overview of the right decision and makes it easy to compare the relative importance of the different criteria. Paired comparison is a very useful tool when no objective data is available on which to base a choice. The method is also known as pair comparison or pairwise comparison.

Priority

The paired comparison method can be used in a variety of situations, for instance when it is unclear which priorities are important, or when the criteria are subjective in nature. Paired comparison analysis is also useful when potential options compete with one another, because only the best solution is ultimately selected. When there are no conflicting requirements, it is easier to set priorities.

6 Steps

To apply the paired comparison method, it is useful to work on a large sheet of paper or a chart. Perform the following steps one by one to get the best results from your analysis.

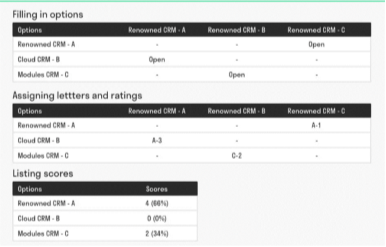

Step 1: Create a table

Create a table and enter the options to be compared against one another in the first row and first column (the row and column headers). Leave the other cells empty for now. With 4 options you have 4 rows, 4 columns, and 16 cells; with 3 options, 3 rows, 3 columns, and 9 cells; and so on.

Step 2: Assign letters

Assign each option a letter (A, B, C, etc.). The options are listed in the row and column headers, each with its letter, so that every option can be properly compared with every other.

Step 3: Block the cell

Block the cells in the table where an option would be compared with itself. You should also block the cells in one half of the table that duplicate the comparisons shown in the other half, because each comparison needs to be made only once.

Step 4: Compare options

In each remaining cell, compare the row option with the column option and write down the letter of the more important option. For instance, if A is compared with C and C is the more important option, C is written in that cell.

Step 5: Rate the difference

Rate the difference in importance, for instance on a scale from 0 (no difference) to 3 (major difference).

Step 6: List the results

Combine the results by adding up all the values for each option. You can convert these sums to percentages if you like.
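Steps 4 to 6 amount to a small tally, sketched below. Each comparison is recorded as the two options, the winner, and a 0-3 importance score; the function name and record layout are illustrative assumptions. The usage reproduces the scores of the CRM example that follows (A over B: 3, C over B: 2, A over C: 1).

```python
def score_options(options, comparisons):
    """Steps 4-6: add up each winner's importance scores and convert to percentages.

    `comparisons` holds (option_1, option_2, winner, score) with score 0-3.
    """
    totals = {option: 0 for option in options}
    for first, second, winner, score in comparisons:
        if winner not in (first, second):
            raise ValueError(f"{winner} is not part of the pair {first}-{second}")
        totals[winner] += score
    grand_total = sum(totals.values())
    percentages = {option: (100 * total / grand_total if grand_total else 0.0)
                   for option, total in totals.items()}
    return totals, percentages

# Scores from the CRM example: A beats B (3), C beats B (2), A beats C (1).
totals, percentages = score_options(
    ["A", "B", "C"],
    [("A", "B", "A", 3), ("B", "C", "C", 2), ("A", "C", "A", 1)],
)
print(totals)  # {'A': 4, 'B': 0, 'C': 2}
print({k: round(v, 1) for k, v in percentages.items()})  # {'A': 66.7, 'B': 0.0, 'C': 33.3}
```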

Actual paired comparison

To clarify how the paired comparison method works, here is an example. Consider a for-profit company that must choose between three different customer relationship management (CRM) systems. The first option is a well-known brand-name CRM system, the second is a CRM system connected to a cloud service, and the third is a CRM system made up of various modules. These options are assigned letters and placed in the row and column headers. In step 3, some cells are blocked, leaving three open cells in this example (see example).

Fill within the score

Now you can fill in the table. A rating system from 0 to 3 is used here. Once all the options have been compared, the winning option will emerge. A is compared with B, B with C, and C with A.

The first comparison found that A was more important than B, so the letter A was written in the open cell. The second comparison found that C was more important than B, so the letter C was written in its open cell. The final comparison is between A and C, and A is again the more important option, so the letter A is written in that open cell.

Now consider how large each difference in importance is. A is much more important than B, so a score of 3 is added after A. A is only slightly more important than C, so that score is 1. Finally, option C is moderately more important than B, so the score is 2. (See example.)

Evaluation

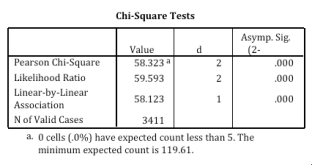

Now that all the cells are filled in, you can see the results. First, all the scores for each option are added up. See the image below.

Paired Comparison Table

Key takeaways:

Sampling – Steps, Types, Sample Size Decision

What is sampling?

Sampling is a technique for selecting individual members or a subset of a population and making statistical inferences from them to estimate the characteristics of the whole population. Market researchers use a wide variety of sampling methods so that they do not need to survey the entire population to gather practical insights.

It is also a time- and cost-effective method that forms the basis of any research design. Sampling techniques can be applied in research survey software to derive samples efficiently.

For example, if a pharmaceutical company wants to investigate the harmful side effects of a drug on a country's population, it is almost impossible to conduct a survey study of everyone. In this case, the researcher determines a sample of people from each demographic and then investigates them to provide feedback on drug behavior.
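Selecting a sample "from each demographic" is essentially stratified sampling. The sketch below draws the same number of people at random from each group (equal allocation; proportional allocation is a common alternative). The population, the age groups, and the function name are hypothetical.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(population, stratum_of, per_stratum, seed=0):
    """Draw up to `per_stratum` members at random from every stratum."""
    rng = random.Random(seed)  # fixed seed makes the draw reproducible
    strata = defaultdict(list)
    for member in population:
        strata[stratum_of(member)].append(member)
    sample = []
    for key in sorted(strata):  # deterministic order of strata
        members = strata[key]
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample

# Hypothetical population of 30 people in three age groups.
groups = ["18-34", "35-54", "55+"]
population = [(f"person-{i}", groups[i % 3]) for i in range(30)]

sample = stratified_sample(population, stratum_of=lambda p: p[1], per_stratum=4)
print(Counter(group for _, group in sample))
```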

Sampling Process Steps

The operational sampling process can be divided into seven steps as shown below.

1. Definition of target population:

Defining the population of interest for business research is the first step in the sampling process. In general, the target population is defined in terms of elements, sampling units, extent, and time frame. The definition should be in line with the purpose of the research. For example, if a kitchen equipment company wants to conduct a survey to identify the demand for microwave ovens, it may define the population as "all women over the age of 20 who cook (assuming men rarely cook)". However, this definition is too broad, as it would include almost every household in the country. It can therefore be refined further at the sampling unit level: all women over the age of 20 who cook and earn more than Rs. 20,000 per month. This reduces the size of the target population and focuses the research. The definition is refined further by specifying the area in which researchers need to sample, that is, households in Hyderabad.

A well-defined population reduces the likelihood of including respondents who do not fit the company's research objectives. For example, if the population were defined simply as all women over the age of 20, researchers could end up including the opinions of many women who cannot afford to buy a microwave oven.
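The refined definition from the microwave example can be written as an eligibility check that every candidate respondent must pass. The sketch below is hypothetical: the `Person` record, its field names, and the sample people are illustrative assumptions, and the thresholds simply mirror the criteria stated above.

```python
from dataclasses import dataclass


@dataclass
class Person:
    # Hypothetical record; the field names are illustrative assumptions.
    gender: str
    age: int
    cooks: bool
    monthly_income_rs: int
    city: str


def in_target_population(p: Person) -> bool:
    """Women over 20 who cook, earn more than Rs. 20,000 a month, and live in Hyderabad."""
    return (
        p.gender == "female"
        and p.age > 20
        and p.cooks
        and p.monthly_income_rs > 20_000
        and p.city == "Hyderabad"
    )


people = [
    Person("female", 34, True, 45_000, "Hyderabad"),
    Person("female", 34, True, 15_000, "Hyderabad"),  # excluded: income too low
    Person("female", 19, True, 30_000, "Hyderabad"),  # excluded: too young
    Person("female", 40, True, 50_000, "Chennai"),    # excluded: outside the area
]
target = [p for p in people if in_target_population(p)]
print(len(target))  # 1
```

Each added criterion narrows the population, which is exactly the effect the refinement steps above describe.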

2. Sampling frame specification:

Once the population definition is clear, researchers need to specify the sampling frame. A sampling frame is a list of elements from which a sample can be drawn. Continuing with the microwave example, the ideal sampling frame would be a database containing all households with monthly incomes above Rs. 20,000. In practice, however, it is difficult to obtain a complete sampling frame that exactly fits the requirements of a particular study. Researchers therefore generally use readily available sampling frames such as phone books and lists of credit card and mobile phone users. Various private companies offer databases compiled according to different demographic and economic variables. Maps and aerial photographs may also be used as sampling frames. In any case, the ideal sampling frame lists every element of the population exactly once.

Sampling frame errors arise when the sampling frame does not accurately represent the entire population or when some elements of the population are missing from it. Another drawback of a sampling frame is over-representation: a phone book, for example, over-represents names or households that have more than one connection.
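A household listed twice in a phone book is twice as likely to be drawn as a household listed once. A minimal sketch of detecting and removing this over-representation follows, assuming a hypothetical frame of `(household_id, phone_number)` pairs; real frames would need a reliable way to tell which listings belong to the same household.

```python
from collections import Counter

# Hypothetical phone-book extract: (household_id, phone_number).
# Household "H2" has two connections, so it appears twice.
phone_book = [
    ("H1", "040-1111"),
    ("H2", "040-2222"),
    ("H2", "040-3333"),
    ("H3", "040-4444"),
]

# Count how often each household is listed to detect over-representation.
listings = Counter(household for household, _ in phone_book)
over_represented = sorted(h for h, n in listings.items() if n > 1)

# Build a frame that lists each household exactly once (first listing kept).
frame = list(dict.fromkeys(household for household, _ in phone_book))

print(over_represented)  # ['H2']
print(frame)             # ['H1', 'H2', 'H3']
```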

3. Specifying the sampling unit:

A sampling unit is the basic unit containing a single element or a group of elements of the population to be sampled. In the microwave example, the household is the sampling unit, and all women over the age of 20 who live in that household are the sampling elements. If the exact target audience of a business survey can be identified, every individual element can serve as a sampling unit; this is a primary sampling unit. However, a more convenient and effective way to sample is to select households as the sampling unit and interview all women over the age of 20 who cook in each selected household. This is a secondary sampling unit.
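The household-as-sampling-unit approach can be sketched in two steps: draw households at random, then interview every eligible woman in each chosen household. The household data, the `sample_households` helper, and the use of simple random selection for the households are all illustrative assumptions.

```python
import random

# Hypothetical frame: household ID -> residents as (name, gender, age, cooks).
households = {
    "H1": [("Asha", "female", 45, True), ("Meena", "female", 19, True)],
    "H2": [("Ravi", "male", 50, False), ("Lata", "female", 48, True)],
    "H3": [("Sita", "female", 30, True), ("Gita", "female", 27, True)],
    "H4": [("Kiran", "male", 22, False)],
}


def sample_households(frame, n_households, seed=None):
    """Randomly select households (the sampling units), then interview every
    eligible woman living in each selected household (the sampling elements)."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(frame), n_households)
    respondents = [
        (household, name)
        for household in chosen
        for name, gender, age, cooks in frame[household]
        if gender == "female" and age > 20 and cooks
    ]
    return chosen, respondents


chosen, respondents = sample_households(households, 2, seed=1)
print(chosen, respondents)
```

Meena (age 19) and the men are skipped even when their households are chosen, because the eligibility rule is applied within each sampling unit.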

4. Sampling method selection:

The sampling method specifies how the sampling units are selected. The choice of sampling method is influenced by the purpose of the business survey, the availability of resources, time constraints, and the nature of the problem being investigated. All sampling methods can be grouped into two broad categories: probability sampling and non-probability sampling.

5. Determining sample size:

Sample size plays an important role in the sampling process. The techniques used to determine sample size can be classified in various ways. The most important distinctions are whether a technique uses fixed or sequential sampling, and whether its logic is based on traditional (classical) or Bayesian methods. In non-probability sampling procedures, budget allocation, heuristics, the number of subgroups to be analyzed, the importance of the decision, the number of variables, the nature of the analysis, incidence rates, and completion rates play a major role in determining sample size. For probability sampling, by contrast, a formula is used to calculate the sample size once the acceptable error level and the confidence (reliability) level have been specified. The various techniques used to determine sample size are described in detail at the end of this chapter.
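As an illustration of the formula-based approach for probability sampling, the sketch below uses the widely taught formula for estimating a population proportion under simple random sampling, n0 = z^2 p(1 - p) / e^2, with an optional finite population correction. This is an assumption for illustration, not necessarily the formula given at the end of the chapter; p = 0.5 is the conservative choice when the true proportion is unknown, and the confidence level plays the role of the "reliability level".

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(margin_of_error, confidence=0.95, p=0.5, population=None):
    """Sample size for estimating a proportion under simple random sampling.

    n0 = z^2 * p * (1 - p) / e^2, optionally adjusted with the finite
    population correction n = n0 / (1 + (n0 - 1) / N).
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided critical value
    n = z ** 2 * p * (1 - p) / margin_of_error ** 2
    if population is not None:
        n = n / (1 + (n - 1) / population)  # finite population correction
    return math.ceil(n)  # round up so the error bound is still met


print(sample_size_for_proportion(0.05))                   # 385
print(sample_size_for_proportion(0.05, population=5000))  # 357
```

A 5% margin of error at 95% confidence needs about 385 respondents from a very large population; for a population of 5,000 the correction lowers this to 357.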

6. Specifying the sampling plan:

This step sets out the specifications and decisions for implementing the research process. Suppose a city block is the sampling unit and a household is the sampling element. The sampling plan then describes how homes with the specified characteristics are identified. It addresses questions such as: How should the interviewer draw a systematic sample of houses? What should the interviewer do when a house is vacant? What is the procedure for re-contacting respondents who were absent? These and many other questions need to be answered for the research process to run smoothly. The answers serve as guidelines that assist researchers at every step of the process. Because interviewers and their colleagues are mostly field workers, a proper sampling plan makes their work easier and removes the need to go back to their supervisors whenever they face operational problems.

7. Sample selection:

This is the final step in the sampling process, in which the actual sample elements are selected. At this stage, the interviewer should follow the rules laid down to facilitate the business investigation. In this step, the interviewer implements the sampling plan and selects the samples needed for the study.

Types of Sampling: Sampling Methods

There are two types of sampling in market research: probability sampling and non-probability sampling. Let's take a closer look at these two sampling methods.

Types of probability sampling, with examples:

Probability sampling is a sampling technique in which researchers use a method based on probability theory to select samples from a larger population. This method considers all members of the population and forms the sample through a fixed, random selection process.

For example, in a population of 1,000 members, every member has the same chance of being selected: 1/1000 on any single random draw. Because every member has a known, fair chance of being included in the sample, probability sampling guards against selection bias.

There are four main types of probability sampling methods:

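1. Simple random sampling: Simple random sampling is the most straightforward probability sampling method. Every member of the population has an equal chance of being selected.

For example, if the HR team of an organization with 500 employees decides to select employees for team-building activities, it might write each employee's name on a chit and draw chits from a bowl. In this case, each of the 500 employees has an equal chance of being selected.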
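Drawing chits from a bowl is simple random sampling without replacement, which Python's standard `random.sample` performs directly. In this sketch the employee IDs 1 to 500 and the choice of 10 employees are hypothetical; the fixed seed only makes the draw reproducible.

```python
import random

# Hypothetical staff list: employee IDs 1 to 500.
employees = list(range(1, 501))


def draw_chits(population, k, seed=None):
    """Simple random sampling without replacement, like drawing k chits from a bowl."""
    rng = random.Random(seed)
    return rng.sample(population, k)


selected = draw_chits(employees, 10, seed=42)
print(selected)
```

Because sampling is without replacement, no employee can be drawn twice, just as a chit is not returned to the bowl.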

2. Cluster sampling: Cluster sampling is a method in which the researcher divides the entire population into sections, or clusters, that each represent the population. Clusters are usually natural groupings, most often by location, and the researcher randomly selects which clusters to include in the sample. This makes it easy for survey authors to draw effective inferences from the feedback.

For example, if the US government wants to estimate the number of immigrants living in the United States, it can divide the population into clusters by state, such as California, Texas, Florida, Massachusetts, Colorado, and Hawaii, and survey a random selection of those states. Because the results are organized by state, the survey can provide insightful immigration data.
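A minimal sketch of one-stage cluster sampling follows: whole clusters are chosen at random and every member of each chosen cluster is kept. The state clusters and their member IDs are hypothetical placeholders, and two-stage designs (sampling members within chosen clusters) are not shown.

```python
import random

# Hypothetical population grouped into clusters by state.
population_by_state = {
    "California": ["ca1", "ca2", "ca3"],
    "Texas": ["tx1", "tx2"],
    "Florida": ["fl1", "fl2", "fl3"],
    "Massachusetts": ["ma1"],
    "Colorado": ["co1", "co2"],
    "Hawaii": ["hi1"],
}


def cluster_sample(clusters, n_clusters, seed=None):
    """One-stage cluster sampling: randomly choose whole clusters, keep every member."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(clusters), n_clusters)
    return {name: list(clusters[name]) for name in chosen}


sample = cluster_sample(population_by_state, 2, seed=7)
print(sample)
```

The randomness applies to the clusters, not to individuals, which is what distinguishes this design from stratified sampling.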

3. Systematic sampling: Researchers use systematic sampling to select members of the population at regular intervals. The researcher chooses a starting point and a sampling interval, and selection repeats at that interval. Because the interval is fixed in advance, this is one of the least time-consuming sampling methods.

For example, a researcher plans to collect a sample of 500 people from a population of 5,000. They number each element of the population from 1 to 5,000 and, after a random start, select every 10th person as part of the sample (sampling interval = population size / sample size = 5000 / 500 = 10).
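The interval arithmetic above translates directly into code. This sketch assumes the random start is drawn from the first interval, which is the usual practice; the helper name `systematic_sample` is illustrative.

```python
import random


def systematic_sample(population, n, seed=None):
    """Select every k-th element after a random start, where k = N // n."""
    k = len(population) // n                    # sampling interval: 5000 // 500 = 10
    start = random.Random(seed).randrange(k)    # random start within the first interval
    return population[start::k][:n]             # trim in case N is not a multiple of n


population = list(range(1, 5001))  # elements numbered 1 to 5000
sample = systematic_sample(population, 500, seed=3)
print(sample[:5])
```

Every selected number is exactly 10 apart from the next, and only the starting point is random.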

4. Stratified random sampling: Stratified random sampling is a method in which the researcher divides the population into smaller groups (strata) that do not overlap but together represent the entire population. During sampling, the researcher draws a separate random sample from each group.

For example, a researcher who wants to analyze the characteristics of people in different annual income brackets creates strata (groups) according to annual income, such as up to $20,000; $21,000 to $30,000; $31,000 to $40,000; and $41,000 and above. By doing this, researchers can draw conclusions about the characteristics of people in each income group. Marketers can then decide which income groups to target and which to exclude, and create a roadmap that yields fruitful results.
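A sketch of stratified random sampling with proportional allocation follows, where each stratum's share of the sample matches its share of the population. Proportional allocation is one common choice (equal or optimal allocation are alternatives), and the stratum sizes below are hypothetical.

```python
import random


def stratified_sample(strata, total_n, seed=None):
    """Proportional stratified random sampling: draw a separate simple random
    sample from each stratum, sized by that stratum's share of the population."""
    rng = random.Random(seed)
    population_size = sum(len(members) for members in strata.values())
    sample = {}
    for name, members in strata.items():
        # Proportional allocation; rounding can make the total differ slightly from total_n.
        n_h = round(total_n * len(members) / population_size)
        sample[name] = rng.sample(members, n_h)
    return sample


# Hypothetical stratum sizes for the income brackets in the text.
strata = {
    "up to $20,000": [f"low-{i}" for i in range(400)],
    "$21,000 to $30,000": [f"mid-{i}" for i in range(300)],
    "$31,000 to $40,000": [f"upper-{i}" for i in range(200)],
    "$41,000 and above": [f"high-{i}" for i in range(100)],
}
sample = stratified_sample(strata, 50, seed=5)
print({name: len(chosen) for name, chosen in sample.items()})
```

With 1,000 people split 400/300/200/100 and a total sample of 50, the brackets receive 20, 15, 10, and 5 respondents respectively.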

Types of non-probability sampling, with examples

Non-probability sampling is a sampling method in which feedback is collected from a sample chosen by the researcher's or statistician's judgment rather than by a fixed selection process. In most cases, the results of studies that use non-probability samples may be biased and may not represent the target population of interest. However, there are situations in which non-probability sampling is far more useful than probability sampling, such as the preliminary stage of a survey or when the cost of conducting the survey is constrained.

The following four types of non-probability sampling illustrate the purpose of this method.

Convenience sampling: This method relies on easy access to subjects, such as customers in shopping malls or passers-by on a busy street. It is called convenience (or expedient) sampling because subjects are easy for researchers to reach and contact. Researchers have little control over which sample elements are selected; selection is based purely on proximity rather than representativeness. This method is used when time, cost, or other resources for collecting feedback are limited, such as in the early stages of research.

For example, start-ups and NGOs often use convenience sampling at malls, distributing leaflets to promote upcoming events or causes. They do this by standing at the entrance of the mall and handing pamphlets to whoever passes by.

Judgmental or purposeful sampling: Judgmental or purposeful samples are formed at the researcher's discretion. The researcher considers only the purpose of the research and their understanding of the target audience. For example, suppose a researcher wants to understand the thought process of people who are interested in studying for a master's degree. The selection criterion is the question "Are you interested in getting a master's degree with ...?" Those who answer "No" are excluded from the sample.

Snowball sampling: Snowball sampling is a method that researchers apply when subjects are difficult to locate. For example, surveying homeless people or illegal immigrants is very difficult. In such cases, researchers can use snowball sampling: they locate and interview a few initial subjects, who then refer others from the same group, and the researchers derive results from the growing sample. Researchers also use this method when the topic is very sensitive and not openly discussed, for example, a survey to collect information about HIV/AIDS. Few affected people will answer questions readily. Nevertheless, researchers can reach them and gather information through people they know or through volunteers associated with the cause.
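The referral chain in snowball sampling behaves like a breadth-first walk through a network of acquaintances: seed respondents are interviewed first, then the people they refer, and so on. The referral network, the `snowball_sample` helper, and the stopping rule (a target sample size) are hypothetical illustrations.

```python
from collections import deque


def snowball_sample(seeds, referrals, target_size):
    """Start from seed respondents and follow their referrals wave by wave
    until target_size people have been recruited or referrals run out."""
    recruited = []
    seen = set(seeds)
    queue = deque(seeds)
    while queue and len(recruited) < target_size:
        person = queue.popleft()
        recruited.append(person)               # interview this person
        for contact in referrals.get(person, []):
            if contact not in seen:            # never recruit anyone twice
                seen.add(contact)
                queue.append(contact)
    return recruited


# Hypothetical referral network: whom each respondent is willing to refer.
referrals = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "E": ["F"],
}
print(snowball_sample(["A"], referrals, target_size=5))  # ['A', 'B', 'C', 'D', 'E']
```

The sample can only reach people connected to the seeds, which is why snowball samples may not represent the wider population.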

Quota sampling: In quota sampling, members are selected according to preset criteria. The sample is built up to match specific attributes, so the resulting sample has the same proportions of those attributes as the entire population. It is a quick way to collect samples.
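A minimal sketch of quota filling follows: respondents are accepted in the order they arrive until each category's quota is full. The 3:2 gender quota and the arrival list are hypothetical; note that who fills a quota depends on arrival order rather than on a random draw, which is what makes this a non-probability method.

```python
def quota_sample(arrivals, quotas, attribute):
    """Accept respondents in arrival order until each category's quota is filled."""
    remaining = dict(quotas)
    sample = []
    for person in arrivals:
        category = person[attribute]
        if remaining.get(category, 0) > 0:
            sample.append(person)
            remaining[category] -= 1
        if not any(remaining.values()):
            break  # every quota is full
    return sample


# Hypothetical quotas mirroring a population that is 60% female and 40% male.
quotas = {"female": 3, "male": 2}
arrivals = [
    {"id": 1, "gender": "male"},
    {"id": 2, "gender": "male"},
    {"id": 3, "gender": "male"},    # skipped: male quota already full
    {"id": 4, "gender": "female"},
    {"id": 5, "gender": "female"},
    {"id": 6, "gender": "female"},
    {"id": 7, "gender": "female"},  # never reached: all quotas full
]
sample = quota_sample(arrivals, quotas, "gender")
print([p["id"] for p in sample])  # [1, 2, 4, 5, 6]
```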

How do you determine the type of sampling to use?

In any study, choosing the right sampling method is essential to achieving the research goals. The effectiveness of sampling depends on many factors, and expert researchers follow several steps to determine the best sampling method.

Secondary Data

Secondary data is data that has already been collected and documented by someone else for another purpose, which an investigator then reuses for their own study.

Examples include using census data to obtain information about the age and gender structure of a population, using hospital records to determine community morbidity and mortality patterns, using an organization's records to determine its activities, and drawing historical information from articles, magazines, books, and periodicals.

Secondary Data Sources:

Secondary data sources include books, private sources, journals, newspapers, websites, government records, and more. Secondary data is generally more readily available than primary data, and using these sources requires relatively little research effort and few human resources.

With the advent of electronic media and the Internet, access to secondary data sources has become easier. Some of these sources are highlighted below.

Books

Books are one of the most traditional sources of information. Today, there are books on almost every subject you can think of. When doing research, all you have to do is find a book on the subject being studied, choosing from the book repositories available in your area. Carefully selected books are an authentic source of data and can help you prepare a literature review.

Published sources

There are different published sources available for different research topics. The authenticity of the data obtained from these sources depends primarily on the author and the publisher.

Published sources may be available in print, in digital form, or both. They may be paid or free, depending on the decisions of the author and publisher.

Unpublished personal sources

Compared to published sources, these are not readily available and may be difficult to access. They are available only if the researcher who holds them shares them with another researcher, who in turn is not permitted to share them with a third party.