Unit - 4

Introduction to Computer Organization

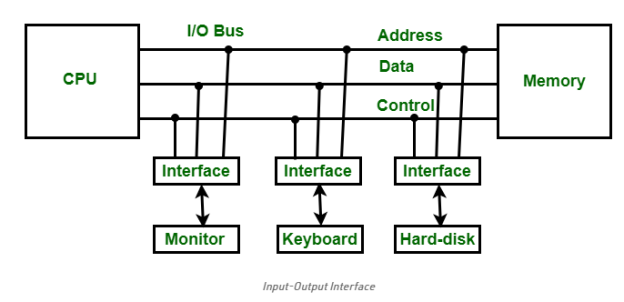

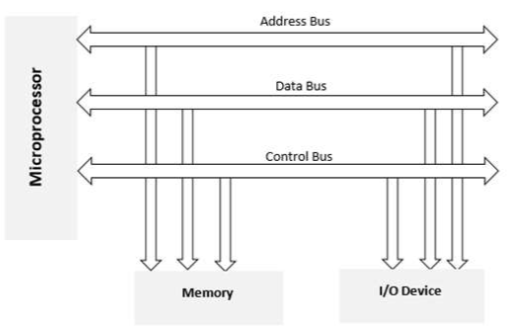

4.1.1 Introduction to Input-Output Interface

The Input-Output Interface (I/O) is a means for moving data between internal storage devices, such as memory, and external peripheral devices. A peripheral device, often known as an input-output device, is a device that provides input and output for a computer. Consider the following scenario: Input devices, such as a keyboard and mouse, offer input to the computer, and output devices, such as a monitor and printer, offer output to the computer. In addition to external hard drives, several peripheral devices that can provide both input and output are also available.

The sole purpose of peripheral devices in a microcomputer-based system is to offer unique communication lines for connecting them with the CPU. Communication links are required to resolve disparities between peripheral devices and the CPU.

The following are the main differences:

1. Peripheral devices are electromagnetic and electromechanical in nature. The CPU is electrical in nature. The modes of functioning of peripheral devices and the CPU differ significantly.

2. Because peripheral devices communicate data at a slower rate than the CPU, there is also a synchronisation mechanism.

3. Data code and formats in peripheral devices differ from those in the CPU and memory

4. Peripheral device operating modes differ, and each can be managed to avoid interfering with the operation of other peripheral devices attached to the CPU.

Additional hardware is required to resolve disparities between the CPU and peripheral devices, as well as to supervise and synchronise all input and output devices.

Functions of Input-Output Interface:

1. It's utilised to keep the CPU's operating speed in sync with the input-output devices.

2. It chooses an input-output device that is appropriate for the input-output device's interpretation.

3. It can send out signals such as control and timing signals.

4. Data buffering is possible through the data bus in this case.

5. There are several types of error detectors.

6. It converts serial data to parallel data and the other way around.

7. It can also convert digital data to analogue signals and the other way around.

4.1.2 Computer Architecture: Input/Output Organisation

Input/Output Subsystem

An efficient way of communication between the central system and the outside environment is provided by a computer's I/O subsystem. It is in charge of the computer system's entire input-output activities.

Peripheral Devices

Peripheral devices are input or output devices that are connected to a computer. These devices, which are considered to be part of a computer system, are designed to read information into or out of the memory unit in response to commands from the CPU. Peripherals are another name for these gadgets.

Consider the following scenario: Keyboards, monitors, and printers are examples of common peripheral devices.

Peripherals are divided into three categories:

1. Input peripherals: These devices allow users to enter data from the outside world into the computer. For instance, a keyboard, a mouse, and so on.

2. Output peripherals: These devices allow data to be sent from the computer to the outside world. For instance, a printer, a monitor, and so on.

3. Input-Output Peripherals: Allows both input (from the outside world to the computer) and output (from the computer to the outside world) (from computer to the outside world). For instance, a touch screen.

Interfaces

A shared boundary between two different components of a computer system that can be used to connect two or more components to the system for communication purposes is known as an interface.

There are two different types of user interfaces:

1. Inteface of the CPU

2. Interface to I/O

Let's take a closer look at the I/O Interface.

Input-Output Interface

For communicating with the CPU, peripherals connected to a computer require particular communication interfaces. In a computer system, there are unique hardware components that govern or regulate input-output transfers between the CPU and peripherals. Because they offer communication links between the CPU bus and peripherals, these components are known as input-output interface units. They provide a means of exchanging data between the internal system and the input-output devices.

4.1.3 Modes of I/O Data Transfer

Data transfer between the central unit and I/O devices can be accomplished in one of three ways, as shown below:

1. Interrupt Initiated I/O

2. Programmed I/O

3. Memory Direct Access

Programmed I/O

I/O instructions written in a computer programme result in programmed I/O instructions. The program's instruction triggers the transfer of each data item.

Typically, the software is in charge of data transport between the CPU and peripherals. Transferring data with programmed I/O necessitates the CPU's ongoing supervision of the peripherals.

Interrupt Initiated I/O

The CPU remains in the programme loop in the programmed I/O approach until the I/O unit indicates that it is ready for data transfer. This is a time-consuming procedure because it wastes the processor's time.

Interrupt started I/O can be used to solve this problem. The interface creates an interrupt when it determines that the peripheral is ready for data transfer. When the CPU receives an interrupt signal, it stops what it's doing and handles the I/O transfer before returning to its prior processing activity.

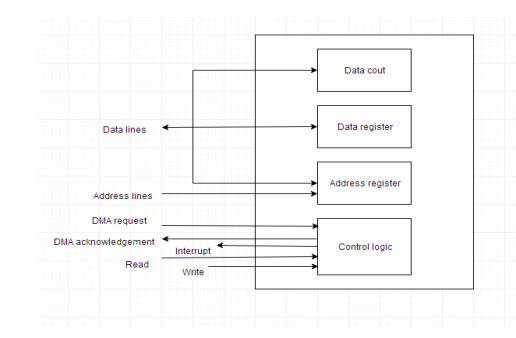

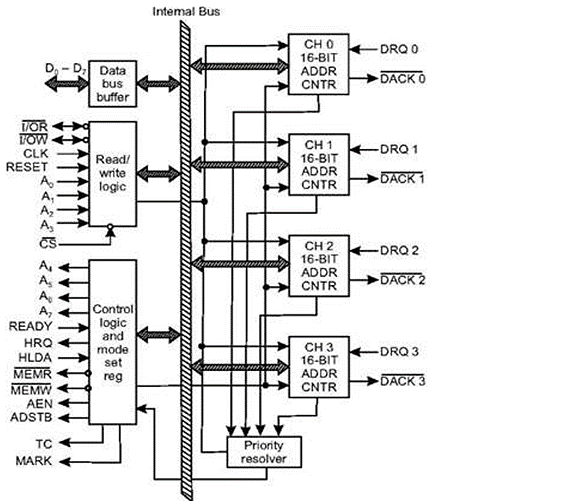

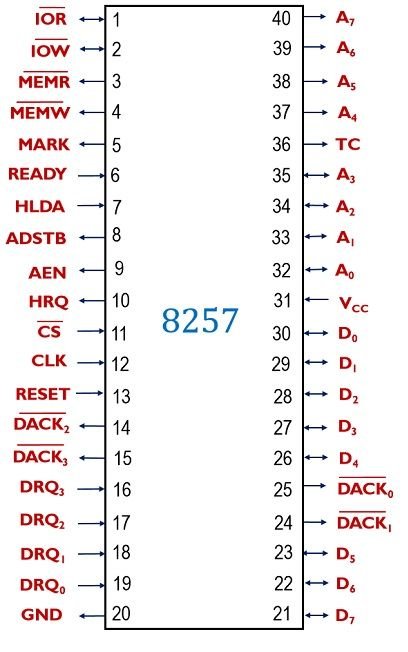

Direct Memory Access

The speed of transmission would be improved by removing the CPU from the path and allowing the peripheral device to operate the memory buses directly. DMA is the name for this method.

The interface transfers data to and from the memory via the memory bus in this case. A DMA controller is responsible for data transport between peripherals and the memory unit.

DMA is used by many hardware systems, including disc drive controllers, graphics cards, network cards, and sound cards, among others. In multicore CPUs, it's also used for intra-chip data transport. In DMA, the CPU would start the transfer, perform other tasks while it was running, and then get an interrupt from the DMA controller when the transfer was finished.

4.1.4 What is the difference between an input and output device?

An input device transmits data to a computer system, which is then processed, and an output device reproduces or displays the results. Input devices only allow data to be entered into a computer, whereas output devices only receive data from another device.

Because they can either accept data input from a human or emit data created by a computer, most devices are either input or output devices. However, some devices, known as I/O devices (input/output devices), can accept input and show output.

A keyboard, for example, sends electrical signals that are received as input, as illustrated in the top half of the image. The computer then interprets the signals and displays them on the monitor as text or graphics. The computer delivers or outputs data to a printer in the lower half of the image. The data is then printed on a piece of paper, which is also referred to as output.

Input/output (I/O) devices

An input/output device can take data from people or another device (input) and transfer it to another device (output) (output). The following are some examples of input/output devices.

1. CD-RW and DVD-RW drives - These drives receive data from a computer (input) and copy it on a readable CD or DVD. The drive may also send data from a CD or DVD (output) to a computer.

2. USB flash drive - A USB flash drive receives and saves data from a computer (input). The drive can also deliver data to a computer or other device (output).

4.2.1 Random Access Memory (RAM) and Read Only Memory (ROM)

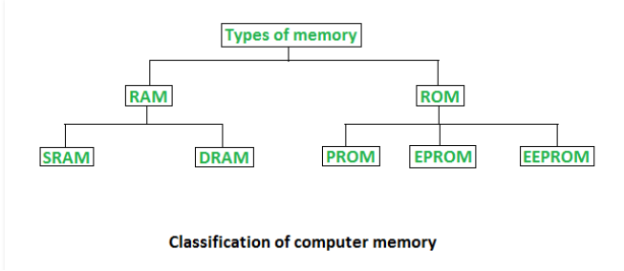

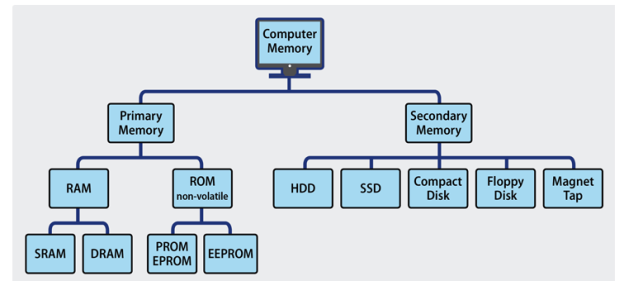

Memory is the most important component of a computer system since it allows it to accomplish simple tasks. There are two types of computer memory: primary memory (RAM and ROM) and secondary memory (hard drive,CD,etc.). Read Only Memory (ROM) is primary-non-volatile memory, while Random Access Memory (RAM) is primary-volatile memory.

1. Random Access Memory (RAM) –

The programmes and data that the CPU requires throughout the execution of a programme are kept in this memory, which is also known as read write memory, main memory, or primary memory.

Because the data is lost when the power is switched off, it is a volatile memory.

SRAM (Static Random Access Memory) and DRAM (Dynamic Random Access Memory) are the two forms of RAM (Dynamic Random Access Memory).

DRAM | SRAM |

1.Constructed of tiny capacitors that leak electricity | 1.Constructed of circuits similar to D flip-flops. |

2.Requires a recharge every few milliseconds to maintain its data. | 2.Holds its contents as long as power is available. |

3.Inexpensive | 3.Expensive

|

4.Slower than SRAM | 4.Faster than DRAM |

5.Can store many bits per chip | 5.Can not store many bits per chip |

6.Uses less power | 6.Uses more power |

7.Generates less heat | 7.Generates more heat |

8.Used for main memory | 8.Used for cache |

2. Read Only Memory (ROM) –

Contains critical information required to operate the system, such as the programme required to start the computer.

It isn't flammable.

It keeps all of its data at all times.

Used in embedded systems or in situations where the programming does not need to be changed.

Calculators and other peripheral devices use it.

ROM is divided into four types: ROM, PROM, EPROM, and EEPROM.

Types of Read Only Memory (ROM) –

- PROM (Programmable read-only memory) – It has the ability to be programmed by the user. The data and instructions in it cannot be modified once they have been programmed.

- EPROM (Erasable Programmable read only memory) – It is reprogrammable. Expose it to ultra violet light to wipe the data on it. Delete all prior data to reprogramme it.

- EEPROM (Electrically erasable programmable read only memory) – The data can be erased without the use of ultra violet light by creating an electric field. Only a piece of the chip can be erased.

Difference between RAM and ROM

RAM | ROM |

1.Temporary storage | 1.Permanent storage |

2.Store data in MBs | 2.Store data in GBs. |

3.Volatile | 3.Non-volatile |

4.Used in normal operations | 4.Used for startup process of computer |

5.Writing data is faster | 5.Writing data is slower |

4.2.2 What is the Difference Between RAM and ROM?

Your computer has both RAM and ROM, which stands for random access memory and read-only memory, respectively.

RAM is a type of volatile memory that stores the files you're working on for a short period of time. Non-volatile memory (ROM) is a type of memory that stores instructions for your computer indefinitely. Learn more about RAM.

Volatile and non-volatile memory

RAM is volatile memory, which means that when you restart or shut down your computer, the information temporarily held in the module is deleted. Because the data is stored electrically on transistors, it vanishes when there is no electric current. When you request a file or piece of information, it is either fetched from the computer's hard drive or from the internet. Because the information is saved in RAM, it is instantaneously available when you transition from one programme or page to another. The memory is wiped when the machine is turned off until the process is restarted. Users can quickly update, upgrade, or extend volatile memory.

Find out if your computer needs more memory.

Non-volatile memory (ROM) is information that is permanently stored on a chip. The memory does not rely on an electric current to save data; instead, binary code is used to write data to individual cells. Non-volatile memory is used for elements of the computer that do not change, such as the software's initial boot-up phase or the firmware instructions that allow your printer to function. The ROM is unaffected by turning off the machine. Users are unable to alter non-volatile memory.

4.2.3 RAM vs ROM: What's the Difference?

What is RAM?

RAM stands for Random Access Memory in its full form. When the power to the PC or laptop is turned off, the information saved in this type of memory is lost. The BIOS can be used to examine the information saved in RAM. It is also known as the computer system's primary memory, temporary memory, cache memory, or volatile memory.

What is ROM?

A Read-Only Memory (ROM) is the full version of the term. It is a form of memory that lasts forever. When the power supply is turned off, the content is not lost. The information in ROM is determined by the computer manufacturer, and it is permanently recorded at the time of production, and it cannot be altered by the user.

Various memory storage devices are compared.

Here's a comparison of several storage devices.

Storage | Speed | Cost | Capacity | Permanent |

Register | Fastest | Highest | Lowest | No |

Hard Disk | Moderate | Very Low | Very High | Yes |

RAM | Very fast | High | Low to Moderate | No |

ROM | Very Fast | High | Very Low | Yes |

CD-ROM | Moderate | Very Low | High | Yes |

4.3.1 Memory

Any physical device capable of temporarily storing information, such as RAM (random access memory), or permanently storing information, such as ROM (read only memory), is referred to as computer memory (read-only memory). Integrated circuits (ICs) are utilised in memory devices, which are used by operating systems, software, and hardware.

What does computer memory look like?

A 512 MB DIMM computer memory module is shown below. This memory module plugs into a computer motherboard's memory slot.

What happens to memory when the computer is turned off?

Because RAM is volatile memory, anything saved in RAM is lost when the computer loses power, as previously stated. For example, a document is stored in RAM while it is being worked on. It would be lost if it was stored to non-volatile memory (e.g., the hard drive) if the computer lost power.

Memory is not disk storage

New computer users frequently become perplexed as to which elements of the machine are memory. Although both the hard drive and RAM are memory, RAM should be referred to as "memory" or "primary memory," while the hard drive should be referred to as "storage" or "secondary storage."

When someone asks how much memory your computer has, the answer is usually between 1 and 16 GB of RAM and several hundred gigabytes, if not a terabyte, of hard drive storage. In other words, your hard drive space is always greater than your RAM.

How is memory used?

When you open a programme, such as your Internet browser, it is loaded into RAM from your hard disc. This procedure enables the programme to interface with the processor at a faster rate. Anything you save to your computer, such as a photo or a video, is stored on your hard drive.

Why is memory important or needed for a computer?

Each component of a computer runs at a variable pace, and computer memory allows your computer to retrieve data rapidly. A computer would be substantially slower if the CPU had to wait for a secondary storage device, such as a hard disc drive.

4.3.2 Computer memory

Main memory

Electromechanical switches, or relays (see computers: The First Computer), and electron tubes were the first memory devices (see computers: The first stored-program machines). As main memory, the first stored-program computers utilised ultrasonic waves in mercury tubes or charges in special electron tubes in the late 1940s. These were the world's first random-access memories (RAM). In contrast to serial access memory, such as magnetic tape, RAM comprises storage cells that may be accessed directly for read and write operations, whereas serial access memory, such as magnetic tape, requires accessing each cell in sequence until the required cell is located.

Magnetic drum memory

In the 1950s, magnetic drums were utilised for both primary and auxiliary memory, with fixed read/write heads for each of several tracks on the outside surface of a revolving cylinder coated with a ferromagnetic substance, albeit data access was serial.

Magnetic core memory

Magnetic core memory, an arrangement of microscopic ferrite cores on a wire grid through which current may be routed to change individual core orientations, was created around 1952. Core memory was the primary form of main memory until it was supplanted by semiconductor memory in the late 1960s due to RAM's inherent advantages.

Semiconductor memory

Semiconductor memory is divided into two types. Flip-flops, a bistable circuit made up of four to six transistors, make up static RAM (SRAM). Once a bit is stored in a flip-flop, it remains there until the opposite value is stored in it. SRAM provides quick data access, but it is physically huge. It's mostly utilised in a computer's central processing unit (CPU) for small pieces of memory called registers, as well as for fast "cache" memory. Instead of storing each bit in a flip-flop, dynamic RAM (DRAM) stores each bit in an electrical capacitor, which is charged or discharged using a transistor as a switch. A DRAM storage cell is smaller than an SRAM storage cell because it contains fewer electrical components. However, accessing its value is more time consuming, and because capacitors eventually leak charge, stored values must be recharged 50 times per second. Nonetheless, DRAM is commonly utilised for main memory since it can carry multiple times as much DRAM as SRAM on the same size chip.

Addresses are assigned to storage cells in RAM. It's customary to divide RAM into 8- to 64-bit "words," or 1 to 8 bytes (8 bits = 1 byte). The size of a word refers to the maximum number of bits that can be transmitted between main memory and the CPU at one time. Every word and, in most cases, every byte has a unique address. Additional decoding circuits are required on a memory chip to choose the set of storage cells at a given address and either save or retrieve the value stored there. A modern computer's primary memory is made up of a number of memory chips, each of which can carry many megabytes (millions of bytes), with additional addressing hardware selecting the correct chip for each address. DRAM also necessitates circuits to detect and refresh its stored values on a regular basis.

It takes longer for main memories to obtain data than it does for CPUs to work on them. DRAM memory access, for example, typically takes 20 to 80 nanoseconds (billionths of a second), but CPU arithmetic operations can take as little as a nanosecond. This inequality can be addressed in a number of ways. CPUs have a limited amount of registers and need very fast SRAM to store current instructions and data. Cache memory is a bigger amount of quick SRAM on the CPU chip (up to several gigabytes). Data and instructions from main memory are copied into the cache, and because programmes frequently exhibit "locality of reference"—that is, they repeatedly execute the same instruction sequence and operate on sets of related data—memory references to the fast cache can be made once values are copied into it from main memory.

Decoding the address to select the proper storage cells takes up a lot of DRAM access time. Because of the locality of reference property, a set of memory addresses will be frequently accessed, and fast DRAM is designed to speed up access to successive addresses following the first. SDRAM (synchronous dynamic random access memory) and EDO (extended data output) are two examples of rapid memory.

Unlike SRAM and DRAM, nonvolatile semiconductor memories do not lose their contents when the power is switched off. Read-only memory (ROM), for example, is not rewritable once it has been produced or written. A transistor is present in each memory cell of a ROM chip for a 1 bit and none for a 0 bit. The bootstrap software that starts a computer and loads its operating system, or the BIOS (basic input/output system) that addresses external devices in a personal computer, are examples of programmes that are fundamental aspects of a computer's function (PC).

EPROM (erasable programmable ROM), EAROM (electrically alterable ROM), and flash memory are examples of rewritable nonvolatile memories, however rewriting takes significantly longer than reading. They are thus utilised as special-purpose memories where writing is rarely required—for example, they can be altered to fix faults or update functionality if utilised for the BIOS.

Auxiliary memory

Auxiliary memory units are one type of computer peripheral. They give up faster access speeds in exchange for more storage capacity and data consistency. Auxiliary memory stores programmes and data for later use It is used to save inactive programmes and archive data because it is nonvolatile (like ROM). Punched paper tape, punched cards, and magnetic drums were all early forms of supplementary storage. Magnetic discs, magnetic tapes, and optical discs have been the most frequent forms of supplementary storage since the 1980s. Auxiliary memory units are one type of computer peripheral. They give up faster access speeds in exchange for more storage capacity and data consistency. Auxiliary memory stores programmes and data for later use It is used to save inactive programmes and archive data because it is nonvolatile (like ROM). Punched paper tape, punched cards, and magnetic drums were all early forms of supplementary storage. Magnetic discs, magnetic tapes, and optical discs have been the most frequent forms of supplementary storage since the 1980s.

Magnetic disk drives

A magnetic substance, such as iron oxide, is used to cover magnetic discs. Hard discs, which are made of rigid aluminium or glass, and removable diskettes, which are made of flexible plastic, are the two sorts. The first magnetic hard drive (HD) was developed by IBM in 1956, and it consisted of 50 21-inch (53-cm) discs with a storage capacity of 5 megabytes. By the 1990s, the standard HD diameter for PCs had reduced to 3.5 inches (about 8.9 cm), with storage capacity exceeding 100 gigabytes (billions of bytes); the usual HD size for portable PCs (“laptops”) had decreased to 2.5 inches (about 8.9 cm) (about 6.4 cm). Diskettes have shrunk from 8 inches (approximately 20 cm) to the current norm of 3.5 inches since Alan Shugart invented the floppy disc drive (FDD) at IBM in 1967. (about 8.9 cm). Since the introduction of optical disc drives in the 1990s, FDDs have become outdated due to their limited capacity (usually less than two megabytes).

The entire assembly is known as a comb, and it consists of numerous discs, or platters, with an electromagnetic read/write head for each surface. The heads are controlled by a microcontroller in the drive, which also incorporates RAM for data transfer to and from the discs. The heads move around the disc surface as it spins at up to 15,000 RPM; the drives are hermetically sealed, allowing the heads to float on a thin film of air near the disk's surface. A small current is provided to the head to magnetise tiny areas on the disc surface for storage; similarly, as the head travels by, magnetised places on the disc generate currents in the head, allowing data to be read. FDDs work in a similar way, although the removable diskettes only rotate at a few hundred times each minute.

The read/write heads must be controlled very precisely since the data is stored in near concentric grooves. Refinements in head control have allowed for smaller and closer track packing—up to 20,000 tracks per inch (8,000 tracks per centimetre) at the turn of the century—resulting in a nearly 30 percent increase in storage capacity per year since the 1980s. RAID (redundant array of cheap discs) is a technology that combines many disc drives to store data redundantly for increased reliability and access speed. High-performance computer network servers employ them.

Magnetic tape

Magnetic tape, like the tape used in tape recorders, has been utilised for supplementary storage, especially for data preservation. Because tape is sequential-access memory, data must be read and written sequentially as the tape is unwound, rather than being retrieved directly from the desired spot on the tape, access time is much slower than that of a magnetic disc. Servers can also use vast collections of cassettes or optical discs, which are selected and loaded by robotic devices, similar to old-fashioned jukeboxes.

Optical discs

The optical compact disc, which was derived from videodisc technology in the early 1980s, is another form of mostly read-only memory. Data is recorded as tiny pits on a single spiral track on plastic discs ranging in diameter from 3 to 12 inches (7.6 to 30 cm), however the most frequent diameter is 4.8 inches (12 cm). A low-power laser and a photocell that generates an electrical signal from the fluctuating light reflected from the pattern of pits produce the pits, which are read by a low-power laser and a photocell. Optical discs are detachable and have significantly more memory capacity than diskettes; the larger ones may hold hundreds of gigabytes of data.

The CD-ROM is a common optical disc (compact disc read-only memory). It can store around 700 megabytes of data and uses an error-correcting code to rectify bursts of errors generated by dust or flaws. Software, encyclopaedias, and multimedia text with sounds and images are all distributed on CD-ROMs. CD-R (CD-recordable) or WORM (write-once read-many) is a type of CD-ROM that allows users to record data but not change it later. Rewritable CD-RW discs can be re-recorded. DVDs (digital video, or versatile, discs), originally designed for recording movies, store data more densely and with more powerful error correction than CD-ROM. DVDs, which are the same size as CDs, typically carry 5 to 17 terabytes of data—several hours of video or millions of text pages.

Magneto-optical discs

Hybrid storage media are magneto-optical discs. In reading, variable polarisation in the reflected light of a low-power laser beam is caused by spots with various magnetization directions. To write all 0s, a powerful laser beam heats each area on the disc, which is subsequently cooled under a magnetic field, magnetising each spot in one direction. The writing operation then reverses the magnetic field's direction to store 1s at the desired location.

Memory hierarchy

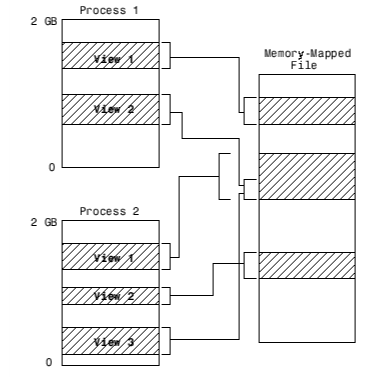

Although the distinction between main and auxiliary memory is useful in general, memory organisation in a computer is organised in a hierarchy of levels, starting with very small, fast, and expensive registers in the CPU and progressing to small, fast cache memory, larger DRAM, very large hard discs, and slow and inexpensive nonvolatile backup storage. Virtual memory, a system that provides programmes with huge address spaces (addressable memory) that may surpass the real RAM in the machine, spans these levels in memory consumption by modern computer operating systems. Virtual memory allots a piece of main memory to each application while storing the remainder of its code and data on a hard disc, transferring blocks of addresses to and from main memory as needed. Virtual memory is possible thanks to the speed of current hard discs and the same locality of reference principle that allows caches to perform well.

Coprocessor, a specialised processor found in some personal computers that performs tasks such as intensive mathematical calculations or graphical display processing. The coprocessor is frequently built to perform such jobs more efficiently than the main processor, resulting in much faster computer performance overall.

Flash memory

Computers and other electronic equipment use flash memory as a data storage medium. Flash memory, unlike prior kinds of data storage, is an EEPROM (electronically erasable programmed read-only memory) type of computer memory that does not require a power source to preserve data.

Masuoka Fujio, a Japanese engineer at the time working for Toshiba Corporation, devised flash memory in the early 1980s as a technique to replace existing data-storage medium such as magnetic tapes, floppy discs, and dynamic random-access memory (DRAM) chips. Ariizumi Shoji, a Masuoka coworker, developed the term flash after comparing the process of memory erasure, which can delete all the data on a complete chip at once, to a camera's flash.

Flash memory is made up of a grid with two transistors at each intersection, the floating gate and the control gate, separated by an oxide layer that insulates the floating gate. The two-transistor cell has a value of 1 when the floating gate is connected to the control gate. A voltage is applied to the control gate, which drives electrons through the oxide layer into the floating gate, changing the cell's value to 0. The floating gate stores the electrons, allowing the flash memory to maintain its data even after the power is switched off. To return the value to 1, a voltage is delivered to the cell. Large parts of a chip, known as blocks, or even the entire chip can be wiped at once when using flash memory.

Flash memory is commonly used in portable devices such as digital cameras, smartphones, and MP3 players. Flash memory is used to store data on USB devices (also known as thumb drives or flash drives) and memory cards. As flash memory became more affordable in the early twenty-first century, it began to replace hard discs in laptop computers.

4.3.3 Types of Computer Memory

Computer memory refers to the various forms of data storage technology that a computer can employ, such as RAM, ROM, and flash memory.

Some computer memory is designed to be extremely fast, allowing the central processor unit (CPU) to quickly retrieve data stored there. Other varieties are meant to be extremely low-cost, allowing enormous volumes of data to be stored there without breaking the bank.

Another difference in computer memory is that some types are non-volatile, meaning they can keep data for a long time even after the power is turned off. And then there are volatile varieties, which are generally speedier but lose all of the data they contain as soon as the power is turned off.

A computer system is made up of a mix of various types of computer memory, and the exact arrangement can be tweaked to achieve the best data processing speed, lowest cost, or a compromise between the two.

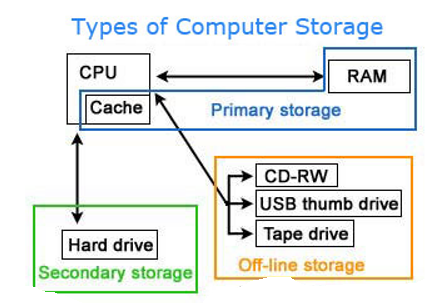

Types of Computer Memory: Primary and Secondary

Although there are many different types of memory in a computer, the most basic distinction is between primary memory, also known as system memory, and secondary memory, often known as storage.

The main distinction between primary and secondary memory is access speed.

Primary memory

Includes ROM and RAM, and is located close to the CPU on the computer motherboard, enabling the CPU to read data from primary memory very quickly indeed. It is used to store data that the CPU needs imminently so that it does not have to wait for it to be delivered.

Secondary memory

Data, on the other hand, normally housed in a separate storage device, such as a hard disc drive or solid state drive (SSD), that is connected to the computer system either directly or over a network. Secondary memory has a lower cost per gigabyte, but read and write speeds are much slower.

A diverse range of computer memory types has been used during the course of computer evolution, each with its own set of strengths and disadvantages.

Primary Memory Types: RAM and ROM

Primary memory can be divided into two categories:

1. RAM (random access memory) is a type of memory that is used to store data.

2. Read-only memory (ROM)

Let's take a closer look at both types of memory.

1) RAM Computer Memory

The abbreviation RAM refers to the fact that data stored in random access memory can be accessed in any order, as the name suggests. To put it another way, any random bit of data can be retrieved in the same amount of time as any other bit.

The most important things to know about RAM memory are that it is very fast, that it can be written to as well as read, that it is volatile (that is, all data stored in RAM memory is lost when it loses power), and that it is very expensive in terms of cost per gigabyte when compared to all other types of secondary memory. Most computer systems use both primary and secondary memory due to the relative high cost of RAM compared to secondary memory types.

Data that needs to be processed right away is relocated to RAM, where it can be read and updated rapidly, keeping the CPU from having to wait. When the data is no longer needed, it is moved to slower but less expensive secondary memory, and the RAM space that is freed up is filled with the next chunk of data to be used.

Types of RAM

- DRAM: The most popular type of RAM used in computers is DRAM, which stands for Dynamic RAM. Single data rate (SDR) DRAM is the oldest form, however modern systems employ quicker dual data rate (DDR) DRAM. DDR is available in numerous variants, including DDR2, DDR3, and DDR4, all of which are faster and more energy efficient than DDR. However, because the two versions are incompatible, DDR2 and DDR3 DRAM cannot be mixed in a computer system. Each cell of DRAM contains a transistor and a capacitor.

2. SRAM: SRAM stands for Static RAM, and it is a form of RAM that is faster than DRAM but more expensive and bulkier because each cell has six transistors. As a result, SRAM is typically only employed as a data cache within a CPU or as RAM in extremely high-end server systems. In a system 3, a modest SRAM cache of the most urgently needed data can result in significant speed benefits. SRAM is two to three times faster than DRAM, although it is more expensive and bulkier. SRAM is often sold in megabytes, whereas DRAM is sold in gigabytes.

3. DRAM consumes more energy than SRAM because it must be refreshed on a regular basis to ensure data integrity, but SRAM, while volatile, does not require constant refreshing while powered up.

2) ROM (Read Only Memory)

ROM stands for read-only memory, and it gets its name from the fact that data may be read from but not written to this type of computer memory. It is a form of computer memory that is located near the CPU on the motherboard and is extremely fast.

ROM is a sort of non-volatile memory, which implies that the data contained in it persists even after the computer is turned off and no power is applied to it. It's comparable to secondary memory in that it's utilised for long-term storing.

When a computer is turned on, the CPU can immediately begin reading data from the ROM without the need for drivers or other complicated software. The ROM typically contains "bootstrap code," which is a series of instructions that a computer must follow to become aware of the operating system stored in secondary memory and to load components of the operating system into main memory so that it can start up and become ready to use.

Simpler electronic devices use ROM to store firmware that executes as soon as the device is turned on.

Types of ROM

PROM, EPROM, and EEPROM are some of the numerous types of ROM accessible.

1. PROM PROM stands for Programmable Read-Only Memory, and it differs from genuine ROM in that a PROM is built in an empty state and then programmed later using a PROM programmer or burner, whereas a ROM is programmed (that is, data is written to it) during the production process.

2. EPROM EPROM stands for Erasable Programmable Read-Only Memory, and data saved in an EPROM may be erased and reprogrammed, as the name implies. Before re-burning an EPROM, it must be removed from the computer and exposed to ultraviolet light.

3. EEPROM (Electronic Flash Memory) The difference between EPROM and EEPROM, which stands for Electrically Erasable Programmable Read-Only Memory, is that the latter may be erased and written to by the computer system in which it is installed. EEPROM is not strictly read-only in this sense. However, because the write process is slow in many circumstances, it is usually reserved for updating programme code such as firmware or BIOS code on a sporadic basis.

Despite the fact that NAND flash memory (such as that found in USB memory sticks and solid state disc drives) is a kind of EEPROM, it is classified as secondary memory.

4.3.4 Secondary Memory Types

Secondary memory refers to a variety of storage devices that can be directly connected to a computer system. Hard disc drives and solid state drives are examples of this (SSDs)

Optical drives (CD or DVD)

Drives for tapes

Secondary memory also includes: Storage arrays connected via a storage area network, including 3D NAND flash arrays (SAN)

Storage devices that can be connected through a standard network (known as network attached storage, or NAS)

Cloud storage is also referred to as "secondary memory."

Differences between RAM and ROM

ROM:

1. The term "non-volatile" refers to a substance that is

2. Easy to read

3. Only used in little amounts

4. Cannot be written in a short period of time

5. It's where you keep your boot instructions or firmware.

6. When compared to RAM, per megabyte stored is relatively expensive.

RAM:

1. Fluctuating

2. Read and write quickly

3. Used as system memory to store data (including computer code) that the CPU must process as soon as possible.

4. In comparison to ROM, it is reasonably inexpensive per megabyte stored, but it is relatively expensive in comparison to secondary memory.

What Technology is Between Primary and Secondary Memory?

A novel memory medium dubbed 3D XPoint has been invented in the last year or two, with features that fall in between main and secondary memory.

3D XPoint is more expensive than secondary memory but faster, and less expensive but slower than RAM. It's also a sort of non-volatile memory.

Because of these qualities, it can be used as a replacement for RAM in systems that require large amounts of system memory but are too expensive to develop using RAM (such as systems hosting in-memory databases). The trade-off is that such systems do not benefit from all of RAM's performance benefits.

Because 3D XPoint is non-volatile, systems that utilise it for system memory can quickly recover from a power outage or other interruption, without having to read all of the data back into system memory from secondary memory.

Key takeaway:

Memory is a mechanism or system for storing data in a computer or other computer hardware and digital electrical devices for immediate use. The terms memory and major storage or main memory are frequently used interchangeably. The word store is an old synonym for memory.

4.4.1 Memory Organization and Addressing

Now we'll take a look at RAM, or Random Access Memory. This is the memory known as "primary memory" or "core memory," which refers to an earlier memory technology in which magnetic cores were utilised to store data on the computer.

The best way to think of primary computer memory is as an array of addressable units. The smallest unit of memory with independent addresses is called an addressable unit. Each byte (8 bits) in a byte-addressable memory unit has its own address, though the computer frequently groups the bytes into larger units (words, long words, etc.) and retrieves that group. In most modern computers, integers are manipulated as 32-bit (4-byte) entities, therefore they are retrieved four bytes at a time.

Byte addressing in computers became crucial, in this author's viewpoint, as a result of the adoption of 8-bit character codes. Many applications benefit from the ability to address single characters because they entail the movement of huge numbers of characters (coded as ASCII or EBCDIC). Word addressing is used by some computers, including the CDC-6400, CDC-7600, and all Cray machines. This is the outcome of a design decision taken when considering the fundamental objective of such computers, which is to perform huge computations using floating point values. The word size in these computers is 60 bits (why not 64? - I'm not sure), which allows for high precision in numeric simulations like fluid flow and weather forecasting.

Memory as a Linear Array

Consider a memory with N bytes of byte-addressable memory. As previously indicated, such a memory can be thought of as the logical equivalent of a C++ array, and is declared as byte memory [N] ; / Address ranges from 0 to (N – 1)

The machine on which these notes were written has 384 MB of main RAM, which is now considered standard but was originally considered enormous. N = 384•1048576 = 402,653,184 because 384 MB = 384•220 bytes and the memory is byte-addressable. The memory size is frequently a power of two or the sum of two powers of two; (256 + 128)•220 = 228 + 227 = 384 MB

When it comes to computer memory, the term "random access" suggests that memory can be accessed at any time with no performance penalty. While this may not be exactly accurate in these days of virtual memory, the basic concept remains the same: the time it takes to access an item in memory is independent of the address provided. In this way, it's analogous to an array in which the time it takes to retrieve a particular entry is independent of the index. A magnetic tape is a sequential access technology, which means that in order to get to an entry, you must first go through all of the previous entries.

Random-access computer memory is divided into two categories. These are the following:

RAM Read-Write Memory

ROM Read-Only Memory

The use of the term "RAM" to refer to a sort of random access memory that might be referred to as "RWM" has a lengthy history, which will be continuing in this course. The main reason is that the names "RAM" and "ROM" are simple to pronounce; try saying "RWM." Keep in mind that random access memory (RAM) and read-only memory (ROM) are the same thing.

Of course, there is no such thing as a pure Read-Only memory; data must be able to be written to the memory at some point in order for it to be read; otherwise, there will be no data in the memory to read. The term "Read-Only" mainly relates to how the CPU accesses data. All ROM variants have the property that standard CPU write operations cannot change their contents. All types of RAM (also known as Read-Write Memory) have the ability to update their contents using standard CPU write operations. Some types of ROM have their contents pre-programmed, while others, known as PROM (Programmable ROM), can have their contents modified using special devices known as PROM Programmers.

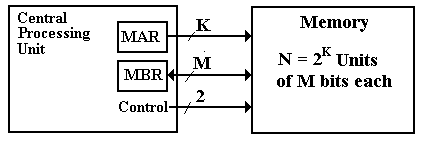

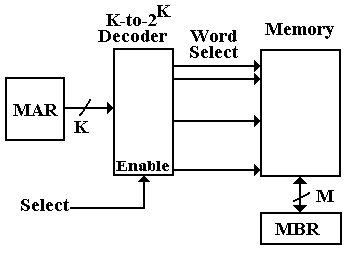

Registers associated with the memory system

Two registers and a number of control signals characterise all memory types, including RAM and ROM. Consider a memory with 2N words and M bits per word. Then there's the MAR (Memory Address Register), which is an N-bit register that specifies the memory address.

The MBR (Memory Buffer Register) is a multi-bit register that stores data for later use be written to the memory or simply read from it This is a registry also known as the MDR (Memory Data Register).

We tell the memory unit what to do with the control signals by recalling what we want it to perform. Consider RAM first (Read Write Memory). There are three duties for memory from the CPU's perspective.

Data is read from memory by the CPU. The contents of the memory are not altered.

Data is written to memory by the CPU. The contents of the memory are updated.

Memory is not accessed by the CPU. The contents of the memory are not altered.

Two control signals are required to indicate the three RAM unit possibilities. There is a standard set.

The memory unit has been selected.

- If 0 is set, the CPU writes to memory; if 1 is set, the CPU reads from it.

A truth table can be used to indicate the activities for a RAM. It's worth noting that when Select = 0,

Select | R/  | Action |

0 | 0 | Memory contents are not changed. |

0 | 1 | Memory contents are not changed. |

1 | 0 | CPU writes data to the memory. |

1 | 1 | CPU reads data from the memory. |

The memory is not being affected in any way. The CPU does not access it, and the contents do not change. When Select is set to 1, the memory is activated, and something occurs.

Now is the time to think about a ROM (Read Only Memory). There are just two duties for memory from the CPU's perspective.

Data is read from memory by the CPU.

Memory is not accessed by the CPU.

To indicate these two possibilities, we just need one control signal. The Select control signal is a natural choice because the signal makes no sense if the memory can't be written by the CPU. The ROM's truth table should be self-evident.

Select | Action |

0 | CPU is not accessing the memory. |

1 | CPU reads data from the memory. |

4.4.2 The Idea of Address Space

Now it's time to separate the concepts of address space and physical memory. The address space specifies the range of addresses (memory array indices) that can be created. Physical memory is typically smaller, either by design (see the topic of memory-mapped I/O below) or by chance.

2N distinct addresses are specified using a N–bit address. In this respect, the address can be thought of as an unsigned N–bit integer with a range of 0 to 2N – 1 inclusive. Another concern is how many address bits are required for M addressable things. The equation 2(N - 1) M 2N gives the result, which is best solved by guessing N.

A binary number stored in the Memory Address Register specifies the memory address (MAR). The range of addresses that can be created is determined by the number of bits in the MAR. 2N different addresses, numbered 0 through 2N – 1, can be specified using N address lines. This is referred to as the computer's address space.

We present three MAR sizes as an example.

Computer | MAR bits | Address Range |

PDP-11/20 | 16 | 0 to 65 535 |

Intel 8086 | 20 | 0 to 1 048 575 |

Intel Pentium | 32 | 0 to 4 294 967 295 |

The now-defunct Digital Equipment Corporation produced the PDP-11/20, an exquisite little machine. People noticed that its address range was too limited as soon as it was built.

The address space is, on average, substantially bigger than the physical memory accessible. My personal computer, for example, has a 232-byte address space (as do all Pentiums), but only 384MB = 228 Plus 227 bytes. The 32–bit address space would have been significantly greater than any quantity of physical memory until recently. Currently, a computer with a fully loaded address space, i.e. 4 GB of physical memory, may be ordered from a variety of vendors. The majority of high-end personal PCs come with 1GB of RAM

A portion of the address space in an architecture with memory-mapped I/O is allocated to addressing I/O registers rather than physical memory. The top 4096 (212) of the address space on the original PDP–11/20, for example, was dedicated to I/O registers, leading to the memory map

0 – 61439 is a range of addresses. Physical memory is available.

61535, 61440, 61440, 61440, 61440, 61440, 61440, I/O registers (61440 = 61536 – 4096) are available.

In a byte-addressable machine, word addresses are used.

Most modern computers have byte-addressable memories, which means that each byte in the memory has a unique address that may be used to address it. A word corresponds to a number of addresses in this addressing method.

Bytes at addresses Z and Z + 1 make up a 16–bit word at address Z

Bytes at addresses Z, Z + 1, Z + 2, and Z + 3 make up a 32–bit word at address Z.

Word addresses are constrained on many computers that use byte addressing.

An even address is required for a 16–bit word.

The address of a 32–bit word must be a multiple of four.

It's a good idea to stick to these word boundaries even if your computer doesn't demand it. Most compilers will do this for you.

Consider a machine having a 32-bit address space and byte-addressable memory. 232 − 1 is the highest byte address. We can deduce from this fact and the address allocation to multi-byte words that

(232 – 2) is the highest address for a 16-bit word, and

Because the 32-bit word addressed at (232 – 4) contains bytes at addresses (232 – 4), (232 – 3), (232 – 2), and (232 – 1), the highest address for a 32-bit word is (232 – 4).

4.4.3 Byte Addressing vs. Word Addressing

N address lines can be used to specify 2N separate addresses, numbered 0 through 2N – 1. As previously stated, N address lines can be used to define 2N separate addresses, numbered 0 through 2N – 1. We've moved on to enquiring about the size of the addressable items. Most current computers are byte-addressable, which means that the addressable item is 8 bits or one byte in size. Other options are available. We'll now look at the benefits and drawbacks of being able to address larger entities.

Consider a computer with a 16–bit address space as an example. There would be 65,536 (64K = 216) addressable entities on the system. The maximum memory size is determined by the addressable entity's size.

64 KB of byte addressable memory

128 KB, 16-bit Word Addressable

256 KB, 32-bit Word Addressable

The maximum memory size for a given address space is greater for larger addressable entities. This may be advantageous for some applications, but it is mitigated by the extremely huge address spaces we already have: 32 bits now, 64 bits soon.

When we consider programmes that process data one byte at a time, the benefits of byte-addressability become evident. In a byte-addressable system, accessing a single byte needs simply issuing a single address. In a 16-bit word-addressable system, computing the address of the word containing the byte, fetching that word, and finally extracting the byte from the two-byte word are all required steps. Although byte extraction procedures are well understood, they are less efficient than retrieving the byte directly. As a result, many current machines can be addressed in bytes.

4.4.4 Big-Endian and Little-Endian

The connection here is to a storey in Jonathan Swift's Gulliver's Travels about two groups of men fighting over whether the big end or the little end of a boiled egg should be broken. Swift's work A Modest Proposal is an outstanding example of biting satire; the student should be aware that he did not write pleasant stories for children, but instead focused on cutting satire.

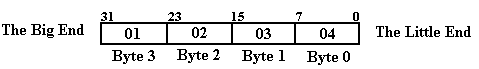

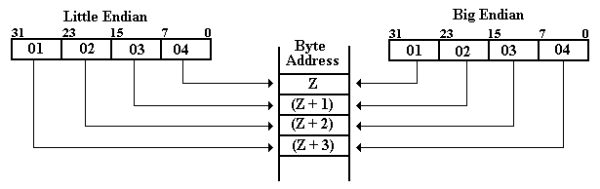

Consider the 32–bit number stored at memory location Z, which is represented by the eight–digit hexadecimal number 0x01020304. This number will be stored in the four successive addresses Z, (Z + 1), (Z + 2), and (Z + 3) in all byte-addressable memory regions. The location of each of the four bytes differs between big-endian and little-endian addresses. Bits 31–24 are represented by 0x01, and bits 23–16 are represented by 0x02.

Bits 15–8 of the word are represented by 0x03, and bits 7–0 of the word are represented by 0x04.

The number 0x01020304 can be written in decimal notation as a 32-bit signed integer.

1•166 + 0•165 + 2•164 + 0•163 + 3•162 + 0•161 + 4•160 = 16,777,216 + 131,072 + 768 + 4 = 16,909,060. 1•166 + 0•165 + 2•164 + 0•163 + 3•162 + 0•161 + 4•160 = 16,777,216 + 131,072 + 768 + 4 = 16,909,060 If you want to think in bytes, this is (01)•166 + (02)•164 + (03)•162 + 04, which gives you the same result. It's worth noting that the number can be considered as having a "large end" and a "small end," as shown in the diagram below.

The “big end” of the number contains the most significant digits, while the “little end” contains the least important digits. Now we'll look at how these bytes are saved in byte-addressable memory. Remember that each byte in a byte-addressable memory has a unique address consisting of two hexadecimal numbers, and that a 32-bit (four-byte) entry at address Z fills the bytes at Z, (Z + 1), (Z + 2), and (Z + 3). Below are the hexadecimal values saved in these four byte addresses.

Address Big-Endian Little-Endian

Z 01 04

Z + 1 02 03

Z + 2 03 02

Z + 3 04 01

Consider the 16–bit number 0A0B, which is represented by the four hex digits 0A0B. Assume that the 16-bit word is located at W; that is, its bytes are located at W and (W + 1). 0x0A is the most significant byte, whereas 0x0B is the least significant byte. Below are the values for the two addresses.

Address Big-Endian Little-Endian

W 0A 0B

W + 1 0B 0A

The diagram below illustrates a graphical representation of these two ordering alternatives for bytes copied from a register into memory. We'll use a 32-bit register with bits numbered 31 through 0 as an example. Which end of the memory is placed first – at address Z? The “big end,” or most significant byte, is written first in big-endian. The “little end,” or least significant byte, is written first in little-endian.

There does not appear to be any benefit to one system over the other. Most people find big–endian more natural, and it makes reading hex dumps (listings of a sequence of memory addresses) easier, though a decent debugger will take care of it for everyone except the unlucky.

The IBM 360 series, Motorola 68xxx, and Sun SPARC are all big-endian computers.

The Intel Pentium and similar computers are examples of little-endian computers.

Most of us aren't immediately affected by the big-endian vs. Little-endian argument. Allow the computer to handle the bytes in whichever sequence it wants as long as the results are good. The only immediate impact on the majority of us will be when trying to transfer data from one machine to another. The fact that network interfaces for computers will translate to and from the network standard, which is big-endian, makes transfer over computer networks easier. The most challenging part will be trying to read various file kinds.

When computer data is “serialised,” or written out one byte at a time, the big-endian vs. Little-endian dispute shows up in file structures. This results in distinct byte orders for the same data, similar to the memory ordering. The file structure's orientation is frequently determined by the system on which the software was first built.

This is a brief list of file types extracted:

Windows BMP, MS Paintbrush, MS RTF, and GIF (little-endian)

Big-endian data types JPEG, MacPaint, Adobe Photoshop

Some apps support both orientations, with a flag in the header record indicating the order in which the file was written.

4.4.5 The Memory Hierarchy

Depending on the point we're trying to make, we employ a variety of logical models of our memory system. The most basic form of memory is a monolithic linear memory, which is a memory that is made up of a single unit (monolithic) and structured as a single-dimensional array (linear). As a logical model, this is adequate, but it misses a large number of critical concerns.

Consider a memory in which the smallest addressable unit is a M–bit word. For the sake of simplicity, we'll suppose that the memory has N = 2K words and that the address space has N = 2K words as well. The memory can be thought of as a one-dimensional array with a name like

Memory: M–bit word array [0.. (N – 1)].

The monolithic view of the memory is shown in the following figure.

Figure: Monolithic View of Computer Memory

The CPU offers K address bits to access N = 2K memory entries, each with M bits, and at least two control signals to manage memory in this monolithic perspective.

The linear perspective of memory is a rational way of thinking about memory organisation. This viewpoint has the virtue of being straightforward, but it has the problem of accurately defining only long-since-obsolete technologies. It is, nevertheless, a consistent model worth mentioning. The linear model is depicted in the diagram below.

The aforementioned model has two flaws, one of which is a slight annoyance and the other of which is a "show–stopper."

The only small issue is the memory's speed; it will have the same access time as standard DRAM (dynamic random access memory), which is roughly 50 nanoseconds. We know we can do better than that, so we seek out other memory organisations.

The memory decoder's design is the "show–stopper" challenge. Consider two popular memory sizes in byte–oriented memory: 1MB (220 bytes) and 4GB (232 bytes).

A 20–to–1,048,576 decoder would be used for a 1MB memory, because 220 = 1,048,576.

A 32–to–4,294,967,296 decoder would be used in a 4GB memory, because 232 = 4,294,967,296.

With current technology, neither of these decoders can be produced at a reasonable cost. It is important to highlight that because other designs provide extra benefits, there is no incentive to complete such a design work.

Now we'll look at two design options that result in simple-to-manufacture systems with adequate performance at a fair cost. The first is a memory hierarchy that uses different levels of cache memory to provide faster access to main memory. Before delving into the concepts of cache memory, it's necessary to first explain two memory fabrication methodologies.

4.4.6 SRAM (Static RAM) and DRAM (Dynamic RAM)

Now we'll look at the technologies that are used to store binary data. The first topic is to create a list of requirements for binary memory implementation devices.

1) Two separate and well-defined states.

2) The gadget must be able to dependably swap states.

3) A spontaneous state shift must have a very low probability.

4) State transitions must be as quick as possible.

5) The device must be tiny and inexpensive in order for huge memory capacities to be viable.

In the second half of the twentieth century, a variety of memory technologies were developed. The majority of these are no longer in use. There are three that deserve special attention:

1) Memory at the Core (now obsolete, but new designs may be introduced soon)

2) RAM that is static

3) RAM that is dynamic

Core Memory

When it was initially utilised on the MIT Whirlwind in 1952, it was a significant advancement. A torus (small doughnut) of magnetic material serves as the basic memory element. A magnetic field in one of two directions can be contained within this torus. These two separate ways enable a two-state device, which is necessary for storing a binary number.

One characteristic of magnetic core memory has stuck with us: the phrase "core memory" is frequently used as a synonym for the computer's primary memory.

Two definitions are required when considering the next two technologies. Memory access time and memory cycle time are two of them. The access time is a measure of true interest. The nanosecond, or one billionth of a second, is the unit of measurement for memory times.

Memory access time is the time it takes for the memory to access data; more precisely, it is the time it takes for the memory address in the MAR to become stable and the data to become available in the MBR.

The minimal time between two independent memory accesses is known as memory cycle time. Because the memory cannot process an independent access while it is in the process of depositing data in the MBR, the cycle time must be at least as long as the access time.

Static RAM

The minimal time between two independent memory accesses is known as memory cycle time. Because the memory cannot process an independent access while it is in the process of depositing data in the MBR, the cycle time must be at least as long as the access time.

Dynamic RAM

DRAM stands for dynamic random access memory and is based on capacitors, which are circuit elements that hold electric charge. Dynamic RAM is less expensive than static RAM and may fit more tightly on a computer chip, allowing for greater memory capacities. DRAM has a slower access time than SRAM, ranging from 60 to 100 nanoseconds.

Our goal in memory design would be to produce a memory unit with SRAM-like performance but DRAM-like cost and package density. Thankfully, this is possible because to a design technique known as "cache memory," in which a fast SRAM memory serves as a front end for a bigger and slower DRAM memory. Because of a feature of computer programme execution known as "programme locality," this approach has been discovered to be advantageous. If a programme accesses a memory location, it is very likely that it will access that site again, as well as places with similar addresses. Cache memory is made possible by programme locality.

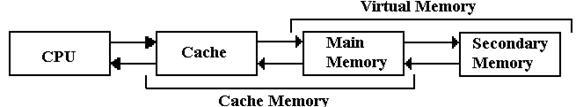

The Memory Hierarchy

In general, the memory hierarchy of a modern computer is as shown in the next figure.

Figure: The Memory Hierarchy with Cache and Virtual Memory

A multi-level memory system has a quicker primary memory and a slower secondary memory, according to our definition. The cache is the speedier primary memory, while the main memory is the secondary memory in cache memory. In this chapter, we'll disregard virtual memory.

It was common practise in early computer designs to include a single-level fast cache in order to boost computer performance. This cache was built on a memory chip that was physically close to the CPU. It became possible to add additional cache that was physically on the CPU chip as succeeding designs enhanced the circuit density on the CPU chip. The Intel Pentium designs now have a two-level cache, such as the following.

L1 cache of 32 KB (Level 1 cache with 16KB for instructions and 16KB for data)

L2 cache of 1 MB (Level 2 cache for both data and instructions)

Main memory: 256 MB

For a given technology, the argument for a two–level cache is based on the idea that smaller memories have a faster access time than bigger memories. It's easy to show that a two–cache (32 KB and 1MB) has a far faster effective access time than a monolithic cache of about 1MB.

4.4.7 Effective Access Time for Multilevel Memory

The success of a multilevel memory system is based on the notion of locality, as we previously established. The hit ratio, which is represented in the memory system's effective access time, is a measure of the system's effectiveness.

A multilayer memory system with primary and secondary memory will be considered. We can use what we've learned so far to both cache memory and virtual memory systems. We'll use cache memory as an example in this lesson. This is something that might be readily adapted to virtual memory.

An accessible item in a conventional memory system is referred to by its address. In a two-level memory system, the address is first examined in primary memory. We have a hit if the referenced item is in the primary memory; otherwise, we have a miss. The hit ratio is calculated by dividing the total number of memory accesses by the number of hits; 0.0 h 1.0. We calculate the effective access time as a function of the hit ratio for a quicker primary memory with an access time TP and a slower secondary memory with an access time TS. TE = h•TP + (1.0 – h)•TS is the formula to use.

RULE: In this formula we must have TP < TS. This inequality defines the terms “primary” and “secondary”. In this course TP always refers to the cache memory.

We'll use cache memory as an example, with a fast cache serving as a front-end for primary memory. Cache hits and cache misses are used in this scenario. In these cases, the hit ratio is also known as the cache hit ratio. Consider the following example: TP = 10 nanoseconds and TS = 80 nanoseconds. The formula for calculating effective access time is as follows:

TE = h10 + (1.0 – h)80. For sample values of hit ratio

Hit Ratio Access Time

0.5 45.0

0.9 17.0

0.99 10.7

The reason cache memory works is that the locality principle allows for high hit ratios; in fact, h 0.90 is a realistic figure. As a result, a multi-level memory structure operates virtually like a very large memory, but with the access latency of a smaller, faster memory. We've devised a method for speeding up our enormous monolithic memory, and we're now looking into methods for fabricating such a huge main memory.

4.4.8 Memory As A Collection of Chips

A group of memory chips makes up all modern computer memory. Interleaving is the technique of translating an address space into a number of smaller chips.

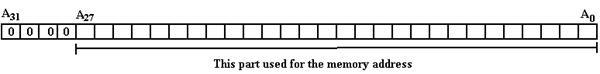

Consider a computer with 256 MB of byte-addressable memory, a 32–bit address space, and byte-addressable memory.

There is 228 bytes of RAM available. This computer is based on the author's personal computer, however the memory size has been changed to a power of two to simplify the example. The MAR addresses can be thought of as 32–bit unsigned integers with high order bit A31 and low order bit A0. We state that only 28-bit addresses are valid, ignoring issues of virtual addressing (essential for operating systems), and that a valid address has the following form.

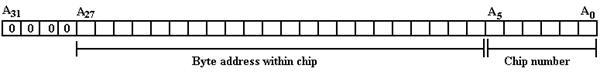

All current computers have multiple chips in their memory that are combined to cover the range of permissible addresses. Assume that the fundamental memory chips in our case are 4MB chips. The 256 MB memory would be made up of 64 chips with the following address space:

6 bits to pick the memory chip (26 = 64) and 22 bits to pick the byte (222 = 4•220 = 4M) within the chip.

Which bits choose the chip and which bits are transferred to the chip is the question. High-order memory interleaving and low-order memory interleaving are two popular choices. Only low-order memory interleaving will be discussed, in which the lower-order address bits pick the chip and the higher-order bits pick the byte.

Low-order interleaving provides a number of performance benefits. This is because subsequent bytes are stored in different chips, so byte 0 is in chip 0, byte 1 is in chip 1, and so on. In our case,

Chip 0 contains bytes 0, 64, 128, 192, etc., and

Chip 1 contains bytes 1, 65, 129, 193, etc., and

Chip 63 contains bytes 63, 127, 191, 255, etc.

Assume the computer's memory and CPU are connected by a 128-bit data bus. It would be feasible to read or write sixteen bytes at a time using the low-order interleaved memory described above, resulting in a memory that is close to 16 times faster. It's worth noting that the gain in memory performance for such an arrangement is constrained by two factors.

1) The memory's chip count, which is 64 in this case.

2) The data bus width in bytes — in this case, 16 is the lower value.

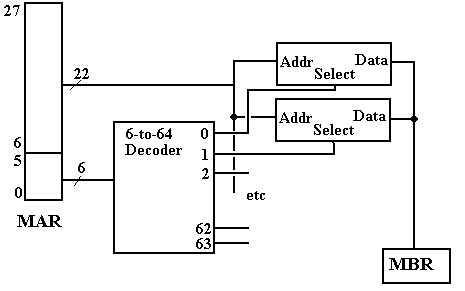

A 6–to–64 decoder or a pair of 6–to–64 decoders could be used in a design to achieve such an address system.

To choose the chip and memory location within the chip, there are 3–8 decoders. The design with the larger decoder is shown in the next diagram.

Figure: Partial Design of the Memory Unit

The high order bits of the address are received by each of the 64 4MB–chips. At any given time, only one of the 64 chips is active. If a memory read or write operation is in progress, just one of the chips will be selected, leaving the others dormant.

A Closer Examination of the Memory "Chip"

We've been able to minimise the problem of constructing a 32–to–232 decoder to that of developing a 22–to–222 decoder so far in our design. We've achieved the speed benefit that interleaving provides, but we still have the decoder issue. Now we'll look at the next step in memory design, which is the difficulty of making a 4MB chip that works.

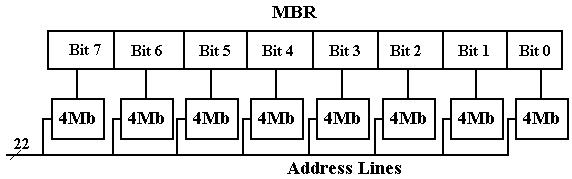

The solution we'll utilise is to make the chip up of eight 4Mb (megabit) chips. The figures below depict the design that was used.

Figure: Eight Chips, each holding 4 megabits, making a 4MB “Chip”

Having one chip represent only one bit in the MBR has an immediate benefit. Because of the nature of chip breakdown, this is the case. If a ninth 4Mb chip is added to the mix, a basic parity memory may be created, with single bit errors being detected by circuitry (not shown) that feeds the nine bits selected into the 8-bit memory buffer register.

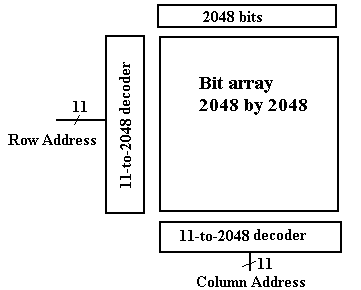

When we look at the decoder circuitry used in the 4Mb device, we can observe a bigger gain. It's logically equivalent to the 22–to–4194304 decoder we stated earlier, but it's made up of two 11–to–2048 decoders, which aren't hard to make.

Consider the 4Mb chip's 4194304 (222) bits as a two-dimensional array of 2048 rows (numbered 0 to 2047), each with 2048 columns (also numbered 0 to 2047). The situation is depicted in the diagram below.

Figure: Memory with Row and Column Addresses

We now add one more feature, to be elaborated below, to our design and suddenly we have a really fast DRAM chip. For ease of chip manufacture, we split the 22-bit address into a 11-bit row address and an 11-bit column address. This allows the chip to have only 11 address pins, with two extra control (RAS and CAS – 14 total) rather than 22 address pins with an additional select (23 total). This makes the chip less expensive to manufacture.

We send the 11-bit row address first and then send the 11-bit column address. At first sight, this may seem less efficient than sending 22 bits at a time, but it allows a true speed-up. We merely add a 2048 SRAM buffer onto the chip and when a row is selected, we transfer all 2048 bits in that row to the buffer at one time. The column select then selects from this faster memory used as an on-chip buffer. Thus, our access time now has two components:

1) The time to select a new row, and

2) The time to copy a selected bit from a row in the SRAM buffer.

This design is the basis for all modern computer memory architectures.

4.4.7 SDRAM – Synchronous Dynamic Random Access Memory

As previously stated, memory's slowness in comparison to the CPU has long been a source of concern among computer designers. SDRAM – synchronous dynamic access memory – is one recent breakthrough that has been employed to overcome this problem.

Up to this point, we've just talked about standard memory types.

SRAM (Static Random Access Memory) is a type of static random access memory.

5–10 nanoseconds is a typical access time.

6 transistors were used, which was expensive and took up a lot of space.

DRAM (Dynamic Random Access Memory) is a type of random-access memory

50–70 nanoseconds is a typical access time.

One capacitor and one transistor were used to make this circuit: it was compact and inexpensive.

In a way, the desirable approach would be to make the entire memory to be SRAM. Such a memory would be about as fast as possible, but would suffer from a number of setbacks, including very large cost (given current economics a 256 MB memory might cost in excess of $20,000) and unwieldy size. The current practice, which leads to feasible designs, is to use large amounts of DRAM for memory. This leads to an obvious difficulty.

1) The access time on DRAM is almost never less than 50 nanoseconds.

2) The clock time on a moderately fast (2.5 GHz) CPU is 0.4 nanoseconds,

125 times faster than the DRAM.

The problem that arises from this speed mismatch is often called the “Von Neumann Bottleneck” – memory cannot supply the CPU at a sufficient data rate. Fortunately there have been a number of developments that have alleviated this problem. We have already discussed the idea of cache memory, in which a large memory with a 50 nanosecond access time can be coupled with a small memory with a 10 nanosecond access time and function as if it were a large memory with a 10.4 nanosecond access time. We always want faster.

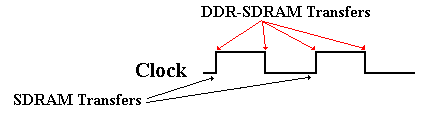

The next development that we shall discuss is synchronous dynamic access memory, SDRAM for short. Although we have not mentioned it, earlier memory was asynchronous, in that the memory speed was not related to any external speed. In SDRAM, the memory is synchronized to the system bus and can deliver data at the bus speed. The earlier SDRAM chips could deliver one data item for every clock pulse; later designs called DDR SDRAM (for Double Data Rate SDRAM) can deliver two data items per clock pulse.

Modern computer designs, in an effort to avoid the Von Neumann bottleneck, use several tricks, including multi-level caches and DDR SDRAM main memory.

As an example, we quote from the Dell Dimension 4700 advertisement of July 21, 2004. The chip has two 16 KB L1 caches (an Instruction Cache and a Data Cache), 1MB L2 cache, and 512 MB of DDR SDRAM that operates with a 400 MHz bus (800 M transfers/sec) with 64 data lines – 8 bytes at a time, for a data rate of 6.4 GB/sec.

The main difference between level 1 (L1) cache and level 2 (L2) cache is that the L1 cache is on the CPU chip, while the L2 cache generally is on a different chip. For example, the IBM S390/G5 chip has 256 KB of L1 cache on the CPU chip and 4MB of L2 cache. It is likely that the existence of two (or more) levels of cache, rather than one large L1 cache, is due to two aspects of area constraints: area on the CPU chip that can be allocated to the L1 cache and the fact that larger SRAM memories tend to be slower than the smaller ones.

L1 cache memory is uniformly implemented as SRAM (Static RAM), while L2 cache memory may be either SRAM or SDRAM.

The SDRAM chip uses a number of tricks to deliver data at an acceptable rate. As an example, let’s consider a modern SDRAM chip capable of supporting a DDR data bus. In order to appreciate the SDRAM chip, we must begin with simpler chips and work up.

We begin with noting an approach that actually imposes a performance hit – address multiplexing. Consider an NTE2164, a typical 64Kb chip. With 64K of addressable units, we would expect 16 address lines, as 64K = 216. In stead we find 8 address lines and two additional control lines

Row Address Strobe (Active Low)

Row Address Strobe (Active Low)

Column Address Strobe (Active Low)

Column Address Strobe (Active Low)

This is how it goes. We structure the memory as a 256-by-256 square array, remembering that 64K = 216 = 28 • 28 = 256 • 256. Two addresses — the row address and the column address – uniquely identify each object in the memory.

The 8-bit address is interpreted in the following fashion.

|

| Action |

0 | 0 | An error – this had better not happen. |

0 | 1 | It is a row address (say the high order 8-bits of the 16-bit address) |

1 | 0 | It is a column address (say the low order 8-bits of the 16-bit address) |

1 | 1 | It is ignored. |

Here is that way that the NTE2164 would be addressed.

1) Assert  = 0 and place the A15 to A8 on the 8-bit address bus.

= 0 and place the A15 to A8 on the 8-bit address bus.

2) Assert  = 0 and place A7 to A0 on the 8-bit address bus.

= 0 and place A7 to A0 on the 8-bit address bus.

For such a design, there are two design goals that are comparable.

1) The memory chip's pin count should be kept as low as possible. We have two options: 8 address pins, RAS, and CAS (10 pins) or 8 address pins, RAS, and CAS (10 pins).

There are 16 address pins in all, including an Address Valid pin (17 pins).

2) On the data bus, the number of address-related lines should be kept to a minimum.

The numbers are the same: 10 vs. 17.

We may now explore the next stage in memory speed-up with this design in mind. FPM–DRAM stands for Fast-Page Mode DRAM.

Quick-Page Mode Page mode in DRAM is an enhancement over traditional DRAM in which the row-address is fixed and data from several columns is read from the sense amplifiers. The information stored in the sense amps is organised into a "open page" that can be accessed easily. This reduces the time it takes to access the same row of the DRAM core.

The transition from FPM–DRAM to SDRAM logically entails making the DRAM interface synchronous to the data bus, with a clock signal propagated on that bus controlling it. The design problem now is figuring out how to make a memory chip that can respond quickly enough. The SDRAM core has the same fundamental design as a traditional DRAM. SDRAM sends data on one of the clock's edges, most commonly the leading edge.

Data at twice the normal rate By sending data at both edges of the clock, SDRAM (DDR–SDRAM) twice the bandwidth available from SDRAM.

Figure: DDR-SDRAM Transfers Twice as Fast

So far, we've used an SRAM memory as an L1 cache to reduce effective memory access time and Fast Page Mode DRAM to access a complete row from the DRAM chip quickly. The difficulty of making the DRAM chip faster continues to bother us. We'll have to speed up the chip a lot if we want to use it as a DDR-SDRAM.

The quantity of SRAM on a DRAM die is rising in modern DRAM designs. In most circumstances, a memory system will have at least 8KB of SRAM on each DRAM chip, allowing data to be transferred at SRAM speeds.

We now have two metrics to consider: delay and bandwidth.

The length of time it takes for the memory to produce the first element of a block is known as latency of data that is contiguous

The rate at which the memory can send data once the row address is known is known as bandwidth has been approved

Making the data bus "wider" – that is, able to transport more data bits at a time – can enhance memory bandwidth. The ideal size of the L2 cache turns out to be half the size of a cache line. So, what exactly is a cache line?

We must return to our explanation of cache memory in order to grasp the concept of a cache line. What happens if a memory read fails due to a cache miss? The byte that has been referenced must be recovered from main memory. By getting not just the required byte but also a number of neighbouring bytes, efficiency is increased.

Memory in the cache is grouped into cache lines. Assume we have an L2 cache with a 16-byte cache line size. 8-byte chunks of data would be transmitted into the L2 cache.

Let's say you need a byte with the address 0x124A and it's not in the L2 cache. The 16 bytes with addresses spanning from 0x1240 to 0x124F would be placed into a cache line in the L2 cache. This might be accomplished in two 8-byte transfers.