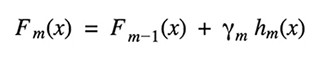

Gradient Boosting

Gradient Tree Boosting is a generalization of boosting to arbitrary differentiable loss functions. It can be used for both regression and classification problems. Gradient boosting builds its model sequentially, one stage at a time.

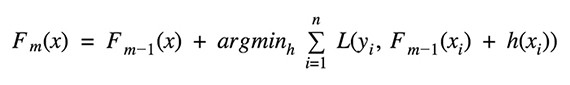

Given the current model $F_{m-1}(x)$, the decision tree $h_m(x)$ is chosen at each stage to minimize a loss function $L$.
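
One common way to write this stage-wise step is given below. The training pairs $(x_i, y_i)$ for $i = 1, \dots, n$ and the candidate tree $h$ are notation assumed here for illustration; they do not appear in the original text.

$$
F_m(x) = F_{m-1}(x) + h_m(x), \qquad
h_m = \arg\min_{h} \sum_{i=1}^{n} L\bigl(y_i,\; F_{m-1}(x_i) + h(x_i)\bigr)
$$

Each new tree is therefore fitted to whatever error the current model still makes, as measured by $L$.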

The loss function used depends on the task: regression and classification use different losses.
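
To make the sequential construction concrete, here is a minimal pure-Python sketch of gradient boosting for regression. It assumes squared-error loss (so the negative gradient is just the residual), one-dimensional inputs, and one-split "stumps" as the trees. The names `fit_stump`, `gradient_boost`, and `mse`, and the `learning_rate` shrinkage factor, are illustrative choices and are not from the original text.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression stump to 1-D inputs by least squares."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    if best is None:
        # All inputs are identical: fall back to a constant prediction.
        mean = sum(residuals) / len(residuals)
        return lambda x: mean
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_stages=50, learning_rate=0.1):
    """Build an additive model stage by stage under squared-error loss."""
    f0 = sum(ys) / len(ys)          # initial constant model F_0
    stumps = []
    preds = [f0] * len(xs)
    for _ in range(n_stages):
        # For squared error, the negative gradient is the residual y - F(x).
        residuals = [y - p for y, p in zip(ys, preds)]
        h = fit_stump(xs, residuals)
        stumps.append(h)
        preds = [p + learning_rate * h(x) for p, x in zip(preds, xs)]

    def predict(x):
        return f0 + learning_rate * sum(h(x) for h in stumps)

    return predict


def mse(model, xs, ys):
    """Mean squared error of a model on the given data."""
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [float(i) for i in range(10)]
ys = [x * x for x in xs]
model = gradient_boost(xs, ys)
print("training MSE:", mse(model, xs, ys))
```

For classification the same loop applies, but the residual is replaced by the negative gradient of a classification loss such as log loss.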

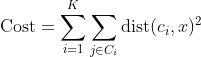

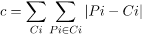

K-Means as an optimization problem

Most learning algorithms aim to minimize a cost function. Here we see how using the centroid as the cluster prototype minimizes a cost function called the "sum of squared error".

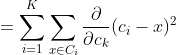

The sum of squared error (SSE) is the sum, over all points, of the squared distance between each point and its cluster prototype.
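
As a small illustration of this definition, the sketch below computes the SSE for a given assignment of points to clusters. The function names and the toy data are hypothetical, and Euclidean distance is assumed.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def sum_of_squared_error(points, labels, prototypes):
    """Sum of squared distances from each point to its cluster prototype.

    labels[i] is the index into prototypes of the cluster owning points[i].
    """
    return sum(squared_distance(p, prototypes[k])
               for p, k in zip(points, labels))


points = [(0, 0), (2, 0), (10, 10), (10, 12)]
labels = [0, 0, 1, 1]
centroids = [(1, 0), (10, 11)]      # mean of each cluster's points
print(sum_of_squared_error(points, labels, centroids))
```

Replacing a centroid with any other prototype for the same cluster, for example `(0, 0)` instead of `(1, 0)`, gives a larger SSE.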

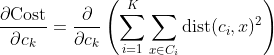

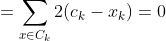

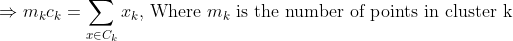

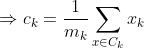

Taking the partial derivative of the cost function with respect to a cluster prototype and setting it to 0 shows that the minimizing prototype is the centroid (mean) of the points in that cluster.
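
The derivation can be sketched as follows. The symbols are assumptions made here for illustration: $K$ clusters, $C_k$ for the set of points assigned to cluster $k$, and $c_k$ for its prototype, with Euclidean distance.

$$
\mathrm{SSE} = \sum_{k=1}^{K} \sum_{x \in C_k} \lVert x - c_k \rVert^2
$$

$$
\frac{\partial\, \mathrm{SSE}}{\partial c_k}
= -2 \sum_{x \in C_k} (x - c_k) = 0
\quad\Longrightarrow\quad
c_k = \frac{1}{\lvert C_k \rvert} \sum_{x \in C_k} x
$$

Only the terms of cluster $k$ depend on $c_k$, which is why the other clusters drop out of the derivative.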
