Unit – 5

Memory organization

Q1) Explain memory interleaving?

A1) Memory Interleaving

Abstraction is one of the most important aspects of computing. It is a widely implemented Practice in the Computational field.

Memory Interleaving is less or More an Abstraction technique. Though it's a bit different from Abstraction. It is a Technique that divides memory into several modules such that Successive words in the address space are placed in the Different module.

Consecutive Word in a Module:

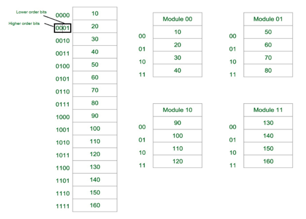

Fig-1: Consecutive Word in a Module

Let us assume 16 Data’s to be Transferred to the Four Module. Where Module 00 be Module 1, Module 01 be Module 2, Module 10 be Module 3 & Module 11 be Module 4. Also, 10, 20, 30….130 are the data to be transferred.

From the figure above in Module 1, 10 [Data] is transferred then 20, 30 & finally, 40 which are the Data. That means the data are added consecutively in the Module till its max capacity.

The most significant bit (MSB) provides the Address of the Module & the least significant bit (LSB) provides the address of the data in the module.

For Example, to get 90 (Data) 1000 will be provided by the processor. This 10 will indicate that the data is in module 10 (module 3) & 00 is the address of 90 in Module 10 (module 3). So,

Module 1 Contains Data: 10, 20, 30, 40

Module 2 Contains Data: 50, 60, 70, 80

Module 3 Contains Data: 90, 100, 110, 120

Module 4 Contains Data: 130, 140, 150, 160

Consecutive Word in Consecutive Module:

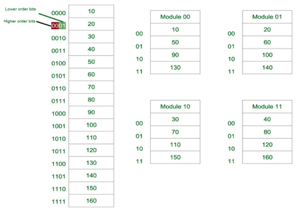

Fig-2: Consecutive Word in Consecutive Module

Now again we assume 16 Data’s to be transferred to the Four Module. But now the consecutive Data are added in the Consecutive Module. That is, 10 [Data] is added in Module 1, 20 [Data] in Module 2 and so on.

The Least Significant Bit (LSB) provides the Address of the Module & the Most significant bit (MSB) provides the address of the data in the module.

For Example, to get 90 (Data) 1000 will be provided by the processor. This 00 will indicate that the data is in module 00 (module 1) & 10 is the address of 90 in Module 00 (module 1). That is,

Module 1 Contains Data: 10, 50, 90, 130

Module 2 Contains Data: 20, 60, 100, 140

Module 3 Contains Data: 30, 70, 110, 150

Module 4 Contains Data: 40, 80, 120, 160

Q2) Why we use Memory Interleaving [Advantages]?

A2) Use of memory interleaving [Advantages]

Whenever Processor requests Data from the main memory. A block (chunk) of Data is Transferred to the cache and then to Processor. So, whenever a cache miss occurs the Data is to be fetched from the main memory. But main memory is relatively slower than the cache. So, to improve the access time of the main memory interleaving is used.

We can access all four Modules at the same time thus achieving Parallelism. From Figure 2 the data can be acquired from the Module using the Higher bits. This method Uses memory effectively.

Q3) Write the Types of Memory Interleaving?

A3) Types of Memory Interleaving

Memory Interleaving is an abstraction technique that divides memory into some modules such that successive words in the address space are placed in the different modules.

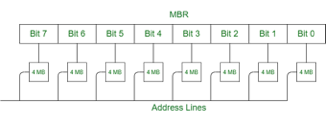

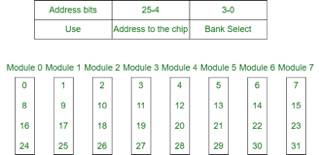

Suppose a 64 MB memory made up of the 4 MB chips as shown in the below:

Fig 3 – Example

We organize the memory into 4 MB banks, each having eight of the 4 MB chips. The memory thus has 16 banks, each of 4 MB.

64 MB memory = 2^26, so 26 bits are used for addressing.

16 = 2^4, so 4 bits of the address select the bank, and 4 MB = 2^22, so 22 bits of the address to each chip.

In general, an N-bit address, with N = L + M, is broken into two parts:

When one of the memory banks is active, the other (2L – 1) are inactive. All banks receive the M-bit address, but the inactive ones do not respond to it.

Classification of Memory Interleaving:

Memory interleaving is classified into two types:

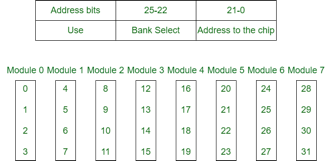

1. High Order Interleaving –

In high-order interleaving, the most significant bits of the address select the memory chip. The least significant bits are sent as addresses to each chip. One problem is that consecutive addresses tend to be in the same chip. The maximum rate of data transfer is limited by the memory cycle time.

It is also known as Memory Banking.

Fig 4 – Memory banking

2. Low Order Interleaving –

In low-order interleaving, the least significant bits select the memory bank (module). In this, consecutive memory addresses are in different memory modules. This allows memory access at much faster rates than allowed by the cycle time.

Fig 5 – low order interleaving memory bank

Q4) Explain Concept of hierarchical memory organization?

A4) Concept of hierarchical memory organization

A memory unit is an essential component in any digital computer since it is needed for storing programs and data.

Typically, a memory unit can be classified into two categories:

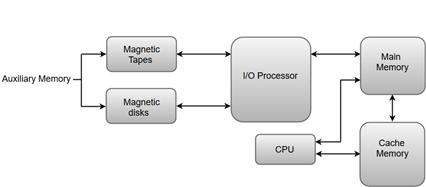

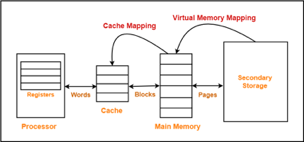

Apart from the basic classifications of a memory unit, the memory hierarchy consists of all of the storage devices available in a computer system ranging from the slow but high-capacity auxiliary memory to relatively faster main memory.

The following image illustrates the components in a typical memory hierarchy.

Fig 6 – Memory hierarchy in a computer system

Auxiliary Memory

Auxiliary memory is known as the lowest-cost, highest-capacity, and slowest-access storage in a computer system. Auxiliary memory provides storage for programs and data that are kept for long-term storage or when not in immediate use. The most common examples of auxiliary memories are magnetic tapes and magnetic disks.

A magnetic disk is a digital computer memory that uses a magnetization process to write, rewrite and access data. For example, hard drives, zip disks, and floppy disks.

Magnetic tape is a storage medium that allows for data archiving, collection, and backup for different kinds of data.

Main Memory

The main memory in a computer system is often referred to as Random Access Memory (RAM). This memory unit communicates directly with the CPU and with auxiliary memory devices through an I/O processor.

The programs that are not currently required in the main memory are transferred into auxiliary memory to provide space for currently used programs and data.

I/O Processor

The primary function of an I/O Processor is to manage the data transfers between auxiliary memories and the main memory.

Cache Memory

The data or contents of the main memory that are used frequently by the CPU are stored in the cache memory so that the processor can easily access that data in a shorter time. Whenever the CPU requires accessing memory, it first checks the required data into the cache memory. If the data is found in the cache memory, it is read from the fast memory. Otherwise, the CPU moves onto the main memory for the required data.

Q5) Describe cache memory?

A5) Cache Memory

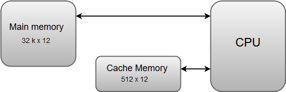

The data or contents of the main memory that are used frequently by the CPU are stored in the cache memory so that the processor can easily access that data in a shorter time. Whenever the CPU needs to access memory, it first checks the cache memory. If the data is not found in the cache memory, then the CPU moves into the main memory.

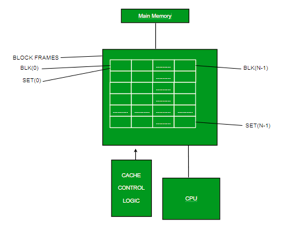

Cache memory is placed between the CPU and the main memory. The block diagram for a cache memory can be represented as:

Fig 7 – Cache memory

The cache is the fastest component in the memory hierarchy and approaches the speed of CPU components.

The basic operation of cache memory is as follows:

● When the CPU needs to access memory, the cache is examined. If the word is found in the cache, it is read from the fast memory.

● If the word addressed by the CPU is not found in the cache, the main memory is accessed to read the word.

● A block of words one just accessed is then transferred from main memory to cache memory. The block size may vary from one word (the one just accessed) to about 16 words adjacent to the one just accessed.

● The performance of the cache memory is frequently measured in terms of a quantity called hit ratio.

● When the CPU refers to memory and finds the word in the cache, it is said to produce a hit.

● If the word is not found in the cache, it is in the main memory and it counts as a miss.

● The ratio of the number of hits divided by the total CPU references to memory (hits plus misses) is the hit ratio.

Cache Memory is a special very high-speed memory. It is used to speed up and synchronizing with a high-speed CPU. Cache memory is costlier than main memory or disk memory but economical than CPU registers. Cache memory is an extremely fast memory type that acts as a buffer between RAM and the CPU. It holds frequently requested data and instructions so that they are immediately available to the CPU when needed.

Cache memory is used to reduce the average time to access data from the Main memory. The cache is a smaller and faster memory that stores copies of the data from frequently used main memory locations. There are various independent caches in a CPU, which store instructions and data.

Fig 8 - Memory

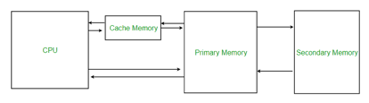

Levels of memory:

● Level 1 or Register –

It is a type of memory in which data is stored and accepted that are immediately stored in the CPU. The most commonly used register is accumulator, Program counter, address register, etc.

● Level 2 or Cache memory –

It is the fastest memory which has faster access time where data is temporarily stored for faster access.

● Level 3 or Main Memory –

It is the memory on which the computer works currently. It is small in size and once power is off data no longer stays in this memory.

● Level 4 or Secondary Memory –

It is external memory that is not as fast as main memory but data stays permanently in this memory.

Q6) Write the application of cache memory?

A6) Application of cache memory

Q7) Describe locality of references?

A7) Locality of References

Since size of cache memory is less as compared to main memory. So, to check which part of main memory should be given priority and loaded in cache is decided based on locality of reference.

Types of Locality of reference

This says that there is a chance that the element will be present in the proximity to the reference point and next time if again searched then more proximity to the point of reference.

2. Temporal Locality of reference

This Least recently used algorithm will be used. Whenever there is page fault occurs within a word will not only load word in the main memory but complete page fault will be loaded because the spatial locality of reference rule says that if you are referring to any word next word will be referred to in its register that’s why we load complete page table so the complete block will be loaded.

Q8) What are the types of cache?

A8) Types of Cache

A primary cache is always located on the processor chip. This cache is small and its access time is comparable to that of processor registers.

2. Secondary Cache –

Secondary cache is placed between the primary cache and the rest of the memory. It is referred to as the level 2 (L2) cache. Often, the Level 2 cache is also housed on the processor chip.

Q9) What do you mean by cache performance?

A9) Cache performance

When the processor needs to read or write a location in the main memory, it first checks for a corresponding entry in the cache.

● If the processor finds that the memory location is in the cache, a cache hit has occurred and data is read from the cache

● If the processor does not find the memory location in the cache, a cache miss has occurred. For a cache miss, the cache allocates a new entry and copies in data from the main memory, then the request is fulfilled from the contents of the cache.

The performance of cache memory is frequently measured in terms of a quantity called the Hit ratio.

Hit ratio = hit / (hit + miss) = no. of hits/total accesses

We can improve Cache performance using higher cache block size, higher associativity, reduce miss rate, reduce miss penalty, and reduce the time to hit in the cache.

Q10) Explain cache mapping?

A10) Cache Mapping:

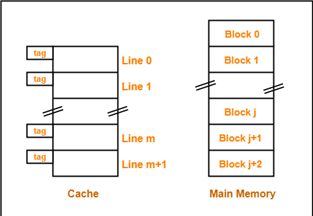

There are three different types of mapping used for cache memory which are as follows: Direct mapping, Associative mapping, and Set-Associative mapping. These are explained below.

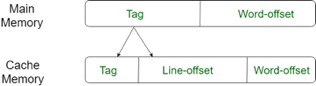

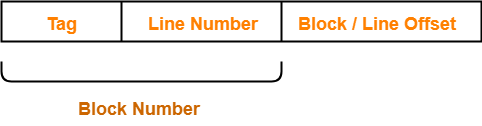

The simplest technique, known as direct mapping, maps each block of main memory into only one possible cache line. or

In Direct mapping, assign each memory block to a specific line in the cache. If a line is previously taken up by a memory block when a new block needs to be loaded, the old block is trashed. An address space is split into two parts index field and a tag field. The cache is used to store the tag field whereas the rest is stored in the main memory. Direct mappings performance is directly proportional to the Hit ratio.

m=number of lines in the cache

For purposes of cache access, each main memory address can be viewed as consisting of three fields. The least significant w bits identify a unique word or byte within a block of main memory. In most contemporary machines, the address is at the byte level. The remaining s bits specify one of the 2s blocks of main memory. The cache logic interprets these s bits as a tag of s-r bits (most significant portion) and a line field of r bits. This latter field identifies one of the m=2r lines of the cache.

Fig 9 – Direct mapping

2. Associative Mapping –

In this type of mapping, the associative memory is used to store content and addresses of the memory word. Any block can go into any line of the cache. This means that the word id bits are used to identify which word in the block is needed, but the tag becomes all of the remaining bits. This enables the placement of any word at any place in the cache memory. It is considered to be the fastest and the most flexible mapping form.

Fig 10 – Associative mapping

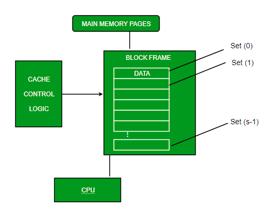

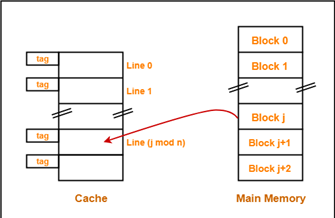

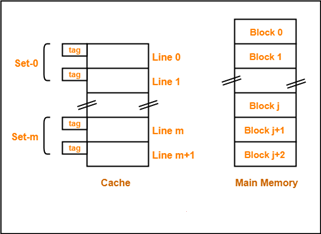

3. Set-associative Mapping –

This form of mapping is an enhanced form of direct mapping where the drawbacks of direct mapping are removed. Set associative addresses the problem of possible thrashing in the direct mapping method. It does this by saying that instead of having exactly one line that a block can map to in the cache, we will group a few lines creating a set. Then a block in memory can map to any one of the lines of a specific set. Set-associative mapping allows that each word that is present in the cache can have two or more words in the main memory for the same index address. Set associative cache mapping combines the best of direct and associative cache mapping techniques.

In this case, the cache consists of several sets, each of which consists of several lines. The relationships are

m = v * k

i= j mod v

where

i=cache set number

j=main memory block number

v=number of sets

m=number of lines in the cache number of sets

k=number of lines in each set

Fig 11 – Set associative mapping

Q11) What do you mean by mapping function?

A11) Mapping Function

When a cache hit occurs,

● The required word is present in the cache memory.

● The required word is delivered to the CPU from the cache memory.

When a cache miss occurs,

● The required word is not present in the cache memory.

● The page containing the required word has to be mapped from the main memory.

● This mapping is performed using cache mapping techniques.

In this article, we will discuss different cache mapping techniques.

Cache Mapping-

● Cache mapping defines how a block from the main memory is mapped to the cache memory in case of a cache miss.

OR

● Cache mapping is a technique by which the contents of the main memory are brought into the cache memory.

The following diagram illustrates the mapping process-

Fig 12 – Mapping process

Now, before proceeding further, it is important to note the following points-

Cache Mapping Techniques-

Cache mapping is performed using the following three different techniques-

1. Direct Mapping-

In direct mapping,

● A particular block of main memory can map only to a particular line of the cache.

● The line number of caches to which a particular block can map is given by-

Cache line number

= (Main Memory Block Address) Modulo (Number of lines in Cache)

Example-

● Consider cache memory is divided into ‘n’ number of lines.

● Then, block ‘j’ of main memory can map to line number (j mod n) only of the cache.

Fig 13 – Direct mapping

The need for a Replacement Algorithm-

In direct mapping,

● There is no need for any replacement algorithm.

● This is because the main memory block can map only to a particular line of the cache.

● Thus, the new incoming block will always replace the existing block (if any) in that particular line.

Division of Physical Address-

In direct mapping, the physical address is divided as-

Fig 14 – Division of Physical address in Direct Mapping

2. Fully Associative Mapping-

In fully associative mapping,

● A block of main memory can map to any line of the cache that is freely available at that moment.

● This makes fully associative mapping more flexible than direct mapping.

Example-

Consider the following scenario-

Fig 15 – Fully associative mapping

Here,

● All the lines of cache are freely available.

● Thus, any block of main memory can map to any line of the cache.

● Had all the cache lines been occupied, then one of the existing blocks will have to be replaced.

The need for a Replacement Algorithm-

In fully associative mapping,

● A replacement algorithm is required.

● The replacement algorithm suggests the block be replaced if all the cache lines are occupied.

● Thus, replacement algorithm like FCFS Algorithm, LRU Algorithm, etc. is employed.

Division of Physical Address-

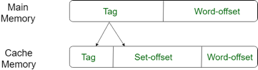

In fully associative mapping, the physical address is divided as-

Fig 16 – Physical address

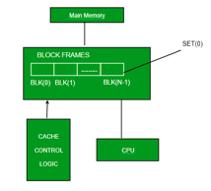

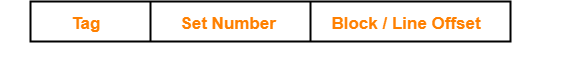

3. K-way Set Associative Mapping-

In k-way set associative mapping,

● Cache lines are grouped into sets where each set contains a k number of lines.

● A particular block of main memory can map to only one particular set of the cache.

● However, within that set, the memory block can map any freely available cache line.

● The set of the cache to which a particular block of the main memory can map is given by-

Cache set number

= (Main Memory Block Address) Modulo (Number of sets in Cache)

Example-

Consider the following example of 2-way set associative mapping-

Fig 17 – 2-way set associative mapping

Here,

● k = 2 suggests that each set contains two cache lines.

● Since cache contains 6 lines, so number of sets in the cache = 6 / 2 = 3 sets.

● Block ‘j’ of main memory can map to set number (j mod 3) only of the cache.

● Within that set, block ‘j’ can map to any cache line that is freely available at that moment.

● If all the cache lines are occupied, then one of the existing blocks will have to be replaced.

The need for a Replacement Algorithm-

● Set associative mapping is a combination of direct mapping and fully associative mapping.

● It uses fully associative mapping within each set.

● Thus, set-associative mapping requires a replacement algorithm.

Division of Physical Address-

In set associative mapping, the physical address is divided as-

Fig 18 – Physical address

Special Cases-

● If k = 1, then k-way set associative mapping becomes direct mapping i.e.

1-way Set Associative Mapping ≡ Direct Mapping

● If k = Total number of lines in the cache, then k-way set associative mapping becomes fully associative mapping.

Q12) What is replacement algorithm?

A12) Replacement Algorithm

In an operating system that uses paging for memory management, a page replacement algorithm is needed to decide which page needs to be replaced when a new page comes in.

Page Fault – A page fault happens when a running program accesses a memory page that is mapped into the virtual address space but not loaded in physical memory.

Since actual physical memory is much smaller than virtual memory, page faults happen. In case of a page fault, Operating System might have to replace one of the existing pages with the newly needed page. Different page replacement algorithms suggest different ways to decide which page to replace. The target for all algorithms is to reduce the number of page faults.

Page Replacement Algorithms:

● FIFO

● Optimal page replacement

● Least recently used

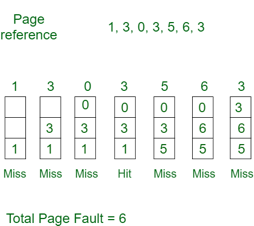

Q13) Describe FIFO?

A13) First In First Out

This is the simplest page replacement algorithm. In this algorithm, the operating system keeps track of all pages in the memory in a queue, the oldest page is in the front of the queue. When a page needs to be replaced page in the front of the queue is selected for removal.

Example-1Consider page reference string 1, 3, 0, 3, 5, 6 with 3-page frames. Find several page faults.

Initially, all slots are empty, so when 1, 3, 0 came they are allocated to the empty slots —> 3 Page Faults.

when 3 comes, it is already in memory so —> 0 Page Faults.

Then 5 comes, it is not available in memory so it replaces the oldest page slot i.e., 1. —>1 Page Fault.

6 comes, it is also not available in memory so it replaces the oldest page slot i.e., 3 —>1 Page Fault.

Finally, when 3 come it is not available so it replaces 0 1 page fault

Belady’s anomaly – Belady’s anomaly proves that it is possible to have more page faults when increasing the number of page frames while using the First in First Out (FIFO) page replacement algorithm. For example, if we consider reference string 3, 2, 1, 0, 3, 2, 4, 3, 2, 1, 0, 4, and 3 slots, we get 9 total page faults, but if we increase slots to 4, we get 10-page faults.

Q14) What is optimal page replacement?

A14) Optimal Page Replacement

In this algorithm, pages are replaced which would not be used for the longest duration of time in the future.

Example-2: Consider the page references 7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, with 4-page frame. Find number of page fault.

Initially, all slots are empty, so when 7 0 1 2 are allocated to the empty slots —> 4 Page faults

0 is already there so —> 0 Page fault.

when 3 came it will take the place of 7 because it is not used for the longest duration of time in the future. —>1 Page fault.

0 is already there so —> 0 Page fault.

4 will takes place of 1 —> 1 Page Fault.

Now for the further page reference string —> 0 Page fault because they are already available in the memory.

Optimal page replacement is perfect, but not possible in practice as the operating system cannot know future requests. The use of Optimal Page replacement is to set up a benchmark so that other replacement algorithms can be analyzed against it.

Q15) Define leased recently used?

A15) Leased Recently Used

In this algorithm, the page will be replaced which is the least recently used.

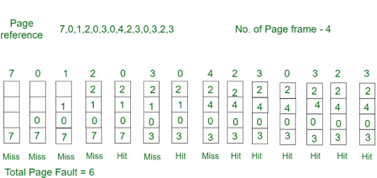

Example-3Consider the page reference string 7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2 with 4-page frames. Find number of page faults.

Initially, all slots are empty, so when 7 0 1 2 are allocated to the empty slots —> 4 Page faults

0 is already there so —> 0 Page fault.

when 3 came it will take the place of 7 because it is least recently used —>1 Page fault

0 is already in memory so —> 0 Page fault.

4 will takes place of 1 —> 1 Page Fault

Now for the further page reference string —> 0 Page fault because they are already available in the memory.

Q16) What do you mean by write policies?

A16) Write Policies

Normally we can perform both read operation and write operation on the cache memory.

Now when we perform a read operation on cache memory then it does not change the content of the memory. But as it is obvious that any write operation performed on the cache changes the content of the memory. Thus, it is important to perform any write on cache very carefully.

To make sure write operation is performed carefully, we can adopt two cache write methods:

Let's learn about the above two cache write methods in computer architecture.

Write-through policy

The write-through policy is the most commonly used methods of writing into the cache memory.

In the write-through method when the cache memory is updated simultaneously the main memory is also updated. Thus, at any given time, the main memory contains the same data which is available in the cache memory.

It is to be noted that, the write-through technique is a slow process as every time it needs to access the main memory.

Write-back policy

The write-back policy can also be used for cache writing.

During a write operation, only the cache location is updated while following the write-back method. When an update in cache occurs then the updated location is marked by a flag. The flag is known as modified or dirty bit.

When the word is replaced from the cache, it is written into the main memory if its flag bit is set. The logic behind this technique is based on the fact that during a cache write operation, the word present in the cache may be accessed several times (temporal locality of reference). This method helps reduce the number of references to the main memory.

The only limitation to the write-back technique is that inconsistency may occur while adopting this technique due to two different copies of the same data, one in the cache and the other in main memory.