Unit - 3

Memory Management Strategies

Q1) What do you mean by memory management strategy?

A1) Memory management is an operating system feature that handles or manages primary memory and moves processes between main memory and disk during execution. Memory management keeps track of every memory location, regardless of whether it is used by a process or is free. It determines how much memory should be assigned to each process. It determines which processes will be given memory and when. It keeps track of when memory is freed or unallocated and updates the state accordingly.

Address Space

The set of logical addresses that a process refers in its code is referred to as the process address space. When 32-bit addressing is used, for example, addresses can range from 0 to 0x7fffffff; that is, 2^31 possible numbers, for a total theoretical size of 2 gigabytes.

When memory is allocated to a program, the operating system takes care of translating logical addresses to physical addresses. Before and after memory is allocated, a program uses three sorts of addresses -

1. Symbolic addresses - In source code, the addresses are used. The symbolic address space's core elements are variable names, constants, and instruction labels.

2. Relative addresses - A compiler turns symbolic addresses into relative addresses during compilation.

3. Physical addresses - When a program is loaded into main memory, the loader creates these addresses.

In compile-time and load-time address binding techniques, virtual and physical addresses are the same. The execution-time address-binding technique differs between virtual and physical addresses.

A logical address space is the collection of all logical addresses generated by a program. A physical address space is the collection of all physical addresses that correspond to these logical addresses.

The memory management unit (MMU), which is a hardware component, performs the runtime mapping from virtual to physical addresses.

Static Vs Dynamic Loading

The choice between static and dynamic loading must be determined throughout the development of the computer software. If you must load your program statically, the entire program will be compiled and linked at the moment of compilation, leaving no external program or module dependencies. The linker creates an absolute program that includes logical addresses by combining the object program with other appropriate object modules.

If you're building a dynamically loaded program, your compiler will compile it and only supply references to the modules you wish to include dynamically. The rest of the work will be done when the program is executed.

With static loading, the absolute program (and data) is loaded into memory at the time of loading, allowing execution to begin.

If you use dynamic loading, the library's dynamic functions are stored on a disk in relocatable form and loaded into memory only when the program requires them.

Static Vs Dynamic Linking

As previously stated, static linking avoids runtime dependencies by combining all other modules required by a program into a single executable application.

It is not necessary to link the actual module or library with the program when dynamic linking is utilized; instead, a reference to the dynamic module is provided at the time of compilation and linking. Dynamic libraries include Shared Objects in Unix and Dynamic Link Libraries (DLL) in Windows.

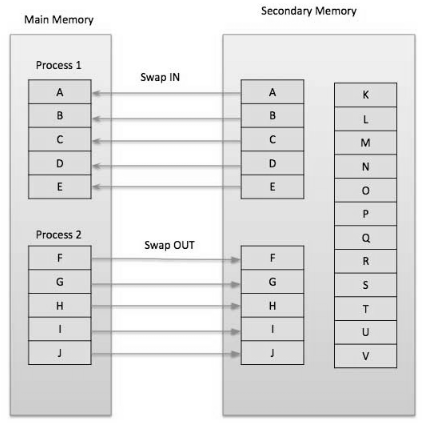

Q2) Explain Swapping?

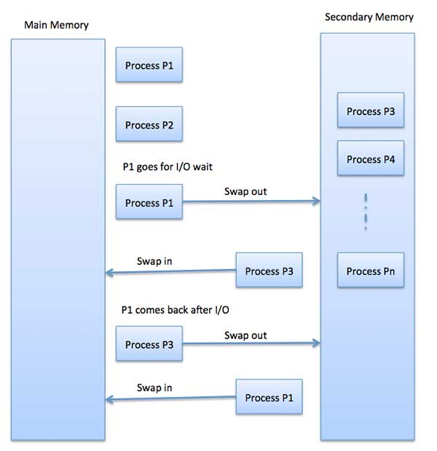

A2) Swapping is a mechanism for temporarily moving a process from main memory to secondary storage (disk) and making that memory available to other processes. The system switches the process from secondary storage to main memory at a later time.

Though the swapping process degrades performance, it aids in the concurrent execution of various and large processes, which is why it's also known as a memory compression approach.

Fig 1: Swapping

The time it takes to relocate the entire process to a secondary disk and then copy it back to memory, as well as the time it takes to reclaim main memory, is included in the total time taken by the swapping operation.

Assume that the user process is 2048KB in size and that the data transmission rate on the standard hard disk where the swapping would take place is roughly 1 MB per second. The 1000K procedure will take some time to move to or from memory.

2048 KB / 1024KB per second

= 2 seconds

= 2000 milliseconds

In terms of in and out time, it will take a total of 4000 milliseconds, plus additional overhead while the process fights for main memory.

Memory allocation

There are normally two partitions in main memory.

● Low Memory − Operating system resides in this memory.

● High Memory − User processes are held in high memory.

Operating system uses the following memory allocation mechanism

1. Single - partition allocation - The relocation-register strategy is employed in this type of allocation to protect user processes from each other as well as from changing operating-system code and data. The value of the shortest physical address is stored in the relocation register, whereas the range of logical addresses is stored in the limit register. The limit register must be less than each logical address.

2. Multiple - partition allocation - This method of allocation divides main memory into a number of fixed-size divisions, each of which should only contain one process. When a partition becomes available, a process is chosen from the input queue and loaded into it. The partition becomes available for another process when the process finishes.

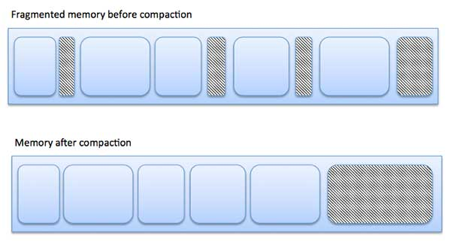

Q3) Write short notes on Fragmentation?

A3) The free memory space is divided up into little bits as processes are loaded and withdrawn from memory. Sometimes, due to their small size, processes are unable to be assigned to memory blocks, and memory blocks stay unused. Fragmentation is the term for this issue.

There are two forms of fragmentation.

1. External fragmentation - Although total memory space is sufficient to satisfy a request or to house a process, it is not contiguous and hence cannot be utilized.

2. Internal fragmentation - The memory block allotted to the process is larger. Because it cannot be used by another process, some memory is left unused.

Fig 2: Fragmentation

The picture below illustrates how fragmentation can lead to memory waste and how a compaction technique can be used to extract more free memory from fragmented memory.

Compaction or shuffle memory contents to place all free memory in one large block can reduce external fragmentation. Relocation must be dynamic in order for compaction to be possible.

Internal fragmentation can be minimized by designating the smallest partition that is yet large enough for the process.

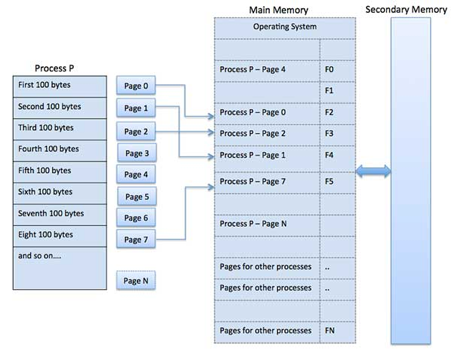

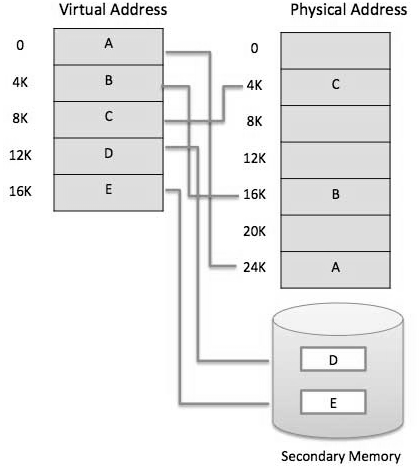

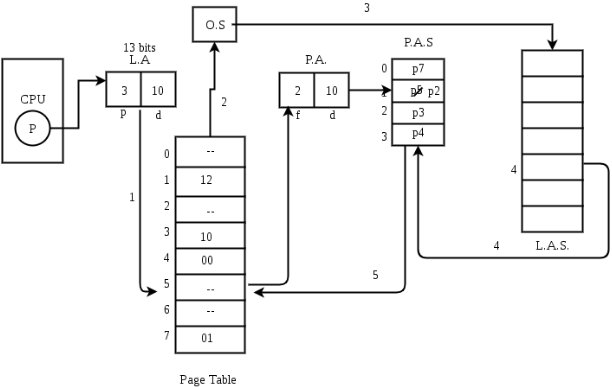

Q4) What is Paging?

A4) More memory can be addressed by a computer than is physically installed on the machine. This additional memory is known as virtual memory, and it is a part of a hard drive configured to simulate the computer's RAM. In order to implement virtual memory, the paging approach is crucial.

Paging is a memory management approach in which the process address space is divided into uniformly sized pieces known as pages (size is power of 2, between 512 bytes and 8192 bytes). The number of pages in the procedure is used to determine its size.

Similarly, main memory is partitioned into small fixed-size blocks of (physical) memory called frames, with the size of a frame being the same as that of a page in order to maximize main memory utilization and avoid external fragmentation.

Fig 3: Paging

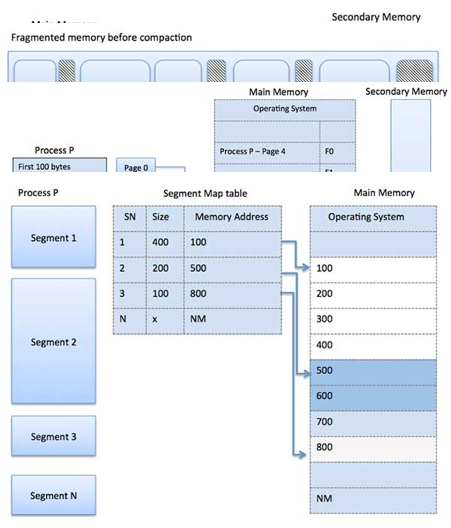

Q5) Explain Segmentation?

A5) Segmentation is a memory management strategy in which each job is broken into numerous smaller segments, one for each module, each of which contains parts that execute related functions. Each segment corresponds to a different program's logical address space.

When a process is ready to run, its associated segmentation is loaded into non-contiguous memory, though each segment is placed into a contiguous block of available memory.

Memory segmentation is similar to paging, except that segment are changeable in length, whereas paging pages are fixed in size.

The program's main function, utility functions, data structures, and so on are all contained within a program segment. For each process, the operating system keeps a segment map table and a list of free memory blocks, together with segment numbers, sizes, and memory addresses in main memory. The table stores the segment's starting address as well as its length for each segment. A value that identifies a segment and an offset are included in a reference to a memory location.

Fig 4: Segmentation

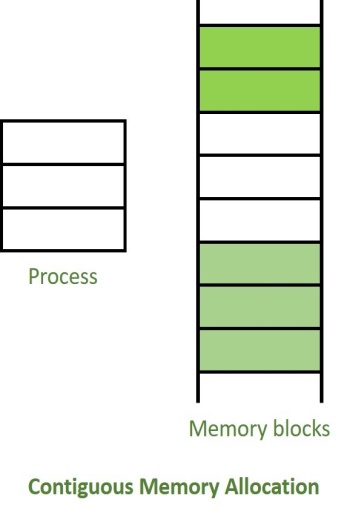

Q6) Describe contiguous and non - contiguous memory allocation?

A6) Contiguous memory allocation is basically a method in which a single contiguous section/part of memory is allocated to a process or file needing it. Because of this all the available memory space resides at the same place together, which means that the freely/unused available memory partitions are not distributed in a random fashion here and there across the whole memory space.

Fig 5: Contiguous memory allocation

The main memory is a combination of two main portions- one for the operating system and other for the user program. We can implement/achieve contiguous memory allocation by dividing the memory partitions into fixed size partitions.

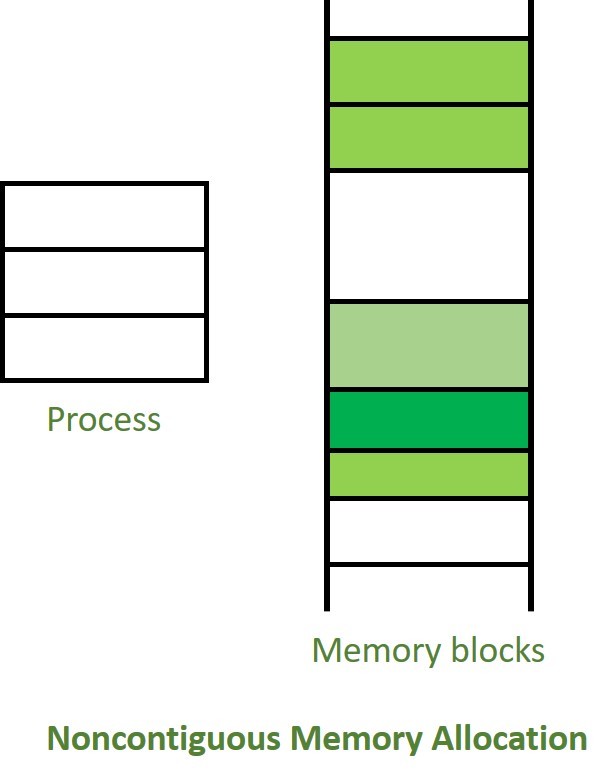

Non-Contiguous Memory Allocation:

Non-Contiguous memory allocation is basically a method on the contrary to contiguous allocation method, allocates the memory space present in different locations to the process as per its requirements. As all the available memory space is in a distributed pattern so the freely available memory space is also scattered here and there.

This technique of memory allocation helps to reduce the wastage of memory, which eventually gives rise to Internal and external fragmentation.

Fig 6: Non-contiguous memory allocation

Q7) Write the difference between contiguous and non-contiguous memory allocation?

A7) Difference between Contiguous and Non-contiguous Memory Allocation:

S.NO. | Contiguous Memory Allocation | Non - Contiguous Memory Allocation |

1. | Contiguous memory allocation allocates consecutive blocks of memory to a file/process. | Non-Contiguous memory allocation allocates separate blocks of memory to a file/process. |

2. | Faster in Execution. | Slower in Execution. |

3. | It is easier for the OS to control. | It is difficult for the OS to control. |

4. | Overhead is minimum as not much address translations are there while executing a process. | More Overheads are there as there are more address translations. |

5. | Internal fragmentation occurs in Contiguous memory allocation method. | External fragmentation occurs in Non-Contiguous memory allocation method. |

6. | It includes single partition allocation and multi-partition allocation. | It includes paging and segmentation. |

7. | Wastage of memory is there. | No memory wastage is there. |

8. | In contiguous memory allocation, swapped-in processes are arranged in the originally allocated space. | In non-contiguous memory allocation, swapped-in processes can be arranged in any place in the memory. |

Q8) Define virtual memory management?

A8) Virtual memory is a memory management functionality of an operating system (OS) that utilizes hardware and software to enable a computer to make up for physical memory deficiencies by incidentally moving data from random access memory (RAM) to disk storage.

● Virtual address space is expanded utilizing active memory in RAM and inactive memory in hard disk drives (HDDs) to frame contiguous addresses that hold both the application and its data.

● Virtual Memory is a storage allocation plot in which secondary memory can be tended to just as it were a piece of main memory.

● The addresses a program may use to reference memory are recognized from the addresses the memory system uses to distinguish physical storage locales, and program created addresses are interpreted consequently to the relating machine addresses.

● The size of virtual storage is constrained by the addressing plan of the computer system and the measure of secondary memory is accessible not by the genuine number of the main storage locations.

● Virtual memory was created when physical memory - the installed RAM - was costly.

● Computers have a limited measure of RAM, so memory can run out, particularly when numerous programs keep running simultaneously.

● A system utilizing virtual memory utilizes a segment of the hard drive to copy RAM. With virtual memory, a system can stack bigger programs or various programs running simultaneously, enabling everyone to work as though it has vast memory and without obtaining more RAM.

● While duplicating virtual memory into physical memory, the OS partitions memory into page files or swap files with a fixed number of addresses. Each page is put away on a disk and when the page is required, the OS copies it from the disk to fundamental memory and makes an interpretation of the virtual addresses into real addresses.

● Current microprocessors expected for universally useful use, a memory management unit, or MMU, is incorporated with the hardware.

● The MMU's main responsibility is to interpret a virtual address into a physical address. A fundamental model is given underneath −

Fig 7: Memory address

● It is a procedure that is actualized utilizing both hardware and software. It maps memory addresses utilized by a program, called virtual addresses computers, into physical addresses in c memory.

- All memory references inside a process are logical addresses that are powerfully converted into physical addresses at run time. This implies a process can be swapped all through fundamental memory with the end goal that it involves better places in primary memory at various times throughout execution.

- A process might be broken into some pieces and these pieces need not be constantly situated in the main memory during execution. The blend of dynamic run-time address interpretation and utilization of page or portion table allows this.

● On the off chance that these attributes are available, at that point, not every one of the pages or portions do not have to be available in the main memory during execution. This implies the required pages should be stacked into memory at whatever point is required.

● Virtual memory is designed utilizing Demand Paging or Demand Segmentation.

Q9) What is demand paging?

A9) Demand paging

● As per the idea of Virtual Memory, to execute some process, just a piece of the process should be available in the main memory which implies that a couple of pages may be available in the main memory whenever.

● The difficult task is to decide which pages should be kept in the main memory and which should be kept in the secondary memory because we can't state ahead of time that a process will require a specific page at a specific time.

● Subsequently, to beat this issue, there is an idea called Demand Paging is presented. It proposes keeping all pages of the frames in the secondary memory until they are required. That means, it says that don't stack any page in the main memory until it is required.

● Demand paging says that pages should possibly be brought into memory if the executing process requests them. This is frequently known as lazy evaluation as just those pages requested by the process are swapped from secondary storage to main memory.

Fig 8: Swapping memory

● On the other hand, in pure swapping, where all memory for a process is swapped from secondary storage to main memory during the process start execution.

● To implement this process needs a page table is utilized. The page table maps logical memory to physical memory.

● The page table uses a bitwise administrator to stamp if a page is valid or invalid. A valid page is one that is present in the main memory. An invalid page is one that is present in secondary memory.

● At the point when a process attempts to get to a page, the following steps are followed as shown in the figure below:

Fig 9: How to get a page

- If the CPU attempts to call a page that is as of now not accessible in the main memory, it creates an interrupt showing memory access fault.

- The OS places the interrupted process in a blocking state. For the execution to continue the OS must call the required page into the memory.

- The OS will scan for the required page in the logical address space.

- The required page will be brought from logical address space to physical address space. The page replacement algorithms are utilized for deciding which page is to be replaced with the new page in the physical address space.

- Updates will be made to the page table accordingly.

- The sign will be sent to the CPU to proceed with the program execution and it will put the process again into a ready state.

● Henceforth at whatever point a page fault happens these steps are performed by the operating system and the required page is brought into memory.

Q10) Write the advantages and disadvantages of demand paging?

A10) Advantages

● Demand paging, instead of loading all pages right away:

● Just loads pages that are requested by the executing process.

● As there is more space in the main memory, more processes can be stacked, decreasing the context switching time, which uses a lot of resources.

● Less loading latency happens at program startup, as less data is gotten from secondary storage and less data is brought into main memory.

● As main memory is costly contrasted with secondary memory, this method helps altogether decrease the bill of material (BOM) cost in advanced cells for instance. Symbian OS had this element.

Disadvantage

● Individual programs face additional latency when they access the page for the first time.

● Minimal effort, low-control implanted systems might not have a memory management unit that supports page replacement.

● Memory management with page replacement algorithms turns out to be somewhat increasingly intricate.

● Conceivable security dangers, including weakness to timing attacks.

● Thrashing may happen because of repeated page faults.

Q11) Write short notes on page replacement?

A11) The page replacement algorithm decides which memory page is to be replaced. The process of replacement is sometimes called swap out or write to disk. Page replacement is done when the requested page is not found in the main memory (page fault).

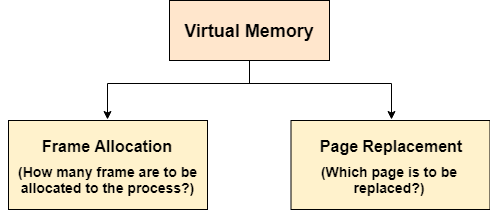

Fig 10: Virtual memory

There are two main aspects of virtual memory, Frame allocation and Page Replacement. It is very important to have the optimal frame allocation and page replacement algorithm. Frame allocation is all about how many frames are to be allocated to the process while the page replacement is all about determining the page number which needs to be replaced in order to make space for the requested page.

What If the algorithm is not optimal?

1. If the number of frames which are allocated to a process is not sufficient or accurate then there can be a problem of thrashing. Due to the lack of frames, most of the pages will be residing in the main memory and therefore more page faults will occur.

However, if OS allocates more frames to the process, then there can be internal fragmentation.

2. If the page replacement algorithm is not optimal then there will also be the problem of thrashing. If the number of pages that are replaced by the requested pages will be referred in the near future, then there will be more number of swap-in and swap-out and therefore the OS has to perform more replacements then usual which causes performance deficiency.

Therefore, the task of an optimal page replacement algorithm is to choose the page which can limit the thrashing.

When a process requests a page and that page is found in main memory, it is referred to as a page hit; otherwise, it is referred to as a page miss or a page fault.

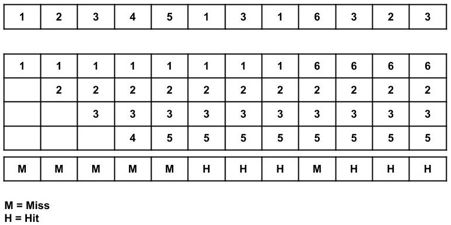

Q12) Explain optimal page replacement algorithm with example?

A12) This algorithm replaces the page which will not be referred for so long in future. Although it cannot be practically implementable but it can be used as a benchmark. Other algorithms are compared to this in terms of optimality.

Example of Optimal page replacement

Total page fault = 6

All four spaces are initially empty, therefore when 1, 2, 3, and 4 arrive, they are assigned to the empty spots in the order of their arrival. This is a page fault because the numbers 1, 2, 3, and 4 are not in memory.

When 5 arrives, it is not in memory, causing a page fault, and it substitutes 4, which will be utilized the most in the future among 1, 2, 3, and 4.

When 1,3,1 arrives, they are already in the memory, i.e., Page Hit, therefore there is no need to update them.

When 6 arrives, it is not found in memory, causing a page fault, and it takes the place of 1.

When 3, 2, 3 appears, it is already in the memory, i.e., Page Hit, thus there is no need to update it.

Page Fault ratio = 6/12

Q13) Describe the least recently used (LRU) replacement algorithm?

A13) This algorithm replaces the page which has not been referred for a long time. This algorithm is just opposite to the optimal page replacement algorithm. In this, we look at the past instead of staring at the future.

Example of LRU

Total page fault = 8

All four spaces are initially empty, therefore when 1, 2, 3, and 4 arrive, they are assigned to the empty spots in the order of their arrival. This is a page fault because the numbers 1, 2, 3, and 4 are not in memory.

Because 5 is not in memory when it arrives, a page fault occurs, and it replaces 1 as the least recently utilized page.

When 1 arrives, it is not in memory, therefore a page fault occurs, and it takes the place of 2.

When 3,1 arrives, it is already in the memory, i.e., Page Hit, therefore there is no need to update it.

When 6 arrives, it is not found in memory, causing a page fault, and it takes the place of 4.

When 3 arrives, it is already in the memory, i.e., Page Hit, therefore there is no need to update it.

When 2 arrives, it is not found in memory, causing a page fault, and it takes the place of 5.

When 3 arrives, it is already in the memory, i.e., Page Hit, therefore there is no need to update it.

Page Fault ratio = 8/12

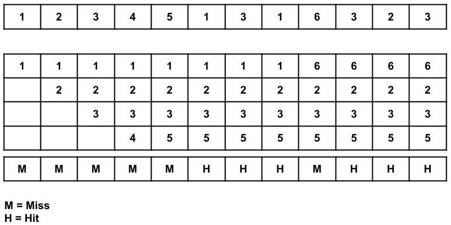

Q14) What is FIFO?

A14) In this algorithm, a queue is maintained. The page which is assigned the frame first will be replaced first. In other words, the page which resides at the rare end of the queue will be replaced on every page fault.

Example of FIFO

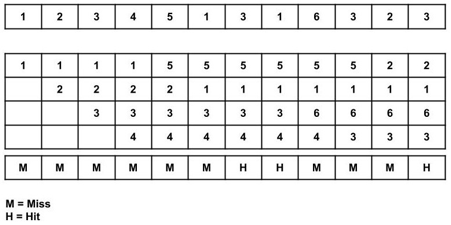

Consider the following size 12 page reference string: 1, 2, 3, 4, 5, 1, 3, 1, 6, 3, 2, 3 with frame size 4 (i.e., maximum 4 pages in a frame).

Total page fault = 9

All four spaces are initially empty, therefore when 1, 2, 3, and 4 arrive, they are assigned to the empty spots in the order of their arrival. This is a page fault because the numbers 1, 2, 3, and 4 are not in memory.

When page 5 arrives, it is not available in memory, so a page fault occurs, and the oldest page in memory, 1, is replaced.

Because 1 is not in memory when it arrives, a page fault occurs, and it replaces the oldest page in memory, i.e., 2.

When 3,1 arrives, it is already in the memory, i.e., Page Hit, therefore there is no need to update it.

When page 6 arrives, it is not in memory, therefore a page fault occurs, and the oldest page in memory, 3, is replaced.

When page 3 arrives, it is not in memory, therefore a page fault occurs, and the oldest page in memory, 4, is replaced.

When page 2 arrives, it is not in memory, therefore a page fault occurs, and the oldest page in memory, 5, is replaced.

When 3 arrives, it is already in the memory, i.e., Page Hit, therefore there is no need to update it.

Page Fault ratio = 9/12 i.e., total miss/total possible cases