Unit – 1

Introduction

Q1) Define WSN and describe its background?

A1) Definitions and Background

Sensing and Sensors

Sensing is a technique for gathering data about a physical object or process, including the occurrence of events (i.e., changes in state such as a drop in temperature or pressure). A sensor is an object that performs such a sensing task.

The human body, for example, is equipped with sensors that capture optical information from the surroundings (eyes), acoustic information such as sounds (ears), and olfactory information (nose). These are examples of remote sensors, which collect data without having to touch the monitored object.

From a technical perspective, a sensor is a device that converts physical parameters or events into signals that can be measured and analyzed. Another frequently used term is transducer, which refers to a device that converts energy from one form into another.

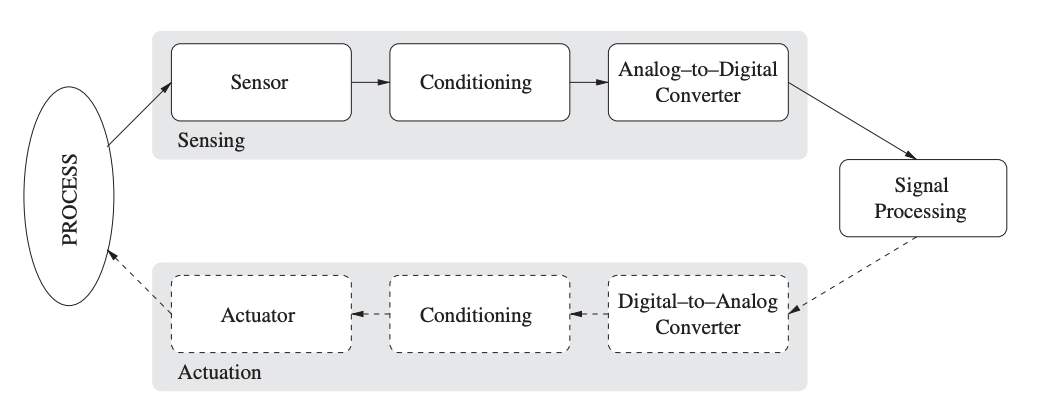

A sensor, then, is a type of transducer that converts physical energy into electrical energy that can be passed to a computer or controller. Figure 1 shows the stages involved in a sensing (or data acquisition) operation. A sensor device observes phenomena in the physical world (commonly referred to as a process, system, or plant).

The resulting electrical signals are often not ready for immediate processing, so they pass through a signal conditioning stage, where a variety of operations (e.g., amplification and filtering) can be applied to prepare the sensor signal for further use.

Fig 1: Data acquisition and actuation
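The signal conditioning stage can be illustrated with a short Python sketch. It assumes the raw signal has already been sampled into a list of voltages and applies three common conditioning operations: removing a DC offset, amplifying, and smoothing with a moving-average filter. The function name `condition` and its parameters are hypothetical, and in real nodes much of this work is done in analog circuitry before digitization.

```python
def condition(raw, offset, gain, window):
    """Prepare raw sensor samples for further use.

    raw    -- list of sampled sensor voltages
    offset -- DC offset (V) subtracted from every sample
    gain   -- amplification factor applied after offset removal
    window -- number of recent samples averaged by the smoothing filter
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    # Offset removal and amplification.
    scaled = [(x - offset) * gain for x in raw]
    # Moving-average filter: each output averages up to `window` recent samples.
    smoothed = []
    for i in range(len(scaled)):
        recent = scaled[max(0, i - window + 1): i + 1]
        smoothed.append(sum(recent) / len(recent))
    return smoothed


print(condition([0.5, 0.75, 0.5, 0.75], offset=0.5, gain=4.0, window=2))
# [0.0, 0.5, 0.5, 0.5]
```

A wider window suppresses more noise but also delays and blurs fast changes in the measured quantity.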

Wireless sensor network

While many sensors connect to controllers and processing stations directly (e.g., using local area networks), an increasing number of sensors communicate the collected data wirelessly to a centralized processing station. This is important since many network applications require hundreds or thousands of sensor nodes, often deployed in remote and inaccessible areas. Therefore, a wireless sensor has not only a sensing component, but also on-board processing, communication, and storage capabilities.

With these enhancements, a sensor node is often not only responsible for data collection, but also for in-network analysis, correlation, and fusion of its own sensor data and data from other sensor nodes. When many sensors cooperatively monitor large physical environments, they form a wireless sensor network (WSN).
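In-network fusion can be as simple as merging partial aggregates. The Python sketch below assumes that each node receives compact summaries (count, sum, minimum, maximum) from neighboring nodes and merges them with its own reading before forwarding a single summary toward the base station. The class and function names are hypothetical, and real WSN aggregation protocols also deal with routing, timing, and message loss.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Summary:
    """Compact aggregate a node can forward instead of raw readings."""
    count: int
    total: float
    minimum: float
    maximum: float

    @property
    def mean(self) -> float:
        return self.total / self.count


def from_reading(value: float) -> Summary:
    return Summary(1, value, value, value)


def merge(a: Summary, b: Summary) -> Summary:
    return Summary(a.count + b.count, a.total + b.total,
                   min(a.minimum, b.minimum), max(a.maximum, b.maximum))


def aggregate(own_reading: float, received: list[Summary]) -> Summary:
    """Fuse this node's reading with summaries received from neighbors."""
    result = from_reading(own_reading)
    for summary in received:
        result = merge(result, summary)
    return result


# A node reading 21.0 fuses the summaries of two neighbors reading 20.0 and 22.0.
fused = aggregate(21.0, [from_reading(20.0), from_reading(22.0)])
print(fused.count, fused.mean, fused.minimum, fused.maximum)  # 3 21.0 20.0 22.0
```

Because the summary has a fixed size, the message a node forwards does not grow with the number of readings it represents.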

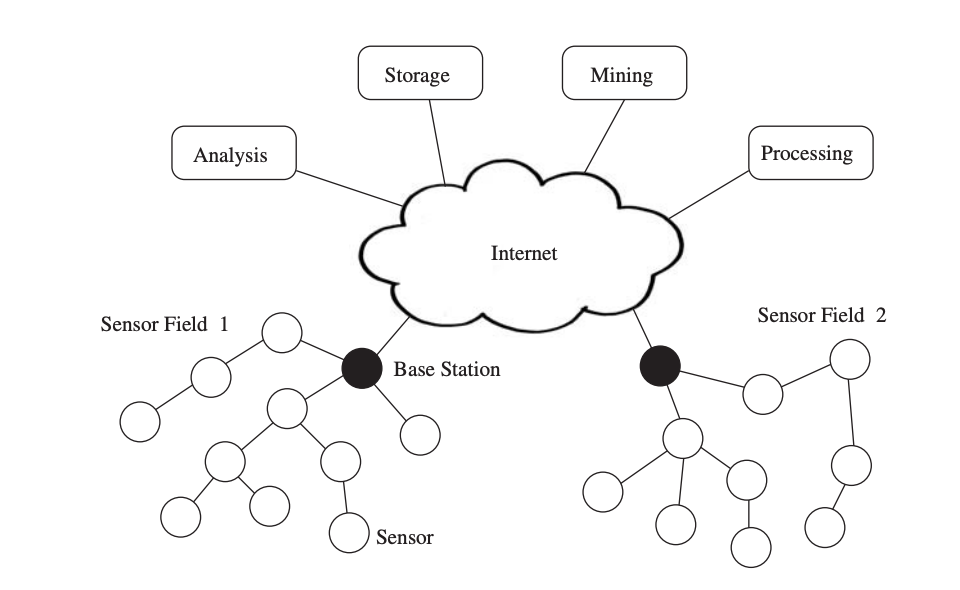

Sensor nodes communicate not only with each other but also with a base station (BS) using their wireless radios, allowing them to disseminate their sensor data to remote processing, visualization, analysis, and storage systems. For example, Figure 2 shows two sensor fields monitoring two different geographic regions and connecting to the Internet through their base stations.

Fig 2: wireless sensor network

Sensor nodes in a WSN can vary widely in their capabilities; for example, simple sensor nodes may monitor a single physical phenomenon, whereas more complex devices may combine many sensing techniques (e.g., acoustic, optical, magnetic). They can also differ in their communication capabilities, for example, using ultrasonic, infrared, or radio frequency technologies with different data rates and latencies.

While simple sensors may just gather and convey data about the environment, more powerful devices (i.e., systems with substantial processing, energy, and storage capacities) may additionally conduct complex processing and aggregation operations.

Q2) Explain Challenges and Constraints?

A2) Challenges and Constraints

While sensor networks share many similarities with other distributed systems, they are subject to a variety of unique challenges and constraints. These constraints impact the design of a WSN, leading to protocols and algorithms that differ from their counterparts in other distributed systems.

Energy

The constraint most often associated with sensor network design is that sensor nodes operate with limited energy budgets. Typically, they are powered through batteries, which must be either replaced or recharged (e.g., using solar power) when depleted.

For some nodes, neither option is appropriate; they will simply be discarded once their energy source is depleted. Whether the battery can be recharged significantly affects the strategy applied to energy consumption. For non-rechargeable batteries, a sensor node should be able to operate until either its mission time has passed or the battery can be replaced.

The length of the mission time depends on the type of application; for example, scientists monitoring glacial movements may need sensors that can operate for several years, while a sensor in a battlefield scenario may only be needed for a few hours or days.

As a result, energy efficiency is generally the first and most critical design challenge for a WSN. Every aspect of sensor node and network architecture is influenced by this requirement. The choices made at the physical layer of a sensor node, for example, have an impact on the device's overall energy usage and the design of higher-level protocols.
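The link between energy budget and mission time can be estimated with simple arithmetic. The Python sketch below assumes an ideal battery whose full capacity is usable and a node that alternates between an active and a sleep state (duty cycling, a technique not discussed in the text above). The capacity and current values are hypothetical figures chosen only for illustration.

```python
def lifetime_hours(capacity_mah: float, active_ma: float,
                   sleep_ma: float, duty_cycle: float) -> float:
    """Estimate the lifetime of an ideal battery from the average current.

    duty_cycle is the fraction of time the node is active (0.0 to 1.0);
    for the rest of the time it draws sleep_ma.
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    average_ma = duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma
    return capacity_mah / average_ma


def meets_mission(lifetime_h: float, mission_h: float) -> bool:
    return lifetime_h >= mission_h


always_on = lifetime_hours(2000, active_ma=20, sleep_ma=0.02, duty_cycle=1.0)
duty_cycled = lifetime_hours(2000, active_ma=20, sleep_ma=0.02, duty_cycle=0.01)
print(always_on)                                 # 100.0 (hours)
print(round(duty_cycled / 24))                   # 379 (days)
print(meets_mission(duty_cycled, 3 * 365 * 24))  # False: too short for a 3-year mission
```

Real batteries lose capacity to self-discharge, temperature, and high peak currents, so estimates of this kind are optimistic.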

Switching and leakage energy account for the majority of the energy consumed by CMOS-based CPUs.

$$E_{CPU} = E_{switch} + E_{leakage} = C_{total}V_{dd}^{2} + V_{dd}I_{leak}\Delta t$$

where $C_{total}$ represents the total capacitance switched by the computation, $V_{dd}$ the supply voltage, $I_{leak}$ the leakage current, and $\Delta t$ the computation time.

While switching energy currently accounts for the majority of processor energy consumption, leakage energy is projected to account for more than half of it in the future (De and Borkar 1999). Two methods for reducing leakage energy are progressive shutdown of idle components and software-based techniques such as Dynamic Voltage Scaling (DVS).
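The CPU energy model (switching energy plus leakage energy) can be evaluated directly. This Python sketch computes both terms for given values of $C_{total}$, $V_{dd}$, $I_{leak}$, and $\Delta t$; the numbers used are arbitrary illustrations, not measurements of a real processor. It also shows why voltage scaling is attractive: switching energy grows with the square of the supply voltage.

```python
def cpu_energy(c_total: float, v_dd: float, i_leak: float, delta_t: float):
    """Evaluate E_CPU = E_switch + E_leakage.

    E_switch  = C_total * V_dd**2
    E_leakage = V_dd * I_leak * delta_t
    Returns (E_switch, E_leakage, E_CPU); joules if the inputs are in SI units.
    """
    e_switch = c_total * v_dd ** 2
    e_leakage = v_dd * i_leak * delta_t
    return e_switch, e_leakage, e_switch + e_leakage


# Arbitrary illustrative values.
print(cpu_energy(c_total=0.5, v_dd=2.0, i_leak=0.25, delta_t=2.0))  # (2.0, 1.0, 3.0)
# Halving V_dd (with delta_t held fixed) cuts switching energy to a quarter.
print(cpu_energy(c_total=0.5, v_dd=1.0, i_leak=0.25, delta_t=2.0))  # (0.5, 0.5, 1.0)
```

The sketch holds $\Delta t$ fixed for simplicity. In practice, lowering $V_{dd}$ also requires lowering the clock frequency, so the computation takes longer and the leakage term grows with $\Delta t$; DVS has to balance these two effects.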

Self-Management

Many sensor network applications are designed to function in remote locations and severe settings, with no infrastructure support or maintenance and repair options. Sensor nodes must therefore be self-managing, in the sense that they must configure themselves, operate and collaborate with other nodes, and adjust to failures, changes in the environment, and changes in the ambient stimuli without the need for human intervention.

● Ad Hoc Deployment

Many wireless sensor network applications do not require the positions of individual sensor nodes to be predetermined and engineered. This is especially important for networks deployed in inaccessible or remote locations.

● Unattended Operation

Many sensor networks must operate without human interaction once deployed, which means that configuration, adaptation, maintenance, and repair must all be done autonomously.

Wireless Networking

The dependence on wireless networks and communications poses a variety of challenges for a sensor network designer. For example, attenuation limits the range of radio signals: as a radio frequency (RF) signal propagates through a medium and passes through obstacles, it fades (i.e., loses power). The inverse-square law can be used to express the relationship between the received power $P_r$ and the transmitted power $P_t$ of an RF signal:

$$P_r \propto \frac{P_t}{d^2}$$

According to this formula, the received power $P_r$ is proportional to the inverse of the square of the distance $d$ from the source. In other words, if $P_r^x$ is the received power at distance $x$, doubling the distance to $y = 2x$ reduces the received power to $P_r^y = P_r^x / 4$.
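The inverse-square relationship can be checked numerically. The Python sketch below scales a received power measured at a reference distance to another distance. It assumes idealized free-space propagation and ignores obstacles, reflections, and antenna effects, which in real deployments often make signals fade faster than the inverse-square law predicts; the function name is hypothetical.

```python
def received_power(p_ref: float, d_ref: float, d: float) -> float:
    """Scale power measured at distance d_ref to distance d using P_r ∝ 1/d**2.

    Assumes free-space-like propagation; obstacles and reflections are ignored.
    """
    if d_ref <= 0 or d <= 0:
        raise ValueError("distances must be positive")
    return p_ref * (d_ref / d) ** 2


p_x = 1.0  # received power at distance x = 10 m (any unit, e.g. mW)
print(received_power(p_x, 10.0, 20.0))  # 0.25: doubling the distance quarters the power
```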

Decentralized Management

Many wireless sensor networks are too large and too energy-constrained to rely on centralized algorithms (e.g., at the base station) for network management tasks such as topology management or routing. Instead, sensor nodes must cooperate with their neighbors to make localized decisions, i.e., without access to global information.
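A localized decision can be illustrated with neighbor averaging, a simple consensus scheme chosen here as an example (it is not a method named in the text). In the Python sketch below, each node repeatedly replaces its value with the average of its own value and those of its direct neighbors, so no node ever needs global information. The topology, readings, and function names are hypothetical.

```python
def averaging_round(values: dict[int, float],
                    neighbors: dict[int, list[int]]) -> dict[int, float]:
    """One synchronous round: each node uses only its neighbors' values."""
    updated = {}
    for node, value in values.items():
        group = [value] + [values[n] for n in neighbors[node]]
        updated[node] = sum(group) / len(group)
    return updated


def run_consensus(values: dict[int, float],
                  neighbors: dict[int, list[int]], rounds: int) -> dict[int, float]:
    for _ in range(rounds):
        values = averaging_round(values, neighbors)
    return values


# Four nodes in a ring; only node 3 starts with a non-zero reading.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
readings = {0: 0.0, 1: 0.0, 2: 0.0, 3: 8.0}
final = run_consensus(readings, ring, rounds=30)
print({node: round(v, 6) for node, v in final.items()})  # every node ≈ 2.0
```

Because every node in this ring has the same number of neighbors, the values converge to the global mean (2.0). On irregular topologies, plain neighbor averaging converges to a degree-weighted average instead, so practical schemes often adjust the weights.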

Design Constraints

While traditional computing systems' capabilities are continually increasing, the fundamental goal of wireless sensor design is to build smaller, less expensive, and more efficient devices.

Driven by the need to run specialized applications with minimal energy consumption, sensor nodes have processing speeds and storage capacities comparable to those of computer systems from decades ago. The need for a small form factor and low energy consumption also rules out many desirable components, such as GPS receivers.

Security

Many wireless sensor networks collect sensitive data. Sensor nodes that are deployed remotely and left unattended are more vulnerable to malicious intrusions and attacks. Furthermore, wireless communications make it easy for an attacker to eavesdrop on sensor transmissions.

Other Challenges

As the preceding discussion shows, many design decisions in a WSN differ from those in traditional systems and networks. A number of other issues can also complicate the design of sensor nodes and wireless sensor networks.

Some sensors, for example, may be mounted on moving objects like vehicles or robots, resulting in constantly changing network topologies that necessitate frequent system adaptations at multiple layers, including routing (e.g., changing neighbor lists), medium access control (e.g., changing density), and data aggregation (e.g., changing overlapping sensing regions).

Q3) Write about Structural Health Monitoring?

A3) Structural Health Monitoring

A highway bridge in Minnesota abruptly collapsed into the fast-flowing Mississippi River on August 2, 2007, killing nine people. Investigators from the National Transportation Safety Board were unable to pinpoint the cause of the tragedy, but they narrowed it down to three possibilities: wear and tear, weather, and the weight of a nearby construction project that was underway at the time. When the tragedy occurred, the construction project had closed half of the bridge's eight lanes.

Another bridge collapsed two weeks later, on August 14, 2007, at a major Chinese tourist attraction in Fenghuang county, Hunan province, killing 86 people on the spot. China had identified over 6000 bridges that were damaged or considered dangerous, according to the BBC (14 August 2007).

In the aftermath of these catastrophes, several news outlets, including The Associated Press (3 August 2007) and Time magazine (10 August 2007), published articles advocating the use of wireless sensor networks to monitor bridges and similar structures.

Bridges have traditionally been inspected in stages and at various levels (Koh and Dyke 2007):

The first phase is a time-consuming, tedious, inconsistent, and subjective inspection technique (Koh and Dyke 2007), whereas the others require sophisticated tools that are typically costly, heavy, and power-hungry. As a result, developing automated, efficient, and cost-effective structural health monitoring tools is an active research topic.

Tool-based inspection approaches can be divided into two categories: local and global inspections (Chintalapudi et al. 2006). Local methods detect highly localized, barely visible cracks in a structure. These approaches use ultrasonic, thermal, X-ray, magnetic, or optical imaging techniques, but they are time-consuming and interrupt the structure's normal operation.

Global inspection methods, on the other hand, aim to detect damage or defects large enough to affect the entire structure. A common method is to detect changes in the movements of abutments, balustrades and barriers, bridge bearings, decks, towers, expansion joints, railings, and other structural elements in response to forced or ambient excitations.

These movements are characterized by the structure's modal parameters (e.g., natural frequencies, damping ratios, and mode shapes). Modal parameters are influenced by the amplitude and duration of the excitation, the material from which the structure is made, its size, technical constraints in its construction, its age, and other surrounding conditions.
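Global methods often track a structure's natural frequency, since a loss of stiffness (for example, from damage) lowers it. The Python sketch below estimates the dominant vibration frequency from accelerometer samples and reports the shift relative to a baseline. It is an illustration under stated assumptions: synthetic sine-wave data, a naive discrete Fourier transform for readability (a real system would use an FFT), and hypothetical function names. Because excitation and surrounding conditions also shift modal parameters, a frequency change alone does not prove damage.

```python
import math


def dominant_frequency(samples: list[float], sample_rate_hz: float) -> float:
    """Frequency (Hz) of the strongest non-DC component, via a naive DFT."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the DC offset
    best_bin, best_magnitude = 1, -1.0
    for k in range(1, n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        magnitude = math.hypot(re, im)
        if magnitude > best_magnitude:
            best_bin, best_magnitude = k, magnitude
    return best_bin * sample_rate_hz / n


def frequency_shift_percent(baseline_hz: float, current_hz: float) -> float:
    return 100.0 * (current_hz - baseline_hz) / baseline_hz


rate, n = 100.0, 200  # hypothetical accelerometer: 2 s of data at 100 Hz
baseline = [math.sin(2 * math.pi * 5.0 * i / rate) for i in range(n)]
current = [math.sin(2 * math.pi * 4.5 * i / rate) for i in range(n)]
f0 = dominant_frequency(baseline, rate)
f1 = dominant_frequency(current, rate)
print(f0, f1, frequency_shift_percent(f0, f1))  # 5.0 4.5 -10.0
```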

Researchers have recently been working on constructing and testing wireless sensor networks as part of a global inspection system. They are ideal for the work because of three factors:

Q4) Explain Health Care?

A4) Health Care

Wireless sensor networks have been proposed for a variety of health care applications, including monitoring patients with Parkinson's disease, epilepsy, heart conditions, stroke or heart attack patients, and the elderly. Unlike the other categories of applications covered so far, health-care applications do not function as stand-alone systems. Rather, they are integral components of a large and complex health and rescue system.

The US Centers for Medicare and Medicaid Services (CMS) projected the country's national health spending in 2008 to be $2.4 trillion. The combined cost of heart disease and stroke was $394 billion. According to the same analysis, health spending in the United States and many other Western countries is expected to rise, a concern shared by policymakers, health care providers, hospitals, insurance companies, and patients.

While many people advocate preventive health care as a way to lower health-care costs and mortality rates, research shows that some individuals find certain procedures uncomfortable, difficult, and inconvenient in their daily lives (Morris 2007).

Many people, for example, skip checkups or therapy appointments due to scheduling conflicts with their established living and working patterns, fear of overexertion, or transportation costs.

To address these issues, research efforts aim at a solution that includes the following steps:

● constructing widespread systems that provide patients with a wealth of knowledge about diseases and how to avoid them;

● seamlessly integrating health infrastructures with emergency and rescue operations, as well as transportation systems;

● designing reliable and unobtrusive health-monitoring systems that patients can wear to decrease medical personnel's obligations and presence;

● notifying nurses and doctors when medical assistance is required; and

● creating reliable links between autonomous health-monitoring systems and health institutions to reduce awkward and costly checkup visits.

Available Sensors

The scientific community has been busy developing a variety of wearable and wireless systems that measure heart rate, oxygen level, blood flow, respiratory rate, muscle activity, movement patterns, body inclination, and oxygen consumption (VO2) in real time. A brief overview of some commercially available wireless sensor nodes for health monitoring is provided below:

● pulse oxygen saturation sensors: they measure the percentage of hemoglobin (Hb) saturated with oxygen (SpO2) and heart rate (HR);

● blood pressure sensors;

● electrocardiogram (ECG);

● electromyogram (EMG) for measuring muscle activities;

● temperature sensors – both for core body temperature and skin temperature;

● respiration sensors;

● blood flow sensors;

● blood oxygen level sensor (oximeter) for measuring cardiovascular exertion (distress).
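The step of notifying nurses and doctors when medical assistance is required can be sketched as a threshold check on readings from sensors such as the pulse oximeter. The Python example below is a minimal sketch with hypothetical names; the threshold values are illustrative assumptions, not clinical guidance, and a real system would also filter out motion artifacts and sensor dropouts before raising an alarm.

```python
from dataclasses import dataclass

# Illustrative thresholds only; real limits are set per patient by clinicians.
SPO2_MIN_PERCENT = 90.0
HEART_RATE_MIN_BPM = 40.0
HEART_RATE_MAX_BPM = 130.0


@dataclass
class VitalSigns:
    spo2_percent: float    # e.g. from a pulse oximeter
    heart_rate_bpm: float  # e.g. from a pulse oximeter or ECG


def alert_reasons(v: VitalSigns) -> list[str]:
    """Return the reasons (if any) to notify nurses or doctors."""
    reasons = []
    if v.spo2_percent < SPO2_MIN_PERCENT:
        reasons.append("low SpO2")
    if v.heart_rate_bpm < HEART_RATE_MIN_BPM:
        reasons.append("low heart rate")
    elif v.heart_rate_bpm > HEART_RATE_MAX_BPM:
        reasons.append("high heart rate")
    return reasons


print(alert_reasons(VitalSigns(spo2_percent=97, heart_rate_bpm=72)))   # []
print(alert_reasons(VitalSigns(spo2_percent=86, heart_rate_bpm=140)))  # ['low SpO2', 'high heart rate']
```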

Prototypes

● Artificial Retina

Schwiebert et al. (2001) developed a microsensor array that can be implanted in the eye as an artificial retina to assist people with visual impairments. The system consists of an integrated circuit and an array of sensors. The integrated circuit is a multiplexer with on-chip switches and pads to support a 10 × 10 grid of connections; it operates at 40 kHz. Moreover, it has an embedded transceiver for wired and wireless communications.

Each connection in the chip interfaces a sensor through an aluminum probe surface. Before the bonding is done, the entire integrated circuit, except the probe areas, is coated with a biologically inert substance.

● Parkinson’s Disease

Lorincz et al. (2009) and Weaver (2003) propose the use of WSNs to monitor patients with Parkinson’s Disease (PD). The aim is to augment or entirely replace a human observer and to help the physician to fine-tune the medication dosage.

Parkinson’s Disease is a degenerative disorder of the central nervous system. It results from the degeneration of neurons in a region of the brain that controls movement (the substantia nigra), creating a deficiency of the neurotransmitter dopamine. Dopamine deficiency causes severe impairment of motor skills and speech, manifesting itself in tremor of the hands, arms, legs, and jaw; unsteady gait and slowness of movement; and lack of balance and coordination.

Q5) Explain Pipeline Monitoring?

A5) Pipeline Monitoring

Another area of application for wireless sensor networks is the monitoring of gas, water, and oil pipelines. The management of pipelines presents a formidable challenge. Their long length, high value, high risk, and often difficult access conditions require continuous and unobtrusive monitoring. Leakages can occur due to excessive deformations caused by earthquakes, landslides, or collisions with an external force, as well as due to corrosion, wear, material flaws, or even intentional damage to the structure.

Prototype

The PipeNet prototype was developed as a collaborative project between Imperial College London, Intel Research, and MIT to monitor water pipelines in urban areas.

Its main tasks are to monitor (1) hydraulic parameters and water quality, by measuring pressure and pH, and (2) the water level in combined sewer systems (sewer collectors and combined sewer outflows). Sewerage systems convey domestic sewage, rainwater runoff, and industrial wastewater to sewage treatment plants.

Historically, these systems were designed to discharge their content into nearby streams and rivers in the event of overflow, such as during periods of heavy rainfall. Consequently, combined sewer overflows are among the major sources of water quality impairment.

PipeNet is deployed in three different settings. In the first setting, pressure and pH sensors are installed on a 12 in. cast-iron pipe which supplies drinking water. Pressure data is collected every 5 min for a period of 5 s at a rate of 100 Hz. The wireless sensor node can locally compute minimum, maximum, average, and standard deviation values and communicate the results to a remote gateway. Likewise, pH data is collected every 5 min for a period of 10 s at a rate of 100 Hz. The sensor nodes use a Bluetooth transceiver for wireless communication.

In the second setting, a pressure sensor measures the pressure in an 8 in. cast-iron pipe. The data is collected every 5 min for a period of 5 s at a sampling rate of 300 Hz. In this setting, local processing was not supported; instead, the raw data was transmitted to a remote gateway.
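The difference between the first two settings can be made concrete. The Python sketch below computes the burst sizes implied by the stated sampling parameters and shows the kind of local summary the node in the first setting sends instead of raw samples. The function names and the choice of population standard deviation are assumptions, since the text does not specify how PipeNet computes these values.

```python
import statistics


def burst_size(duration_s: float, rate_hz: float) -> int:
    """Number of samples in one measurement burst."""
    return round(duration_s * rate_hz)


def summarize_burst(samples: list[float]) -> dict[str, float]:
    """Reduce one burst of pressure samples to four statistics on the node."""
    return {
        "min": min(samples),
        "max": max(samples),
        "mean": statistics.fmean(samples),
        "stdev": statistics.pstdev(samples),  # population standard deviation
    }


# Setting 1: 5 s at 100 Hz, summarized locally into 4 values per burst.
# Setting 2: 5 s at 300 Hz, every raw sample sent to the gateway.
print(burst_size(5, 100), burst_size(5, 300))  # 500 1500
print(summarize_burst([1.0, 2.0, 3.0, 4.0]))
```

Under these parameters, the first node sends 4 values in place of 500 samples per burst, while the second sends all 1,500 samples, which illustrates how local processing can reduce the amount of data a node has to transmit.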

Finally, in the third setting, the water level of a combined sewer outflow collector is monitored. Two pressure transducers were placed at the bottom of the collector and an ultrasonic sensor at the top.

Q6) What is Precision Agriculture?

A6) Precision Agriculture

Precision agriculture is another interesting area that has attracted a large number of wireless sensor network researchers. Traditionally, a large farm is treated as a homogeneous field in terms of resource distribution and its response to climate change, weeds, and pests, and farmers apply fertilizers, pesticides, herbicides, and water accordingly. In reality, a large field exhibits wide spatial diversity in soil types, nutrient content, and other important factors. Therefore, treating it as a uniform field can lead to inefficient use of resources and loss of productivity.

Precision agriculture is a farm management technique that allows farmers to produce more efficiently while conserving resources. It includes micro-monitoring of soil, crop, and climate conditions in a field, as well as providing a decision support system (DSS). Precision agriculture uses management tools such as Geographic Information Systems (GIS), GPS, radar, and aerial imagery to precisely diagnose a field and apply critical farming resources.

Over the last few years, a number of technologies have been developed to support and automate precision agriculture. Here are a few examples:

● Yield monitors: These devices measure instantaneous yield as a function of time and distance using mass flow sensors, moisture sensors, and a GPS receiver, among other components. The sensors can measure separator speed, ground speed, grain moisture, and header height, as well as the mass or volume of grain flow (grain flow sensors).

● Yield mapping: GPS receivers are linked to yield monitors to provide spatial coordinates for the data.

● Variable rate fertilizer: Manages the application of fertilizer materials, both liquid and gaseous.

● Weed mapping: While combining, planting, spraying, or field scouting, a farmer might use this tool to map weeds.

● Variable spraying: Spot control can be conducted with the knowledge about weed locations gleaned by weed mapping. Booms can now be turned on and off electronically, and the amount (and blend) of herbicide applied can be changed.

● Topography and boundaries: Allow the creation of highly accurate topographic maps that can be used to interpret yield maps and weed maps and to plan grassed waterways and field divisions, among other things. Field boundaries, roads, yards, tree stands, and wetlands can all be accurately plotted to improve agricultural planning.

● Salinity mapping: This is used to map fields where salinity has an impact. Salinity mapping is useful for deciphering yield maps and weed maps, as well as tracking salinity changes over time.

● Guidance systems: These are devices that can accurately position a moving vehicle within a 12 in. radius (or less). They are useful for spraying and seeding as well as field scouting.
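Yield monitors and yield mapping combine a few sensor readings into a georeferenced value. The Python sketch below shows the basic arithmetic under simplifying assumptions: yield is computed as grain mass flow divided by the area harvested per second (ground speed times header width), moisture correction and grain-flow delay are ignored, and the names and coordinates are hypothetical.

```python
from dataclasses import dataclass

M2_PER_HECTARE = 10_000.0


@dataclass
class YieldPoint:
    latitude: float
    longitude: float
    yield_kg_per_ha: float


def instantaneous_yield(mass_flow_kg_s: float, ground_speed_m_s: float,
                        header_width_m: float) -> float:
    """Yield (kg/ha) = grain mass flow / area harvested per second."""
    area_rate_m2_s = ground_speed_m_s * header_width_m
    if area_rate_m2_s <= 0:
        raise ValueError("combine must be moving with a positive header width")
    return mass_flow_kg_s * M2_PER_HECTARE / area_rate_m2_s


def yield_map_point(lat: float, lon: float, mass_flow_kg_s: float,
                    ground_speed_m_s: float, header_width_m: float) -> YieldPoint:
    """Yield mapping: attach the GPS position to the yield monitor reading."""
    return YieldPoint(lat, lon, instantaneous_yield(
        mass_flow_kg_s, ground_speed_m_s, header_width_m))


print(yield_map_point(52.0, 4.0, mass_flow_kg_s=2.0,
                      ground_speed_m_s=2.0, header_width_m=5.0))
# YieldPoint(latitude=52.0, longitude=4.0, yield_kg_per_ha=2000.0)
```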

The main challenge in applying precision agriculture technologies is the need to collect, over several days, an amount of data large enough to characterize the entire field. In this regard, wireless sensor networks, as a large-scale sensing technology, can be excellent tools.

Q7) Write about Underground Mining?

A7) Underground Mining

Finally, another application domain for which wireless sensor networks have been proposed is underground mining.

Underground mining is one of the most dangerous working environments in the world. Perhaps the incident of 3 August 2007 at the Crandall Canyon mine, Utah, USA, is a good example of the danger associated with underground mining. It also highlights some of the contributions of wireless sensor networks to facilitate safe working conditions and rescue operations.

In this fateful incident, six miners were trapped inside the coal mine. Though their precise location was not known, experts estimated that the men were trapped 457 m below ground, 5.5 km away from the mine entrance.

There were different opinions about the exact cause of the accident. The owners of the mine claimed that a natural earthquake was the cause. Seismologists at the University of Utah observed that a seismic event of magnitude 3.9 had been recorded in the area of the mine on the same day, leading scientists to suspect that mine operations were the cause of the seismic spikes.

Following the accident, a costly and arduous rescue attempt was undertaken. It included drilling 6.4 cm and 26 cm holes into the mine cavity, through which an omnidirectional microphone and a video camera were lowered and an air sample was taken.

The air sample indicated the presence of sufficient oxygen (20 percent), a small concentration of carbon dioxide, and no trace of methane. The microphone detected no sound, and the video camera revealed some equipment but not the six missing miners.

This evidence raised mixed expectations. If the miners were alive, the amount of oxygen was sufficient to sustain life for several more days. Moreover, the absence of methane suggested that drilling the holes posed no immediate danger of explosion. However, the low concentration of carbon dioxide and the evidence from the camera and the microphone undermined the expectation of finding the missing miners alive. More than six labor-intensive days were required to collect this evidence.

Q8) Write about node architecture?

A8) Node Architecture

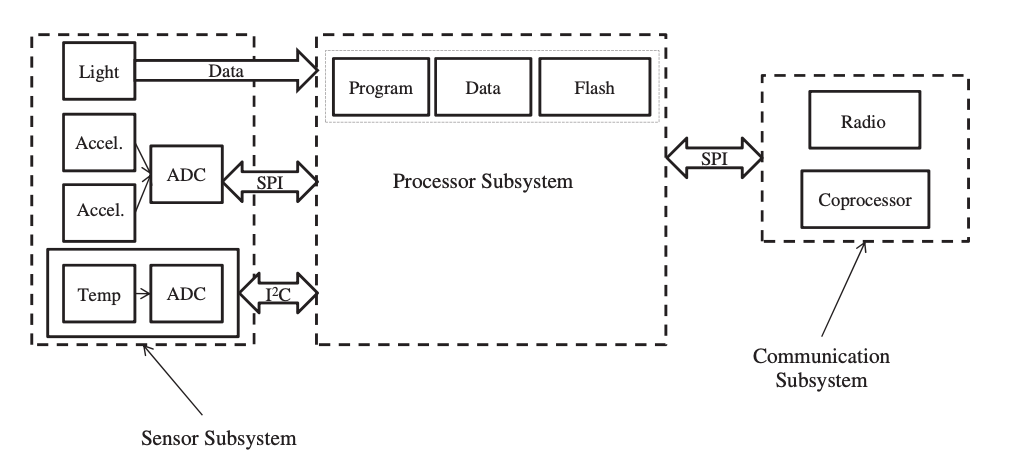

Wireless sensor nodes are the most important component of a wireless sensor network (WSN). Sensing, processing, and communication all take place in a node, which stores and executes the communication protocols and data-processing algorithms. The physical resources available to the node determine the quality, size, and frequency of the sensed data that can be extracted from the network. As a result, the design and implementation of a wireless sensor node is an important step.

A node consists of sensing, processing, communication, and power subsystems. The designer has many options for assembling and connecting these subsystems into a single, programmable node. The processor subsystem is the node's central component, and the choice of processor determines the tradeoff between flexibility and efficiency, in terms of both energy and performance. Available options include microcontrollers, digital signal processors, application-specific integrated circuits, and field programmable gate arrays.

The sensing subsystem can be connected to the processor in a variety of ways. One method is to connect two or more analog sensors through a multichannel ADC, which integrates several high-speed ADCs in a single IC. Such ADCs are known to suffer from crosstalk and uncorrelated noise, which lower the signal-to-noise ratio (SNR) of the individual channels. Furthermore, the coupled signals can produce spurs similar to harmonic terms, which reduce the spurious-free dynamic range (SFDR) and worsen the total harmonic distortion (THD). However, the effect is negligible for low-frequency signals.

Some sensors have a built-in ADC and can communicate directly with the processor using a standard chip-to-chip protocol. To interface analog devices, most microcontrollers include one or more built-in ADCs.

Fig 4: Architecture of a wireless sensor network

Q9) Explain Sensing Subsystem?

A9) Sensing Subsystem

The sensing subsystem combines one or more physical sensors with one or more analog-to-digital converters and the multiplexing mechanism that allows them to be shared. The sensors serve as a link between the virtual and physical worlds.

Detecting physical phenomena is not a new concept. In 132 AD, the Chinese astronomer Zhang Heng built the Houfeng Didong Yi, a seismoscope, to estimate the magnitude of seasonal winds and the Earth's movement. Magnetometers, likewise, have been in use for over 2000 years.

However, with the introduction of microelectromechanical systems (MEMS), sensing has become commonplace. A myriad of sensors available today measure and quantify physical properties at low cost. In a physical sensor, a transducer converts one form of energy into another, most commonly into electrical energy (a voltage). Its output is an analog signal whose magnitude varies continuously with time. An analog-to-digital converter is therefore needed to connect the sensing subsystem to a digital processor.

Analog-to-Digital Converter

An analog-to-digital converter (ADC) converts a sensor's continuous analog output into a digital signal. The conversion takes place in two steps: sampling (discretizing the signal in time) and quantization (discretizing its amplitude).
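The two steps of analog-to-digital conversion, sampling and quantization, can be sketched in Python. The example below assumes an ideal unipolar ADC with a hypothetical 3.3 V reference and 12-bit resolution: it samples a continuous signal at fixed intervals and then maps each sample to one of 2^bits integer codes by truncation. Real converters may round instead, and they add noise and nonlinearity that the sketch ignores; all names are hypothetical.

```python
import math


def sample(signal, rate_hz: float, duration_s: float) -> list[float]:
    """Step 1, sampling: evaluate the continuous signal at t = i / rate_hz."""
    count = round(rate_hz * duration_s)
    return [signal(i / rate_hz) for i in range(count)]


def quantize(voltage: float, v_ref: float, bits: int) -> int:
    """Step 2, quantization: map a voltage in [0, v_ref) to an integer code.

    Models an ideal unipolar ADC that truncates; out-of-range inputs are clamped.
    """
    levels = 2 ** bits
    code = math.floor(voltage / v_ref * levels)
    return max(0, min(levels - 1, code))


def sensor_voltage(t: float) -> float:
    """Hypothetical sensor output: 1 Hz sine centered at 1.65 V, 1 V amplitude."""
    return 1.65 + math.sin(2 * math.pi * 1.0 * t)


v_ref, bits = 3.3, 12
codes = [quantize(v, v_ref, bits) for v in sample(sensor_voltage, rate_hz=8, duration_s=1)]
print(codes)
print(v_ref / 2 ** bits)  # size of one quantization step (LSB) in volts
```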

Q10) Describe the Processor Subsystem?

A10) The Processor Subsystem

All of the other subsystems, as well as some additional peripherals, are brought together by the processor subsystem. Its primary function is to process (execute) sensing, communication, and self-organization instructions. It includes, among other things, a CPU chip, nonvolatile memory (typically internal flash memory) for storing program instructions, active memory for temporarily storing sensory data, and an internal clock.

While a variety of off-the-shelf processors are available for building a wireless sensor node, the choice must be made carefully because it affects the node's cost, flexibility, performance, and energy consumption. If the sensing task is well specified from the start and does not change over time, a designer can use a field programmable gate array or a digital signal processor. These processors are highly energy efficient and suitable for most simple sensing tasks. However, because they are not general-purpose processors, the design and implementation process can be time-consuming and expensive.

In many real-world situations, however, the sensing task may need to be changed or modified. Furthermore, the node's software may require periodic updates or remote debugging. Such tasks require a significant amount of computation and memory at runtime, so special-purpose, energy-efficient processors are not suitable in this scenario.

Microcontrollers are currently used in the majority of sensor nodes, and there are further reasons for this besides those given above. WSNs are a relatively new technology, and researchers are still developing energy-efficient communication protocols and signal-processing algorithms. Because this work requires dynamic code installation and updates, the microcontroller is the most suitable choice.

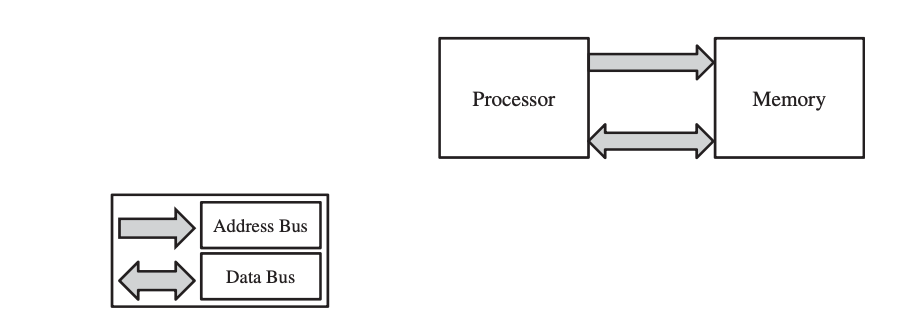

Architectural Overview

The efficient execution of algorithms, which necessitates the movement of data from and to memory, is a fundamental challenge with resource-constrained computers. This comprises the data to be processed or changed, as well as the program instructions. The data in WSNs, for example, comes from physical sensors, whereas the program instructions concern communication, self-organization, data compression, and aggregation methods.

The processor subsystem can be built using one of three main computer architectures: Von Neumann, Harvard, or Super Harvard (SHARC). In the Von Neumann architecture, program instructions and data are stored in a single memory space, and data moves between the processor and the memory over a single bus. Because instructions and data share this bus, each transfer requires its own clock cycle, which results in relatively slow processing. Figure 5 shows a simplified view of the Von Neumann architecture.

Fig 5: the Von Neumann architecture

The Harvard architecture differs from the Von Neumann architecture by providing separate memory spaces for program instructions and data. Each memory space connects to the processor through its own data bus, so instructions and data can be accessed simultaneously. The architecture also supports special single instruction, multiple data (SIMD) operations, special arithmetic operations, and bit-reverse addressing. It has no virtual memory or memory protection, yet it can readily run multitasking operating systems. Figure 6 shows a simplified view of the Harvard architecture.

Fig 6: simplified view of the Harvard architecture
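The effect of separate buses can be shown with a toy cycle-count model in Python. It assumes every memory access takes exactly one bus cycle and ignores pipelining, caches, and wait states, so it illustrates the principle rather than the timing of any real processor. The function names and the instruction mix are hypothetical.

```python
def von_neumann_cycles(data_accesses: list[int]) -> int:
    """Single shared bus: the instruction fetch and every data access
    of an instruction are serialized, one bus cycle each."""
    return sum(1 + d for d in data_accesses)


def harvard_cycles(data_accesses: list[int]) -> int:
    """Separate instruction and data buses: the fetch overlaps with the
    data accesses, so an instruction needs max(1, d) bus cycles."""
    return sum(max(1, d) for d in data_accesses)


# Data-memory accesses per instruction for a hypothetical 4-instruction sequence
# (e.g. load, store, register add, two-operand load).
program = [1, 1, 0, 2]
print(von_neumann_cycles(program), harvard_cycles(program))  # 8 5
```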

Q11) Define Communication Interfaces?

A11) Communication Interface

The way the subcomponents are interconnected with the processor subsystem is just as important as choosing the proper type of processor for the performance and energy consumption of a wireless sensor node.

The overall efficiency of the network that a wireless sensor node establishes depends on fast and energy-efficient data transfer between its subsystems.

However, the node's small physical size limits the choice of system buses. Parallel bus communication is faster than serial bus communication, but it requires more space: it needs a dedicated line for each bit transferred simultaneously, whereas a serial bus requires only a single data line. For this reason, parallel buses are rarely supported in node architectures.

As a result, serial interfaces such as the serial peripheral interface (SPI), general purpose input/output (GPIO), secure digital input/output (SDIO), inter-integrated circuit (I2C), and universal serial bus (USB) are commonly used. Of these, the SPI and I2C buses are the most widely used.

Serial Peripheral Interface

The Serial Peripheral Interface (SPI, pronounced "spy") is a high-speed, full-duplex, synchronous serial bus. It was developed at Motorola in the mid-1980s. There is no official standard, so manufacturers of SPI devices must follow other manufacturers' implementation specifications to ensure that devices communicate correctly. For example, devices must agree on whether the most significant bit (MSB) or the least significant bit (LSB) is transmitted first.
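The bit-order agreement matters because SPI is full duplex. On the same clock edges, the master shifts a byte out while the slave shifts one back. The Python sketch below simulates one 8-bit exchange. Line names (MOSI/MISO) follow common SPI usage, and the byte values are arbitrary examples.

```python
def shift_out(byte: int, msb_first: bool) -> list[int]:
    """Bits of `byte` in the order they appear on the data line."""
    positions = range(7, -1, -1) if msb_first else range(8)
    return [(byte >> p) & 1 for p in positions]

def shift_in(bits: list[int], msb_first: bool) -> int:
    """Rebuild a byte from received bits, assuming the given bit order."""
    positions = range(7, -1, -1) if msb_first else range(8)
    value = 0
    for bit, p in zip(bits, positions):
        value |= bit << p
    return value

def spi_exchange(master_byte: int, slave_byte: int,
                 master_msb_first: bool = True, slave_msb_first: bool = True) -> tuple[int, int]:
    """One full-duplex transfer: master drives MOSI while slave drives MISO.
    Returns (byte received by master, byte received by slave)."""
    mosi = shift_out(master_byte, master_msb_first)
    miso = shift_out(slave_byte, slave_msb_first)
    return shift_in(miso, master_msb_first), shift_in(mosi, slave_msb_first)

print([hex(b) for b in spi_exchange(0xA5, 0x3C)])               # ['0x3c', '0xa5']
print([hex(b) for b in spi_exchange(0x01, 0x00, True, False)])  # ['0x0', '0x80']
```

In the second call the two sides disagree on bit order, so the slave receives 0x01 bit-reversed as 0x80.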

Inter-Integrated Circuit

The inter-integrated circuit (I2C) bus is a multi-master, half-duplex, synchronous serial bus. It was developed by Philips Semiconductors, which also owns the official standard. I2C uses only two bidirectional lines, whereas SPI uses four. Its goal is to reduce the cost of connecting devices within a system by accepting lower communication speeds. I2C has two speed modes: Fast-mode (up to 400 kbps) and High-speed mode (Hs-mode, up to 3.4 Mbps). Earlier versions defined a 100 kbps Standard-mode.
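To show how I2C addresses a device over only two lines, the sketch below builds the first byte sent after a START condition: a 7-bit device address followed by a read/write bit. It then estimates the minimum transfer time at the speeds quoted above. The device address 0x48 is hypothetical. The timing model is also a simplification: 9 clock cycles per byte (8 data bits plus an acknowledge bit), with START/STOP overhead and clock stretching ignored.

```python
def i2c_address_byte(addr7: int, read: bool) -> int:
    """First byte after START: address in bits 7..1, R/W flag in bit 0 (1 = read)."""
    if not 0 <= addr7 < 128:
        raise ValueError("expected a 7-bit address")
    return (addr7 << 1) | (1 if read else 0)

def transfer_time_us(n_data_bytes: int, bitrate_bps: int) -> float:
    """Lower-bound duration of one transaction: address byte plus data bytes,
    9 SCL cycles each (8 bits + ACK)."""
    cycles = 9 * (1 + n_data_bytes)
    return cycles * 1_000_000 / bitrate_bps

sensor = 0x48                                   # hypothetical sensor address
print(hex(i2c_address_byte(sensor, read=True)))  # 0x91
print(transfer_time_us(2, 400_000))             # 67.5 (Fast-mode)
print(transfer_time_us(2, 100_000))             # 270.0 (Standard-mode)
```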

Q12) Explain Prototypes?

A12) Prototypes

This section presents some prototype node architectures. They were chosen to illustrate the variety of ways a node can be realized, not for their commercial success or energy efficiency.

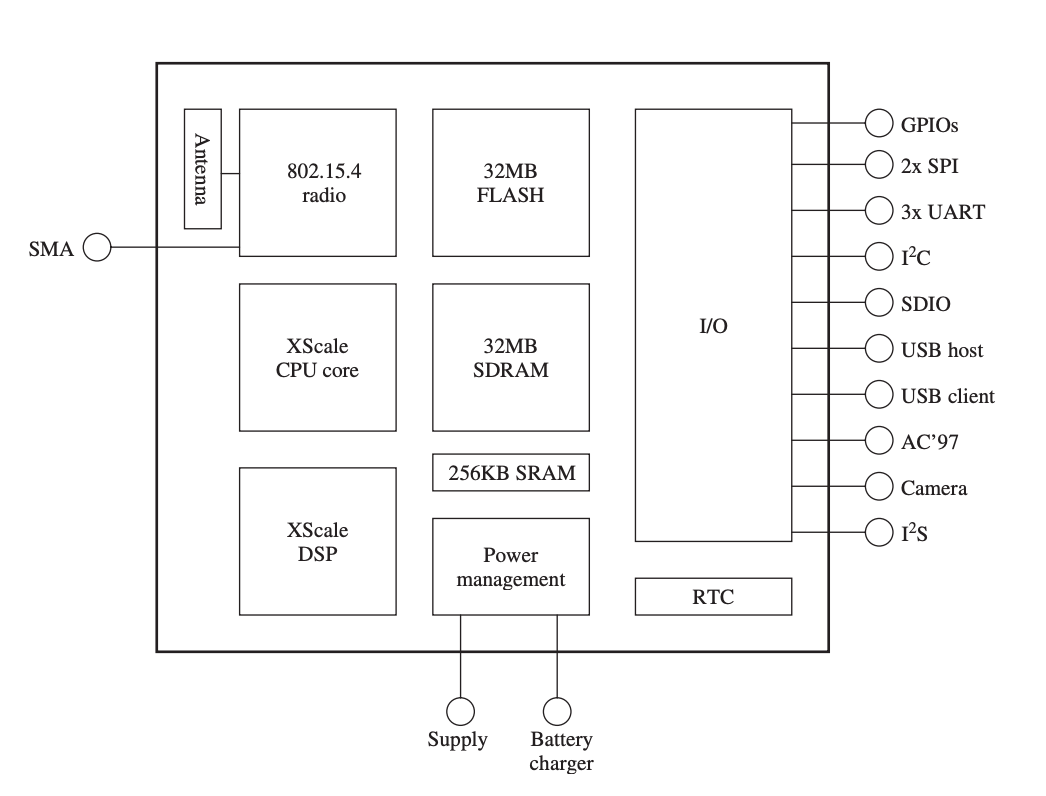

As shown in Fig 7, the IMote sensor node architecture consists of a power management subsystem, a CPU subsystem, a sensing subsystem, a communication subsystem, and an interface subsystem.

Fig 7: architecture of an IMote sensor node

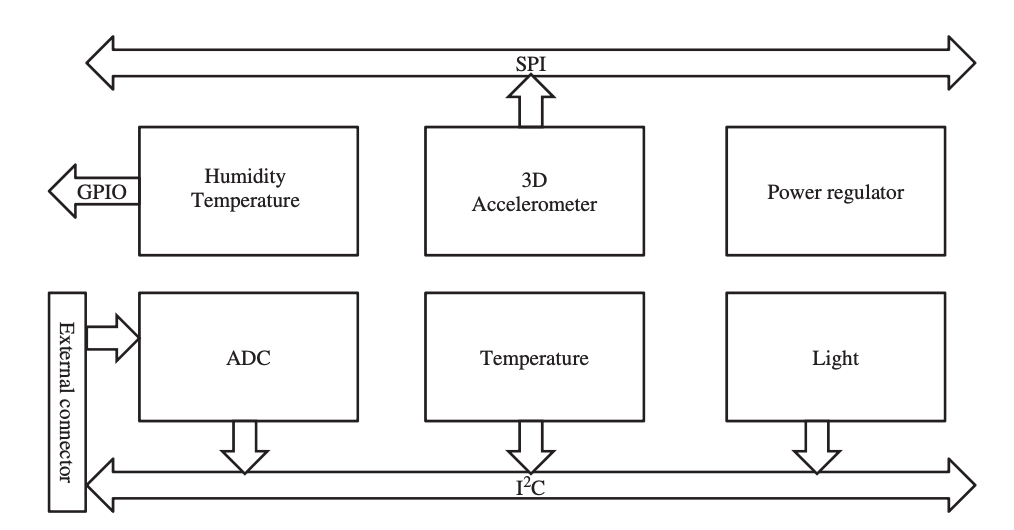

The sensing subsystem shown in the diagram provides a platform for connecting several sensor boards. One version of the sensor board includes a 12-bit, 4-channel ADC, a high-resolution temperature/humidity sensor, a low-resolution digital temperature sensor, and a light sensor. These devices are connected to the processing subsystem through the SPI and I2C buses. As the diagram shows, the SPI bus interfaces high data rate sources, whereas the I2C bus links low data rate sources.
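A raw sample from the board's 12-bit ADC must be converted to a physical voltage before it is useful. The sketch below assumes a unipolar converter with LSB = Vref / 2^12. The 3.0 V reference is hypothetical, since the text does not give one.

```python
ADC_BITS = 12     # resolution of the IMote sensor-board ADC
V_REF = 3.0       # hypothetical reference voltage in volts

def adc_to_volts(raw: int, bits: int = ADC_BITS, v_ref: float = V_REF) -> float:
    """Convert a raw count to volts, assuming LSB = v_ref / 2**bits."""
    full_scale = 1 << bits                      # 4096 codes for a 12-bit converter
    if not 0 <= raw < full_scale:
        raise ValueError(f"raw count must be in [0, {full_scale - 1}]")
    return raw * v_ref / full_scale

print(adc_to_volts(2048))   # 1.5
```

Each 12-bit sample needs two bytes on the bus. This adds up quickly at high sampling rates, which is one reason faster sources are better served by SPI.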

The processing subsystem includes a core processor (microprocessor) and a digital signal processor (DSP). The core processor supports low-voltage (0.85 V) and low-frequency (13 MHz) operation, which enables low-power operation.

Fig 8: sensing subsystem of the IMote architecture
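Low voltage and low frequency reduce power because, to first order, CMOS dynamic power is P ≈ a·C·V²·f, where a is switching activity and C is switched capacitance. The sketch compares the 0.85 V / 13 MHz point quoted for the IMote core processor with a hypothetical 1.3 V / 104 MHz point. Both a and C are assumed equal at the two operating points.

```python
def relative_dynamic_power(v1: float, f1_mhz: float, v2: float, f2_mhz: float) -> float:
    """Ratio P1/P2 of dynamic power under P ~ a*C*V^2*f with equal a and C."""
    return (v1 / v2) ** 2 * (f1_mhz / f2_mhz)

# 0.85 V / 13 MHz from the text; 1.3 V / 104 MHz is a hypothetical comparison point
ratio = relative_dynamic_power(0.85, 13, 1.3, 104)
print(f"{ratio:.3f}")   # 0.053, i.e. roughly 19x less dynamic power
```

The energy spent per clock cycle (a·C·V²) depends only on voltage. Running slower therefore saves energy per operation mainly because it permits a lower supply voltage.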

The communication subsystem, like the sensor subsystem, has an expandable interface to handle many types of radios. The Chipcon (CC2420) transceiver, which implements the IEEE 802.15.4 radio specification, is one implementation. In the 2.4-GHz band, the transceiver offers a transmission rate of 250 kbps over 16 channels.
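The "16 channels at 250 kbps" figures can be made concrete with two well-known details of the IEEE 802.15.4 2.4 GHz physical layer. Channels 11 to 26 have center frequencies of 2405 + 5(k − 11) MHz. Each frame also carries 6 bytes of PHY overhead (a 4-byte preamble, a 1-byte start-of-frame delimiter, and a 1-byte length field) ahead of a payload (PSDU) of at most 127 bytes. The helper names below are illustrative.

```python
PHY_OVERHEAD_BYTES = 4 + 1 + 1   # preamble + SFD + PHY header (length)
MAX_PSDU_BYTES = 127

def channel_center_mhz(k: int) -> int:
    """Center frequency of 2.4 GHz IEEE 802.15.4 channel k (11..26)."""
    if not 11 <= k <= 26:
        raise ValueError("2.4 GHz channels are numbered 11 to 26")
    return 2405 + 5 * (k - 11)

def frame_airtime_ms(psdu_bytes: int, bitrate_bps: int = 250_000) -> float:
    """Time on air for one frame, including PHY overhead."""
    if not 0 <= psdu_bytes <= MAX_PSDU_BYTES:
        raise ValueError("PSDU must be 0..127 bytes")
    return (PHY_OVERHEAD_BYTES + psdu_bytes) * 8 * 1000 / bitrate_bps

print(channel_center_mhz(11), channel_center_mhz(26))   # 2405 2480
print(frame_airtime_ms(MAX_PSDU_BYTES))                 # 4.256
```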

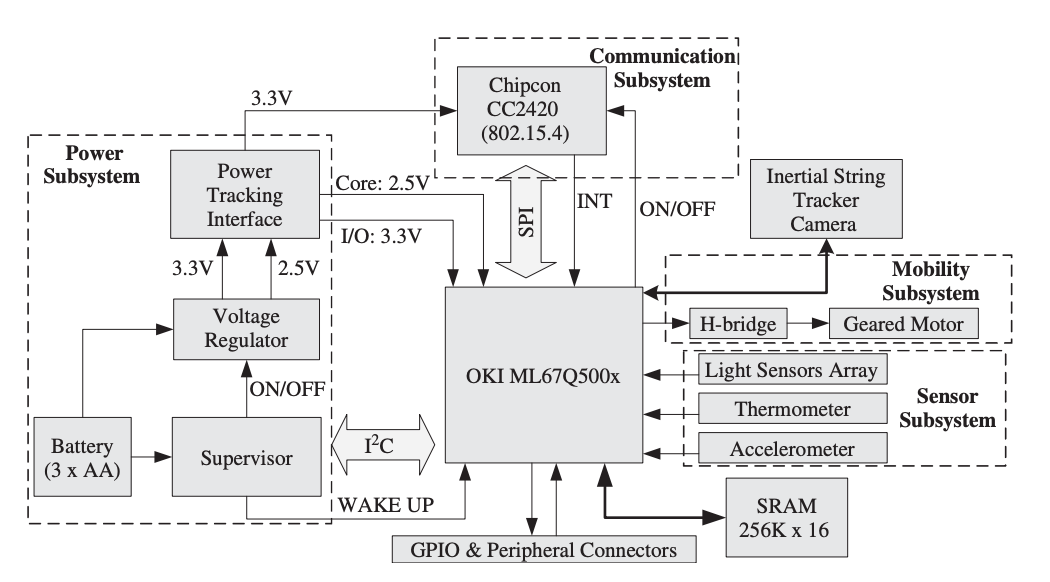

The XYZ Node Architecture

The XYZ architecture has four subsystems; Fig 9 shows a schematic diagram of the node. The CPU subsystem is built around an ARM7TDMI core microprocessor with a maximum frequency of 58 MHz. Depending on the application, the microcontroller can operate in 32-bit or 16-bit mode. The processor subsystem has 4 KB of on-chip boot ROM and 32 KB of on-chip RAM, which can be extended by up to 512 KB of flash memory.

The processor subsystem connects to the rest of the system through peripheral components that include an integrated DMA controller, four 10-bit ADC inputs, serial ports (RS232, SPI, I2C, SIO), and 42 multiplexed general-purpose I/O pins. Most of the multiplexed GPIO pins are available on two 30-pin headers, together with the DC voltage supplied either by the power subsystem or directly by an on-board voltage regulator.

Fig 9: XYZ node architecture

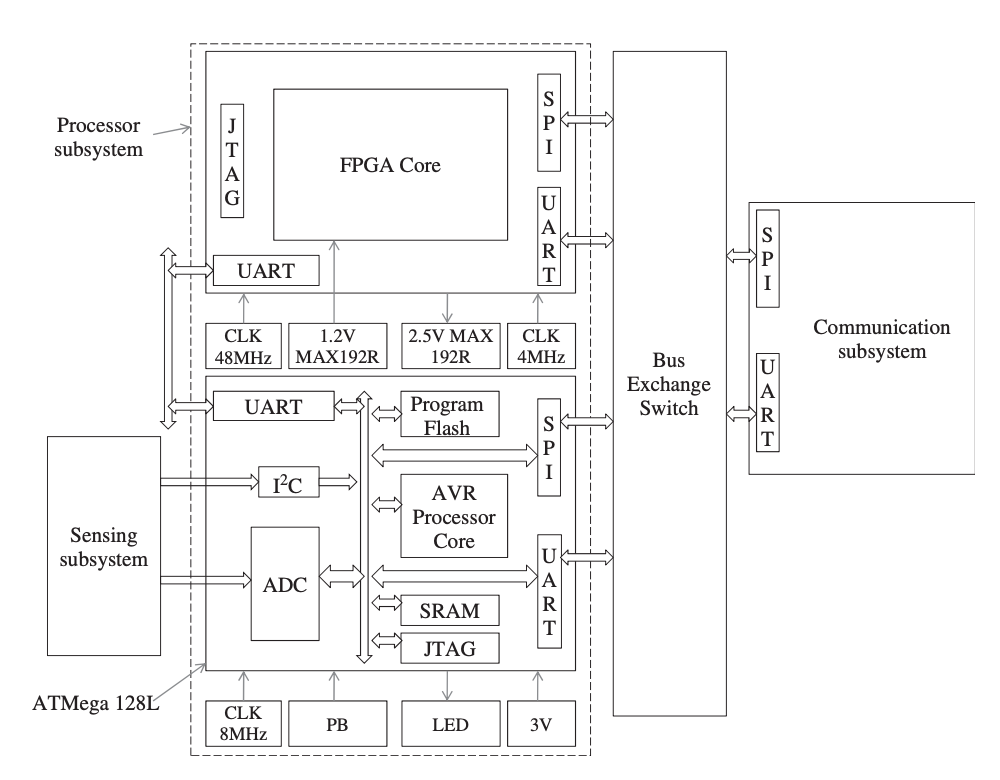

The Hogthrob Node Architecture

The Hogthrob node architecture (Bonnet et al. 2006) was designed for a specific purpose: to monitor the activity of sows on a large-scale pig farm. The main sensing task rests on the observation that there is a direct link between a sow's movement and the onset of estrus.

Accordingly, a network of nodes worn by the sows tracks their activity in order to detect this important condition and provide proper care for pregnant sows. In Denmark, for example, legislation already requires that pregnant sows be able to move freely in large pens.

The node consists of the usual subsystems. Unlike many other architectures, however, the Hogthrob node's processing subsystem contains two processors: a microcontroller and a field programmable gate array (FPGA).

The microcontroller handles the simpler, less energy-intensive responsibilities, such as controlling the communication subsystem and other peripherals. After initialization, it also serves as an external timer and analog-to-digital converter (ADC) for the FPGA. The FPGA coordinates the sensor node's actions and executes the sow monitoring program.

Fig 10 shows a partial view of the node architecture and its interfacing buses.

Fig 10: A partial view of the Hogthrob node architecture

Q13) Define operating system?

A13) Operating System

In a WSN, an operating system (OS) is a thin software layer that sits logically between the node's hardware and the application and provides application developers with basic programming abstractions. Its primary functions are to enable applications to interact with hardware resources, to schedule and prioritize tasks, and to arbitrate contention for resources among competing applications and services. It may also provide the following features:

● memory management;

● power management;

● file management;

● networking;

● a set of programming environments and tools – commands, command interpreters, command editors, compiler, debuggers, etc. – to enable users to develop, debug, and execute their own programs; and

● legal entry points into the operating system for accessing sensitive resources such as writing to input components.

Operating systems are most commonly classified as single-task or multitasking, and as single-user or multiuser. A single-task operating system can handle only one task at a time, whereas a multitasking operating system can handle several tasks concurrently.

Multitasking operating systems need considerable memory to keep track of the states of many activities, but they allow tasks of varying complexity to run concurrently. For example, the processor subsystem of a wireless sensor node may interact with the communication subsystem while it collects data from the sensing subsystem.

A multitasking operating system is well suited to this kind of situation. On the other hand, the node's limited resources may make concurrent processing infeasible. In that case, a single-task OS, which runs only one task at a time, is the more practical choice.

Q14) Describe Functional Aspects?

A14) Functional Aspects

Data Types

Communication between subsystems is critical in wireless sensor networks (WSNs). Subsystems interact for a variety of reasons, including data exchange, delegation of functions, and signaling. These interactions follow well-defined protocols and use data types supported by the operating system. Simple data types are resource-efficient but have limited expressive power, whereas complex data structures are highly expressive but consume more resources.
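The resource cost of richer data types shows up directly in bytes. The sketch below packs a bare 16-bit reading and a hypothetical structured message (source node, message type, 32-bit timestamp, signed value, sequence number) with Python's `struct` module. The `"<"` prefix disables alignment padding, so the sizes are the raw field sums. The message layout is illustrative, not a format from any particular OS.

```python
import struct

SIMPLE_FMT = "<H"        # just a raw unsigned 16-bit reading
MESSAGE_FMT = "<BBIhH"   # hypothetical: node id, type, timestamp, value, sequence no.

def encode_message(node_id: int, msg_type: int, timestamp: int, value: int, seq: int) -> bytes:
    """Serialize the structured message in little-endian order without padding."""
    return struct.pack(MESSAGE_FMT, node_id, msg_type, timestamp, value, seq)

print(struct.calcsize(SIMPLE_FMT), struct.calcsize(MESSAGE_FMT))  # 2 10
```

The structured message can say much more (who, what, when) but costs five times the memory and airtime of the bare reading.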

Scheduling

Task scheduling is one of the most basic functions of an operating system. The efficiency of the OS depends on how well tasks can be scheduled, prioritized, and completed.

Broadly speaking, there are two scheduling mechanisms: queuing-based and round robin scheduling. In a queuing-based scheduling, tasks originating from the various subsystems are temporarily stored in a queue and executed serially according to a predefined rule. Some operating systems enable tasks to specify priority levels so that they can be given precedence. Queuing-based scheduling can be further classified into first-in-first-out (FIFO) and sorted queue.
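The two queuing-based variants differ only in the order in which queued tasks leave the queue. The Python sketch below contrasts a FIFO queue (`collections.deque`) with a sorted queue implemented as a priority heap (`heapq`). The task names and priorities are hypothetical. A lower number means more urgent, and ties keep arrival order.

```python
from collections import deque
import heapq
import itertools

def run_fifo(tasks):
    """FIFO queue: tasks execute strictly in arrival order."""
    queue = deque(tasks)
    order = []
    while queue:
        name, _priority = queue.popleft()
        order.append(name)
    return order

def run_sorted(tasks):
    """Sorted queue: highest priority (lowest number) first, FIFO among equals."""
    arrival = itertools.count()
    heap = [(prio, next(arrival), name) for name, prio in tasks]
    heapq.heapify(heap)
    order = []
    while heap:
        _prio, _seq, name = heapq.heappop(heap)
        order.append(name)
    return order

# Hypothetical tasks posted by different subsystems: (name, priority)
tasks = [("sample_sensor", 2), ("send_packet", 1), ("log_stats", 3), ("handle_alarm", 0)]
print(run_fifo(tasks))    # ['sample_sensor', 'send_packet', 'log_stats', 'handle_alarm']
print(run_sorted(tasks))  # ['handle_alarm', 'send_packet', 'sample_sensor', 'log_stats']
```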

Stacks

A stack is a data structure that temporarily stores data objects in memory by piling them one on top of another. The objects are accessed in last-in-first-out (LIFO) order. When the processor core calls a subroutine, it uses the stack to store system state information.

This way, it "remembers" where to return once the subroutine is finished. A subroutine can also call another subroutine, which pushes the current subroutine's state information on top of the state information already on the stack. When a subroutine finishes, the CPU pops the return address from the top of the stack and jumps to that address.
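Nested calls can be modeled with a plain Python list used as a LIFO stack of return addresses. The sketch below shows why the most recently called subroutine always returns first. The subroutine names and addresses are hypothetical, and a real CPU also saves registers, not only return addresses.

```python
class CallStack:
    """Minimal model of a hardware call stack holding return addresses."""
    def __init__(self):
        self._frames = []

    def call(self, return_addr: int) -> None:
        self._frames.append(return_addr)   # push: remember where to resume

    def ret(self) -> int:
        return self._frames.pop()          # pop: most recent return address (LIFO)

    def depth(self) -> int:
        return len(self._frames)

stack = CallStack()
stack.call(0x0104)          # main calls read_sensor; main resumes at 0x0104
stack.call(0x0210)          # read_sensor calls adc_convert; read_sensor resumes at 0x0210
print(hex(stack.ret()))     # 0x210: adc_convert returns into read_sensor first
print(hex(stack.ret()))     # 0x104: then read_sensor returns into main
```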

System Calls

The operating system provides a number of basic functions that enable separation of concerns. They decouple access to hardware resources and other low-level services from the implementation details of the access methods. Users call these functions whenever they want to access a hardware resource, such as a sensor, watchdog timer, or radio, without having to know how the hardware is accessed. In a UNIX environment, these basic functions are known as system calls.
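The separation of concerns can be illustrated with a dispatch table. The application names a service, and the OS routes the request to a driver whose details stay hidden. In the Python sketch below, the drivers, the call names, and the `syscall` function are all hypothetical stand-ins; real drivers would access hardware registers.

```python
def _temperature_driver_read() -> int:
    """Hypothetical driver: pretend reading of 22.15 degC in hundredths of a degree."""
    return 2215

def _radio_driver_send(payload: bytes) -> int:
    """Hypothetical driver: pretend the radio accepted every byte."""
    return len(payload)

# The only entry points an application is allowed to use.
_SYSCALL_TABLE = {
    "read_temperature": _temperature_driver_read,
    "radio_send": _radio_driver_send,
}

def syscall(name: str, *args):
    """Route a request to the OS service; callers never touch the driver directly."""
    try:
        handler = _SYSCALL_TABLE[name]
    except KeyError:
        raise ValueError(f"unknown system call: {name}") from None
    return handler(*args)

# Application code: no knowledge of how the hardware is accessed
reading = syscall("read_temperature")
sent = syscall("radio_send", b"\x08\xa7")
print(reading, sent)   # 2215 2
```

Replacing a driver only changes the table entry. Application code that calls `syscall` stays untouched.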

Handling Interrupts

An interrupt is an asynchronous signal generated by a hardware device (such as a sensor, a watchdog timer, or a radio) that causes the processor to suspend the current execution and invoke an interrupt handler. The CPU saves the state of the interrupted process on the stack and then passes control to the interrupt handler.

For example, when the communication subsystem receives a packet that must be handled immediately, it may raise an interrupt. The processor subsystem must then suspend its current execution and invoke the OS module responsible for handling radio packets.

Besides hardware devices, the operating system can define system events that trigger interrupt signals. In some cases, the operating system generates periodic interrupts so that the processor can monitor the state of hardware resources and notify event handlers when a hardware condition of interest occurs.
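The save, dispatch, and restore cycle described above can be simulated in a few lines. In the toy model below, pending interrupts are checked between main-program instructions. That is a simplifying assumption, as is representing saved state by the program counter alone. Handlers are looked up in a hypothetical vector table keyed by interrupt source.

```python
from collections import deque

class ToyCPU:
    """Toy processor: services pending interrupts between main-program instructions."""
    def __init__(self, vector_table):
        self.vector_table = vector_table   # interrupt source -> handler function
        self.pending = deque()             # raised but not yet serviced
        self.stack = []                    # saved contexts of interrupted code
        self.pc = 0                        # program counter of the main program
        self.log = []

    def raise_irq(self, source):
        self.pending.append(source)        # asynchronous with respect to the program

    def step(self):
        self.pc += 1                       # execute one main-program instruction
        while self.pending:
            source = self.pending.popleft()
            self.stack.append(self.pc)     # save state of the interrupted program
            self.vector_table[source](self)
            self.pc = self.stack.pop()     # restore state and resume

def on_radio(cpu):
    cpu.log.append(f"radio packet handled, will resume at pc={cpu.stack[-1]}")

def on_timer(cpu):
    cpu.log.append(f"periodic hardware check, will resume at pc={cpu.stack[-1]}")

cpu = ToyCPU({"radio": on_radio, "timer": on_timer})
cpu.step()
cpu.raise_irq("radio")    # e.g. a packet arrives
cpu.step()
cpu.raise_irq("timer")    # e.g. an OS-generated periodic interrupt
cpu.step()
print(cpu.log)
```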

Multithreading

A thread is the path of execution that a processor follows through a program. In single-task, nonpreemptive operating systems, tasks are monolithic and there is only one thread of execution. In a multithreaded environment, a task can be divided into several logical parts, each of which can be scheduled independently and executed concurrently.

Similarly, tasks from different sources can run in parallel in multiple threads. Threads belonging to the same task share the same data and address space and can communicate with one another when needed.

A multithreaded operating system has two major advantages:

● tasks do not block other tasks; this is particularly important to deal with tasks pertaining to I/O systems;

● short-duration tasks can be executed along with long-duration tasks.
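The first advantage, that a task waiting on I/O does not block the others, can be demonstrated with Python's `threading` module. In this sketch a `threading.Event` stands in for a radio becoming ready, which is an assumption for illustration. One thread blocks on it while a second thread finishes a short computation and then "delivers" the packet.

```python
import threading

radio_packet = threading.Event()   # stands in for an I/O device becoming ready
results = []
results_lock = threading.Lock()    # threads share the task's data, so guard it

def wait_for_radio():
    radio_packet.wait(timeout=5)   # blocks this thread only
    with results_lock:
        results.append("packet processed")

def aggregate_readings():
    total = sum(range(1, 101))     # short computation over 100 pretend samples
    with results_lock:
        results.append(f"aggregate={total}")
    radio_packet.set()             # simulate the packet arriving afterwards

io_thread = threading.Thread(target=wait_for_radio)
cpu_thread = threading.Thread(target=aggregate_readings)
io_thread.start()
cpu_thread.start()
io_thread.join()
cpu_thread.join()
print(results)   # ['aggregate=5050', 'packet processed']
```

The order is deterministic here because the I/O thread cannot append until the event is set, which happens only after the computation has recorded its result.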

Thread-Based vs Event-Based Programming

Concurrent activities, particularly those related to I/O, are critical in wireless sensor networks, so an OS must choose between thread-based and event-based execution models.

Several aspects must be considered when deciding whether an OS should support threads or events. These include the need for a separate stack per thread and the need to estimate each stack's maximum size for storing context information. Thread-based programs use multiple threads of control within a single program and a single address space. As a result, a thread that is blocked waiting for an I/O device can be suspended while other tasks continue in separate threads.
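For contrast with the thread-based model, an event-based program runs on a single stack. Handlers are registered for events and run to completion one at a time, and a long I/O operation is split into a request and a later completion event. The `EventLoop` class and the event names below are a hypothetical minimal sketch, not the API of any specific WSN operating system.

```python
from collections import deque

class EventLoop:
    """Single-stack event dispatcher: handlers run to completion, one at a time."""
    def __init__(self):
        self.handlers = {}
        self.queue = deque()

    def on(self, event, handler):
        self.handlers.setdefault(event, []).append(handler)

    def post(self, event, data=None):
        self.queue.append((event, data))

    def run(self):
        while self.queue:
            event, data = self.queue.popleft()
            for handler in self.handlers.get(event, []):
                handler(data)        # must not block; long work is split into events

sent = []
loop = EventLoop()
# Hypothetical events: an ADC completion triggers a send, which records the value.
loop.on("adc_done", lambda value: loop.post("send", value * 2))
loop.on("send", sent.append)
loop.post("adc_done", 21)
loop.run()
print(sent)   # [42]
```

No per-handler stack has to be sized in advance. The price is that every blocking step must be rewritten as an event handler.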

Memory Allocation

Memory is a valuable resource. It holds the operating system as well as the application's code and data, which are stored there temporarily. How much memory is allocated to a program, and for how long, affects how fast the program executes.

A program's memory can be allocated statically or dynamically. Static memory allocation is a memory-saving technique, but it can only be used if the program's memory requirements are known in advance. The memory is allocated once, when the program starts running, and is never released.

Dynamic memory allocation is used when the size and lifetime of the required memory are not known at compile time. This includes applications that use dynamic data structures and whose memory requirements are not known until run time. Such programs often use memory only temporarily.
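A common middle ground on constrained nodes is to reserve one region statically and then hand out fixed-size blocks from it dynamically. The `BlockPool` class below is a hypothetical Python sketch of such a pool allocator. It is not taken from any particular WSN operating system.

```python
class BlockPool:
    """Fixed-size block allocator over a region reserved once at start-up."""
    def __init__(self, block_size: int, num_blocks: int):
        self.block_size = block_size
        self.num_blocks = num_blocks
        self.memory = bytearray(block_size * num_blocks)  # static region, never resized
        self.free_list = list(range(num_blocks))          # indices of free blocks

    def alloc(self):
        """Return the byte offset of a free block, or None if the pool is exhausted."""
        if not self.free_list:
            return None
        return self.free_list.pop() * self.block_size

    def free(self, offset: int) -> None:
        """Return a block to the pool; reject misaligned, out-of-range or double frees."""
        index, rem = divmod(offset, self.block_size)
        if rem or not 0 <= index < self.num_blocks or index in self.free_list:
            raise ValueError(f"invalid or double free at offset {offset}")
        self.free_list.append(index)

pool = BlockPool(block_size=32, num_blocks=2)
a = pool.alloc()
b = pool.alloc()
print(a, b, pool.alloc())   # 32 0 None
```

Because every block has the same size, allocation and release take constant time and the region cannot fragment. The cost is that a request larger than one block cannot be served.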

Q15) Explain Non-functional Aspects?

A15) Non-functional Aspects

Separation of Concern

Because of their severely limited resource budgets, operating systems designed for resource-constrained devices, and for the networks these devices form, differ from general-purpose operating systems. In general-purpose operating systems, the operating system and the programs running on top of it are clearly separated and communicate through well-defined interfaces and system calls. Individual operating system services can be upgraded, debugged, or removed independently. In wireless sensor networks, such a separation is difficult to maintain.

In most cases, the operating system consists of a collection of lightweight modules that are "wired" together into a monolithic program that handles sensing, processing, and communication. The wiring is done at compile time, producing a single system image that is installed on each node. Some operating systems provide an indivisible kernel plus a set of library components that support application development. Others provide a kernel plus a collection of reprogrammable (reconfigurable) low-level services that encapsulate the node's hardware components.

System Overhead

Because an operating system executes program code, it needs its own set of resources. Its size and the types of services it provides to higher-level services and applications determine how much resource it requires. The operating system's overhead is the amount of resources it consumes.

Currently available wireless sensor nodes have memory measured in kilobytes and processors running at a few megahertz. These resources must be shared by the programs that perform sensing, data aggregation, self-organization, network management, and communication. It is therefore important to understand how much overhead the operating system adds.

Portability

In theory, nodes with heterogeneous architectures and operating systems should be able to coexist and interact. Existing operating systems, however, do not yet support this.

A related issue is whether an operating system can be ported to keep pace with rapidly evolving hardware architectures. Wireless sensor networks are still a new technology. Node architectures have evolved significantly over the last decade, and this evolution is expected to continue as new application domains are explored and impose unforeseen requirements. Operating systems must therefore be portable and extensible.

Dynamic Reprogramming

Once a WSN has been deployed, it may be necessary to reprogram parts of the application or the operating system for the following reasons:

● The network may not run properly if complete knowledge of the deployment setting is not available at the time of deployment.

● both the application requirements and the physical conditions of the network's operating environment can change over time; and

● bugs may need to be detected and fixed while the network is in operation.

Because of the large number of nodes involved, updating software manually may not be possible. The alternative is an operating system that supports dynamic reprogramming. Dynamic reprogramming appears to be impossible to provide, however, if there is no clear separation between the application and the operating system.

If the two are separated, dynamic reprogramming can be supported in principle, but its practical implementation depends on several factors.

First, the operating system must be able to receive the software update in pieces, assemble them, and store them temporarily in memory. Second, it should verify that the received code is indeed the most recent version. Third, it must be able to remove and unconfigure the outdated program before installing and configuring the new version. All of these steps consume resources and may introduce bugs of their own.
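The first two steps can be sketched as follows. Fragments arrive out of order and possibly duplicated, so the image is reassembled by sequence number and the version number is checked before anything is installed. The CRC-32 integrity check (`zlib.crc32`) and the known total fragment count are assumptions added for illustration. Installation itself is not modeled.

```python
import zlib

def assemble_update(fragments, total_fragments, expected_crc,
                    installed_version, new_version):
    """Rebuild a code image from (sequence_number, bytes) fragments.
    Returns the image, or None if the update must be rejected."""
    if new_version <= installed_version:
        return None                                   # not newer than what is running
    chunks = dict(fragments)                          # duplicates collapse by sequence number
    if set(chunks) != set(range(total_fragments)):
        return None                                   # missing or unexpected fragment
    image = b"".join(chunks[i] for i in range(total_fragments))
    if zlib.crc32(image) != expected_crc:
        return None                                   # corrupted in transit
    return image

# Hypothetical update split into three fragments that arrive out of order
image = b"new-firmware-v2"
fragments = [(1, image[5:10]), (0, image[:5]), (2, image[10:])]
crc = zlib.crc32(image)
print(assemble_update(fragments, 3, crc, installed_version=1, new_version=2))
```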

Q16) Define Prototypes?

A16) Prototypes

TinyOS

TinyOS is the most popular WSN runtime environment, and it is well documented and well supported by tools. It has also gone through a lengthy design and evolution process, resulting in a clear operational concept.

TinyOS's compact architecture allows it to support a wide range of applications. The architecture consists of a scheduler and a set of components that communicate with one another through well-defined interfaces. There are two types of components: configurations and modules. Modules are the basic building blocks of a TinyOS program, whereas a configuration specifies how two or more modules are connected (this is referred to as "wiring").

A TinyOS application is built by combining several configurations into a single executable. TinyOS has no clear boundary between the operating system and the application.

A component consists of a frame, command handlers, event handlers, and a set of nonpreemptive tasks. A component is comparable to an object in object-based programming languages: it encapsulates state and interacts with it through well-defined interfaces. An interface can define commands, event handlers, and tasks.

Each of these runs in the context of the frame and operates on its state. A component must therefore explicitly declare the commands it uses and the events it signals. This makes it possible to determine the resources an application requires at compile time.

Higher-level components issue commands to lower-level components, and lower-level components signal events to higher-level components. Higher-level components therefore implement event handlers, whereas lower-level components implement command handlers (function subroutines). The physical hardware sits at the bottom of the component hierarchy.
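The command-down and event-up pattern and the role of a configuration can be mimicked in Python. Real TinyOS components are written in nesC and are often split-phase, with the event arriving later from an interrupt. This sketch calls the event handler synchronously for simplicity, and all class and method names are hypothetical.

```python
class AdcModule:
    """Lower-level module: implements a command and signals an event upward."""
    def __init__(self):
        self.user = None                 # filled in by the configuration (wiring)

    def get_data(self):                  # command
        sample = 512                     # pretend hardware conversion result
        self.user.data_ready(sample)     # signal the event to the wired component

class SenseApp:
    """Higher-level module: issues commands and implements event handlers."""
    def __init__(self):
        self.adc = None
        self.readings = []               # component state (its "frame")

    def start(self):
        self.adc.get_data()              # issue a command downward

    def data_ready(self, value):         # event handler
        self.readings.append(value)

def wire(app: SenseApp, adc: AdcModule) -> SenseApp:
    """Configuration: connects the modules once, before the program runs."""
    app.adc = adc
    adc.user = app
    return app

app = wire(SenseApp(), AdcModule())
app.start()
print(app.readings)   # [512]
```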

SOS

SOS attempts to establish a balance between flexibility and resource efficiency. Unlike TinyOS, it supports runtime reconfiguration and reprogramming of program code. The OS consists of a kernel and a set of modules that can be loaded and unloaded. In functionality, a module is similar to a TinyOS component – it implements a specific task or function.

Moreover, in the same way that TinyOS components can be “wired” to build an application, an SOS application is composed of one or more interacting modules. Unlike a TinyOS component, which has a static place in memory, a module in SOS is a position-independent binary.

Interfaces to the underlying hardware are provided by the SOS kernel. It also allows dynamic memory allocation and has a priority-based scheduling mechanism.

Interaction

Modules interact through messages (asynchronous communication) and through direct calls to registered functions (synchronous communication). A message sent from module A to module B first goes through the scheduler, where it is placed in a priority queue. The kernel then delivers the message to the appropriate message handler in module B.

Each module implements message handlers specific to its purpose. A module can also interact with another module by calling one of its registered functions directly. Interaction through a function call is faster than communication through messages, but it requires modules to explicitly register their public functions with the kernel.

Any module that wants to use these functions must subscribe to them. A function is registered during module initialization by calling the system function ker_register_fn. The call tells the kernel where in the module's binary image the function is implemented.
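The two interaction styles can be contrasted in a toy kernel. Messages are queued by priority and delivered later, while registered functions are called immediately after subscription. `register_fn` is only loosely modeled on SOS's `ker_register_fn`. Its signature here, like every other name in this sketch, is hypothetical and does not reproduce the real SOS API.

```python
import heapq
import itertools

class MessageKernel:
    """Toy SOS-like kernel: priority message queue plus a public-function registry."""
    def __init__(self):
        self._queue = []
        self._arrival = itertools.count()   # FIFO order among equal priorities
        self._handlers = {}
        self._functions = {}

    def load(self, module_id, message_handler):
        self._handlers[module_id] = message_handler

    def post(self, dest, msg, priority=1):
        """Asynchronous: enqueue now, deliver later (lower number = higher priority)."""
        heapq.heappush(self._queue, (priority, next(self._arrival), dest, msg))

    def dispatch(self):
        while self._queue:
            _prio, _seq, dest, msg = heapq.heappop(self._queue)
            self._handlers[dest](msg)

    def register_fn(self, module_id, name, fn):
        self._functions[(module_id, name)] = fn

    def subscribe_fn(self, module_id, name):
        """Synchronous path: hand back the function so the caller invokes it directly."""
        return self._functions[(module_id, name)]

kernel = MessageKernel()
received = []
kernel.load("B", received.append)
kernel.register_fn("B", "get_count", lambda: len(received))
kernel.post("B", "low", priority=2)
kernel.post("B", "urgent", priority=0)
get_count = kernel.subscribe_fn("B", "get_count")
print(get_count())            # 0: messages are not delivered yet
kernel.dispatch()
print(received, get_count())  # ['urgent', 'low'] 2
```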

Dynamic Reprogramming

SOS supports dynamic reprogramming thanks to five basic features. First, modules are position-independent binaries that use relative rather than absolute addresses, which makes them relocatable. Second, every SOS module implements two kinds of message handlers: init and final. The kernel calls the init message handler when a module is first loaded. Its purpose is to initialize the module, which includes setting up initial periodic timers, registering functions, and subscribing to functions.

The kernel calls the final message handler before a module is removed. Its purpose is to let the module leave the system gracefully by releasing all the resources it holds, such as timers, memory, and registered functions. After the final message, the kernel performs garbage collection.

Third, SOS uses a linker script to place a module's init handler at a known offset in the binary during compilation, which makes linking easy when the module is inserted. Fourth, SOS stores a module's state outside the module itself, so a newly inserted module can inherit the state of the module it replaces.
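The init/final handshake and the externally stored state can be illustrated with a hot-swap of a module. In the Python sketch below, the kernel owns each module's state and resource bookkeeping. A replacement module therefore picks up the old state, and anything a module forgets to release is reclaimed after its final handler. Position independence and the linker script are not modeled, and all names are hypothetical.

```python
class SosLikeKernel:
    """Keeps module state and held resources outside the modules (hypothetical sketch)."""
    def __init__(self):
        self.states = {}       # module id -> state; survives module replacement
        self.loaded = {}       # module id -> module object
        self.resources = {}    # module id -> resources currently held

    def insert(self, module_id, module):
        state = self.states.setdefault(module_id, {})   # inherit previous state, if any
        held = self.resources.setdefault(module_id, set())
        module.init(state, held)                         # "init" message
        self.loaded[module_id] = module

    def remove(self, module_id):
        module = self.loaded.pop(module_id)
        held = self.resources[module_id]
        module.final(held)                               # "final" message
        leaked = set(held)                               # anything not released
        held.clear()                                     # kernel reclaims it
        return leaked

class CounterV1:
    def init(self, state, held):
        state.setdefault("count", 0)
        held.add("timer")                # e.g. start a periodic timer

    def final(self, held):
        held.discard("timer")            # release the timer gracefully

    def tick(self, state):
        state["count"] += 1

class CounterV2(CounterV1):
    def tick(self, state):
        state["count"] += 2              # new behavior, same state layout

kernel = SosLikeKernel()
kernel.insert("counter", CounterV1())
for _ in range(3):
    kernel.loaded["counter"].tick(kernel.states["counter"])
leaked = kernel.remove("counter")
kernel.insert("counter", CounterV2())    # replacement inherits count == 3
kernel.loaded["counter"].tick(kernel.states["counter"])
print(leaked, kernel.states["counter"])  # set() {'count': 5}
```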

Contiki

Contiki is a hybrid operating system. By default its kernel is event-driven, but multithreading is provided by an application library. This library is dynamically linked only with applications that explicitly require it.

Like SOS, Contiki separates the kernel's core system support from the rest of the system, which consists of dynamically loadable and reprogrammable services known as processes. Services communicate with one another by posting events to the kernel. The kernel does not provide a hardware abstraction; instead, it allows device drivers and applications to access the hardware directly.

Because the kernel's scope is restricted, services are easy to reprogram and replace. Each Contiki service manages its own state in a private memory region, and the kernel holds a pointer to that process state. A service does, however, share the same address space with the other services. Each service also has an event handler and an optional poll handler. The diagram shows how Contiki's memory is assigned in ROM and RAM.

Fig 11: The Contiki operating system: the system programs are partitioned into core services and loaded programs

As the figure shows, Contiki is divided into two parts: the core services lie inside the broken lines, and the dynamically loadable services lie outside them. This partitioning is done at compile time. The core consists of the kernel, the program loader, a communication stack with device drivers for the communication hardware, and other frequently used services.
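The event-posting structure can be sketched as a tiny kernel that delivers events to loadable services. Each service has private state, an event handler, and an optional poll handler. Everything here is a hypothetical Python analogy rather than Contiki's C API. The names `request_poll` and `run_once` are invented, and servicing polls before queued events is an assumed ordering.

```python
from collections import deque

class Process:
    """A loadable service: private state, an event handler, an optional poll handler."""
    def __init__(self, name, on_event, on_poll=None):
        self.name = name
        self.state = {}             # private memory owned by this service
        self.on_event = on_event
        self.on_poll = on_poll

class ContikiLikeKernel:
    def __init__(self):
        self.processes = {}
        self.events = deque()
        self.poll_requests = set()

    def start(self, proc):
        self.processes[proc.name] = proc

    def exit(self, name):
        self.processes.pop(name, None)   # a service can be removed or replaced at run time

    def post(self, dest, event, data=None):
        self.events.append((dest, event, data))

    def request_poll(self, name):
        self.poll_requests.add(name)

    def run_once(self):
        # Assumption: pending polls are serviced before queued events.
        for name in sorted(self.poll_requests):
            proc = self.processes.get(name)
            if proc is not None and proc.on_poll is not None:
                proc.on_poll(self, proc)
        self.poll_requests.clear()
        while self.events:
            dest, event, data = self.events.popleft()
            proc = self.processes.get(dest)
            if proc is not None:         # events for unloaded services are dropped
                proc.on_event(self, proc, event, data)

def radio_poll(kernel, proc):
    kernel.post("app", "packet", b"\x01\x02")   # driver reports a pretend packet

def app_event(kernel, proc, event, data):
    if event == "packet":
        proc.state["packets"] = proc.state.get("packets", 0) + 1

def ignore(kernel, proc, event, data):
    pass

kernel = ContikiLikeKernel()
app = Process("app", on_event=app_event)
kernel.start(Process("radio", on_event=ignore, on_poll=radio_poll))
kernel.start(app)
kernel.request_poll("radio")
kernel.run_once()
print(app.state)   # {'packets': 1}
```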