UNIT 3

CPU Scheduling

- What are System modes in an operating system?

Sol:

- A system comprises a limited number of resources to be distributed among various contending processes. The resources are divided into a few types, each comprising some number of indistinguishable instances.

- Memory space, CPU cycles, files, and I/O devices, (for example, printers and DVD drives) are models of resource types. On the off chance that a system has two CPUs, at that point, the resource type CPU has two instances. Also, the resource type printer may have five instances.

- A process must request a resource before utilizing it and must release the resource after utilizing it. A process may request the same number of resources as it requires to complete its assigned task.

- The number of resources mentioned may not exceed all number of resources available in the system. Or we can say that a process can't demand three printers if the system has just two.

- Under the ordinary mode of operation, a process may use a resource in just the accompanying sequence:

- Request. The process request for the resource first. If the request can't be assigned quickly (for instance, if the resource is being utilized by another process), at that point, the mentioning process must wait until it can procure the resource.

- Use. The process can work on the resource (for instance, if the resource is a printer, the process can print on the printer).

- Release. The process releases the resource after utilizing it.

- A lot of processes are in a deadlocked state when each process in the set is waiting for an event that can be caused uniquely by another process in the set.

- The event with which we are fundamentally worried here is resource acquirement and release. The resources might be either physical (for instance, printers, tape drives, memory space, and CPU cycles) or logical resources (for instance, files, semaphores, and monitors).

2. Explain Deadlock characterization

Sol:

- In a deadlock, processes never complete their execution, and system resources are tied up, keeping different jobs from the beginning.

Necessary Conditions

- A deadlock occurs in the operating system when at least two processes need some resource to finish their execution that is held by the different process.

- A deadlock happens if the four Coffman conditions prove to be true. But, these conditions are not related. They are described as follows:

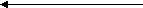

Mutual Exclusion

- There ought to be a resource that must be held by one process at once.

- In the figure below, there is a single instance of Resource 1 and it is held by Process 1 as it were.

Allocated

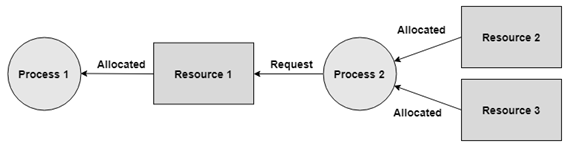

Hold and Wait

- A process can hold numerous resources and still demand more resources from different processes that are holding them.

- In the graph given beneath, Process 2 holds Resource 2 and Resource 3 and is mentioning Resource 1 which is held by Process 1.

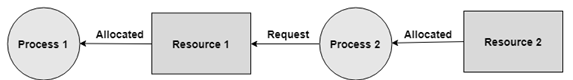

No Preemption

- A resource can't be pre-empted forcefully from a process. A process can just release a resource wishfully.

- In the graph underneath, Process 2 can't preempt Resource 1 from Process 1. It might be discharged when Process 1 gives up it intentionally after its execution is finished.

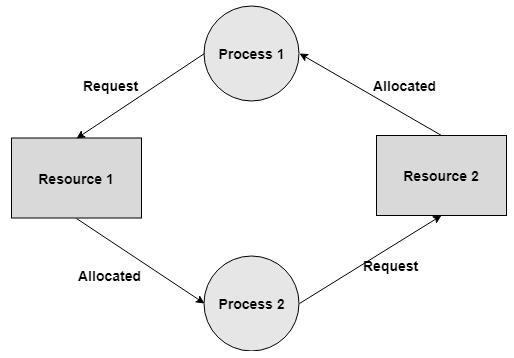

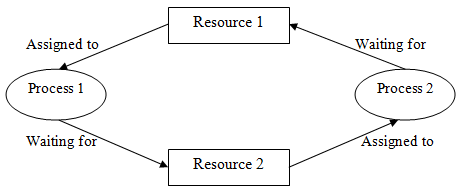

Circular Wait

- A process is waiting for the resource held continuously by another process, which is waiting for the resource held by the third process, etc, till the last process is waiting for a resource held by the first process. These structures around the chain.

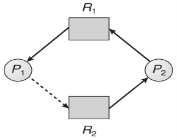

- For instance: Process 1 is assigned Resource 2 and it is requesting Resource 1. Likewise, Process 2 is assigned Resource 1 and it is requesting Resource 2. This structures a circular wait loop.

3. What is Resource-Allocation Graph in an operating system?

Sol:

- A resource allocation graph shows which resource is held by which process and which process is waiting for a resource of a specific kind.

- It is an amazing and straightforward tool to outline how interacting processes can deadlock.

- Therefore, the resource allocation graph describes what is the condition of the system as far as processes and resources are concerned. Like what many resources are accessible, what number of are allotted, and what is the request of each process.

- Everything can be represented in terms of a graph.

- One of the benefits of having a graph is, sometimes it is conceivable to see a deadlock straightforwardly by utilizing RAG, and however you probably won't realize that by taking a glance at the table.

- Yet, the tables are better if the system contains bunches of process and resource and the Graph is better if the system contains less number of process and resource.

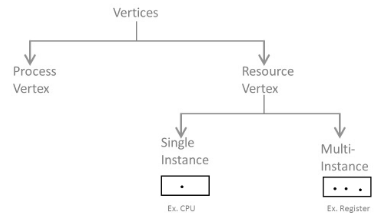

- We realize that any diagram contains vertices and edges. So RAG likewise contains vertices and edges. In RAG vertices are of two sort –

- Process vertex – Every process will be shown as a process vertex. In RAG, the process will be drawn with a circle.

- Resource vertex – Every resource will be shown as a resource vertex. It is likewise of two types –

- Single instance type resource – It is drawn as a rectangle, inside the rectangle, there will be one dot. So the quantity of dots demonstrates what number of instances are available of every resource type.

- Multi-resource instance type resource – It is also shown as a rectangle, inside the rectangle, there will be numerous dots present.

- There are two kinds of edges in RAG –

- Assign Edges-

- Assign edges shows the assigned resources to the processes.

- They are drawn as an arrow pointed towards the process and the tail focuses on the resource.

- Request Edges-

- Request edges show the waiting condition of processes for the resources.

- They are drawn as an arrow where the head of the arrow indicates the instance of the resource and the tail of the arrow focuses on the process.

- On the off chance that a process requires 'n' instances of a resource type, at that point 'n' assign edges will be drawn.

- Assign Edges-

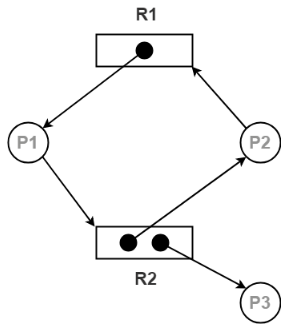

Example- Resource Allocation Graph is shown in the figure below:

The above graph describes the accompanying data:

- There exist three processes in the system P1, P2, and P3 respectively.

- There exist two resources in the system to be specific R1 and R2.

- Resource R1 has a single instance and resource R2 has two instances.

- Process P1 holds one instance of resource R1 and is waiting for an instance of resource R2.

- Process P2 holds one instance of resource R2 and is waiting for an instance of resource R1.

- Process P3 holds one instance of resource R2 and isn't waiting for anything.

4. Explain Methods for handling in an operating system

Sol:

- There are three ways we can manage the deadlock issue:

- We can utilize a protocol to prevent or avoid deadlocks, through which we can guarantee that the system will never enter a deadlock state.

- In a second way, we can enable the system to enter a deadlock state, detect it, and recover from it.

- The third solution is the simplest one in which we can ignore the issue and imagine that deadlock never happens in the system.

- In the third arrangement which is the one utilized by most operating systems, including UNIX also, Windows; it is then up to the application engineer to write programs that handle deadlocks.

- So to avoid deadlocks, the system must have extra data pretty much about all processes. Specifically, the system must recognize what resources a process will or may demand later on.

- Deadlock detection is genuinely direct, however, deadlock recovery requires either prematurely ending processes or appropriating resources, neither of which is a correct option.

- If deadlocks are neither able to prevent nor detected, at that point when a deadlock happens the system will bit by bit slowed down because an increasing number of processes become stuck waiting for resources at present held by the deadlock and by other waiting processes.

5. What is Deadlocks Deadlock prevention in an operating system?

Sol:

- Deadlock prevention algorithms guarantee that in any event one of the essential conditions (Mutual exclusion, hold and wait, no preemption, and circular wait) does not remain constant.

- This methodology includes structuring a system that violates one of the four fundamental conditions required for the event of a deadlock.

- This guarantees the system stays free from the deadlock.

- Anyway, most deadlock prevention algorithms have poor resource usage, and consequently, bring about diminished throughputs.

Mutual Exclusion

- The mutual exclusion condition must hold for non-sharable resources. For instance, a printer can't be at the same time shared by a few processes.

- Sharable resources, conversely, don't require mutually exclusive access and in this manner can't be associated with a deadlock.

- Read-only files are a genuine case of a sharable resource. If many processes endeavor to open a read-only file at the same time, they can be able to access the file simultaneously.

- A process will never wait for a sharable resource. As a rule, be that as it may, we can't prevent deadlock by denying the mutual exclusion condition, since certain resources are inherently non-sharable.

Hold and Wait

- To avoid this condition processes must be kept from holding at least one resource while at the same time waiting for at least one other. There are a few conceivable outcomes for this:

- Necessitate that all processes demand all resources one at the same time. This can be a wastage of system resources if a process needs one resource in the initial stage of its execution and does not need some other resource until some other time.

- Necessitate that processes holding resources must discharge them before requesting new resources, and after that re-acquire the resources alongside the new ones out of a single new demand. This can be an issue if a process has somewhat finished an activity utilizing a resource and, at that point neglects to get it re-allocated after releasing it.

- Both of the strategies portrayed above can prompt starvation if a process requires at least one well-known resources.

No Preemption

- Preemption of process resource allocations can anticipate this state of deadlocks when it is conceivable.

- One methodology is that if a process is compelled to hold up when mentioning another resource, at that point every other resource recently held by this process is certainly discharged, (appropriated), constraining this process to re-acquire the old resources alongside the new resources in a single request.

- Another methodology is that when a resource is requested and not accessible, at that point the system hopes to perceive what different processes right now have those resources and are themselves blocked waiting for some other resource. On the off chance that such a process is discovered, at that point, a portion of their resources may get acquired and added to the list of resources for which the process is waiting.

- Both of these methodologies might be relevant for resources whose states are effectively saved and resorted, for example, registers and memory, yet are commonly not applicable to different devices, for example, printers and tape drives.

Circular Wait

- One approach to stay away from circular wait is to number all resources, and to require that processes request resources just in carefully expanding (or diminishing) order.

- In contrast, to demand resource Rj, a process should initially discharge all Ri with the end goal that i >= j.

- One major test in this plan is deciding the overall request of the various resources.

Approach

- A natural number is allotted to each resource.

- Each process is permitted to demand the resources either in just ascending or descending order of the resource number.

- If ascending request is followed, if a process requires a lesser number of resources, at that point it must release every one of the resources having a bigger number and the other way around.

- This methodology is the most functional methodology and implementable.

- Nonetheless, this methodology may cause starvation however will never prompt deadlock.

6. What is Deadlock avoidance in an operating system?

Sol:

- Deadlock-prevention algorithm prevents the occurrence of deadlock by limiting how demands can be made. The restrictions guarantee that at any rate one of the vital conditions for deadlock can't happen and, thus, deadlock can't occur.

- Conceivable drawbacks of preventing deadlocks by this strategy, in any case, result in low device usage and decreased system throughput.

- An alternative strategy for staying away from deadlocks is to require extra data about how resources are requested.

- In this respect, each request requires that the system keeps a record of the resources as of now available, the resources at present assigned to each process, and the future demands and releases of each process.

- The different algorithms that utilize this methodology vary in the sum and type of data required. The least complex and most valuable model necessitates that each process proclaims the most maximum number of resources of each instance that it might require.

- Given this as earlier data, it is conceivable to develop an algorithm that guarantees that the system will never enter a deadlock state. Such a calculation characterizes the deadlock avoidance approach.

- A deadlock avoidance approach powerfully looks at the resource-assignment state to guarantee that a circular wait condition can never exist.

- The resource-allocation state is characterized by the number of available and allotted resources and the most extreme requests of the processes.

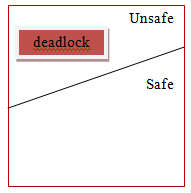

Safe State

- A state is safe if the system can assign resources to each process (up to its most extreme) in some sequence and still will be able to maintain a deadlock-free state.

- In other words, a system is in a safe state if there exists a safe sequence.

- An arrangement of processes <P1, P2, ..., Pn> is a safe arrangement for the present allocation state if, for each Pi, the resource requests that Pi make can be fulfilled by the currently accessible resources in addition to the resources held by all Pj, with j < i.

- In this circumstance, the resources that Pi needs are not quickly available, at that point then Pi can wait until all Pj have completed.

- When all Pj have completed, Pi can acquire the majority of its required resources, complete its assigned task, and return its allotted resources, and will terminate.

- At the point when Pi ends, Pi+l can get its required resources, and so on.

- If no such sequence exists, at that point the system state is said to be unsafe.

- A safe state is not a deadlock state. On the other hand, a deadlock state is an unsafe state. All the unsafe state are not deadlocked.

- Consider a system with 10 magnetic tape drives and 3 processes P0, P1, and P2 respectively. The maximum requirement and current needs of each process are shown in the table below.

Process | Maximum needs | Current allocated |

P0 | 8 | 4 |

P1 | 4 | 2 |

P2 | 8 | 2 |

- Process P0 is having 4 tape drives, P1 is having 2 tape drives and P2 is having 2 tape drives.

- For a safe sequence process, P0 needs 4 more tape drives to completes its execution. Similarly, process P1 needs 2 and P2 needs 6. Total available resources are 2.

- Since process P0 needs 5 tape drives, but availability is only 2, so process P1 will wait till gets resources. Process P1 needs only 2 so its request will be fulfilled and as P1 will finish its execution it will return all resources.

- Now available resources are 4. Then process P2 will wait as available resources are 4 and P2 needs 6.

- The system will now fulfill the needs of P0. Process P0 will execute and release its all resources. Now available resources are 8. And in the last request of the process P2 will be fulfilled.

- So, the safe sequence will be <P1, P0, P2>.

- There is one thing to be noted here that any system can go from a safe state to an unsafe state if any process requests more resources.

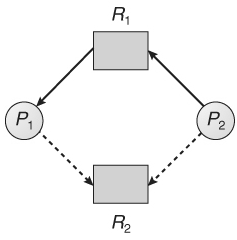

7. Explain Resource-Allocation-Graph Algorithm

Sol:

- Deadlock can be detected in a system through cycles only if the resources have just single instances in the resource-allocation graphs.

- For this situation, unsafe states can be identified and avoided by adding a claim edge in the resource-allocation graph, represented by dashed lines, which point from a process to a resource that it might request later on.

- All claim edges must be added to the graph for a specific process before that process is allowed to demand any resources.

- At the point when a process makes a request, the claim edge Pi->Rj is changed over to a request edge. Essentially when a resource is released, the task returns to a claim edge.

- This methodology works by denying demands that would deliver cycles in the resource-allocation graph, producing claim edges into results.

- Consider for instance what happens when process P2 request resource R2:

- The subsequent resource-allocation graph would have a cycle in it, thus the request can't be granted.

8. What is Banker’s Algorithm?

Sol”

- Resource allocation graph works only for a single instance. In the case of more than one instance, an alternative approach is used known as the banker's algorithm.

- The Banker's Algorithm gets its name since it is a strategy that bankers could use to guarantee that when they loan out resources they will even now have the option to fulfill every one of their client's requests.

- At the point when a process starts, it must state ahead of time the maximum resources it might ask for, up to the amount available on the system.

- At the point when a request is made, the scheduler decides if giving the request would leave the system in a safe state. In case, the process did not get a chance it must wait until the request can be granted securely.

- The banker’s algorithm depends on a few key data structures: (where n is the number of processes and m is the number of resource types.)

- Available[m] demonstrates what numbers of resources are at present available of each kind.

- Max[n][m] demonstrates the maximum request of each process of every resource.

- Allocation[n][m] demonstrates the quantity of every resource class allocated to each process.

- Need[n][m] demonstrates the rest of the resources required of each type for each process. (Note that Need[i][j] = Max[i][j] - Allocation[i][j] for all i, j)

- For simplification of the concept, we mention the accompanying notations/objective facts:

- One row of the Need vector, Need[i], can be treated as a vector comparing to the requirements of process i, and comparatively for Allocation and Max.

- A vector X is viewed as <= a vector Y if X[i] <= Y[i] for all i.

9. What is Safety Algorithm?

Sol:

We would now be able to exhibit the algorithm for seeing if or not a system is in a safe state. This algorithm can be portrayed as follows:

- Assume Work and Finish to be vectors of length m and n, individually. Initialize

Work = Available and Finish[i] = false for i = 0, 1, ... , n - 1.

2. Find an index i with the end goal that both

- Finish[i] = = false

- Needi ≤ Work

On the off chance that no such i exists, go to step 4.

3. Work = Work + Allocation;

Finish[i] = true

Go to step 2.

4. In case Finish[i] = = true for all i, at that point the system is in a safe state.

This algorithm may require a request for m x n2 operations to decide if a state is safe or not.

Resource-Request Algorithm

- Next, we depict the algorithm for deciding if requests can be securely allowed or not.

- Assume Requesti be the request vector for process Pi. If Requesti [j] = = k, at that point process Pi needs k instances of resource type Rj. At the point when a request for resources is made by process Pi, the accompanying actions are made:

- If Requesti ≤ Needi, go to step 2. Otherwise, raise an error condition, since the process has exceeded its maximum claim.

- If Requesti ≤ Available, go to step 3. Otherwise Pi must wait since the resources are not accessible.

- Have the system claim to have assigned the requested resources to process Pi by modifying the state as follows:

Available = Available – Requesti ;

Allocationi = Allocationi + Requesti ;

Needi = Needi – Requesti ;

4. In case the final resource-allocation state is safe, the transaction is finished, and process Pi is allocated its resources. Nonetheless, if the new state is unsafe, at that point Pi must wait for Requesti, and the old resource-allocation state is restored.

10. What is Deadlock detection in an operating system?

Sol:

- Deadlock detection is the process of really verifying that a deadlock exists and distinguishing the processes and resources engaged in the deadlock.

- The essential thought is to check to allocate against resource availability for all conceivable allocation sequences to decide whether the system is in a deadlocked state.

- The deadlock detection algorithm is just 50% of this procedure. When a deadlock is recognized, there should be an approach to recover a few choices exists:

- A single instance of resource: Here to detect deadlock, we can run an algorithm to check for the cycle in the Resource Allocation Graph. The presence of a cycle in the graph is an adequate condition for deadlock.

In the above graph, resource 1 and resource 2 have single occasions. There is a cycle R1 → P1 → R2 → P2. Thus, Deadlock is confirmed.

2. Multiple instances of resources: Detection of the cycle is an essential yet not adequate condition for deadlock detection, for this situation, the system might be in halt fluctuates as per various circumstances.