UNIT 5

I/O Management and Disk Scheduling

1. Explain in detail Operating System - I/O Hardware

Sol:

One of the important jobs of an operating system is to manage various I/O devices, including the mouse, keyboard, touchpad, disk drives, display adapters, USB devices, bit-mapped screen, LEDs, analog-to-digital converter, on/off switch, network connections, audio I/O, printers, etc.

An I/O system is required to take an application's I/O request and send it to the physical device, then take whatever response comes back from the device and send it to the application. I/O devices can be divided into two categories:

•Block devices − A block device is one with which the driver communicates by sending entire blocks of data. For example, hard disks, USB cameras, Disk-On-Key, etc.

•Character devices − A character device is one with which the driver communicates by sending and receiving single characters (bytes, octets). For example, serial ports, parallel ports, sound cards, etc.

Device Controllers

Device drivers are software modules that can be plugged into an OS to handle a particular device. The operating system takes help from device drivers to handle all I/O devices.

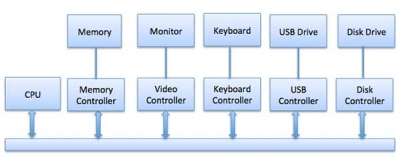

The device controller works like an interface between a device and a device driver. I/O units (keyboard, mouse, printer, etc.) typically consist of a mechanical component and an electronic component, where the electronic component is called the device controller.

There is always a device controller and a device driver for each device to communicate with the operating system. A device controller may be able to handle multiple devices. As an interface, its main task is to convert a serial bit stream to a block of bytes and to perform error correction as necessary.

Any device connected to the computer is connected by a plug and socket, and the socket is connected to a device controller. The figure above shows a model for connecting the CPU, memory, controllers, and I/O devices, where the CPU and device controllers all use a common bus for communication.

Synchronous vs asynchronous I/O

•Synchronous I/O − In this scheme, CPU execution waits while the I/O proceeds.

•Asynchronous I/O − The I/O proceeds concurrently with CPU execution.

Communication to I/O Devices

The CPU must have a way to pass information to and from an I/O device. There are three approaches available for communication between the CPU and a device.

•Special Instruction I/O

•Memory-mapped I/O

•Direct memory access (DMA)

Special Instruction I/O

This uses CPU instructions that are specifically made for controlling I/O devices. These instructions typically allow data to be sent to an I/O device or read from an I/O device.

Memory-mapped I/O

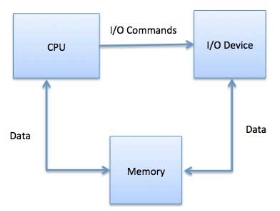

When using memory-mapped I/O, the same address space is shared by memory and I/O devices. The device is connected directly to certain main memory locations so that the I/O device can transfer a block of data to/from memory without going through the CPU.

While using memory-mapped I/O, the OS allocates a buffer in memory and informs the I/O device to use that buffer to send data to the CPU. The I/O device operates asynchronously with the CPU and interrupts the CPU when finished.

The advantage of this method is that every instruction that can access memory can be used to manipulate an I/O device. Memory-mapped I/O is used for most high-speed I/O devices like disks and communication interfaces.

Direct Memory Access (DMA)

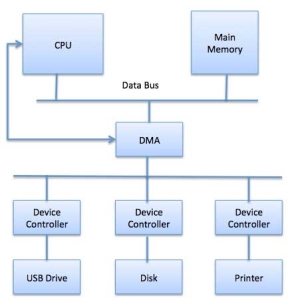

Slow devices like keyboards generate an interrupt to the main CPU after each byte is transferred. If a fast device such as a disk generated an interrupt for each byte, the operating system would spend most of its time handling these interrupts. So a typical computer uses direct memory access (DMA) hardware to reduce this overhead.

Direct memory access (DMA) means that the CPU grants an I/O module the authority to read from or write to memory without CPU involvement. The DMA module itself controls the exchange of data between main memory and the I/O device. The CPU is only involved at the beginning and end of the transfer and is interrupted only after the entire block has been transferred.

Direct memory access needs special hardware called a DMA controller (DMAC) that manages the data transfers and arbitrates access to the system bus. The controllers are programmed with source and destination pointers (where to read/write the data), counters to track the number of transferred bytes, and settings, which include I/O and memory types, interrupts, and states for the CPU cycles.

The operating system uses the DMA hardware as follows −

| Step | Description |
|------|-------------|
| 1 | A device driver is instructed to transfer disk data to a buffer address X. |
| 2 | The device driver then instructs the disk controller to transfer data to the buffer. |
| 3 | Disk controller starts DMA transfer. |
| 4 | The disk controller sends each byte to the DMA controller. |
| 5 | DMA controller transfers bytes to buffer, increases the memory address, decreases the counter C until C becomes zero. |
| 6 | When C becomes zero, the DMA interrupts the CPU to signal transfer completion. |
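The six steps can be mimicked with a small simulation. This is only a sketch: the class `DMAController`, the function `dma_read`, and the use of a `bytearray` as "main memory" are illustrative assumptions, not a real hardware interface. It shows that the address and counter are updated per byte, while the CPU is interrupted once per block.

```python
class DMAController:
    """Toy model of a DMA controller (hypothetical, for illustration only)."""

    def __init__(self, memory):
        self.memory = memory      # main memory, modelled as a bytearray
        self.address = 0          # next memory address to write
        self.count = 0            # bytes still to transfer (counter C)
        self.interrupts = 0       # completion interrupts raised so far

    def program(self, buffer_address, byte_count):
        # Steps 1-2: the driver supplies buffer address X and the byte count.
        self.address = buffer_address
        self.count = byte_count

    def receive_byte(self, value):
        # Steps 4-5: store one byte, advance the address, decrement C.
        self.memory[self.address] = value
        self.address += 1
        self.count -= 1
        if self.count == 0:
            # Step 6: a single interrupt signals completion of the block.
            self.interrupts += 1


def dma_read(disk_block, memory, buffer_address):
    dmac = DMAController(memory)
    dmac.program(buffer_address, len(disk_block))
    for value in disk_block:      # Step 3: the disk controller streams bytes
        dmac.receive_byte(value)
    return dmac.interrupts


memory = bytearray(16)
raised = dma_read(b"DATA", memory, buffer_address=4)
print(raised, bytes(memory[4:8]))   # prints: 1 b'DATA'
```

Four bytes are transferred but only one interrupt is raised, which is the overhead reduction that DMA is meant to provide.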

Polling vs Interrupts I/O

A computer must have a way of detecting the arrival of any type of input. There are two ways that this can happen, known as polling and interrupts. Both of these techniques allow the processor to deal with events that can happen at any time and that are not related to the process it is currently running.

Polling I/O

Polling is the simplest way for an I/O device to communicate with the processor. The process of periodically checking the status of the device to see if it is time for the next I/O operation is called polling. The I/O device simply puts the information in a status register, and the processor must come and get the information.

Most of the time, devices will not require attention, and when one does, it will have to wait until it is next interrogated by the polling program. This is an inefficient method, and much of the processor's time is wasted on unnecessary polls.

Compare this method to a teacher continually asking every student in a class, one after another, if they need help.

The more efficient method would be for a student to inform the teacher whenever they require assistance.

Interrupts I/O

An alternative scheme for dealing with I/O is the interrupt-driven method. An interrupt is a signal to the microprocessor from a device that requires attention.

A device controller puts an interrupt signal on the bus when it needs the CPU’s attention. When the CPU receives an interrupt, it saves its current state and invokes the appropriate interrupt handler using the interrupt vector (addresses of OS routines to handle various events). When the interrupting device has been dealt with, the CPU continues with its original task as if it had never been interrupted.

2. Explain in detail Operating System - I/O Software

Sol:

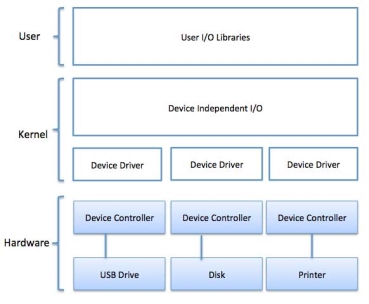

I/O software is often organized in the following layers −

•User Level Libraries − This provides a simple interface to the user program to perform input and output. For example, stdio is a library provided by the C and C++ programming languages.

•Kernel Level Modules − This provides the device drivers that interact with the device controller, and the device-independent I/O modules used by the device drivers.

•Hardware − This layer includes the actual hardware and the hardware controllers that interact with the device drivers and make the hardware work.

A key concept in the design of I/O software is that it should be device independent: it should be possible to write programs that can access any I/O device without having to specify the device in advance. For example, a program that reads a file as input should be able to read a file on a floppy disk, on a hard disk, or on a CD-ROM, without having to be modified for each different device.

Device Drivers

Device drivers are software modules that can be plugged into an OS to handle a particular device. The OS takes help from device drivers to handle all I/O devices. Device drivers encapsulate device-dependent code and implement a standard interface, so that only the driver code contains device-specific register reads and writes. A device driver is generally written by the device's manufacturer and delivered along with the device on a CD-ROM.

A device driver performs the following jobs −

•Accepting requests from the device-independent software above it.

•Interacting with the device controller to take and give I/O and performing the required error handling.

•Making sure that the request is executed successfully.

A device driver handles a request as follows: suppose a request comes to read block N. If the driver is idle at the time the request arrives, it starts carrying out the request immediately. Otherwise, if the driver is already busy with another request, it places the new request in the queue of pending requests.
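The idle/busy rule for incoming requests can be sketched as follows. The class `BlockDriver` and its method names are hypothetical; a real driver would be written against a specific kernel's driver interface and would talk to real hardware.

```python
from collections import deque


class BlockDriver:
    """Toy driver (hypothetical) that serves one request at a time."""

    def __init__(self):
        self.busy = False
        self.pending = deque()    # queue of pending requests
        self.started = []         # order in which requests were started

    def submit(self, block):
        if self.busy:
            # Driver is busy: park the request in the pending queue.
            self.pending.append(block)
            return "queued"
        self._start(block)        # Driver is idle: start immediately.
        return "started"

    def _start(self, block):
        self.busy = True
        self.started.append(block)

    def complete(self):
        # Called when the device finishes the current request.
        self.busy = False
        if self.pending:
            self._start(self.pending.popleft())


driver = BlockDriver()
results = [driver.submit(7), driver.submit(3)]
driver.complete()                 # block 7 finishes, block 3 is started
print(results, driver.started)    # prints: ['started', 'queued'] [7, 3]
```

The pending queue here is served in arrival order; an I/O scheduler could reorder it instead.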

Interrupt handlers

An interrupt handler, also known as an interrupt service routine or ISR, is a piece of software, more specifically a callback function in an operating system or in a device driver, whose execution is triggered by the reception of an interrupt.

When the interrupt happens, the interrupt procedure does whatever it has to do to handle the interrupt, updates data structures, and wakes up the process that was waiting for the interrupt to happen.

The interrupt mechanism accepts an address − a number that selects a specific interrupt handling routine/function from a small set. In most architectures, this address is an offset into a table called the interrupt vector table. This vector contains the memory addresses of specialized interrupt handlers.
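As a rough illustration of dispatch through a vector table, the sketch below uses a Python list in place of the table and plain functions in place of handler addresses. The interrupt numbers, handler names, and the `cpu_state` dictionary are assumptions made for the example only.

```python
def keyboard_handler(log):
    log.append("keyboard serviced")


def disk_handler(log):
    log.append("disk serviced")


# Hypothetical vector table: index = interrupt number, value = handler.
INTERRUPT_VECTOR = [keyboard_handler, disk_handler]


def handle_interrupt(number, cpu_state, log):
    saved_state = dict(cpu_state)    # save the interrupted task's state
    INTERRUPT_VECTOR[number](log)    # select the handler by its offset
    return saved_state               # state to restore when the handler returns


cpu_state = {"program_counter": 0x40, "registers": (1, 2, 3)}
log = []
restored = handle_interrupt(1, cpu_state, log)
print(log, restored == cpu_state)    # prints: ['disk serviced'] True
```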

Device-Independent I/O Software

The basic function of the device-independent software is to perform the I/O functions that are common to all devices and to provide a uniform interface to the user-level software. Although it is difficult to write completely device-independent software, we can write some modules that are common to all devices. Following is a list of functions of the device-independent I/O software −

•Uniform interfacing for device drivers

•Device naming - mnemonic names mapped to major and minor device numbers

•Device protection

•Providing a device-independent block size

•Buffering, because data coming off a device cannot always be stored directly in its final destination

•Storage allocation on block devices

•Allocating and releasing dedicated devices

•Error reporting

User-Space I/O Software

These are the libraries that provide a richer and simplified interface to access the functionality of the kernel or, ultimately, to interact with the device drivers. Most of the user-level I/O software consists of library procedures, with some exceptions such as the spooling system, which is a way of dealing with dedicated I/O devices in a multiprogramming system.

I/O libraries (e.g., stdio) are in user space to provide an interface to the OS-resident device-independent I/O software. For example, putchar(), getchar(), printf(), and scanf() belong to the user-level I/O library stdio available in C programming.

Kernel I/O Subsystem

The kernel I/O subsystem is responsible for providing many services related to I/O. Following are some of the services provided.

•Scheduling − The kernel schedules a set of I/O requests to determine a good order in which to execute them. When an application issues a blocking I/O system call, the request is placed on the queue for that device. The kernel I/O scheduler rearranges the order of the queue to improve the overall system efficiency and the average response time experienced by applications.

•Buffering − The kernel I/O subsystem maintains a memory area known as a buffer that stores data while it is transferred between two devices or between a device and an application. Buffering is done to cope with a speed mismatch between the producer and consumer of a data stream or to adapt between devices that have different data-transfer sizes.

•Caching − The kernel maintains cache memory, which is a region of fast memory that holds copies of data. Access to the cached copy is more efficient than access to the original.

•Spooling and Device Reservation − A spool is a buffer that holds output for a device, such as a printer, that cannot accept interleaved data streams. The spooling system copies the queued spool files to the printer one at a time. In some operating systems, spooling is managed by a system daemon process. In other operating systems, it is handled by an in-kernel thread.

•Error Handling − An operating system that uses protected memory can guard against many kinds of hardware and application errors.

3. Write some of the Application I/O interfaces

Sol:

I/O Interface :

An interface is needed whenever the CPU wants to communicate with I/O devices. The interface interprets the addresses generated by the CPU. The interface used to exchange information between the CPU and I/O devices is called the I/O interface.

Various applications of I/O Interface :

With an application I/O interface, a program can open a file without knowing what kind of device stores it. New devices can also be added to the computer system without disrupting the operating system. The interface abstracts away differences between I/O devices by identifying a few general kinds. Each general kind is accessed through a standardized set of functions, which is called an interface.

Each operating system has its own standards for the device-driver interface. A given device may ship with multiple device drivers, for instance drivers for Windows, Linux, AIX, and Mac OS. Devices vary along several dimensions, as illustrated in the following table :

| S.No. | Basis | Variation | Example |
|-------|-------|-----------|---------|
| 1. | Mode of data transfer | Character or block | Terminal, disk |
| 2. | Method of accessing data | Sequential or random | Modem, CD-ROM |
| 3. | Transfer schedule | Synchronous or asynchronous | Tape, keyboard |
| 4. | Sharing methods | Dedicated or sharable | Tape, keyboard |
| 5. | Speed of device | Latency, seek time, transfer rate, delay between operations | |
| 6. | I/O direction | Read-only, write-only, read-write | CD-ROM, graphics controller, disk |

1. Character-stream or Block:

Both kinds of device transfer data as bytes. The difference is that a character-stream device transfers bytes one after another, whereas a block device transfers a whole block of bytes as a single unit.

2. Sequential or Random access:

A sequential device transfers data in a fixed order determined by the device, whereas the user of a random-access device can instruct the device to seek to any of the available data storage locations.

3. Synchronous or Asynchronous:

A synchronous device performs data transfers with predictable response times, in coordination with other aspects of the system. An asynchronous device exhibits irregular or unpredictable response times that are not coordinated with other computer events.

4. Sharable or Dedicated:

A sharable device can be used concurrently by several processes or threads; a dedicated device cannot.

5. Speed of Operation:

Device speeds range from a few bytes per second to a few gigabytes per second.

6. Read-write, read-only, write-only:

Different devices perform different operations. Some support both input and output, but others support only one data-transfer direction, either input or output.

4. What is the Kernel I/O subsystem in an operating system?

Sol:

The kernel provides many services related to I/O. Several services, such as scheduling, caching, spooling, device reservation, and error handling, are provided by the kernel's I/O subsystem, which is built on the hardware and device-driver infrastructure. The I/O subsystem is also responsible for protecting itself from errant processes and malicious users.

1.I/O Scheduling –

To schedule a set of I/O requests means to determine a good order in which to execute them. The order in which applications issue system calls is rarely the best choice. Scheduling can improve overall system performance, share device access fairly among processes, and reduce the average waiting time, response time, and turnaround time for I/O to complete.

OS developers implement scheduling by maintaining a wait queue of requests for each device. When an application issues a blocking I/O system call, the request is placed in the queue for that device. The I/O scheduler rearranges the order of the queue to improve the efficiency of the system.

2.Buffering –

A buffer is a memory area that stores data being transferred between two devices or between a device and an application. Buffering is done for three reasons.

1.The first is to cope with a speed mismatch between the producer and consumer of a data stream.

2.The second use of buffering is to adapt between devices that have different data-transfer sizes.

3.The third use of buffering is to support copy semantics for application I/O. To see what “copy semantics” means, suppose that an application has a buffer of data that it wishes to write to disk. It calls the write() system call, providing a pointer to the buffer and an integer specifying the number of bytes to write.

Q. After the system call returns, what happens if the application changes the contents of the buffer?

Ans. With copy semantics, the version of the data written to disk is guaranteed to be the version at the time of the application's system call, independent of any later changes to the buffer.
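Copy semantics can be illustrated with a toy model in which the "kernel" copies the application's data before the call returns. The function `write_with_copy_semantics` is hypothetical and only stands in for the copying step; it is not how any particular kernel implements write().

```python
def write_with_copy_semantics(app_buffer, nbytes):
    # Hypothetical kernel side: copy the data into a kernel buffer
    # before returning control to the application.
    kernel_buffer = bytes(app_buffer[:nbytes])
    return kernel_buffer


app_buffer = bytearray(b"version-1")
pending_write = write_with_copy_semantics(app_buffer, len(app_buffer))

# The application modifies its buffer after the call has returned.
app_buffer[-1:] = b"2"

print(bytes(app_buffer), pending_write)   # prints: b'version-2' b'version-1'
```

The data still waiting to go to disk is unchanged because the kernel works on its own copy.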

3.Caching –

A cache is a region of fast memory that holds a copy of data. Access to the cached copy is more efficient than access to the original. For example, the instructions of the currently running process are stored on disk, cached in physical memory, and copied again in the CPU’s secondary and primary caches.

The main difference between a buffer and a cache is that a buffer may hold the only existing copy of a data item, whereas a cache, by definition, holds a copy on faster storage of an item that resides elsewhere.

4.Spooling and Device Reservation –

A spool is a buffer that holds output for a device, such as a printer, that cannot accept interleaved data streams. Although a printer can serve only one job at a time, several applications may wish to print their output concurrently, without having their output mixed together.

The OS solves this problem by intercepting all output to the printer. Each application's output is spooled to a separate disk file. When an application finishes printing, the spooling system queues the corresponding spool file for output to the printer.

5.Error Handling –

An OS that uses protected memory can guard against many kinds of hardware and application errors, so that a complete system failure is not the usual result of each minor mechanical glitch. Devices and I/O transfers can fail in many ways, either for transient reasons, as when a network becomes overloaded, or for permanent reasons, as when a disk controller becomes defective.

6.I/O Protection –

Errors are closely related to the issue of protection. A user process may attempt to issue illegal I/O instructions to disrupt the normal operation of a system. Various mechanisms can be used to ensure that such disruption cannot take place.

To prevent illegal I/O access, all I/O instructions are defined to be privileged instructions. User programs cannot issue I/O instructions directly.

5. Explain Transforming I/O request to hardware operation

Sol:

We know that there is handshaking between a device driver and a device controller, but the question here is how the operating system connects an application request (an I/O request) to a set of network wires or to a specific disk sector, that is, to hardware operations.

To understand the concept, let us consider the following example.

Example –

Suppose we are reading a file from disk. The application refers to the data by file name. Within a disk, the file system maps from the file name through the file-system directories to obtain the space allocation of the file. In MS-DOS, the file name maps to a number that indicates an entry in the file-access table, and that table entry tells us which disk blocks are allocated to the file. In UNIX, the name maps to an inode number, and the corresponding inode contains the space-allocation information. But how is the connection made from the file name to the disk controller?

The method used by MS-DOS, a relatively simple operating system, is straightforward. The first part of an MS-DOS file name, preceding the colon, is a string that identifies a specific hardware device.

UNIX uses a different method from MS-DOS. It represents device names in the regular file-system name space. Unlike an MS-DOS file name, which has a colon separator, a UNIX path name has no clear separation of the device portion. In fact, no part of the path name is the name of a device. UNIX has a mount table that associates prefixes of path names with specific hardware device names.
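A mount-table lookup can be sketched as a longest-prefix match on the path name. The table contents and device names below are invented for illustration, and the sketch assumes absolute paths and a table that always contains the root prefix "/".

```python
# Hypothetical mount table: path-name prefix -> device name.
MOUNT_TABLE = {"/": "disk0", "/home": "disk1", "/mnt/usb": "usb0"}


def device_for(path):
    """Return the device whose mount prefix is the longest match for path."""
    best = None
    for prefix in MOUNT_TABLE:
        # A prefix matches the path itself or any path below it.
        below = path.startswith(prefix.rstrip("/") + "/")
        if path == prefix or below:
            if best is None or len(prefix) > len(best):
                best = prefix
    return MOUNT_TABLE[best]


print(device_for("/home/alice/notes.txt"))   # prints: disk1
print(device_for("/etc/passwd"))             # prints: disk0
```

Matching on whole path components keeps "/homework" from being treated as if it lived under "/home".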

Modern operational systems gain important flexibility from multiple stages of search tables in the path between request and physical device stages controller. There are general mechanisms is that is employed to pass request between application and drivers. Thus, while not recompiling the kernel, we can introduce new devices and drivers into the laptop. Some OS have the power to load device drivers on demand. At the time of booting, the system first off probes hardware buses to see what devices area unit gift. It's then loaded to necessary drivers, consequently I/O request.

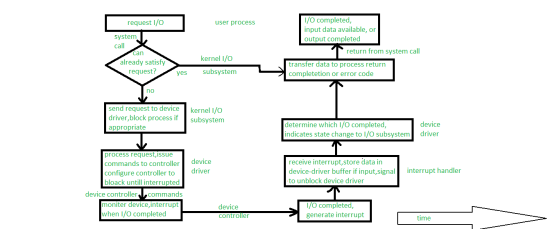

The typical life cycle of obstruction scan request is shown within the following figure. From the figure, we can recommend that I/O operation needs several steps that along consume a sizable amount of mainframe cycles.

Figure – The life cycle of I/O request

1.System call –

When an I/O request arrives, a process issues a blocking read() system call on the file descriptor of a previously opened file. The system-call code in the kernel checks the parameters for correctness. If the requested data is already available in the buffer cache, the data is returned to the process and the I/O request is completed.

2.Different approach if the input is not available –

If the data is not available in the buffer cache, physical I/O must be performed. The process is removed from the run queue and placed on the wait queue for the device, and the I/O request is scheduled. Eventually, the I/O subsystem sends the request to the device driver. Depending on the operating system, the request is sent via a subroutine call or an in-kernel message.

3.Role of the device driver –

The device driver allocates kernel buffer space to receive the data and schedules the I/O. Eventually, it sends commands to the device controller by writing into the device-control registers.

4.Role of the device controller –

The device controller operates the device hardware to perform the data transfer.

5.Role of the DMA controller –

The driver may poll for status and data, or it may have set up a DMA transfer into kernel memory. In the latter case, the transfer is managed by the DMA controller, which generates an interrupt when the transfer completes.

6.Role of the interrupt handler –

The correct interrupt handler receives the interrupt via the interrupt-vector table. It stores any necessary data, signals the device driver, and returns from the interrupt.

7.Completion of the I/O request –

The device driver receives the signal, determines which I/O request has completed, determines the request’s status, and signals the kernel I/O subsystem that the request has been completed. The kernel transfers data or return codes to the address space of the requesting process and moves the process from the wait queue back to the ready queue.

8.Completion of the system call –

Moving the process to the ready queue unblocks it. When the scheduler assigns the process to the CPU, the process resumes execution at the completion of the system call.
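The two paths a blocking read can take (served from the buffer cache, or via physical I/O) can be sketched as below. The function `blocking_read` and the dictionaries standing in for the buffer cache and the disk are assumptions for illustration. The whole driver, controller, DMA, and interrupt chain is collapsed into one dictionary lookup, and storing the block in the cache afterwards is likewise an assumption.

```python
def blocking_read(block_number, buffer_cache, disk):
    """Return (data, path), where path names the route the request took."""
    if block_number in buffer_cache:
        # Step 1: the data is already in the buffer cache, so no
        # physical I/O is needed and the request completes at once.
        return buffer_cache[block_number], "cache"
    # Steps 2-7: physical I/O, modelled here as a plain dictionary lookup.
    data = disk[block_number]
    buffer_cache[block_number] = data    # assumed: keep a copy for later reads
    # Step 8: the call returns to the (now unblocked) process.
    return data, "disk"


disk = {0: b"boot", 1: b"data"}          # hypothetical disk contents
cache = {}
first = blocking_read(1, cache, disk)
second = blocking_read(1, cache, disk)
print(first, second)   # prints: (b'data', 'disk') (b'data', 'cache')
```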

6. Explain pseudo parallelism. Describe the process model that makes parallelism easier to deal with.

Sol:

All modern computers can do many things at the same time. For Example, the computer can be reading from a disk and printing on a printer while running a user program. In a multiprogramming system, the CPU switches from program to program, running each program for a fraction of a second.

Although the CPU is running only one program at any instant, its speed is so high that it can work on several programs within a second. This gives the user an illusion of parallelism, i.e., several processes appear to be processed at the same time. This rapid switching back and forth of the CPU between programs is termed pseudo parallelism. Because it is extremely difficult to keep track of multiple parallel activities, operating system designers have evolved a process model that makes parallelism easier to deal with.

The Process Model

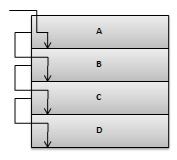

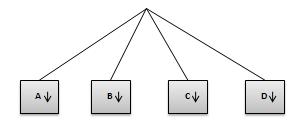

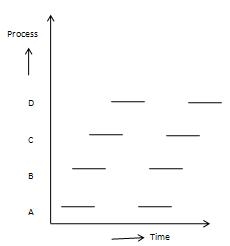

In the process model, all the runnable software on the computer (including the operating system) is organized into a number of sequential processes. A process is just an executing program, including the current values of the program counter, registers, and variables. Conceptually, each process has its own virtual CPU. In reality, the real CPU switches back and forth from process to process, but it is easier to think about a collection of processes running in (pseudo) parallel than to keep track of how the CPU switches from program to program. This rapid switching back and forth is called multiprogramming.

Figure – Multiprogramming of four programs with one program counter and process switches, and the conceptual model of 4 independent sequential processes.

Only one program is active at any moment. The rate at which processes perform computation might not be uniform. However, usually processes are not affected by the relative speeds of different processes.

7. Shown below is the workload for 5 jobs arriving at time zero in the order given below −

| Job | Burst Time |
|-----|------------|
| 1 | 10 |
| 2 | 29 |
| 3 | 3 |
| 4 | 7 |
| 5 | 12 |

Now find out which algorithm among FCFS, SJF, and Round Robin with quantum 10 would give the minimum average waiting time.

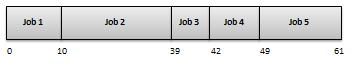

For FCFS, the jobs will be executed as:

| Job | Waiting Time |
|-----|--------------|
| 1 | 0 |
| 2 | 10 |
| 3 | 39 |
| 4 | 42 |
| 5 | 49 |
| Total | 140 |

The average waiting time is 140/5=28.

For SJF (non-preemptive), the jobs will be executed as:

| Job | Waiting Time |
|-----|--------------|
| 1 | 10 |
| 2 | 32 |
| 3 | 0 |
| 4 | 3 |
| 5 | 20 |
| Total | 65 |

The average waiting time is 65/5=13.

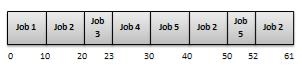

For Round Robin, the jobs will be executed as:

| Job | Waiting Time |
|-----|--------------|
| 1 | 0 |
| 2 | 32 |
| 3 | 20 |
| 4 | 23 |
| 5 | 40 |
| Total | 115 |

The average waiting time is 115/5=23.

Thus SJF gives the minimum average waiting time.
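The three results can be cross-checked with a short script. This is a sketch under the stated assumptions of the problem (all jobs arrive at time zero, context-switch time is ignored). The function names are illustrative, and ties in SJF are broken by arrival order.

```python
from collections import deque


def fcfs_waits(bursts):
    # Each job waits for the total burst time of the jobs ahead of it.
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)
        clock += burst
    return waits


def sjf_waits(bursts):
    # Non-preemptive SJF: run the jobs in order of increasing burst time.
    waits, clock = [0] * len(bursts), 0
    for index in sorted(range(len(bursts)), key=lambda i: bursts[i]):
        waits[index] = clock
        clock += bursts[index]
    return waits


def round_robin_waits(bursts, quantum):
    remaining = list(bursts)
    finish = [0] * len(bursts)
    queue = deque(range(len(bursts)))
    clock = 0
    while queue:
        index = queue.popleft()
        run = min(quantum, remaining[index])
        clock += run
        remaining[index] -= run
        if remaining[index] > 0:
            queue.append(index)       # unfinished: back to the tail
        else:
            finish[index] = clock
    # Waiting time = completion time - burst time (arrival is at time 0).
    return [finish[i] - bursts[i] for i in range(len(bursts))]


bursts = [10, 29, 3, 7, 12]
results = {
    "FCFS": fcfs_waits(bursts),
    "SJF": sjf_waits(bursts),
    "RR": round_robin_waits(bursts, quantum=10),
}
for name, waits in results.items():
    print(name, waits, sum(waits) / len(waits))
# FCFS [0, 10, 39, 42, 49] 28.0
# SJF [10, 32, 0, 3, 20] 13.0
# RR [0, 32, 20, 23, 40] 23.0
```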

8. What is the Highest Response Ratio Next (HRN) Scheduling?

Sol:

- HRN is a non-preemptive scheduling algorithm.

- In Shortest Job First scheduling, priority is given to the shortest job, which may sometimes cause indefinite blocking of longer jobs.

- HRN Scheduling is used to correct this disadvantage of SJF.

- For determining priority, not only the job's service time but the waiting time is also considered.

- In this algorithm, dynamic priorities are used instead of fixed priorities.

- Dynamic priorities in HRN are calculated as

Priority = (waiting time + service time) / service time.

- So shorter jobs get preference over longer processes because service time appears in the denominator.

- Longer jobs that have been waiting for a long period are also given favorable treatment because waiting time is considered in the numerator.
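The priority formula can be tried out on a small example. The job names, arrival times, and service times below are made up for illustration. The sketch shows a short job winning at first and a long job winning once it has waited long enough.

```python
def response_ratio(waiting_time, service_time):
    # Priority = (waiting time + service time) / service time
    return (waiting_time + service_time) / service_time


def pick_next(jobs, now):
    """jobs: list of (name, arrival_time, service_time) tuples."""
    return max(jobs, key=lambda job: response_ratio(now - job[1], job[2]))


# At time 10: the long job has waited 10, the short job has waited 2.
early = pick_next([("long", 0, 20), ("short", 8, 2)], now=10)

# At time 50: the long job has waited 50, a new short job has waited 1.
late = pick_next([("long", 0, 20), ("short", 49, 2)], now=50)

print(early[0], late[0])   # prints: short long
```

In the first case the ratios are 1.5 (long) and 2.0 (short); in the second they are 3.5 (long) and 1.5 (short), so the long job is no longer postponed.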

9. Explain time slicing. How does its duration affect the overall working of the system?

Sol:

Time slicing is a scheduling mechanism/way used in time-sharing systems. It is also termed as Round Robin scheduling. The aim of Round Robin scheduling or time-slicing scheduling is to give all processes an equal opportunity to use CPU. In this type of scheduling, CPU time is divided into slices that are to be allocated to ready processes. Short processes may be executed within a single time quantum. Long processes may require several quanta.

The Duration of time slice or Quantum

The performance of the time-slicing policy is heavily dependent on the size/duration of the time quantum. When the time quantum is very large, the Round Robin policy becomes an FCFS policy. Too short quantum causes too many process/context switches and reduces CPU efficiency. So the choice of time quanta is a very important design decision. Switching from one process to another requires a certain amount of time to save and load registers, update various tables and lists, etc.

Consider, as an example, that a process switch (context switch) takes 5 msec and the time slice is 20 msec. The CPU then spends 5 msec on process switching after every 20 msec of useful work, wasting 20% of CPU time. Now let the time slice be set to, say, 500 msec with 10 processes in the ready queue. If P1 starts executing in the first time slice, P2 will have to wait for 1/2 sec, and the waiting time grows for each process after it. The unlucky last process (P10) will have to wait about 4.5 sec (nine slices of 1/2 sec), assuming that all the others use their full time slices. To conclude, setting the time slice:

- Too short will cause too many process switches and will lower CPU efficiency.

- Setting too long will cause a poor response to short interactive processes.

- A quantum around 100 m sec is usually reasonable.
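The trade-off can be put into numbers with two small helper functions. The function names are illustrative, and the wait calculation counts only the full slices (plus optional switch time) of the processes ahead of the last one.

```python
def switch_overhead(quantum_ms, switch_ms):
    # Fraction of CPU time spent on context switching.
    return switch_ms / (quantum_ms + switch_ms)


def worst_case_wait_ms(processes, quantum_ms, switch_ms=0):
    # The last process waits for every process ahead of it to use a full slice.
    return (processes - 1) * (quantum_ms + switch_ms)


print(switch_overhead(20, 5))        # prints: 0.2
print(worst_case_wait_ms(10, 500))   # prints: 4500
```

A short quantum of 20 msec loses a fifth of the CPU to switching, while a long quantum of 500 msec makes the last of 10 processes wait 4500 msec before it first runs.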

10. What are the different principles which must be considered while selecting a scheduling algorithm?

Sol:

The objectives/principles which should be kept in view while selecting a scheduling policy are the following −

- Fairness − All processes should be treated the same. No process should suffer indefinite postponement.

- Maximize throughput − Attain maximum throughput. The largest possible number of processes per unit time should be serviced.

- Predictability − A given job should run in about the same predictable amount of time and at about the same cost irrespective of the load on the system.

- Maximum resource usage − The system resources should be kept busy. Indefinite postponement should be avoided by enforcing priorities.

- Controlled Time − There should be control over different times −

- Response time

- Turnaround time

- Waiting time

The objective should be to minimize the above mentioned times.