Unit - 1

Classes of computers

Q1) What are the classes of computers on the basis of size and capacity?

A1) Computers on the Basis of Size and Capacity

● Supercomputer

A supercomputer is the most powerful, fastest, and most expensive type of computer for data processing. Supercomputers are also enormous in size and storage capacity (they can take up a lot of space), and they are designed to process very large amounts of data quickly and efficiently.

Supercomputers are designed to carry out many kinds of work, so they contain numerous CPUs that operate in parallel. This capability is called multiprocessing or parallel processing.

The design of a supercomputer is complex: it can be heterogeneous, mixing processors with different architectures, and its performance far exceeds that of desktop computers.

Each component of a supercomputer is responsible for a particular part of the work, such as organizing and solving the most complex problems, which require a massive amount of computation.

Uses

● Energy and nuclear-weapons research and development, and the design of aircraft and flight simulators.

● Climate research, weather forecasting, and natural disaster prediction.

● Launching spacecraft and satellites.

● Scientific research laboratories.

● Chemical and biological research, and other computationally intensive tasks.

● Mainframe computer

Mainframe computers are multi-programming, high-performance computers with multi-user capabilities, which means they can handle the workload of up to 100 users at once.

The mainframe has a massive storage capacity and a high-speed data processing system. In addition, hundreds of input and output devices can be handled at once.

The mainframe is a powerful computer that can perform complex calculations in parallel and for extended periods of time. These computers contain many microprocessors capable of processing data at very high speed.

In almost every respect, a mainframe outperforms a modern personal computer. Mainframe components can be changed "on the fly" (while the system keeps running), so operations are not disrupted. Furthermore, average CPU utilization easily exceeds 85 percent.

Because the mainframe runs many processes at the same time, it can handle a larger number of simultaneous tasks than a supercomputer.

Uses

Departmental and commercial enterprises such as banks, companies, and scientific research institutions, and government departments such as the railways, rely mainly on mainframe computers. These computers can run 24 hours a day, and hundreds of people can use them at the same time.

Mainframes are used for tasks such as tracking payments, supporting research centers, advertising, sending invoices and notices, paying staff, booking tickets, recording user transactions, keeping detailed tax records, and so on.

● Mini computer

A minicomputer is a digital, multi-user computer system with several connected CPUs. As a result, multiple people can work on it at the same time instead of just one. It can also work with other devices such as printers and plotters.

Minicomputers sit between microcomputers and mainframes in terms of capability and price: they are larger than microcomputers in size, storage, and performance, but smaller than mainframes and supercomputers.

Instead of assigning several microcomputers to a single task, which would be time-consuming and expensive, minicomputers are designed to do multiple computing tasks at the same time.

Uses

In industry, booking systems, and research centers, minicomputers are used for real-time applications. Banks, higher-education institutions, and engineering firms use minicomputers to prepare employee payroll, keep records, track financial accounts, and so on.

● Micro computer

Today we use many kinds of computers at home, and the microcomputer is the most common. The invention of the microprocessor in the early 1970s made it possible to build computers for personal use at low cost; these became known as personal computers (PCs).

Microcomputers are small and have limited storage capacity. A complete personal computer is made up of many components, including input and output devices, software, an operating system, networks, and servers, all of which must work together.

Microcomputers include more than just a PC or a laptop. Smartphones, Tablets, PDAs, servers, palmtops, and workstations are all examples of microcomputers.

Microcomputers can be used in any professional environment, as well as at home for personal use.

Microcomputers are mostly used to record and process people's daily tasks and requirements. Although only one person can use a single PC at a time, its operating system supports multitasking. To take advantage of online services and improve the user experience, a PC can be connected to the Internet.

Demand for microcomputers has grown in every industry because of the growth of multimedia, miniaturized hardware, better energy efficiency, and local area networks (LANs).

People's increasing desire and necessity for microcomputers has resulted in enormous development of every component linked to microcomputers.

Uses

PCs are widely utilized in a variety of settings, including the home, the office, data collection, business, education, entertainment, publishing, and so on.

In a major organization, it keeps track of details and prepares letters for correspondence, as well as preparing invoices, bookkeeping, word processing, and operating file systems.

IBM, Lenovo, Apple, HCL, HP, and others are some of the top PC makers.

Desktops, tablets, cellphones, and laptops are just a few examples.

Microcomputer technology has advanced rapidly; as a result, today's microcomputers can be found in the form of a book, a phone, or even a digital clock.

Q2) Describe the computer on the basis of purpose?

A2) Computer on the Basis of Purposes

● General purpose

General-purpose computers can perform tasks such as writing letters with a word processor, preparing documents, recording, financial analysis, printing, building databases, and calculations with accuracy and consistency.

These computers are usually smaller, have less storage, and cost less. Their ability to perform specialized tasks is limited. Nonetheless, they are adaptable and effective for meeting people's basic needs at home or in the workplace.

Example

Desktops, laptops, smartphones, and tablets are used on a daily basis for general purposes.

● Special purpose

These computers are built to carry out a specific, specialized task. The nature and size of that task determine the size, storage capacity, and cost of the computer.

To work effectively, a special-purpose computer requires particular input and output devices, as well as a motherboard that is compatible with its processor.

Weather forecasting, space research, agriculture, engineering, meteorology, satellite operation, traffic control, and chemical science research all employ these computers.

Example

● Automatic teller machines (ATM),

● Washing machines,

● Surveillance equipment,

● Weather-forecasting simulators,

● Traffic-control computers,

● Defense-oriented applications,

● Oil-exploration systems,

● Military planes controlling computers.

Q3) Explain the computer on the basis of hardware design and type?

A3) Computer on the Basis of Hardware Design and Type

● Analog computer

An analog computer works on continuous data, that is, physical quantities that change continuously. Analog computers are generally used to measure and process physical quantities such as voltage, pressure, electric current, and temperature.

They can also be used to calculate and measure the length of an object, or the voltage at a point in an electrical circuit. Analog computers obtain all of their information through some kind of measurement.

Analog computers are mostly used in research and engineering. They are designed to measure quantities rather than to count them.

They are employed in technology, science, research, and engineering. Because the quantities they work with, such as voltage, pressure, electric current, and temperature, are measured rather than counted, these computers can provide only approximate results.

Example

The analog computer in a fuel pump measures the amount of petrol that flows out of the pump, displays it in liters, and calculates its price. These values change continuously while the fuel is being measured, just as the temperature of a human body changes continuously over time.

Analog computing can be seen in an analog clock, a vehicle's speedometer, a voltmeter, and other devices.

● Digital computer

A digital computer, as its name implies, represents letters, numbers, and other symbols as digits. It processes information by performing calculations on those digits.

Digital computers work with electronic signals and use the binary number system (0 and 1) to calculate. They operate quickly.

They can perform arithmetic operations such as addition, subtraction, multiplication, and division, as well as logical operations. Most computers on the market today are digital computers.

Digital computers are designed to solve equations with high precision, but they can be somewhat slower than analog computers. They all use a small number of key functions to do their work and have similar components for receiving, processing, sorting, and transmitting data.

Because of their versatility, speed, and power, digital computers are the most popular type of computer today. They operate on discrete electrical signals rather than the continuous signals used by analog computers.

The most familiar example of a digital computer is the desktop or laptop computer that we have at home.

Example

● Personal Desktop Computers,

● Calculators,

● Laptops, Smartphones, and Tablets,

● Digital watch,

● Accounting machines,

● Workstations,

● Digital clock, etc.

● Hybrid Computer

A hybrid computer is a complicated computer unit that combines analog and digital characteristics and is controlled by a single control system. The goal of hybrid computer design is to provide capabilities and features present on both analog and digital devices.

The aim is to build a single unit that combines the best features of both types of computer. Hybrid computers are extremely fast at solving equations, even when the computations are very complicated.

Because they combine the qualities of both kinds of computer in a single system capable of solving extremely complicated problems, these systems are expensive, but they can solve difficult problems quickly.

Incorporating the properties of both analog and digital computers allows hybrid computers to solve more complicated equations more quickly.

The analog part of the system solves equations almost instantly, although those solutions are not necessarily exact.

Application

Hybrid computers are widely employed in a variety of industries, research centers, organizations, and industrial companies (where many equations need to be solved).

Hybrid computer systems and applications have also proven to be thorough, accurate, and valuable. Hybrid computers are used in scientific calculations and in military and radar systems.

Q4) What do you mean by trends in technology?

A4) If an instruction set architecture is to be successful, it must be designed to survive rapid changes in computer technology. After all, a successful new instruction set architecture may last decades; for example, the core of the IBM mainframe has been in use for more than 40 years. An architect must plan for technology changes that can increase the lifetime of a successful computer.

The designer must be aware of quick changes in implementation technology in order to prepare for the evolution of a computer. Modern implementations require four implementation technologies, all of which are rapidly evolving:

Integrated circuit logic technology - Transistor density grows at a rate of roughly 35% each year, quadrupling in just over four years. Die size increases are less predictable and occur at a slower rate, ranging from 10% to 20% every year. The overall result is a 40 percent to 55 percent annual increase in transistor count on a chip.

Semiconductor DRAM (dynamic random-access memory) - Capacity increases by about 40% per year, doubling roughly every two years.

Magnetic disk technology - Prior to 1990, density grew at a rate of roughly 30% each year, doubling every three years. Following that, it increased to 60% every year, and then to 100% per year in 1996. It has reduced to 30 percent per year since 2004. Disks are still 50–100 times cheaper per bit than DRAM, notwithstanding this roller coaster of improvement rates.

Network technology - The performance of the network is determined by the performance of the switches as well as the transmission system. These fast changing technologies influence the design of a computer that, with increased speed and technological advancements, may last five or more years.
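As a rough check on these rates, the sketch below converts an annual improvement rate into a growth factor and into the number of years needed to reach a given multiple. It assumes smooth compound growth, which real technology curves follow only approximately; the function names are our own.

```python
import math


def growth_factor(annual_rate: float, years: float) -> float:
    """Multiplier after `years` of compound growth at `annual_rate` (0.35 means 35%/year)."""
    return (1 + annual_rate) ** years


def years_to_multiply(annual_rate: float, factor: float) -> float:
    """Years of compound growth at `annual_rate` needed to grow by `factor`."""
    return math.log(factor) / math.log(1 + annual_rate)


# Transistor density at ~35%/year: about 3.3x after 4 years, 4x after ~4.6 years
print(f"{growth_factor(0.35, 4):.2f}")        # 3.32
print(f"{years_to_multiply(0.35, 4):.2f}")    # 4.62
# DRAM capacity at ~40%/year doubles in about two years
print(f"{years_to_multiply(0.40, 2):.2f}")    # 2.06
```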

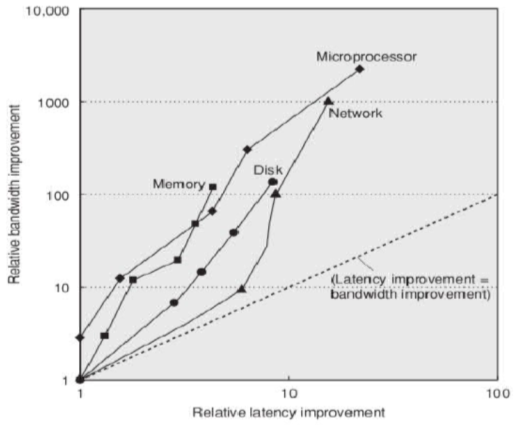

Performance trends: bandwidth over latency. Bandwidth, or throughput, is the total amount of work done in a given time, such as megabytes per second for a disk transfer. Latency, or response time, is the time between the start and the completion of an event, such as milliseconds for a disk access. Fig 1 shows the relative improvement in bandwidth and latency for technology milestones in microprocessors, memory, networks, and disks; across these technologies, bandwidth has improved much faster than latency.

Fig 1: relative improvement in bandwidth and latency for microprocessors, memory, networks, and disk technological milestones.
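A simple way to see why bandwidth and latency are tracked separately is a first-order model in which one operation takes a fixed latency plus the data size divided by the bandwidth. The disk figures below (5 ms latency, 100 MB/s) are hypothetical, chosen only to show that small transfers are dominated by latency and large ones by bandwidth.

```python
def transfer_time_ms(size_mb: float, latency_ms: float, bandwidth_mb_per_s: float) -> float:
    """First-order model: total time = fixed latency + size / bandwidth (result in ms)."""
    return latency_ms + 1000.0 * size_mb / bandwidth_mb_per_s


# Hypothetical disk: 5 ms access latency, 100 MB/s transfer bandwidth
small = transfer_time_ms(0.004, 5.0, 100.0)   # 4 KB read: latency dominates
large = transfer_time_ms(100.0, 5.0, 100.0)   # 100 MB read: bandwidth dominates
print(f"{small:.2f} ms, {large:.0f} ms")       # 5.04 ms, 1005 ms
```

Doubling the bandwidth in this model barely helps the 4 KB read, while halving the latency barely helps the 100 MB read.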

Scaling of Transistor Performance and Wires

Integrated circuit processes are characterized by the feature size, which is the minimum size of a transistor or a wire in either the x or y dimension. Feature sizes have shrunk from 10 microns in 1971 to 0.09 microns in 2006. Because the surface area of a transistor determines the number of transistors per square millimeter of silicon, transistor density increases quadratically with a linear decrease in feature size.
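The quadratic relationship between feature size and density can be sketched in a few lines. This idealized calculation assumes density scales exactly with 1/(feature size)^2 and ignores layout and design-rule overheads.

```python
def relative_density(old_feature_um: float, new_feature_um: float) -> float:
    """Density gain when feature size shrinks, assuming density ~ 1 / feature_size**2."""
    return (old_feature_um / new_feature_um) ** 2


# Halving the feature size gives about 4x as many transistors per mm^2
print(f"{relative_density(0.18, 0.09):.1f}")   # 4.0
# 10 microns (1971) -> 0.09 microns (2006)
print(round(relative_density(10, 0.09)))       # 12346
```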

The improvement in transistor performance, however, is more complex. As feature sizes shrink, devices shrink quadratically in the horizontal dimension and also shrink in the vertical dimension. The shrink in the vertical dimension requires a reduction in operating voltage to maintain correct operation and reliability of the transistors. This combination of scaling factors leads to a complex interrelationship between transistor performance and process feature size. To a first approximation, transistor performance improves linearly with decreasing feature size.

Although transistor performance improves as feature size shrinks, wires in an integrated circuit do not. The signal delay of a wire, in particular, grows in proportion to the product of its resistance and capacitance. Wires get shorter as feature sizes reduce, but the resistance and capacitance per unit length get worse. Wire delay has become a major design barrier for large integrated circuits in recent years, and it is frequently more critical than transistor switching delay.
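A first-order model makes the wire problem concrete: if resistance and capacitance both grow with the wire length L, the RC delay grows with L^2 times the per-unit-length values. The scaling numbers below are hypothetical (a wire half as long whose thinner cross-section quadruples its resistance per millimetre), chosen only to show how a shorter wire can end up no faster.

```python
def rc_delay(r_per_mm: float, c_per_mm: float, length_mm: float) -> float:
    """First-order wire delay ~ R * C, with R = r * L and C = c * L (arbitrary units)."""
    return (r_per_mm * length_mm) * (c_per_mm * length_mm)


base = rc_delay(1.0, 1.0, 1.0)
# Hypothetical shrink: half the length, 4x the resistance per mm, same capacitance per mm
shrunk = rc_delay(4.0, 1.0, 0.5)
print(shrunk / base)   # 1.0: the shorter wire is no faster
```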

Q5) What are trends in power?

A5) As devices become more powerful, they pose new challenges.

First, power must be brought in and distributed around the chip, which requires hundreds of pins and multiple interconnection layers in modern microprocessors.

Second, power is dissipated as heat, which must be removed.

Switching transistors have traditionally accounted for most of the energy consumed in CMOS circuits; this is called dynamic power. The dynamic power required per transistor is proportional to the product of the transistor's load capacitance, the square of the voltage, and the switching frequency, with watts as the unit:

$$\text{Power}_{\text{dynamic}} = \frac{1}{2} \times \text{Capacitive load} \times \text{Voltage}^2 \times \text{Frequency switched}$$

Because battery life is more important to mobile devices than power, energy, measured in joules, is the suitable metric:

$$\text{Energy}_{\text{dynamic}} = \text{Capacitive load} \times \text{Voltage}^2$$

As a result, lowering the voltage dramatically reduces dynamic power and energy, and voltages have dropped from 5V to just over 1V in 20 years.
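The two formulas can be written as small functions, taking dynamic power as 1/2 × Capacitive load × Voltage^2 × Frequency switched and dynamic energy as Capacitive load × Voltage^2. The capacitance and frequency below are hypothetical; the point is that the drop from 5V to 1V cuts dynamic power and energy by a factor of 25 for the same load and frequency.

```python
def dynamic_power(capacitive_load: float, voltage: float, frequency: float) -> float:
    """Dynamic power in watts: 1/2 * C * V^2 * f (C in farads, V in volts, f in hertz)."""
    return 0.5 * capacitive_load * voltage ** 2 * frequency


def dynamic_energy(capacitive_load: float, voltage: float) -> float:
    """Dynamic energy in joules: C * V^2 (independent of switching frequency)."""
    return capacitive_load * voltage ** 2


# Hypothetical chip: 1 nF of switched capacitance at 1 GHz
p_5v = dynamic_power(1e-9, 5.0, 1e9)
p_1v = dynamic_power(1e-9, 1.0, 1e9)
print(f"{p_5v:.1f} W vs {p_1v:.1f} W")   # 12.5 W vs 0.5 W
print(f"energy ratio: {dynamic_energy(1e-9, 5.0) / dynamic_energy(1e-9, 1.0):.0f}x")  # 25x
```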

Although dynamic power is the primary source of power dissipation in CMOS, static power is becoming an important issue because leakage current flows even when a transistor is turned off:

$$\text{Power}_{\text{static}} = \text{Current}_{\text{static}} \times \text{Voltage}$$

Thus, increasing the number of transistors increases power even if they are turned off, and leakage current increases in processors with smaller transistor sizes.

For this reason, very low-power systems even gate the voltage to inactive modules to control loss due to leakage.

Example:

Today's microprocessors are built with adjustable voltage, so a 15% reduction in voltage may result in a 15% reduction in frequency. What effect would this have on dynamic power?

Solution - Because the capacitance remains constant, the answer is found in the voltage and frequency ratios:

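$$\frac{\text{Power}_{\text{new}}}{\text{Power}_{\text{old}}} = \frac{(\text{Voltage} \times 0.85)^2 \times (\text{Frequency switched} \times 0.85)}{\text{Voltage}^2 \times \text{Frequency switched}} = 0.85^3 \approx 0.61$$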

As a result, power consumption is reduced to around 60% of its original level.
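The same ratio can be computed directly: with a constant capacitive load, only the voltage scale factor (squared) and the frequency scale factor matter.

```python
def power_ratio(voltage_scale: float, frequency_scale: float) -> float:
    """P_new / P_old for dynamic power when the capacitive load stays the same."""
    return voltage_scale ** 2 * frequency_scale


ratio = power_ratio(0.85, 0.85)
print(f"{ratio:.3f}")   # 0.614, i.e. about 60% of the original power
```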

Q6) Write about trends in costs?

A6) Although cost matters less in some computer systems, such as supercomputers, cost-sensitive designs are of growing importance. Indeed, over the last 20 years, the computer industry has focused on using technological advances to lower cost as well as to increase performance.

The Impact of Time, Volume, and Commodification

Even without major improvements in the basic implementation technology, the cost of a manufactured computer component decreases over time. The underlying principle that drives costs down is the learning curve: manufacturing costs decrease over time. The learning curve itself is best measured by the change in yield, the percentage of manufactured devices that survive the testing procedure. Whether it is a chip, a board, or a system, a design that has twice the yield will have half the cost.
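The yield rule of thumb amounts to dividing the cost of making each unit by the fraction that survives testing, since the good units must pay for the failed ones. The $10 manufacturing cost below is hypothetical.

```python
def cost_per_good_unit(cost_per_unit_made: float, yield_fraction: float) -> float:
    """Cost of each working unit: every unit made is paid for, but only `yield_fraction` work."""
    return cost_per_unit_made / yield_fraction


# Hypothetical: each device costs $10 to manufacture
print(f"{cost_per_good_unit(10.0, 0.40):.2f}")   # 25.00 at 40% yield
print(f"{cost_per_good_unit(10.0, 0.80):.2f}")   # 12.50 at 80% yield: twice the yield, half the cost
```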

Understanding how the learning curve affects yield is crucial for estimating expenses over the life of a product.

Microprocessor prices also drop over time, but because microprocessors are less standardized than DRAMs, the relationship between price and cost is more complex. In a period of significant competition, price tends to track cost closely, although microprocessor vendors rarely sell at a loss. The graph depicts the price of Intel microprocessors over time.

Volume is a second key factor in determining cost. Increasing volume affects cost in several ways. First, it decreases the time needed to get down the learning curve, which is partly proportional to the number of systems (or chips) manufactured. Second, volume decreases cost because it increases purchasing and manufacturing efficiency. As a rule of thumb, some designers have estimated that cost decreases by about 10% for each doubling of volume.
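The volume rule of thumb (roughly 10% lower cost for each doubling of volume) can be expressed as a power law. This sketch assumes the 10% figure holds at every scale, which is only an approximation; the $100 starting cost is hypothetical.

```python
import math


def cost_after_volume_growth(base_cost: float, volume_multiple: float,
                             drop_per_doubling: float = 0.10) -> float:
    """Unit cost after volume grows by `volume_multiple`, losing ~10% per doubling."""
    doublings = math.log2(volume_multiple)
    return base_cost * (1 - drop_per_doubling) ** doublings


# Hypothetical: $100 per unit at the current volume
for multiple in (1, 2, 4, 8):
    print(multiple, f"{cost_after_volume_growth(100.0, multiple):.2f}")
# 1 100.00, 2 90.00, 4 81.00, 8 72.90
```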

Commodities are things that are essentially identical and are sold in huge quantities by various sellers. Almost all things available on grocery store shelves, as well as typical DRAMs, disks, displays, and keyboards, are commodities.

The commodity market is highly competitive because many manufacturers offer nearly identical products. This competition narrows the gap between cost and selling price, but it also lowers cost.

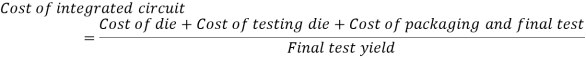

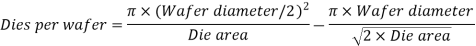

Cost of an Integrated Circuit

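Although the cost of integrated circuits has dropped rapidly, the basic process of silicon manufacture is unchanged: a wafer is still tested and chopped into dies that are packaged. Thus, the cost of a packaged integrated circuit is

$$\text{Cost of IC} = \frac{\text{Cost of die} + \text{Cost of testing die} + \text{Cost of packaging and final test}}{\text{Final test yield}}$$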

We concentrate on die costs, concluding with a summary of the important difficulties in testing and packaging.

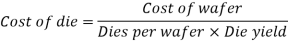

To predict the number of good chips per wafer, you must first learn how many dies fit on a wafer and then learn how to predict the percentage of those dies that will work. From there it is simple to predict cost:

$$\text{Cost of die} = \frac{\text{Cost of wafer}}{\text{Dies per wafer} \times \text{Die yield}}$$

The sensitivity of this first part of the chip cost equation to die size, as illustrated below, is the most intriguing element of it.

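The number of dies per wafer is approximately the area of the wafer divided by the area of the die. It can be estimated more accurately by

$$\text{Dies per wafer} = \frac{\pi \times (\text{Wafer diameter}/2)^2}{\text{Die area}} - \frac{\pi \times \text{Wafer diameter}}{\sqrt{2 \times \text{Die area}}}$$

The first term is the ratio of wafer area to die area; the second term compensates for the partial dies along the edge of the round wafer.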
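A minimal sketch of the standard textbook die-cost approximations: dies per wafer = π × (wafer diameter / 2)^2 / die area − π × wafer diameter / √(2 × die area), and cost of die = cost of wafer / (dies per wafer × die yield). The wafer cost and die yield are hypothetical inputs; in practice die yield also falls as die area grows, which makes large dies even more expensive than this sketch shows.

```python
import math


def dies_per_wafer(wafer_diameter_cm: float, die_area_cm2: float) -> float:
    """Wafer area / die area, minus a correction for partial dies around the round edge."""
    wafer_area = math.pi * (wafer_diameter_cm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_cm / math.sqrt(2 * die_area_cm2)
    return wafer_area / die_area_cm2 - edge_loss


def cost_of_die(wafer_cost: float, wafer_diameter_cm: float,
                die_area_cm2: float, die_yield: float) -> float:
    """Cost of wafer / (dies per wafer * die yield), counting only whole dies."""
    good_dies = math.floor(dies_per_wafer(wafer_diameter_cm, die_area_cm2)) * die_yield
    return wafer_cost / good_dies


# A 30 cm wafer with 1.5 cm x 1.5 cm dies
print(f"{dies_per_wafer(30, 2.25):.1f}")   # 269.7
# Hypothetical $5,000 wafer and 50% die yield: doubling die area more than doubles die cost
print(f"{cost_of_die(5000, 30, 2.25, 0.5):.2f}")
print(f"{cost_of_die(5000, 30, 4.50, 0.5):.2f}")
```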

Q7) What is dependability?

A7) Integrated circuits were once considered among the most reliable components of a computer. Although their pins could be vulnerable and faults could occur over communication channels, the error rate inside the chip was very low.

Computers are designed and constructed at different levels of abstraction. We can descend recursively through a computer, seeing components expand into full subsystems until we reach individual transistors. Although some faults are widespread, such as a loss of power, many can be limited to a single component in a module. Thus, the complete failure of a module at one level may be only a component error in a higher-level module. This distinction is helpful in trying to find ways to build dependable computers.

Module reliability is a measure of continuous service accomplishment (or, equivalently, of the time to failure) from a reference initial instant. Hence, the mean time to failure (MTTF) is a reliability measure. The reciprocal of MTTF is a rate of failures, generally reported as failures per billion hours of operation, or FIT (for failures in time). Service interruption is measured as the mean time to repair (MTTR). The mean time between failures (MTBF) is simply the sum MTTF + MTTR. Although MTBF is widely used, MTTF is often the more appropriate term.

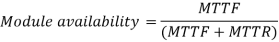

Module availability is a measure of service accomplishment with respect to the alternation between the two states of accomplishment and interruption. For non-redundant systems with repair, module availability is MTTF / (MTTF + MTTR).
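The dependability quantities reduce to simple arithmetic. This sketch uses the standard relations FIT = 10^9 / MTTF, MTBF = MTTF + MTTR, and, for a non-redundant module with repair, availability = MTTF / (MTTF + MTTR); the module figures are hypothetical.

```python
def fit_from_mttf(mttf_hours: float) -> float:
    """Failure rate in FIT (failures per 10^9 hours) is the reciprocal of MTTF."""
    return 1e9 / mttf_hours


def mtbf(mttf_hours: float, mttr_hours: float) -> float:
    """Mean time between failures = MTTF + MTTR."""
    return mttf_hours + mttr_hours


def availability(mttf_hours: float, mttr_hours: float) -> float:
    """Availability of a non-redundant module with repair."""
    return mttf_hours / (mttf_hours + mttr_hours)


# Hypothetical module: MTTF of 1,000,000 hours, 24 hours to repair
print(fit_from_mttf(1_000_000))               # 1000.0
print(mtbf(1_000_000, 24))                    # 1000024
print(f"{availability(1_000_000, 24):.6f}")   # 0.999976
```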

Q8) What do you mean by quantitative principle of computer design?

A8) The following are some rules and principles that can be used in computer design and analysis.

Take Advantage of Parallelism

One of the most essential strategies for enhancing performance is to take advantage of parallelism.

Scalability refers to the ability to extend memory, CPUs, and drives, and it is a crucial feature for servers.

Taking advantage of parallelism among instructions within an individual processor is critical to achieving high performance. One of the simplest ways to do this is pipelining. Parallelism can also be exploited at the level of detailed digital design.

For example, set-associative caches use multiple banks of memory that are typically searched in parallel to find a requested item. Modern ALUs use carry-lookahead, which uses parallelism to speed up the process of computing sums from linear to logarithmic in the number of bits per operand.
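Carry lookahead comes in several variants, and the text does not say which one. The sketch below uses the parallel-prefix (Kogge-Stone) form as one illustration: each level combines generate/propagate signals from positions twice as far apart, so an n-bit carry chain needs about log2(n) levels instead of n ripple steps. The Python loop evaluates the levels one after another; what matters is the number of levels, since hardware evaluates every position within a level at once.

```python
def prefix_adder(a: int, b: int, n_bits: int = 8) -> tuple[int, int, int]:
    """Add two n-bit unsigned integers using parallel-prefix carry lookahead.

    Returns (sum modulo 2**n_bits, carry_out, number_of_prefix_levels).
    """
    # Per-bit generate (both bits are 1) and propagate (exactly one bit is 1)
    g = [(a >> i) & (b >> i) & 1 for i in range(n_bits)]
    p = [((a >> i) ^ (b >> i)) & 1 for i in range(n_bits)]

    G, P = g[:], p[:]
    distance, levels = 1, 0
    while distance < n_bits:
        # Every position updates from the previous level's values (one gate level)
        G, P = (
            [G[i] | (P[i] & G[i - distance]) if i >= distance else G[i]
             for i in range(n_bits)],
            [P[i] & P[i - distance] if i >= distance else P[i]
             for i in range(n_bits)],
        )
        distance *= 2
        levels += 1

    # G[i] now tells whether bits 0..i produce a carry out of bit i (carry-in is 0)
    carry_in = [0] + G[:-1]
    total = sum((p[i] ^ carry_in[i]) << i for i in range(n_bits))
    return total, G[-1], levels


print(prefix_adder(200, 100))   # (44, 1, 3): 300 = 256 + 44, using log2(8) = 3 levels
```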

Principle of Locality

Programs tend to reuse data and instructions they have used recently. A widely held rule of thumb is that a program spends 90% of its execution time in only 10% of its code. There are two kinds of locality: temporal locality states that recently accessed items are likely to be accessed again in the near future, and spatial locality states that items whose addresses are near one another tend to be referenced close together in time.
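The principle of locality (programs reuse recently accessed items and the items stored near them) is what makes caches effective. The sketch below simulates a small direct-mapped cache; its size (64 lines of 8 words) and the access patterns are hypothetical, chosen to contrast a scan in address order and a small reused loop with scattered accesses.

```python
import random


def hit_rate(addresses: list[int], num_lines: int = 64, block_words: int = 8) -> float:
    """Fraction of word accesses that hit in a direct-mapped cache."""
    lines = [None] * num_lines             # block number currently held by each line
    hits = 0
    for addr in addresses:
        block = addr // block_words        # memory block containing this word
        line = block % num_lines           # direct-mapped: each block has one possible line
        if lines[line] == block:
            hits += 1
        else:
            lines[line] = block            # miss: bring the whole block in
    return hits / len(addresses)


n_words = 1 << 16                          # hypothetical 64K-word array
sequential = list(range(n_words))          # spatial locality: neighbouring words in order
small_loop = list(range(256)) * 100        # temporal locality: the same 256 words reused
rng = random.Random(42)
scattered = [rng.randrange(n_words) for _ in range(n_words)]   # little locality

print(hit_rate(sequential))   # 0.875 (one miss per 8-word block)
print(hit_rate(small_loop))   # 0.99875 (only the first pass misses)
print(hit_rate(scattered))    # close to 0
```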

Focus on the Common Case

Focusing on the common case works for power and resource allocation as well as for performance. Because a processor's instruction fetch and decode unit is used far more frequently than its multiplier, it should be optimized first. The same principle also applies to dependability.

This principle can be quantified using Amdahl's Law, a fundamental law.

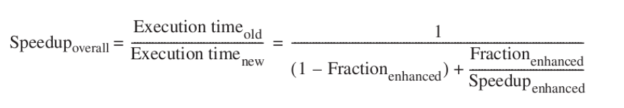

Amdahl's Law can be used to calculate the performance improvement that can be realized by enhancing some aspect of a computer. According to Amdahl's Law, the performance increase from adopting a faster mode of execution is limited by the proportion of time that faster mode can be used.

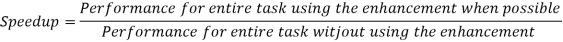

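Amdahl's Law defines the speedup that can be gained by using a particular feature. What is speedup? Suppose that we can make an enhancement to a computer that will improve performance when it is used. Speedup is the ratio:

$$\text{Speedup} = \frac{\text{Performance for entire task using the enhancement when possible}}{\text{Performance for entire task without using the enhancement}}$$

Alternatively,

$$\text{Speedup} = \frac{\text{Execution time for entire task without using the enhancement}}{\text{Execution time for entire task using the enhancement when possible}}$$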

Amdahl's Law provides a simple technique to determine the speedup from a particular upgrade, which is dependent on two factors:

1. The fraction of the computation time in the original computer that can be converted to take advantage of the enhancement. For example, if 20 seconds of the execution time of a 60-second program can use an enhancement, the fraction is 20/60. This value, which we will call Fraction_enhanced, is always less than or equal to 1.

2. The improvement gained by the enhanced execution mode; that is, how much faster the task would run if the enhanced mode were used for the entire program. This value is the time of the original mode divided by the time of the enhanced mode.

If a part of the program takes 2 seconds in the enhanced mode but 5 seconds in the original mode, the improvement is 5/2. This value, which is always greater than 1, will be called Speedup_enhanced.

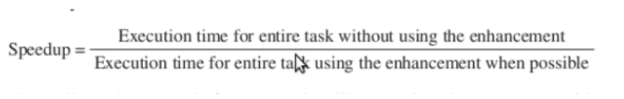

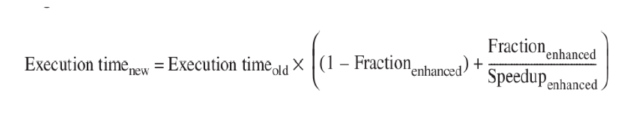

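The execution time using the original computer with the enhanced mode will be the time spent using the unenhanced portion of the computer plus the time spent using the enhancement:

$$\text{Execution time}_{\text{new}} = \text{Execution time}_{\text{old}} \times \left( (1 - \text{Fraction}_{\text{enhanced}}) + \frac{\text{Fraction}_{\text{enhanced}}}{\text{Speedup}_{\text{enhanced}}} \right)$$

The overall speedup is the ratio of the execution times:

$$\text{Speedup}_{\text{overall}} = \frac{\text{Execution time}_{\text{old}}}{\text{Execution time}_{\text{new}}} = \frac{1}{(1 - \text{Fraction}_{\text{enhanced}}) + \dfrac{\text{Fraction}_{\text{enhanced}}}{\text{Speedup}_{\text{enhanced}}}}$$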

Amdahl's Law can be used to estimate how much an enhancement will improve performance and how to distribute resources to improve cost-performance. The goal, clearly, is to spend resources in proportion to where time is spent. Amdahl's Law is particularly useful for comparing the overall system performance of two alternatives, but it can also be applied to compare two processor design alternatives.
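Amdahl's Law in code, using Speedup_overall = 1 / ((1 − Fraction_enhanced) + Fraction_enhanced / Speedup_enhanced). For illustration, the sketch combines the two example numbers from the text (a fraction of 20/60 and an enhanced speedup of 5/2), which the text gives separately.

```python
def amdahl_speedup(fraction_enhanced: float, speedup_enhanced: float) -> float:
    """Overall speedup = 1 / ((1 - F) + F / S)."""
    if not 0.0 <= fraction_enhanced <= 1.0:
        raise ValueError("fraction_enhanced must be between 0 and 1")
    if speedup_enhanced <= 0:
        raise ValueError("speedup_enhanced must be positive")
    return 1.0 / ((1.0 - fraction_enhanced) + fraction_enhanced / speedup_enhanced)


print(f"{amdahl_speedup(20 / 60, 5 / 2):.2f}")   # 1.25
# Even a practically infinite enhanced speedup is capped by the unenhanced 2/3 of the time
print(f"{amdahl_speedup(20 / 60, 1e12):.2f}")    # 1.50
```

The second line shows the limit the law describes: the overall speedup can never exceed 1 / (1 − Fraction_enhanced).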

Q9) What is computing model?

A9) A model of computing is a description of how a mathematical function's output is computed given an input. A model explains the organization of computation units, memory, and communications. Given a computation model, an algorithm's computational complexity can be quantified. Using a model, you may study the performance of algorithms without having to worry about the changes that come with different implementations and technology.

Different models

● The logic circuit

● The finite-state machine

● The random-access machine

● The pushdown automaton, and

● The Turing machine

Q10) What are Logic circuits?

A10) A logic gate is a physical device that realizes a Boolean function. A logic circuit is a directed acyclic graph in which every vertex except the input vertices is labeled with a gate.

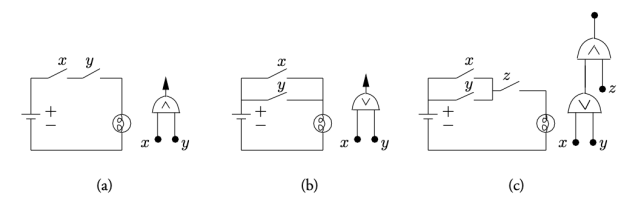

Logic gates can be built using a variety of technologies. Figures (a) and (b) demonstrate electrical circuits for the AND and OR gates made with batteries, lamps, and switches to help visualize concepts. A logic symbol for the gate is shown with each of these circuits.

Fig 2: Three electrical circuits simulating logic circuits

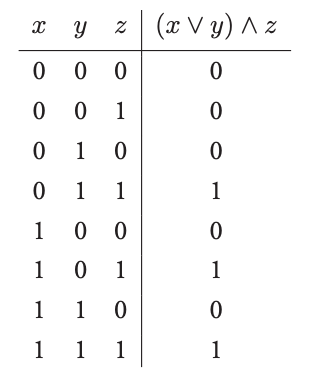

These symbols are used to draw circuits, such as the circuit for (x ∨ y) ∧ z shown in Fig. (c). A bulb lights when electrical current flows from the battery through a switch or switches; in that case the circuit's value is True, and otherwise it is False. The truth table below maps the values of the three input variables of the circuit in Fig. (c) to the value of its single output variable. The variables x, y, and z have value 1 when the switch with the same name is closed; otherwise, they have value 0.

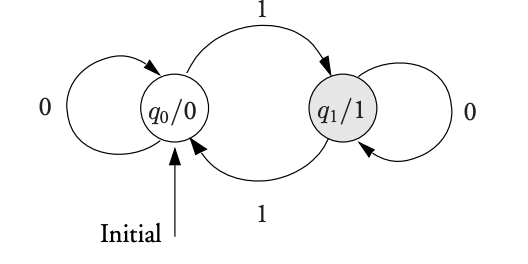
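The truth table can also be generated mechanically by enumerating every switch setting. This sketch encodes the circuit of Fig. (c) as the Boolean expression (x ∨ y) ∧ z.

```python
from itertools import product


def circuit(x: int, y: int, z: int) -> int:
    """Value of (x OR y) AND z: 1 means the lamp is lit."""
    return (x | y) & z


print("x y z | (x ∨ y) ∧ z")
for x, y, z in product((0, 1), repeat=3):   # 1 = switch closed, 0 = switch open
    print(x, y, z, "|", circuit(x, y, z))
```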

Q11) Write about Finite state machine?

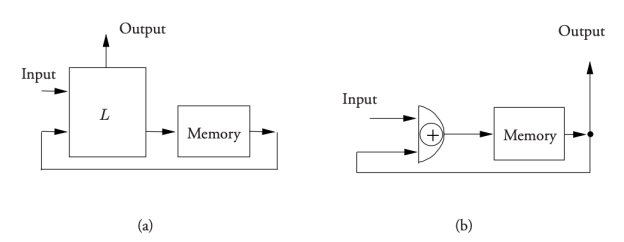

A11) A finite-state machine (FSM) is a machine with memory. As shown in Fig 3, it executes a series of steps. In each step it takes its current state from the set Q of states and its current external input from the set Σ of input letters, and combines them in a logic circuit L to produce a successor state in Q and an output letter in Ψ. The logic circuit L can be divided into two parts: one computes the next-state function δ : Q × Σ → Q, whose value is the next state of the FSM, and the other computes the output function λ : Q → Ψ, whose value is the output of the FSM in the current state.

Fig 3: (a) The finite-state machine (FSM) model; at each unit of time its logic unit, L, operates on its current state (taken from its memory) and its current external input to compute an external output and a new state that it stores in its memory. (b) An FSM that holds in its memory a bit that is the EXCLUSIVE OR of the initial value stored in its memory and the external inputs received to the present time.

Figure (a) depicts a generic finite-state machine, whereas Figure (b) depicts a concrete FSM that delivers the EXCLUSIVE OR of the current state and the external input as successor state and output. Figure shows the FSM's state diagram. A single FSM can be formed by connecting two (or more) finite-state machines that operate in lockstep. In this scenario, some of one FSM's outputs are used as inputs to the other.
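The FSM model maps naturally onto two functions. This sketch follows the convention that the output depends only on the state (λ : Q → Ψ) and instantiates the EXCLUSIVE OR machine of Fig 3(b); the function names are our own.

```python
from typing import Callable, Hashable, Iterable, List


def run_fsm(delta: Callable, lam: Callable, q0: Hashable, inputs: Iterable) -> List:
    """Simulate an FSM: return λ(q) before any input and after each input."""
    q = q0
    outputs = [lam(q)]            # output of the initial state
    for letter in inputs:
        q = delta(q, letter)      # next-state function δ : Q × Σ → Q
        outputs.append(lam(q))    # output function λ : Q → Ψ
    return outputs


def xor_delta(state: int, bit: int) -> int:
    """Successor state is the EXCLUSIVE OR of the stored bit and the input."""
    return state ^ bit


def xor_lambda(state: int) -> int:
    """The output is simply the stored bit."""
    return state


print(run_fsm(xor_delta, xor_lambda, 0, [1, 0, 1, 1]))   # [0, 1, 1, 0, 1]
```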

Today, finite-state machines are everywhere. Microwave ovens, VCRs, and autos all include them. They can be straightforward or intricate. The general-purpose computer depicted by the random-access machine is one of the most useful FSMs.

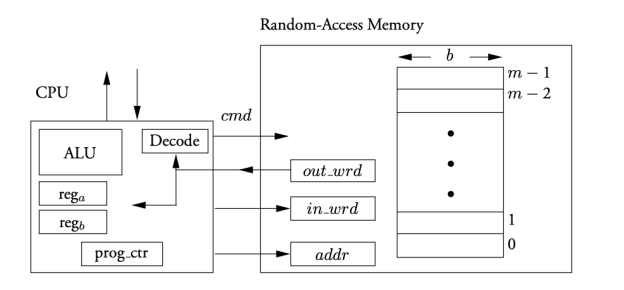

Q12) Random access machine?

A12) As shown in Fig 4, the (bounded-memory) random-access machine (RAM) is modeled as a pair of interconnected finite-state machines, one serving as the central processing unit (CPU) and the other as the random-access memory. Each of the m b-bit words in the random-access memory is identified by an address. The memory also has an output word (out_wrd) and a triple of inputs: a command (cmd), an address (addr), and an input data word (in_wrd). The command cmd is READ, WRITE, or NO-OP. A READ command changes the value of out_wrd to the value of the data word at address addr; a WRITE command replaces the data word at address addr with the value of in_wrd; and a NO-OP command does nothing.

Fig 4: Random access machine

The CPU's random-access memory stores data as well as programs, which are collections of instructions. The fetch-and-execute cycle is when the CPU repeatedly reads and executes an instruction from random-access memory. Arithmetic, logic, comparison, and jump instructions are among the most common. The CPU uses comparisons to determine whether it should read the next program instruction in sequence or jump to an instruction that is not in sequence.
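The fetch-and-execute cycle can be sketched with a memory that follows the READ / WRITE / NO-OP interface of the RAM model (WRITE stores in_wrd at addr). The CPU's instruction set here (LOAD, ADD, SUB, STORE, JZ, JMP, HALT), the single accumulator, and storing instructions as Python tuples instead of b-bit words are all assumptions made for illustration.

```python
class RandomAccessMemory:
    """m addressable words; each step takes cmd, addr and in_wrd, and exposes out_wrd."""

    def __init__(self, m: int) -> None:
        self.words = [0] * m
        self.out_wrd = 0

    def step(self, cmd: str, addr: int = 0, in_wrd=0):
        if cmd == "READ":
            self.out_wrd = self.words[addr]   # copy the word at addr to out_wrd
        elif cmd == "WRITE":
            self.words[addr] = in_wrd         # store in_wrd at addr
        elif cmd != "NO-OP":
            raise ValueError(f"unknown command {cmd!r}")
        return self.out_wrd


def run_cpu(mem: RandomAccessMemory, max_steps: int = 1000) -> int:
    """Fetch-and-execute loop for a hypothetical one-accumulator instruction set."""
    pc, acc = 0, 0
    for _ in range(max_steps):
        op, *args = mem.step("READ", pc)      # fetch the instruction at pc
        pc += 1                               # by default, continue in sequence
        if op == "HALT":
            return acc
        (a,) = args
        if op == "LOAD":
            acc = mem.step("READ", a)
        elif op == "ADD":
            acc += mem.step("READ", a)
        elif op == "SUB":
            acc -= mem.step("READ", a)
        elif op == "STORE":
            mem.step("WRITE", a, acc)
        elif op == "JZ":                      # comparison decides whether to jump
            if acc == 0:
                pc = a
        elif op == "JMP":
            pc = a
        else:
            raise ValueError(f"unknown instruction {op!r}")
    raise RuntimeError("step limit reached")


# Program and data share one memory: add n + (n-1) + ... + 1 into address 21
PROGRAM = [("LOAD", 20), ("JZ", 9), ("LOAD", 21), ("ADD", 20), ("STORE", 21),
           ("LOAD", 20), ("SUB", 22), ("STORE", 20), ("JMP", 0), ("HALT",)]
mem = RandomAccessMemory(32)
for address, word in enumerate(PROGRAM):
    mem.step("WRITE", address, word)
for address, value in ((20, 5), (21, 0), (22, 1)):   # n, running sum, constant 1
    mem.step("WRITE", address, value)
run_cpu(mem)
print(mem.step("READ", 21))   # 15
```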

The general-purpose computer is far more complicated than the preceding sketch of the RAM suggests. It employs a wide range of techniques to attain high speed at a reasonable cost using current technology. Designers have begun to use "superscalar" CPUs, which issue several instructions in each time step, as the number of components that can fit on a semiconductor chip grows. In addition, designers are increasingly using memory hierarchies to emulate expensive fast memories by assembling groups of slower but larger memories with reduced costs per bit.

Q13) What is turing machine?

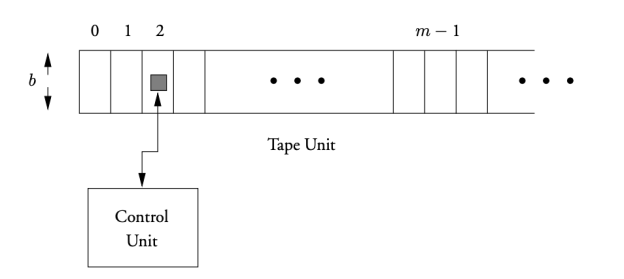

A13) The Turing machine consists of a control unit (an FSM) and a tape unit with a potentially unlimited linear array of cells, each holding a letter from an alphabet; the letters are read and written by a tape head driven by the control unit, so the machine has an unlimited supply of data words. The head is assumed to move only to an adjacent cell of the linear array in each time step (see Fig 5). Because no other machine model has been found that can perform tasks the Turing machine cannot, it has become a standard model of computation.

Fig 5: Turing machine
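A Turing machine can be simulated with a transition table and a tape that grows on demand. This is a minimal sketch, not a standard library API: the function `run_tm`, the blank symbol `_`, the state names, and the example machine `INCREMENT` (which adds 1 to a binary number) are all hypothetical illustrations. The head moves exactly one cell per step, matching the restriction described above.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical example machine: add 1 to a binary number written on the tape.
# (state, symbol read) -> (next state, symbol to write, head move: -1 left, +1 right)
INCREMENT = {
    ("right", "0"): ("right", "0", +1),   # scan right to the end of the number
    ("right", "1"): ("right", "1", +1),
    ("right", BLANK): ("carry", BLANK, -1),
    ("carry", "1"): ("carry", "0", -1),   # 1 + carry = 0, carry continues left
    ("carry", "0"): ("halt", "1", -1),    # 0 + carry = 1, done
    ("carry", BLANK): ("halt", "1", -1),  # ran off the left end: new leading 1
}


def run_tm(rules, tape_str, start="right", halt="halt", max_steps=10_000):
    # Cells not yet written read as BLANK, so the tape is unbounded in both directions.
    tape = defaultdict(lambda: BLANK, enumerate(tape_str))
    head, state = 0, start
    steps = 0
    while state != halt:
        if steps == max_steps:
            raise RuntimeError("step limit reached")
        # Read the current cell, then write, change state, and move in one step.
        state, tape[head], move = rules[(state, tape[head])]
        head += move  # the head moves exactly one cell per step
        steps += 1
    used = [i for i, s in tape.items() if s != BLANK]
    return "".join(tape[i] for i in range(min(used), max(used) + 1)) if used else ""
```

For instance, `run_tm(INCREMENT, "1011")` returns `"1100"` (11 + 1 = 12). The control unit is just the finite `INCREMENT` table; all unbounded storage lives on the tape.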

The pushdown automaton is a restricted Turing machine that uses its tape as a pushdown stack: data is entered, removed, and accessed only at the top of the stack. A pushdown stack can be simulated by a tape on which the cell to the right of the tape head is always blank. When the head moves right from a cell, it leaves a non-blank symbol in that cell; when it moves left, it writes a blank in the cell before leaving it.
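The tape discipline above can be sketched as a stack class whose only storage is a tape and a head position. This is an illustrative sketch under assumptions: the class name `TapeStack` and the bottom-of-stack marker `$` are hypothetical, and the head is kept on the top symbol so that the cell to its right is always blank.

```python
BLANK = "_"
BOTTOM = "$"  # hypothetical marker for the bottom of the stack


class TapeStack:
    """A pushdown stack kept on a tape. The head rests on the top symbol,
    so the cell to the right of the head is always blank."""

    def __init__(self) -> None:
        self.tape = [BOTTOM, BLANK]
        self.head = 0  # on the bottom marker: the stack is empty

    def push(self, symbol: str) -> None:
        if symbol in (BLANK, BOTTOM):
            raise ValueError("blank and bottom marker are reserved")
        self.head += 1                 # move right, leaving a non-blank cell behind
        self.tape[self.head] = symbol  # the new top
        if self.head + 1 == len(self.tape):
            self.tape.append(BLANK)    # extend the tape so a blank lies to the right

    def pop(self) -> str:
        if self.head == 0:
            raise IndexError("pop from empty stack")
        symbol = self.tape[self.head]
        self.tape[self.head] = BLANK   # write a blank before leaving the cell
        self.head -= 1                 # move left onto the new top
        return symbol

    def top(self) -> str:
        if self.head == 0:
            raise IndexError("empty stack has no top")
        return self.tape[self.head]    # only the top cell is ever read


s = TapeStack()
for ch in "abc":
    s.push(ch)
popped = [s.pop(), s.pop()]
```

After pushing `a`, `b`, `c` and popping twice, `popped` is `["c", "b"]` and `s.top()` is `"a"`. Every operation touches only the cell under the head, which is what makes the tape behave as a stack rather than as general random storage.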

Some computers are serial: in each time step they perform one operation on a fixed amount of data. Others are parallel: they have many (usually communicating) subcomputers running at the same time. These subcomputers can operate synchronously or asynchronously, and they can be connected by a simple or a complex network. A wire joining two computers is an example of a simple network. A crossbar switch with 25 switches at the intersections of five rows and five columns of wires is an example of a more sophisticated one; closing the switch at the intersection of a row and a column connects the two wires, and thus the two computers attached to them.
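The 5 × 5 crossbar can be sketched as a set of closed (row, column) switches. This is a minimal model under an assumption not stated in the text: each wire is joined to at most one other wire, so a row or column may have only one closed switch at a time. The class and method names are hypothetical.

```python
class Crossbar:
    """n x n crossbar: closing switch (r, c) joins row wire r to column wire c,
    and so joins the computers attached to those two wires."""

    def __init__(self, n: int = 5) -> None:
        self.n = n
        self.closed = set()  # closed (row, col) switches

    @property
    def num_switches(self) -> int:
        return self.n * self.n  # one switch per row/column intersection

    def close_switch(self, row: int, col: int) -> None:
        if not (0 <= row < self.n and 0 <= col < self.n):
            raise IndexError("no such switch")
        # Assumption for this sketch: each wire joins at most one other wire,
        # so a row or column may have only one closed switch at a time.
        if any(r == row or c == col for r, c in self.closed):
            raise ValueError("row or column already in use")
        self.closed.add((row, col))

    def open_switch(self, row: int, col: int) -> None:
        self.closed.discard((row, col))

    def connected(self, row: int, col: int) -> bool:
        return (row, col) in self.closed


xbar = Crossbar(5)
for r in range(5):
    xbar.close_switch(r, (r + 2) % 5)  # five independent pairings at once
```

With five of its 25 switches closed, the crossbar links every row computer to a different column computer simultaneously, which a single wire between two computers cannot do.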

Q14) Write about formal languages.

A14) Methods for specifying formal languages have led to efficient ways of parsing and recognizing programming languages. The finite-state machine (FSM) in Fig 6 illustrates this. It has initial state q0, final state q1, and inputs that can be 0 or 1. The machine produces an output of 0 when it is in state q0 and an output of 1 when it is in state q1. Before the first input is received, the output is 0.

After the first input, the output of the FSM in Fig 6 equals that input. After any number of inputs, the output is the EXCLUSIVE OR of all the inputs received so far, that is, 1 if an odd number of them are 1 and 0 otherwise. We show this by induction. After one input, the inductive hypothesis is clearly true.

Fig 6: A state diagram for a finite-state machine whose circuit model

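Assume the hypothesis holds after k inputs; we show that it then holds after k + 1 inputs, and hence for all inputs. Since the output uniquely determines the state, the FSM is in either state q0 or state q1 after k inputs, and for each state there are two cases, depending on the value of the (k + 1)st input. In the machine of Fig 6, an input of 0 leaves the state unchanged and an input of 1 switches between q0 and q1. Checking all four cases: q0 with input 0 stays in q0 (0 ⊕ 0 = 0), q0 with input 1 moves to q1 (0 ⊕ 1 = 1), q1 with input 0 stays in q1 (1 ⊕ 0 = 1), and q1 with input 1 moves to q0 (1 ⊕ 1 = 0). In every case the output after the (k + 1)st input is the EXCLUSIVE OR of the first k + 1 inputs.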
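The inductive claim can also be cross-checked mechanically for short inputs. The sketch below encodes the two-state machine as a transition table (names `DELTA`, `OUTPUT`, and `fsm_output` are hypothetical) and compares its output with the EXCLUSIVE OR of the inputs for every input string up to length 10. A finite check like this supports the proof but does not replace it.

```python
from functools import reduce
from itertools import product
from operator import xor

# Transitions of the two-state machine: input 0 keeps the state, input 1 toggles it.
DELTA = {("q0", 0): "q0", ("q0", 1): "q1",
         ("q1", 0): "q1", ("q1", 1): "q0"}
OUTPUT = {"q0": 0, "q1": 1}  # the output depends only on the current state


def fsm_output(inputs) -> int:
    state = "q0"  # initial state, so the output before any input is 0
    for bit in inputs:
        state = DELTA[(state, bit)]
    return OUTPUT[state]


# Exhaustive check of the inductive claim for every input string of length 0..10
for length in range(11):
    for bits in product((0, 1), repeat=length):
        assert fsm_output(bits) == reduce(xor, bits, 0)
```

For example, `fsm_output([1, 0, 1, 1])` is 1, because the input contains an odd number of 1s.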

Q15) Write the difference between minicomputers and mainframe computers.

A15) Difference between mainframe computers and minicomputers

| BASIS FOR COMPARISON | MAINFRAME | MINICOMPUTER |
|---|---|---|
| Disk size | Large | Small |
| Memory storage | High | Low |
| Processing speed | Faster | Slower |
| User support | Thousands or millions of users at a time | Hundreds of users can connect simultaneously |
| Cost | Expensive | Less expensive |

Q16) Write the difference between microcomputers and supercomputers.

A16) Difference between microcomputers and supercomputers

| S.NO | Microcomputer | Supercomputer |
|---|---|---|
| 1. | Microcomputers are general-purpose computers, mostly used for everyday work, that perform all the usual arithmetic and logic operations. | Supercomputers are used for large, complex mathematical computations. |
| 2. | Microcomputers are small. | Supercomputers are very large. |
| 3. | Microcomputers are cheaper than supercomputers. | Supercomputers are very expensive. |
| 4. | Microcomputers are slower than supercomputers. | Supercomputers are extremely fast. |
| 5. | A microcomputer can have several operating systems installed at the same time. | Modern supercomputers run Linux or Linux-based operating systems. |
| 6. | Microcomputers are used in offices, education, database management, word processing, etc. | Supercomputers are used in astronomy, data analysis, robot design, weather forecasting, data mining, etc. |
| 7. | A microcomputer usually has a single processor. | A modern supercomputer can contain thousands of processors. |
| 8. | The microcomputer was invented by Bill Pentz's team. | The supercomputer was invented by Seymour Cray. |
| 9. | Microcomputer speed is often quoted in MIPS (millions of instructions per second); modern desktop processors reach thousands of MIPS. | Supercomputer speed is usually measured in FLOPS (floating-point operations per second); the fastest systems exceed 10^18 FLOPS. |