UNIT 6

Memory & Input/Output Systems

1. What are the CHARACTERISTICS OF COMPUTER MEMORY SYSTEM?

CHARACTERISTICS OF COMPUTER MEMORY SYSTEM

1) Location

- CPU registers

- Cache

- Internal Memory(main)

- External (secondary)

2) Capacity

- Word size: typically equal to the number of bits used to represent a number and to the instruction length.

- For addresses of length A (in bits), the number of addressable units is 2^A.

3) Unit of Transfer

- Word

- Block

4) Access Method

* Sequential Access

- Access must be made in a specific linear sequence.

- Time to access an arbitrary record is highly variable.

* Direct access

- Individual blocks or records have an address based on physical location.

- Access is made directly to the general vicinity of the desired information, followed by some searching.

- Access time is still variable, but not as much as sequential access.

* Random access

- Each addressable location has a unique, physical location.

- Access is made directly to the desired location.

- Access time is constant and independent of prior accesses.

* Associative

- Desired units of information are retrieved by comparing a sub-part of each unit's contents.

- An explicit location (address) is not needed, because retrieval is by content.

- Most useful for searching.

5) Performance

* Access Time (Latency)

- For random access memory, latency is the time it takes to perform a read or write operation, that is, the time from the instant that the address is presented to the memory to the instant that the data have been stored or made available for use.

* Memory Cycle Time

- Access time + additional time required before a second access can begin(refresh time, for example).

* Transfer Rate

- Generally measured in bits/second.

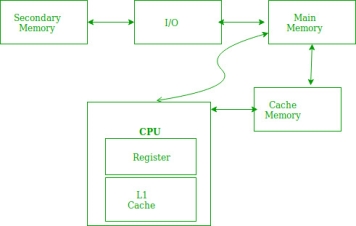

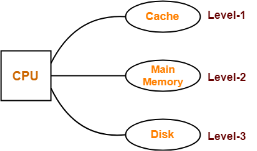

2. Explain Memory Hierarchy

A memory unit is an essential component in any digital computer since it is needed for storing programs and data.

Typically, a memory unit can be classified into two categories:

- The memory unit that establishes direct communication with the CPU is called Main Memory. The main memory is often referred to as RAM (Random Access Memory).

- The memory units that provide backup storage are called Auxiliary Memory. For instance, magnetic disks and magnetic tapes are the most commonly used auxiliary memories.

Apart from the basic classifications of a memory unit, the memory hierarchy consists of all the storage devices available in a computer system, ranging from the slow but high-capacity auxiliary memory to the relatively faster main memory.

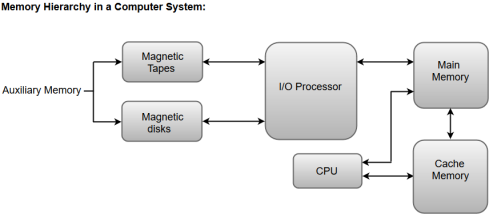

The following image illustrates the components in a typical memory hierarchy.

Auxiliary Memory

Auxiliary memory is known as the lowest-cost, highest-capacity and slowest-access storage in a computer system. Auxiliary memory provides storage for programs and data that are kept for long-term storage or when not in immediate use. The most common examples of auxiliary memories are magnetic tapes and magnetic disks.

A magnetic disk is a digital computer memory that uses a magnetization process to write, rewrite and access data. Examples include hard drives, zip disks, and floppy disks.

Magnetic tape is a storage medium that allows for data archiving, collection, and backup for different kinds of data.

Main Memory

The main memory in a computer system is often referred to as Random Access Memory (RAM). This memory unit communicates directly with the CPU and with auxiliary memory devices through an I/O processor.

The programs that are not currently required in the main memory are transferred into auxiliary memory to provide space for currently used programs and data.

I/O Processor

The primary function of an I/O Processor is to manage the data transfers between auxiliary memories and the main memory.

Cache Memory

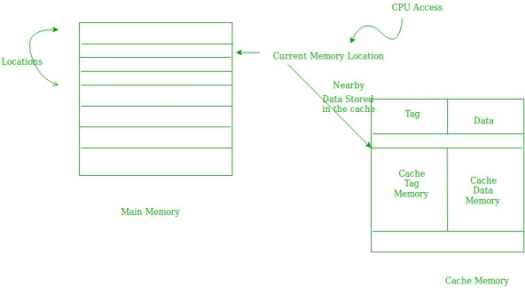

The contents of main memory that are used frequently by the CPU are stored in the cache memory so that the processor can access them in a shorter time. Whenever the CPU needs to access memory, it first checks the cache memory for the required data. If the data is found in the cache memory, it is read from this fast memory. Otherwise, the CPU moves on to the main memory for the required data.

3. Explain Memory Read & Write Cycle

A memory unit stores binary information in groups of bits called words. Data input lines provide the information to be stored in the memory, and data output lines carry the information out of the memory. The Read and Write control lines specify the direction of data transfer. In the memory organization, there are 2^l memory locations, indexed from 0 to 2^l - 1, where l is the number of address lines. The memory size in bytes can be described using the following formula:

N = 2^l Bytes

Where,

l is the total number of address lines

N is the memory size in bytes

For example, some storage can be described below in terms of bytes using the above formula:

1 kB = 2^10 Bytes

64 kB = 2^6 x 2^10 Bytes

= 2^16 Bytes

4 GB = 2^2 x 2^10 (kB) x 2^10 (MB) x 2^10 (GB)

= 2^32 Bytes
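The relationship between address lines and memory size can be checked with a short Python sketch. The function names `capacity_bytes` and `address_lines_needed` are illustrative and not from the text. The sketch assumes a byte-addressable memory whose size is an exact power of two.

```python
def capacity_bytes(address_lines: int) -> int:
    """Number of addressable bytes for l address lines (N = 2^l)."""
    return 2 ** address_lines


def address_lines_needed(size_bytes: int) -> int:
    """Number of address lines l such that 2^l equals size_bytes."""
    # Only exact powers of two are accepted in this sketch.
    if size_bytes <= 0 or size_bytes & (size_bytes - 1) != 0:
        raise ValueError("size must be a positive power of two")
    return size_bytes.bit_length() - 1


KB = 2 ** 10
GB = 2 ** 30

print(address_lines_needed(1 * KB))    # 10
print(address_lines_needed(64 * KB))   # 16
print(address_lines_needed(4 * GB))    # 32
```

The three printed values match the exponents in the examples above.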

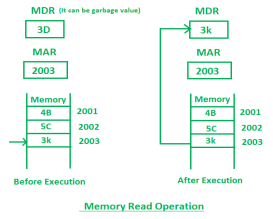

Memory Address Register (MAR) is the address register which is used to store the address of the memory location where the operation is being performed.

Memory Data Register (MDR) is the data register which is used to store the data on which the operation is being performed.

1. Memory Read Operation:

Memory read operation transfers the address of the desired word to the address lines and activates the read control line. A description of the memory read operation is given below:

In the above diagram, the MDR can initially contain any garbage value and the MAR contains memory address 2003. After the read instruction executes, the data at memory location 2003 is read and the MDR is updated with the value stored there (3D).

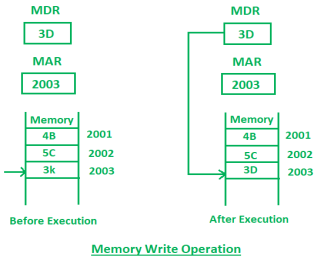

2. Memory Write Operation:

Memory write operation transfers the address of the desired word to the address lines, transfers the data bits to be stored in memory to the data input lines. Then it activates the write control line. Description of the write operation is given below:

In the above diagram, the MAR contains 2003 and the MDR contains 3D. After the write instruction executes, 3D is written to memory location 2003.
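The read and write operations can be modelled with a small Python sketch. The class `SimpleMemory` and its attribute names are hypothetical, the memory size of 4096 locations is an arbitrary choice, and the address 2003 is treated as a plain decimal index for simplicity. It is a toy model of the register transfers, not of real bus timing.

```python
class SimpleMemory:
    """Toy model of a memory unit with MAR and MDR registers."""

    def __init__(self, size: int) -> None:
        self.cells = [0] * size   # one stored value per memory location
        self.mar = 0              # Memory Address Register
        self.mdr = 0              # Memory Data Register

    def read(self) -> int:
        """Read: copy the word at address MAR into MDR."""
        self.mdr = self.cells[self.mar]
        return self.mdr

    def write(self) -> None:
        """Write: copy MDR into the word at address MAR."""
        self.cells[self.mar] = self.mdr


mem = SimpleMemory(size=4096)

# Write operation: MAR = 2003, MDR = 3D (hex), then activate "write".
mem.mar = 2003
mem.mdr = 0x3D
mem.write()

# Read operation: MDR starts with an arbitrary value and is overwritten.
mem.mdr = 0xFF
mem.read()
print(hex(mem.mdr))  # 0x3d
```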

4. What are the Key Characteristics of Computer Memory?

Key Characteristics of Computer Memory

The following are the key characteristics of the main types of computer memory.

- Semiconductor memory

A computer memory built from semiconductor devices is called a semiconductor memory. It is small in size, low in power consumption, and short in access time. However, semiconductor main memory (RAM) loses its stored data when power is lost, so it is a volatile memory.

- Magnetic material memory

A computer memory made of magnetic material is called a magnetic memory. A layer of magnetic material is coated on metal or plastic to store data. It is non-volatile, so the data does not disappear after power-off, but its access speed is slow.

- Optical disk storage

Optical disk storage uses a laser to read data from a magneto-optical material. It is non-volatile, with good durability and high recording density. It is now used in computer systems as external storage.

RAM & ROM

5. Differences between MASK ROM, FLASH ROM and OTP ROM

The program of a MASK ROM is fixed at the factory, which suits applications where the program never changes. A FLASH ROM's program can be erased and rewritten repeatedly, which gives great flexibility but at a higher price, so it suits price-insensitive applications or development work. An OTP ROM is priced between MASK ROM and FLASH ROM and can be programmed once, so it suits applications that need both flexibility and low cost, especially electronic products that are revised frequently and must reach mass production quickly.

The commonality between OTP ROM and PROM is that they can only be programmed once.

DDR: Double Data Rate. DDR SDRAM refers to double data rate synchronous dynamic random access memory.

The difference between DDR SDRAM and SDRAM: SDRAM transfers data only once per clock cycle, on the rising edge of the clock, while DDR memory transfers data twice per clock cycle, on both the rising and falling edges. DDR can therefore achieve a higher data rate at the same bus frequency as SDRAM.

6. Why DDR3 can replace DDR2 memory?

Frequency: DDR3 can operate from 800 MHz to 1666 MHz or more, while DDR2 operates from 533 MHz to 1066 MHz. In general, DDR3 runs at about twice the frequency of DDR2, which improves performance by roughly halving read and write times.

Power consumption: DDR3 can save 16% of energy compared to DDR2, because DDR3 operates at 1.5 V while DDR2 operates at 1.8 V. This offsets the extra power drawn at the higher operating frequency. Lower energy consumption can also extend the life of the component.

Technology: DDR3 has eight memory banks, double the number in DDR2, and its prefetch buffer is also doubled (8 bits versus 4 bits in DDR2), which improves prefetch efficiency.

Main Technical Indicators of Computer Memory

Storage capacity: It generally refers to the number of storage units (N) included in a memory.

Access time (TA): The time from when the memory accepts a read/write command until the data has been read or written and is stable at the memory data register (MDR) output.

Memory cycle time (TMC): The minimum time required between two independent access operations. TMC is usually longer than TA.

Access rate: The total number of bits of information exchanged between main memory and the outside (such as CPU) per unit time.

Reliability: It is described by the mean time between failures (MTBF), that is, the average time interval between two failures.

7. Explain Cache Memory – Principle of Locality

Locality of reference refers to a phenomenon in which a computer program tends to access the same set of memory locations over a particular period of time. In other words, it is the tendency of a program to access instructions whose addresses are near one another. The property of locality of reference is mainly shown by loops and subroutine calls in a program.

- In the case of loops, the central processing unit repeatedly refers to the set of instructions that constitute the loop.

- In the case of subroutine calls, the same set of instructions is fetched from memory every time the subroutine is called.

- References to data items also get localized, which means the same data item is referenced again and again.

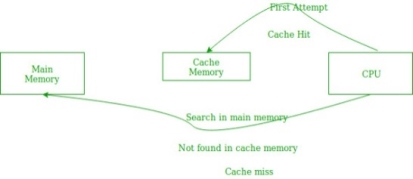

In the above figure, you can see that the CPU wants to read or fetch the data or instruction. First, it will access the cache memory as it is near to it and provides very fast access. If the required data or instruction is found, it will be fetched. This situation is known as a cache hit. But if the required data or instruction is not found in the cache memory then this situation is known as a cache miss. Now the main memory will be searched for the required data or instruction that was being searched and if found will go through one of the two ways:

- The first way is for the CPU to fetch the required data or instruction and simply use it. However, when the same data or instruction is required again, the CPU has to access the same main memory location again, and main memory is much slower to access than the cache.

- The second way is to store the data or instruction in the cache memory so that if it is needed again in the near future, it can be fetched much faster.

Cache Operation:

Cache operation is based on the principle of locality of reference. There are two ways in which data or instructions fetched from main memory come to be stored in cache memory. These two ways are the following:

1. Temporal Locality –

Temporal locality means current data or instruction that is being fetched may be needed soon. So we should store that data or instruction in the cache memory so that we can avoid again searching in main memory for the same data.

When CPU accesses the current main memory location for reading required data or instruction, it also gets stored in the cache memory which is based on the fact that same data or instruction may be needed in near future. This is known as temporal locality. If some data is referenced, then there is a high probability that it will be referenced again in the near future.

2. Spatial Locality –

Spatial locality means instruction or data near to the current memory location that is being fetched, may be needed soon in the near future. This is slightly different from the temporal locality. Here we are talking about nearly located memory locations while in temporal locality we were talking about the actual memory location that was being fetched.
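The effect of both kinds of locality can be illustrated with a small Python simulation. This is a minimal sketch under stated assumptions: the function `count_hits`, the block size of 4 words, the 2-line cache, the least-recently-used eviction and the three address sequences are all illustrative choices, not taken from the text.

```python
from collections import OrderedDict


def count_hits(addresses, block_size=4, num_lines=2):
    """Count cache hits for a sequence of word addresses.

    The cache holds `num_lines` blocks of `block_size` words each and
    evicts the least recently used block when it is full.
    """
    cache = OrderedDict()  # block number -> None, ordered by recency of use
    hits = 0
    for addr in addresses:
        block = addr // block_size
        if block in cache:
            hits += 1
            cache.move_to_end(block)       # mark as most recently used
        else:
            if len(cache) >= num_lines:
                cache.popitem(last=False)  # evict the least recently used block
            cache[block] = None            # fetch the whole block on a miss
    return hits


# Temporal locality: the same address is referenced repeatedly.
temporal = [100] * 8
# Spatial locality: neighbouring addresses are referenced in order.
spatial = list(range(100, 108))
# Little locality: addresses are far apart and never repeat.
scattered = [0, 40, 80, 120, 160, 200, 240, 280]

print(count_hits(temporal))   # 7
print(count_hits(spatial))    # 6
print(count_hits(scattered))  # 0
```

In the spatial case the hits come from fetching a whole block on each miss, so the neighbouring words are already in the cache when they are requested.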

Cache Performance:

The performance of the cache is measured in terms of hit ratio. When the CPU refers to memory and finds the data or instruction within the cache memory, it is known as a cache hit. If the desired data or instruction is not found in the cache memory and the CPU refers to the main memory to find it, it is known as a cache miss.

Hit + Miss = Total CPU Reference

Hit Ratio(h) = Hit / (Hit+Miss)

Consider a memory system that consists of two levels: cache and main memory. If Tc is the time to access cache memory and Tm is the time to access main memory, then we can write:

Tavg = Average time to access memory

Tavg = h * Tc + (1-h)*(Tm + Tc)
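The two formulas above translate directly into code. In the sketch below, the function names and the sample figures (90 hits, 10 misses, Tc = 10 ns, Tm = 100 ns) are hypothetical values chosen only to illustrate the calculation.

```python
def hit_ratio(hits: int, misses: int) -> float:
    """Hit ratio h = Hit / (Hit + Miss)."""
    return hits / (hits + misses)


def average_access_time(h: float, tc: float, tm: float) -> float:
    """Tavg = h * Tc + (1 - h) * (Tm + Tc)."""
    return h * tc + (1 - h) * (tm + tc)


h = hit_ratio(hits=90, misses=10)
t_avg = average_access_time(h, tc=10.0, tm=100.0)

print(h)                 # 0.9
print(round(t_avg, 2))   # 20.0
```

With these figures, 90% of references cost 10 ns and the remaining 10% cost 110 ns, giving 9 ns + 11 ns = 20 ns on average.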

8. Explain in detail memory organization

In a computer

- Memory is organized at different levels.

- CPU may try to access different levels of memory in different ways.

- On this basis, the memory organization is broadly divided into two types-

- Simultaneous Access Memory Organization

- Hierarchical Access Memory Organization

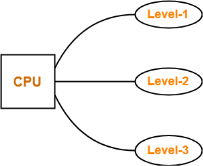

1. Simultaneous Access Memory Organization-

In this memory organization,

- All the levels of memory are directly connected to the CPU.

- Whenever CPU requires any word, it starts searching for it in all the levels simultaneously.

Example-01:

Consider the following simultaneous access memory organization-

Here, two levels of memory are directly connected to the CPU.

Let-

- T1 = Access time of level L1

- S1 = Size of level L1

- C1 = Cost per byte of level L1

- H1 = Hit rate of level L1

Similar are the notations for level L2.

Average Memory Access Time-

Average time required to access memory per operation

= H1 x T1 + (1 – H1) x H2 x T2

= H1 x T1 + (1 – H1) x 1 x T2

= H1 x T1 + (1 – H1) x T2

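Important Note: In any memory organization, the data item being searched for is always present in the last level, so the hit rate of the last level is always 1.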

Average Cost Per Byte-

Average cost per byte of the memory

= { C1 x S1 + C2 x S2 } / { S1 + S2 }

Example-02:

Consider the following simultaneous access memory organization-

Here, three levels of memory are directly connected to the CPU.

Let-

- T1 = Access time of level L1

- S1 = Size of level L1

- C1 = Cost per byte of level L1

- H1 = Hit rate of level L1

Similar are the notations for other two levels.

Average Memory Access Time-

Average time required to access memory per operation

= H1 x T1 + (1 – H1) x H2 x T2 + (1 – H1) x (1 – H2) x H3 x T3

= H1 x T1 + (1 – H1) x H2 x T2 + (1 – H1) x (1 – H2) x 1 x T3

= H1 x T1 + (1 – H1) x H2 x T2 + (1 – H1) x (1 – H2) x T3

Average Cost Per Byte-

Average cost per byte of the memory

= { C1 x S1 + C2 x S2 + C3 x S3 } / { S1 + S2 + S3 }
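The simultaneous access formulas generalize to any number of levels. The following Python sketch is one way to express them. The function names and the sample values are illustrative assumptions, and the hit rate of the last level is forced to 1 as in the derivations above.

```python
def simultaneous_access_time(hit_rates, access_times):
    """Average access time when all levels are searched simultaneously.

    hit_rates[i] and access_times[i] describe level i + 1. The hit rate
    of the last level is treated as 1, whatever value is passed for it.
    """
    if len(hit_rates) != len(access_times):
        raise ValueError("hit_rates and access_times must have equal length")
    total = 0.0
    miss_so_far = 1.0  # probability that every earlier level missed
    last = len(access_times) - 1
    for i, (h, t) in enumerate(zip(hit_rates, access_times)):
        if i == last:
            h = 1.0
        total += miss_so_far * h * t
        miss_so_far *= 1 - h
    return total


def average_cost_per_byte(costs, sizes):
    """(C1 x S1 + C2 x S2 + ...) / (S1 + S2 + ...)."""
    return sum(c * s for c, s in zip(costs, sizes)) / sum(sizes)


# Hypothetical two-level system: H1 = 0.9, T1 = 2 ns, T2 = 50 ns.
print(round(simultaneous_access_time([0.9, 1.0], [2, 50]), 2))  # 6.8

# Hypothetical costs per byte (8 and 1) and sizes (1 and 7 units).
print(average_cost_per_byte([8.0, 1.0], [1, 7]))  # 1.875
```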

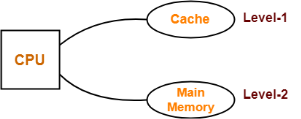

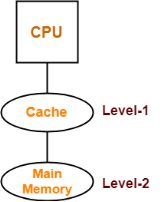

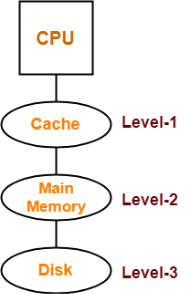

2. Hierarchical Access Memory Organization-

In this memory organization, memory levels are organized as-

- Level-1 is directly connected to the CPU.

- Level-2 is directly connected to level-1.

- Level-3 is directly connected to level-2 and so on.

Whenever CPU requires any word,

- It first searches for the word in level-1.

- If the required word is not found in level-1, it searches for the word in level-2.

- If the required word is not found in level-2, it searches for the word in level-3 and so on.

Example-01:

Consider the following hierarchical access memory organization-

Here, two levels of memory are connected to the CPU in a hierarchical fashion.

Let-

- T1 = Access time of level L1

- S1 = Size of level L1

- C1 = Cost per byte of level L1

- H1 = Hit rate of level L1

Similar are the notations for level L2.

Average Memory Access Time-

Average time required to access memory per operation

= H1 x T1 + (1 – H1) x H2 x (T1 + T2)

= H1 x T1 + (1 – H1) x 1 x (T1 + T2)

= H1 x T1 + (1 – H1) x (T1 + T2)

Average Cost Per Byte-

Average cost per byte of the memory

= { C1 x S1 + C2 x S2 } / { S1 + S2 }

Example-02:

Consider the following hierarchical access memory organization-

Here, three levels of memory are connected to the CPU in a hierarchical fashion.

Let-

- T1 = Access time of level L1

- S1 = Size of level L1

- C1 = Cost per byte of level L1

- H1 = Hit rate of level L1

Similar are the notations for other two levels.

Average Memory Access Time-

Average time required to access memory per operation

= H1 x T1 + (1 – H1) x H2 x (T1 + T2) + (1 – H1) x (1 – H2) x H3 x (T1 + T2 + T3)

= H1 x T1 + (1 – H1) x H2 x (T1 + T2) + (1 – H1) x (1 – H2) x 1 x (T1 + T2 + T3)

= H1 x T1 + (1 – H1) x H2 x (T1 + T2) + (1 – H1) x (1 – H2) x (T1 + T2 + T3)

Average Cost Per Byte-

Average cost per byte of the memory

= { C1 x S1 + C2 x S2 + C3 x S3 } / { S1 + S2 + S3 }
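In hierarchical access, a reference satisfied at level i pays for every level searched before it. A minimal Python sketch of the general n-level formula follows. The function name and sample values are illustrative assumptions, and the hit rate of the last level is again taken as 1.

```python
def hierarchical_access_time(hit_rates, access_times):
    """Average access time when levels are searched one after another.

    A reference satisfied at level i costs T1 + T2 + ... + Ti. The hit
    rate of the last level is treated as 1.
    """
    if len(hit_rates) != len(access_times):
        raise ValueError("hit_rates and access_times must have equal length")
    total = 0.0
    miss_so_far = 1.0   # probability that every earlier level missed
    time_so_far = 0.0   # T1 + T2 + ... up to the current level
    last = len(access_times) - 1
    for i, (h, t) in enumerate(zip(hit_rates, access_times)):
        if i == last:
            h = 1.0
        time_so_far += t
        total += miss_so_far * h * time_so_far
        miss_so_far *= 1 - h
    return total


# Hypothetical two-level system: H1 = 0.9, T1 = 2 ns, T2 = 50 ns.
print(round(hierarchical_access_time([0.9, 1.0], [2, 50]), 2))  # 7.0

# Hypothetical three-level system.
print(round(hierarchical_access_time([0.9, 0.8, 1.0], [1, 10, 100]), 2))  # 4.0
```

The only difference from the simultaneous case is that each term uses the accumulated time rather than the access time of a single level.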

9. GIVE SOME EXAMPLE PROBLEMS BASED ON MEMORY ORGANIZATION-

Problem-01:

What is the average memory access time for a machine with a cache hit rate of 80% and cache access time of 5 ns and main memory access time of 100 ns when-

- Simultaneous access memory organization is used.

- Hierarchical access memory organization is used.

Solution-

Part-01: Simultaneous Access Memory Organization-

The memory organization will be as shown-

Average memory access time

= H1 x T1 + (1 – H1) x H2 x T2

= 0.8 x 5 ns + (1 – 0.8) x 1 x 100 ns

= 4 ns + 0.2 x 100 ns

= 4 ns + 20 ns

= 24 ns

Part-02: Hierarchical Access Memory Organization-

The memory organization will be as shown-

Average memory access time

= H1 x T1 + (1 – H1) x H2 x (T1 + T2)

= 0.8 x 5 ns + (1 – 0.8) x 1 x (5 ns + 100 ns)

= 4 ns + 0.2 x 105 ns

= 4 ns + 21 ns

= 25 ns

Problem-02:

A computer has a cache, main memory and a disk used for virtual memory. An access to the cache takes 10 ns. An access to main memory takes 100 ns. An access to the disk takes 10,000 ns. Suppose the cache hit ratio is 0.9 and the main memory hit ratio is 0.8. The effective access time required to access a referenced word on the system is _______ when-

- Simultaneous access memory organization is used.

- Hierarchical access memory organization is used.

Solution-

Part-01:Simultaneous Access Memory Organization-

The memory organization will be as shown-

Effective memory access time

= H1 x T1 + (1 – H1) x H2 x T2 + (1 – H1) x (1 – H2) x H3 x T3

= 0.9 x 10 ns + (1 – 0.9) x 0.8 x 100 ns + (1 – 0.9) x (1 – 0.8) x 1 x 10000 ns

= 9 ns + 8 ns + 200 ns

= 217 ns

Part-02: Hierarchical Access Memory Organization-

The memory organization will be as shown-

Effective memory access time

= H1 x T1 + (1 – H1) x H2 x (T1 + T2) + (1 – H1) x (1 – H2) x H3 x (T1 + T2 + T3)

= 0.9 x 10 ns + (1 – 0.9) x 0.8 x (10 ns + 100 ns) + (1 – 0.9) x (1 – 0.8) x 1 x (10 ns + 100 ns + 10000 ns)

= 9 ns + 8.8 ns + 202.2 ns

= 220 ns
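The four answers above can be cross-checked with a short Python script. This is a sketch: the function `effective_access_time` and its `hierarchical` flag are hypothetical names introduced for this check, and the hit rate of the last level is taken as 1 as in the solutions.

```python
def effective_access_time(hit_rates, access_times, hierarchical):
    """Effective access time for either memory organization.

    hierarchical=False: a hit at level i costs Ti (simultaneous access).
    hierarchical=True:  a hit at level i costs T1 + ... + Ti.
    """
    total, miss_so_far, time_so_far = 0.0, 1.0, 0.0
    last = len(access_times) - 1
    for i, (h, t) in enumerate(zip(hit_rates, access_times)):
        if i == last:
            h = 1.0  # the last level always contains the word
        time_so_far += t
        cost = time_so_far if hierarchical else t
        total += miss_so_far * h * cost
        miss_so_far *= 1 - h
    return total


problems = {
    "Problem-01": ([0.8, 1.0], [5, 100]),
    "Problem-02": ([0.9, 0.8, 1.0], [10, 100, 10000]),
}

results = {}
for name, (h, t) in problems.items():
    sim = effective_access_time(h, t, hierarchical=False)
    hier = effective_access_time(h, t, hierarchical=True)
    results[name] = (sim, hier)
    print(f"{name}: simultaneous = {sim:.1f} ns, hierarchical = {hier:.1f} ns")

# Problem-01: simultaneous = 24.0 ns, hierarchical = 25.0 ns
# Problem-02: simultaneous = 217.0 ns, hierarchical = 220.0 ns
```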

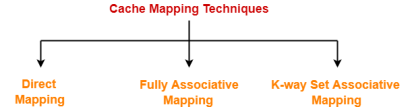

10. Explain in detail Mapping functions

Cache memory bridges the speed mismatch between the processor and the main memory.

When cache hit occurs,

- The required word is present in the cache memory.

- The required word is delivered to the CPU from the cache memory.

When cache miss occurs,

- The required word is not present in the cache memory.

- The block containing the required word has to be mapped from the main memory.

- This mapping is performed using cache mapping techniques.

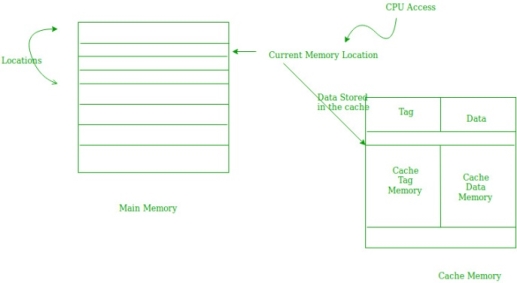

Cache Mapping-

- Cache mapping defines how a block from the main memory is mapped to the cache memory in case of a cache miss.

OR

- Cache mapping is a technique by which the contents of main memory are brought into the cache memory.

The following diagram illustrates the mapping process-

Now, before proceeding further, it is important to note the following points-

NOTES

- Main memory is divided into equal-size partitions called blocks or frames.

- Cache memory is divided into partitions of the same size as the blocks, called lines.

During cache mapping, a block of main memory is simply copied to the cache; the block is not actually removed from the main memory.

Cache Mapping Techniques-

Cache mapping is performed using following three different techniques-

- Direct Mapping

- Fully Associative Mapping

- K-way Set Associative Mapping

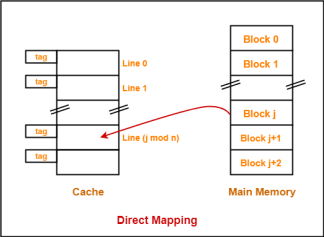

1. Direct Mapping-

In direct mapping,

- A particular block of main memory can map only to a particular line of the cache.

- The line number of cache to which a particular block can map is given by-

Cache line number = ( Main Memory Block Address ) Modulo (Number of lines in Cache)

Example-

- Consider cache memory is divided into ‘n’ number of lines.

- Then, block ‘j’ of main memory can map to line number (j mod n) only of the cache.

Need of Replacement Algorithm-

In direct mapping,

- There is no need of any replacement algorithm.

- This is because a main memory block can map only to a particular line of the cache.

- Thus, the new incoming block will always replace the existing block (if any) in that particular line.
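The direct mapping rule and its built-in replacement behaviour can be traced with a few lines of Python. This is a minimal sketch: the function `direct_mapped_trace`, the 4-line cache and the sequence of block addresses are illustrative assumptions.

```python
def direct_mapped_trace(block_addresses, num_lines):
    """Simulate a direct-mapped cache and report each placement.

    Returns a list of (block, line, evicted_block) tuples. evicted_block
    is None when the line was empty or already held the same block.
    """
    lines = [None] * num_lines
    trace = []
    for block in block_addresses:
        line = block % num_lines   # cache line number = j mod n
        current = lines[line]
        evicted = current if current is not None and current != block else None
        lines[line] = block        # the incoming block always takes this line
        trace.append((block, line, evicted))
    return trace


for block, line, evicted in direct_mapped_trace([0, 4, 8, 1, 4], num_lines=4):
    print(block, line, evicted)
# 0 0 None
# 4 0 0
# 8 0 4
# 1 1 None
# 4 0 8
```

Blocks 0, 4 and 8 all compete for line 0 and evict one another even though the other lines are empty, which is why no replacement algorithm is involved.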

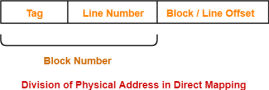

Division of Physical Address-

In direct mapping, the physical address is divided as-

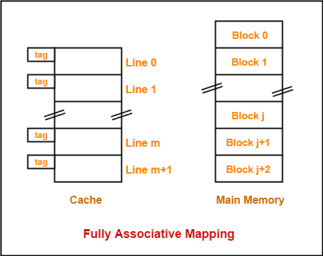

2. Fully Associative Mapping-

In fully associative mapping,

- A block of main memory can map to any line of the cache that is freely available at that moment.

- This makes fully associative mapping more flexible than direct mapping.

Example-

Consider the following scenario-

Here,

- All the lines of cache are freely available.

- Thus, any block of main memory can map to any line of the cache.

- Had all the cache lines been occupied, one of the existing blocks would have had to be replaced.

Need of Replacement Algorithm-

In fully associative mapping,

- A replacement algorithm is required.

- Replacement algorithm suggests the block to be replaced if all the cache lines are occupied.

- Thus, a replacement algorithm such as FCFS or LRU is employed.
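As an illustration, a fully associative cache with LRU replacement can be sketched in Python as follows. The class name `FullyAssociativeCache`, the 3-line capacity and the access sequence are hypothetical. LRU is used here only as one of the possible replacement algorithms.

```python
from collections import OrderedDict


class FullyAssociativeCache:
    """Fully associative cache: any block may occupy any line (LRU eviction)."""

    def __init__(self, num_lines: int) -> None:
        self.num_lines = num_lines
        self.blocks = OrderedDict()  # block -> None, least recently used first

    def access(self, block: int) -> bool:
        """Return True on a hit, False on a miss (the block is then loaded)."""
        if block in self.blocks:
            self.blocks.move_to_end(block)    # now the most recently used
            return True
        if len(self.blocks) >= self.num_lines:
            self.blocks.popitem(last=False)   # all lines occupied: evict LRU
        self.blocks[block] = None
        return False


cache = FullyAssociativeCache(num_lines=3)
results = [cache.access(b) for b in [0, 4, 8, 0, 12, 4]]

print(results)             # [False, False, False, True, False, False]
print(list(cache.blocks))  # [0, 12, 4]
```

Blocks 0, 4 and 8 coexist in the cache. Block 4 is evicted only when block 12 arrives and all three lines are occupied.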

Division of Physical Address-

In fully associative mapping, the physical address is divided as-

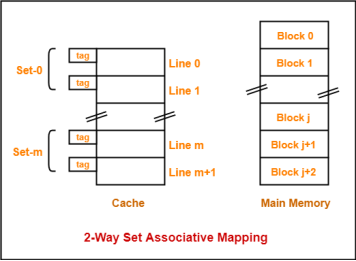

3. K-way Set Associative Mapping-

In k-way set associative mapping,

- Cache lines are grouped into sets where each set contains k number of lines.

- A particular block of main memory can map to only one particular set of the cache.

- However, within that set, the memory block can map to any cache line that is freely available.

- The set of the cache to which a particular block of the main memory can map is given by-

Cache set number = ( Main Memory Block Address ) Modulo (Number of sets in Cache)

Example-

Consider the following example of 2-way set associative mapping-

Here,

- k = 2 suggests that each set contains two cache lines.

- Since cache contains 6 lines, so number of sets in the cache = 6 / 2 = 3 sets.

- Block ‘j’ of main memory can map to set number (j mod 3) only of the cache.

- Within that set, block ‘j’ can map to any cache line that is freely available at that moment.

- If all the cache lines in that set are occupied, then one of the existing blocks in the set will have to be replaced.
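The 2-way example with 6 cache lines can be simulated with a short Python sketch. The class `SetAssociativeCache`, the LRU policy within each set and the access sequence are illustrative assumptions rather than part of the original example.

```python
class SetAssociativeCache:
    """k-way set associative cache with LRU replacement inside each set."""

    def __init__(self, num_lines: int, k: int) -> None:
        if num_lines % k != 0:
            raise ValueError("num_lines must be a multiple of k")
        self.k = k
        self.num_sets = num_lines // k
        # Each set is a list of blocks, least recently used first.
        self.sets = [[] for _ in range(self.num_sets)]

    def access(self, block: int):
        """Return (set_number, hit) and load the block on a miss."""
        s = block % self.num_sets        # set number = j mod (number of sets)
        ways = self.sets[s]
        if block in ways:
            ways.remove(block)
            ways.append(block)           # now the most recently used
            return s, True
        if len(ways) >= self.k:
            ways.pop(0)                  # set is full: evict its LRU block
        ways.append(block)
        return s, False


cache = SetAssociativeCache(num_lines=6, k=2)   # 6 / 2 = 3 sets
outcomes = [cache.access(b) for b in [0, 3, 0, 6, 1, 3]]

print(outcomes)
# [(0, False), (0, False), (0, True), (0, False), (1, False), (0, False)]
print(cache.sets)  # [[6, 3], [1], []]
```

Blocks 0, 3 and 6 all map to set 0 (j mod 3 = 0), so the third of them forces a replacement inside that set even though sets 1 and 2 still have free lines.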

Need of Replacement Algorithm-

- Set associative mapping is a combination of direct mapping and fully associative mapping.

- It uses fully associative mapping within each set.

- Thus, set associative mapping requires a replacement algorithm.

Division of Physical Address-

In set associative mapping, the physical address is divided as-

Special Cases-

If k = 1, then k-way set associative mapping becomes direct mapping i.e.

1-way Set Associative Mapping ≡ Direct Mapping

If k = Total number of lines in the cache, then k-way set associative mapping becomes fully associative mapping.
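The two special cases can be seen by splitting an address for different values of k. The sketch below assumes the conventional field layout of tag, set number and block offset, with the set field acting as the line number when k = 1 and disappearing when k equals the number of lines. The function name `split_address`, the 16-byte block size, the 8-line cache and the sample address are hypothetical.

```python
def split_address(address: int, block_size: int, num_lines: int, k: int):
    """Split a physical address into (tag, set_number, offset) for a k-way cache."""
    num_sets = num_lines // k
    offset = address % block_size     # position of the word inside its block
    block = address // block_size     # main memory block address
    set_number = block % num_sets     # block address modulo number of sets
    tag = block // num_sets           # remaining high-order part of the address
    return tag, set_number, offset


ADDRESS = 0x1234      # sample physical address
BLOCK_SIZE = 16       # bytes per block
NUM_LINES = 8         # lines in the cache

print(split_address(ADDRESS, BLOCK_SIZE, NUM_LINES, k=1))  # (36, 3, 4)    direct mapping
print(split_address(ADDRESS, BLOCK_SIZE, NUM_LINES, k=2))  # (72, 3, 4)    2-way set associative
print(split_address(ADDRESS, BLOCK_SIZE, NUM_LINES, k=8))  # (291, 0, 4)   fully associative
```

With k = 8 there is a single set, so the set number is always 0 and the whole block address serves as the tag, which is the fully associative case.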