Unit - 5

Matrices

Q1) What is Linear dependence of vectors?

A1)

Linear dependence-

A set of r vectors x1, x2, ..., xr, each with n components, is said to be linearly dependent if there exist scalars k1, k2, ..., kr, not all zero, such that

$$k_1 x_1 + k_2 x_2 + \dots + k_r x_r = 0 \qquad \dots (1)$$

Important notes-

- Equation (1) is known as a vector equation.

- If the vector equation has a non-zero solution, i.e. k1, k2, ..., kr not all zero, then the vectors x1, x2, ..., xr are said to be linearly dependent.

- If the vector equation has only the trivial solution, i.e. k1 = k2 = ... = kr = 0, then the vectors x1, x2, ..., xr are said to be linearly independent.

Linear combination-

If a vector x can be written in the form

$$x = k_1 x_1 + k_2 x_2 + \dots + k_r x_r,$$

where k1, k2, ..., kr are scalars, then x is called a linear combination of x1, x2, ..., xr.

Results:

- A set of two or more vectors is linearly dependent if and only if at least one of the vectors can be written as a linear combination of the other vectors.

- A set of two or more vectors is linearly independent if and only if no vector can be expressed as a linear combination of the other vectors.
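The definition can be checked mechanically: the vector equation (1) has a non-zero solution exactly when the matrix whose rows are x1, ..., xr has rank less than r. The sketch below row-reduces with exact fractions so that no rounding can hide a dependence; the helper names `rank` and `is_linearly_dependent` are our own, not from any library.

```python
from fractions import Fraction


def rank(vectors):
    """Rank of the matrix whose rows are the given vectors (exact arithmetic)."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0  # number of pivot rows found so far
    ncols = len(rows[0]) if rows else 0
    for c in range(ncols):
        # look for a row at or below r with a non-zero entry in column c
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # eliminate column c from every other row
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c] / rows[r][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r


def is_linearly_dependent(vectors):
    """x1, ..., xr are dependent iff k1*x1 + ... + kr*xr = 0 has a
    non-trivial solution, i.e. iff the rank is less than r."""
    return rank(vectors) < len(vectors)


print(is_linearly_dependent([(1, 2, 3), (2, 4, 6)]))               # True: x2 = 2*x1
print(is_linearly_dependent([(-1, 1, 0), (-2, 1, 1), (3, 3, 3)]))  # False
```

The second call uses the three Eigen vectors obtained in Q4, which turn out to be independent.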

Q2) What are the properties of eigen values and eigen vectors?

A2)

Properties of Eigen values:-

- The sum of the Eigen values of a matrix A is equal to the sum of the diagonal elements of A (the trace of A).

- The product of all the Eigen values of a matrix A is equal to the value of the determinant |A|.

- If λ1, λ2, λ3, ..., λn are the n Eigen values of a non-singular square matrix A, then 1/λ1, 1/λ2, 1/λ3, ..., 1/λn are the n Eigen values of A⁻¹.

- The Eigen values of a real symmetric matrix are all real.

- A⁻¹ exists if and only if all the Eigen values of A are non-zero.

- The Eigen values of A and its transpose A′ are the same.

Properties of Eigen vector:-

- Eigen vectors corresponding to distinct Eigen values are linearly independent.

- If two or more Eigen values are identical, then the corresponding Eigen vectors may or may not be linearly independent.

- The Eigen vectors corresponding to distinct Eigen values of a real symmetric matrix are orthogonal.
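As a quick numerical check of the trace, determinant, inverse and transpose properties, the sketch below computes the Eigen values of a 2×2 matrix from λ² − (trace)λ + |A| = 0. The matrix [[4, 1], [2, 3]] is a hypothetical example chosen for illustration, and the helper `eig2` handles only real Eigen values.

```python
import math


def eig2(a):
    """Real Eigen values of a 2x2 matrix, from lambda^2 - tr(A)*lambda + |A| = 0."""
    (p, q), (r, s) = a
    tr, det = p + s, p * s - q * r
    disc = tr * tr - 4 * det
    if disc < 0:
        raise ValueError("complex Eigen values; this sketch handles real ones only")
    root = math.sqrt(disc)
    return sorted([(tr - root) / 2, (tr + root) / 2])


def inverse2(a):
    """Inverse of a non-singular 2x2 matrix."""
    (p, q), (r, s) = a
    det = p * s - q * r
    return [[s / det, -q / det], [-r / det, p / det]]


def transpose2(a):
    return [[a[0][0], a[1][0]], [a[0][1], a[1][1]]]


A = [[4, 1], [2, 3]]                 # hypothetical example matrix
l1, l2 = eig2(A)
print(l1, l2)                        # 2.0 5.0
print(l1 + l2, A[0][0] + A[1][1])    # sum of Eigen values vs. trace
print(l1 * l2, A[0][0] * A[1][1] - A[0][1] * A[1][0])  # product vs. |A|
print(eig2(inverse2(A)))             # approximately [1/5, 1/2], the reciprocals
print(eig2(transpose2(A)))           # the same Eigen values as A
```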

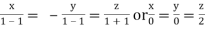

Q3) Find the sum and the product of the Eigen values of the given matrix.

A3)

The sum of Eigen values = the sum of the diagonal elements

λ1 + λ2 =1+(-1)=0

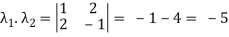

The product of the Eigen values is the determinant of the matrix:

λ1 λ2 = |A| = -5

On solving the above equations we get λ1 = √5 and λ2 = -√5.

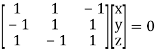

Q4) Find out the Eigen values and Eigen vectors of the given matrix.

A4)

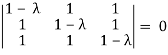

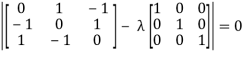

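The characteristic equation is given by |A - λI| = 0:

$$(1-\lambda)[1 - 2\lambda + \lambda^2 - 1] - 1[1 - \lambda - 1] + 1[1 - 1 + \lambda] = 0$$

$$(1-\lambda)[1 - 2\lambda + \lambda^2 - 1] - 1[-\lambda] + 1[\lambda] = 0$$

$$(1-\lambda)(\lambda^2 - 2\lambda) + \lambda + \lambda = 0$$

$$-2\lambda + \lambda^2 + 2\lambda^2 - \lambda^3 + 2\lambda = 0$$

$$-\lambda^3 + 3\lambda^2 = 0$$

$$-\lambda^2(\lambda - 3) = 0$$

Or λ = 0, 0, 3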

Hence the Eigen values are 0,0 and 3.
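For any 3×3 matrix the characteristic polynomial can be read off from three numbers: det(λI − A) = λ³ − tr(A)λ² + Mλ − |A|, where M is the sum of the three principal 2×2 minors. For n = 3, |A − λI| is just the negative of this polynomial, so the roots are the same. The sketch assumes, from the equations x + y + z = 0 and −2x + y + z = 0 used below, that the matrix of Q4 is the 3×3 matrix of all ones; `char_poly3` is an illustrative helper name.

```python
def char_poly3(a):
    """Coefficients [1, c2, c1, c0] of det(lambda*I - A) for a 3x3 matrix A:
    det(lambda*I - A) = lambda^3 - tr(A)*lambda^2 + M*lambda - |A|,
    where M is the sum of the three principal 2x2 minors of A."""
    tr = a[0][0] + a[1][1] + a[2][2]
    m = ((a[1][1] * a[2][2] - a[1][2] * a[2][1])
         + (a[0][0] * a[2][2] - a[0][2] * a[2][0])
         + (a[0][0] * a[1][1] - a[0][1] * a[1][0]))
    det = (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
           - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
           + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    return [1, -tr, m, -det]


ones = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]   # assumed matrix of Q4
print(char_poly3(ones))   # [1, -3, 0, 0], i.e. lambda^3 - 3*lambda^2 = 0
```

The same helper reproduces the characteristic equations of Q6 and Q7 when given the matrices that their cofactor expansions correspond to.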

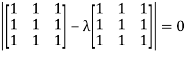

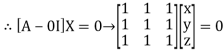

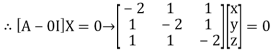

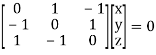

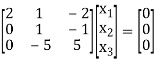

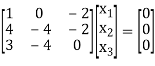

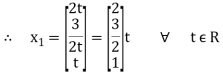

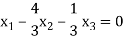

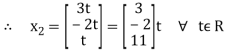

The Eigen vector corresponding to Eigen value λ = 0 is

[A - 0I]X =0

Where X is the column matrix of order 3 i.e.

This implies that x +y + z = 0

Here the number of unknowns is 3 and the number of independent equations is 1.

Hence we have (3 - 1) = 2 linearly independent solutions.

Let z = 0, y = 1; then x = -1. Or let z = 1, y = 1; then x = -2.

Thus the Eigen vectors corresponding to the Eigen value λ = 0 are (-1,1,0) and (-2,1,1).

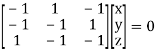

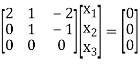

The Eigen vector corresponding to Eigen value λ = 3 is

[A - 3I]X = 0

Where X is the column matrix of order 3 i.e.

This implies that -2x +y + z = 0

x - 2y +z = 0

x + y - 2z = 0

Taking last two equations we get

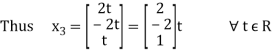

Thus the Eigen vector corresponding to the Eigen value λ = 3 is (3,3,3), or equivalently any non-zero multiple of (1,1,1).

Hence the three Eigen vectors obtained are (-1,1,0), (-2,1,1) and (3,3,3).
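To confirm the Eigen vectors, one can check AX = λX directly. The sketch again assumes that A is the 3×3 all-ones matrix (the matrix itself appears only in the figure); `matvec` and `is_eigenpair` are illustrative helpers.

```python
def matvec(a, x):
    """Product A x for a matrix given as a list of rows."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def is_eigenpair(a, lam, x):
    """True when x is non-zero and A x = lam * x."""
    return any(x) and matvec(a, x) == [lam * xi for xi in x]


A = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]   # assumed: the matrix of Q4
for lam, x in [(0, [-1, 1, 0]), (0, [-2, 1, 1]), (3, [3, 3, 3])]:
    print(lam, x, is_eigenpair(A, lam, x))   # each line ends with True
```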

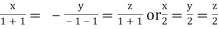

Q5) Find out the Eigen values and Eigen vectors of the given matrix.

A5)

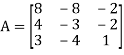

Let A =

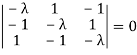

The characteristics equation of A is |A - λI| = 0.

Or - λ(λ2+1)+1(-λ-1)+1(1+λ)=0

Or - λ3 - λ - λ - 1+1+λ = 0

Or -λ(λ2-1) = 0

Or λ = 0, 1, -1

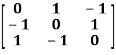

The Eigen vector corresponding to Eigen value λ = 0 is

[A - 0l]X = 0

Where X is the column matrix of order 3 i.e.

Or y - z = 0

-x + z =0

x - y = 0

On solving we get

Thus the Eigen vectors corresponding to the Eigen value λ = 0 is (1,1,1).

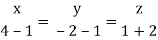

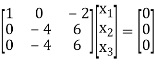

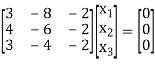

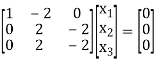

The Eigen vector corresponding to Eigen value λ = 1 is

[A - I]X = 0

Where X is the column matrix of order 3 i.e.

Or -x +y -z = 0

-x-y+z = 0

x - y - z = 0

On solving or .

Thus the Eigen vectors corresponding to the Eigen value λ = 1 is (0,0,2).

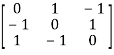

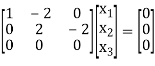

The Eigen vector corresponding to Eigen value λ = - 1 is

[A + I]X = 0

Where X is the column matrix of order 3 i.e.

Or x+y-z = 0

-x+y+z = 0

x - y+z = 0

On solving we get .

Thus the Eigen vectors corresponding to the Eigen value λ = -1 is (2,2,2).

Hence three Eigen vectors are (1,1,1), (0,0,2) and (2,2,2).
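The sketch below checks the characteristic equation of Q5 numerically: |A − λI| should vanish at 0 and ±i√3 but not at ±1. It assumes that A is the matrix whose rows can be read off from the equations y − z = 0, −x + z = 0, x − y = 0 above, since the matrix itself is only shown as an image; `det3` and `shifted` are illustrative helpers.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix by expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def shifted(a, lam):
    """The matrix A - lam*I."""
    return [[a[i][j] - (lam if i == j else 0) for j in range(3)] for i in range(3)]


# Assumed: A as read off from the equations [A - 0I]X = 0 in Q5
A = [[0, 1, -1], [-1, 0, 1], [1, -1, 0]]
for lam in (0, 1, -1, 1j * math.sqrt(3), -1j * math.sqrt(3)):
    # |A - lam I| is zero (up to rounding) exactly at the Eigen values
    print(lam, det3(shifted(A, lam)))
```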

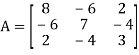

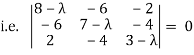

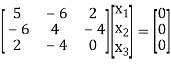

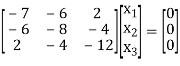

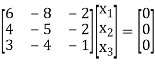

Q6) Determine the Eigen values and Eigen vectors of the matrix.

A6)

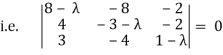

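Consider the characteristic equation |A - λI| = 0, i.e.

$$(8-\lambda)(21 - 10\lambda + \lambda^2 - 16) + 6(-18 + 6\lambda + 8) + 2(24 - 14 + 2\lambda) = 0$$

$$\Rightarrow (8-\lambda)(\lambda^2 - 10\lambda + 5) + 6(6\lambda - 10) + 2(2\lambda + 10) = 0$$

$$\Rightarrow 8\lambda^2 - 80\lambda + 40 - \lambda^3 + 10\lambda^2 - 5\lambda + 36\lambda - 60 + 4\lambda + 20 = 0$$

$$\Rightarrow -\lambda^3 + 18\lambda^2 - 45\lambda = 0$$

$$\text{i.e.} \quad \lambda^3 - 18\lambda^2 + 45\lambda = 0$$

Which is the required characteristic equation.

$$\therefore \lambda(\lambda^2 - 18\lambda + 45) = 0$$

$$\lambda(\lambda - 15)(\lambda - 3) = 0$$

∴ λ = 0, 15, 3 are the required Eigen values.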

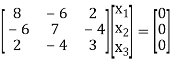

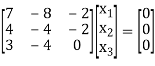

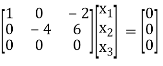

Now consider the equation

[A - λI]X = 0 ... (1)

Case I:

If λ = 0, equation (1) becomes

R1 + R2

R2 + 3R1, R3 - R1

R3 + 5R2

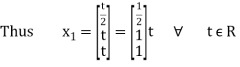

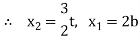

Thus ρ[A - λI] = 2 & n = 3

3 - 2 = 1 independent variable.

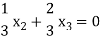

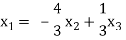

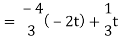

Now rewrite equation as,

2x1 + x2 - 2x3 = 0

x2 - x3 = 0

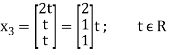

Put x3 = t

∴ x2 = t &

2x1 = - x2 + 2x3

2x1 = -t + 2t

∴ x1 = t/2

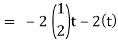

Hence X = (t/2, t, t), i.e. any non-zero multiple of (1, 2, 2), is the eigen vector corresponding to λ = 0.

Case II:

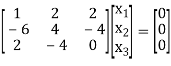

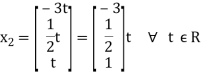

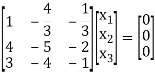

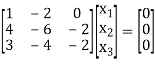

If λ = 3 equation (1) becomes,

R2 + 6R1, R3 - 2R1

Here ρ[A - λI] = 2, n = 3

∴ 3 - 2 = 1 independent variable.

Now rewrite the equations as,

x1 + 2x2 + 2x3 = 0

8x2 + 4x3 = 0

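Put x3 = t

∴ 8x2 = -4t, i.e. x2 = -t/2

x1 = -2x2 - 2x3 = t - 2t

= -t

Hence X = (-t, -t/2, t), i.e. any non-zero multiple of (2, 1, -2), is the eigen vector corresponding to λ = 3.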

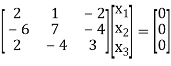

Case III:

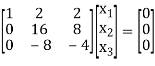

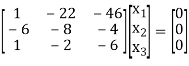

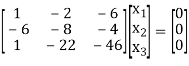

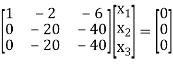

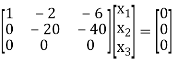

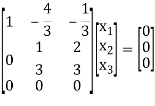

If λ = 15 equation (1) becomes,

R1 + 4R3

R1 ↔ R3

R2 + 6R1, R3 - R1

R3 - R2

Here rank of [A - λI] = 2 & n = 3

∴ 3 - 2 = 1 independent variable.

Now rewrite the equations as,

x1 - 2x2 - 6x3 = 0

-20 x2- 40x3 = 0

Put x3 = t

∴ x2 = -2t & x1 = 2t

Hence X = (2t, -2t, t), i.e. any non-zero multiple of (2, -2, 1), is the eigen vector for λ = 15.
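A sketch verifying the three Eigen pairs of Q6, together with the property from Q2 that Eigen vectors of a real symmetric matrix for distinct Eigen values are orthogonal. The matrix [[8, −6, 2], [−6, 7, −4], [2, −4, 3]] is an assumption: it is the matrix whose cofactor expansion gives the characteristic equation worked out above.

```python
def matvec(a, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))


# Assumed matrix, reconstructed from the cofactor expansion of |A - lambda I| in Q6
A = [[8, -6, 2], [-6, 7, -4], [2, -4, 3]]
pairs = {0: [1, 2, 2], 3: [2, 1, -2], 15: [2, -2, 1]}

for lam, x in pairs.items():
    assert matvec(A, x) == [lam * xi for xi in x], lam   # A x = lambda x

# A is real symmetric, so Eigen vectors for distinct Eigen values are orthogonal
vecs = list(pairs.values())
print([dot(vecs[i], vecs[j]) for i in range(3) for j in range(i + 1, 3)])  # [0, 0, 0]
```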

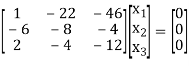

Q7) Find the Eigen values and Eigen vectors of the matrix.

A7)

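Consider the characteristic equation |A - λI| = 0:

$$\therefore (8-\lambda)[-3 + 3\lambda - \lambda + \lambda^2 - 8] + 8[4 - 4\lambda + 6] - 2[-16 + 9 + 3\lambda] = 0$$

$$(8-\lambda)[\lambda^2 + 2\lambda - 11] + 8[10 - 4\lambda] - 2[3\lambda - 7] = 0$$

$$8\lambda^2 + 16\lambda - 88 - \lambda^3 - 2\lambda^2 + 11\lambda + 80 - 32\lambda - 6\lambda + 14 = 0$$

$$\text{i.e.} \quad -\lambda^3 + 6\lambda^2 - 11\lambda + 6 = 0$$

$$\therefore \lambda^3 - 6\lambda^2 + 11\lambda - 6 = 0$$

$$(\lambda - 1)(\lambda - 2)(\lambda - 3) = 0$$

∴ λ = 1, 2, 3 are the required Eigen values.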

Now consider the equation

[A - λI]X = 0 ... (1)

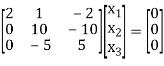

Case I:

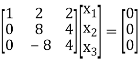

If λ = 1, equation (1) becomes,

R1 - 2R3

R2 - 4R1, R3 - 3R1

R3 - R2

Thus ρ[A - λI] = 2 and n = 3

∴ 3 - 2 = 1 independent variable.

Now rewrite the equations as,

x1 - 2x3 = 0

-4x2 + 6x3 = 0

Put x3 = t

∴ x1 = 2t and x2 = 3t/2

I.e. X = (2t, 3t/2, t), or any non-zero multiple of (4, 3, 2), is the Eigen vector for λ = 1.

Case II:

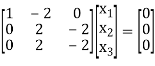

If λ = 2 equation (1) becomes,

R2 - 4R1, R3 - 3R1

Thus ρ(A - λI) = 2 & n = 3

∴ 3 - 2 = 1 independent variable.

Now rewrite the equations as,

Put x3 = t

∴ x2 = 2t

and x1 = 3t

Hence X = (3t, 2t, t), i.e. any non-zero multiple of (3, 2, 1), is the Eigen vector for λ = 2.

Case III:

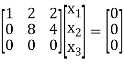

If λ = 3 equation (1) gives,

R1 - R2

R2 - 4R1, R3 - 3 R1


R3 - R2

Thus ρ[A - λI] = 2 & n = 3

∴ 3 - 2 = 1 independent variable.

Now x1 - 2x2 = 0

2x2 - 2x3 = 0

Put x3 = t; then x2 = t and x1 = 2t.

Thus X = (2t, t, t), i.e. any non-zero multiple of (2, 1, 1), is the Eigen vector for λ = 3.
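A sketch checking Q7 in matrix form: with the three Eigen vectors as the columns of P, the statements AXᵢ = λᵢXᵢ are together the same as AP = PD with D = diag(1, 2, 3), and |P| ≠ 0 confirms that the Eigen vectors are linearly independent. The matrix A is an assumption, reconstructed from the cofactor expansion of |A − λI| above.

```python
def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


# Assumed matrix, reconstructed from the cofactor expansion of |A - lambda I| in Q7
A = [[8, -8, -2], [4, -3, -2], [3, -4, 1]]
eigenvectors = [[4, 3, 2], [3, 2, 1], [2, 1, 1]]   # for lambda = 1, 2, 3
P = [list(col) for col in zip(*eigenvectors)]      # Eigen vectors as columns
D = [[1, 0, 0], [0, 2, 0], [0, 0, 3]]

print(matmul(A, P) == matmul(P, D))   # True: A X_i = lambda_i X_i for each column
print(det3(P))                        # -1, non-zero, so the Eigen vectors are independent
```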

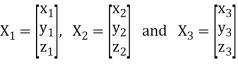

Q8) Describe Reduction to diagonal form.

A8)

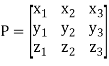

Let A be a square matrix of order n that has n linearly independent Eigen vectors, and let P be the matrix whose columns are these Eigen vectors. Then

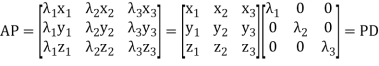

$$P^{-1}AP = D \quad \text{(a diagonal matrix)}$$

Where P is called the modal matrix and D is known as the spectral matrix.

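Procedure: let A be a square matrix of order 3.

Let X1, X2, X3 be three Eigen vectors of A corresponding to the Eigen values λ1, λ2 and λ3.

Let P = [X1, X2, X3]. Then

$$AP = A[X_1, X_2, X_3] = [AX_1, AX_2, AX_3] = [\lambda_1 X_1, \lambda_2 X_2, \lambda_3 X_3]$$

since AXi = λiXi for each Eigen vector. The right-hand side can be written as

$$[X_1, X_2, X_3]\begin{bmatrix} \lambda_1 & 0 & 0 \\ 0 & \lambda_2 & 0 \\ 0 & 0 & \lambda_3 \end{bmatrix} = PD$$

Or AP = PD

Or P⁻¹AP = D, since P is invertible when X1, X2, X3 are linearly independent.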

Note: The method of diagonalization is helpful in calculating powers of a matrix.

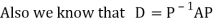

If D = P⁻¹AP, then for a positive integer n we have

$$D^n = P^{-1}A^nP, \quad \text{and hence} \quad A^n = PD^nP^{-1}.$$
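The power formula can be sketched with exact fractions. The example assumes the Eigen pairs of Q10 ((4, 1) for λ = 6 and (1, −1) for λ = 1); with n = 1 the same formula rebuilds A = PDP⁻¹, which gives [[5, 4], [1, 2]]. The helper names are illustrative only.

```python
from fractions import Fraction


def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def inverse2(m):
    """Inverse of a 2x2 matrix with exact Fraction entries."""
    (a, b), (c, d) = m
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


def power_by_diagonalisation(p, eigenvalues, n):
    """A^n = P D^n P^(-1), where P holds the Eigen vectors as columns and
    D = diag(eigenvalues); only the diagonal entries of D are raised to n."""
    k = len(eigenvalues)
    dn = [[Fraction(eigenvalues[i]) ** n if i == j else Fraction(0) for j in range(k)]
          for i in range(k)]
    return matmul(matmul(p, dn), inverse2(p))


P = [[4, 1], [1, -1]]   # columns: the Eigen vectors (4, 1) and (1, -1) of Q10
eigs = [6, 1]
A = power_by_diagonalisation(P, eigs, 1)    # n = 1 rebuilds A itself
A3 = power_by_diagonalisation(P, eigs, 3)
print(A)    # entries equal [[5, 4], [1, 2]] (as Fraction objects)
print(A3)   # entries equal [[173, 172], [43, 44]]
```

Only two scalar powers (6³ and 1³) are needed here, whereas the direct product A·A·A needs two full matrix multiplications; the test compares the two.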

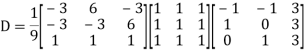

Q9) Diagonalise the matrix

A9)

Let A=

The three Eigen vectors obtained are (-1,1,0), (-1,0,1) and (3,3,3), corresponding to the Eigen values λ = 0, 0, 3.

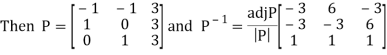

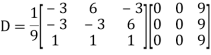

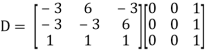

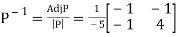

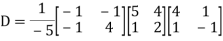

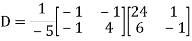

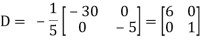
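A sketch verifying P⁻¹AP = D for Q9 with exact arithmetic. It assumes A is the 3×3 all-ones matrix, which is the only matrix with these three Eigen vectors and the Eigen values 0, 0, 3, and it uses a small Gauss-Jordan `inverse` helper of our own.

```python
from fractions import Fraction


def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def inverse(m):
    """Gauss-Jordan inverse with exact Fraction arithmetic."""
    n = len(m)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(m)]
    for c in range(n):
        piv = next((r for r in range(c, n) if aug[r][c] != 0), None)
        if piv is None:
            raise ValueError("matrix is singular")
        aug[c], aug[piv] = aug[piv], aug[c]
        pv = aug[c][c]
        aug[c] = [v / pv for v in aug[c]]          # scale the pivot row so the pivot is 1
        for r in range(n):
            if r != c and aug[r][c] != 0:          # clear column c in every other row
                f = aug[r][c]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]


A = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]   # assumed: the matrix of Q9 (same as Q4)
# columns of P: the Eigen vectors (-1,1,0), (-1,0,1), (3,3,3)
P = [[-1, -1, 3], [1, 0, 3], [0, 1, 3]]
D = matmul(matmul(inverse(P), A), P)
print(D == [[0, 0, 0], [0, 0, 0], [0, 0, 3]])   # True
```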

Q10) Diagonalise the given matrix.

A10)

Let A =

The Eigen vectors are (4,1),(1,-1) corresponding to Eigen values λ = 6 and 1.

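Then

$$P = \begin{bmatrix} 4 & 1 \\ 1 & -1 \end{bmatrix} \quad \text{and also} \quad P^{-1} = \frac{1}{5}\begin{bmatrix} 1 & 1 \\ 1 & -4 \end{bmatrix}$$

Also we know that

$$D = P^{-1}AP = \begin{bmatrix} 6 & 0 \\ 0 & 1 \end{bmatrix}$$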

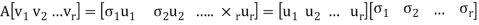

Q11) State Singular value decomposition.

A11)

Singular value decomposition:

The singular value decomposition of a matrix is usually referred to as the SVD. This is the final and best factorization of a matrix:

$$A = U\Sigma V^T$$

where U is orthogonal, Σ is diagonal, and V is orthogonal.

In the decomposition A = UΣVᵀ, A can be any matrix. We know that if A is symmetric positive definite, its eigenvectors are orthogonal and we can write A = QΛQᵀ. This is a special case of an SVD, with U = V = Q. For more general A, the SVD requires two different matrices U and V. We have also learned how to write A = SΛS⁻¹, where S is the matrix of n linearly independent eigenvectors of A. However, S may not be orthogonal; the matrices U and V in the SVD will be orthogonal.

Working of SVD:

We can think of A as a linear transformation taking a vector v1 in its row space to a vector u1 = Av1 in its column space. The SVD arises from finding an orthogonal basis for the row space that gets transformed into an orthogonal basis for the column space: Avi = σiui. It's not hard to find an orthogonal basis for the row space; the Gram-Schmidt process gives us one right away. But in general, there's no reason to expect A to transform that basis to another orthogonal basis. You may be wondering about the vectors in the null spaces of A and Aᵀ. These are no problem: zeros on the diagonal of Σ will take care of them.

Matrix form:

The heart of the problem is to find an orthonormal basis v1, v2, ..., vr for the row space of A for which

$$A\begin{bmatrix} v_1 & v_2 & \cdots & v_r \end{bmatrix} = \begin{bmatrix} u_1 & u_2 & \cdots & u_r \end{bmatrix}\begin{bmatrix} \sigma_1 & & \\ & \ddots & \\ & & \sigma_r \end{bmatrix}$$

with u1, u2, ..., ur an orthonormal basis for the column space of A. Once we add in the null spaces, this equation will become AV = UΣ, and since V is orthogonal this gives A = UΣVᵀ. (We can complete the orthonormal bases v1, ..., vr and u1, ..., ur to orthonormal bases for the entire spaces any way we want. Since vr+1, ..., vn will be in the null space of A, the diagonal entries σr+1, ..., σn will be 0.) The columns of V and U are bases for the row and column spaces, respectively. Usually U is not equal to V, but if A is positive definite we can use the same basis for its row and column space.
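For a 2×2 matrix the construction described above can be carried out directly: take orthonormal Eigen vectors v1, v2 of AᵀA, set σᵢ = √(Eigen value), and define uᵢ = Avᵢ/σᵢ so that Avᵢ = σᵢuᵢ. The sketch below does exactly this; it assumes A is real, 2×2 and of rank 2, and the example [[3, 0], [4, 5]] is only an illustration. In practice one would call a library routine such as NumPy's `numpy.linalg.svd` instead.

```python
import math


def svd2(a):
    """SVD of a real 2x2 matrix A of rank 2.

    Returns U, sigmas, V with A = U diag(sigmas) V^T. The columns v_i of V are
    orthonormal Eigen vectors of A^T A, sigma_i = sqrt(lambda_i), and
    u_i = A v_i / sigma_i, so that A v_i = sigma_i u_i."""
    (p, q), (r, s) = a
    m11, m12, m22 = p * p + r * r, p * q + r * s, q * q + s * s  # entries of A^T A
    half_tr = (m11 + m22) / 2
    root = math.sqrt(max(half_tr ** 2 - (m11 * m22 - m12 * m12), 0.0))
    lams = [half_tr + root, half_tr - root]  # Eigen values of A^T A, largest first
    if abs(m12) > 1e-12:
        # first row of (A^T A - lam I) v = 0 gives v proportional to (m12, lam - m11)
        raw = [(m12, lam - m11) for lam in lams]
        vs = [(x / math.hypot(x, y), y / math.hypot(x, y)) for x, y in raw]
    else:
        # A^T A is already diagonal: the standard basis vectors are Eigen vectors
        vs = [(1.0, 0.0), (0.0, 1.0)] if m11 >= m22 else [(0.0, 1.0), (1.0, 0.0)]
    sigmas = [math.sqrt(max(lam, 0.0)) for lam in lams]
    if sigmas[1] < 1e-12:
        raise ValueError("this sketch assumes A has rank 2")
    us = [((p * x + q * y) / sg, (r * x + s * y) / sg) for sg, (x, y) in zip(sigmas, vs)]
    U = [[us[0][0], us[1][0]], [us[0][1], us[1][1]]]  # u_i as columns
    V = [[vs[0][0], vs[1][0]], [vs[0][1], vs[1][1]]]  # v_i as columns
    return U, sigmas, V


def reconstruct(U, sigmas, V):
    """Multiply back U diag(sigmas) V^T."""
    return [[sum(U[i][k] * sigmas[k] * V[j][k] for k in range(2)) for j in range(2)]
            for i in range(2)]


U, S, V = svd2([[3, 0], [4, 5]])
print(S)                     # approximately [6.708, 2.236], i.e. sqrt(45) and sqrt(5)
print(reconstruct(U, S, V))  # approximately [[3, 0], [4, 5]]
```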

Q12) State and prove Sylvester’s theorem.

A12)

Sylvester's theorem: Let A be the matrix of a symmetric bilinear form on a finite-dimensional real vector space V with respect to an orthogonal basis, chosen so that every diagonal entry of A is 1, -1 or 0. Then the numbers of 1's, -1's and 0's on the diagonal are independent of the choice of orthogonal basis.

Proof:

Let {e1, ..., en} be an orthogonal basis of V such that the diagonal matrix A representing the form has only 1, -1 and 0 on the diagonal. By permuting the basis we can furthermore assume that the first p basis vectors satisfy ⟨ei, ei⟩ = 1, the next m vectors give -1, and the final s = n − (p + m) vectors produce the zeros. It follows that s = n − (p + m) is the dimension of the null space of A, that is, of the space of solutions of AX = 0. This space corresponds to the vectors v with ⟨v, w⟩ = 0 for all w ∈ V, so its dimension does not depend on the basis. Therefore the number s = n − (p + m) of zeros on the diagonal is unique. Assume that we have found another orthogonal basis {f1, ..., fn} of V such that the diagonal matrix representing the form has its first p′ diagonal elements equal to 1, the next m′ diagonal elements equal to -1, and the remaining s = n − (p′ + m′) diagonal elements equal to 0. We then claim that the p + m′ vectors e1, ..., ep, fp′+1, ..., fp′+m′ are linearly independent. Assume the converse, that there is a linear relation between these vectors.

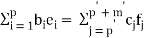

Such a relation we can split as

$$b_1 e_1 + \dots + b_p e_p = c_{p'+1} f_{p'+1} + \dots + c_{p'+m'} f_{p'+m'} \qquad (1)$$

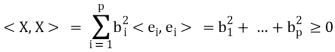

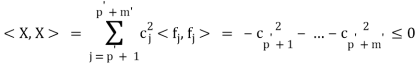

The vector occurring on the left-hand side we call X. We have that

$$\langle X, X \rangle = b_1^2 + \dots + b_p^2 \ge 0$$

The vector X also occurs on the right-hand side of (1), and gives that

$$\langle X, X \rangle = -\left(c_{p'+1}^2 + \dots + c_{p'+m'}^2\right) \le 0$$

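These two expressions for the number ⟨X, X⟩ imply that b1 = ··· = bp = 0 and that cp′+1 = ··· = cp′+m′ = 0. In other words, we have proven that the p + m′ vectors e1, ..., ep, fp′+1, ..., fp′+m′ are linearly independent.

The same argument goes through if we also include the s vectors fp′+m′+1, ..., fn: each of them satisfies ⟨fj, v⟩ = 0 for every v ∈ V, so they do not change the value of ⟨X, X⟩, and once the other coefficients vanish their own coefficients must vanish too, because the f's form a basis. Hence the p + m′ + s vectors e1, ..., ep, fp′+1, ..., fn are linearly independent. Their total number cannot exceed n = p′ + m′ + s, which gives p ≤ p′. However, the argument presented is symmetric, and we can interchange the roles of p′ and p, from which it follows that p = p′. Thus the number of 1's is unique. Since s is also unique, the number of -1's, m = n − p − s, is unique as well.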

The pair (p, m), where p is the number of 1's and m is the number of -1's, is called the signature of the form. To a given signature (p, m) we have the diagonal (n × n)-matrix $I_{p,m}$ whose first p diagonal elements are 1, next m diagonal elements are -1, and the remaining (n − p − m) diagonal elements are 0.
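By Sylvester's theorem the counts p, m and s can be read off from any diagonal form obtained by a change of basis, for example from symmetric Gaussian elimination, where each row operation is matched by the same column operation; scaling each basis vector would then turn every non-zero pivot into 1 or -1. The sketch below counts the signs of the pivots this produces. It assumes a real symmetric input matrix, and `inertia` is an illustrative name. For the matrix assumed in Q6 (Eigen values 0, 3, 15) it should return (2, 0, 1).

```python
from fractions import Fraction


def inertia(sym):
    """Return (p, m, s) for a real symmetric matrix: the numbers of positive,
    negative and zero pivots produced by symmetric Gaussian elimination,
    i.e. by a change of basis that brings the form to diagonal form."""
    a = [[Fraction(v) for v in row] for row in sym]
    n = len(a)
    pivots = []
    for k in range(n):
        if a[k][k] == 0:
            j = next((j for j in range(k + 1, n) if a[j][j] != 0), None)
            if j is not None:
                # swap basis vectors k and j: rows and columns together
                a[k], a[j] = a[j], a[k]
                for row in a:
                    row[k], row[j] = row[j], row[k]
            else:
                j = next((j for j in range(k + 1, n) if a[k][j] != 0), None)
                if j is not None:
                    # replace basis vector k by (vector k + vector j):
                    # add row j to row k, then column j to column k,
                    # which makes the new a[k][k] equal to 2 * a[k][j] != 0
                    a[k] = [x + y for x, y in zip(a[k], a[j])]
                    for row in a:
                        row[k] += row[j]
        pivot = a[k][k]
        pivots.append(pivot)
        if pivot != 0:
            # clear column k below the pivot; the remaining block stays symmetric
            for r in range(k + 1, n):
                f = a[r][k] / pivot
                a[r] = [x - f * y for x, y in zip(a[r], a[k])]
    p = sum(1 for v in pivots if v > 0)
    m = sum(1 for v in pivots if v < 0)
    return p, m, n - p - m


print(inertia([[8, -6, 2], [-6, 7, -4], [2, -4, 3]]))   # (2, 0, 1)
print(inertia([[0, 1], [1, 0]]))                         # (1, 1, 0)
```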

Q13) State Rayleigh's power method.

A13)

Rayleigh's power method-

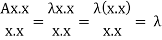

Suppose x is an eigen vector of A. Then the eigen value λ corresponding to this eigen vector is given by

$$\lambda = \frac{x^T A x}{x^T x}$$

This quotient is called Rayleigh’s quotient.

Proof:

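Here x is an eigen vector of A.

As we know, Ax = λx.

We can write

$$x^T A x = x^T(\lambda x) = \lambda\, x^T x \quad \Rightarrow \quad \lambda = \frac{x^T A x}{x^T x},$$

since xᵀx ≠ 0 for an eigen vector x (which is non-zero).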
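The Rayleigh quotient is usually combined with power iteration: repeatedly multiply a starting vector by A, rescale it, and evaluate xᵀAx / xᵀx. A minimal sketch, assuming A has a single dominant Eigen value and the starting vector has a component along its Eigen vector; the test matrix is the one assumed for Q6, whose largest Eigen value is 15, and `power_method` is an illustrative name.

```python
def power_method(a, x0, iterations=50):
    """Power iteration with the Rayleigh quotient as the Eigen value estimate."""
    x = [float(v) for v in x0]
    lam = 0.0
    for _ in range(iterations):
        y = [sum(aij * xj for aij, xj in zip(row, x)) for row in a]           # y = A x
        lam = sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)  # x^T A x / x^T x
        big = max(abs(v) for v in y)
        x = [v / big for v in y]                                               # rescale
    return lam, x


A = [[8, -6, 2], [-6, 7, -4], [2, -4, 3]]   # assumed: the matrix of Q6
lam, x = power_method(A, [1, 1, 1])
print(round(lam, 6), [round(v, 6) for v in x])   # 15.0 [1.0, -1.0, 0.5]
```

The final vector is a multiple of (2, -2, 1), the Eigen vector found for λ = 15 in Q6. Convergence is fast here because the ratio of the two largest Eigen values, 3/15, is small.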

Q14) Solve the following system of linear equations by using the Gauss-Seidel method-

6x + y + z = 105

4x + 8y + 3z = 155

5x + 4y - 10z = 65

A14)

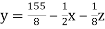

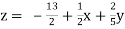

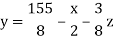

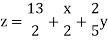

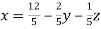

The above equations can be written as,

$$x = \frac{1}{6}(105 - y - z) \qquad \dots (1)$$

$$y = \frac{1}{8}(155 - 4x - 3z) \qquad \dots (2)$$

$$z = \frac{1}{10}(5x + 4y - 65) \qquad \dots (3)$$

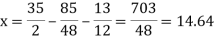

Now put y = z = 0 in eq. (1).

We get

x = 35/2

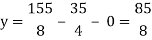

Put x = 35/2 and z = 0 in eq. (2)

We have y = 85/8.

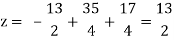

Put the values of x and y in eq. (3); we get z = 13/2.

Again start from eq. (1) by putting the values y = 85/8 and z = 13/2; we get x = 703/48 ≈ 14.646.

The process can be shown in table form as below:

| Iteration | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| x | 17.5 | 14.64 | 15.12 | 14.98 |
| y | 10.625 | 9.62 | 10.06 | 9.98 |
| z | 6.5 | 4.67 | 5.084 | 4.98 |

At the fourth iteration, we get x = 14.98, y = 9.98, z = 4.98,

which are approximately equal to the actual values

x = 15, y = 10 and z = 5.
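The iteration above can be sketched as a short Python function. It follows the same scheme: solve equation i for the i-th unknown, start from zero, and reuse each new value immediately in the same sweep. `gauss_seidel` is an illustrative name, not a library function.

```python
def gauss_seidel(a, b, iterations):
    """Gauss-Seidel iteration starting from the zero vector.

    Equation i is solved for unknown i, and each new value is used
    immediately in the following equations of the same sweep.
    Returns the list of iterates, one per sweep."""
    n = len(b)
    x = [0.0] * n
    history = []
    for _ in range(iterations):
        for i in range(n):
            s = sum(a[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (b[i] - s) / a[i][i]
        history.append(list(x))
    return history


A = [[6, 1, 1], [4, 8, 3], [5, 4, -10]]   # system of Q14
b = [105, 155, 65]
for k, (x, y, z) in enumerate(gauss_seidel(A, b, 4), start=1):
    print(k, round(x, 3), round(y, 3), round(z, 3))   # first line: 1 17.5 10.625 6.5
```

Called with the system of Q15, `gauss_seidel([[5, 2, 1], [1, 4, 2], [1, 2, 5]], [12, 15, 20], 5)` should reproduce the iterates of that example to about four decimal places. Both systems are strictly diagonally dominant (in each row the diagonal coefficient exceeds the sum of the other coefficients in absolute value), which is a standard sufficient condition for Gauss-Seidel to converge.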

Q15) Solve the following system of linear equations by using the Gauss-Seidel method-

5x + 2y + z = 12

x + 4y + 2z = 15

x + 2y + 5z = 20

A15)

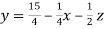

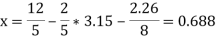

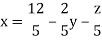

These equations can be written as,

$$x = \frac{1}{5}(12 - 2y - z) \qquad \dots (1)$$

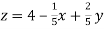

$$y = \frac{1}{4}(15 - x - 2z) \qquad \dots (2)$$

$$z = \frac{1}{5}(20 - x - 2y) \qquad \dots (3)$$

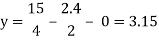

Put y = z = 0 in eq. (1).

We get,

x = 2.4

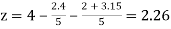

Put x = 2.4 and z = 0 in eq. (2); we get y = 3.15.

Put x = 2.4 and y = 3.15 in eq. (3); we get z = 2.26.

Again start from eq. (1) and put y = 3.15 and z = 2.26; we get x = 0.688.

We repeat the process again and again.

The following table can be obtained –

| Iteration | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| x | 2.4 | 0.688 | 0.84416 | 0.962612 | 0.99426864 |
| y | 3.15 | 2.448 | 2.09736 | 2.013237 | 2.00034144 |
| z | 2.26 | 2.8832 | 2.99222 | 3.0021828 | 3.001009696 |

After five iterations the values are approximately equal to the exact values.

Exact values are, x = 1, y = 2, z = 3.