Unit - 4

Process Cooperation and Synchronization

Q1) Describe concurrency?

A1)

Concurrency is the execution of multiple instruction sequences at a similar time. It happens within the software once there area unit many method threads running in parallel. The running method threads perpetually communicate with one another through shared memory or message passing. Concurrency leads to the sharing of resources result in issues like deadlocks and resource starvation.

It helps in techniques like coordinating the execution of processes, memory allocation, and execution planning for increasing output.

Principles of Concurrency:

Both interleaved and overlapped processes may be viewed as samples of synchronal processes, they each gift similar issues.

The relative speed of execution can't be expected. It depends on the following:

- The activities of different processes

- The means software handles interrupts

- The planning policies of the software

Problems in Concurrency:

- Sharing world resources –

Sharing of worldwide resources safely is troublesome. If 2 processes each building use of a worldwide variable and each performs browse and write that variable, then the order in that numerous browse and write area unit dead is essential.

- Optimal allocation of resources –

It is troublesome for the software to manage the allocation of resources optimally.

- Locating programming errors –

It is troublesome to find a software error as a result of reports area unit sometimes not consistent.

- Locking the channel –

It may be inefficient for the software to easily lock the channel and prevents its use by different processes.

Advantages of Concurrency

- Running of multiple applications –

It modifies to run multiple applications at a similar time.

- Better resource utilization –

It allows that the resources that area unit unused by one application may be used for different applications.

- Better average reaction time –

Without concurrency, every application must be run to completion before succeeding one may be run.

- Better performance –

It allows the higher performance by the software. Once one application uses solely the processor and another application uses solely the disc drive then the time to run each application at the same time to completion is shorter than the time to run every application consecutively.

Drawbacks of Concurrency:

- It is needed to safeguard multiple applications from each other.

- It is needed to coordinate multiple applications through extra mechanisms.

- Additional performance overheads and complexities in operational systems area unit needed for switch among applications.

- Sometimes running too several applications at the same time results in severely degraded performance.

Issues of Concurrency:

- Non-atomic –

Operations that area unit non-atomic however interruptible by multiple processes will cause issues.

- Race conditions –

A race condition happens if the result depends on that of many processes gets to some extent initial.

- Blocking –

Processes will block watching for resources. A method can be blocked for a long amount of your time watching for input from a terminal. If the method is needed to periodically update some knowledge, this can be undesirable.

- Starvation –

It happens once a method doesn't get service to progress.

- Deadlock –

It happens once 2 processes area units are blocked and thus neither will proceed to execute.

Q2) What is the critical section problem?

A2) The critical Section is the part of a program that tries to access shared resources. That resource is also any resource in a very pc sort of a memory location, organization, computer hardware, or any IO device.

The vital section can not be dead by quite one method at an identical time; software faces difficulties in permitting and disallowing the processes from coming into the vital section.

The vital section downside is employed to style a group of protocols which might make sure that the Race condition among the processes can ne'er arise.

To synchronize the cooperative processes, our main task is to resolve the vital section downside. We want to supply an answer in such a way that the subsequent conditions are glad.

Requirements of Synchronization mechanisms

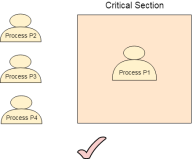

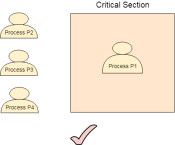

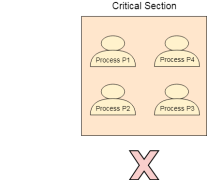

Fig 2 - Synchronization mechanisms

- Progress

Progress means that if one process doesn't need to execute into a critical section then it should not stop other processes to get into the critical section.

2. Bounded Waiting

We should be able to predict the waiting time for every process to get into the critical section. The process must not be endlessly waiting for getting into the critical section.

3. Architectural Neutrality

Our mechanism must be architectural natural. It means that if our solution is working fine on one architecture then it should also run on the other ones as well.

Q3) Define semaphore?

A3) Semaphores are integer variables that are utilized to provide solution to the critical section problem.

A semaphore is represented by S and apart from initialization it is utilize by two atomic operations, wait( ) and signal( ) for process synchronization.

Wait( ): The wait operation decreases the value of S, in case it is positive. On the other hand in case S is negative or zero, at that point no activity is performed.

Wait(S)

{

While (S<=0);

S- - ;

}

Signal( ): The signal( ) operation increases the value of its semaphore S.

Signal(S)

{

S++;

}

All changes to the integer value of the semaphore in the wait () and signal() function must be executed individually. That is, the point at which one process adjusts the semaphore value, no other process can at the same time change that equivalent semaphore value.

Furthermore, on account of wait (S), the testing of the integer value of (S ≤ 0), with its conceivable adjustment (S- - ), must be executed without interruption.

Q4) Write the properties of semaphore?

A4) Properties of Semaphores

● It's basic and consistently have a non-negative integer value.

● Works with numerous processes.

● Can have a wide range of critical section with various semaphores.

● Each critical section has exceptional access semaphores.

● Can allow numerous processes into the critical section at the same time, if desirable.

Q5) What are the types of semaphore?

A5) Types of Semaphores

There are two primary categories of semaphores for example counting semaphores and binary semaphores. Insights regarding these are given as below:

- Counting Semaphores: These are integer number value semaphores and have an unlimited value space. These semaphores are utilized to facilitate the resource access, where the semaphore count is the quantity of accessible resources. On the off chance that the resources are added, semaphore count naturally increases and if the resources are removed, the count is decremented.

- Binary Semaphores: The binary semaphores resemble counting semaphores yet their value is limited to 0 and 1. The wait( ) function possibly works when the semaphore is 1 and the signal operation succeeds when semaphore is 0. It is sometimes simpler to execute binary semaphores than counting semaphores.

Q6) Write the usage of semaphore?

A6) Semaphore Usage

● In practice, semaphores can take on one of two structures:

● Binary semaphores can have one of the two values, 0 or 1. They can be utilized to provide solution to the critical section problem as portrayed above, and can be utilized as mutexes on systems that don't give a different mutex mechanism. The utilization of mutexes for this reason is given in figure below.

Do {

Waiting (mutex);

// critical section

Signal (mutex);

//remainder section

} while (true);

Mutual-exclusion usage with semaphores

● Counting semaphores can take on any integer value, and are generally used to count the remaining number of some resource. The counter is defined to the value of such resources available in the system, and at point the counting semaphore is more than zero, at that point a process can enter a critical section and utilize one of the resources. When the counter gets up to zero (or negative in certain executions), then the process obstructs another process free a resource and increase the counting semaphore with a signal call.

Semaphores can likewise be utilized to synchronize certain tasks between processes. For instance, assume it is significant that process P1 execute instruction S1 before process P2 executes instruction S2.

● First we make a semaphore named sync that is shared by the two processes, and defined it to zero.

● At that point in process P1 we embed the code:

S1;

Signal( sync );

● And in process P2 we embed the code:

Wait( sync );

S2;

● Since sync was initialized to 0, process P2 will block on the wait until P1 executes the call to signal.

Q7) What are the advantages and disadvantages of semaphore?

A7) Advantages of Semaphores

Some of the benefits of semaphores are as per the following:

- Semaphores permit just one process into the critical section. They follow the mutual exclusion standard carefully and are substantially more effective than some different techniques for synchronization.

- There is no resource wastage in view of busy waiting in semaphores as processor time isn't wasted pointlessly to check if a condition is satisfied to enable a process to get to the critical section.

- Semaphores are implemented in the machine independent code of the microkernel. So they are machine independent.

Disadvantages of Semaphores

Some of the disadvantages of semaphores are as per the following:

- Semaphores are complicated so the wait and signal function must be designed in the right order to avoid deadlocks.

- Semaphores are unfeasible for last scale use as their utilization prompts loss of modularity. This happens in light of the fact that the wait and signal tasks avoid the formation of an organized design for the system.

- Semaphores may prompt a priority inversion where low priority processes may access the critical section first and high priority processes later.

Q8) Explain reader writer problem?

A8) The readers-writers problem is a classical problem of process synchronization, it relates to a data set such as a file that is shared between more than one process at a time. Among these various processes, some are Readers - which can only read the data set; they do not perform any updates, some are Writers - can both read and write in the data sets.

The readers-writers problem is used for managing synchronization among various reader and writer process so that there are no problems with the data sets, i.e. no inconsistency is generated.

Let's understand with an example - If two or more than two readers want to access the file at the same point in time there will be no problem. However, in other situations like when two writers or one reader and one writer wants to access the file at the same point of time, there may occur some problems, hence the task is to design the code in such a manner that if one reader is reading then no writer is allowed to update at the same point of time, similarly, if one writer is writing no reader is allowed to read the file at that point of time and if one writer is updating a file other writers should not be allowed to update the file at the same point of time. However, multiple readers can access the object at the same time.

Let us understand the possibility of reading and writing with the table given below:

TABLE 1

Case | Process 1 | Process 2 | Allowed / Not Allowed |

Case 1 | Writing | Writing | Not Allowed |

Case 2 | Reading | Writing | Not Allowed |

Case 3 | Writing | Reading | Not Allowed |

Case 4 | Reading | Reading | Allowed |

The solution of readers and writers can be implemented using binary semaphores.

We use two binary semaphores "write" and "mutex", where binary semaphore can be defined as:

Semaphore: A semaphore is an integer variable in S, that apart from initialization is accessed by only two standard atomic operations - wait and signal, whose definitions are as follows:

- Wait(S)

- {

- While(S <= 0) ;

- S--;

- }

- 2. signal(S)

- {

- S++;

- }

From the above definitions of wait, it is clear that if the value of S <= 0 then it will enter into an infinite loop (because of the semicolon; after while loop). Whereas the job of the signal is to increment the value of S.

The below code will provide the solution of the reader-writer problem, reader and writer process codes are given as follows -

Code for Reader Process

The code of the reader process is given below -

- Static int readcount = 0;

- Wait (mutex);

- Readcount ++; // on each entry of reader increment readcount

- If (readcount == 1)

- {

- Wait (write);

- }

- Signal(mutex);

- --READ THE FILE?

- Wait(mutex);

- Readcount --; // on every exit of reader decrement readcount

- If (readcount == 0)

- {

- Signal (write);

- }

- Signal(mutex);

In the above code of reader, mutex and write are semaphores that have an initial value of 1, whereas the read count variable has an initial value as 0. Both mutex and write are common in reader and writer process code, semaphore mutex ensures mutual exclusion and semaphore write handles the writing mechanism.

The read count variable denotes the number of readers accessing the file concurrently.

The moment variable read count becomes 1, wait operation is used to write semaphore which decreases the value by one. This means that a writer is not allowed how to access the file anymore. On completion of the read operation, read count is decremented by one. When read count becomes 0, the signal operation which is used to write permits a writer to access the file.

Code for Writer Process

The code that defines the writer process is given below:

- Wait(write);

- WRITE INTO THE FILE

- Signal(wrt);

If a writer wishes to access the file, wait operation is performed on write semaphore, which decrements write to 0 and no other writer can access the file. On completion of the writing job by the writer who was accessing the file, the signal operation is performed on write.

Q9) Describe the dining philosopher problem?

A9) The dining philosopher's problem is the classical problem of synchronization which says that Five philosophers are sitting around a circular table and their job is to think and eat alternatively. A bowl of noodles is placed at the center of the table along with five chopsticks for each of the philosophers. To eat a philosopher needs both their right and a left chopstick. A philosopher can only eat if both immediate left and right chopsticks of the philosopher is available. In case if both immediate left and right chopsticks of the philosopher are not available then the philosopher puts down their (either left or right) chopstick and starts thinking again.

The dining philosopher demonstrates a large class of concurrency control problems hence it's a classic synchronization problem.

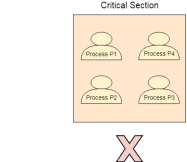

Fig 3 - Five Philosophers sitting around the table

Dining Philosophers Problem- Let's understand the Dining Philosophers Problem with the below code, we have used fig 1 as a reference to make you understand the problem exactly. The five Philosophers are represented as P0, P1, P2, P3, and P4 and five chopsticks by C0, C1, C2, C3, and C4.

- Void Philosopher

- {

- While(1)

- {

- Take_chopstick[i];

- Take_chopstick[ (i+1) % 5] ;

- . .

- . EATING THE NOODLE

- .

- Put_chopstick[i] );

- Put_chopstick[ (i+1) % 5] ;

- .

- . THINKING

- }

- }

Let's discuss the above code:

Suppose Philosopher P0 wants to eat, it will enter in Philosopher() function, and execute take_chopstick[i]; by doing this it holds C0 chopstick after that it execute take_chopstick[ (i+1) % 5]; by doing this it holds C1 chopstick( since i =0, therefore (0 + 1) % 5 = 1)

Similarly suppose now Philosopher P1 wants to eat, it will enter in Philosopher() function, and execute take_chopstick[i]; by doing this it holds C1 chopstick after that it execute take_chopstick[ (i+1) % 5]; by doing this it holds C2 chopstick( since i =1, therefore (1 + 1) % 5 = 2)

But Practically Chopstick C1 is not available as it has already been taken by philosopher P0, hence the above code generates problems and produces race condition.

Q10) What is the solution for dining philosopher problem?

A10) The solution of the Dining Philosophers Problem

We use a semaphore to represent a chopstick and this truly acts as a solution of the Dining Philosophers Problem. Wait and Signal operations will be used for the solution of the Dining Philosophers Problem, for picking a chopstick wait operation can be executed while for releasing a chopstick signal semaphore can be executed.

Semaphore: A semaphore is an integer variable in S, that apart from initialization is accessed by only two standard atomic operations - wait and signal, whose definitions are as follows:

- 1. wait(S)

- {

- While( S <= 0) ;

- S--;

- }

- 2. signal(S)

- {

- S++;

- }

From the above definitions of wait, it is clear that if the value of S <= 0 then it will enter into an infinite loop (because of the semicolon; after while loop). Whereas the job of the signal is to increment the value of S.

The structure of the chopstick is an array of a semaphore which is represented as shown below -

- Semaphore C[5];

Initially, each element of the semaphore C0, C1, C2, C3, and C4 are initialized to 1 as the chopsticks are on the table and not picked up by any of the philosophers.

Let's modify the above code of the Dining Philosopher Problem by using semaphore operations wait and signal, the desired code looks like

- Void Philosopher

- {

- While(1)

- {

- Wait( take_chopstickC[i] );

- Wait( take_chopstickC[(i+1) % 5] ) ;

- . .

- . EATING THE NOODLE

- .

- Signal( put_chopstickC[i] );

- Signal( put_chopstickC[ (i+1) % 5] ) ;

- .

- . THINKING

- }

- }

In the above code, first wait operation is performed on take_chopstickC[i] and take_chopstickC [ (i+1) % 5]. This shows philosopher i have picked up the chopsticks from its left and right. The eating function is performed after that.

On completion of eating by philosopher i the, signal operation is performed on take_chopstickC[i] and take_chopstickC [ (i+1) % 5]. This shows that the philosopher i have eaten and put down both the left and right chopsticks. Finally, the philosopher starts thinking again.

Let's understand how the above code is giving a solution to the dining philosopher problem?

Let value of i = 0( initial value ), Suppose Philosopher P0 wants to eat, it will enter in Philosopher() function, and execute Wait( take_chopstickC[i] ); by doing this it holds C0 chopstick and reduces semaphore C0 to 0, after that it execute Wait( take_chopstickC[(i+1) % 5] ); by doing this it holds C1 chopstick( since i =0, therefore (0 + 1) % 5 = 1) and reduces semaphore C1 to 0

Similarly, suppose now Philosopher P1 wants to eat, it will enter in Philosopher() function, and execute Wait( take_chopstickC[i] ); by doing this it will try to hold C1 chopstick but will not be able to do that, since the value of semaphore C1 has already been set to 0 by philosopher P0, therefore it will enter into an infinite loop because of which philosopher P1 will not be able to pick chopstick C1 whereas if Philosopher P2 wants to eat, it will enter in Philosopher() function, and execute Wait( take_chopstickC[i] ); by doing this it holds C2 chopstick and reduces semaphore C2 to 0, after that, it executes Wait( take_chopstickC[(i+1) % 5] ); by doing this it holds C3 chopstick( since i =2, therefore (2 + 1) % 5 = 3) and reduces semaphore C3 to 0.

Hence the above code is providing a solution to the dining philosopher problem, A philosopher can only eat if both immediate left and right chopsticks of the philosopher are available else philosopher needs to wait. Also at one go two independent philosophers can eat simultaneously (i.e., philosopher P0 and P2, P1 and P3 & P2 and P4 can eat simultaneously as all are the independent processes and they are following the above constraint of dining philosopher problem)

Q11) Explain the sleeping barber problem?

A11) The analogy is predicated upon a theoretical barber look with one barber. There's a barber look that has one barber, one chair, and n chairs for waiting for patrons if there are any to sit down on the chair.

- If there's no client, then the barber sleeps in his chair.

- When a client arrives, he has got to awaken the barber.

- If there are many purchasers and therefore the barber is cutting a customer’s hair, then the remaining customers either wait if their ar empty chairs within the {waiting room|lounge|waiting ara|room} or they leave if no chairs are empty.

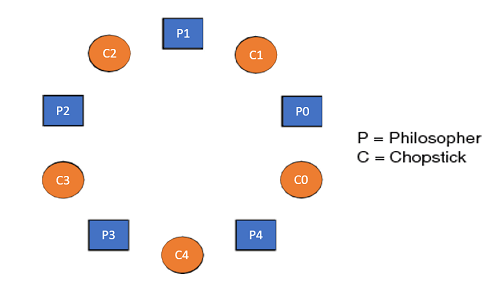

Fig 4 - Example

Solution: the answer to the current downside includes 3 semaphores. First is for the customer that counts the quantity of consumers' gift within the room (customer within the chair isn't enclosed as a result of he's not waiting). Second, the barber zero or one is employed to inform whether or not the barber is idle or is functioning, and therefore the third mutex is employed to supply the mutual exclusion that is needed for the method to execute. Within the solution, the client has the record of the quantity {of clients|of consumers|of shoppers} waiting within the room if the quantity of consumers is adequate to the number of chairs within the room then the approaching customer leaves the store.

When the barber shows up within the morning, he executes the procedure barber, inflicting him to the dam on the semaphore customers as a result of it's at the start zero. Then the barber goes to sleep till the primary client comes up.

When a client arrives, he executes the client procedure the client acquires the mutex for coming into the crucial region, if another client enters thenceforth, the other won't be able to something till the primary one has free the mutex. The client then checks the chairs within the {waiting room|lounge|waiting ara|room} if waiting customers are less then the number of chairs then he sits otherwise he leaves and releases the mutex.

If the chair is accessible then the client sits within the room and increments the variable waiting price and additionally will increase the customer’s semaphore this wakes up the barber if he's sleeping.

At now, the client and barber are each awake, and therefore the barber is prepared to relinquish that person a haircut. Once the haircut is over, the client exits the procedure, and if there aren't any customers in the room barber sleeps.

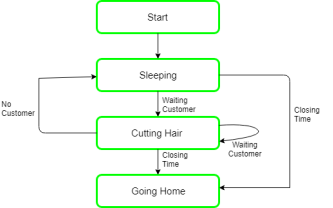

Fig 5 - Example

Algorithm for Sleeping Barber problem:

Semaphore Customers = 0; Semaphore Barber = 0; Mutex Seats = 1; Int FreeSeats = N; Barber { While(true) { /* waits for a customer (sleeps). */ Down(Customers);

/* mutex to protect the number of available seats.*/ Down(Seats);

/* a chair gets free.*/ FreeSeats++;

/* bring customer for haircut.*/ Up(Barber);

/* release the mutex on the chair.*/ Up(Seats); /* barber is cutting hair.*/ } }

Customer { While(true) { /* protects seats so only 1 customer tries to sit In a chair if that's the case.*/ Down(Seats); //This line should not be here. If(FreeSeats > 0) {

/* sitting down.*/ FreeSeats--;

/* notify the barber. */ Up(Customers);

/* release the lock */ Up(Seats);

/* wait in the waiting room if barber is busy. */ Down(Barber); // customer is having hair cut } else { /* release the lock */ Up(Seats); // customer leaves } } } |

Q12) What are the requirements of synchronization mechanisms?

A12)

Requirements of Synchronization mechanisms:

Primary-

- Mutual Exclusion:

Our solution must provide mutual exclusion. By Mutual Exclusion, we mean that if one process is executing inside critical section then the other process must not enter in the critical section.

Fig 2 – Critical Section

2. Progress:

Progress means that if one process doesn't need to execute into critical section then it should not stop other processes to get into the critical section.

Secondary-

- Bounded Waiting:

We should be able to predict the waiting time for every process to get into the critical section. The process must not be endlessly waiting for getting into the critical section.

2. Architectural Neutrality:

Our mechanism must be architectural natural. It means that if our solution is working fine on one architecture then it should also run on the other ones as well.

Q13) What are the advantages of Concurrency?

A13)

Advantages of Concurrency

- Running of multiple applications –

It modifies to run multiple applications at a similar time.

- Better resource utilization –

It allows that the resources that area unit unused by one application may be used for different applications.

- Better average reaction time –

Without concurrency, every application must be run to completion before succeeding one may be run.

- Better performance –

It allows the higher performance by the software. Once one application uses solely the processor and another application uses solely the disc drive then the time to run each application at the same time to completion is shorter than the time to run every application consecutively.

Q14) What are the drawbacks of Concurrency?

A14)

Drawbacks of Concurrency:

- It is needed to safeguard multiple applications from each other.

- It is needed to coordinate multiple applications through extra mechanisms.

- Additional performance overheads and complexities in operational systems area unit needed for switch among applications.

- Sometimes running too several applications at the same time results in severely degraded performance.

Q15) What are the issues of Concurrency?

A15)

Issues of Concurrency:

- Non-atomic –

Operations that area unit non-atomic however interruptible by multiple processes will cause issues.

- Race conditions –

A race condition happens if the result depends on that of many processes gets to some extent initial.

- Blocking –

Processes will block watching for resources. A method can be blocked for a long amount of your time watching for input from a terminal. If the method is needed to periodically update some knowledge, this can be undesirable.

- Starvation –

It happens once a method doesn't get service to progress.

- Deadlock –

It happens once 2 processes area units are blocked and thus neither will proceed to execute.

Q16) Explain Hardware Solution.

A16)

In Synchronization hardware, we have a tendency to explore many a lot of solutions to the critical-section downside mistreatment techniques starting from hardware to computer code based mostly Ap is accessible to application programmers. These solutions are supported the premise of locking; but, the look of such locks is quite subtle.

These Hardware options will build any programming task easier and improve system potency. Here, we have a tendency to gift some easy hardware directions that are accessible on several systems and show however they'll be used effectively in finding the critical-section downside. If we have a tendency to might forestall interrupts from occurring whereas a shared variable was being changed. The critical-section downside can be resolved merely during a uniprocessor atmosphere. During this manner, we'd be reassuring that the present sequence of directions would be allowed to execute so as while not pre-emption. No different directions would be run; thus, no surprising modifications can be created to the shared variable. This can be the approach taken by non-pre-emptive kernels. However sadly, this resolution isn't as possible during a digital computer atmosphere. Since the message is passed to all or any the processors, disabling interrupts on a digital computer is time intense.

System potency decreases once this massage passing delays entry into every important section. Conjointly the impact on a system’s clock is taken into account if the clock is unbroken updated by interrupts. Such a large amount of trendy laptop systems so offers special hardware directions that enable USA that we are able to take a look at and modify the content of a word or to swap the contents of 2 words atomically—that is, collectively uninterruptible unit. We have a tendency to could use these special directions to unravel the critical-section downside during a comparatively easy manner. Currently we have a tendency to abstract the most ideas behind these sorts of directions. The TestAndSet() instruction is outlined as shown in below code.

Boolean take a look at and set(boolean *target)mathematician recreational vehicle = *target;

*target = true;

Return rv;

}

Definition of the take a look at and set() instruction.

The essential characteristic is that this instruction is dead atomically. So, if 2 TestAndSet C) directions ar dead at the same time (each on a distinct CPU), they'll be dead consecutive in some absolute order. We are able to implement mutual exclusion by declaring a mathematician variable lock, initialized to false, if the machine supports the TestAndSet () instruction. The structure of method P, is shown in below.

Example

Do {

Whereas (test and set(&lock)) ;

/* do nothing */

/* important section */

Lock = false;

/* remainder section */

}

While (true);

Mutual-exclusion implementation with take a look at and set().

The SwapO instruction, in distinction to the TestAndSet0 instruction, operates on the contents of 2 words; it's dead atomically. Mutual exclusion is provided as follows if the machine supports the SwapO instruction. Here, a worldwide mathematician variable lock is said and is initialized to false. To boot, every method encompasses a native mathematician variable key. The structure of method P, is shown in figure below.

Int compare_and_swap(int *val, int expected, int new val)temporary worker = *val;

If (*val == expected)

*val = new val;

Come back temp;

}

Definition of the compare and swap() instruction.

Do{

Whereas (compare_and_swap(&lock, 0, 1) != 0) ;

/* do nothing */

/* important section */

Lock = 0;

/* remainder section */

}

While (true);

Mutual-exclusion implementation with the compare and swap() instruction.

Since these algorithms satisfy the mutual-exclusion demand, they are doing not satisfy the bounded-waiting demand. In below code, we have a tendency to gift another rule mistreatment the TestAndSet() instruction that satisfies all the critical-section needs.

Do{

Waiting[i] = true;

Key = true;

Whereas (waiting[i] && key)

Key = take a look at and set(&lock);

Waiting[i] = false;

/* important section */

j = (i + 1) nothing n;

Whereas ((j != i) && !waiting[j])

j = (j + 1) nothing n;

If (j == i)

Lock = false;

Else

Waiting[j] = false;

/* remainder section */

}

While (true);

Bounded-waiting mutual exclusion with take a look at and set().

The same information structures ar mathematician waiting[n]; mathematician lock; These information structures ar initialized to false. To prove the purpose that the mutual exclusion demand is met, we have a tendency to note that method P; will enter its important section provided that either waiting[i] == false or key -- false. The worth of key will become false provided that the TestAndSet() is dead. The primary method to execute the TestAndSet() can realize key == false; all others should wait. The variable waiting[i] could become false provided that another method leaves its important section; just one waiting [i] is ready to false, maintaining the mutual-exclusion demand.

To prove the purpose that the progress demand is met, we have a tendency to note that the arguments bestowed for mutual exclusion conjointly apply here, since a method exiting the important section either set lock to false or sets waiting[j] to false. Each of them enables a method that's waiting to enter its important section to proceed. To prove the purpose that the bounded-waiting demand is met, we have a tendency to note that, once a method leaves its important section, it scans the array waiting within the cyclic ordering (z' + one, i + 2,..., n — 1, 0, ..., i — 1). It designates the primary method during this ordering that's within the entry section (waiting [j] =- true) because the next one to enter the important section.

Any method that waiting to enter its important section can so do thus among n — one turns. Sadly for hardware designers, implementing atomic TestAndSet() directions on multiprocessors isn't a trivial task.