Unit - 5

Quality Management

Q1) What is quality management?

A1) Quality Management

Software Quality Management (SQM) ensures that the required level of quality is achieved by contributing improvements to the product development process. SQM aims to build a quality culture within the team, in which quality is seen as everyone's responsibility.

Software quality management should be independent of project management, so that quality decisions are not compromised by cost and schedule pressures. It has a direct impact on process quality and an indirect impact on product quality.

Activities of Software Quality Management

● Quality Assurance - Quality Assurance (QA) strives to establish quality procedures and standards at the organizational level.

● Quality Planning - Develop a quality plan by selecting suitable procedures and standards for a specific project and modifying them as needed.

● Quality Control - Ensure that the software development team follows best practices and standards in order to generate high-quality products.

Q2) Write about Product metrics?

A2) Product metrics are measurements of software products at every level of development, from requirements through fully functional systems. Only software features are measured in product metrics.

Product metrics fall into two classes:

● Dynamic metrics - Measurements collected during the execution of a programme.

● Static metrics - Measurements collected from system representations such as design, code, or documentation.

Static metrics help in assessing the complexity, understandability, and maintainability of a software system, whereas dynamic metrics help in assessing the efficiency and reliability of a programme.

Dynamic metrics are usually closely related to software quality attributes. It is relatively easy to measure the time required to start the system and the execution time required for particular functions; these relate directly to the system's efficiency. Similarly, the number and type of system failures can be logged and related directly to the software's reliability.

Static metrics, on the other hand, have an indirect relationship with quality attributes. A large number of these metrics have been proposed in an attempt to derive and validate the relationship between complexity, understandability, and maintainability. Among the static metrics that have been used to assess quality attributes, programme or component length and control complexity appear to be the most reliable predictors of understandability, system complexity, and maintainability.

Q3) Describe the metrics for analysis models?

A3) Metrics for Analysis Models

Only a few metrics have been proposed for the analysis model. In the context of the analysis model, however, metrics can be used for project estimation. These metrics examine the analysis model in order to predict the size of the resulting system. Size is a good predictor of increased coding, integration, and testing effort; it can also be a good indicator of the complexity of the programme design. The most common approaches for estimating size are function points and lines of code.

Function Point (FP) Metric

A. J. Albrecht proposed the function point metric, which is used to measure the functionality delivered by a system, estimate effort, predict the number of errors, and estimate the number of components in the system. Function points are derived from a relationship between countable information domain values and an assessment of software complexity. The information domain values used in function point analysis are the number of external inputs, external outputs, external inquiries, internal logical files, and external interface files.
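The calculation can be sketched in a few lines. This is only an illustration: the average-complexity weights and the adjustment formula FP = count total x (0.65 + 0.01 x sum of the 14 value adjustment factors) are taken from the commonly cited form of Albrecht's method and are not given in the text above, and the counts in the example are made up.

```python
# Average-complexity weights commonly quoted for Albrecht's method.
# Assumption: the notes above do not list these weights.
AVERAGE_WEIGHTS = {
    "external_inputs": 4,
    "external_outputs": 5,
    "external_inquiries": 4,
    "internal_logical_files": 10,
    "external_interface_files": 7,
}


def function_points(counts, adjustment_factors):
    """Return the adjusted function point count.

    counts: mapping from information domain value name to its count.
    adjustment_factors: 14 ratings, each from 0 (no influence) to 5 (essential).
    """
    if len(adjustment_factors) != 14:
        raise ValueError("expected 14 value adjustment factors")
    # Weighted sum of the five information domain values.
    count_total = sum(AVERAGE_WEIGHTS[name] * n for name, n in counts.items())
    # Scale by the complexity adjustment (ranges from 0.65 to 1.35).
    return count_total * (0.65 + 0.01 * sum(adjustment_factors))


# Hypothetical project used only for illustration.
example_counts = {
    "external_inputs": 10,
    "external_outputs": 8,
    "external_inquiries": 5,
    "internal_logical_files": 4,
    "external_interface_files": 2,
}
example_fp = function_points(example_counts, [3] * 14)
```

With these made-up counts the weighted total is 154, and rating every adjustment factor as 3 scales it by 1.07.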

Lines of Code (LOC)

Lines of code (LOC) is one of the most widely used measures for estimating size. LOC is the number of delivered lines of code, excluding comments and blank lines. Because coding style and expressiveness differ from one programming language to the next, LOC depends heavily on the programming language used. For a large programme, for example, an implementation in assembly language needs more lines of code than an implementation in C++.

Simple size-oriented metrics such as errors per KLOC (thousand lines of code), defects per KLOC, cost per KLOC, and so on can be derived from LOC. LOC has also been used to predict programme complexity, development effort, programmer performance, and other factors. Halstead, for example, proposed a set of metrics that can be used to calculate programme length, volume, difficulty, and development effort.
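As a small illustration of the LOC definition and the per-KLOC normalisation described above, the sketch below counts lines that are neither blank nor full-line comments and then scales a project total per thousand lines. The function names and the `#` comment prefix are assumptions chosen for the example; real counting tools handle block comments and other language-specific rules.

```python
def count_loc(source, comment_prefix="#"):
    """Count lines that are neither blank nor full-line comments."""
    loc = 0
    for line in source.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith(comment_prefix):
            loc += 1
    return loc


def per_kloc(value, loc):
    """Normalise a project total (errors, defects, cost) per thousand LOC."""
    if loc <= 0:
        raise ValueError("loc must be positive")
    return value / (loc / 1000)


sample = "# header\n\nx = 1\ny = 2  # trailing comment\n\n# done\nprint(x + y)\n"
sample_loc = count_loc(sample)              # three code lines
errors_per_kloc = per_kloc(150, 20_000)     # hypothetical project totals
cost_per_kloc = per_kloc(300_000, 20_000)
```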

Q4) Explain Metrics for design model?

A4) The success of a software project depends largely on the quality and effectiveness of the programme design. It is therefore important to develop software metrics from which meaningful indicators can be derived. These indicators are used to take the steps needed to build software that meets the needs of the users. Various design metrics are used to represent the complexity, quality, and other aspects of the software design, such as architectural design metrics, component-level design metrics, user-interface design metrics, and object-oriented design metrics.

Design metrics for computer software, like all other software metrics, are not perfect. The debate about their effectiveness and how they should be applied is still going on. Many experts argue that further experimentation is required before design measures can be used. Nonetheless, design without measurement is an unacceptable alternative.

The following are some of the more common design metrics for computer software. Each can give the designer better insight into the design and help it evolve to a higher level of quality.

Architectural Design Metrics

Architectural design metrics focus on characteristics of the programme architecture, with an emphasis on the architectural structure and the effectiveness of modules. These metrics are black box in the sense that they don't require any knowledge of a software component's inner workings.

Card and Glass define three software design complexity measures: structural complexity, data complexity, and system complexity.

The structural complexity of a module i is calculated as follows:

$S(i) = f_{out}^{2}(i)$

where $f_{out}(i)$ is the fan-out of module i.

Data complexity is a measure of the complexity of the internal interface of a module i, and is defined as

$D(i) = \dfrac{v(i)}{f_{out}(i) + 1}$

where $v(i)$ is the number of input and output variables passed to and from module i.

Finally, system complexity is defined as the sum of structural and data complexity:

$C(i) = S(i) + D(i)$

The overall architectural complexity of the system increases as each of these complexity values rises. As a result, the integration and testing effort is also likely to increase.
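The three Card and Glass measures can be computed directly from a module's fan-out and its count of input and output variables. The sketch below is a minimal illustration; the function names and the example values are assumptions, not part of the original definitions.

```python
def structural_complexity(fan_out):
    """S(i): the square of the module's fan-out."""
    return fan_out ** 2


def data_complexity(io_variables, fan_out):
    """D(i): input/output variables divided by (fan-out + 1)."""
    return io_variables / (fan_out + 1)


def system_complexity(io_variables, fan_out):
    """C(i): sum of structural and data complexity."""
    return structural_complexity(fan_out) + data_complexity(io_variables, fan_out)


# Hypothetical module with fan-out 3 and 8 input/output variables.
s = structural_complexity(3)
d = data_complexity(8, 3)
c = system_complexity(8, 3)
```

For this hypothetical module, S = 9, D = 2, and C = 11; most of the complexity comes from the fan-out term because it is squared.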

An earlier high-level architectural design metric proposed by Henry and Kafura also makes use of fan-in and fan-out. The authors define a complexity metric (applicable to call-and-return architectures) of the form

$HKM = length(i) \times [f_{in}(i) + f_{out}(i)]^{2}$

where $length(i)$ is the number of programming language statements in module i and $f_{in}(i)$ is the fan-in of module i. Henry and Kafura extend the usual definitions of fan-in and fan-out to include not only the number of module control connections (module calls), but also the number of data structures from which a module retrieves (fan-in) or updates (fan-out) data. To compute HKM during design, the procedural design can be used to estimate the number of programming language statements for module i.
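A minimal sketch of the Henry and Kafura metric follows. The function name, the module names, and the numbers are hypothetical; in practice the fan-in and fan-out counts would include data-structure accesses as described above.

```python
def henry_kafura(length, fan_in, fan_out):
    """HKM: module length multiplied by the squared sum of fan-in and fan-out."""
    return length * (fan_in + fan_out) ** 2


# Hypothetical modules: (statement count, fan-in, fan-out).
modules = {
    "parser": (40, 2, 3),
    "report": (120, 1, 1),
}
hkm = {name: henry_kafura(*values) for name, values in modules.items()}
most_complex = max(hkm, key=hkm.get)
```

Note that the shorter module scores higher here (1000 against 480), because the coupling term is squared while length enters only linearly.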

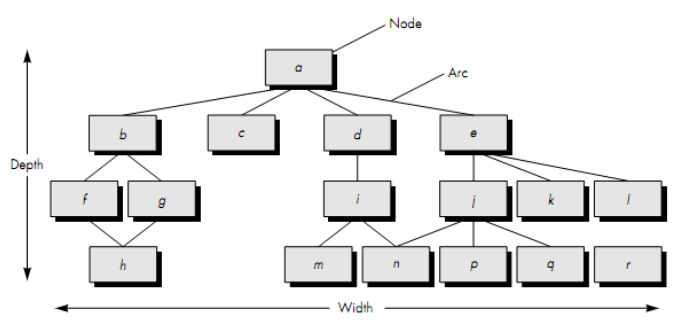

Fenton suggests a number of simple morphology (i.e., shape) metrics that allow different programme architectures to be compared using a set of straightforward dimensions. The following metric can be defined using the figure:

Size = n + a

where n is the number of nodes and a is the number of arcs in the architecture depicted in the diagram.
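The size metric can be illustrated on a small call graph. The graph below is hypothetical and is not the architecture shown in the figure; it is represented as a mapping from each module to the modules it calls.

```python
def architecture_size(call_graph):
    """Return n + a for a call graph given as {module: [called modules]}."""
    nodes = set(call_graph)
    for callees in call_graph.values():
        nodes.update(callees)          # include modules that only appear as callees
    arcs = sum(len(callees) for callees in call_graph.values())
    return len(nodes) + arcs


# Hypothetical architecture: 4 nodes and 4 arcs.
example_graph = {
    "main": ["input", "output"],
    "input": ["validate"],
    "output": ["validate"],
    "validate": [],
}
example_size = architecture_size(example_graph)
```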

Q5) Write the metrics for source code?

A5) Halstead proposed the first analytical "laws" for computer software, using a set of primitive measures that can be derived once the design phase is complete and the code has been written. These measures are listed below.

n1 = number of distinct operators in a program

n2 = number of distinct operands in a program

N1 = total number of operators

N2 = total number of operands

Based on these measures, Halstead developed expressions for overall programme length, volume, difficulty, development effort, and so on.

The following equation can be used to calculate the length of a programme (N).

$N = n_1 \log_2 n_1 + n_2 \log_2 n_2$

The following equation can be used to calculate programme volume (V).

$V = N \log_2 (n_1 + n_2)$

Note that programme volume varies by programming language and refers to the amount of information (in bits) required to express a programme. The following equation can be used to compute the volume ratio (L).

$L = \dfrac{\text{volume of the most compact form of a program}}{\text{volume of the actual program}}$

L must be less than 1 in this case. The following equation can also be used to compute the volume ratio.

$L = \dfrac{2}{n_1} \times \dfrac{n_2}{N_2}$

The following equations can be used to calculate programme difficulty (D) and effort (E).

$D = \dfrac{n_1}{2} \times \dfrac{N_2}{n_2}$

$E = D \times V$
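The formulas above can be checked with a short calculation. This sketch follows the equations exactly as given in these notes (in particular, volume is computed from the estimated length N); the function name and the operator and operand counts are made up for illustration.

```python
import math


def halstead_measures(n1, n2, N2):
    """Compute length, volume, volume ratio, difficulty, and effort.

    n1: distinct operators, n2: distinct operands, N2: total operands.
    """
    length = n1 * math.log2(n1) + n2 * math.log2(n2)   # N
    volume = length * math.log2(n1 + n2)               # V
    volume_ratio = (2 / n1) * (n2 / N2)                # L
    difficulty = (n1 / 2) * (N2 / n2)                  # D
    effort = difficulty * volume                       # E
    return {
        "length": length,
        "volume": volume,
        "volume_ratio": volume_ratio,
        "difficulty": difficulty,
        "effort": effort,
    }


# Hypothetical programme: 8 distinct operators, 8 distinct operands,
# 16 operand occurrences in total.
measures = halstead_measures(n1=8, n2=8, N2=16)
```

The total number of operators (N1) does not appear in any of the formulas given here, so the sketch does not take it as an argument. Note also that the difficulty D is the reciprocal of the volume ratio L.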

Q6) Write about metrics for testing?

A6) The majority of testing metrics focus on the testing process rather than on the technical characteristics of the tests themselves. Testers commonly rely on metrics for analysis, design, and coding to guide them in the design and execution of test cases.

Function points can be used to estimate testing effort. By computing the number of function points in the current project and comparing it with data from previous projects, characteristics such as the number of errors found, the number of test cases required, and the testing effort can be estimated.

The same metrics that are used in architectural design may be utilized to show how integration testing should be done. Furthermore, cyclomatic complexity can be utilised as a metric in basis-path testing to calculate the number of test cases that are required.
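As an illustration of the basis-path idea, cyclomatic complexity can be computed from a flow graph with the usual formula V(G) = E - N + 2, where E is the number of edges and N the number of nodes. The formula is not stated in the notes above and assumes a connected flow graph with a single entry and a single exit; the graph below is a hypothetical if-else construct.

```python
def cyclomatic_complexity(flow_graph):
    """Return V(G) = E - N + 2 for a flow graph given as {node: [successors]}."""
    nodes = set(flow_graph)
    for successors in flow_graph.values():
        nodes.update(successors)
    edges = sum(len(successors) for successors in flow_graph.values())
    return edges - len(nodes) + 2


# Flow graph of a single if-else: one decision, two paths.
if_else = {
    "cond": ["then", "else"],
    "then": ["join"],
    "else": ["join"],
    "join": [],
}
paths = cyclomatic_complexity(if_else)
```

A value of 2 matches the two independent paths through an if-else, which is the number of test cases basis-path testing would call for in this case.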

Halstead measures can be used to derive testing effort metrics. Given programme volume (V) and programme level (PL), Halstead effort (e) can be computed using the following equations.

$e = V / PL$

where

$PL = \dfrac{1}{(n_1/2) \times (N_2/n_2)}$ … (1)

The percentage of overall testing effort to be allocated to a specific module (z) can be computed using the equation below.

$\text{Percentage of testing effort}(z) = \dfrac{e(z)}{\sum_i e(i)}$

Here e(z) is computed for module z from its volume and its programme level as given by equation (1). The denominator is the sum of Halstead effort (e) across all of the system's modules.

Q7) Write about metrics for maintenance?

A7) Some metrics have been designed specifically for maintenance activities. The IEEE has suggested the Software Maturity Index (SMI), which gives an indication of the stability of a software product. The following parameters are taken into account when calculating SMI.

● Number of modules in the current release ($M_T$)

● Number of modules that have been changed in the current release ($F_c$)

● Number of modules that have been added in the current release ($F_a$)

● Number of modules from the preceding release that have been deleted in the current release ($F_d$)

SMI can be calculated using the equation below if all of the parameters are known.

$SMI = \dfrac{M_T - (F_a + F_c + F_d)}{M_T}$

As SMI approaches 1.0, the product begins to stabilise. SMI can also be used as a metric for planning software maintenance activities, by developing empirical models to estimate the amount of maintenance effort required.
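The index is easy to compute once the four module counts are known. A minimal sketch, with a hypothetical function name and made-up release figures:

```python
def software_maturity_index(total, added, changed, deleted):
    """SMI = (total - (added + changed + deleted)) / total."""
    if total <= 0:
        raise ValueError("total number of modules must be positive")
    return (total - (added + changed + deleted)) / total


# Hypothetical release: 100 modules, of which 5 added, 10 changed, 5 deleted.
smi = software_maturity_index(total=100, added=5, changed=10, deleted=5)
# A release with no added, changed, or deleted modules is fully stable.
stable = software_maturity_index(total=100, added=0, changed=0, deleted=0)
```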

Q8) Describe quality?

A8) Quality

Quality in software is an abstract term. It can be hard to identify its existence, but its absence can be easy to immediately see. Therefore, in the quest to enhance software quality, we must first consider the concept of software quality.

“In software engineering, software quality measures how well the software is designed (quality of design) and how well the software conforms to that design (quality of conformance). It is often described as the 'fitness for purpose' of a piece of software.”

Correctness, maintainability, portability, testability, usability, dependability, efficiency, integrity, reusability, and interoperability are all examples of measurable properties.

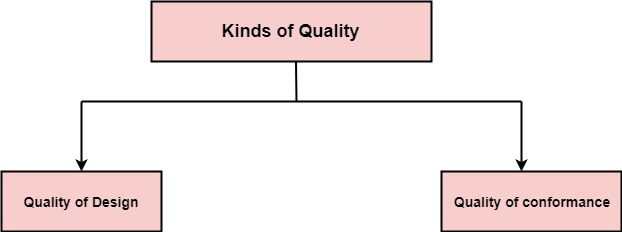

Quality can be divided into two categories:

Fig 1: Types of quality

● Quality of Design: The attributes that designers select for an item are referred to as quality of design. Material quality, tolerances, and performance criteria are all factors that influence design quality.

● Quality of conformance: The degree to which design specifications are followed during manufacturing is known as quality of conformance. The higher the degree of compliance with the specifications, the higher the level of conformance quality.

Q9) Explain the evolution of quality management?

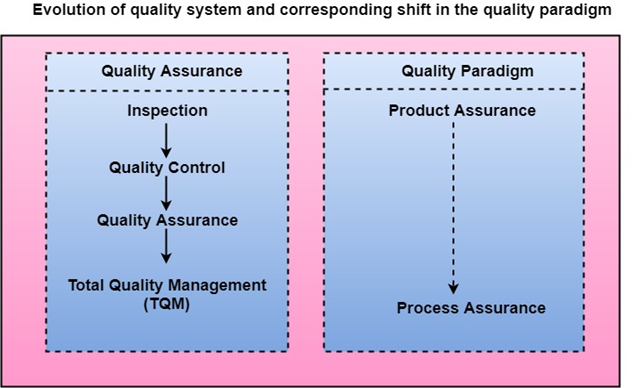

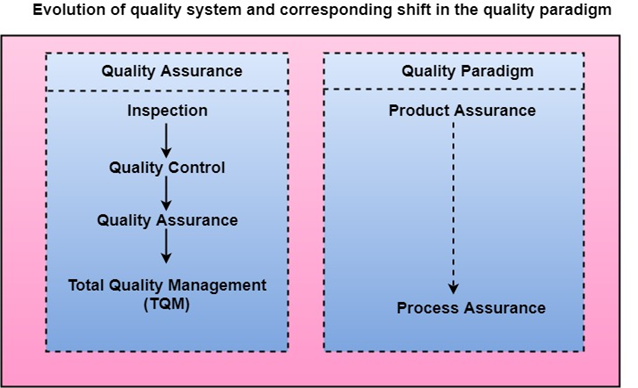

A9) Over the past five decades, quality systems have become increasingly sophisticated. Prior to World War II, the standard way of producing high-quality products was to inspect the finished goods and eliminate the defective ones. Since that time, organizational quality systems have evolved through four stages, as illustrated in the diagram. The initial product inspection method gave way to quality control (QC).

Quality control focuses not only on finding and removing defective items, but also on determining the causes of the defects. As a result, quality control attempts to correct the causes of defects rather than simply rejecting the items. The development of quality assurance methods was the next major advance in quality methods.

The core principle of modern quality assurance is that if an organization's processes are sound and strictly followed, the products are bound to be of high quality. The modern quality function includes guidance for identifying, specifying, analysing, and improving the production process.

Total quality management (TQM) asserts that an organization's processes must be continually improved through process measurements. TQM goes beyond quality assurance and aims at continuous process improvement. It goes beyond documenting processes to redesigning them so that they become more efficient. A term associated with TQM is Business Process Reengineering (BPR).

The goal of BPR is to reengineer the way business is done in a company. From the discussion above, it can be concluded that the quality paradigm has shifted over time from product assurance to process assurance, as shown in Fig 2.

Fig 2: Evolution of quality system

Q10) Define quality assurance?

A10) Quality assurance refers to the auditing and reporting procedures used to provide stakeholders with the data they need to make well-informed decisions.

It is concerned with how well a system meets predetermined requirements and client expectations. It also involves monitoring the processes and products throughout the SDLC.

Software quality assurance is a planned and systematic set of activities that ensures that an item or product complies with established technical specifications.

It is a set of activities designed to evaluate the process by which products are developed or manufactured.

Quality Assurance Criteria

The following are the quality assurance criteria that will be used to evaluate the software:

● correctness

● efficiency

● flexibility

● integrity

● interoperability

● maintainability

● portability

● reliability

● reusability

● testability

● usability

SQA Encompasses

● An approach to quality management.

● Effective software engineering technology (methods and tools).

● Formal technical reviews that are applied throughout the software development process.

● A multi-tiered testing strategy.

● Control over the documentation of software and the changes that are made to it.

● A technique for ensuring that software development standards are followed.

● Mechanisms for measuring and reporting.

Q11) Define software review?

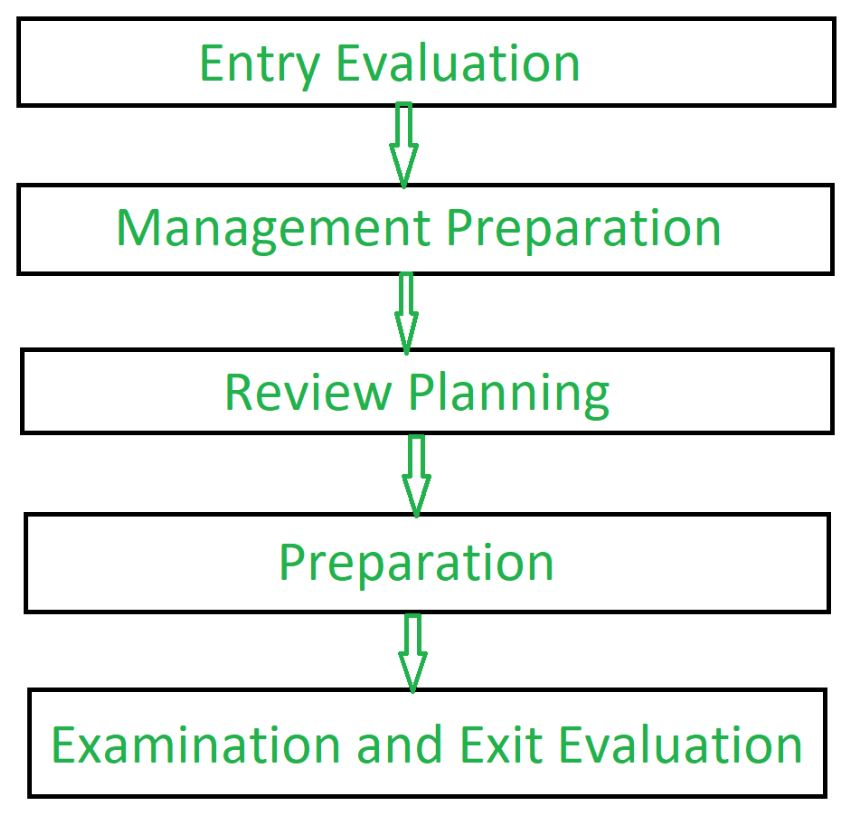

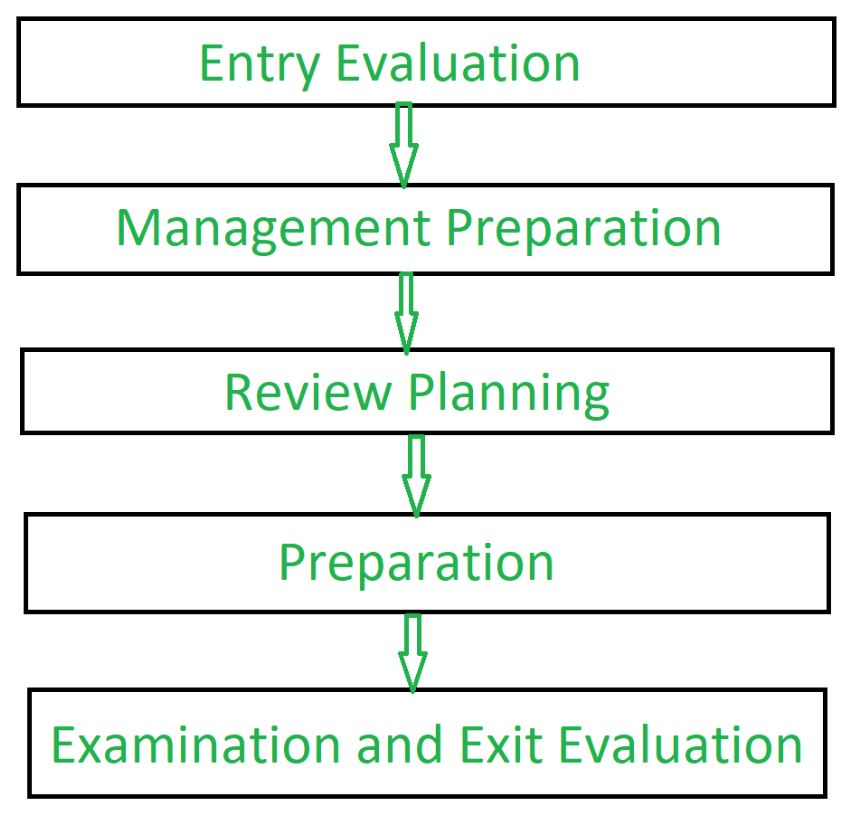

A11) Software review is a systematic inspection of software by one or more individuals who work together to find and resolve errors and defects during the early phases of the Software Development Life Cycle (SDLC). Software review is an important part of the SDLC, since it helps software developers confirm the software's quality, functionality, and other critical features and components. It is a complete process that involves examining the software product and making sure that it satisfies the client's requirements.

Software review is a manual process that verifies documents such as requirements, system designs, code, test plans, and test cases.

Objectives of Software Review

The objectives of software review are:

● To increase the development team's productivity.

● To reduce the amount of time and money spent on testing.

● To reduce the number of flaws in the final software.

● To get rid of shortcomings.

Fig 3: Process of software review

Q12) What are the types of software review?

A12) Types of Software Reviews

There are three primary types of software reviews:

1. Software Peer Review

Peer review is the process of evaluating the technical content and quality of a work product, and it is usually carried out by the author of the work product together with a few other developers.

Its purpose is to examine and resolve defects in the software, whose quality is also checked by other members of the team.

2. Software Management Review

The Software Management Review assesses the current status of the project. Decisions about downstream activities are made in this review.

3. Software Audit Review

A Software Audit Review is a type of external review in which one or more reviewers who are not members of the development team conduct an independent inspection of the software product and its processes in order to assess compliance with stated specifications and standards. It is carried out by managerial-level personnel.

Q13) Write the advantages of software review?

A13) Advantages

● Defects can be detected early in the development process (especially in formal review).

● Early examination also lowers software maintenance costs.

● It can be used to train technical authors.

● It can be used to remove process inadequacies that lead to defects.

Q14) What is statistical quality assurance?

A14) Traditional compliance testing methods often provide only pass/fail information, which is insufficient for quality control measurement, for identifying the root causes of failures, and for overall quality assurance (QA) in the manufacturing process.

Intertek tailors a practical QA process called Statistical Quality Assurance (SQA) by combining legal, customer, and essential safety requirements. SQA is used to identify potential variations in the manufacturing process and to predict potential defects on a parts-per-million (PPM) basis. It provides a statistical description of the final product and addresses quality and safety issues that may arise during production.

There are three key approaches in SQA:

- Force Diagram - A Force Diagram describes how a product should be tested. Intertek engineers base the construction of Force Diagrams on their knowledge of foreseeable use, critical manufacturing processes, and critical components that have a high potential to fail.

- Test-to-failure (TTF) - Unlike legal compliance testing, TTF tells manufacturers how many defects they can expect to find in every million units produced. This information is fed back into the process and indicates whether a product needs quality improvement or is over-engineered, which eventually leads to cost savings.

- Intervention - Products are divided into groups according to the total production quantity and the production lines. Each group then undergoes an intervention. The end result is measured by the Z-value, which is an indicator of the quality and consistency of a product. Intervention allows manufacturers to pinpoint a defect to a specific lot and production line, saving time and money in the process.

Q15) Write the difference between quality assurance and quality control?

A15) Difference between Quality assurance and Quality control

| Quality Assurance | Quality Control |
| --- | --- |
| QA is a process that focuses on providing assurance that the quality requirements will be achieved. | QC is a process that focuses on fulfilling the quality requirements. |
| The aim of QA is to prevent defects. | The aim of QC is to identify and fix defects. |
| QA is a technique for managing quality. | QC is a method for verifying quality. |
| QA does not involve executing the program. | QC always involves executing the program. |
| All team members are responsible for QA. | The testing team is responsible for QC. |
| QA example: verification. | QC example: validation. |
| QA means planning how a process will be carried out. | QC means acting to execute the planned process. |
| The statistical technique used in QA is known as Statistical Process Control (SPC). | The statistical technique used in QC is known as Statistical Quality Control (SQC). |
| QA makes sure you are doing the right things. | QC makes sure the results of what you've done are what you expected. |

Unit - 5

Quality Management

Q1) What is quality management?

A1) Quality Management

By contributing enhancements to the product development process, Software Quality Management guarantees that the appropriate level of quality is met. SQA aspires to create a team culture, and it is considered as everyone's responsibility.

To ensure cost and schedule adherence, software quality management should be separate from project management. It has a direct impact on process quality and an indirect impact on product quality.

Activities of Software Quality Management

● Quality Assurance - Quality Assurance (QA) strives to establish quality procedures and standards at the organizational level.

● Quality Planning - Develop a quality plan by selecting suitable procedures and standards for a specific project and modifying them as needed.

● Quality Control - Ensure that the software development team follows best practices and standards in order to generate high-quality products.

Q2) Write about Product metrics?

A2) Product metrics are measurements of software products at every level of development, from requirements through fully functional systems. Only software features are measured in product metrics.

Product metrics fall into two classes:

● Dynamic metrics, Metrics that are acquired dynamically as a result of measurements taken during the execution of a programme.

● Static metrics, Measurements taken from system representations such as design, code, or documentation are used to collect static metrics.

Static metrics aid in comprehending, understanding, and preserving the complexity of a software system, whereas dynamic metrics aid in measuring the efficiency and dependability of a programme.

Software quality criteria are frequently strongly tied to dynamic measurements. It is pretty simple to estimate the time required to start the system and to measure the execution time required for certain processes. These are directly tied to the effectiveness of system failures, and the type of failure can be reported, as well as the software's reliability.

Static matrices, on the other hand, have an indirect relationship with quality criteria. To try to develop and confirm the relationship between complexity, understandability, and maintainability, a significant number of these matrices have been presented. Among the static metrics that have been used to evaluate quality qualities, programme or component length and control complexity appear to be the most reliable predictors of understandability, system complexity, and maintainability.

Q3) Describe the metrics for analysis models?

A3) Metrics for Analysis Models

The analytical model has only a few metrics that have been proposed. In the framework of the analysis model, however, metrics can be used for project estimation. These metrics are used to evaluate the analysis model in order to forecast the size of the resulting system. Size is a good predictor of increasing coding, integration, and testing work; it can also be a good indicator of the programme design's complexity. The most frequent approaches for estimating size are function points and lines of code.

Function Point (FP) Metric

A.J Albrecht proposed the function point metric, which is used to quantify system functioning, estimate effort, anticipate the frequency of errors, and estimate the number of components in the system. The function point is calculated utilizing a link between software complexity and information domain value. The number of external inputs, external outputs, external inquires, internal logical files, and external interface files are all information domain values used in function point.

Lines of Code (LOC)

One of the most generally used ways for estimating size is lines of code (LOC). The number of delivered lines of code, excluding comments and blank lines, is referred to as LOC. Because code writing differs from one programming language to the next, it is greatly dependent on the programming language employed. Lines of code written in assembly language, for example, are more than lines of code written in C++ (for a large programme).

Simple size-oriented metrics such as mistakes per KLOC (thousand lines of code), defects per KLOC, cost per KLOC, and so on can be obtained from LOC. Program complexity, development effort, programmer performance, and other factors have all been predicted using LOC. Hasltead, for example, offered a set of metrics that can be used to calculate programme length, volume, difficulty, and development effort.

Q4) Explain Metrics for design model?

A4) A software project's success is primarily determined by the quality and efficacy of the programme design. As a result, developing software measurements from which useful indicators can be inferred is critical. These indications are used to take the necessary measures to build software that meets the needs of the users. Various design metrics are used to represent the complexity, quality, and other aspects of the software design, such as architectural design metrics, component-level design metrics, user-interface design metrics, and object-oriented design metrics.

Computer programme design metrics, like all other software metrics, aren't perfect. The debate regarding their effectiveness and how they should be used is still going on. Many experts believe that more testing is necessary before design measures can be implemented. Nonetheless, designing without measurement is an untenable option.

We may look at some of the most common computer programme design metrics. Each can assist the designer have a better understanding of the design and help it progress to a higher level of excellence.

Architectural Design Metrics

Architectural design metrics are concerned with aspects of the programme architecture, particularly the architectural structure and module efficacy. These metrics are black box in the sense that they don't require any knowledge of a software component's inner workings.

Three levels of software design difficulty are defined by Card and Glass: structural complexity, data complexity, and system complexity.

The structural complexity of a module I is calculated as follows:

S(i) = f 2 out(i)

Where fout(i) is the fan-out7 of module i.

Data complexity is a measure of the complexity of a module's internal interface, and it's defined as

D(i) = v(i)/[ fout(i) +1]

The number of input and output variables transmitted to and from module I is denoted by v(i).

Last but not least, system complexity is defined as the sum of structural and data complexity, expressed as

C(i) = S(i) + D(i)

The overall architectural complexity of the system increases as each of these complexity values rises. As a result, the chance of increased integration and testing effort increases.

The fan-in and fan-out are also used in an earlier high-level architectural design metric presented by Henry and Kafura. The authors propose a complexity metric of the kind (applicable to call and return architectures).

HKM = length(i) x [ fin(i) + fout(i)]2

Where length(i) denotes the number of programming language statements in a module I and fin(i) is the module's fan-in. The definitions of fan in and fan out in this book are expanded by Henry and Kafura to include not only the number of module control connections (module calls), but also the number of data structures from which a module retrieves (fan-in) or updates (fan-out) data. The procedural design can be used to estimate the number of programming language statements for module I in order to compute HKM during design.

Fenton proposes a set of simple morphology (i.e., shape) metrics for comparing different programme architectures using a set of simple dimensions. The following metrics can be defined using the figure:

Size = n + a

The number of nodes is n, while the number of arcs is a. For the architecture depicted in the diagram.

Q5) Write the metrics for source code?

A5) By using a set of primitive metrics that may be obtained once the design phase is complete and code is written, Halstead offered the first analytic laws for computer science. These measures are outlined in the table below.

Nl = number of distinct operators in a program

n2 = number of distinct operands in a program

N1 = total number of operators

N2= total number of operands.

Halstead devised a phrase for overall programme length, volume, difficulty, development effort, and so on based on these metrics.

The following equation can be used to calculate the length of a programme (N).

N = n1log2nl + n2 log2n2.

The following equation can be used to calculate programme volume (V).

V = N log2 (n1+n2).

Note that programme volume varies by programming language and refers to the amount of data (in bits) required to express a programme. The following equation can be used to compute the volume ratio (L).

L = Volume of the most compact form of a program/Volume of the actual program

L must be less than 1 in this case. The following equation can also be used to compute volume ratio.

L = (2/n1)* (n2/N2).

The following equations can be used to calculate programme difficulty (D) and effort (E).

D = (n1/2)*(N2/n2).

E = D * V

Q6) Write about metrics for testing?

A6) The majority of testing metrics are focused on the testing process rather than the technical aspects of the test. Metrics for analysis, design, and coding are commonly used by testers to aid them in the design and execution of test cases.

The function point can be used to estimate the amount of time it will take to complete a test. By calculating the number of function points in the present project and comparing them to any previous project, several features such as errors identified, number of test cases required, testing effort, and so on may be calculated.

The same metrics that are used in architectural design may be utilized to show how integration testing should be done. Furthermore, cyclomatic complexity can be utilised as a metric in basis-path testing to calculate the number of test cases that are required.

Halstead measurements can be used to calculate testing effort metrics. The following equations can be used to compute Halstead effort (e) given programme volume (V) and programme level (PL).

e = V/ PL

Where

PL = 1/ [(n1/2) * (N2/n2)] … (1)

The percentage of overall testing effort allotted for a specific module (z) can be computed using the equation below.

Percentage of testing effort (z) = e(z)/∑e(i)

With the aid of equation, e(z) is computed for module z. (1). The sum of Halstead effort (e) throughout all of the system's modules is used as the denominator.

Q7) Write about metrics for maintenance?

A7) Some metrics have been designed specifically for maintenance activities. The IEEE has proposed the Software Maturity Index (SMI), which indicates the stability of a software product. The following parameters are taken into account when calculating SMI.

● Number of modules in the current release (MT)

● Number of modules in the current release that have been changed (Fc)

● Number of modules in the current release that have been added (Fa)

● Number of modules from the preceding release that were deleted in the current release (Fd)

SMI can be calculated using the equation below once all of the parameters are known.

SMI = [MT - (Fa + Fc + Fd)]/MT.

As SMI approaches 1.0, the product begins to stabilise. SMI can also be used as a metric for planning software maintenance activities, by developing empirical models that relate it to the amount of maintenance work required.
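A minimal sketch of the SMI calculation follows. The function and parameter names are hypothetical, and the release figures are invented for illustration.

```python
def software_maturity_index(modules_total, modules_added,
                            modules_changed, modules_deleted):
    """SMI = [MT - (added + changed + deleted)] / MT."""
    if modules_total <= 0:
        raise ValueError("the current release must contain at least one module")
    touched = modules_added + modules_changed + modules_deleted
    return (modules_total - touched) / modules_total


# Hypothetical release: 100 modules, of which 5 were added and 10 changed,
# with 5 modules from the preceding release deleted.
smi = software_maturity_index(
    modules_total=100, modules_added=5, modules_changed=10, modules_deleted=5
)

# A release with no additions, changes, or deletions is fully stable.
stable = software_maturity_index(100, 0, 0, 0)
```

Tracking this value from release to release shows whether the product is converging toward 1.0 or is still being reworked heavily.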

Q8) Describe quality?

A8) Quality

Quality in software is an abstract concept. Its presence can be hard to pin down, but its absence is usually easy to see. Therefore, in the quest to improve software quality, we must first understand what software quality means.

“Software quality measures, in the software engineering sense, how well the software is designed (quality of design) and how well the software conforms to that design (quality of conformance). It is also described as the 'fitness for purpose' of a piece of software.”

Correctness, maintainability, portability, testability, usability, dependability, efficiency, integrity, reusability, and interoperability are all examples of measurable properties.

Quality can be divided into two categories:

Fig 1: Types of quality

● Quality of Design: The attributes that designers select for an item are referred to as quality of design. Material quality, tolerances, and performance criteria are all factors that influence design quality.

● Quality of conformance: The degree to which design specifications are followed during manufacturing is known as quality of conformance. The higher the degree of compliance with the specifications, the higher the quality of conformance.

Q9) Explain the evolution of quality management?

A9) Over the past five decades, quality systems have become increasingly sophisticated. Prior to World War II, the standard way of producing high-quality items was to inspect the finished goods and remove any defective units. Since that time, organizational quality systems have evolved through four stages, as illustrated in the diagram. Product inspection was the first stage, and it gave way to quality control (QC).

Quality control focuses not only on finding and removing defective items, but also on determining the causes of the defects. As a result, quality control attempts to remedy the causes of faults rather than simply rejecting the items. The development of quality assurance methods was the next major advance.

The core principle of modern quality assurance is that if an organization's processes are sound and strictly followed, the products are bound to be of high quality. Modern quality functions provide guidance for identifying, specifying, analysing, and improving the production process.

Total quality management (TQM) asserts that an organization's processes must be continually improved through process measurement. TQM goes beyond quality assurance and aims at continuous process improvement: rather than merely documenting processes, it redesigns them to make them more efficient. Business Process Reengineering (BPR) is a term associated with TQM.

The goal of BPR is to reengineer the way business is done in a company. From the discussion above, it can be concluded that the quality paradigm has shifted over time from product assurance to process assurance, as seen in Fig 2.

Fig 2: Evolution of quality system

Q10) Define quality assurance?

A10) Quality assurance refers to the auditing and reporting activities that provide stakeholders with the data they need to make well-informed decisions.

It concerns how well a system meets its specified requirements and the client's expectations. It also involves monitoring the processes and products throughout the SDLC.

Software quality assurance is a planned and systematic set of activities that ensures that an item or product complies with established technical specifications.

It is a set of activities designed to evaluate the process by which products are developed or manufactured.

Quality Assurance Criteria

The following are the quality assurance criteria that will be used to evaluate the software:

● correctness

● efficiency

● flexibility

● integrity

● interoperability

● maintainability

● portability

● reliability

● reusability

● testability

● usability

SQA Encompasses

● An approach to quality management.

● Effective software engineering technology (methods and tools).

● Formal technical reviews that are applied throughout the software development process.

● A multi-tiered testing strategy.

● Control over the documentation of software and the changes that are made to it.

● A technique for ensuring that software development standards are followed.

● Mechanisms for measuring and reporting.

Q11) Define software review?

A11) A software review is a systematic inspection of software by one or more individuals who work together to detect and repair mistakes and defects during the early phases of the Software Development Life Cycle (SDLC). Software review is an important part of the SDLC, since it helps software developers confirm the software's quality, functionality, and other critical features and components. It is a comprehensive procedure that includes testing the software product and ensuring that it satisfies the client's needs.

Software review is a manual process that verifies documents such as requirements, system designs, code, test plans, and test cases.

Objectives of Software Review

The objectives of software review are:

● To increase the development team's productivity.

● To reduce the amount of time and money spent on testing.

● To reduce the number of flaws in the final software.

● To get rid of shortcomings.

Fig 3: Process of software review

Q12) What are the types of software review?

A12) Types of Software Reviews

There are three primary types of software review:

1. Software Peer Review

Peer review is the process of evaluating a work product's technical content and quality, and it is usually carried out by the work product's author together with a few other developers.

Peer review is used to examine or resolve defects in the software, whose quality is also checked by other members of the team.

2. Software Management Review

The Software Management Review assesses the current state of the project. Decisions about downstream activities are made in this review.

3. Software Audit Review

A Software Audit Review is a type of external review in which one or more reviewers who are not members of the development team conduct an independent inspection of the software product and its processes in order to assess compliance with stated specifications and standards. Managerial personnel are responsible for it.

Q13) Write the advantages of software review?

A13) Advantages

● Defects can be detected early in the development process (especially in formal review).

● Early examination also lowers software maintenance costs.

● It can be used to train technical authors.

● It can be used to eliminate process flaws that lead to problems.

Q14) What is statistical quality assurance?

A14) Traditional compliance testing often provides only pass/fail information, which is insufficient for quality control measurement, for identifying the root causes of failures, and for overall quality assurance (QA) in the manufacturing process.

Intertek tailors a practical QA process called Statistical Quality Assurance (SQA) by combining legal, customer, and essential safety requirements. SQA is used to identify potential variations in the manufacturing process and to predict potential defects on a parts-per-million (PPM) basis. It gives a statistical description of the finished product and addresses quality and safety concerns that may arise during production.

There are three key approaches in SQA:

- Force Diagram - A Force Diagram describes how a product should be tested. Intertek engineers base Force Diagrams on their understanding of foreseeable use, critical manufacturing processes, and critical components with a high failure rate.

- Test-to-failure (TTF) - Unlike legally required testing, TTF tells producers how many defects they can expect in every million units produced. This information is fed back into the process and indicates whether a product needs quality improvement or is over-engineered, which ultimately leads to cost savings.

- Intervention - Products are divided into groups according to the total production quantity and the production lines. Each group then undergoes an intervention. The result is expressed as a Z-value, which indicates a product's quality and consistency. With intervention, manufacturers can localize a defect to a specific lot and production line, saving time and money in the process.

Q15) Write the difference between quality assurance and quality control?

A15) Difference between Quality assurance and Quality control

| Quality Assurance | Quality Control |
| --- | --- |
| QA is a process that focuses on providing assurance that the quality requirements will be met. | QC is a process that focuses on fulfilling the quality requirements. |
| The aim of QA is to prevent defects. | The aim of QC is to identify and correct defects. |
| QA is a technique for managing quality. | QC is a method for verifying quality. |
| QA does not involve executing the program. | QC always involves executing the program. |
| All team members are responsible for QA. | The testing team is responsible for QC. |
| QA example: verification. | QC example: validation. |
| QA means planning how a process is to be done. | QC means acting to execute the planned process. |
| The statistical technique used in QA is known as Statistical Process Control (SPC). | The statistical technique used in QC is known as Statistical Quality Control (SQC). |
| QA makes sure you are doing the right things. | QC makes sure the results of what you've done are what you expected. |
