OS1

UNIT 5
Memory Management

Q1) Give an overview of memory management in an operating system.

A1) In an operating system, memory management is the function responsible for managing the computer's primary memory. It moves processes back and forth between primary memory and disk during execution. Memory management keeps track of every memory location, whether it is allocated to some process or free. It determines how much memory is to be allocated to each process and decides which process gets memory at what time. It also tracks when memory is freed or unallocated and updates the status accordingly. This is distinct from application memory management, which is how a process manages the memory assigned to it by the operating system.

Basic Hardware

Main memory and the registers built into the processor itself are the only storage that the CPU can access directly. There are machine instructions that take memory addresses as arguments, but none that take disk addresses. Therefore, any instructions in execution, and any data being used by the instructions, must be in one of these direct-access storage devices. If the data are not in memory, they must be moved there before the CPU can operate on them. Registers built into the CPU are generally accessible within one cycle of the CPU clock. Most CPUs can decode instructions and perform simple operations on register contents at the rate of one or more operations per clock tick.

We first need to make sure that each process has a separate memory space. To do this, we need the ability to determine the range of legal addresses that the process may access and to ensure that the process can access only these legal addresses. We can provide this protection by using two registers, usually a base and a limit, as illustrated in the figure below. The base register holds the smallest legal physical memory address; the limit register specifies the size of the range.

Protection of memory space is accomplished by having the CPU hardware compare every address generated in user mode with the registers. Any attempt by a program executing in user mode to access operating-system memory or other users' memory results in a trap to the operating system, which treats the attempt as a fatal error (figure below). This scheme prevents a user program from (accidentally or deliberately) modifying the code or data structures of either the operating system or other users.

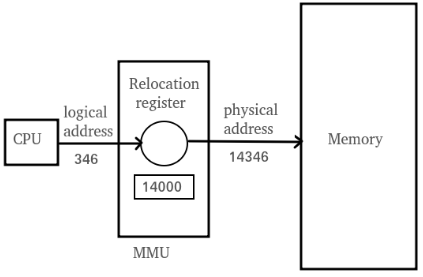
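The base and limit comparison described above can be sketched in a few lines of Python. This is only an illustration of the check the hardware performs; the names `check_access` and `MemoryProtectionTrap`, and the register values in the usage lines, are hypothetical and chosen for the example.

```python
class MemoryProtectionTrap(Exception):
    """Stands in for the hardware trap raised on an illegal access."""


def check_access(address: int, base: int, limit: int) -> int:
    """Return the address if base <= address < base + limit, else trap."""
    if address < base or address >= base + limit:
        raise MemoryProtectionTrap(f"illegal access to address {address}")
    return address


# Illustrative register values: legal addresses are 300040 .. 420939.
BASE, LIMIT = 300040, 120900
check_access(300040, BASE, LIMIT)      # lowest legal address, allowed
check_access(420939, BASE, LIMIT)      # highest legal address, allowed
try:
    check_access(420940, BASE, LIMIT)  # one past the range
except MemoryProtectionTrap as trap:
    print("trap:", trap)
```

In real hardware both comparisons happen on every user-mode memory reference, and the registers can be loaded only by privileged instructions run by the operating system.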

Q2) What is address binding in the memory management of an operating system?

A2) Address binding is the process of mapping one address space onto another. A logical address is an address generated by the CPU during execution, whereas a physical address refers to a location in the memory unit, that is, the address actually seen by memory. Note that the user deals only with logical (virtual) addresses. The logical address is translated by the MMU, the address-translation unit. The output of this process is the corresponding physical address, that is, the location of the code or data in RAM.

In most cases, a user program goes through several steps, some of which may be optional, before being executed. Addresses may be represented in different ways during these steps. Addresses in the source program are generally symbolic (such as count). A compiler will typically bind these symbolic addresses to relocatable addresses. The linkage editor or loader will in turn bind the relocatable addresses to absolute addresses. Classically, the binding of instructions and data to memory addresses can be done at any step along the way:

- Compile time: The first type of address binding is compile-time address binding. It allocates a space in memory to the machine code of a program when the program is compiled into an executable binary file. The binding assigns an address to the starting point of the segment in memory where the object code is stored. The memory assignment is long term and can be changed only by recompiling the program.

- Load time: If memory allocation were fixed at the time the program is compiled, a program could never be moved from one computer to another in its compiled state, because the executable code would contain memory assignments that may already be in use by other programs on the new computer. With load-time binding, the program's logical addresses are not bound to physical locations until the program is invoked and loaded into memory.

- Execution time: Execution-time address binding usually applies only to variables in programs and is the most common form of binding for scripts, which are not compiled. In this scenario, the program requests memory space for a variable the first time that variable is encountered during the processing of instructions in the script. The memory stays allocated to that variable until the program sequence ends, or until a specific instruction within the script releases the memory address bound to the variable.

Q3) What is dynamic loading in the memory management of an operating system?

A3) Dynamic loading means loading a library into memory at load time or run time. Dynamic loading can be thought of as working like plugins: an executable can actually start running before the dynamic loading takes place. (On Windows, for example, dynamic loading can be performed with the LoadLibrary call from C or C++.) With dynamic loading, a routine is not loaded until it is called. All routines are kept on disk in a relocatable load format. The main program is loaded into memory and is executed. When a routine needs to call another routine, the calling routine first checks whether the other routine has been loaded. If it has not, the relocatable linking loader is called to load the desired routine into memory and to update the program's address tables to reflect this change. Control is then passed to the newly loaded routine.

The advantage of dynamic loading is that an unused routine is never loaded. This method is particularly useful when large amounts of code are needed to handle infrequently occurring cases, such as error routines. Dynamic loading does not require special support from the operating system. It is the responsibility of the users to design their programs to take advantage of such a method. Operating systems may help the programmer, however, by providing library routines to implement dynamic loading.

Q4) Explain logical versus physical address space.

A4) The address generated by the CPU while a program is running is known as a logical address. The logical address is virtual: it does not exist physically, which is why it is also called a virtual address. The CPU uses this address as a reference to access the physical memory location. The term logical address space refers to the set of all logical addresses generated by a program.

The hardware device called the memory-management unit (MMU) maps each logical address to its corresponding physical address. A physical address identifies the physical location of the required data in memory. The user never deals directly with the physical address but can access it through its corresponding logical address. The user program generates logical addresses and behaves as if it were running in this logical address space, but the program needs physical memory for its execution; therefore, the logical addresses must be mapped to physical addresses by the MMU before they are used. The term physical address space refers to the set of all physical addresses corresponding to the logical addresses in a logical address space.
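One simple way to picture the MMU mapping is a single relocation register whose value is added to every logical address. The text does not say which mapping scheme the MMU uses, so this is an assumption made for illustration; the function name `to_physical` and the numbers are hypothetical.

```python
def to_physical(logical_address: int, relocation: int) -> int:
    """Map a logical address to a physical one by adding the relocation value."""
    if logical_address < 0:
        raise ValueError("logical addresses are non-negative")
    return relocation + logical_address


# With an illustrative relocation value of 14000, logical address 346
# corresponds to physical address 14346.
print(to_physical(346, 14000))
```

The program itself only ever sees the value 346; the sum is formed by the hardware at the moment the memory reference is made.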

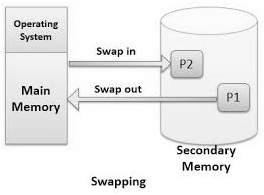

Mapping virtual addresses to physical addresses

Q5) What is swapping in memory management?

A5) Swapping is a mechanism in which a process can be temporarily swapped (moved) out of primary memory to secondary storage (disk), making that memory available to other processes. At some later time, the system swaps the process back from secondary storage into primary memory. Swapping is a useful technique that enables a computer to execute programs and manipulate data files larger than main memory. The operating system copies as much data as possible into main memory and leaves the rest on the disk. When the operating system needs data from the disk, it exchanges a portion of data (called a page or segment) in main memory with a portion of data on the disk.

Although performance is usually affected by swapping, it helps in running multiple large processes in parallel, which is why swapping is sometimes described as a technique for memory compaction. Swapping involves two operations, swapping in and swapping out. Moving pages or blocks of data from the hard disk into main memory is called swapping in. Conversely, moving pages or blocks of data from main memory to the hard disk is called swapping out. Swapping is useful when a large program is to be executed or when operations need to be performed on a large file.

For swapping, the swapper also uses the logical and physical addresses of processes. Two approaches are used to translate a logical address into a physical address:

- Static relocation: In static relocation, swapping is never performed because the process always keeps the memory it was given; this does not change at execution time. For example, the memory given to input and output operations and the memory given to the CPU for executing processes do not change, which is why this is known as static relocation of memory.

- Dynamic relocation: In dynamic relocation, the memory used by processes changes and moves at execution time, so there must be some mechanism for giving memory to the processes that are running. The mapping of logical addresses to physical addresses is performed at execution time, that is, at run time.

When memory is given to a process, the CPU stores the specific location, which also has an entry in the segment description table indicating in which segment the process is running, because that location must be reloaded when the process is swapped.

Q6) Explain contiguous memory allocation.

A6) Contiguous memory allocation is a classical memory allocation model that assigns a process consecutive memory blocks (that is, memory blocks having consecutive addresses). It is one of the oldest memory allocation schemes. When a process needs to execute, it requests memory. The size of the process is compared with the amount of contiguous main memory available to execute the process. If sufficient contiguous memory is found, the process is allocated memory and starts its execution. Otherwise, it is added to a queue of waiting processes until sufficient free contiguous memory is available. In contiguous memory allocation, both the operating system and the user processes must reside in main memory. The main memory is divided into two partitions: one for the operating system and the other for the user programs.

Memory Protection

Memory protection is a way to control memory access rights on a computer. Its main purpose is to prevent a process from accessing memory that has not been allocated to it. This keeps a buggy process from affecting other processes or the operating system itself; instead, a segmentation fault or storage-violation exception is sent to the offending process, generally killing it.

Memory Allocation

There are two ways of allocating memory to processes: fixed-size partitions and variable-size partitions.

Fixed-size partitions

One of the simplest methods for allocating memory is to divide memory into several fixed-size partitions (which may or may not all be of the same size); an entire partition is allocated to a process. Each partition may contain exactly one process. Thus, the degree of multiprogramming is bound by the number of partitions. In this multiple-partition method, when a partition is free, a process is selected from the input queue and is loaded into the free partition. If the process is smaller than the partition, the unused space inside the partition is wasted; this is called internal fragmentation. If unequal-size fixed partitions are created, we can assign each process the smallest partition that will fit it.

Advantage: Management or bookkeeping is simple.

Disadvantage: Internal fragmentation.

Variable-size partitions

In the variable-size partition scheme, memory is not divided into partitions in advance; it is treated as one unit. Whenever a process needs space, exactly the amount required is allocated to it. A table is maintained to keep track of which memory blocks are occupied.

Advantage: There is no internal fragmentation.

Disadvantage: Management is difficult, as memory becomes fragmented over time.

Q7) What are some of the allocation strategies, and what is fragmentation?

A7) Allocation Strategies

As memory compaction is quite time consuming, the alternative is for the OS designer to assign processes to memory in a more efficient way so that compaction is needed less often. Whenever a process is swapped in, the operating system must decide which free block to allocate to it so that other processes also get a chance to execute. The three placement algorithms to consider are first fit, best fit, and worst fit.

- First fit. Allocate the first block that is big enough to accommodate the process. Searching can start either at the beginning of the list or where the previous first-fit search ended. We can stop searching as soon as we find a free hole that is large enough.

- Best fit. Allocate the smallest block that is big enough. We must search the entire list, unless the list is ordered by size. This strategy produces the smallest leftover block.

- Worst fit. Allocate the largest block. Again, we must search the entire list, unless it is sorted by size. This strategy produces the largest leftover hole, which may be more useful than the smaller leftover hole from a best-fit approach.

Neither first fit nor best fit is clearly better than the other in terms of storage utilization, but first fit is generally faster.

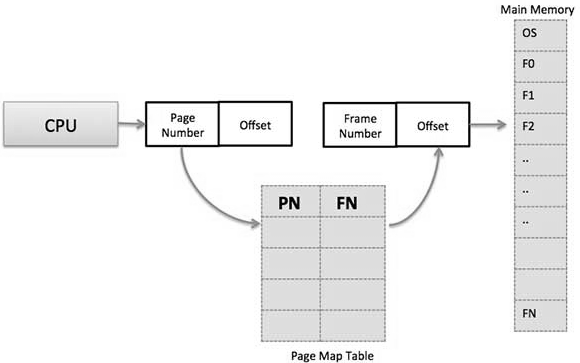

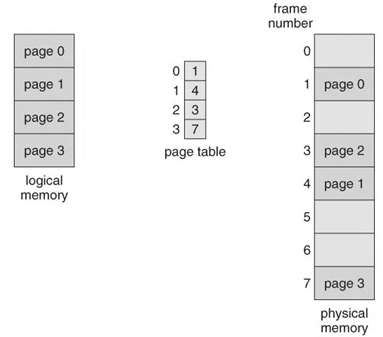
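The three placement strategies can be compared with a minimal Python sketch. It assumes the free list is simply a list of hole sizes and returns the index of the chosen hole; the function names and the sample sizes are hypothetical, and a real allocator would also split the chosen hole and update the list.

```python
from typing import List, Optional


def first_fit(holes: List[int], size: int) -> Optional[int]:
    """Index of the first hole large enough for the request, or None."""
    for i, hole in enumerate(holes):
        if hole >= size:
            return i
    return None


def best_fit(holes: List[int], size: int) -> Optional[int]:
    """Index of the smallest hole that is still large enough, or None."""
    candidates = [i for i, hole in enumerate(holes) if hole >= size]
    return min(candidates, key=lambda i: holes[i]) if candidates else None


def worst_fit(holes: List[int], size: int) -> Optional[int]:
    """Index of the largest hole, provided it is large enough, or None."""
    candidates = [i for i, hole in enumerate(holes) if hole >= size]
    return max(candidates, key=lambda i: holes[i]) if candidates else None


# Illustrative free list (sizes in KB) and a 212 KB request.
holes = [100, 500, 200, 300, 600]
print(first_fit(holes, 212))  # 1 (the 500 KB hole)
print(best_fit(holes, 212))   # 3 (the 300 KB hole)
print(worst_fit(holes, 212))  # 4 (the 600 KB hole)
```

First fit stops at the first match, while the other two inspect every hole here because the list is not sorted by size, which matches the speed difference noted above.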

Fragmentation

Both the first-fit and best-fit strategies for memory allocation suffer from external fragmentation. As processes are loaded and removed from memory, the free memory space is broken into little pieces. External fragmentation exists when there is enough total memory space to satisfy a request, but the available spaces are not contiguous; storage is fragmented into a large number of small holes. This fragmentation problem can be severe. One solution to the problem of external fragmentation is compaction. The goal is to shuffle the memory contents so as to place all free memory together in one large block.

Q8) Explain paging in detail in memory management.

A8) In operating systems, paging is a storage mechanism used to retrieve processes from secondary storage into main memory in the form of pages. The main idea behind paging is to divide each process into pages. Main memory is likewise divided into frames. One page of the process is stored in one frame of memory. The pages can be stored at different locations in memory, although the priority is always to find contiguous frames or holes. In this scheme, the operating system retrieves data from secondary storage in same-size blocks called pages. Paging is an important part of virtual memory implementations in modern operating systems, using secondary storage to let programs exceed the size of available physical memory.

Support for paging has traditionally been handled by hardware. However, recent designs have implemented paging by closely integrating the hardware and operating system, especially on 64-bit microprocessors.

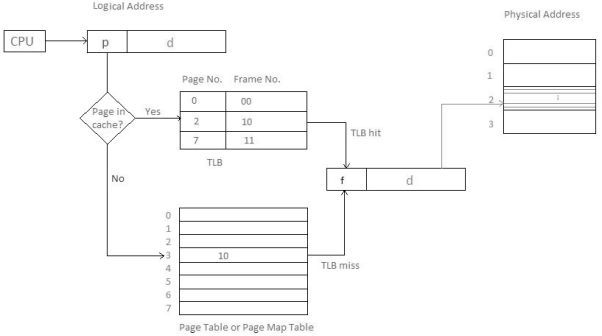

Basic Method

The basic method for implementing paging involves breaking physical memory into fixed-size blocks called frames and breaking logical memory into blocks of the same size called pages. When a process is to be executed, its pages are loaded into any available memory frames from their source (a file system or the backing store). The backing store is divided into fixed-size blocks that are the same size as the memory frames. The hardware support for paging is illustrated in the figure below. Every address generated by the CPU is divided into two parts: a page number (p) and a page offset (d).

Paging Hardware

The page number is used as an index into a page table. The page table contains the base address of each page in physical memory. This base address is combined with the page offset to define the physical memory address that is sent to the memory unit. The paging model of memory is shown in the figure below.

Paging model of logical and physical memory

The page size (like the frame size) is defined by the hardware. The size of a page is typically a power of 2, varying between 512 bytes and 16 MB per page, depending on the computer architecture. The selection of a power of 2 as a page size makes the translation of a logical address into a page number and page offset particularly easy. If the size of the logical address space is 2^m and the page size is 2^n addressing units (bytes or words), then the high-order m - n bits of a logical address designate the page number, and the n low-order bits designate the page offset. Thus, the logical address is as follows:

| page number | page offset |
| --- | --- |
| p | d |
| m - n bits | n bits |

where p is an index into the page table and d is the displacement within the page.

Since the operating system is managing physical memory, it must be aware of the allocation details of physical memory: which frames are allocated, which frames are available, how many total frames there are, and so on. This information is generally kept in a data structure called a frame table. The frame table has one entry for each physical page frame, indicating whether the latter is free or allocated and, if it is allocated, to which page of which process or processes.
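The split of a logical address into a page number p and an offset d, and the page-table lookup that follows, can be sketched as below. This is a minimal illustration under stated assumptions: the page table is a plain list that stores frame numbers (rather than frame base addresses), and the names `split_address` and `translate` and the sample table are hypothetical.

```python
from typing import List, Tuple


def split_address(logical_address: int, offset_bits: int) -> Tuple[int, int]:
    """Split a logical address into (page number p, page offset d)."""
    page_number = logical_address >> offset_bits          # high-order m - n bits
    offset = logical_address & ((1 << offset_bits) - 1)   # low-order n bits
    return page_number, offset


def translate(logical_address: int, page_table: List[int], offset_bits: int) -> int:
    """Look up the frame for page p and combine it with offset d."""
    page_number, offset = split_address(logical_address, offset_bits)
    frame = page_table[page_number]  # IndexError stands in for an invalid page
    return (frame << offset_bits) | offset


# Illustrative setup: n = 2 (4-byte pages), four pages mapped to frames 5, 6, 1, 2.
page_table = [5, 6, 1, 2]
print(split_address(13, 2))         # (3, 1)
print(translate(0, page_table, 2))  # 20
print(translate(13, page_table, 2)) # 9
```

Because the page size is a power of 2, both steps reduce to a shift and a mask, which is the simplification the paragraph above refers to.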

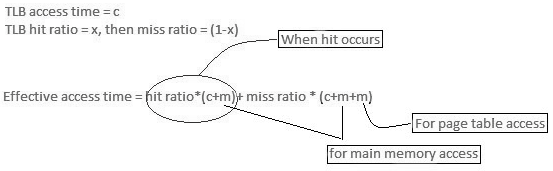

Hardware Support

The page table can be implemented in hardware using dedicated registers. However, using registers for the page table is satisfactory only if the page table is small. If the page table contains a large number of entries, we can use a TLB (translation look-aside buffer), a special, small, fast-lookup hardware cache. The TLB is associative, high-speed memory. Each entry in the TLB consists of two parts: a tag and a value. When this memory is presented with an item, the item is compared with all tags simultaneously. If the item is found, the corresponding value is returned.

Let the main memory access time be m. If the page table is kept in main memory, effective access time = m (to access the page table) + m (to access the particular page referenced by the page table) = 2m.

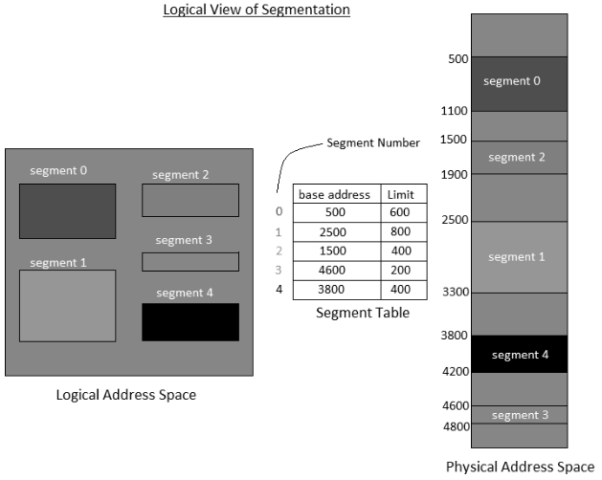
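To see how a TLB changes the effective access time, the following sketch uses the commonly taught weighted-average formula. The formula's extra symbols are assumptions not given in the text: a TLB lookup time `tlb_time` and a hit ratio `hit_ratio`, with a hit costing one memory access and a miss costing two. The function name and the timings are hypothetical.

```python
def effective_access_time(m: float, tlb_time: float, hit_ratio: float) -> float:
    """Weighted average of the TLB-hit and TLB-miss access costs."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    hit_cost = tlb_time + m        # TLB lookup + one memory access
    miss_cost = tlb_time + 2 * m   # TLB lookup + page table + actual page
    return hit_ratio * hit_cost + (1.0 - hit_ratio) * miss_cost


# Illustrative timings in nanoseconds: m = 100, TLB lookup = 20, 80% hits.
print(effective_access_time(100, 20, 0.8))  # about 140
```

With a hit ratio of 0 the result is the 2m cost of keeping the page table in main memory plus the wasted TLB lookup; as the hit ratio approaches 1 it approaches a single memory access plus the lookup.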

Q10) Explain segmentation in memory management.

A10) Memory segmentation is a primary-memory management technique that divides a computer's primary memory into segments or sections. In a computer system using segmentation, a reference to a memory location includes a value that identifies a segment and an offset (memory location) within that segment. Segments or sections are also used in object files of compiled programs when they are linked together into a program image and when the image is loaded into memory.

Segments usually correspond to natural divisions of a program, such as individual routines or data tables, so segmentation is generally more visible to the programmer than paging alone. Different segments may be created for different program modules, or for different classes of memory usage, such as code and data segments. Certain segments may be shared between programs.

A process is divided into segments. The chunks that a program is divided into, which are not necessarily all of the same size, are called segments. Segmentation gives the user's view of the process, which paging does not give. Here the user's view is mapped to physical memory. There are two types of segmentation:

- Virtual memory segmentation: Each process is divided into a number of segments, not all of which are resident at any one point in time.

- Simple segmentation: Each process is divided into a number of segments, all of which are loaded into memory at run time, though not necessarily contiguously.

There is no simple relationship between logical addresses and physical addresses in segmentation. A table called the segment table stores the information about all such segments. The segment table maps a two-dimensional logical address into a one-dimensional physical address. Each table entry has:

- Base address: It contains the starting physical address where the segment resides in memory.

- Limit: It specifies the length of the segment.

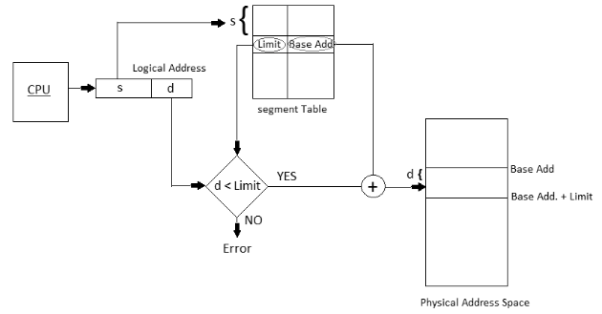

Q11) Explain the translation of a logical address into a physical address.

A11) The address generated by the CPU is divided into:

- Segment number (s): The number of bits required to represent the segment.

- Segment offset (d): The number of bits required to represent the size of the segment.

Advantages: There is no internal fragmentation. The segment table consumes less space than the page table in paging.

Disadvantages: As processes are loaded and removed from memory, the free memory space is broken into little pieces, causing external fragmentation.
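The translation of a (segment number, offset) pair through a segment table of base and limit entries can be sketched as follows. This is a minimal illustration, assuming the segment table is a list of `(base, limit)` tuples; the names `translate_segment` and `SegmentationFault` and the table contents are hypothetical.

```python
from typing import List, Tuple


class SegmentationFault(Exception):
    """Stands in for the trap raised on an invalid segment or offset."""


def translate_segment(s: int, d: int, segment_table: List[Tuple[int, int]]) -> int:
    """Translate logical address (s, d) to a physical address."""
    if s < 0 or s >= len(segment_table):
        raise SegmentationFault(f"no such segment: {s}")
    base, limit = segment_table[s]
    if d < 0 or d >= limit:
        raise SegmentationFault(f"offset {d} outside segment {s} (limit {limit})")
    return base + d


# Illustrative segment table: (base, limit) for segments 0 to 4.
table = [(1400, 1000), (6300, 400), (4300, 400), (3200, 1100), (4700, 1000)]
print(translate_segment(2, 53, table))   # 4353
print(translate_segment(3, 852, table))  # 4052
try:
    translate_segment(0, 1222, table)    # offset exceeds the limit of 1000
except SegmentationFault as fault:
    print("trap:", fault)
```

The offset is checked against the limit before the base is added, so a reference beyond the end of a segment traps instead of reaching another segment's memory.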
