DE

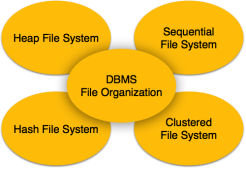

UNIT - 4Data Storage and IndexesQ1) Explain File organisation in DBMS A1) File OrganizationFile Organization defines how file records are mapped onto disk blocks. We have four types of File Organization to organize file records –

Fig 1 – DBMS file organisationHeap File OrganizationWhen a file is created using Heap File Organization, the Operating System allocates memory area to that file without any further accounting details. File records can be placed anywhere in that memory area. It is the responsibility of the software to manage the records. Heap File does not support any ordering, sequencing, or indexing on its own.Sequential File OrganizationEvery file record contains a data field (attribute) to uniquely identify that record. In sequential file organization, records are placed in the file in some sequential order based on the unique key field or search key. Practically, it is not possible to store all the records sequentially in physical form.Hash File OrganizationHash File Organization uses Hash function computation on some fields of the records. The output of the hash function determines the location of disk block where the records are to be placed.Clustered File OrganizationClustered file organization is not considered good for large databases. In this mechanism, related records from one or more relations are kept in the same disk block, that is, the ordering of records is not based on primary key or search key.Q2) What are the File operations in DBMS? A2) File OperationsOperations on database files can be broadly classified into two categories −Update Operations Retrieval Operations Update operations change the data values by insertion, deletion, or update. Retrieval operations, on the other hand, do not alter the data but retrieve them after optional conditional filtering. In both types of operations, selection plays a significant role. Other than creation and deletion of a file, there could be several operations, which can be done on files.Open − A file can be opened in one of the two modes, read mode or write mode. In read mode, the operating system does not allow anyone to alter data. In other words, data is read only. Files opened in read mode can be shared among several entities. Write mode allows data modification. Files opened in write mode can be read but cannot be shared. Locate − Every file has a file pointer, which tells the current position where the data is to be read or written. This pointer can be adjusted accordingly. Using find (seek) operation, it can be moved forward or backward. Read − By default, when files are opened in read mode, the file pointer points to the beginning of the file. There are options where the user can tell the operating system where to locate the file pointer at the time of opening a file. The very next data to the file pointer is read. Write − User can select to open a file in write mode, which enables them to edit its contents. It can be deletion, insertion, or modification. The file pointer can be located at the time of opening or can be dynamically changed if the operating system allows to do so. Close − This is the most important operation from the operating system’s point of view. When a request to close a file is generated, the operating system The organization of data inside a file plays a major role here. The process to locate the file pointer to a desired record inside a file various based on whether the records are arranged sequentially or clustered.Q3) Explain Indexing in DBMS with examples A3) Indexing in DBMSIndexing is used to optimize the performance of a database by minimizing the number of disk accesses required when a query is processed. The index is a type of data structure. It is used to locate and access the data in a database table quickly. Index structure:Indexes can be created using some database columns.

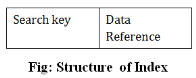

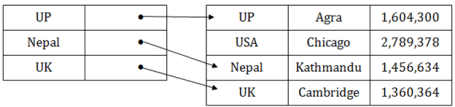

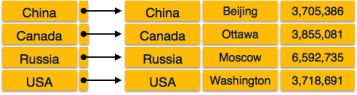

Fig 2 – Structure of IndexThe first column of the database is the search key that contains a copy of the primary key or candidate key of the table. The values of the primary key are stored in sorted order so that the corresponding data can be accessed easily. The second column of the database is the data reference. It contains a set of pointers holding the address of the disk block where the value of the particular key can be found. Indexing Methods

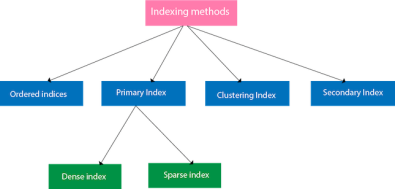

Fig 3 – Indexing MethodsOrdered indicesThe indices are usually sorted to make searching faster. The indices which are sorted are known as ordered indices.Example: Suppose we have an employee table with thousands of record and each of which is 10 bytes long. If their IDs start with 1, 2, 3....and so on and we have to search student with ID-543.In the case of a database with no index, we have to search the disk block from starting till it reaches 543. The DBMS will read the record after reading 543*10=5430 bytes. In the case of an index, we will search using indexes and the DBMS will read the record after reading 542*2= 1084 bytes which are very less compared to the previous case. Primary IndexIf the index is created on the basis of the primary key of the table, then it is known as primary indexing. These primary keys are unique to each record and contain 1:1 relation between the records. As primary keys are stored in sorted order, the performance of the searching operation is quite efficient. The primary index can be classified into two types: Dense index and Sparse index. Dense indexThe dense index contains an index record for every search key value in the data file. It makes searching faster. In this, the number of records in the index table is same as the number of records in the main table. It needs more space to store index record itself. The index records have the search key and a pointer to the actual record on the disk.

Fig 4 – Dense IndexSparse indexIn the data file, index record appears only for a few items. Each item points to a block. In this, instead of pointing to each record in the main table, the index points to the records in the main table in a gap.

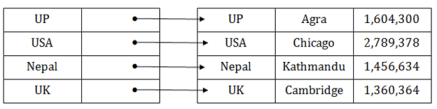

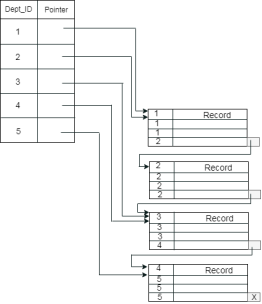

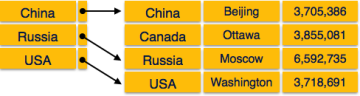

Fig 5 – Sparse IndexClustering IndexA clustered index can be defined as an ordered data file. Sometimes the index is created on non-primary key columns which may not be unique for each record. In this case, to identify the record faster, we will group two or more columns to get the unique value and create index out of them. This method is called a clustering index. The records which have similar characteristics are grouped, and indexes are created for these group. Example: suppose a company contains several employees in each department. Suppose we use a clustering index, where all employees which belong to the same Dept_ID are considered within a single cluster, and index pointers point to the cluster as a whole. Here Dept Id is a non-unique key.

The previous schema is little confusing because one disk block is shared by records which belong to the different cluster. If we use separate disk block for separate clusters, then it is called better technique.

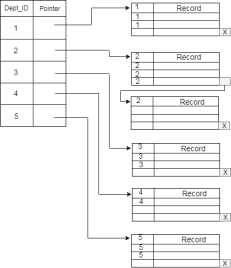

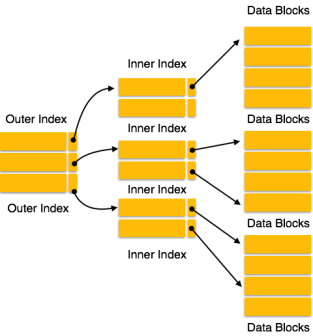

Secondary IndexIn the sparse indexing, as the size of the table grows, the size of mapping also grows. These mappings are usually kept in the primary memory so that address fetch should be faster. Then the secondary memory searches the actual data based on the address got from mapping. If the mapping size grows then fetching the address itself becomes slower. In this case, the sparse index will not be efficient. To overcome this problem, secondary indexing is introduced.In secondary indexing, to reduce the size of mapping, another level of indexing is introduced. In this method, the huge range for the columns is selected initially so that the mapping size of the first level becomes small. Then each range is further divided into smaller ranges. The mapping of the first level is stored in the primary memory, so that address fetch is faster. The mapping of the second level and actual data are stored in the secondary memory (hard disk).

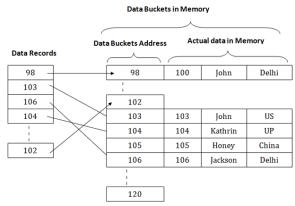

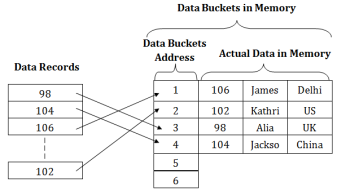

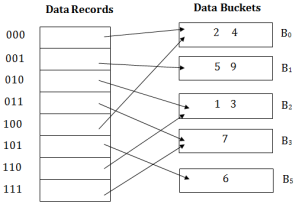

Fig 6 – Secondary IndexFor example:If you want to find the record of roll 111 in the diagram, then it will search the highest entry which is smaller than or equal to 111 in the first level index. It will get 100 at this level. Then in the second index level, again it does max (111) <= 111 and gets 110. Now using the address 110, it goes to the data block and starts searching each record till it gets 111. This is how a search is performed in this method. Inserting, updating or deleting is also done in the same manner. Q4) What is hashing in DBMS? A4) HashingIn a huge database structure, it is very inefficient to search all the index values and reach the desired data. Hashing technique is used to calculate the direct location of a data record on the disk without using index structure.In this technique, data is stored at the data blocks whose address is generated by using the hashing function. The memory location where these records are stored is known as data bucket or data blocks.In this, a hash function can choose any of the column value to generate the address. Most of the time, the hash function uses the primary key to generate the address of the data block. A hash function is a simple mathematical function to any complex mathematical function. We can even consider the primary key itself as the address of the data block. That means each row whose address will be the same as a primary key stored in the data block.

Fig 7 – Data buckets in memoryThe above diagram shows data block addresses same as primary key value. This hash function can also be a simple mathematical function like exponential, mod, cos, sin, etc. Suppose we have mod (5) hash function to determine the address of the data block. In this case, it applies mod (5) hash function on the primary keys and generates 3, 3, 1, 4 and 2 respectively, and records are stored in those data block addresses.

Types of Hashing:

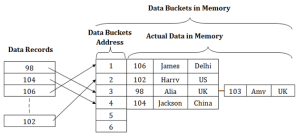

Fig 8 - HashingStatic Hashing Dynamic Hashing Q5) What is static hashing explain with examples? A5) Static HashingIn static hashing, the resultant data bucket address will always be the same. That means if we generate an address for EMP_ID =103 using the hash function mod (5) then it will always result in same bucket address 3. Here, there will be no change in the bucket address.Hence in this static hashing, the number of data buckets in memory remains constant throughout. In this example, we will have five data buckets in the memory used to store the data.

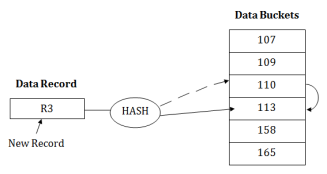

Fig 9 – Static hashingOperations of Static HashingSearching a record When a record needs to be searched, then the same hash function retrieves the address of the bucket where the data is stored.Insert a Record When a new record is inserted into the table, then we will generate an address for a new record based on the hash key and record is stored in that location.Delete a Record To delete a record, we will first fetch the record which is supposed to be deleted. Then we will delete the records for that address in memory.Update a Record To update a record, we will first search it using a hash function, and then the data record is updated.If we want to insert some new record into the file but the address of a data bucket generated by the hash function is not empty, or data already exists in that address. This situation in the static hashing is known as bucket overflow. This is a critical situation in this method.To overcome this situation, there are various methods. Some commonly used methods are as follows:1. Open HashingWhen a hash function generates an address at which data is already stored, then the next bucket will be allocated to it. This mechanism is called as Linear Probing. For example: suppose R3 is a new address which needs to be inserted, the hash function generates address as 112 for R3. But the generated address is already full. So the system searches next available data bucket, 113 and assigns R3 to it.

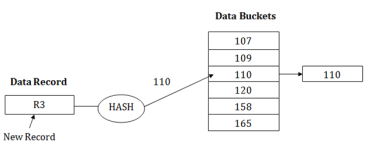

2. Close HashingWhen buckets are full, then a new data bucket is allocated for the same hash result and is linked after the previous one. This mechanism is known as Overflow chaining.For example: Suppose R3 is a new address which needs to be inserted into the table, the hash function generates address as 110 for it. But this bucket is full to store the new data. In this case, a new bucket is inserted at the end of 110 buckets and is linked to it.

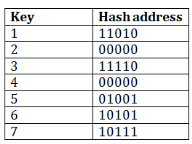

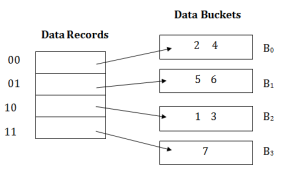

Q6) Explain Dynamic hashing in detail A6) Dynamic HashingThe dynamic hashing method is used to overcome the problems of static hashing like bucket overflow. In this method, data buckets grow or shrink as the records increases or decreases. This method is also known as Extendable hashing method. This method makes hashing dynamic, i.e., it allows insertion or deletion without resulting in poor performance. How to search a keyFirst, calculate the hash address of the key. Check how many bits are used in the directory, and these bits are called as i. Take the least significant i bits of the hash address. This gives an index of the directory. Now using the index, go to the directory and find bucket address where the record might be. How to insert a new recordFirstly, you have to follow the same procedure for retrieval, ending up in some bucket. If there is still space in that bucket, then place the record in it. If the bucket is full, then we will split the bucket and redistribute the records. For example:Consider the following grouping of keys into buckets, depending on the prefix of their hash address:

The last two bits of 2 and 4 are 00. So it will go into bucket B0. The last two bits of 5 and 6 are 01, so it will go into bucket B1. The last two bits of 1 and 3 are 10, so it will go into bucket B2. The last two bits of 7 are 11, so it will go into B3.

Insert key 9 with hash address 10001 into the above structure:Since key 9 has hash address 10001, it must go into the first bucket. But bucket B1 is full, so it will get split. The splitting will separate 5, 9 from 6 since last three bits of 5, 9 are 001, so it will go into bucket B1, and the last three bits of 6 are 101, so it will go into bucket B5. Keys 2 and 4 are still in B0. The record in B0 pointed by the 000 and 100 entry because last two bits of both the entry are 00. Keys 1 and 3 are still in B2. The record in B2 pointed by the 010 and 110 entry because last two bits of both the entry are 10. Key 7 are still in B3. The record in B3 pointed by the 111 and 011 entry because last two bits of both the entry are 11.

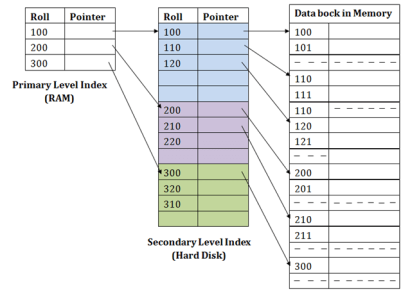

Q7) What are the advantages and disadvantages of dynamic hashing? A7) Advantages of dynamic hashingIn this method, the performance does not decrease as the data grows in the system. It simply increases the size of memory to accommodate the data. In this method, memory is well utilized as it grows and shrinks with the data. There will not be any unused memory lying. This method is good for the dynamic database where data grows and shrinks frequently. Disadvantages of dynamic hashing In this method, if the data size increases then the bucket size is also increased. These addresses of data will be maintained in the bucket address table. This is because the data address will keep changing as buckets grow and shrink. If there is a huge increase in data, maintaining the bucket address table becomes tedious. In this case, the bucket overflow situation will also occur. But it might take little time to reach this situation than static hashing. Q8) What is indexing in DBMS explain in detail?A8) Indexing is a data structure technique to efficiently retrieve records from the database files based on some attributes on which the indexing has been done. Indexing in database systems is similar to what we see in books.Indexing is defined based on its indexing attributes. Indexing can be of the following types −Primary Index − Primary index is defined on an ordered data file. The data file is ordered on a key field. The key field is generally the primary key of the relation. Secondary Index − Secondary index may be generated from a field which is a candidate key and has a unique value in every record, or a non-key with duplicate values. Clustering Index − Clustering index is defined on an ordered data file. The data file is ordered on a non-key field. Ordered Indexing is of two types −Dense Index Sparse Index Dense IndexIn dense index, there is an index record for every search key value in the database. This makes searching faster but requires more space to store index records itself. Index records contain search key value and a pointer to the actual record on the disk.

Fig 10 – Dense Index Sparse IndexIn sparse index, index records are not created for every search key. An index record here contains a search key and an actual pointer to the data on the disk. To search a record, we first proceed by index record and reach at the actual location of the data. If the data we are looking for is not where we directly reach by following the index, then the system starts sequential search until the desired data is found.

Fig 11 – sparse indexMultilevel IndexIndex records comprise search-key values and data pointers. Multilevel index is stored on the disk along with the actual database files. As the size of the database grows, so does the size of the indices. There is an immense need to keep the index records in the main memory so as to speed up the search operations. If single-level index is used, then a large size index cannot be kept in memory which leads to multiple disk accesses.

Fig 12 – Multilevel IndexMulti-level Index helps in breaking down the index into several smaller indices in order to make the outermost level so small that it can be saved in a single disk block, which can easily be accommodated anywhere in the main memory.B+ TreeA B+ tree is a balanced binary search tree that follows a multi-level index format. The leaf nodes of a B+ tree denote actual data pointers. B+ tree ensures that all leaf nodes remain at the same height, thus balanced. Additionally, the leaf nodes are linked using a link list; therefore, a B+ tree can support random access as well as sequential access.Q9) What is the structure of B+ Tree? A9) Structure of B+ TreeEvery leaf node is at equal distance from the root node. A B+ tree is of the order n where n is fixed for every B+ tree.

Fig 13 – B+ TreeInternal nodes −Internal (non-leaf) nodes contain at least ⌈n/2⌉ pointers, except the root node. At most, an internal node can contain n pointers. Leaf nodes −Leaf nodes contain at least ⌈n/2⌉ record pointers and ⌈n/2⌉ key values. At most, a leaf node can contain n record pointers and n key values. Every leaf node contains one block pointer P to point to next leaf node and forms a linked list. B+ Tree InsertionB+ trees are filled from bottom and each entry is done at the leaf node. If a leaf node overflows − Split node into two parts. Partition at i = ⌊(m+1)/2⌋. First i entries are stored in one node. Rest of the entries (i+1 onwards) are moved to a new node. ith key is duplicated at the parent of the leaf. If a non-leaf node overflows − Split node into two parts. Partition the node at i = ⌈(m+1)/2⌉. Entries up to i are kept in one node. Rest of the entries are moved to a new node. B+ Tree DeletionB+ tree entries are deleted at the leaf nodes. The target entry is searched and deleted. If it is an internal node, delete and replace with the entry from the left position. After deletion, underflow is tested, If underflow occurs, distribute the entries from the nodes left to it. If distribution is not possible from left, then Distribute from the nodes right to it. If distribution is not possible from left or from right, then Merge the node with left and right to it. Q10) What is multiple key accesses explain in detail and write its advantages and disadvantages?A10) To handle above situation, multiple key accesses are introduced. Here, we use combination of two or more columns which are frequently queried to get index. In the above example, both DEPT_ID and SALARY are clubbed into one index and are stored in the files. Now what happens when we fire above query? It filters both DEPT_ID = 20 and SALARY = 5000 at a shot and returns the result.This type of indexing works well when all the columns used in the index are involved in the query. In above example we have index on (DEPT_ID, SALARY) and both the columns are used in the query. Hence it resulted quickly. But what will happen when any one of the column is used in the query? This index will not be used to fetch the record. Hence query becomes slower.Imagine another case where we have queried like below. Here both the columns are used differently- we have queried to find the salary less than 5000, not equal to 5000. What will happen in this case?SELECT * FROM EMPLOYEE WHERE DEPT_ID = 20 and SALARY < 5000;This query will use the index and will fetch the result quickly. Reason is it has to find the address of the record in the file where DEPT_ID = 20 and SALARY = 5000 and then it has to return all the records which are stored previous to this address. Hence the query becomes simple and faster.Imagine another case for the same example, where DEPT_ID <20 but SALARY = 5000 is used to fetch the record. What will happen in this case?

This query will not use the index. Why? The order of the columns in the index is (DEPT_ID, SALARY). The multiple key indexes work as below.The condition (DEPT_ID1, SALARY1) < (DEPT_ID2, SALARY2) is used only if

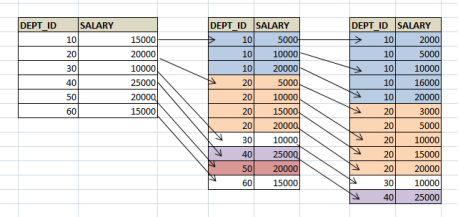

In this example, we are checking for second condition, where we have DEPT_ID <40. But SALARY < 5000 should have been used for the index to be used. But it is not the case in the query. That means, it should have only one condition DEPT_ID <40 or both conditions like DEPT_ID = 20 AND SALARY<5000 to use the index. This method of ordering in index is called lexicographic ordering.Have a look at below index file to understand these examples. We can see that, records are first grouped based on DEPT_ID and then on SALARY. If we have to select DEPT_ID <40 alone, then all the records less than DEPT_ID =40 address have to selected which easy and quick. We get all records in a sequence here. DEPT_ID1 <DEPT_ID2 condition holds well here.If DEPT_ID =20 but SALARY < 5000 condition is used, pointer will go to DEPT_ID = 20 Section in the file and then fetch all the records less than 5000. The index is used in the query making it faster. Here, second conditions for lexicographic works.What if we have to search DEPT_ID <40 and SALARY = 5000, it is same as traversing the whole table. We will not be able to find all requested records at one place.Similarly, if only one column in the index is used, say SALARY = 5000, then also searching is a full table scan. Below diagram clearly shows SALARY = 5000 is scattered in the memory file and have to traverse whole file to get the result. Hence it is not efficient.

In order to create a multiple key indexOne has to thoroughly examine all kinds of queries that are fired on the table. This will help us to decide the columns to be put in the index. Also, it will determine the feasibility of index to run faster queries. That means, if employees’ details are retrieved based on DEPT_ID and SALARY, and then it is feasible use index on both (DEPT_ID, SALARY). Else it is an overhead on table performance. In general case, it is expected to put columns with highest unique value first. That is if create (SALARY, DEPT_ID) index than (DEPT_ID, SALARY) is most efficient. This is because; it will trim down the result set. That means, employees with same salary will have smaller result set than employees in the same department, hence making the search faster. But again selecting the order of columns in the index is up to developer. In our example above, we can see the affect of selecting the index order as (DEPT_ID, SALARY). It gave us more meaningful distribution of records in the file than grouping them based on salary. Also, our query on EMPLOYEE table is such that grouping on DEPT_ID first seems to be better option. Advantages of Multiple Key AccessesIt works efficiently when all the columns of the index is used in the query, provided it satisfies lexicographic ordering. It works efficiently even on larger tables. This is because, records are sorted based on the index. Disadvantages of Multiple Key AccessesIt works only for lexicographic ordering. The second example above does not use the index and cost of the query is high. It does not work efficiently if only one or partial columns of index are involved in querying. It does not use the index to retrieve the data.

|

- removes all the locks (if in shared mode),

- saves the data (if altered) to the secondary storage media, and

- releases all the buffers and file handlers associated with the file.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

SELECT * FROM EMPLOYEE WHERE DEPT_ID < 40 and SALARY = 5000; |

DEPT_ID1 < DEPT_ID2 OR DEPT_ID1 = DEPT_ID2 AND SALARY1 <SALARY2 |

|

0 matching results found