
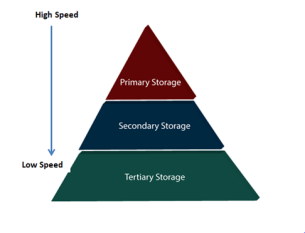

UNIT - 6 Recovery System

Q1) What is Failure classification in DBMS?

A1) To find where a problem has occurred, we generalize failures into the following categories:

- Transaction failure
- System crash
- Disk failure

1. Transaction failure

A transaction failure occurs when a transaction fails to execute or reaches a point from which it cannot go any further. When only a few transactions or processes are affected, this is called a transaction failure. Reasons for a transaction failure include:

- Logical errors: the transaction cannot complete because of a code error or an internal error condition.
- System errors: the DBMS itself terminates an active transaction because it is not able to execute it. For example, the system aborts an active transaction in case of deadlock or resource unavailability.

2. System Crash

A system crash can occur due to a power failure or another hardware or software failure, for example an operating system error.

Fail-stop assumption: in a system crash, non-volatile storage is assumed not to be corrupted.

3. Disk Failure

In the early days of technology evolution, hard-disk drives and other storage drives failed frequently, so disk failure was a common problem. Disk failures include the formation of bad sectors, a disk head crash, unreachability of the disk, or any other failure that destroys all or part of disk storage.

Q2) What is Storage in DBMS, what are its different types, and what is the storage hierarchy?

A2) A database system provides a high-level view of the stored data, but underneath, the data is stored as bits and bytes on different storage devices. In this section, we give an overview of the various types of storage devices used for storing and accessing data.

Types of Data Storage

Several types of storage are available for storing data. They differ from one another in speed and accessibility.
The following types of storage devices are used for storing data:

- Primary storage
- Secondary storage
- Tertiary storage

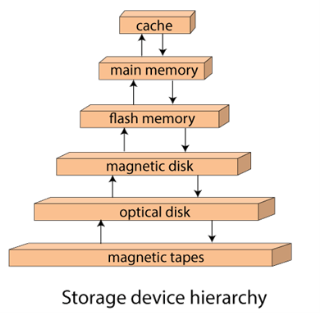

Fig 1 - Types of Data Storage

Primary Storage

Primary storage offers the fastest access to stored data. It is also known as volatile storage because it does not store data permanently: if the system suffers a power cut or a crash, its contents are lost. Main memory and cache are the types of primary storage.

- Main memory: the storage medium that holds the data currently available to be operated on; the computer's machine instructions operate on main memory. Main memory can hold gigabytes of data, but it is usually too small (and too expensive) to hold an entire database. It loses its whole content if the system shuts down because of a power failure or another reason.
- Cache: the fastest and most costly storage medium. A cache is a small storage medium that is usually managed by the computer hardware. When designing algorithms and query processors, designers take cache effects into account.

Secondary Storage

Secondary storage is also called online storage. It allows the user to save and store data permanently, and it does not lose data because of a power failure or system crash. That is why it is also called non-volatile storage. The following secondary storage media are available in almost every type of computer system:

- Flash memory: flash memory is used, for example, in USB (Universal Serial Bus) keys, which plug into the USB slots of a computer and help transfer data to it; their capacity varies. Unlike main memory, flash memory keeps its stored data across a power cut or similar event. Flash storage is commonly used in server systems for caching frequently used data.
This improves system performance, and flash memory can store larger amounts of data than main memory.
- Magnetic disk storage: this medium is also known as online storage. A magnetic disk stores data for a long time and can hold an entire database. The computer system must move data from disk to main memory before it can be accessed, and if the system modifies the data, the modified data must be written back to disk. A major advantage of magnetic disk is that its data survives a system crash or failure; however, a disk failure can damage or destroy the stored data.

Tertiary Storage

Tertiary storage is external to the computer system. It is the slowest type of storage, but it can hold a large amount of data. It is also known as offline storage and is generally used for data backup. The following tertiary storage devices are available:

- Optical storage: optical storage can hold megabytes or gigabytes of data. A Compact Disk (CD) can store 700 megabytes of data, or around 80 minutes of audio. A Digital Video Disk (DVD) can store 4.7 or 8.5 gigabytes of data on each side of the disk.
- Tape storage: tape is cheaper than disk and is generally used for archiving or backing up data. Access is slow because tape is read sequentially from the start, so tape storage is known as sequential-access storage. Disk storage, by contrast, is known as direct-access storage because data can be read directly from any location on the disk.

Storage Hierarchy

Besides the media above, various other storage devices reside in a computer system. Storage media are organized by data access speed, cost per unit of data, and reliability.
Arranging the storage media described above according to speed and cost gives the following hierarchy:

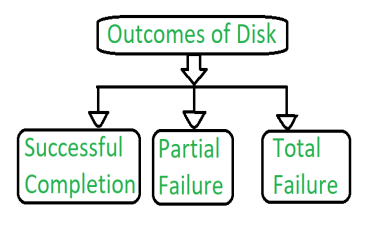

Fig 2 – Storage device hierarchy

In the figure, the higher levels are expensive but fast. Moving down the hierarchy, the cost per bit decreases and the access time increases. The storage media from main memory upward are volatile, and all the media below main memory are non-volatile.

Q3) What is Stable-Storage Implementation in DBMS?

A3) Information in stable storage is, in principle, never lost; in practice, stable storage can only be approximated. To approximate it, we replicate the required information on multiple storage devices with independent failure modes. Writes of an update must be coordinated so that a failure during an update cannot leave all the copies in a damaged state, and so that, when recovering from a failure, we can force all the copies to a consistent and correct value, even if another failure occurs during recovery. This answer discusses how to meet these needs.

A disk write operation results in one of the following outcomes:

Figure 3 – Outcomes of Disk Successful completion –

The data will written correctly on disk. Partial Failure –

In this case, failure is occurred in the middle of the data transfer, so that only some sectors were written with the new data, and the sector which is written during the failure may have been corrupted. Total Failure –

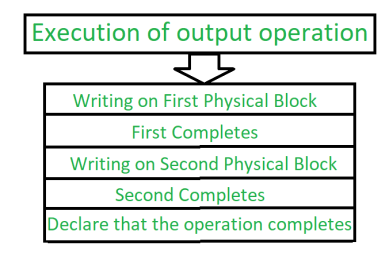

The failure occurred before the disk write started, so the previous data values on the disk remains intact. During writing a block somehow if failure occurs, the system’s first work is to detect the failure and then invoke a recovery process to restore the consistent state. To do that, the system must contain two physical block for each logical block.An output operation is executed as follows:

Figure 4 – Process of execution of an output operation

1. Write the information onto the first physical block.
2. When the first write completes successfully, write the same information onto the second physical block.
3. Declare the operation complete only after the second write also completes successfully.

During recovery from a failure, each pair of physical blocks is examined:

- If both blocks are the same and no detectable error exists, no further action is necessary.
- If one block contains a detectable error, its content is replaced with the value of the other block.
- If neither block contains a detectable error but the blocks differ in content, the content of the first block is replaced with the content of the second block.

This recovery procedure ensures that a write to stable storage either succeeds completely or results in no change.

The procedure can be extended to an arbitrarily large number of copies of each block of stable storage. Using more copies reduces the chance of losing the data, but it is usually reasonable to simulate stable storage with only two copies. Data in stable storage is guaranteed to be safe unless a failure destroys all the copies.

Because waiting for disk writes to complete is time-consuming, many storage arrays add NVRAM (non-volatile RAM) as a cache. Since this memory is non-volatile, it can be trusted to hold data on its way to the disks, so it is considered part of stable storage. Writing to NVRAM is much faster than writing to disk, so performance is greatly improved.

Q4) What is Crash Recovery in DBMS? Explain in detail.

A4) A DBMS is a highly complex system with hundreds of transactions being executed every second. The durability and robustness of a DBMS depend on its complex architecture and on its underlying hardware and system software.
If it fails or crashes in the middle of transactions, the system is expected to follow some algorithm or technique to recover lost data.

Failure Classification

To see where a problem has occurred, we generalize failures into the following categories:

Transaction failure

A transaction has to abort when it fails to execute or when it reaches a point from which it cannot go any further. This is called a transaction failure, and only a few transactions or processes are affected. Reasons for a transaction failure could be:

- Logical errors: the transaction cannot complete because it has a code error or an internal error condition.
- System errors: the database system itself terminates an active transaction because the DBMS is not able to execute it, or because it has to stop due to some system condition. For example, in case of deadlock or resource unavailability, the system aborts an active transaction.

System Crash

Problems external to the system may cause it to stop abruptly and crash. For example, interruptions in the power supply may cause the underlying hardware or software to fail. Operating system errors are another example.

Disk Failure

In the early days of technology evolution, it was a common problem that hard-disk drives or storage drives failed frequently. Disk failures include the formation of bad sectors, unreachability of the disk, a disk head crash, or any other failure that destroys all or part of disk storage.

Storage Structure

We have already described the storage system. In brief, storage can be divided into two categories:

- Volatile storage: as the name suggests, volatile storage cannot survive system crashes. Volatile storage devices are placed very close to the CPU; cache memory, for example, is usually on the processor chip itself. Main memory and cache memory are examples of volatile storage. They are fast but can store only a small amount of information.
- Non-volatile storage: these memories are made to survive system crashes. They have huge storage capacity but are slower to access. Examples include hard disks, magnetic tapes, flash memory, and non-volatile (battery-backed) RAM.

Q5) What is Recovery and Atomicity in DBMS?

A5) When a system crashes, it may have several transactions being executed and various files open for them to modify data items. Transactions are made of various operations, which are atomic in nature. But according to the ACID properties of DBMS, the atomicity of each transaction as a whole must be maintained; that is, either all of its operations are executed or none are.

When a DBMS recovers from a crash, it should:

- Check the states of all the transactions that were being executed.
- Ensure the atomicity of any transaction that was in the middle of some operation.
- Check whether each such transaction can be completed now or needs to be rolled back.
- Not allow any transaction to leave the DBMS in an inconsistent state.

Two types of techniques can help a DBMS recover while maintaining the atomicity of transactions:

- Maintaining logs of each transaction and writing them to stable storage before actually modifying the database.
- Shadow paging, where changes are written to new copies of the modified pages while the original (shadow) pages are left untouched; the database switches to the new pages only when the transaction commits.

Q6) What is Log-based Recovery?

A6) A log is a sequence of records that maintains the actions performed by transactions. It is important that log records are written before the actual modification and stored on stable storage, which is failsafe.

Log-based recovery works as follows:

- The log file is kept on stable storage.
- When a transaction enters the system and starts execution, it writes a log record about it:
<Tn, Start>
- When the transaction modifies an item X, it writes the log record <Tn, X, V1, V2>, which records that Tn has changed the value of X from V1 to V2.
- When the transaction finishes, it logs <Tn, commit>.

The database can be modified using two approaches:

- Deferred database modification: all log records are written to stable storage, and the database is updated when a transaction commits.
- Immediate database modification: each log record is followed by the actual database modification; that is, the database is modified immediately after every operation, once its log record has been written.

Q7) Explain Recovery with Concurrent Transactions in DBMS

A7) When more than one transaction is executed in parallel, the logs are interleaved. At recovery time, it would be hard for the recovery system to backtrack through all the logs and then start recovering. To ease this situation, most modern DBMSs use the concept of checkpoints.

Checkpoint

Keeping and maintaining logs in real time in a real environment may fill up all the memory space available in the system. As time passes, the log file may grow too big to be handled at all. A checkpoint is a mechanism where all the previous logs are removed from the system and stored permanently on a storage disk. A checkpoint declares a point before which the DBMS was in a consistent state and all transactions were committed.

Recovery

When a system with concurrent transactions crashes and recovers, it behaves in the following manner:

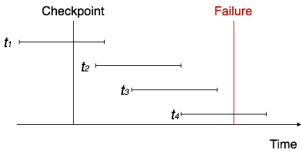

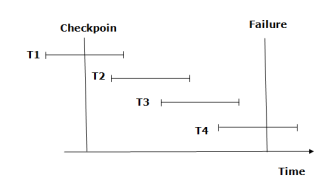

Fig 5 - Recovery

- The recovery system reads the logs backwards from the end to the last checkpoint.
- It maintains two lists, an undo-list and a redo-list.
- If the recovery system sees a log with <Tn, Start> and <Tn, Commit>, or just <Tn, Commit>, it puts the transaction in the redo-list.
- If the recovery system sees a log with <Tn, Start> but finds no commit or abort log, it puts the transaction in the undo-list.

All the transactions in the undo-list are then undone, and their logs are removed. For all the transactions in the redo-list, their previous logs are removed, the transactions are redone, and their logs are then saved again.

Q8) Explain in detail log based recovery in DBMS

A8) The log is a sequence of records. The log of each transaction is maintained in stable storage so that, if any failure occurs, the database can be recovered from it. Every operation performed on the database is recorded in the log, and the log record must be stored before the operation is actually applied to the database.

For example, assume a transaction modifies the City of a student. The following log records are written for this transaction:

- When the transaction is initiated, it writes a start log: <Tn, Start>
- When the transaction modifies the City from 'Noida' to 'Bangalore', another log record is written: <Tn, City, 'Noida', 'Bangalore'>
- When the transaction finishes, it writes a log record to indicate the end of the transaction: <Tn, Commit>

There are two approaches to modifying the database:

1. Deferred database modification: the transaction does not modify the database until it has committed. In this method, all the log records are created and stored in stable storage, and the database is updated when the transaction commits.
2. Immediate database modification: the database is modified while the transaction is still active. In this technique, the database is modified immediately after every operation.
Each operation's log record is written first and is followed by the actual database modification.

Recovery using Log records

When the system crashes, it consults the log to find which transactions need to be undone and which need to be redone.

- If the log contains the records <Ti, Start> and <Ti, Commit>, or just <Ti, Commit>, then transaction Ti needs to be redone.
- If the log contains the record <Ti, Start> but contains neither <Ti, Commit> nor <Ti, Abort>, then transaction Ti needs to be undone.

Q9) Explain Checkpoints in DBMS

A9) A checkpoint is a mechanism where all the previous logs are removed from the system and permanently stored on the storage disk. A checkpoint is like a bookmark. During transaction execution, such checkpoints are marked, and log records are created as the transaction executes its steps. When a checkpoint is reached, the transaction's updates are applied to the database, and the log up to that point is removed from the file. The log file is then updated with the new steps of the transaction until the next checkpoint, and so on. A checkpoint declares a point before which the DBMS was in a consistent state and all transactions were committed.

Recovery using Checkpoint

A recovery system recovers the database from a failure in the following manner:

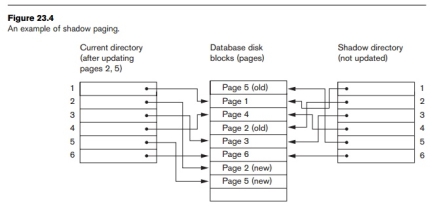

Fig 6 – Recovery using checkpoint

- The recovery system reads the log files from the end to the start, that is, from T4 to T1.
- The recovery system maintains two lists, a redo-list and an undo-list.
- A transaction is put into the redo-list if the recovery system sees a log with <Tn, Start> and <Tn, Commit>, or just <Tn, Commit>. The transactions in the redo-list are redone, their previous logs are removed, and their logs are then saved again. For example, in the log file, transactions T2 and T3 have both <Tn, Start> and <Tn, Commit>. Transaction T1 has only <Tn, Commit> in the log after the checkpoint, because it started before the checkpoint and committed after it. Hence the recovery system puts T1, T2, and T3 into the redo-list.
- A transaction is put into the undo-list if the recovery system sees a log with <Tn, Start> but finds no commit or abort log. The transactions in the undo-list are undone, and their logs are removed. For example, transaction T4 has only <Tn, Start>, so T4 is put into the undo-list because it had not completed and failed midway.

Q10) Explain Shadow Paging in DBMS

A10) This recovery scheme does not require the use of a log in a single-user environment. In a multiuser environment, a log may be needed for the concurrency control method. For recovery purposes, shadow paging considers the database to be made up of a number of fixed-size disk pages (or disk blocks), say n. A directory with n entries is constructed, where the ith entry points to the ith database page on disk. The directory is kept in main memory if it is not too large, and all references (reads or writes) to database pages on disk go through it. When a transaction begins executing, the current directory, whose entries point to the most recent or current database pages on disk, is copied into a shadow directory. The shadow directory is then saved on disk while the current directory is used by the transaction. During transaction execution, the shadow directory is never modified.
When a write_item operation is performed, a new copy of the modified database page is created, but the old copy of that page is not overwritten. Instead, the new page is written elsewhere, on some previously unused disk block. The current directory entry is modified to point to the new disk block, whereas the shadow directory is not modified and continues to point to the old, unmodified disk block. Fig 7 illustrates the concepts of shadow and current directories. For pages updated by the transaction, two versions are kept: the old version is referenced by the shadow directory and the new version by the current directory.

Fig 7 - Example

To recover from a failure during transaction execution, it is sufficient to free the modified database pages and to discard the current directory. The state of the database before transaction execution is available through the shadow directory, and that state is recovered by reinstating the shadow directory. The database is thus returned to its state prior to the transaction that was executing when the crash occurred, and any modified pages are discarded. Committing a transaction corresponds to discarding the previous shadow directory. Since recovery involves neither undoing nor redoing data items, this technique can be categorized as a NO-UNDO/NO-REDO technique for recovery.

In a multiuser environment with concurrent transactions, logs and checkpoints must be incorporated into the shadow paging technique. One disadvantage of shadow paging is that updated database pages change location on disk. This makes it difficult to keep related database pages close together on disk without complex storage management strategies. Furthermore, if the directory is large, the overhead of writing shadow directories to disk as transactions commit is significant. A further complication is how to handle garbage collection when a transaction commits: the old pages referenced by the shadow directory that have been updated must be released and added to a list of free pages for future use, because they are no longer needed after the transaction commits. Another issue is that the operation to migrate between the current and shadow directories must be implemented as an atomic operation.
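The write, recovery, and commit steps of shadow paging described above can be sketched in Python. This is a minimal single-user sketch under stated assumptions: the class `ShadowPagingDB`, its method names, and the in-memory dictionary that stands in for disk blocks are hypothetical illustrations, not the API of any real DBMS, and the sketch performs no real I/O, logging, or concurrency control.

```python
class ShadowPagingDB:
    """Toy model of shadow paging with a shadow and a current directory.

    Hypothetical sketch: the dict `disk` stands in for fixed-size disk
    blocks, so no real I/O, logging, or concurrency control happens here.
    """

    def __init__(self, pages):
        self.disk = {}          # block number -> page contents
        self.free_blocks = []   # released blocks available for reuse
        self.next_block = 0     # next never-used block number
        # Entry i of a directory points to the block holding database page i.
        self.shadow = [self._allocate(p) for p in pages]
        self.current = None     # exists only while a transaction is active

    def _allocate(self, contents):
        """Write contents to a previously unused (or freed) block."""
        if self.free_blocks:
            block = self.free_blocks.pop()
        else:
            block = self.next_block
            self.next_block += 1
        self.disk[block] = contents
        return block

    def _free(self, block):
        """Release a block and add it to the free list."""
        del self.disk[block]
        self.free_blocks.append(block)

    def begin(self):
        # The transaction works on a copy of the directory; the shadow
        # directory is never modified while the transaction runs.
        self.current = list(self.shadow)

    def read(self, i):
        directory = self.current if self.current is not None else self.shadow
        return self.disk[directory[i]]

    def write(self, i, contents):
        if self.current[i] == self.shadow[i]:
            # First update of page i: write the new copy to an unused block
            # and repoint only the current directory entry.
            self.current[i] = self._allocate(contents)
        else:
            # Page i was already copied in this transaction; that copy is not
            # referenced by the shadow directory, so overwriting it is safe.
            self.disk[self.current[i]] = contents

    def abort(self):
        # Recovery: free the modified pages and discard the current directory.
        # The shadow directory still describes the pre-transaction state.
        for cur, old in zip(self.current, self.shadow):
            if cur != old:
                self._free(cur)
        self.current = None

    def commit(self):
        old_directory = self.shadow
        # Switching directories is the commit point. A real system must make
        # this step atomic; here it is a single assignment.
        self.shadow, self.current = self.current, None
        # Garbage collection: updated pages from the old shadow are released.
        for new, old in zip(self.shadow, old_directory):
            if new != old:
                self._free(old)


if __name__ == "__main__":
    db = ShadowPagingDB(["A0", "B0", "C0"])
    db.begin()
    db.write(1, "B1")
    print(db.read(1))  # B1: seen through the current directory
    db.abort()
    print(db.read(1))  # B0: shadow directory reinstated
    db.begin()
    db.write(1, "B2")
    db.commit()
    print(db.read(1))  # B2: the new directory became the shadow
```

In this sketch the replaced pages are freed only after the directory switch. If they were released first, a crash before the switch could leave the shadow directory pointing at freed blocks, which is why the switch itself is treated as the commit point.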


